Merge branch 'develop' of https://github.com/PaddlePaddle/Paddle into fix-7211

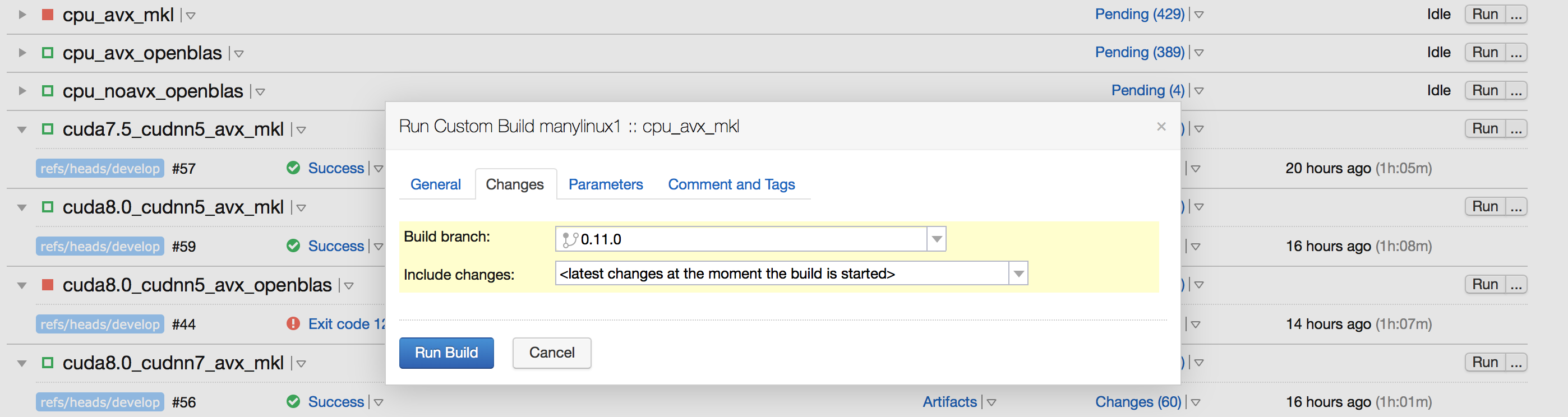

doc/design/ci_build_whl.png

0 → 100644

280.4 KB

83.3 KB

22.5 KB

39.7 KB

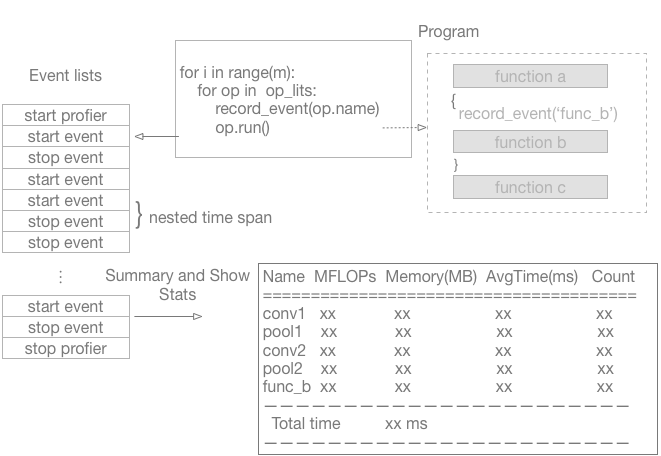

doc/design/images/profiler.png

0 → 100644

49.9 KB

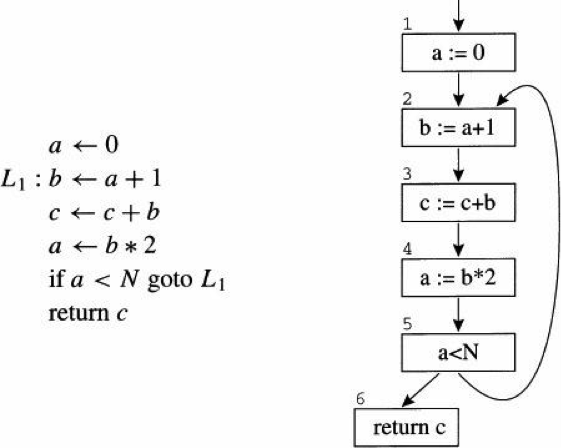

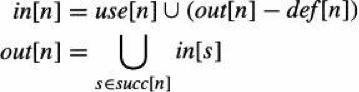

doc/design/memory_optimization.md

0 → 100644

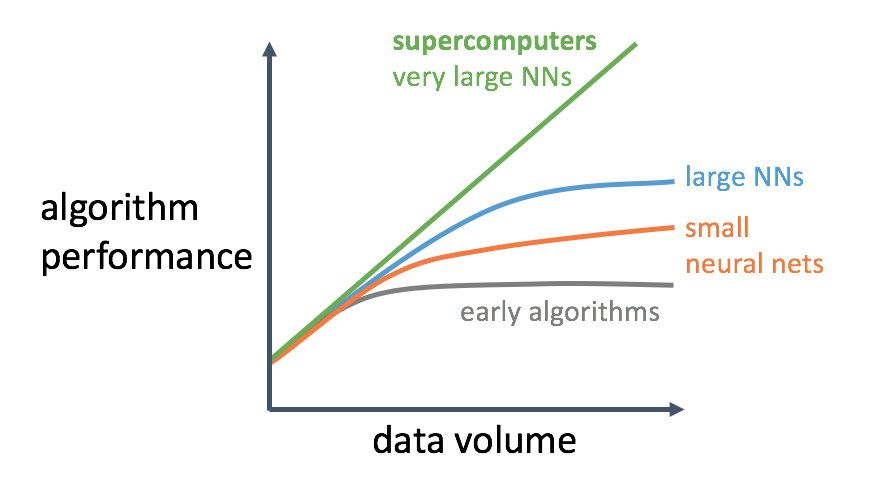

doc/design/profiler.md

0 → 100644

paddle/inference/CMakeLists.txt

0 → 100644

paddle/inference/example.cc

0 → 100644

paddle/inference/inference.cc

0 → 100644

paddle/inference/inference.h

0 → 100644

paddle/operators/norm_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/norm_op.cu

0 → 100644

This diff is collapsed.

paddle/operators/norm_op.h

0 → 100644

This diff is collapsed.

paddle/operators/tensor.save

Deleted

100644 → 0

This diff is collapsed.

paddle/platform/mkldnn_helper.h

0 → 100644

This diff is collapsed.

paddle/platform/profiler.cc

0 → 100644

This diff is collapsed.

paddle/platform/profiler.h

0 → 100644

This diff is collapsed.

paddle/platform/profiler_test.cc

0 → 100644

This diff is collapsed.
