FOM mobile (#403)

* add compressed network for FOM

deploy/lite/README.md

0 → 100644

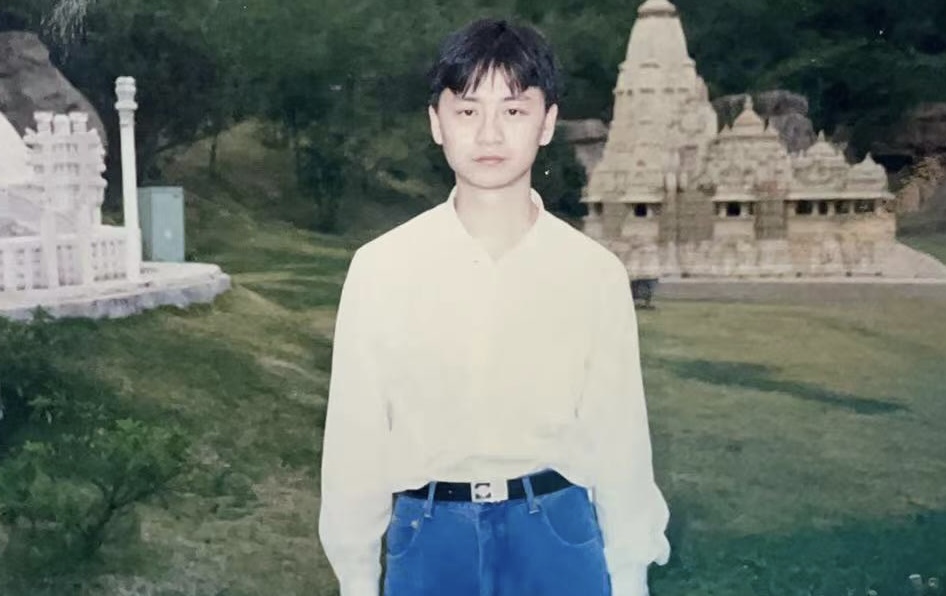

docs/imgs/father_23.jpg

0 → 100644

126.7 KB

docs/imgs/mayiyahei.MP4

0 → 100644

File added
