{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Text recognition practice\n",

"\n",

"The previous chapter introduced the main text recognition methods from a theoretical perspective. Among them, CRNN was proposed relatively early and is still widely used in industry. This chapter explains in detail how to build, train, evaluate, and run prediction with a CRNN text recognition model based on PaddleOCR. The dataset is ICDAR 2015, which contains 4,468 training images and 2,077 test images.\n",

"\n",

"\n",

"After studying this chapter, you will know:\n",

"\n",

"1. How to use the PaddleOCR whl package to quickly run text recognition\n",

"\n",

"2. The basic principles and network structure of CRNN\n",

"\n",

"3. The steps required for model training and how to tune its parameters\n",

"\n",

"4. How to train the network on a custom dataset\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## 1 Quick start\n",

"\n",

"### 1.1 Install dependencies and the whl package\n",

"\n",

"First make sure that PaddlePaddle and PaddleOCR are installed. If they already are, skip this step."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple\n",

"Requirement already satisfied: paddlepaddle-gpu in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (2.1.2.post101)\n",

"Requirement already satisfied: protobuf>=3.1.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu) (3.14.0)\n",

"Requirement already satisfied: numpy>=1.13; python_version >= \"3.5\" and platform_system != \"Windows\" in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu) (1.20.3)\n",

"Requirement already satisfied: Pillow in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu) (7.1.2)\n",

"Requirement already satisfied: six in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu) (1.15.0)\n",

"Requirement already satisfied: requests>=2.20.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu) (2.22.0)\n",

"Requirement already satisfied: astor in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu) (0.8.1)\n",

"Requirement already satisfied: decorator in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu) (4.4.2)\n",

"Requirement already satisfied: gast<=0.4.0,>=0.3.3; platform_system != \"Windows\" in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu) (0.3.3)\n",

"Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.20.0->paddlepaddle-gpu) (1.25.6)\n",

"Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.20.0->paddlepaddle-gpu) (2019.9.11)\n",

"Requirement already satisfied: idna<2.9,>=2.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.20.0->paddlepaddle-gpu) (2.8)\n",

"Requirement already satisfied: chardet<3.1.0,>=3.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.20.0->paddlepaddle-gpu) (3.0.4)\n",

"Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple\n",

"Collecting pip\n",

"\u001b[?25l Downloading https://pypi.tuna.tsinghua.edu.cn/packages/a4/6d/6463d49a933f547439d6b5b98b46af8742cc03ae83543e4d7688c2420f8b/pip-21.3.1-py3-none-any.whl (1.7MB)\n",

"\u001b[K |████████████████████████████████| 1.7MB 8.4MB/s eta 0:00:01\n",

"\u001b[?25hInstalling collected packages: pip\n",

" Found existing installation: pip 19.2.3\n",

" Uninstalling pip-19.2.3:\n",

" Successfully uninstalled pip-19.2.3\n",

"Successfully installed pip-21.3.1\n",

"Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple\n",

"Collecting paddleocr\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/e1/b6/5486e674ce096667dff247b58bf0fb789c2ce17a10e546c2686a2bb07aec/paddleocr-2.3.0.2-py3-none-any.whl (250 kB)\n",

" |████████████████████████████████| 250 kB 3.3 MB/s \n",

"\u001b[?25hCollecting lmdb\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/2e/dd/ada2fd91cd7832979069c556607903f274470c3d3d2274e0a848908272e8/lmdb-1.2.1-cp37-cp37m-manylinux2010_x86_64.whl (299 kB)\n",

" |████████████████████████████████| 299 kB 12.8 MB/s \n",

"\u001b[?25hCollecting lxml\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/7b/01/16a9b80c8ce4339294bb944f08e157dbfcfbb09ba9031bde4ddf7e3e5499/lxml-4.7.1-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.manylinux_2_24_x86_64.whl (6.4 MB)\n",

" |████████████████████████████████| 6.4 MB 52.4 MB/s \n",

"\u001b[?25hCollecting python-Levenshtein\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/2a/dc/97f2b63ef0fa1fd78dcb7195aca577804f6b2b51e712516cc0e902a9a201/python-Levenshtein-0.12.2.tar.gz (50 kB)\n",

" |████████████████████████████████| 50 kB 1.6 MB/s \n",

"\u001b[?25h Preparing metadata (setup.py) ... \u001b[?25ldone\n",

"\u001b[?25hCollecting scikit-image\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/9a/44/8f8c7f9c9de7fde70587a656d7df7d056e6f05192a74491f7bc074a724d0/scikit_image-0.19.1-cp37-cp37m-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (13.3 MB)\n",

" |████████████████████████████████| 13.3 MB 56.1 MB/s \n",

"\u001b[?25hRequirement already satisfied: numpy in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddleocr) (1.20.3)\n",

"Collecting imgaug==0.4.0\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/66/b1/af3142c4a85cba6da9f4ebb5ff4e21e2616309552caca5e8acefe9840622/imgaug-0.4.0-py2.py3-none-any.whl (948 kB)\n",

" |████████████████████████████████| 948 kB 62.9 MB/s \n",

"\u001b[?25hCollecting opencv-contrib-python==4.4.0.46\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/08/51/1e0a206dd5c70fea91084e6f43979dc13e8eb175760cc7a105083ec3eb68/opencv_contrib_python-4.4.0.46-cp37-cp37m-manylinux2014_x86_64.whl (55.7 MB)\n",

" |████████████████████████████████| 55.7 MB 44 kB/s 0:01\n",

"\u001b[?25hCollecting premailer\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/b1/07/4e8d94f94c7d41ca5ddf8a9695ad87b888104e2fd41a35546c1dc9ca74ac/premailer-3.10.0-py2.py3-none-any.whl (19 kB)\n",

"Collecting shapely\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/ae/20/33ce377bd24d122a4d54e22ae2c445b9b1be8240edb50040b40add950cd9/Shapely-1.8.0-cp37-cp37m-manylinux_2_5_x86_64.manylinux1_x86_64.whl (1.1 MB)\n",

" |████████████████████████████████| 1.1 MB 14.5 MB/s \n",

"\u001b[?25hRequirement already satisfied: visualdl in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddleocr) (2.2.0)\n",

"Collecting fasttext==0.9.1\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/10/61/2e01f1397ec533756c1d893c22d9d5ed3fce3a6e4af1976e0d86bb13ea97/fasttext-0.9.1.tar.gz (57 kB)\n",

" |████████████████████████████████| 57 kB 9.0 MB/s \n",

"\u001b[?25h Preparing metadata (setup.py) ... \u001b[?25ldone\n",

"\u001b[?25hRequirement already satisfied: cython in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddleocr) (0.29)\n",

"Requirement already satisfied: openpyxl in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddleocr) (3.0.5)\n",

"Collecting pyclipper\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/c5/fa/2c294127e4f88967149a68ad5b3e43636e94e3721109572f8f17ab15b772/pyclipper-1.3.0.post2-cp37-cp37m-manylinux_2_5_x86_64.manylinux1_x86_64.whl (603 kB)\n",

" |████████████████████████████████| 603 kB 7.6 MB/s \n",

"\u001b[?25hRequirement already satisfied: tqdm in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddleocr) (4.36.1)\n",

"Collecting pybind11>=2.2\n",

" Using cached https://pypi.tuna.tsinghua.edu.cn/packages/a8/3b/fc246e1d4c7547a7a07df830128e93c6215e9b93dcb118b2a47a70726153/pybind11-2.8.1-py2.py3-none-any.whl (208 kB)\n",

"Requirement already satisfied: setuptools>=0.7.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from fasttext==0.9.1->paddleocr) (56.2.0)\n",

"Requirement already satisfied: Pillow in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from imgaug==0.4.0->paddleocr) (7.1.2)\n",

"Requirement already satisfied: imageio in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from imgaug==0.4.0->paddleocr) (2.6.1)\n",

"Requirement already satisfied: scipy in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from imgaug==0.4.0->paddleocr) (1.6.3)\n",

"Requirement already satisfied: opencv-python in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from imgaug==0.4.0->paddleocr) (4.1.1.26)\n",

"Requirement already satisfied: matplotlib in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from imgaug==0.4.0->paddleocr) (2.2.3)\n",

"Requirement already satisfied: six in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from imgaug==0.4.0->paddleocr) (1.15.0)\n",

"Collecting PyWavelets>=1.1.1\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/a1/9c/564511b6e1c4e1d835ed2d146670436036960d09339a8fa2921fe42dad08/PyWavelets-1.2.0-cp37-cp37m-manylinux_2_5_x86_64.manylinux1_x86_64.whl (6.1 MB)\n",

" |████████████████████████████████| 6.1 MB 3.8 MB/s \n",

"\u001b[?25hRequirement already satisfied: packaging>=20.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-image->paddleocr) (20.9)\n",

"Requirement already satisfied: networkx>=2.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-image->paddleocr) (2.4)\n",

"Collecting tifffile>=2019.7.26\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/d8/38/85ae5ed77598ca90558c17a2f79ddaba33173b31cf8d8f545d34d9134f0d/tifffile-2021.11.2-py3-none-any.whl (178 kB)\n",

" |████████████████████████████████| 178 kB 7.1 MB/s \n",

"\u001b[?25hRequirement already satisfied: et-xmlfile in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from openpyxl->paddleocr) (1.0.1)\n",

"Requirement already satisfied: jdcal in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from openpyxl->paddleocr) (1.4.1)\n",

"Requirement already satisfied: requests in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from premailer->paddleocr) (2.22.0)\n",

"Collecting cssselect\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/3b/d4/3b5c17f00cce85b9a1e6f91096e1cc8e8ede2e1be8e96b87ce1ed09e92c5/cssselect-1.1.0-py2.py3-none-any.whl (16 kB)\n",

"Collecting cssutils\n",

" Downloading https://pypi.tuna.tsinghua.edu.cn/packages/24/c4/9db28fe567612896d360ab28ad02ee8ae107d0e92a22db39affd3fba6212/cssutils-2.3.0-py3-none-any.whl (404 kB)\n",

" |████████████████████████████████| 404 kB 134 kB/s \n",

"\u001b[?25hRequirement already satisfied: cachetools in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from premailer->paddleocr) (4.0.0)\n",

"Requirement already satisfied: pre-commit in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddleocr) (1.21.0)\n",

"Requirement already satisfied: Flask-Babel>=1.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddleocr) (1.0.0)\n",

"Requirement already satisfied: flask>=1.1.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddleocr) (1.1.1)\n",

"Requirement already satisfied: flake8>=3.7.9 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddleocr) (3.8.2)\n",

"Requirement already satisfied: shellcheck-py in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddleocr) (0.7.1.1)\n",

"Requirement already satisfied: pandas in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddleocr) (1.1.5)\n",

"Requirement already satisfied: bce-python-sdk in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddleocr) (0.8.53)\n",

"Requirement already satisfied: protobuf>=3.11.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddleocr) (3.14.0)\n",

"Requirement already satisfied: pyflakes<2.3.0,>=2.2.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flake8>=3.7.9->visualdl->paddleocr) (2.2.0)\n",

"Requirement already satisfied: pycodestyle<2.7.0,>=2.6.0a1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flake8>=3.7.9->visualdl->paddleocr) (2.6.0)\n",

"Requirement already satisfied: importlib-metadata in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flake8>=3.7.9->visualdl->paddleocr) (0.23)\n",

"Requirement already satisfied: mccabe<0.7.0,>=0.6.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flake8>=3.7.9->visualdl->paddleocr) (0.6.1)\n",

"Requirement already satisfied: itsdangerous>=0.24 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddleocr) (1.1.0)\n",

"Requirement already satisfied: Jinja2>=2.10.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddleocr) (2.11.0)\n",

"Requirement already satisfied: click>=5.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddleocr) (7.0)\n",

"Requirement already satisfied: Werkzeug>=0.15 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddleocr) (0.16.0)\n",

"Requirement already satisfied: pytz in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Flask-Babel>=1.0.0->visualdl->paddleocr) (2019.3)\n",

"Requirement already satisfied: Babel>=2.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Flask-Babel>=1.0.0->visualdl->paddleocr) (2.8.0)\n",

"Requirement already satisfied: decorator>=4.3.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from networkx>=2.2->scikit-image->paddleocr) (4.4.2)\n",

"Requirement already satisfied: pyparsing>=2.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from packaging>=20.0->scikit-image->paddleocr) (2.4.2)\n",

"Requirement already satisfied: pycryptodome>=3.8.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from bce-python-sdk->visualdl->paddleocr) (3.9.9)\n",

"Requirement already satisfied: future>=0.6.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from bce-python-sdk->visualdl->paddleocr) (0.18.0)\n",

"Requirement already satisfied: kiwisolver>=1.0.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->imgaug==0.4.0->paddleocr) (1.1.0)\n",

"Requirement already satisfied: python-dateutil>=2.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->imgaug==0.4.0->paddleocr) (2.8.0)\n",

"Requirement already satisfied: cycler>=0.10 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->imgaug==0.4.0->paddleocr) (0.10.0)\n",

"Requirement already satisfied: aspy.yaml in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddleocr) (1.3.0)\n",

"Requirement already satisfied: virtualenv>=15.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddleocr) (16.7.9)\n",

"Requirement already satisfied: nodeenv>=0.11.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddleocr) (1.3.4)\n",

"Requirement already satisfied: pyyaml in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddleocr) (5.1.2)\n",

"Requirement already satisfied: cfgv>=2.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddleocr) (2.0.1)\n",

"Requirement already satisfied: identify>=1.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddleocr) (1.4.10)\n",

"Requirement already satisfied: toml in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddleocr) (0.10.0)\n",

"Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->premailer->paddleocr) (1.25.6)\n",

"Requirement already satisfied: chardet<3.1.0,>=3.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->premailer->paddleocr) (3.0.4)\n",

"Requirement already satisfied: idna<2.9,>=2.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->premailer->paddleocr) (2.8)\n",

"Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->premailer->paddleocr) (2019.9.11)\n",

"Requirement already satisfied: MarkupSafe>=0.23 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Jinja2>=2.10.1->flask>=1.1.1->visualdl->paddleocr) (1.1.1)\n",

"Requirement already satisfied: zipp>=0.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from importlib-metadata->flake8>=3.7.9->visualdl->paddleocr) (3.6.0)\n",

"Building wheels for collected packages: fasttext, python-Levenshtein\n",

" Building wheel for fasttext (setup.py) ... \u001b[?25ldone\n",

"\u001b[?25h Created wheel for fasttext: filename=fasttext-0.9.1-cp37-cp37m-linux_x86_64.whl size=2584156 sha256=acb4d4fde73d31c7dfdd2ae3de0da25a558c34c672d4904e6a5c4279185fe5af\n",

" Stored in directory: /home/aistudio/.cache/pip/wheels/a1/cb/b3/a25a8ce16c1a4ff102c1e40d6eaa4dfc9d5695b92d57331b36\n",

" Building wheel for python-Levenshtein (setup.py) ... \u001b[?25ldone\n",

"\u001b[?25h Created wheel for python-Levenshtein: filename=python_Levenshtein-0.12.2-cp37-cp37m-linux_x86_64.whl size=171687 sha256=56b4a2de4349a05004121050df68b488ffd253dcc59187ca07b89b62d40c0218\n",

" Stored in directory: /home/aistudio/.cache/pip/wheels/38/b9/a4/3729726160fb103833de468adb5ce019b58543ae41d0b0e446\n",

"Successfully built fasttext python-Levenshtein\n",

"Installing collected packages: tifffile, PyWavelets, shapely, scikit-image, pybind11, lxml, cssutils, cssselect, python-Levenshtein, pyclipper, premailer, opencv-contrib-python, lmdb, imgaug, fasttext, paddleocr\n",

"Successfully installed PyWavelets-1.2.0 cssselect-1.1.0 cssutils-2.3.0 fasttext-0.9.1 imgaug-0.4.0 lmdb-1.2.1 lxml-4.7.1 opencv-contrib-python-4.4.0.46 paddleocr-2.3.0.2 premailer-3.10.0 pybind11-2.8.1 pyclipper-1.3.0.post2 python-Levenshtein-0.12.2 scikit-image-0.19.1 shapely-1.8.0 tifffile-2021.11.2\n"

]

}

],

"source": [

"# Install PaddlePaddle GPU version\n",

"!pip install paddlepaddle-gpu\n",

"# Install PaddleOCR whl package\n",

"!pip install -U pip\n",

"!pip install paddleocr"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

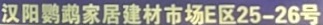

"### 1.2 Quickly predict text content\n",

"\n",

"The PaddleOCR whl package will automatically download the ppocr lightweight model as the default model\n",

"\n",

"The following shows how to use the whl package for recognition prediction:\n",

"\n",

"Test picture:\n",

"\n",

""

]

},

{

"cell_type": "code",

"execution_count": 5,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"[2021/12/23 20:28:44] root WARNING: version 2.1 not support cls models, use version 2.0 instead\n",

"download https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_infer.tar to /home/aistudio/.paddleocr/2.2.1/ocr/det/ch/ch_PP-OCRv2_det_infer/ch_PP-OCRv2_det_infer.tar\n"

]

},

{

"name": "stderr",

"output_type": "stream",

"text": [

"/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/skimage/morphology/_skeletonize.py:241: DeprecationWarning: `np.bool` is a deprecated alias for the builtin `bool`. To silence this warning, use `bool` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.bool_` here.\n",

"Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations\n",

" 0, 1, 1, 0, 0, 1, 0, 0, 0], dtype=np.bool)\n",

"/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/skimage/morphology/_skeletonize.py:256: DeprecationWarning: `np.bool` is a deprecated alias for the builtin `bool`. To silence this warning, use `bool` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.bool_` here.\n",

"Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations\n",

" 0, 0, 0, 0, 0, 0, 0, 0, 0], dtype=np.bool)\n",

" 0%| | 0.00/3.19M [00:00\n",

"\n",

"\n",

"### 2.2 Algorithm details\n",

"\n",

"From bottom to top, the CRNN network consists of three parts: convolutional layers, recurrent layers, and a transcription layer:\n",

"\n",


"\n",

"1. Backbone:\n",

"\n",

"The convolutional network serves as the backbone and extracts a feature sequence from the input image. Since `conv`, `max-pooling`, `elementwise` operations, and activation functions all act on local regions, they are translation invariant. As a result, each column of the feature map corresponds to a rectangular region of the original image (its receptive field), and these regions are ordered from left to right in the same way as their columns in the feature map. Because a conventional CNN requires the input image to be scaled to a fixed size to match its fixed input dimensionality, it is poorly suited to sequences whose length varies widely. To better support variable-length sequences, CRNN feeds the feature vectors output by the last backbone layer into the RNN layers, which turn them into sequence features.\n",
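To make the column-to-receptive-field correspondence concrete, the NumPy sketch below computes the receptive field width and the horizontal stride for a hypothetical stack of convolution and max-pooling layers, then maps each feature-map column back to a pixel range of a 320-pixel-wide input. The layer configuration in `LAYERS` and all helper names are illustrative assumptions, not PaddleOCR's actual backbone. The last helper turns a feature map whose height has been reduced to 1 into a left-to-right sequence of frames; PaddleOCR's `Im2Seq` neck appears to perform a similar squeeze-and-transpose.

```python
import numpy as np

# Hypothetical backbone, width axis only: (kernel_w, stride_w, padding_w) per layer.
# Illustrative assumption; not the layer configuration used by PaddleOCR.
LAYERS = [
    (3, 1, 1),  # conv 3x3, "same" padding
    (2, 2, 0),  # max-pool 2x2, stride 2
    (3, 1, 1),  # conv 3x3
    (2, 2, 0),  # max-pool 2x2, stride 2
    (3, 1, 1),  # conv 3x3
    (3, 1, 1),  # conv 3x3
]


def receptive_field(layers):
    """Return (rf, jump, start): receptive field width in pixels, pixel distance
    between adjacent output columns, and the pixel center of output column 0."""
    rf, jump, start = 1, 1, 0.0
    for k, s, p in layers:
        rf += (k - 1) * jump                 # each layer widens the field by (k-1) input steps
        start += ((k - 1) / 2 - p) * jump    # padding shifts the first window to the left
        jump *= s                            # strides compound multiplicatively
    return rf, jump, start


def column_region(col, rf, jump, start, img_w):
    """Pixel range [lo, hi] of the input seen by feature-map column `col`,
    clipped to the image (the rest of the window falls on padding)."""
    center = start + col * jump
    half = (rf - 1) / 2
    lo = int(max(0, center - half))
    hi = int(min(img_w - 1, center + half))
    return lo, hi


def feature_map_to_sequence(feat):
    """(B, C, 1, W) feature map -> (B, W, C) sequence, one frame per column."""
    assert feat.shape[2] == 1, "height must be reduced to 1 first"
    return feat.squeeze(axis=2).transpose(0, 2, 1)


rf, jump, start = receptive_field(LAYERS)
for col in (0, 1, 40, 79):
    print(col, column_region(col, rf, jump, start, img_w=320))
```

With these assumed layers the receptive field is 26 pixels and adjacent columns are 4 pixels apart, so a 320-pixel-wide input yields 80 columns: column 0 covers pixels 0 to 14, column 40 covers 149 to 174, and column 79 covers 305 to 319. Neighboring windows overlap, and their left-to-right order matches the column order, which is what lets the RNN read the columns as a sequence.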

"\n",


"\n",

"2. Neck:\n",

"\n",

"The recurrent layers build a recurrent network on top of the convolutional network; they convert image features into sequence features and predict a label distribution for each frame.\n",

"RNNs are good at capturing contextual information in a sequence, which brings three benefits. First, using contextual cues for image-based sequence recognition is more effective than treating each character independently: in scene text recognition, a wide character may need several consecutive frames to be described fully, and some ambiguous characters are easier to distinguish when their context is visible. Second, an RNN can back-propagate error gradients to the convolutional layers, so the whole network can be trained jointly. Third, an RNN can operate on sequences of arbitrary length, which handles text images of varying width. CRNN uses a two-layer LSTM as the recurrent layer to mitigate vanishing and exploding gradients when training on long sequences.\n",
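The recurrent part can be sketched from scratch in NumPy. This is a minimal illustration, not PaddleOCR's implementation: it runs a standard LSTM cell forward and backward over a `(T, C)` frame sequence and stacks two such bidirectional layers, in the spirit of the bidirectional LSTMs used in the original CRNN design. The gate ordering, the uniform initialization, the input size of 64 channels, and `hidden_size=48` are all assumptions chosen for the example.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_lstm(input_size, hidden_size, rng):
    """Random weights for one LSTM direction; gates stacked as [i, f, g, o]."""
    scale = 1.0 / np.sqrt(hidden_size)
    W = rng.uniform(-scale, scale, (4 * hidden_size, input_size))   # input weights
    U = rng.uniform(-scale, scale, (4 * hidden_size, hidden_size))  # recurrent weights
    b = np.zeros(4 * hidden_size)
    return W, U, b


def lstm_forward(seq, params):
    """Run one LSTM direction over seq of shape (T, D); returns (T, H)."""
    W, U, b = params
    H = U.shape[1]
    h = np.zeros(H)
    c = np.zeros(H)
    outputs = []
    for x_t in seq:
        z = W @ x_t + U @ h + b
        i = sigmoid(z[:H])            # input gate
        f = sigmoid(z[H:2 * H])       # forget gate
        g = np.tanh(z[2 * H:3 * H])   # candidate cell state
        o = sigmoid(z[3 * H:])        # output gate
        c = f * c + i * g             # additive cell update eases gradient flow
        h = o * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs)


def bilstm_layer(seq, fwd, bwd):
    """Concatenate a left-to-right and a right-to-left pass: (T, D) -> (T, 2H)."""
    out_f = lstm_forward(seq, fwd)
    out_b = lstm_forward(seq[::-1], bwd)[::-1]
    return np.concatenate([out_f, out_b], axis=1)


def two_layer_bilstm(seq, hidden_size, seed=0):
    """Stack two bidirectional layers with freshly initialized weights."""
    rng = np.random.default_rng(seed)
    x = seq
    for _ in range(2):
        fwd = init_lstm(x.shape[1], hidden_size, rng)
        bwd = init_lstm(x.shape[1], hidden_size, rng)
        x = bilstm_layer(x, fwd, bwd)
    return x


frames = np.random.default_rng(1).standard_normal((80, 64))  # 80 frames, 64 channels (assumed)
context = two_layer_bilstm(frames, hidden_size=48)
print(context.shape)  # (80, 96)
```

Because the backward pass reads the sequence from right to left, every output frame depends on characters to its left and to its right, which is the contextual information described above.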

"\n",


"\n",

"\n",

"3. Head:\n",

"\n",

"The transcription layer uses a fully connected layer followed by a softmax activation to produce a prediction for each frame, and these per-frame predictions are then converted into the final label sequence. CTC loss is used to train the CNN and RNN jointly without requiring the frames to be aligned with the label. CTC relies on a special merging mechanism: after the LSTM outputs the sequence, each time step is classified to obtain a prediction, and because several consecutive time steps may correspond to the same character, identical consecutive results are merged. To avoid wrongly merging characters that genuinely repeat (such as the double `l` in `hello`), CTC introduces a `blank` character that is inserted between repeated characters.\n",
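The merging rule and the loss can both be written in a few lines. The NumPy sketch below assumes class index 0 is `blank` (this appears to match PaddleOCR's CTC label decoding and the default of `paddle.nn.functional.ctc_loss`, but treat it as an assumption). `ctc_collapse` applies the CTC mapping (merge consecutive repeats, then drop blanks), `greedy_decode` takes the per-frame argmax and collapses it, and `ctc_label_prob` sums the probability of every frame-level path that collapses to a given label using the CTC forward algorithm; the CTC loss for that label is the negative log of this value. `brute_force_prob` is a hypothetical helper that enumerates all paths and only serves to check the forward algorithm on tiny inputs.

```python
import itertools
import numpy as np

BLANK = 0  # assumption: class index 0 is the CTC blank


def ctc_collapse(path, blank=BLANK):
    """CTC mapping: merge consecutive repeats, then remove blanks."""
    out, prev = [], None
    for p in path:
        if p != prev and p != blank:
            out.append(p)
        prev = p
    return out


def greedy_decode(probs, blank=BLANK):
    """probs: (T, num_classes) per-frame softmax output -> label indices."""
    return ctc_collapse(np.argmax(probs, axis=1).tolist(), blank)


def ctc_label_prob(probs, label, blank=BLANK):
    """P(label | image): sum over all paths collapsing to `label` (forward algorithm)."""
    ext = [blank]
    for c in label:
        ext += [c, blank]  # blank-interleaved label, length 2 * len(label) + 1
    T, S = probs.shape[0], len(ext)
    alpha = np.zeros((T, S))
    alpha[0, 0] = probs[0, ext[0]]
    if S > 1:
        alpha[0, 1] = probs[0, ext[1]]
    for t in range(1, T):
        for s in range(S):
            a = alpha[t - 1, s]                # stay on the same symbol
            if s >= 1:
                a += alpha[t - 1, s - 1]       # advance by one symbol
            # Skipping a blank is only allowed between *different* characters,
            # which is why a repeated character needs a blank in between.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                a += alpha[t - 1, s - 2]
            alpha[t, s] = a * probs[t, ext[s]]
    return alpha[-1, -1] + (alpha[-1, -2] if S > 1 else 0.0)


def brute_force_prob(probs, label, blank=BLANK):
    """Reference value: enumerate every path explicitly (tiny inputs only)."""
    T, C = probs.shape
    total = 0.0
    for path in itertools.product(range(C), repeat=T):
        if ctc_collapse(path, blank) == list(label):
            total += np.prod(probs[np.arange(T), list(path)])
    return total


rng = np.random.default_rng(0)
logits = rng.standard_normal((4, 3))  # 4 frames, classes: blank + 2 characters
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
print(greedy_decode(probs))
print(-np.log(ctc_label_prob(probs, [1, 1])), -np.log(brute_force_prob(probs, [1, 1])))
```

The two loss values printed on the last line should agree. Production implementations work in log space to avoid underflow on long sequences; this sketch keeps plain probabilities for readability.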

"\n",

"\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### 2.3 Code Implementation\n",

"\n",

"The overall network structure is concise, so the implementation is relatively simple: the modules can be built one after another following the prediction pipeline. This section covers data input, backbone construction, neck construction, and head construction.\n",

"\n",

"**[Data Input]**\n",

"\n",

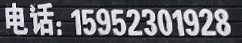

"The data needs to be scaled to a uniform size (3,32,320) before being sent to the network, and normalization is completed. The data enhancement part required during training is omitted here, and a single image is used as an example to show the necessary steps of preprocessing [source code location](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.3/ppocr/data/imaug/rec_img_aug.py#L126):\n"
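The arithmetic behind this preprocessing can be checked without OpenCV. The sketch below re-expresses the width rule and the normalization used in `resize_norm_img` (defined in the next cell) as two small hypothetical helpers, `target_width` and `normalize`, so the numbers can be inspected directly; the sample image sizes are made up for illustration.

```python
import math
import numpy as np


def target_width(h, w, img_h=32, img_w=320):
    """Width after resizing to height img_h with the aspect ratio kept, capped at img_w."""
    ratio = w / float(h)
    return min(img_w, int(math.ceil(img_h * ratio)))


def normalize(pixels):
    """Map pixel values from [0, 255] to [-1, 1]: divide by 255, subtract 0.5, divide by 0.5."""
    return (np.asarray(pixels, dtype=np.float32) / 255 - 0.5) / 0.5


for h, w in [(50, 100), (32, 500), (64, 640), (40, 30)]:
    print((h, w), "->", target_width(h, w))
# (50, 100) -> 64, (32, 500) -> 320, (64, 640) -> 320, (40, 30) -> 24
print(normalize([0, 127.5, 255]))
```

Since `resize_norm_img` pads with zeros after normalization, the padded columns correspond to a mid-gray pixel value of 127.5 rather than to black.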

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [],

"source": [

"import cv2\n",

"import math\n",

"import numpy as np\n",

"\n",

"def resize_norm_img(img):\n",

"    \"\"\"\n",

"    Resize an image to the network input size and normalize it.\n",

"    :param img: input image as an HxWx3 uint8 array\n",

"    :return: (padded CHW float32 array for the network, HWC copy for visualization)\n",

"    \"\"\"\n",

"\n",

"    # Default input size (channels, height, width)\n",

"    imgC = 3\n",

"    imgH = 32\n",

"    imgW = 320\n",

"\n",

"    # Actual height and width of the image\n",

"    h, w = img.shape[:2]\n",

"    # Actual aspect ratio of the image\n",

"    ratio = w / float(h)\n",

"\n",

"    # Compute the target width while keeping the aspect ratio\n",

"    if math.ceil(imgH * ratio) > imgW:\n",

"        # Wider than the default width: clamp the width to imgW\n",

"        resized_w = imgW\n",

"    else:\n",

"        # Otherwise keep the width implied by the aspect ratio\n",

"        resized_w = int(math.ceil(imgH * ratio))\n",

"    # Resize; cv2.resize takes the target size as (width, height)\n",

"    resized_image = cv2.resize(img, (resized_w, imgH))\n",

"    resized_image = resized_image.astype('float32')\n",

"    # HWC -> CHW, then normalize from [0, 255] to [-1, 1]\n",

"    resized_image = resized_image.transpose((2, 0, 1)) / 255\n",

"    resized_image -= 0.5\n",

"    resized_image /= 0.5\n",

"    # Zero-pad the remaining width up to imgW\n",

"    padding_im = np.zeros((imgC, imgH, imgW), dtype=np.float32)\n",

"    padding_im[:, :, 0:resized_w] = resized_image\n",

"    # Transpose the padded image back to HWC for visualization\n",

"    draw_img = padding_im.transpose((1, 2, 0))\n",

"    return padding_im, draw_img\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [

{

"name": "stderr",

"output_type": "stream",

"text": [

"Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).\n"

]

},

{

"data": {

"image/png": "iVBORw0KGgoAAAANSUhEUgAAAXQAAADbCAYAAAB9XmrcAAAABHNCSVQICAgIfAhkiAAAAAlwSFlzAAALEgAACxIB0t1+/AAAADl0RVh0U29mdHdhcmUAbWF0cGxvdGxpYiB2ZXJzaW9uIDIuMi4zLCBodHRwOi8vbWF0cGxvdGxpYi5vcmcvIxREBQAAIABJREFUeJztvWmMJdl1JvadePGWfLlUVlZW1tq19UKySZFNuqdFLRBkamhTHMM9HghjcowRfxDogS3CkiHDwxkBhgQY8MiQJXsAQYP2iB7OQBDFoehhQ6BHpqjWaCRITTa3Xtnd1dVLVXXtWbm8fPkyXry4/nFvvPNFVUQuXVmZlU/nA7LqZmTEjbtEnLjnu2cR5xwMBoPBsPcR7XYDDAaDwbA9MIFuMBgMIwIT6AaDwTAiMIFuMBgMIwIT6AaDwTAiMIFuMBgMIwIT6AaDwTAi2BaBLiKfEJFXROSsiHx+O+o0GAwGw9Ygd+pYJCI1AK8C+DiACwC+DeDTzrmX7rx5BoPBYNgs4m2o4zEAZ51z5wBARL4E4HEAlQJ930TdHZ5plfyFPy5SfrgUUlrkX4TqEOHz6Rw631W0JQrnS6THBmlWcX8FfzeLH9GKPlO7nMtKj5chElW6Cv2ke2aFxpTfnk/hO3K/3fBcPZnvGRXqc1TOSo8zpHJO3x1kg3Hzbam8muqpOn/9h3Rz9y+fjKpLy56jwrk8zoWaK57zqi5UNqDi/GH7Nj55M+NSaGJVnfwe5+8ovQuFd6jYgvK28PtC9xFHY1fV9OIk3NZWfkf5eFYy/1fmu1jsrG36DdgOgX4MwHn6/QKAH731JBF5AsATAHBofxNP/vJHwh/oRRcadEeTUTIXmatx3VQmgQY9p0bnx7WGlmMdAq4ny2hwI62z2WwCABoNrWPh5pKeWysXqIPBYFhO+1TOtHMx3cfF2t5ev19af+Ty5lH7Ym1XvV7X+ug+a2tr2q6UHjSqZ5CVC+l66D+gAiUZpMNjjbqe24y1vmyg90ySZFhOU72WUfgwROXMIAu0/JwqAcF1SOFjqXUMCvKx/Pyqa7Ps9oe0qg+bqWMz/ednKnN+HBsxHaMx5+eZ6yv0Py0XurWaPossxQof6ZJ3dEADym3lvsU1fUar+N9MLy3UU1hIUBsbDf+M8vPPz7yj+9ciff+5nzwX/HxLRu80ywtavcRNEqt5452+w02SHSz/1ui9yOXb537jz7AV7NimqHPuSefco865R/dN1De+wGAwGAxbwnas0C8CuI9+Px6ObQ4FvWUz35fN699CX/A6rcR5MdVZ0S/3zeXOsDy/sDgsr/X065qvLvjrPzczPSy3Wkoltalci3llLaXlwgqRfmm1xvQ4ra7ykiNNxJGG0lnVlcX8/PywfGN+YVg+f/6CXkur0l6vNyyz1jM2Pj4s52PQHpsYHps9sG9YPn360LBcozZKTcclAq0oaWKywupPy7WIV7R6bSPML6/gBjz/TZqLSOcuHeg9azV9Ror9p/kiFZmnK29i1SqbV7C84uTnKBvQKp5ehWadtKKBPospaW45FZb2qT+krdVAK86+9o3BmqvQu9inMYqpLYOU5o4anPcpprEYsFZY0Ep1zGN6/njl3hjTuYsbtBikd6fPGnCgQPnY1KQ+l91ud1guaJ90z7VU29sg7b7Z0v6zVrBKYxFFtNIPWpJEOrbjdX7+dA4HTJEFTXyrbON2rNC/DeBBETktIg0AnwLw1DbUazAYDIYt4I5X6M65VEQ+B+CPAdQAfME59+Idt8xgMBgMW8J2UC5wzn0dwNe3dE2JMlG5EV2yQVXj3elNWMSwKhyRmrecrA7L//HZ7w/Lr72htARp68j38GJq6+FpVcP+wd//e8Myq5yrKyvD8sTUpN5/Ve8vFVQAEqIc6kR/TEwBALqrqh6+cvbNYfn1N5VOuXZNKZf5JaWW1hLaLE2UonEZqb91VRcjLGu7goJXJ9
V+fFz7cN/Zt4blY0f2D8snT54elg8cUFqG6YTuirYxog2lRoM2Wvt0Tl4Ht47m+a033xmWf/iatqtHG4EDunp8QmmuWqRj9MiPvH9YnppU+qlWC5RHTze2WmPtYfkczUXS1/vcXNA+MM0Q11VtT3tKEcxM67Nz4ujhYbkRzl/p6hw2mTZZ0zEcb2u7mX9kKiImmi8lyuHmTX2O37pwWe/f1PPbY77+5SWlLacmdSyOHdF283O+r6XtunbpujZxoM9cOzzzQFGGEOOBOGyKRrG+lys8zwOiooijWCO6yhH9NejTe0HUUb2h9Sf0HrXaKjDGQhuErVzWiIoiOpfYR7Ta/lmItki6mKeowWAwjAhMoBsMBsOIYFsoly3DSYFG0eMVfEnBEqaEqqlwbGEUrR+03B/o+WtUXiXGIyXb09yet05/H4gOI6t+NVKz4gpbYra4KTgF0U752KSq2RlZv1wJNMoLL782PPbCy68My4srSuf0iUJZ6qialxWcL8hunyw+aqR+shVF0gv1O1VDx5a1fQvzl4bli5dU5b50Te32T59W+uXo4SPD8sSYqtbiyD53oH2qk8VFHNrryMlrNdF+3pjXe56/qFTBIk10WqDltDwxqVTI0aNHh+VGS1X3Vm65QDp8QnPVIzrrP/zlt4blzgrNBT3+dZr/Bln2HJ5V6qpPdZ457Q3NGg0d5xr5BLCNOVtFRdA6IiF780zLffKb+OGrSle99EN97ro6RRgfb4W69Z5nTh4bltsTahU1VtM5fOGC1v36q68Py/PzN7VPRKMwRdIlqgth7CKiMJvkP1Er2KxruU5zV2P/GLBVDp1DlB6xMqhTG3OrrFpN6xuje0bkN7D/gL7nx07652xQyUOXw1boBoPBMCIwgW4wGAwjgt2hXO4AOb1SRa04omf4FHZmYKcNVmiywvlajstiX5B2ys4+CXEuZKhQsGAZsPswO7+QKujqRHnUddf8yk3d/X/5lbMAgO+9oFai5y+p08i4shZojakVQkQqdI3oooToioQcKwaJ1pmShUaulTbJ2YPdntdIC75xQy0o+skbwzJbH3E4hfYxtYSoE/3TJ4ufmNYjLqcLqD/8AHDfVla1YV012kBK88/OP1FNaRF2shF+fcL9M3aNp3v21lQnX+1pmVgDkCEKIqIF2ZP8yoJaX83d1PLp++/3hZjd4ak/YPqFrDn4JSEKwQk7x1BoB5ojbu+CsiLorfrnpU3+cDwuDXqea2RCdnFFLYFevHZtWH7nkt6oPaGUW0QUSa9H1Fled40o1Kv03NB4MhNaiPdUHvmjEJ+Iz+EwF/WCQ9kgHNNz2bKGy2fOHBiWxw54Woqp4s3AVugGg8EwIjCBbjAYDCOCe4py2VQo1ZJzK+kX5lOiuLQsNY7BwOdQPRxtL+ygC0eGJA4n5WhwHEuDdC6Ot8J6cUyqKFMxlyn2ynef16jEL778MgBgcZmsTNSAAHWKgcH8z9iEHmcqKCariYQsRAoxVlgDDMedK7/uyAF1FOmvqaq8Ss4Zly5f1Xa1tfExRZs8RPFh6g0ao57yJUPLDR5z0ok5BglbEEXkIdYkykvIyoXjd9RE6x+Q5VDucJbRsYiooojipHCMlSZRaxlxVDE9IzGp80tdHcc3LqgV0dwRHz7pxFG1FCrGFCGLmwFTbhzWmKMgMv1CJhzUrgL9EJWU+R1y5ZRPj96/LlV4g2ieeY6DQ68oO0glNYrgGWixjBtAIWBSppP42abIn3y8xhFOWRYQLeaIdmTqrh7uxXVkFEuH2DesUp+zcL6rkG1VsBW6wWAwjAjuqRV6VcR4DsKvq3G2nwb9Xcu8QC+s6GnFn9HXesBlurbPG63Du0vp39do02xQsCvnjRKKx0yrNcT6lV9Z1k3El147Nyx/+7kXhuXLl/0Xfb/upUBoJXBtXlcC9YaW9x/Q3dIzZ+4flotx1bUthbjqNI5Li961++JFDa554/KNYbm3pn3IaDOV84HcXFS37jff1k
0xKcSsVlv140e0s31a0Q7C88Ab2xnHo6dd7B7tRHZWefeL47rr4ZhshXtr5RudcP4CXrVLg1bopH0t06ZskmkdvUTvMzamWsE4bUT2Ul2hX7immtv06z4lwez+2eGxJoVsqJG9d5rqszCgVWkh8qDoALC2styh1SVtehd061CNI7+OlEOJ8iqfNJfWlEYtrVN4DCyqDwFPV7+nY9HhjeagJLJhA49nOiiPwV+niIgSU9tJi+EVMNu5X1zUZ52mHe1QHqPXvMaCiQTNSkqhLxKvxWZmh24wGAx/M2EC3WAwGEYE9xTlsqmNzqEd+sZ1uEK6KD2H6RK2j03J95pMteHYnz+cE0Xl6iRvxAhtsg5ItWZ3XqnxOeTWf12pixd/qG7Q1+ZJRQ6XcmS8VYreyHbgswfVJfx9731oWH7Pe7RcI8olpqiODWojuz7n0fmuHlc1/+pltR9+hTZwOe0eu7inNBecYEQuaHTEMYpeN0HlmFziJVA6hdSBUj4XvBE9oA1dtivu6b4tyGu8sOnpCnbo9dvqc0Qz9chou0mbeT2iMAb8LFLdfaYXKcJfKjqm74QwEFdvaITDCYr2WKeQAPxu9VKlCpg3KdjT0/2bLa1nrK1t6ZHfQr5byu/FoLDhqteNtbW+QV/raBPnRYEvi2l3aVNyokkJMSZ8/Y5TTVJ5uaOb6Rw+gelPHiNOmcjhOZrjHGWUU9nRpnPgfVpE4bToQatR2IzxSTUKKIuMshnYCt1gMBhGBCbQDQaDYUSwe5RLhUXL8M8F+qW2wd85wUV5vcJ5N6mckdrKFi+cAL0QETHUT9o0BhwagGx/QQkL+mSfzeEBoqaqbQmp5RcuXtHyeY0OWGM73BDtL00KNgZDPHhaI/N9gBIz3H/mhJ7E6h/ZG2cUPq5PbtUgm+jxoBafOqqUy5EZtRknL3Scf0ttpq+Ry3qNVOGMKLKFRVWLL76jtuqTlNP06GG1eMkj4gnZNnHShVqD3c35ONmykzpdI1osqrENO6vlZAkS1kZMwxTc5zkhC1lHJAOlPNKC5ZS2ZYHy3jItwhTR+cuecnn1rIZVmJ5QOmNuVi1IJNaxyECRLOlBL9BJNKbc/4yic6acpyG8PMT4obuq1NJKV/sTNZUifOSBB4blg2TlstLVa6Ma5xSl+aI5nWj7Z1Do2XrjDY3kePZ1HaN3LilFmBUS5VCCC6pn//TMsHz0pIanOPOgRpN0EeUJDdxdLdNjY0QVRpn2bXJMx/zonL9PnazNNgNboRsMBsOIwAS6wWAwjAjuKSuXKmzG+qUcZOVAXIWQGhNxpgqmS4oeBNqWcLorOCfxuaRykyUMR+lb61OOzJidRlRJvXZDc4AuUtKIfdOqWsbBiWhxQWkDMnjBA6dODcsPndZyg3bckzW9dozonzpZGQxYnyZrnTyqXNykPKcU3P/973vPsNzpaN+uUsKClBxbYrLgWCNaanFFaYkb5GRy4sR92q5AnWWcyIT90SO2bNHDHIWR81uOkUs+J/VgaxWmyGphrpnaaxLNw0kd2OInobqjBs0tRSFkK4sa0zxEF64seguh1986Pzx28rjSAFNT6kzWJAsmppky4nNcxdjxGPVp7Nj9pR6erzpFOxwj65g6hVLgCKODRJ/FuTF6R4k64iQcqzT+TBHFgTrkHLnvowQbS1eUwrzeZ6swSmRBMmKC2v7gcQ2t8MEPfXBYHp+m9taIugymZkLWT216/7KC8x3l6x3m161I+lOBTa/QReQLInJVRF6gYzMi8g0ReS38v3+9OgwGg8Fw97AVyuVfAfjELcc+D+CbzrkHAXwz/G4wGAyGXcCmKRfn3J+LyKlbDj8O4KdD+YsA/gzAP96oLpFi3BBFxfdlA4uYYuV0WcGygJJQEOXBUQ2ZWmHHojrdvxmiAGZUB1stLHdUhTpAjgJ12oV3tFOfkkp16bKqgpeuqJUL+W9gktS/pWVPXfA++IeI5niYrAbGOZYMUSgtihMSUWAJToLRKE
T7p2JQC6OC35U+UpOkKt93QlXeRXIgunBJ+8ltYSrmyhVN6nF47uCwfJWcr44dnfP3p8ZwTk+huCYRRV4cOFVzmy2KTkkOShyzg+PQtCeUxnDBsYafa3by4v40OfPDTaWQOGxHxFkY6Nq1AdN1eq9W27eRc+Q+96I6dk3tU6uR+08qVZV1dC5SzrXLOXVp0scpv22dPK6aLUogsuJphsYEOaFR4JdCjCXqT5OeP459kmZ6LTsLNjiCKdcq/vwxygzSWVGHqzblqB2nwCrEBBWSZ6QUP8f19P2eIuoqpiQwklFymDB3UcZxfyhmUCFqK8mrnKrJ7hLlUoFDzrncHu0ygEN3WJ/BYDAY3iW2zcrF+Z3Lys+JiDwhIs+KyLMLlHXeYDAYDNuDO7VyuSIiR5xzl0TkCICrVSc6554E8CQAvPfE1Nb0iG0AW8cUElZQmdVljqXA5+QKEgf3zyoYIbYaSAtOG4o+WWWw9QvnEmxyGFaikVrBomRqTI8d3K/70lOU4KJODeavuJAqGJHVRI2dSeieEauI+THuUIE30DvNzqhDxoED6hD0zhV17FijxBeNQswSrefGgqrOa+z8FOgVTqRQyBfLFk9STsXx/PM4VzmuFY6Hsqvw/KlxKN9+Qpfxc0mxT3j+idqYmdD5rZP1yfJNP47drlIo80tavnxV6alDh1SRbo6po1YGpRbYgqVOVNigML7lyTZzQxRX4hB4G6jPQpRLzHFl6Zljy7FCvBk6J3f4GlDdnOul2aJ5iSn2TiGqLluocYhjpejWelqeIIos4orC+5URtRTTM8LvHMfSdcPjO0u5PAXgM6H8GQBfu8P6DAaDwfAusRWzxd8H8FcA3iMiF0TkswD+GYCPi8hrAP52+N1gMBgMu4CtWLl8uuJPP7P12wpKvyUVOUXLG7S1XHusflfRLMUynb/BZy8j2oLPHZCqNsjYCULB1g+9Xq/0OGcPEhqjPO/kfnIaObhfqY1xoi1cpup0RNYMINW+QLmwNu2YiqG8myVOXoU0rvTbvn3UxjmN/VJ/TfvWo3i/LQoxK+R8skA0QmdFxyt3IolqrIZzqFtuGMUAobpjUpszCubDar5U0HUiefheHauCvxk77XBcD8r1usY0Hs1/m+b3+PHj2l7q37lVr/4vLWqcnMVlreP8RY2lc/SYOsccOaT0V8T0H1lCNSnbEtMcGT2LBSIubxYxLpmUU458XUq/ZRRXyBGn5wphfRVlc81xmhxZpAwoA9WArFwoGRUyUfqPAgyjQxYsnD0qypSWapCDYJbLAM5RyhnYQP3k9ob+y1ZkIsz132AwGEYGJtANBoNhRLArsVycc4X4LDt+/woLFlbXSRMvJF6W3KSFVciKvnA4UrZaiKjyPmV6WSWnBU5kG0dKnXBcj8EgOFC0VN3jjD41UlXX1tiygmgB3pHnfhQSb5dbYuS0VyRFcmH4Z+pznWLWTJHDEbeXY2/wPQtUCI3pcldjf/SC5ciYEM0Ulc9LIQE1zQWXbzF50MNs/SO3W7Rw+F4ew5ieLQrlgVaL3MJSirFDljCtWNt1+ODcsFyniq6+8zYA4CaxaRy+9vINdc46T0m9p/cptRVT/J4+xYdOiP5hLq6QkJvulVu8sAVRVcJ2tjhjHxqeOzasGuB2K6vb6gnPN89+j2iuNaJN1ugkpryY0e2TvFijbEQJJRXPChQwZ88ehD4w5cQWZHycaNmC9cvmYSt0g8FgGBGYQDcYDIYRwZ4In7tVi5YyZGSpURWOt9LKhZ0fohLLDlKnOAJvRmo7Wz/EjXIKgR1rOEsSZwlKOTxs3iemh+hcVk+Z8qnFZE3AY8GWGGSjwcqfFBxr8pPLrUlqFFZ3jXb+YxrDcaJfFlco9gk5YmSFwdDO5kmqAaAfnLLGyLKHUT3neo4U5pyvpaxWbNFUQr8M6NyYqZ2IqRgKu0sjVmMLjgGfo9dOjWscmHGiq2ZnfEaiyw2aH6p7mWK2vHn+7WH5wIxa0LD1S4
M8cdYoZG6tziGmq5y1iv8DtzyLhSeKkqSz9QezWVn5s8hwRZsXf664W47c3pZ+4TjVQG1PqZw4toohxza6NqLfslArO03VCqGR3G3n+vbmtJFZuRgMBsPfSJhANxgMhhHBrlEu5ZYhd06tVIEpByflmU6KyXD1aC263cql0HpS7QrxYHg3nx2CiJdxpMJlKVuiUP2F5DGc7Nq33RHNIgXHGqqk6jihYD/AFEVV/JIwFqzacqLjel2tJrI1dQJip6UWxSnheCd9jmtD5YjuxY5YRVrmdhQSIFfMS2WZVGtXCIPKdQbVmi2IaOLIrwWOLJiylGkxPYfpFxBdF1F5kuiX40ePAgAuvK3JkFc7GmuEnbYuX9WQS2/Q+e1JtXiZPaDxXgZEucQU10XIyqbMcqwQS4fGn8sFqoaolVrG70g5dcJgijA/h99szlLGWZJqFSKHLWvY4Imfy1XKNrS2T6lDkEVN7lAlVCG3K6qiPF0em6i8fVWwFbrBYDCMCEygGwwGw4hg96xctsFyZStgOmNAYT2zrPybVoiMyupkrhYVrCDYgoIrKQ+lGtfL46EUaAMyJuBMMqDkxbVAaQg5nhTijpBKzM5RacE6g/pBTS86StBfOAtQPjBshcD0E3EIWYWjDluNsJNVo6kqrCO9uEdqLse7yeeAKS+2moBjKoTGk+ORMC1A7WUqpBiThywXSqxcCs5RNC9N6k+XMyPRkHOclmygz25vVa1V4kidjOZCJqczZ84Mj517/bVheYEol+6q9u3tCxeG5f37p4flqUkN08sJqxtEuRQcsQg5zVBFswxQTrnERLPEFfNSc+XvVIFGDM9ARscalLGqJfoOUVTdQvJwfr9jfi0pefmAnr+E49CQ81FursYxWQY05yyA2ZqJqditwFboBoPBMCLYpRW63LICXB8bfXWqauLrOBdkxgu0im0WvjbiTcFQrmq/0IZnIQA/22rTCoE3ZQcZ2/VSbkraRKuRe3ae4MBFvPpWOOrFoLhDNSzGhZ6WR8QrjAZ1ezDcuKHVFC+KKUxB1aYlbzL1KWFFa5w0ESlfofPqfnjPQnfK55ZXP4VclLy6LmyKsoZCq8usZBOLtBVKr1pYQTYKG4sU4oEXdhw9kN3WKYGFkNY51fZ1njqptuSXLp0bljsahLGw0XZjXsMnXLysSTCO3KcbzpMNzY2b1bTtTmjTuxDtsOSNrVhlF1aoNHacYCQqvEflER75bdT3jq/jTWZ6/2jMo4oKWQ+J6QGL6X3lNhYewmF7yzd2s2LD6fbvjsGwFbrBYDCMCEygGwwGw4hgVyiXKIrRnvSB9ZeX1VZ2bEztahvs7s4RBkMUukaDqA3OLZmoqhjVtHtpn2gGsn3OKKr9eF034lqRqpZJV+vP25hGqm46UuEWVrStE+PqVu3IJnt+STf/0oFeOzauyQaWV94clgdjtFlElE4SNss6ND7dNVXP0wFtOIGSZLA2R4kMeEORwXRJn+mH4MNcoIFo86lLduI1GvN0maLdEZ3kaON2jaIN8mbpGIUKyEjpTgb+nP5A78mJMWoNUo/ZKLx2u7s1AMQcKZE20WVAzyVFJMw3MeucVIQGOiGqJmrqGGVOKSQex5SoKKFQAWP0xq5156ntvv6jh9SW/NABHavuotIpFNQTy0tafvuC1jdzSMuzqb6X8x0doxUKQ7iS6Fg0o9BI2mWMqP/7W2SznVCIg0K0Sy6SfwY/fsL0y+2bjj3aZC1c15wclnv0jmRMXSba3iY1a9CjxB/0XDT7Wo6IinF5rluic4R+4fARmWMfkxJ/l03AVugGg8EwIjCBbjAYDCOCXaFcMpdhZdWrxpeuXhsej+j7wrbafXIbR6BXpvfpzvu+KVWh6g21LE1p57nGqjXvlJMtKduVUkrDQtS83FWXkzdwfQXagkMvsv0JnROT+h3XymmRHkVhbNA5UaArVlbUUqGzTPr0LFkWEP006Gl9TW5jVdIOzsHJ+R0DzTAgFZIpL97Br5
NlT5X98oDyeLKNOdNlrTqp9kTj5OPOlifCCS4KLtZZ6XFGRLYNBQsGTuDBiRfyw4MCJ0C3KbfO4Ah/PCq80mL6h0MLxBydMISzYLf2U6ePDcsrFG3xwts39Z6UX2O5o3P3xluagzRqq306Yn2/YvIV4PfOBYpsQPRXn57hpKcUSoP4jIyoMEd+ANWrThpTNmIJ8zUouNJzxEa954BpDrZOKVi80PvNMoVDNbCFTsZ+LoPwP9XBFiyFhMVsfne7pc5msOkVuojcJyJPi8hLIvKiiPxiOD4jIt8QkdfC//s3qstgMBgM24+tUC4pgF92zj0M4KMAfkFEHgbweQDfdM49COCb4XeDwWAw7DA2Tbk45y4BuBTKyyLyMoBjAB4H8NPhtC8C+DMA/3i9ugaDDJ1AE1x4R1W7t85p4P2Y3Nn7CUWbC/+fOK5uzw+/9z3D8v79qiAknJczLlf565HuWtdJu2nSyPRp17oR5eocO0qoCtmMKI9mjSgMUpU5euN4S9sy0dabkhEPaqTbc7ty54N+TxNDrHbUbIEjvI21lJ7oJUrLsEs6O1llFS7WtYJqG1y8B7xTz8kj2GpD+8/5TQc0txzhjum3OqmlbHEy1VbarRUcXiIOTcDJI5iKSfm43jMuSV6yHorOUqHMneCxqnBsK5xecFQqv2dB5S/kepXbbnTi+PFheZkciG7eUPolIyunZaLrzp8/PyzvO3hwWG5Pz+g9abxqZN2UBcqFnfkSnn/6wwQ9l4UojOxMxGNXoJn4nJKxq3BIqgKfwhRO1ap3q1E7dwLvalNURE4B+DCAZwAcCsIeAC4DOFRxmcFgMBjuIrYs0EVkAsAfAvgl59wS/835z1HpJ0lEnhCRZ0Xk2aWVpOwUg8FgMNwBtmTlIiJ1eGH+e865r4bDV0TkiHPukogcAXC17Frn3JMAngSA+49NuTSoSMsdpQsWVBMsRMRLOWRHODy5T3fQBzV1fGhNqHNOSpHpslW9T0x0SYOoGC5zIoWI80gGNa5W2Pkm5wi2QqBySs4REcXAiMnhZZy8Rph+WaXoeM3odl0w40h6S4t6TzremFKHkz5RPpzrtJhTk9VVTsLBySxuj3BYo0okVnX6Js3ztavXh+XlJZp0QjMZwzfLAAAgAElEQVRWFT4tRKfTdu2bVOumVjDXKMTmoPpipoKY8mDjo4JVSgUVVZEoYziMFUukrCKUn6swuGEDnc1kOcjP76/pOLfaaoVy+JDSJlcOqtPQyvLFYZkeF9TI++jaNbVEa6yqtcoqvVOFaIqhLXWix1LHMY7IyYscsbKUrNmqEqyAQLxIVohamt+nPMJhwYClItxoMYLqxg53G1Eu1Uk6NqJqtkbZbMXKRQD8LoCXnXO/SX96CsBnQvkzAL62pRYYDAaDYVuwlRX6TwD4hwCeF5Hvh2P/FMA/A/BlEfksgLcA/P3tbaLBYDAYNoOtWLn8Baqt3H9mKzcViRDXvSNCo6V0yfikUhED2gmvkWNJEmJGrCSqwq0m2o21TFW45VWl+JM1sj5pkfpNDi+cVICtBdKULVduj80QUdzNYvIEVU+RkTpJ59dq2t7xttIMB2Y0Dsz8eVWRU1KFJdfzycpk8ebN0vL0mDp+CMes4FguBYWNVFiyFuqTtUKu5tbIJKdByRDiMbVCeeuS0iyXrqgK31kuj71S5fzlUj1nuq1j1AgOUmy1IaTyc9/YsgQFioZpAY7xUk65cJ7afN457C7TBhxW906Su3D9BauYnAIkCq27onGS9u/bNyyfPKEORxcuKkNar1GcEoo9dOXKlWE5WtQ6O+SsFBWogeDkRXk8Hc1Fn2kmsoQa0PsiJSGrgVvCyrIjXCEOrb8/02ZsWcTEBOd9ZQc5ro8TxTDYcikthAdmq68gLwrUCnWBu1N6l63BXP8NBoNhRGAC3WAwGEYEuxLLRaII9eBQML1frVJ++Io6FlEU2IIyl2vU86SqX1vU8r5VVXdWE/1etSjuREzWF6yLOb
bQADsfUejd3BKG1G1WTyPO+jPg/JPsHUGmFRR6d3xcKZejhw8PyxeuaroZDo+bO0LVSCVcIcuDK9c0ZOrMlKrcLQ4fTKGHb0mIqm0nqxyO6zJMKcrxYMiaoUehRq/PK/0zP6/9IQYHMdXdWyXnI+rzgWntB4dbzi1t+jQvNC2I6VGPXDn9wk47rEKDrJUKeTLZWiZUFG1ChWaHoEJmLM6Aw9mrSjIj3dqWvCNsqbNG2Z2mKOzwkUPqlHdoVq1fVntKrbDj3M15fY4a42t0DsetKcnSE1H4ajq1SyFz1xKyICs4lhWS9uo5BeszPYVz2Q4zaXGu26jcWsQVrLm2ZlGyJSuXghQrv6dRLgaDwWAYwgS6wWAwjAh2h3KBqsj7aPed44dm5ORQJ905t9DoZ3qsl3KcVorvMcmxUVTNG9B9uj3dtV/u6k3TQmYeigMTduUHoqpnq6WWHYyqxMisZrGlRLOlzj9Hjmiy3+m31BKhd41Cn4ax4DCy3VXt59sXNE7O1KSO89E5VbPrlAA4JYqo0HRyuKrXtZwFHZn70O2pCn327TeH5VfOaXmenImkwdl4ia6hLEzjNL4nT58elhvN2x/fghrMFBLRQpzEOGNrHqqHKZeIuIAC5VII8Zo7kGgdUmE1xHQeO7YUnFwIBbXcVZjoBP6Hab46zZsjmm98TJ+X+8+cHJZ57s5fpWxINL+c1JszLDHysctIvPA4J2TBxvFeagXLFqqQrVXYQoiHt8QpqJgXvfxdrELRsah8jhxZQmXFBpTUU+5YVDHl7xq2QjcYDIYRgQl0g8FgGBHsCuXioCpQneiCVlOtFpI+JXumuB5JSMy7Sll35pd0N3+xq8dZVY4bWkePMiBdW9CwovMUTKbTJdW1zqF3vVpKEWtRI0qIQ4qCY1aQY0VB+SP9q1HXsZia1jDA4+NKi8h1jdWS+xM5msbFjlJIg74680ztU2eeWl3H+djRo9ou4rkSyrAUk7VCneiPKKj8PXIw6fR0PJ978eVh+dxFtZRgWmKMnKnY+WQQ6Ty2pzVmy/0PnhmW2REpCzr6oCr2BRth0BwNWD0uhPXIysvscFSIz5GXObsV375cba/x+azalze9aIkxYHop0AxEibTGdZ4dUyWiz9nJY0rtvfOOPiNvnFear9kg6oQoH3YmYopE6Q0eW46NQ+8FZ6/ihM0F559yVMVHyemd8vkpjn/lilbKKRrZMPZKRVu5fcwy8jnbEGrXVugGg8EwIjCBbjAYDCOCXaFc4BwkqK4H9qv1xYFZpRk6XXVyWFxWNb6WJ6MlJ6BXzr0+LPdInzlxXOmEQV9pgcUltRQ5/46qlhxjxBHN48hpYhD08kZbjx05qplhauS0VKe4Jmy1w0ly1yh5riOao9HUOCUPv/+Dw/L1eUr2e8FTKhQlFdMzasFyjWJw/PV3nhuWL9/QGDcnrqiTzzEar4OUpYZxs6P01o0Fbwlx7ty54bGz517Tcyl8MfkbFcL0Doj+SCiTUp2ShB8hWqBJzlcJJaROQ4hhR6pyRHX0iMKTWBvQpgTjKVEYzTGi6LqUeJucuJptmt++5+BYbebMWAWapcKZhc9pt/T+HBKXE6ZP79O2Lyz5Z6FOmb4GfX3O+Pkbo7g++/drHSdOEP1C78KVBX1eVgfkWLRGGbHIyasdxo6f7VlqK4fdXe7qu90kq506Z/iqYCIytj6i4zmlw2GdWdA1m0qRcswejhnUpkxKHD8nJeurCaK0ChZK9IBHga6UijjJBQqrJGOWu1tJog0Gg8Fwb8MEusFgMIwIdodyEQy3xUkrwtysxnU5e+6dYZmigOLAEa9SNlpq+bFIVi7Pv/CDYfm1s68My2OUjDkhtWmFrGVS9nJgiwtyMuqFuCI1UvJmKB5Ns6HqbEoqXD/htEuU4YfUrJSsYthZZ3ZGE/O+//3vH5br9VcBAIvzavmSrOl9WhNK23DGnHNv69i+/oYmAx6neB8NsgrifhQca4KzCqvQix3Krq
RaNvbvJ3qC+j+/oHPHGufJU0pjnTyp4V6JUUBCPMLQcYbVWUpH1CfHmtVE29uleCesNtc5Tgj57/T7+rwkVJZwUq1gKUPJzelB7xKF019jyoUstMiypEHlOGZrEYqDEso8V3GdKR+9f0IUTkZc2IF9+rwcO6zxXpa6en6TnotVCsTjaC6yLFjUpHRsoOUaUV5TZMHV6ygVylQE0xVS9DjSkrDFjYRz9Tqmf5hCiSuck9jJrsFOaQNOqq2C6eD0rNZfiEPj/2NrqqjCmqXMsWyrfke2QjcYDIYRgQl0g8FgGBHsUiwXhzjoIrzLfOrkfcPy629pKN2lRaUUlpf8rniDqAXOv8uJYZc7ahGQpGS1UrBE0CGQGsWH6dG1lD13qu3PObh/enhs7qCqW22yjnCkkvPuuJAFTZ2cdgYUY9RRlqSZaaVcHjqjsUzS4N30AxqfRXKOGp9kCkV37QeU1afW1LZ0qJ/pitIC7FjDDjJ5KOH6mMagObxP/77cVQuahKwzupS8iS0YHrhf5/9vfUQtew7N6Fh3lymWDanxWUjUzZYlFMoEMceMIQsKjvzLzmecdaZB1A3TBYOMsj3lzzFRNRz3JCNVPaY6aFoKdTcpC1Sb6Zc6q/McY3pwW5tqxP+w01JCNCNHct5PoYlPnVKa69K8OqhdodDHa0QXNcYoVHUYRw4vnK6pNQvPYaej94yZzqOB5CxghTJnBCfk9JKj+po00C2KQdMmS6VCnBpa6jYpkXujQNFQG/n4FsLGFOPElBzfIudiK3SDwWAYEeySHbpuYvEmB9vEfviDt2/+AcArr/nVwuUrusqYUvN1TExqHQUbb95AK3wKOcSjfqFTsuHljZP7jvvEE488/ODwWLupdTTp3IQ3gtjelJYuGX3aebVacDfv66ZUo64nnQhtcZTz89ybbw3LV6+ru/0q5SJtjZHhOmHAyzWalwaFPuBNx7WwuZbQxmK9SSt4Gmbe2OYgiWceUtv3H/mAzvlh8k9wqS7pY6dj2oxpAzTYkPMmc8wr8ez21eyt5YjOH9CKusbdp43IPj0j+cqtkIylEL1Sr+ONywFvvvZu3+QEgBaFahgMuB56vsL9eV+f79OKOWKontRPKMTChLb+8KxqhccOq09ClzZUB5QnN6aHd6wehbZqW4TGVjLaoOTnnJ8/6j+/C7zRybIjo3IenqEQPoGu41yrXQqVQQv0QkTIAY2RcGBV0pAKURjpGSxTIgrZVzkMQcSr9bx8l+zQRaQlIt8SkR+IyIsi8mvh+GkReUZEzorIH4hIY6O6DAaDwbD92ArlsgbgY865DwF4BMAnROSjAH4dwG855x4AcBPAZ7e/mQaDwWDYCJumXJzfScx33OrhxwH4GIB/EI5/EcCvAvid9WvLgOBCnJIdbJ02tI4dUdvuuPnwsNwaewMAcPZNtZ9eI7WGXfyXl8n2k4O60fm1WFW7yUndLDl2SFX+GaKCPvCg35R834Mnhsf6K7pRxPp5TBs4jYhUTqJZOJFGRIk6WnXKr7mkm1Ix1X/skN8sPHxQNw0PHVb+6QWKdnj+wuVheY3olwa7mBOFUEjUQO11xBHk5uesVo719cJjR7St983p2B4/rhu7DzzwgF5L7uOrC+p6HlPYwoPTek5G6r8b5Go+bawRFZSR7XlM9AvTQkyFsZofUx4H3sRPUq2zHjaXhezNOTIj24QztcDjTAwJaswQOaZuyPWeqKha4Fw4dypv8vGb3qBNwQHRNmurFGKDnrPjR9QmvUvPjnMaWmKF8seuhg31AW1+tyh6ZsQhLmhu0woqjOkaUFKRLOKNU6ZR/XGh8JnjFO4goz6zWftUm3xCaMOX/SMmxnTspqfUht5VbtDmm5sVUTULG6G3X3dX7dBFpCYi3wdwFcA3ALwOYMG54ShfAHCs6nqDwWAw3D1sSaA75wbOuUcAHAfwGID3bvZaEXlCRJ4VkWcXO/2NLzAYDAbDlvCurFyccwsi8jSAHwMwLSJxWK
UfB3Cx4ponATwJAA8dn3TZ0Baa1E9K9rlGduDTk6pmP/a3PgQAOHlKKY9L15SSWFpR1a5g5UK2xGxjHkdqnzo3p6rloUOHhuX9k6paTQUTjYxykbo1ypEpqpLFhV17Nk5l9ZsizGUcEkDrYWsJpo4i1wv3pAiTR9QmfmryPxmWz1N+0fPvEP1CY9RZJZd0slXmYP+sxrZbXi1tkgo/Na7j+cj71BLoINmSzxygnKaU4KRPbYnJFKbGLvTsbp+yGu/buFbw0ycLDqKw7jumc3uwT0kyyPjYkTrP9uEH59T6o0FR+2qBu+H8mxy9kX0fHnjg/mH5ENn7r7J7Or0XkxNKi3GkRMeWU6EbNU4SwckjCjlS6Tmjc9hXwNHzN0PUwtycPl8c54Cfo9yiJKP3bJLymE7QM9InC66okJCiPMFIreD6X0655BEKB/TcLi8pLbpKER45Lep4W/0p+nV6/mmcx4iiLFJnhWSy2qf83aSYFYXokRX9ebfYipXLQRGZDuUxAB8H8DKApwH8XDjtMwC+dsetMhgMBsOWsZUV+hEAXxSRGvyH4MvOuT8SkZcAfElE/hcA3wPwu3ehnQaDwWDYAFuxcnkOwIdLjp+D59O3hpJd4ZTUrwFbJTRVFZqa9hHh6qT6TB9Qy44B0RkRqYR9Sp7AEQMjcr3nnKbjZHHBKr8LOTN7K0qzjLEFCzuwkPpdc+W73C7iiHh0PohmIct+VyPqKIxRjyI51hrqNHRkVqPnTe9TtfkIUQ51yoeakFVEP+HoeeUqbz24pI+RW/VES8dzktT5MbLy4MhzyQqpv2QhMjFBzjT0LKx2Fuh8siIK9TuygqiRM9l+iiT5njOn9JyGWjD1iYro18hahuocp3406rdTGsVolDTndOpD71HLHnZgKTgcEV1Ui3ReJtqcKYScX8J92Tms0eAEK3ou5+uNKdzFgNqbFJyWdE5PHdckGLMH9b3j56iehy0gh5w6zflEk2lJ7U5aCDGB0jK75RRymhbOCE5mNJ8z5Kj2/offMywfnNN3YWJKaUGmHFs0zzWiwmYPqiXeMj2XHAZgaK1CpjKca5bpJPY4yimcrWYZNdd/g8FgGBGYQDcYDIYRwe7EcoFDbt3CVhOrXVUtx1qqcjcp9shasMToUdD9ep1Uftq2Luy8E20ysU8pnAZdy9YvKzfVEiSiXe6JoE6Ok4NBjaIkcvD8PnuKEOqi9+S4GgNW1Th5AVnCCKm/k8HiYWyMxpDol5VlVQMd6YEHJ1UVX6MkBeNEOdVrStGwas/qYs6QFPIlkqruiLbp97WNA7DzDecU1fN7q9qPOpkFjI/r3LGVS07/9DmYCdEPNYpIMUVRKMfaSrl06do10WdxQNZHfE+O8aHKsfaHHXhaNR3zLkW1bNLzN0aWIJFoG4Xi19SEnb+oHOg9zos51tb7r5BD0BpZMzWJZuA8rv0VjkNEllhNpiL1WSxYXwVaLqOHpVGgHHRse5RfN6axKNh+0PNX9LQpj4MyjNVElVy7ou9zc1z7fHBGaaPxSaUomXKZmNB3YXVZ86t2lihAEaqchUJ+U1470zvPr06fDWUqkmBsBFuhGwwGw4jABLrBYDCMCMS9y6X9Hd1U5BqAFQDXNzp3BDAL6+eo4W9KX62fu4+TzrmDG5/msSsCHQBE5Fnn3KO7cvMdhPVz9PA3pa/Wz70Ho1wMBoNhRGAC3WAwGEYEuynQn9zFe+8krJ+jh78pfbV+7jHsGoduMBgMhu2FUS4Gg8EwIjCBbjAYDCOCXRHoIvIJEXlFRM6KyOd3ow13AyJyn4g8LSIviciLIvKL4fiMiHxDRF4L/+/fqK69gJCS8Hsi8kfh99Mi8kyY1z8QIX/7PQoRmRaRr4jID0XkZRH5sVGcTxH5H8Iz+4KI/L6ItEZhPkXkCyJyVUReoGOl8yce/zz09zkR+cjutfzdYccFeoin/tsAfhbAwwA+LSIPr3/VnkEK4Jedcw8D+CiAXwh9+zyAbzrnHg
TwzfD7KOAX4ZOc5Ph1AL/lnHsAwE0An92VVm0v/k8A/945914AH4Lv70jNp4gcA/DfA3jUOfcB+JRan8JozOe/AvCJW45Vzd/PAngw/DyBDZPd33vYjRX6YwDOOufOOR9d6EsAHt+Fdmw7nHOXnHPfDeVl+Jf/GHz/vhhO+yKAv7s7Ldw+iMhxAH8HwL8MvwuAjwH4Sjhlz/dTRPYB+CmEpC3OucQ5t4ARnE/4QH1jIhIDaAO4hBGYT+fcnwOYv+Vw1fw9DuBfO4+/hk+veQR7CLsh0I8BOE+/XwjHRgoicgo+IcgzAA455/KknpcBHKq4bC/h/wDwP0ETIR4AsOA0w8QozOtpANcA/N+BWvqXIjKOEZtP59xFAL8B4G14Qb4I4DsYvfnMUTV/e1422aboXYCITAD4QwC/5Jxb4r85bye6p21FReS/AHDVOfed3W7LXUYM4CMAfsc592H4+EMFemVE5nM//Or0NICjAMZxO00xkhiF+WPshkC/COA++v14ODYSEJE6vDD/PefcV8PhK7nqFv6/ulvt2yb8BID/UkTehKfMPgbPNU8HlR0YjXm9AOCCc+6Z8PtX4AX8qM3n3wbwhnPumnOuD+Cr8HM8avOZo2r+9rxs2g2B/m0AD4Yd9Ab85stTu9CObUfgkX8XwMvOud+kPz0F4DOh/BkAX9vptm0nnHP/xDl33Dl3Cn7+/tQ5998AeBrAz4XTRqGflwGcF5E8CeXPAHgJIzaf8FTLR0WkHZ7hvJ8jNZ+Eqvl7CsDPB2uXjwJYJGpmb8A5t+M/AD4J4FUArwP4ld1ow13q10/Cq2/PAfh++PkkPL/8TQCvAfgTADO73dZt7PNPA/ijUD4D4FsAzgL4twCau92+bejfIwCeDXP67wDsH8X5BPBrAH4I4AUA/wZAcxTmE8Dvw+8L9OE1rs9WzR982qHfDnLpeXirn13vw1Z+zPXfYDAYRgS2KWowGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjAhPoBoPBMCIwgW4wGAwjgjsS6CLyCRF5RUTOisjnt6tRBoPBYNg6xDn37i4UqQF4FcDHAVwA8G0An3bOvbR9zTMYDAbDZhHfwbWPATjrnDsHACLyJQCPA6gU6OM1cdN1YPgJccDweyKAIPyIPxSJLzvnr6nVgFoEJAmGJ7sMyDL/dxHACZA5rS8Sfw0ADAb+3HC7YTuiGt0j8tc4BwwyABm1LxyXUPetx5HX6bQP/L0UQQGhmcN+uNBeOP1bfrnL9L75PfL/+FqhyvM+iQBRBEgEZANt8/BUmodM/Hn5n6MwdvkYX1mDwWDYeVx3zh3c6KQ7EejHAJyn3y8A+NFbTxKRJwA8AQD7asA/OgpkEYAgiDP4/+PI8z+tGGgEIdLpAmcvAi8AmPeXoAXgwwBO7QNmp4CoAaQJ0EuBLPYnxC0gin19SQZ0loA33wB+CODlsp70/X+TAD4I4LHTQDv2wizLgDT1/zfC7+2GPz9JgcaEb1gvCXU1gE7H92ei5ducJECWAoj0vJzrarf9uUnP3y8G0GgBSIFeT09sRKGuMGa9HhDH/qfX9fW3G8BUDDTgy+0pf2038W1IUqA9ASwt+etyYd3rAI0YaLWBbgakcRjsyI9xmgLzS0DWAH6tdAANBsNdxlubOelOBPqm4Jx7EsCTAHC0KS5DEG5ZsQG5gMuyIPABLPSALwO4dVH4CoD/dQZopUBnAZia9UIJEZ
AlvtJGwwuyv3geeHqTbV0G8JcA/vIN4DSAH98PnDgOIAHSnhd6cUqDFgHPPg98raSuOoCfPwIcPRwEdgrMLwDfugKcA7ACoBnOPQrgp5rAqRPAUgeYiHx/ZmdCfwAsLAFRG7i6AHx/0X+cgrxGG8CjdeDwNDCVAa9eAhbC3zMAPXghH4dxzsLv+Zin4f+W7yogwPSEF/oL80C3B/QyYPboJgfSYDDsCu5EoF8EcB/9fjwcWx9MY4RVIOAFeRR5Yd8Lf++mtwvzHL3EC6VGHFbRmV+VN1p+Ffr2O8C/Pw+8/i46BgBvAHjjJvCjN4GPnAZmpoEoAaLMfyyyxGsQb1Zc3wdw7hIwPeO1jjQFlrrA83RO3rc3AHTXgL+34O/TaPkVe5qp0I3g7/nOIvCdcGyF6pruA40u8NzK5j9glXDwX7eAGoCHAHzs6p1WbDAY7ibuxMrl2wAeFJHTItIA8CkAT2366iC0c047L6eZXzGm0JV6GTq98D2I/TUZPGWAFvDmdeDf3YEwZzwD4Nzb/gOSQu+FCEAcVrQVyP+WBtqm16s+9wqAywtAktebeSqll/ofROuPRwy/gj+7hb5tFgN4qurtxbtQucFg2Da8a4HunEsBfA7AH8O/7192zr24mWtz7px/94WwgM+F1zr6QzcB0giIWv7cuOH59HeuA199fTOqwubxpwPgpVe95pDF4WPT8AJ0PRUnRaArwmp7I33o633g6rynZ5ABSz3Px3c6vq4oLo4bI4EX/N2td2/TuJt1GwyGO8cdcejOua8D+PpWr8sy5XIj+H9yoZ6QxFpv9dsNm6AJ/Oo3agCdBPjWW361uxEEnnueCG24tM65fQB/PgCmrwNHp/3GYRQ0iSoBC3gB2O0CWSus0jf4fK4BOHcNmMtpl67/cAGeXmpFyneXodXyfbqx/m3eNZbuUr0Gg2F7cNc3RQtwGHLoGQJnjuKGaG79AqwvvNKwgu8FCxc0gMtXgR9s0IQP7/f/T034+zcibxHS6wLvnPc2l/2S61YAPHsDeDQL1jVRsBRZ515DagZ+1d1d7wsV8B8BnLoKHD/sJycNy+IsBeKs+n49+DadGgPOr258n62iDuDwPgBGuxgM9yx2VqDfiiDN8xV7/nuUt6qxzqWx/+l0vKCMG8Cb6yzNmwB+/KAXwgDQDgI5S7ygnGsDhx8ETlwH/uQmUCYTXwNwagmYmPIr9NYGAr0Hby4Yx75PrRbKvxa34IVFYHbWXzf8qJH1TxlS+L48NAdMXAWWVr1des/5/6cmvFbz0ipwc5173wdg5hCQ9fwm8HQMRD0/XifmYALdYLiHYbFcDAaDYUSwoyv03GMxzh1XcvBnJdaV6Hr89FInbIqGupY63ja7Cj85Dsy29PdW7DlpNPz1reDcFE8Djy0B/2FQXs87A+B4BLQafgW+HnoIWkBKWscm8D0AJy4AH3hIefcsW39PAfBj206BMxNA2g5tSNQaqAu/kn+m4vqDAB69z2sSWTBKnwAQt/2Fke2KGgz3NHaccomi4KGY74oScl49od+r0B0EOiLQHkvz1ZuhH4LfzGxF2uE4AxqptifKgCj19zw6B+y/VE5NvA3gA0nYtMzWbyMANNqeCsk9TjeL764Bp3JPVPjN1SQFoiZKjfPzqmP4PYEk9R+SVkO5/FYMLCzffi3X0U6Cw1LPt7uRBSekCMhMoBsM9zR2h0O/VQpmtxTDqnSjVW0SNkTTzDv5VOFUM3DB8Lww4FfjjcgL9jj/yAQBPdXyjjRlK9lleK/NRjCXXE9GRwgfC/iP1Fb4rfMAzl0ATh33v/cyz4FHMUoFeoZgJx95zSCLwj5DXg5/i2vwhuUluAkvxKfgG5wL9DjSegwGw72LHRfo2UZLWqDoHrnOKWka4qwA6FQEjdwHb5WSeytFmV6fx3vJhW4e2ySKgLkDqLT/u74MTE17U8n1kCEIw8iv/rc62N9aBubyD1Ab6HaKZp2M3IQyd8hih6v8w5MC6Gxwzy
RYGsVZoIpyE9NcqzIYDPcsdt/KpeRQmgvd9QR6TQV6tA6f3QC852XqV5ssEOPUr1rzsANxsMNIod8AAA7iSURBVLLJzRHrKDdKWUAQdo3SLhT6Mvw2ZRp0rAz3NYHzt6y8rwB4Kbi7PvSgF+pphSaSC/RcE4hSPZZrPQk25uE78Fx7/uFrZP4h4Rg7BoPh3sSOCnTnNl6h50IaWP/cOMRwyYL54HrURxY2TjNaoWfwwj0KwhyBT08bPlYLUu+kU2al14MK8nXvCz0xt3mvwtFZ4PLF2z8gfxX+n74OHD3u47UUgrgE5II6AtBIoLb+UKHeioCpBsptMgMWUmCppR/TVqT275vSrgwGw65h51foGwiFLPWRDYH1PSujnMoIAbnWXTw2vOBOUBTouSaARIV6CiBO1hdeKdbXHnKwkN1ohR4DeHgf8IMKO+/nbgKzcz7wGK6VtwmRj0DZChuijdzOPzhhpTEws4FA76bAQuy1jyjzVjIt+B8T6AbDvY2dXaGD6BQUhXAeuiXLQgILhLjcFcjjmuRUQNVKOYH/W5KFELj0t6F8ioLFSljFZ10NElaGzW4QRvA3H8Z9j4pJKwroAkdngOuL5XFoLgK4MB9MCEsQQsF7iiRoHlnoQ4TAp28UqwDe8ocjYuabqUlqFLrBcK9jRwV6HPs4KDPTnibpdeCpjZbnhntZMPELUjdLfejWMqOMt5eBw3NeEL+9TljXRXgLmLk8cQZZuQCe3umG0AGNCf9/p+cDb3VLqA3AUy7dxAcG22gAkxBHvdHyH5SqhH+9Jc97f/AkcLEilP3T14ADFdfPAWgFj9n8Y5drBSm8NdBS6vu2HqYGwFSYlyyEC44BpG5jE02DwbC72FGB3k+Bv74CpFf8jXvwjivvBXDikOeuczNCAN50rqKufLMT8EKsYuEKAOguAY05v0ptkyVLlvk6GsGZKUmD/XakySDKPiYt6Cp4o1gubHueriMRW/BtazV8KqiqaJFVgbeW4D8Y6S3xcQDSUqCx5qvQgF+lZymQDkI9sv41BoPh3sCOC/S/Kjl+GcAnloDp2bB5F1bo7cwL6rKF8oT4NHFZ5D8Ec+MVJ8JHLZxoe4EX5yngoiBgg/BOA82Qe1UuLVWaa2MOgdq4hcIpw1Cgb+BYlNvCZwnw6IPAxdc2qPgWhEW1bsSmt2yK5tTPRpvSUOrJYDDsLdwTtOg7ALK2pzmSnHZJvGCfqLimgeAYBG+JMTPl6ZkyzDsAadEyJgvSLk29wO/1/Kq73fCC9c11kiHPwps8Rsn6Aj1FqD/k80zXsxkMK/6k49vxI+ucWoYEABqB7w7mmWnJT1LF+XCbMyBzZNvuCrS6wWC4R7GjAp2zyTNW4LP1XO0ACx0VuPlGaRnyDcdc6rRawImKc88CuHpV7bQTqMDLUkriHBIlZ/Bp4aow3fTCPErXF+gJfN1p+ECtu+odYOj4NH8dOHXQJ63eLNbg6ZQyQT78iKXrm1kCKtCZprHFusGwN7CjAj2qVQvdCwOgkwLxhN+cbEz41WaVR3+EICQB79kI4FTFuSsAXrjpk06nwXwvifxP2gDiltcOUgDXl4DvrhOG9yC85pCGD8lmVui5N+t6yGkRZKqdfGCDa27FUjd8QPLVeC7UQQJ+gzqGK3TYitxg2GvYUKCLyH0i8rSIvCQiL4rIL4bjvyoiF0Xk++Hnk5u54VzF8asAerEX6EnD/1yeXyd2d+DAhyZ5ETB3yHt3luEZAK9eA5JYf5Yyf5/WLNAKmYh+eMPHPa/CKcDbdAPDGClVGJpVhl82GuzcBn+q5YX67KS3aqmybLkVvCIvrNDTgjKzfhvgKZZbrBdNuBsMewCb2RRNAfyyc+67IjIJ4Dsi8o3wt99yzv3GVm54uMKf/g0A6UUv8HPLuvUSlEbBozPPBJQEn/czAF6puOZpAJdDrrlTdWBm1tezlPpcpN9dWz/5gwCYGPdmjkkGTGyQJBrw5ooINtxxldkMgImaOivFEZ
B2/Ebuo01/7I/X4fRzdAbAVJDEMdmR5x+W9SisHDEACBAR127C3GDYG9hQoDvnLiGk3HTOLYvIy/CWdQaDwWC4h7Als0UROQXgw/AMxk8A+JyI/DyAZ+FX8bctcEXkCQBPAMBkDZg7jEoj6/PhZyMcQnCBD7RLmvgNwTTyeS/PLlabHL6c/9/H+pmhS3AKnmbpdOGXrfH60Qsdih6l0Xor9Lby3/kGapYAU8HAft/axtnf8giUecTECH5M0kh/38xqO48+mWW+E0O79ko3V4PBcC9g05uiIjIB4A8B/JJzbgnA7wC4H8Aj8KLxfy+7zjn3pHPuUefco+2a9xB9zx02+mEAs9PeC7PX8QKoFWKxTDSAH9+KecgmcQDA7Lg3cUwG3qwvamw8gL0kbIwm69uh5/HYkz6Gwndp2ZsxJp3NbZD2oA5BqQs/wSY9CkJ+oy94HhMG8H0ctk/I4ctgMNyT2NQrKiJ1eGH+e865rwKAc+6Kc27gnMsA/F8AHttMXQ0Aj+5/l60NOHUMmG6p6WAcBFUcrE5mp4H339ktCjgI4AMHQ5CqLPwfPk4bORb1+l4wJqlP2FyFJAQIyxDCBYSZSfr+5+i+jXmuBCU24y4IZud58Y3aGwG3pQGM4G344x11QzMYDFvFZqxcBMDvAnjZOfebdPwInfZfAXhhw7s5v1KdnQb+Tg0YexcN/q8PBi/NkE1nIvIepXHiowzGPSBdAE7tA/7T5uYtRKrwfgDvbQKYB2Zjn8pubgyYjoCotzGF0QOQycbBvJKeF5itmtcAogho12hTswcc3+BeLdw+oRwGIBa/+boeOIRCJPQTmUA3GO51bOYV/QkA/xDA8yLy/XDsnwL4tIg8As+qvgngH21Ukcu8YIpib2f90xnwzhrwg0004j4A7637RM/JPIYJkdm1PcrtrDNvWz7VBtpd4NyicuebwTh8fJkHDgDtto8Fk/S8EG8ECd5Lgagb0rVVYD+AhvhYM1ni27Yft1vSTEJXwY1IaZJGBESBc++ueYF9BNXU/62TyREtI/iV+kLV5gIhQ+DRGyGfKDYXLthgMOwuNmPl8hcod/D8+pbvFlzwe10gXQWm9wOHjwNH571DTwbP/3JAwOk6cHgWmGh5Adq5DkxFnm5BiNWdx/xOMv+xmIj9Jun8ghdGHzgEnEmBV2+oY003/CTwQqsN79J/vA6cmAKm2xpydzqEJejM+/u2G4HiSYATdeDDZIbZhbb/qPgPQpYoFfLecM9OOC8GcBghGmPmefZ2yEyUuNA/qHnk0dDeDkK43PAzB2CGxi0X5vkEZwDadeCBKSC5AVxAMazCBLwG0B739E8ch1U5tAKLh24w3NvYeSU69VYiM5PezX5hHj4W+JQXQN1usGABMD3jV8bz14H5xK/OZ6d8mNgoA7o9L3QmWt5RKKcXssw750xMAEs9YL7jheVD+/wmZd7zdlh+xlkQYIG6iXsh/gq8gO2kfkNwouU1i1aQqFnmN2NPiW8rAFxPQuTDEOsl7QbhL8B0sJvvDYCZpqa6y1fBWc8L8bkWcH3F13M0fEobEdAdeMHbFs/HN4LVSQyvKeTp50LCpQLVgshz8VdvAIdrwOEIaAf1Is58/Z0FoLfiPXqjLGyCxl776WYYJgcxGAz3JsS5nbNDE5FlVPv97FXMAri+243YRlh/7n2MWp+sPxvjpHPu4EYn7fQK/RXn3KM7fM+7ChF5dpT6ZP259zFqfbL+bB9sq8tgMBhGBCbQDQaDYUSw0wL9yR2+305g1Ppk/bn3MWp9sv5sE3Z0U9RgMBgMdw9GuRgMBsOIYMcEuoh8QkReEZGzIvL5nbrvdkJE3hSR50NCj2fDsRkR+YaIvBb+v8NINXcXIvIFEbkqIi/QsdI+iMc/D3P2nIh8ZPdaXo6K/lQmXxGRfxL684qI/Oe70+pqrJNQZk/O0btJkLMH5qglIt8SkR+EPv1aOH5aRJ4Jbf8DEWmE483w+9nw91N3rXHOubv+A5
+/+XX4/BMNeG//h3fi3tvcjzcBzN5y7H8D8PlQ/jyAX9/tdm7Qh58C8BEAL2zUBwCfBPD/wnsKfxTAM7vd/k3251cB/I8l5z4cnr0mgNPhmaztdh9uaeMRAB8J5UkAr4Z278k5Wqc/e3mOBMBEKNfhw4l/FMCXAXwqHP8XAP7bUP7vAPyLUP4UgD+4W23bqRX6YwDOOufOOecSAF8C8PgO3ftu43EAXwzlLwL4u7vYlg3hnPtzAPO3HK7qw+MA/rXz+GsA07cEZdt1VPSnCo8D+JJzbs059wZ8/vBNRQndKTjnLjnnvhvKy/BhiI5hj87ROv2pwl6YI+ecy1Mh1MOPA/AxAF8Jx2+do3zuvgLgZ0LQw23HTgn0YyjmrriAvZn1yAH4/0TkOyFxBwAccj6rEwBchs+/sddQ1Ye9PG+fCxTEF4gG21P9uSWhzJ6fo1v6A+zhORKRWghWeBXAN+A1iQXnXB4uits97FP4+yLuPBBsKWxTdGv4SefcRwD8LIBfEJGf4j86r1PtabOhUegDNpl85V5GSUKZIfbiHL3bBDn3KpzPBfEIfEy7x+Dj7u06dkqgX4SPgJvjOCoT0d27cM5dDP9fBfD/wE/klVzFDf9f3b0WvmtU9WFPzpurTr6yJ/pTllAGe3iOtpgg557vD8M5twCff/7H4OmuPJwKt3vYp/D3fQBu3I327JRA/zaAB8MucAN+Y+CpHbr3tkBExkVkMi8D+M/gk3o8BeAz4bTPAPja7rTwjlDVh6cA/HywpPgogEVS++9ZSHXylacAfCpYHZwG8CCAb+10+9ZD4FZvSyiDPTpHVf3Z43N0UESmQ3kMwMfh9waeBvBz4bRb5yifu58D8KdBy9p+7ODO8Cfhd7hfB/ArO3XfbWz/Gfjd9x8AeDHvAzwX9k0ArwH4EwAzu93WDfrx+/Aqbh+e5/tsVR/gd/N/O8zZ8wAe3e32b7I//ya09zn4l+kInf8roT+vAPjZ3W5/SX9+Ep5OeQ7A98PPJ/fqHK3Tn708Rx8E8L3Q9hcA/M/h+Bn4j89ZAP8WQDMcb4Xfz4a/n7lbbTNPUYPBYBgR2KaowWAwjAhMoBsMBsOIwAS6wWAwjAhMoBsMBsOIwAS6wWAwjAhMoBsMBsOIwAS6wWAwjAhMoBsMBsOI4P8HQw6G7YaZIk4AAAAASUVORK5CYII=",

"text/plain": [

""

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"import cv2\n",

"import matplotlib.pyplot as plt\n",

"\n",

"# Read the image (OpenCV loads images in BGR channel order, so colors may appear swapped)\n",

"raw_img = cv2.imread(\"/home/aistudio/work/word_1.png\")\n",

"plt.figure()\n",

"plt.subplot(2, 1, 1)\n",

"# Show the original image\n",

"plt.imshow(raw_img)\n",

"# Resize and normalize the image into the network input format\n",

"padding_im, draw_img = resize_norm_img(raw_img)\n",

"plt.subplot(2, 1, 2)\n",

"# Show the resized and padded network input\n",

"plt.imshow(draw_img)\n",

"plt.show()"

]

},
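{

"cell_type": "markdown",

"metadata": {},

"source": [

"The NumPy sketch below shows the kind of preprocessing a CRNN recognizer typically applies before the backbone. The image is resized to a fixed height with its aspect ratio preserved, the width is capped, pixels are mapped to [-1, 1], and the result is zero-padded on the right to a fixed 32 x 320 input. That 32 x 320 input shape also appears in the `paddle.summary` output later in this section. The sketch assumes that the `resize_norm_img` defined earlier follows this recipe; that code is not re-read here. `resize_norm_sketch` is a hypothetical name, and nearest-neighbour index sampling replaces `cv2.resize` so the snippet needs only NumPy.\n",

"\n",

"```python\n",

"import math\n",

"import numpy as np\n",

"\n",

"\n",

"def resize_norm_sketch(img, img_h=32, img_w=320):\n",

"    # Hypothetical sketch, not the resize_norm_img defined earlier\n",

"    h, w = img.shape[:2]\n",

"    # Width after scaling the height to img_h, capped at img_w\n",

"    resized_w = min(img_w, int(math.ceil(img_h * w / float(h))))\n",

"    # Nearest-neighbour resize by index sampling (stand-in for cv2.resize)\n",

"    rows = (np.arange(img_h) * h / img_h).astype(int)\n",

"    cols = (np.arange(resized_w) * w / resized_w).astype(int)\n",

"    resized = img[rows][:, cols].astype('float32')\n",

"    # HWC -> CHW, then map [0, 255] to [-1, 1]\n",

"    resized = resized.transpose((2, 0, 1)) / 255.0\n",

"    resized = (resized - 0.5) / 0.5\n",

"    # Zero-pad on the right up to the fixed width\n",

"    padded = np.zeros((3, img_h, img_w), dtype=np.float32)\n",

"    padded[:, :, :resized_w] = resized\n",

"    return padded, resized_w\n",

"\n",

"\n",

"# A random 48 x 200 crop: ceil(32 * 200 / 48) = 134 columns of content, 186 of padding\n",

"img = np.random.randint(0, 256, size=(48, 200, 3), dtype=np.uint8)\n",

"padded, resized_w = resize_norm_sketch(img)\n",

"print(padded.shape, resized_w)  # (3, 32, 320) 134\n",

"```\n"

]

},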

{

"cell_type": "markdown",

"metadata": {},

"source": [

"**[Network Structure]**\n",

"\n",

"* Backbone\n",

"\n",

"PaddleOCR uses MobileNetV3 as the backbone network, and the code below builds it in the same order as the network structure. First, define the shared building blocks of the network ([source code location](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.3/ppocr/modeling/backbones/rec_mobilenet_v3.py)): `ConvBNLayer`, `SEModule`, `ResidualUnit`, and `make_divisible`."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [],

"source": [

"import paddle\n",

"import paddle.nn as nn\n",

"import paddle.nn.functional as F\n",

"\n",

"\n",

"class ConvBNLayer(nn.Layer):\n",

"    def __init__(self,\n",

"                 in_channels,\n",

"                 out_channels,\n",

"                 kernel_size,\n",

"                 stride,\n",

"                 padding,\n",

"                 groups=1,\n",

"                 if_act=True,\n",

"                 act=None):\n",

"        \"\"\"\n",

"        Convolution + batch normalization layer with an optional activation\n",

"        :param in_channels: number of input channels\n",

"        :param out_channels: number of output channels\n",

"        :param kernel_size: convolution kernel size\n",

"        :param stride: convolution stride\n",

"        :param padding: padding size\n",

"        :param groups: number of groups of the 2D convolution (groups == in_channels gives a depthwise convolution)\n",

"        :param if_act: whether to apply an activation function\n",

"        :param act: activation function name, 'relu' or 'hardswish'\n",

"        \"\"\"\n",

"        super(ConvBNLayer, self).__init__()\n",

"        self.if_act = if_act\n",

"        self.act = act\n",

"        self.conv = nn.Conv2D(\n",

"            in_channels=in_channels,\n",

"            out_channels=out_channels,\n",

"            kernel_size=kernel_size,\n",

"            stride=stride,\n",

"            padding=padding,\n",

"            groups=groups,\n",

"            bias_attr=False)\n",

"\n",

"        self.bn = nn.BatchNorm(num_channels=out_channels, act=None)\n",

"\n",

"    def forward(self, x):\n",

"        # Convolution layer\n",

"        x = self.conv(x)\n",

"        # Batch normalization layer\n",

"        x = self.bn(x)\n",

"        # Optional activation function\n",

"        if self.if_act:\n",

"            if self.act == \"relu\":\n",

"                x = F.relu(x)\n",

"            elif self.act == \"hardswish\":\n",

"                x = F.hardswish(x)\n",

"            else:\n",

"                raise ValueError(\n",

"                    \"The activation function({}) is selected incorrectly.\".format(self.act))\n",

"        return x\n",

"\n",

"\n",

"class SEModule(nn.Layer):\n",

"    def __init__(self, in_channels, reduction=4):\n",

"        \"\"\"\n",

"        Squeeze-and-excitation (SE) module\n",

"        :param in_channels: number of input channels\n",

"        :param reduction: channel reduction ratio of the bottleneck\n",

"        \"\"\"\n",

"        super(SEModule, self).__init__()\n",

"        self.avg_pool = nn.AdaptiveAvgPool2D(1)\n",

"        self.conv1 = nn.Conv2D(\n",

"            in_channels=in_channels,\n",

"            out_channels=in_channels // reduction,\n",

"            kernel_size=1,\n",

"            stride=1,\n",

"            padding=0)\n",

"        self.conv2 = nn.Conv2D(\n",

"            in_channels=in_channels // reduction,\n",

"            out_channels=in_channels,\n",

"            kernel_size=1,\n",

"            stride=1,\n",

"            padding=0)\n",

"\n",

"    def forward(self, inputs):\n",

"        # Global average pooling: one value per channel\n",

"        outputs = self.avg_pool(inputs)\n",

"        # First 1x1 convolution reduces the number of channels\n",

"        outputs = self.conv1(outputs)\n",

"        # ReLU activation\n",

"        outputs = F.relu(outputs)\n",

"        # Second 1x1 convolution restores the number of channels\n",

"        outputs = self.conv2(outputs)\n",

"        # Hard-sigmoid activation turns the result into per-channel weights in [0, 1]\n",

"        outputs = F.hardsigmoid(outputs, slope=0.2, offset=0.5)\n",

"        # Rescale each input channel by its weight\n",

"        return inputs * outputs\n",

"\n",

"\n",

"class ResidualUnit(nn.Layer):\n",

"    def __init__(self,\n",

"                 in_channels,\n",

"                 mid_channels,\n",

"                 out_channels,\n",

"                 kernel_size,\n",

"                 stride,\n",

"                 use_se,\n",

"                 act=None):\n",

"        \"\"\"\n",

"        Inverted residual block\n",

"        :param in_channels: number of input channels\n",

"        :param mid_channels: number of intermediate (expanded) channels\n",

"        :param out_channels: number of output channels\n",

"        :param kernel_size: convolution kernel size\n",

"        :param stride: stride of the depthwise convolution\n",

"        :param use_se: whether to use the SE module\n",

"        :param act: activation function\n",

"        \"\"\"\n",

"        super(ResidualUnit, self).__init__()\n",

"        # The shortcut is added only when the input and output shapes match\n",

"        self.if_shortcut = stride == 1 and in_channels == out_channels\n",

"        self.if_se = use_se\n",

"\n",

"        # 1x1 convolution that expands the channels\n",

"        self.expand_conv = ConvBNLayer(\n",

"            in_channels=in_channels,\n",

"            out_channels=mid_channels,\n",

"            kernel_size=1,\n",

"            stride=1,\n",

"            padding=0,\n",

"            if_act=True,\n",

"            act=act)\n",

"        # Depthwise convolution (groups == channels)\n",

"        self.bottleneck_conv = ConvBNLayer(\n",

"            in_channels=mid_channels,\n",

"            out_channels=mid_channels,\n",

"            kernel_size=kernel_size,\n",

"            stride=stride,\n",

"            padding=int((kernel_size - 1) // 2),\n",

"            groups=mid_channels,\n",

"            if_act=True,\n",

"            act=act)\n",

"        if self.if_se:\n",

"            self.mid_se = SEModule(mid_channels)\n",

"        # 1x1 linear projection to the output channels, without activation\n",

"        self.linear_conv = ConvBNLayer(\n",

"            in_channels=mid_channels,\n",

"            out_channels=out_channels,\n",

"            kernel_size=1,\n",

"            stride=1,\n",

"            padding=0,\n",

"            if_act=False,\n",

"            act=None)\n",

"\n",

"    def forward(self, inputs):\n",

"        x = self.expand_conv(inputs)\n",

"        x = self.bottleneck_conv(x)\n",

"        if self.if_se:\n",

"            x = self.mid_se(x)\n",

"        x = self.linear_conv(x)\n",

"        if self.if_shortcut:\n",

"            x = paddle.add(inputs, x)\n",

"        return x\n",

"\n",

"\n",

"def make_divisible(v, divisor=8, min_value=None):\n",

"    \"\"\"\n",

"    Round v to the nearest multiple of divisor (8 by default), never going\n",

"    below min_value or more than 10% below v\n",

"    \"\"\"\n",

"    if min_value is None:\n",

"        min_value = divisor\n",

"    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)\n",

"    # Make sure rounding down does not reduce v by more than 10%\n",

"    if new_v < 0.9 * v:\n",

"        new_v += divisor\n",

"    return new_v\n"

]

},
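{

"cell_type": "markdown",

"metadata": {},

"source": [

"To make the `SEModule` computation concrete, the NumPy sketch below reproduces its forward pass on a single C x H x W feature map. The steps are global average pooling, a channel-reducing 1x1 convolution with ReLU, a channel-restoring 1x1 convolution, and hard-sigmoid gating with `slope=0.2, offset=0.5`, which computes `clip(0.2 * x + 0.5, 0, 1)`. The last step rescales each channel by its gate value. After pooling the map is 1 x 1, so each 1x1 convolution reduces to a matrix multiply plus a bias. Random matrices stand in for the trained `conv1`/`conv2` weights, and `se_forward` and `hard_sigmoid` are hypothetical helper names.\n",

"\n",

"```python\n",

"import numpy as np\n",

"\n",

"\n",

"def hard_sigmoid(x, slope=0.2, offset=0.5):\n",

"    # Piecewise-linear sigmoid approximation: clip(slope * x + offset, 0, 1)\n",

"    return np.clip(slope * x + offset, 0.0, 1.0)\n",

"\n",

"\n",

"def se_forward(x, w1, b1, w2, b2):\n",

"    # x: feature map of shape (C, H, W)\n",

"    # Squeeze: global average pooling gives one value per channel, shape (C,)\n",

"    s = x.mean(axis=(1, 2))\n",

"    # Excitation: reduce C -> C // r with ReLU, then restore C // r -> C\n",

"    z = np.maximum(w1 @ s + b1, 0.0)\n",

"    gate = hard_sigmoid(w2 @ z + b2)\n",

"    # Scale: multiply every channel by its weight in [0, 1]\n",

"    return x * gate[:, None, None], gate\n",

"\n",

"\n",

"rng = np.random.default_rng(0)\n",

"C, r = 8, 4\n",

"x = rng.standard_normal((C, 16, 160)).astype(np.float32)\n",

"w1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)\n",

"w2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)\n",

"y, gate = se_forward(x, w1, b1, w2, b2)\n",

"print(y.shape)  # (8, 16, 160)\n",

"```\n",

"\n",

"With C = 8 and r = 4, the two 1x1 convolutions hold `8 * 2 + 2 = 18` and `2 * 8 + 8 = 24` parameters. These counts match `Conv2D-4` and `Conv2D-5` in the `paddle.summary` output for the first residual unit."

]

},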

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Use these building blocks to assemble the MobileNetV3 backbone network:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [],

"source": [

"class MobileNetV3(nn.Layer):\n",

"    def __init__(self,\n",

"                 in_channels=3,\n",

"                 model_name='small',\n",

"                 scale=0.5,\n",

"                 small_stride=None,\n",

"                 disable_se=False,\n",

"                 **kwargs):\n",

"        super(MobileNetV3, self).__init__()\n",

"        self.disable_se = disable_se\n",

"\n",

"        # Height strides of the four downsampling blocks (the width stride is always 1)\n",

"        if small_stride is None:\n",

"            small_stride = [1, 2, 2, 2]\n",

"\n",

"        if model_name == \"small\":\n",

"            cfg = [\n",

"                # k: kernel size, exp: expanded channels, c: output channels,\n",

"                # se: use SE module, nl: activation function, s: stride\n",

"                # k, exp, c, se, nl, s,\n",

"                [3, 16, 16, True, 'relu', (small_stride[0], 1)],\n",

"                [3, 72, 24, False, 'relu', (small_stride[1], 1)],\n",

"                [3, 88, 24, False, 'relu', 1],\n",

"                [5, 96, 40, True, 'hardswish', (small_stride[2], 1)],\n",

"                [5, 240, 40, True, 'hardswish', 1],\n",

"                [5, 240, 40, True, 'hardswish', 1],\n",

"                [5, 120, 48, True, 'hardswish', 1],\n",

"                [5, 144, 48, True, 'hardswish', 1],\n",

"                [5, 288, 96, True, 'hardswish', (small_stride[3], 1)],\n",

"                [5, 576, 96, True, 'hardswish', 1],\n",

"                [5, 576, 96, True, 'hardswish', 1],\n",

"            ]\n",

"            cls_ch_squeeze = 576\n",

"        else:\n",

"            raise NotImplementedError(\"mode[\" + model_name +\n",

"                                      \"_model] is not implemented!\")\n",

"\n",

"        supported_scale = [0.35, 0.5, 0.75, 1.0, 1.25]\n",

"        assert scale in supported_scale, \\\n",

"            \"supported scales are {} but input scale is {}\".format(supported_scale, scale)\n",

"\n",

"        inplanes = 16\n",

"        # conv1: stride-2 stem convolution\n",

"        self.conv1 = ConvBNLayer(\n",

"            in_channels=in_channels,\n",

"            out_channels=make_divisible(inplanes * scale),\n",

"            kernel_size=3,\n",

"            stride=2,\n",

"            padding=1,\n",

"            groups=1,\n",

"            if_act=True,\n",

"            act='hardswish')\n",

"        block_list = []\n",

"        inplanes = make_divisible(inplanes * scale)\n",

"        for (k, exp, c, se, nl, s) in cfg:\n",

"            se = se and not self.disable_se\n",

"            block_list.append(\n",

"                ResidualUnit(\n",

"                    in_channels=inplanes,\n",

"                    mid_channels=make_divisible(scale * exp),\n",

"                    out_channels=make_divisible(scale * c),\n",

"                    kernel_size=k,\n",

"                    stride=s,\n",

"                    use_se=se,\n",

"                    act=nl))\n",

"            inplanes = make_divisible(scale * c)\n",

"        self.blocks = nn.Sequential(*block_list)\n",

"\n",

"        # conv2: 1x1 convolution that expands the channels before pooling\n",

"        self.conv2 = ConvBNLayer(\n",

"            in_channels=inplanes,\n",

"            out_channels=make_divisible(scale * cls_ch_squeeze),\n",

"            kernel_size=1,\n",

"            stride=1,\n",

"            padding=0,\n",

"            groups=1,\n",

"            if_act=True,\n",

"            act='hardswish')\n",

"\n",

"        # Final 2x2 max pooling halves both the height and the width\n",

"        self.pool = nn.MaxPool2D(kernel_size=2, stride=2, padding=0)\n",

"        self.out_channels = make_divisible(scale * cls_ch_squeeze)\n",

"\n",

"    def forward(self, x):\n",

"        x = self.conv1(x)\n",

"        x = self.blocks(x)\n",

"        x = self.conv2(x)\n",

"        x = self.pool(x)\n",

"        return x\n"

]

},
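{

"cell_type": "markdown",

"metadata": {},

"source": [

"With `scale=0.5` and `small_stride=[1, 2, 2, 2]`, the backbone's output shape can be worked out by hand. The pure-Python sketch below traces the channel count and the (height, width) of a 3 x 32 x 320 input through the stem, the eleven residual blocks, `conv2`, and the final 2 x 2 max pooling. It uses the standard output-size formula `out = (in + 2 * padding - kernel) // stride + 1`. It copies `make_divisible` and the kernel/channel/stride columns of `cfg` from the cells above, so it runs without Paddle. `trace_backbone` and `conv_out` are hypothetical helper names. The height collapses to 1 while 80 positions remain along the width, so the feature map can be read as a left-to-right sequence.\n",

"\n",

"```python\n",

"def make_divisible(v, divisor=8, min_value=None):\n",

"    # Same rounding rule as the make_divisible defined above\n",

"    if min_value is None:\n",

"        min_value = divisor\n",

"    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)\n",

"    if new_v < 0.9 * v:\n",

"        new_v += divisor\n",

"    return new_v\n",

"\n",

"\n",

"def conv_out(size, kernel, stride, padding):\n",

"    # Output length of a convolution or pooling layer along one axis\n",

"    return (size + 2 * padding - kernel) // stride + 1\n",

"\n",

"\n",

"def trace_backbone(h=32, w=320, scale=0.5, small_stride=(1, 2, 2, 2)):\n",

"    # (kernel, expanded channels, output channels, stride) of the 'small' cfg;\n",

"    # SE and activation choices do not affect shapes, so they are omitted\n",

"    cfg = [\n",

"        (3, 16, 16, (small_stride[0], 1)), (3, 72, 24, (small_stride[1], 1)),\n",

"        (3, 88, 24, 1), (5, 96, 40, (small_stride[2], 1)),\n",

"        (5, 240, 40, 1), (5, 240, 40, 1), (5, 120, 48, 1),\n",

"        (5, 144, 48, 1), (5, 288, 96, (small_stride[3], 1)),\n",

"        (5, 576, 96, 1), (5, 576, 96, 1),\n",

"    ]\n",

"    # Stem: 3x3 convolution, stride 2, padding 1\n",

"    h, w = conv_out(h, 3, 2, 1), conv_out(w, 3, 2, 1)\n",

"    c = make_divisible(16 * scale)\n",

"    for k, exp, out_c, s in cfg:\n",

"        sh, sw = s if isinstance(s, tuple) else (s, s)\n",

"        # Only the depthwise convolution (padding (k - 1) // 2) changes the spatial size\n",

"        h, w = conv_out(h, k, sh, (k - 1) // 2), conv_out(w, k, sw, (k - 1) // 2)\n",

"        c = make_divisible(scale * out_c)\n",

"    # conv2 (1x1) sets the channel count; then 2x2 max pooling with stride 2\n",

"    c = make_divisible(scale * 576)\n",

"    h, w = conv_out(h, 2, 2, 0), conv_out(w, 2, 2, 0)\n",

"    return c, h, w\n",

"\n",

"\n",

"print(trace_backbone())  # (288, 1, 80)\n",

"```\n",

"\n",

"The intermediate values agree with the `paddle.summary` output. For example, the stem produces `[1, 8, 16, 160]` and the fourth residual unit produces `[1, 24, 4, 160]`."

]

},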

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The backbone network is now fully defined. Its layer-by-layer structure, output shapes, and parameter counts can be printed with `paddle.summary`:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"-------------------------------------------------------------------------------\n",

" Layer (type) Input Shape Output Shape Param # \n",

"===============================================================================\n",

" Conv2D-1 [[1, 3, 32, 320]] [1, 8, 16, 160] 216 \n",

" BatchNorm-1 [[1, 8, 16, 160]] [1, 8, 16, 160] 32 \n",

" ConvBNLayer-1 [[1, 3, 32, 320]] [1, 8, 16, 160] 0 \n",

" Conv2D-2 [[1, 8, 16, 160]] [1, 8, 16, 160] 64 \n",

" BatchNorm-2 [[1, 8, 16, 160]] [1, 8, 16, 160] 32 \n",

" ConvBNLayer-2 [[1, 8, 16, 160]] [1, 8, 16, 160] 0 \n",

" Conv2D-3 [[1, 8, 16, 160]] [1, 8, 16, 160] 72 \n",

" BatchNorm-3 [[1, 8, 16, 160]] [1, 8, 16, 160] 32 \n",

" ConvBNLayer-3 [[1, 8, 16, 160]] [1, 8, 16, 160] 0 \n",

"AdaptiveAvgPool2D-1 [[1, 8, 16, 160]] [1, 8, 1, 1] 0 \n",

" Conv2D-4 [[1, 8, 1, 1]] [1, 2, 1, 1] 18 \n",

" Conv2D-5 [[1, 2, 1, 1]] [1, 8, 1, 1] 24 \n",

" SEModule-1 [[1, 8, 16, 160]] [1, 8, 16, 160] 0 \n",

" Conv2D-6 [[1, 8, 16, 160]] [1, 8, 16, 160] 64 \n",

" BatchNorm-4 [[1, 8, 16, 160]] [1, 8, 16, 160] 32 \n",

" ConvBNLayer-4 [[1, 8, 16, 160]] [1, 8, 16, 160] 0 \n",

" ResidualUnit-1 [[1, 8, 16, 160]] [1, 8, 16, 160] 0 \n",

" Conv2D-7 [[1, 8, 16, 160]] [1, 40, 16, 160] 320 \n",

" BatchNorm-5 [[1, 40, 16, 160]] [1, 40, 16, 160] 160 \n",

" ConvBNLayer-5 [[1, 8, 16, 160]] [1, 40, 16, 160] 0 \n",

" Conv2D-8 [[1, 40, 16, 160]] [1, 40, 8, 160] 360 \n",

" BatchNorm-6 [[1, 40, 8, 160]] [1, 40, 8, 160] 160 \n",

" ConvBNLayer-6 [[1, 40, 16, 160]] [1, 40, 8, 160] 0 \n",

" Conv2D-9 [[1, 40, 8, 160]] [1, 16, 8, 160] 640 \n",

" BatchNorm-7 [[1, 16, 8, 160]] [1, 16, 8, 160] 64 \n",

" ConvBNLayer-7 [[1, 40, 8, 160]] [1, 16, 8, 160] 0 \n",

" ResidualUnit-2 [[1, 8, 16, 160]] [1, 16, 8, 160] 0 \n",

" Conv2D-10 [[1, 16, 8, 160]] [1, 48, 8, 160] 768 \n",

" BatchNorm-8 [[1, 48, 8, 160]] [1, 48, 8, 160] 192 \n",

" ConvBNLayer-8 [[1, 16, 8, 160]] [1, 48, 8, 160] 0 \n",

" Conv2D-11 [[1, 48, 8, 160]] [1, 48, 8, 160] 432 \n",

" BatchNorm-9 [[1, 48, 8, 160]] [1, 48, 8, 160] 192 \n",

" ConvBNLayer-9 [[1, 48, 8, 160]] [1, 48, 8, 160] 0 \n",

" Conv2D-12 [[1, 48, 8, 160]] [1, 16, 8, 160] 768 \n",

" BatchNorm-10 [[1, 16, 8, 160]] [1, 16, 8, 160] 64 \n",

" ConvBNLayer-10 [[1, 48, 8, 160]] [1, 16, 8, 160] 0 \n",

" ResidualUnit-3 [[1, 16, 8, 160]] [1, 16, 8, 160] 0 \n",

" Conv2D-13 [[1, 16, 8, 160]] [1, 48, 8, 160] 768 \n",

" BatchNorm-11 [[1, 48, 8, 160]] [1, 48, 8, 160] 192 \n",

" ConvBNLayer-11 [[1, 16, 8, 160]] [1, 48, 8, 160] 0 \n",

" Conv2D-14 [[1, 48, 8, 160]] [1, 48, 4, 160] 1,200 \n",

" BatchNorm-12 [[1, 48, 4, 160]] [1, 48, 4, 160] 192 \n",

" ConvBNLayer-12 [[1, 48, 8, 160]] [1, 48, 4, 160] 0 \n",

"AdaptiveAvgPool2D-2 [[1, 48, 4, 160]] [1, 48, 1, 1] 0 \n",

" Conv2D-15 [[1, 48, 1, 1]] [1, 12, 1, 1] 588 \n",

" Conv2D-16 [[1, 12, 1, 1]] [1, 48, 1, 1] 624 \n",

" SEModule-2 [[1, 48, 4, 160]] [1, 48, 4, 160] 0 \n",

" Conv2D-17 [[1, 48, 4, 160]] [1, 24, 4, 160] 1,152 \n",

" BatchNorm-13 [[1, 24, 4, 160]] [1, 24, 4, 160] 96 \n",

" ConvBNLayer-13 [[1, 48, 4, 160]] [1, 24, 4, 160] 0 \n",

" ResidualUnit-4 [[1, 16, 8, 160]] [1, 24, 4, 160] 0 \n",

" Conv2D-18 [[1, 24, 4, 160]] [1, 120, 4, 160] 2,880 \n",

" BatchNorm-14 [[1, 120, 4, 160]] [1, 120, 4, 160] 480 \n",

" ConvBNLayer-14 [[1, 24, 4, 160]] [1, 120, 4, 160] 0 \n",

" Conv2D-19 [[1, 120, 4, 160]] [1, 120, 4, 160] 3,000 \n",

" BatchNorm-15 [[1, 120, 4, 160]] [1, 120, 4, 160] 480 \n",

" ConvBNLayer-15 [[1, 120, 4, 160]] [1, 120, 4, 160] 0 \n",

"AdaptiveAvgPool2D-3 [[1, 120, 4, 160]] [1, 120, 1, 1] 0 \n",

" Conv2D-20 [[1, 120, 1, 1]] [1, 30, 1, 1] 3,630 \n",

" Conv2D-21 [[1, 30, 1, 1]] [1, 120, 1, 1] 3,720 \n",

" SEModule-3 [[1, 120, 4, 160]] [1, 120, 4, 160] 0 \n",

" Conv2D-22 [[1, 120, 4, 160]] [1, 24, 4, 160] 2,880 \n",

" BatchNorm-16 [[1, 24, 4, 160]] [1, 24, 4, 160] 96 \n",

" ConvBNLayer-16 [[1, 120, 4, 160]] [1, 24, 4, 160] 0 \n",

" ResidualUnit-5 [[1, 24, 4, 160]] [1, 24, 4, 160] 0 \n",

" Conv2D-23 [[1, 24, 4, 160]] [1, 120, 4, 160] 2,880 \n",

" BatchNorm-17 [[1, 120, 4, 160]] [1, 120, 4, 160] 480 \n",

" ConvBNLayer-17 [[1, 24, 4, 160]] [1, 120, 4, 160] 0 \n",

" Conv2D-24 [[1, 120, 4, 160]] [1, 120, 4, 160] 3,000 \n",

" BatchNorm-18 [[1, 120, 4, 160]] [1, 120, 4, 160] 480 \n",

" ConvBNLayer-18 [[1, 120, 4, 160]] [1, 120, 4, 160] 0 \n",

"AdaptiveAvgPool2D-4 [[1, 120, 4, 160]] [1, 120, 1, 1] 0 \n",

" Conv2D-25 [[1, 120, 1, 1]] [1, 30, 1, 1] 3,630 \n",

" Conv2D-26 [[1, 30, 1, 1]] [1, 120, 1, 1] 3,720 \n",

" SEModule-4 [[1, 120, 4, 160]] [1, 120, 4, 160] 0 \n",

" Conv2D-27 [[1, 120, 4, 160]] [1, 24, 4, 160] 2,880 \n",

" BatchNorm-19 [[1, 24, 4, 160]] [1, 24, 4, 160] 96 \n",

" ConvBNLayer-19 [[1, 120, 4, 160]] [1, 24, 4, 160] 0 \n",

" ResidualUnit-6 [[1, 24, 4, 160]] [1, 24, 4, 160] 0 \n",

" Conv2D-28 [[1, 24, 4, 160]] [1, 64, 4, 160] 1,536 \n",

" BatchNorm-20 [[1, 64, 4, 160]] [1, 64, 4, 160] 256 \n",

" ConvBNLayer-20 [[1, 24, 4, 160]] [1, 64, 4, 160] 0 \n",

" Conv2D-29 [[1, 64, 4, 160]] [1, 64, 4, 160] 1,600 \n",

" BatchNorm-21 [[1, 64, 4, 160]] [1, 64, 4, 160] 256 \n",

" ConvBNLayer-21 [[1, 64, 4, 160]] [1, 64, 4, 160] 0 \n",

"AdaptiveAvgPool2D-5 [[1, 64, 4, 160]] [1, 64, 1, 1] 0 \n",

" Conv2D-30 [[1, 64, 1, 1]] [1, 16, 1, 1] 1,040 \n",

" Conv2D-31 [[1, 16, 1, 1]] [1, 64, 1, 1] 1,088 \n",

" SEModule-5 [[1, 64, 4, 160]] [1, 64, 4, 160] 0 \n",

" Conv2D-32 [[1, 64, 4, 160]] [1, 24, 4, 160] 1,536 \n",

" BatchNorm-22 [[1, 24, 4, 160]] [1, 24, 4, 160] 96 \n",

" ConvBNLayer-22 [[1, 64, 4, 160]] [1, 24, 4, 160] 0 \n",

" ResidualUnit-7 [[1, 24, 4, 160]] [1, 24, 4, 160] 0 \n",

" Conv2D-33 [[1, 24, 4, 160]] [1, 72, 4, 160] 1,728 \n",

" BatchNorm-23 [[1, 72, 4, 160]] [1, 72, 4, 160] 288 \n",

" ConvBNLayer-23 [[1, 24, 4, 160]] [1, 72, 4, 160] 0 \n",

" Conv2D-34 [[1, 72, 4, 160]] [1, 72, 4, 160] 1,800 \n",

" BatchNorm-24 [[1, 72, 4, 160]] [1, 72, 4, 160] 288 \n",

" ConvBNLayer-24 [[1, 72, 4, 160]] [1, 72, 4, 160] 0 \n",

"AdaptiveAvgPool2D-6 [[1, 72, 4, 160]] [1, 72, 1, 1] 0 \n",

" Conv2D-35 [[1, 72, 1, 1]] [1, 18, 1, 1] 1,314 \n",

" Conv2D-36 [[1, 18, 1, 1]] [1, 72, 1, 1] 1,368 \n",

" SEModule-6 [[1, 72, 4, 160]] [1, 72, 4, 160] 0 \n",

" Conv2D-37 [[1, 72, 4, 160]] [1, 24, 4, 160] 1,728 \n",

" BatchNorm-25 [[1, 24, 4, 160]] [1, 24, 4, 160] 96 \n",

" ConvBNLayer-25 [[1, 72, 4, 160]] [1, 24, 4, 160] 0 \n",

" ResidualUnit-8 [[1, 24, 4, 160]] [1, 24, 4, 160] 0 \n",

" Conv2D-38 [[1, 24, 4, 160]] [1, 144, 4, 160] 3,456 \n",

" BatchNorm-26 [[1, 144, 4, 160]] [1, 144, 4, 160] 576 \n",

" ConvBNLayer-26 [[1, 24, 4, 160]] [1, 144, 4, 160] 0 \n",

" Conv2D-39 [[1, 144, 4, 160]] [1, 144, 2, 160] 3,600 \n",

" BatchNorm-27 [[1, 144, 2, 160]] [1, 144, 2, 160] 576 \n",

" ConvBNLayer-27 [[1, 144, 4, 160]] [1, 144, 2, 160] 0 \n",

"AdaptiveAvgPool2D-7 [[1, 144, 2, 160]] [1, 144, 1, 1] 0 \n",

" Conv2D-40 [[1, 144, 1, 1]] [1, 36, 1, 1] 5,220 \n",

" Conv2D-41 [[1, 36, 1, 1]] [1, 144, 1, 1] 5,328 \n",

" SEModule-7 [[1, 144, 2, 160]] [1, 144, 2, 160] 0 \n",

" Conv2D-42 [[1, 144, 2, 160]] [1, 48, 2, 160] 6,912 \n",

" BatchNorm-28 [[1, 48, 2, 160]] [1, 48, 2, 160] 192 \n",

" ConvBNLayer-28 [[1, 144, 2, 160]] [1, 48, 2, 160] 0 \n",

" ResidualUnit-9 [[1, 24, 4, 160]] [1, 48, 2, 160] 0 \n",

" Conv2D-43 [[1, 48, 2, 160]] [1, 288, 2, 160] 13,824 \n",

" BatchNorm-29 [[1, 288, 2, 160]] [1, 288, 2, 160] 1,152 \n",

" ConvBNLayer-29 [[1, 48, 2, 160]] [1, 288, 2, 160] 0 \n",

" Conv2D-44 [[1, 288, 2, 160]] [1, 288, 2, 160] 7,200 \n",

" BatchNorm-30 [[1, 288, 2, 160]] [1, 288, 2, 160] 1,152 \n",

" ConvBNLayer-30 [[1, 288, 2, 160]] [1, 288, 2, 160] 0 \n",

"AdaptiveAvgPool2D-8 [[1, 288, 2, 160]] [1, 288, 1, 1] 0 \n",

" Conv2D-45 [[1, 288, 1, 1]] [1, 72, 1, 1] 20,808 \n",

" Conv2D-46 [[1, 72, 1, 1]] [1, 288, 1, 1] 21,024 \n",

" SEModule-8 [[1, 288, 2, 160]] [1, 288, 2, 160] 0 \n",

" Conv2D-47 [[1, 288, 2, 160]] [1, 48, 2, 160] 13,824 \n",

" BatchNorm-31 [[1, 48, 2, 160]] [1, 48, 2, 160] 192 \n",

" ConvBNLayer-31 [[1, 288, 2, 160]] [1, 48, 2, 160] 0 \n",

" ResidualUnit-10 [[1, 48, 2, 160]] [1, 48, 2, 160] 0 \n",

" Conv2D-48 [[1, 48, 2, 160]] [1, 288, 2, 160] 13,824 \n",

" BatchNorm-32 [[1, 288, 2, 160]] [1, 288, 2, 160] 1,152 \n",

" ConvBNLayer-32 [[1, 48, 2, 160]] [1, 288, 2, 160] 0 \n",

" Conv2D-49 [[1, 288, 2, 160]] [1, 288, 2, 160] 7,200 \n",

" BatchNorm-33 [[1, 288, 2, 160]] [1, 288, 2, 160] 1,152 \n",

" ConvBNLayer-33 [[1, 288, 2, 160]] [1, 288, 2, 160] 0 \n",

"AdaptiveAvgPool2D-9 [[1, 288, 2, 160]] [1, 288, 1, 1] 0 \n",

" Conv2D-50 [[1, 288, 1, 1]] [1, 72, 1, 1] 20,808 \n",

" Conv2D-51 [[1, 72, 1, 1]] [1, 288, 1, 1] 21,024 \n",

" SEModule-9 [[1, 288, 2, 160]] [1, 288, 2, 160] 0 \n",

" Conv2D-52 [[1, 288, 2, 160]] [1, 48, 2, 160] 13,824 \n",

" BatchNorm-34 [[1, 48, 2, 160]] [1, 48, 2, 160] 192 \n",

" ConvBNLayer-34 [[1, 288, 2, 160]] [1, 48, 2, 160] 0 \n",

" ResidualUnit-11 [[1, 48, 2, 160]] [1, 48, 2, 160] 0 \n",

" Conv2D-53 [[1, 48, 2, 160]] [1, 288, 2, 160] 13,824 \n",

" BatchNorm-35 [[1, 288, 2, 160]] [1, 288, 2, 160] 1,152 \n",

" ConvBNLayer-35 [[1, 48, 2, 160]] [1, 288, 2, 160] 0 \n",

" MaxPool2D-1 [[1, 288, 2, 160]] [1, 288, 1, 80] 0 \n",

"===============================================================================\n",

"Total params: 259,056\n",

"Trainable params: 246,736\n",

"Non-trainable params: 12,320\n",

"-------------------------------------------------------------------------------\n",

"Input size (MB): 0.12\n",

"Forward/backward pass size (MB): 44.38\n",

"Params size (MB): 0.99\n",

"Estimated Total Size (MB): 45.48\n",

"-------------------------------------------------------------------------------\n",

"\n"

]

},

{

"data": {

"text/plain": [

"{'total_params': 259056, 'trainable_params': 246736}"

]

},

"execution_count": null,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# Define the network input shape\n",

"IMAGE_SHAPE_C = 3\n",

"IMAGE_SHAPE_H = 32\n",

"IMAGE_SHAPE_W = 320\n",

"\n",

"\n",

"# Visualize the network structure\n",

"paddle.summary(MobileNetV3(), [(1, IMAGE_SHAPE_C, IMAGE_SHAPE_H, IMAGE_SHAPE_W)])"

]

},
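
{

"cell_type": "markdown",

"metadata": {},

"source": [

"The parameter counts in the summary can be reproduced by hand, which is a quick way to confirm that each layer was built as intended. The sketch below is only an illustration. The helpers `conv2d_params` and `batchnorm_params` are hypothetical and are not part of Paddle or PaddleOCR. The sketch makes three assumptions, each consistent with the numbers in the table. First, the convolutions inside `ConvBNLayer` have no bias (Conv2D-1: 3 * 8 * 3 * 3 = 216). Second, the 1x1 convolutions in `SEModule` do have a bias. Third, each `BatchNorm` layer stores four values per channel.\n",

"\n",

"```python\n",

"def conv2d_params(in_c, out_c, k, groups=1, bias=False):\n",

"    # Each output channel sees in_c // groups input channels through a k x k kernel\n",

"    weights = (in_c // groups) * out_c * k * k\n",

"    # Assumption: a bias adds one extra value per output channel\n",

"    return weights + (out_c if bias else 0)\n",

"\n",

"\n",

"def batchnorm_params(c):\n",

"    # Scale, shift, running mean and running variance: one of each per channel\n",

"    return 4 * c\n",

"\n",

"\n",

"# Layer configurations read off the summary table above\n",

"print(conv2d_params(3, 8, 3))               # Conv2D-1, regular 3x3 conv: 216\n",

"print(conv2d_params(8, 8, 3, groups=8))     # Conv2D-3, 3x3 depthwise conv: 72\n",

"print(conv2d_params(48, 48, 5, groups=48))  # Conv2D-14, 5x5 depthwise conv: 1200\n",

"print(conv2d_params(8, 2, 1, bias=True))    # Conv2D-4, SE squeeze conv with bias: 18\n",

"print(batchnorm_params(8))                  # BatchNorm-1: 32\n",

"# Trainable plus non-trainable parameters give the reported total\n",

"print(246736 + 12320)                       # 259056\n",

"```\n",

"\n",

"Depthwise convolutions (`groups` equal to the channel count) explain why the 3x3 and 5x5 layers are so cheap. Conv2D-14 needs only 48 * 5 * 5 = 1,200 weights. A regular 5x5 convolution with 48 input and 48 output channels would need 57,600."

]

},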

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"backbone output: [1, 288, 1, 80]\n"

]

}

],

"source": [

"# Instantiate the backbone network\n",

"backbone = MobileNetV3()\n",

"# Convert the padded numpy image to a Tensor (the outer list adds the batch dimension)\n",

"input_data = paddle.to_tensor([padding_im])\n",

"# Forward pass through the backbone network\n",

"feature = backbone(input_data)\n",

"# Check the dimensions of the output feature map\n",

"print(\"backbone output:\", feature.shape)"

]

},
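
{

"cell_type": "markdown",

"metadata": {},

"source": [

"The printed shape `[1, 288, 1, 80]` follows from the strides visible in the summary table. Conv2D-1 halves both height and width. Conv2D-8, Conv2D-14 and Conv2D-39 halve only the height (stride 2 vertically, 1 horizontally). The final `MaxPool2D` then halves both dimensions again. The short sketch below replays that arithmetic. The stride list is read off the summary output rather than taken from the PaddleOCR source, and the helper `downsample` is hypothetical.\n",

"\n",

"```python\n",

"# (stride_h, stride_w) of the layers that change the spatial size,\n",

"# read off the paddle.summary table above\n",

"strides = [\n",

"    (2, 2),  # Conv2D-1: 32 x 320 -> 16 x 160\n",

"    (2, 1),  # Conv2D-8: height 16 -> 8, width unchanged\n",

"    (2, 1),  # Conv2D-14: height 8 -> 4\n",

"    (2, 1),  # Conv2D-39: height 4 -> 2\n",

"    (2, 2),  # MaxPool2D-1: 2 x 160 -> 1 x 80\n",

"]\n",

"\n",

"\n",

"def downsample(h, w, strides):\n",

"    # Apply each stride pair in turn (assumes the sizes divide evenly)\n",

"    for sh, sw in strides:\n",

"        h, w = h // sh, w // sw\n",

"    return h, w\n",

"\n",

"\n",

"h, w = downsample(32, 320, strides)\n",

"print(h, w)  # 1 80\n",

"```\n",

"\n",

"The width is reduced only 4x, while the height is reduced 32x, so a 32 x 320 input leaves a single row of 80 columns. A height of 1 is exactly what the neck expects (`assert H == 1` in `Im2Seq`). The 80 columns become the time steps of the sequence model."

]

},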

{

"cell_type": "markdown",

"metadata": {},

"source": [

"* neck\n",

"\n",

"The neck converts the visual feature map output by the backbone into a sequence of 1-D feature vectors, feeds this sequence to an LSTM network, and outputs sequence features ([source code location](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.3/ppocr/modeling/necks/rnn.py)):"

]

},
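
{

"cell_type": "markdown",

"metadata": {},

"source": [

"The conversion itself only needs two steps. It drops the height axis, which the backbone has already reduced to 1. It then moves the width axis in front of the channel axis. After that, every column of the feature map is one feature vector, i.e. one time step for the LSTM. The NumPy sketch below illustrates this idea on a random array shaped like the backbone output. It is not the PaddleOCR implementation, and the function name `to_sequence` is hypothetical.\n",

"\n",

"```python\n",

"import numpy as np\n",

"\n",

"\n",

"def to_sequence(feature_map):\n",

"    # feature_map: [B, C, H, W], with H == 1 after the backbone\n",

"    b, c, h, w = feature_map.shape\n",

"    assert h == 1, 'height must be collapsed to 1 before sequencing'\n",

"    x = feature_map.squeeze(axis=2)  # [B, C, W]\n",

"    return x.transpose(0, 2, 1)      # [B, W, C]: W time steps of C-dim vectors\n",

"\n",

"\n",

"dummy = np.random.rand(1, 288, 1, 80).astype('float32')\n",

"seq = to_sequence(dummy)\n",

"print(seq.shape)  # (1, 80, 288)\n",

"# Time step t is exactly column t of the original feature map\n",

"print(np.array_equal(seq[0, 5], dummy[0, :, 0, 5]))  # True\n",

"```"

]

},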

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [],

"source": [

"class Im2Seq(nn.Layer):\n",

" def __init__(self, in_channels, **kwargs):\n",

" \"\"\"\n",

" Convert the image feature map into a sequence feature\n",

" :param in_channels: number of input channels\n",

" \"\"\"\n",

" super().__init__()\n",

" self.out_channels = in_channels\n",

"\n",

" def forward(self, x):\n",

" B, C, H, W = x.shape\n",

" assert H == 1\n",