@@ -185,12 +190,28 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

- SER (Semantic entity recognition)

-

+

+

+

+

+

+

+

+

+

- RE (Relation Extraction)

-

+

+

+

+

+

+

+

+

+

diff --git a/README_ch.md b/README_ch.md

index c52d5f3dd17839254c3f58794e016f08dc0b21bc..8ffa7a3755970374e1559d3c771bd82c02010a61 100755

--- a/README_ch.md

+++ b/README_ch.md

@@ -27,21 +27,20 @@ PaddleOCR旨在打造一套丰富、领先、且实用的OCR工具库,助力

## 近期更新

-- **🔥2022.7 发布[OCR场景应用集合](./applications)**

- - 发布OCR场景应用集合,包含数码管、液晶屏、车牌、高精度SVTR模型等**7个垂类模型**,覆盖通用,制造、金融、交通行业的主要OCR垂类应用。

+- **🔥2022.8.24 发布 PaddleOCR [release/2.6](https://github.com/PaddlePaddle/PaddleOCR/tree/release/2.6)**

+ - 发布[PP-Structurev2](./ppstructure/),系统功能性能全面升级,适配中文场景,新增支持[版面复原](./ppstructure/recovery),支持**一行命令完成PDF转Word**;

+ - [版面分析](./ppstructure/layout)模型优化:模型存储减少95%,速度提升11倍,平均CPU耗时仅需41ms;

+ - [表格识别](./ppstructure/table)模型优化:设计3大优化策略,预测耗时不变情况下,模型精度提升6%;

+ - [关键信息抽取](./ppstructure/kie)模型优化:设计视觉无关模型结构,语义实体识别精度提升2.8%,关系抽取精度提升9.1%。

-- **🔥2022.5.9 发布PaddleOCR [release/2.5](https://github.com/PaddlePaddle/PaddleOCR/tree/release/2.5)**

+- **🔥2022.8 发布 [OCR场景应用集合](./applications)**

+ - 包含数码管、液晶屏、车牌、高精度SVTR模型、手写体识别等**9个垂类模型**,覆盖通用,制造、金融、交通行业的主要OCR垂类应用。

+

+- **2022.5.9 发布 PaddleOCR [release/2.5](https://github.com/PaddlePaddle/PaddleOCR/tree/release/2.5)**

- 发布[PP-OCRv3](./doc/doc_ch/ppocr_introduction.md#pp-ocrv3),速度可比情况下,中文场景效果相比于PP-OCRv2再提升5%,英文场景提升11%,80语种多语言模型平均识别准确率提升5%以上;

- 发布半自动标注工具[PPOCRLabelv2](./PPOCRLabel):新增表格文字图像、图像关键信息抽取任务和不规则文字图像的标注功能;

- 发布OCR产业落地工具集:打通22种训练部署软硬件环境与方式,覆盖企业90%的训练部署环境需求;

- 发布交互式OCR开源电子书[《动手学OCR》](./doc/doc_ch/ocr_book.md),覆盖OCR全栈技术的前沿理论与代码实践,并配套教学视频。

-- 2021.12.21 发布PaddleOCR [release/2.4](https://github.com/PaddlePaddle/PaddleOCR/tree/release/2.4)

- - OCR算法新增1种文本检测算法([PSENet](./doc/doc_ch/algorithm_det_psenet.md)),3种文本识别算法([NRTR](./doc/doc_ch/algorithm_rec_nrtr.md)、[SEED](./doc/doc_ch/algorithm_rec_seed.md)、[SAR](./doc/doc_ch/algorithm_rec_sar.md));

- - 文档结构化算法新增1种关键信息提取算法([SDMGR](./ppstructure/docs/kie.md)),3种[DocVQA](./ppstructure/vqa)算法(LayoutLM、LayoutLMv2,LayoutXLM)。

-- 2021.9.7 发布PaddleOCR [release/2.3](https://github.com/PaddlePaddle/PaddleOCR/tree/release/2.3)

- - 发布[PP-OCRv2](./doc/doc_ch/ppocr_introduction.md#pp-ocrv2),CPU推理速度相比于PP-OCR server提升220%;效果相比于PP-OCR mobile 提升7%。

-- 2021.8.3 发布PaddleOCR [release/2.2](https://github.com/PaddlePaddle/PaddleOCR/tree/release/2.2)

- - 发布文档结构分析[PP-Structure](./ppstructure/README_ch.md)工具包,支持版面分析与表格识别(含Excel导出)。

> [更多](./doc/doc_ch/update.md)

@@ -49,7 +48,9 @@ PaddleOCR旨在打造一套丰富、领先、且实用的OCR工具库,助力

支持多种OCR相关前沿算法,在此基础上打造产业级特色模型[PP-OCR](./doc/doc_ch/ppocr_introduction.md)和[PP-Structure](./ppstructure/README_ch.md),并打通数据生产、模型训练、压缩、预测部署全流程。

-

+

+

+

> 上述内容的使用方法建议从文档教程中的快速开始体验

@@ -213,14 +214,30 @@ PaddleOCR旨在打造一套丰富、领先、且实用的OCR工具库,助力

- SER(语义实体识别)

-

+

+

+

+

+

+

+

+

+

- RE(关系提取)

-

+

+

+

+

+

+

+

+

+

diff --git a/__init__.py b/__init__.py

index 15a9aca4da19a981b9e678e7cc93e33cf40fc81c..11436094c163db1b91f5ac38f2936a53017016c1 100644

--- a/__init__.py

+++ b/__init__.py

@@ -16,5 +16,6 @@ from .paddleocr import *

__version__ = paddleocr.VERSION

__all__ = [

'PaddleOCR', 'PPStructure', 'draw_ocr', 'draw_structure_result',

- 'save_structure_res', 'download_with_progressbar'

+ 'save_structure_res', 'download_with_progressbar', 'sorted_layout_boxes',

+ 'convert_info_docx'

]

diff --git a/applications/README.md b/applications/README.md

index 017c2a9f6f696904e9bf2f1180104e66c90ee712..2637cd6eaf0c3c59d56673c5e2d294ee7fca2b8b 100644

--- a/applications/README.md

+++ b/applications/README.md

@@ -20,10 +20,10 @@ PaddleOCR场景应用覆盖通用,制造、金融、交通行业的主要OCR

### 通用

-| 类别 | 亮点 | 模型下载 | 教程 |

-| ---------------------- | ------------ | -------------- | --------------------------------------- |

-| 高精度中文识别模型SVTR | 比PP-OCRv3识别模型精度高3%,可用于数据挖掘或对预测效率要求不高的场景。| [模型下载](#2) | [中文](./高精度中文识别模型.md)/English |

-| 手写体识别 | 新增字形支持 | | |

+| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

+| ---------------------- | ------------------------------------------------------------ | -------------- | --------------------------------------- | ------------------------------------------------------------ |

+| 高精度中文识别模型SVTR | 比PP-OCRv3识别模型精度高3%,

可用于数据挖掘或对预测效率要求不高的场景。 | [模型下载](#2) | [中文](./高精度中文识别模型.md)/English |

|

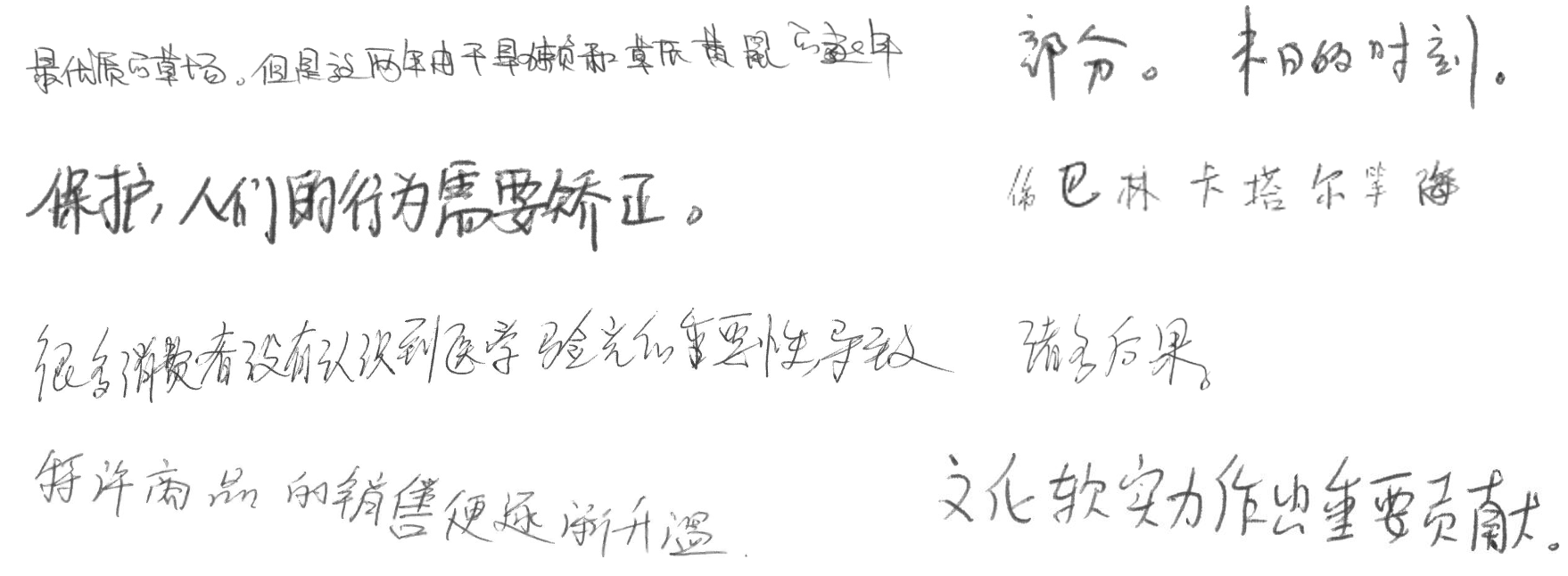

+| 手写体识别 | 新增字形支持 | [模型下载](#2) | [中文](./手写文字识别.md)/English |

|

@@ -42,14 +42,14 @@ PaddleOCR场景应用覆盖通用,制造、金融、交通行业的主要OCR

### 金融

-| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

-| -------------- | ------------------------ | -------------- | ----------------------------------- | ------------------------------------------------------------ |

-| 表单VQA | 多模态通用表单结构化提取 | [模型下载](#2) | [中文](./多模态表单识别.md)/English |

|

-| 增值税发票 | 尽请期待 | | | |

-| 印章检测与识别 | 端到端弯曲文本识别 | | | |

-| 通用卡证识别 | 通用结构化提取 | | | |

-| 身份证识别 | 结构化提取、图像阴影 | | | |

-| 合同比对 | 密集文本检测、NLP串联 | | | |

+| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

+| -------------- | ----------------------------- | -------------- | ------------------------------------- | ------------------------------------------------------------ |

+| 表单VQA | 多模态通用表单结构化提取 | [模型下载](#2) | [中文](./多模态表单识别.md)/English |

|

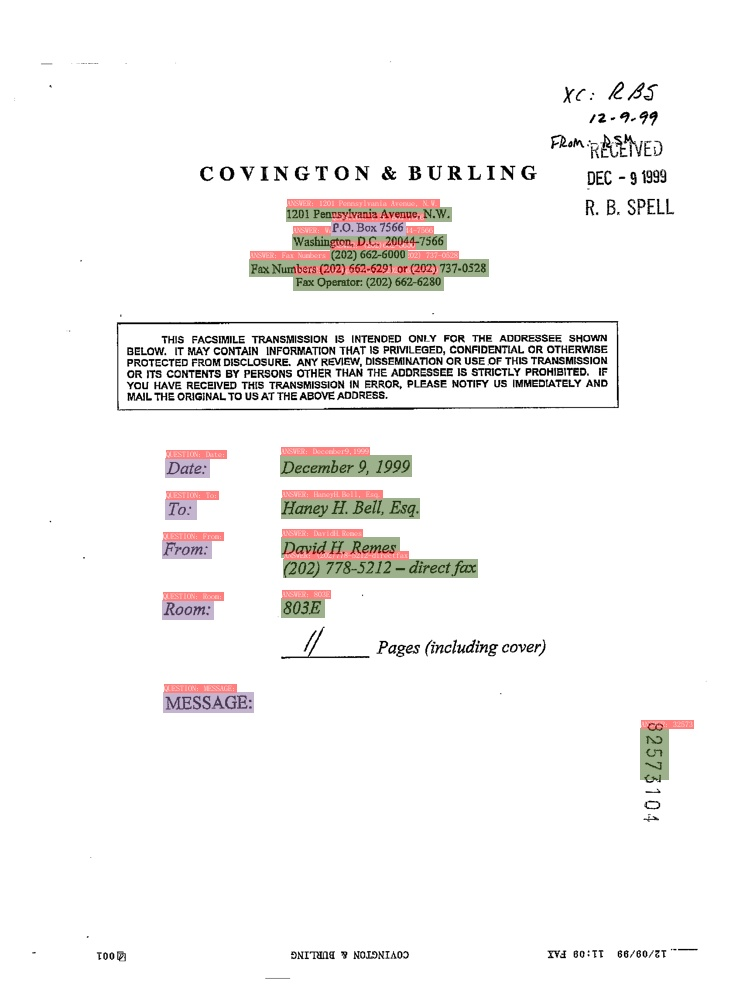

+| 增值税发票 | 关键信息抽取,SER、RE任务训练 | [模型下载](#2) | [中文](./发票关键信息抽取.md)/English |

|

+| 印章检测与识别 | 端到端弯曲文本识别 | | | |

+| 通用卡证识别 | 通用结构化提取 | | | |

+| 身份证识别 | 结构化提取、图像阴影 | | | |

+| 合同比对 | 密集文本检测、NLP串联 | | | |

diff --git a/applications/README_en.md b/applications/README_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..95c56a1f740faa95e1fe3adeaeb90bfe902f8ed8

--- /dev/null

+++ b/applications/README_en.md

@@ -0,0 +1,79 @@

+English| [简体中文](README.md)

+

+# Application

+

+PaddleOCR scene application covers general, manufacturing, finance, transportation industry of the main OCR vertical applications, on the basis of the general capabilities of PP-OCR, PP-Structure, in the form of notebook to show the use of scene data fine-tuning, model optimization methods, data augmentation and other content, for developers to quickly land OCR applications to provide demonstration and inspiration.

+

+- [Tutorial](#1)

+ - [General](#11)

+ - [Manufacturing](#12)

+ - [Finance](#13)

+ - [Transportation](#14)

+

+- [Model Download](#2)

+

+

+

+## Tutorial

+

+

+

+### General

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ---------------------------------------------- | ---------------- | -------------------- | --------------------------------------- | ------------------------------------------------------------ |

+| High-precision Chineses recognition model SVTR | New model | [Model Download](#2) | [中文](./高精度中文识别模型.md)/English |

|

+| Chinese handwriting recognition | New font support | [Model Download](#2) | [中文](./手写文字识别.md)/English |

|

+

+

+

+### Manufacturing

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ------------------------------ | ------------------------------------------------------------ | -------------------- | ------------------------------------------------------------ | ------------------------------------------------------------ |

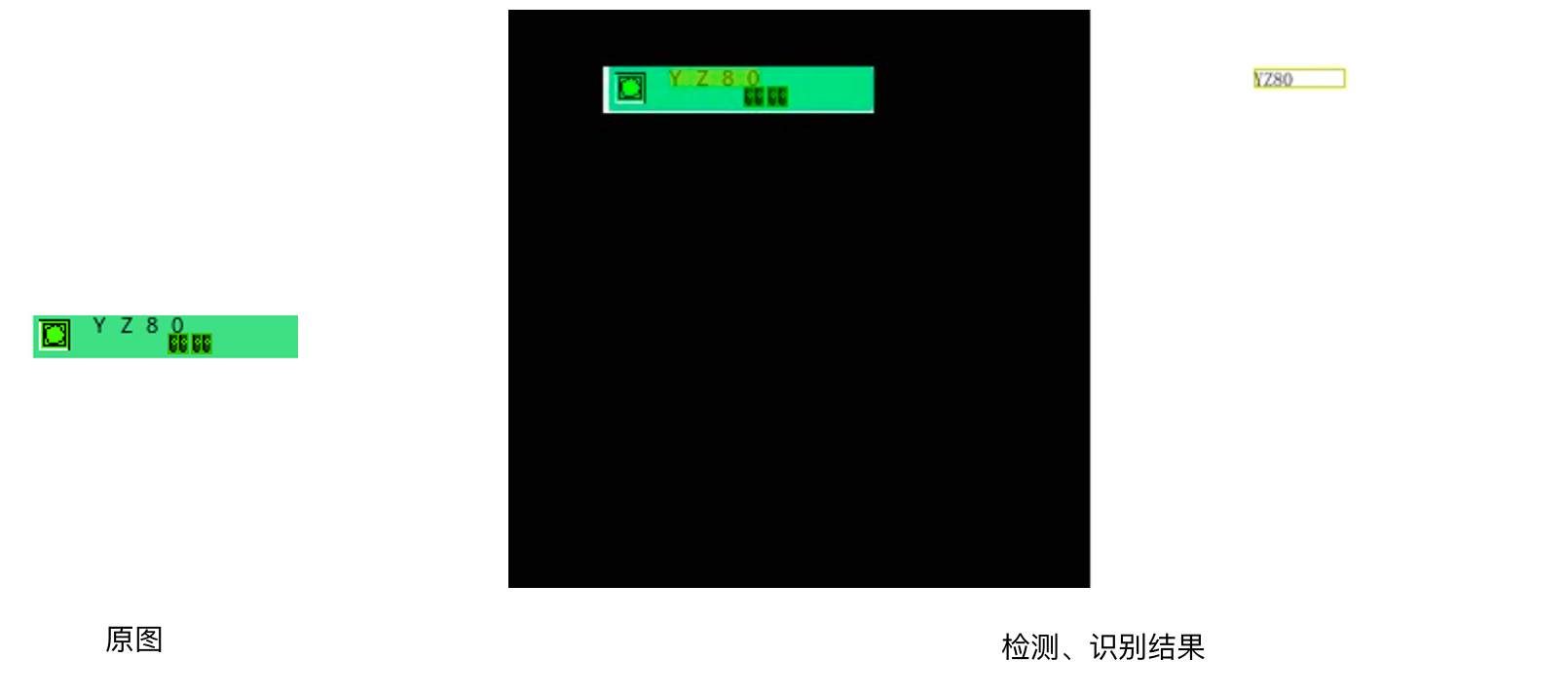

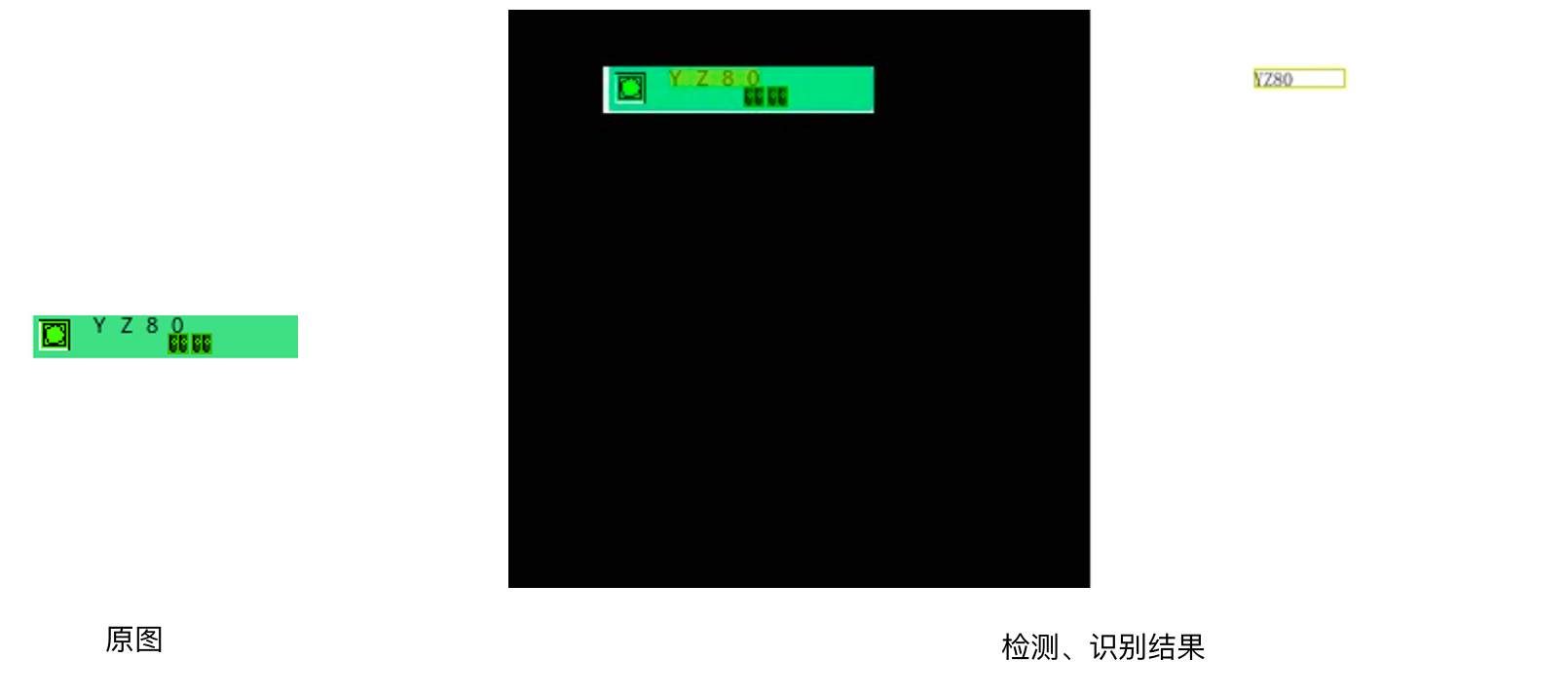

+| Digital tube | Digital tube data sythesis, recognition model fine-tuning | [Model Download](#2) | [中文](./光功率计数码管字符识别/光功率计数码管字符识别.md)/English |

|

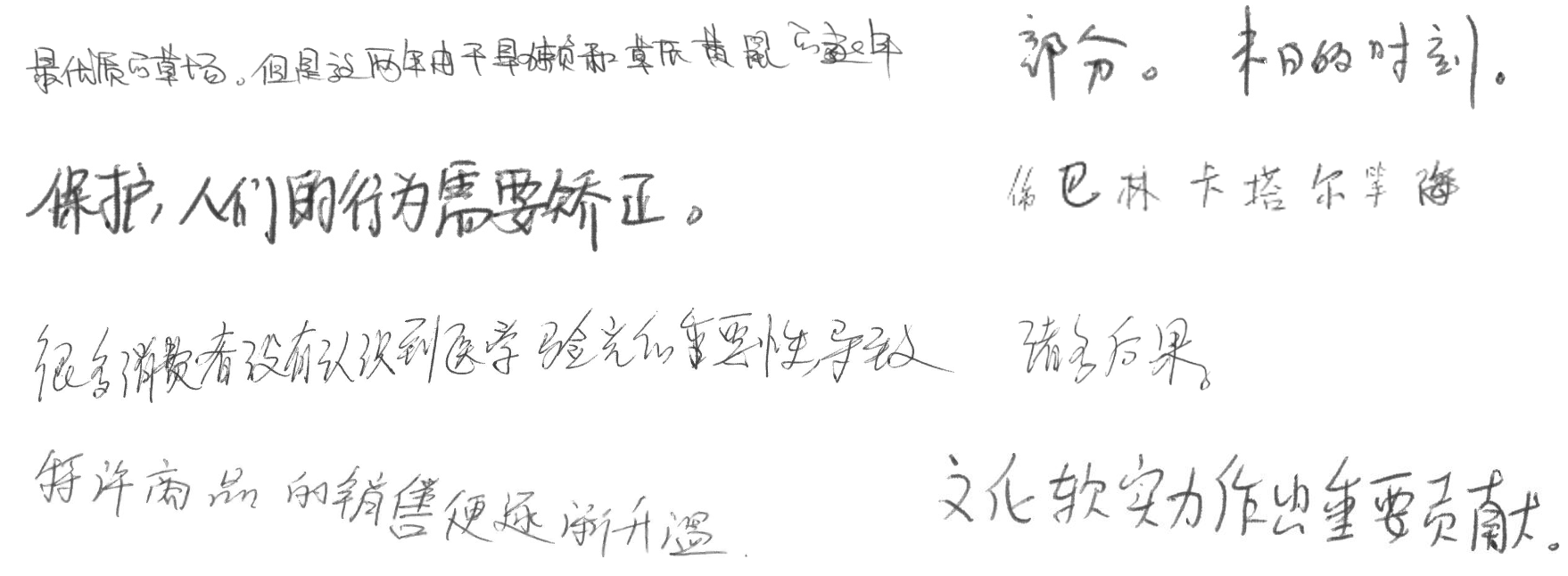

+| LCD screen | Detection model distillation, serving deployment | [Model Download](#2) | [中文](./液晶屏读数识别.md)/English |

|

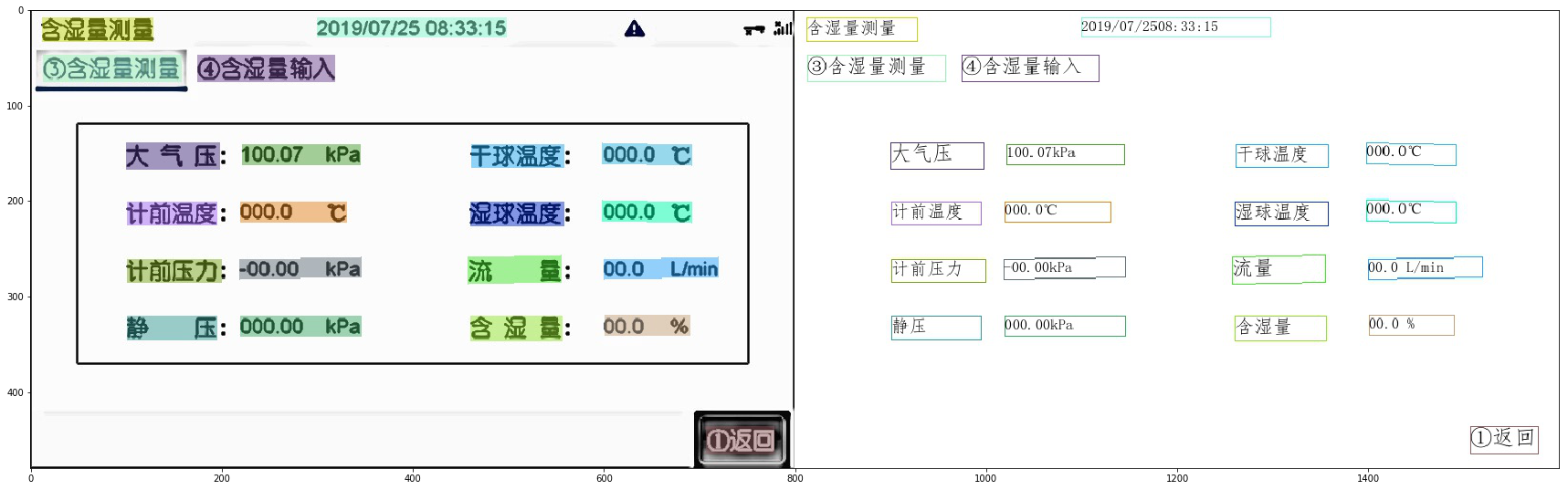

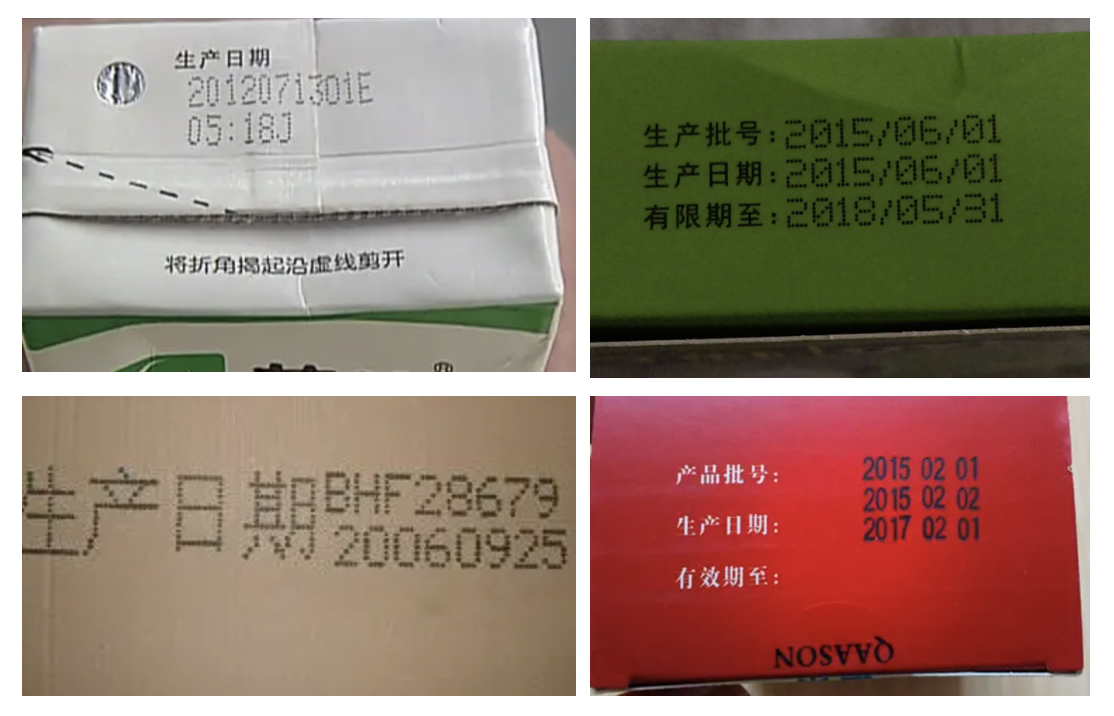

+| Packaging production data | Dot matrix character synthesis, overexposure and overdark text recognition | [Model Download](#2) | [中文](./包装生产日期识别.md)/English |

|

+| PCB text recognition | Small size text detection and recognition | [Model Download](#2) | [中文](./PCB字符识别/PCB字符识别.md)/English |

|

+| Meter text recognition | High-resolution image detection fine-tuning | [Model Download](#2) | | |

+| LCD character defect detection | Non-text character recognition | | | |

+

+

+

+### Finance

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ----------------------------------- | -------------------------------------------------- | -------------------- | ------------------------------------- | ------------------------------------------------------------ |

+| Form visual question and answer | Multimodal general form structured extraction | [Model Download](#2) | [中文](./多模态表单识别.md)/English |

|

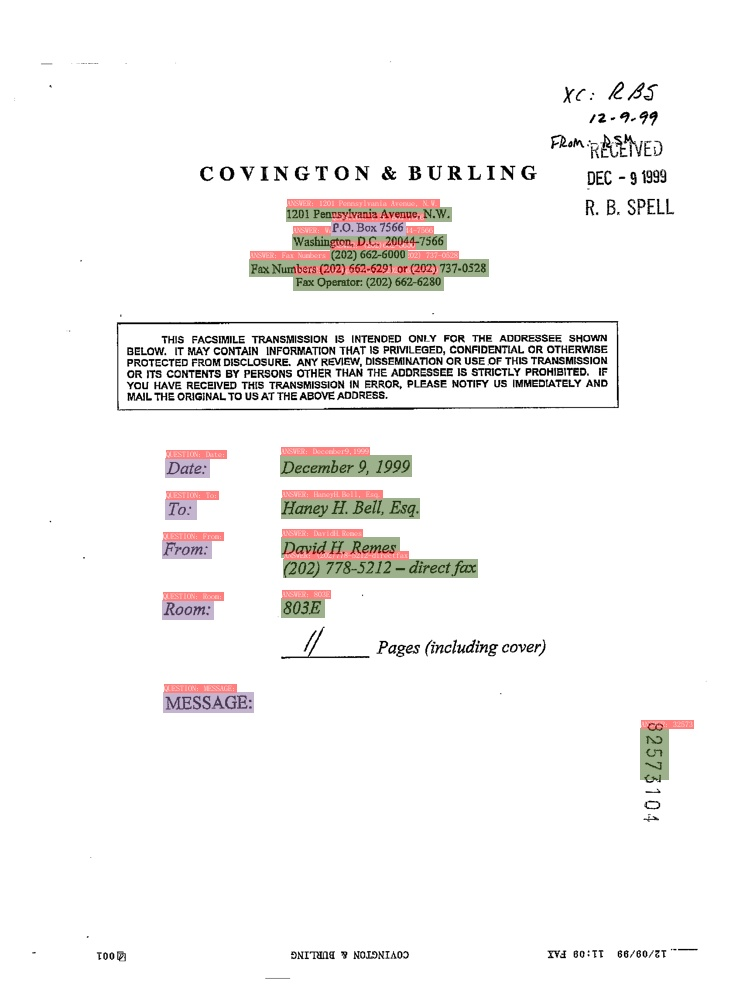

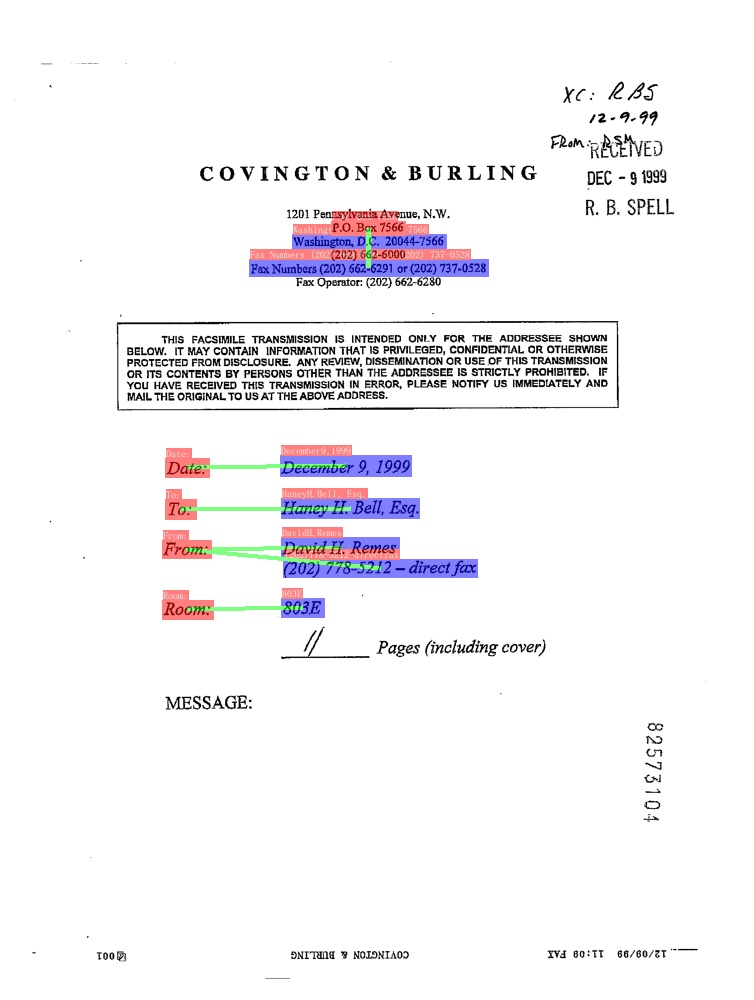

+| VAT invoice | Key information extraction, SER, RE task fine-tune | [Model Download](#2) | [中文](./发票关键信息抽取.md)/English |

|

+| Seal detection and recognition | End-to-end curved text recognition | | | |

+| Universal card recognition | Universal structured extraction | | | |

+| ID card recognition | Structured extraction, image shading | | | |

+| Contract key information extraction | Dense text detection, NLP concatenation | | | |

+

+

+

+### Transportation

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ----------------------------------------------- | ------------------------------------------------------------ | -------------------- | ----------------------------------- | ------------------------------------------------------------ |

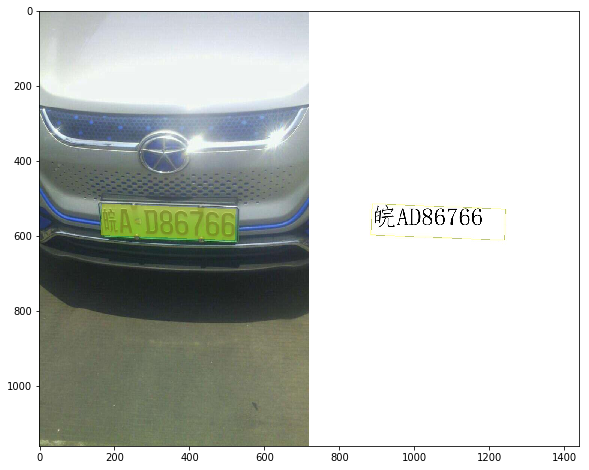

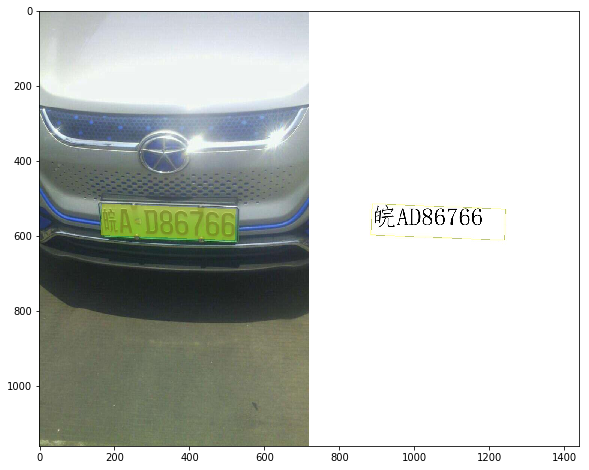

+| License plate recognition | Multi-angle images, lightweight models, edge-side deployment | [Model Download](#2) | [中文](./轻量级车牌识别.md)/English |

|

+| Driver's license/driving license identification | coming soon | | | |

+| Express text recognition | coming soon | | | |

+

+

+

+## Model Download

+

+- For international developers: We're building a way to download these trained models, and since the current tutorials are Chinese, if you are good at both Chinese and English, or willing to polish English documents, please let us know in [discussion](https://github.com/PaddlePaddle/PaddleOCR/discussions).

+- For Chinese developer: If you want to download the trained application model in the above scenarios, scan the QR code below with your WeChat, follow the PaddlePaddle official account to fill in the questionnaire, and join the PaddleOCR official group to get the 20G OCR learning materials (including "Dive into OCR" e-book, course video, application models and other materials)

+

+

+

+

+

+ If you are an enterprise developer and have not found a suitable solution in the above scenarios, you can fill in the [OCR Application Cooperation Survey Questionnaire](https://paddle.wjx.cn/vj/QwF7GKw.aspx) to carry out different levels of cooperation with the official team **for free**, including but not limited to problem abstraction, technical solution determination, project Q&A, joint research and development, etc. If you have already used paddleOCR in your project, you can also fill out this questionnaire to jointly promote with the PaddlePaddle and enhance the technical publicity of enterprises. Looking forward to your submission!

+

+

+ +

+

diff --git "a/applications/\345\217\221\347\245\250\345\205\263\351\224\256\344\277\241\346\201\257\346\212\275\345\217\226.md" "b/applications/\345\217\221\347\245\250\345\205\263\351\224\256\344\277\241\346\201\257\346\212\275\345\217\226.md"

index cd7fa1a0b3c988b21b33fe8f123e7d7c3e851ca5..14a6a1c8f1dd2350767afa162063b06791e79dd4 100644

--- "a/applications/\345\217\221\347\245\250\345\205\263\351\224\256\344\277\241\346\201\257\346\212\275\345\217\226.md"

+++ "b/applications/\345\217\221\347\245\250\345\205\263\351\224\256\344\277\241\346\201\257\346\212\275\345\217\226.md"

@@ -279,6 +279,12 @@ LayoutXLM与VI-LayoutXLM针对该场景的训练结果如下所示。

可以看出,对于VI-LayoutXLM相比LayoutXLM的Hmean高了1.3%。

+如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

+

+

+

+

+

#### 4.4.3 模型评估

diff --git a/deploy/hubserving/readme.md b/deploy/hubserving/readme.md

index 8144c2e7cefaed6f64763e414101445b2d80b81a..c583cc96ede437a1f65f9b1bddb69e84b7c54852 100755

--- a/deploy/hubserving/readme.md

+++ b/deploy/hubserving/readme.md

@@ -20,13 +20,14 @@ PaddleOCR提供2种服务部署方式:

# 基于PaddleHub Serving的服务部署

-hubserving服务部署目录下包括文本检测、文本方向分类,文本识别、文本检测+文本方向分类+文本识别3阶段串联,表格识别和PP-Structure六种服务包,请根据需求选择相应的服务包进行安装和启动。目录结构如下:

+hubserving服务部署目录下包括文本检测、文本方向分类,文本识别、文本检测+文本方向分类+文本识别3阶段串联,版面分析、表格识别和PP-Structure七种服务包,请根据需求选择相应的服务包进行安装和启动。目录结构如下:

```

deploy/hubserving/

└─ ocr_cls 文本方向分类模块服务包

└─ ocr_det 文本检测模块服务包

└─ ocr_rec 文本识别模块服务包

└─ ocr_system 文本检测+文本方向分类+文本识别串联服务包

+ └─ structure_layout 版面分析服务包

└─ structure_table 表格识别服务包

└─ structure_system PP-Structure服务包

```

@@ -41,6 +42,7 @@ deploy/hubserving/ocr_system/

```

## 1. 近期更新

+* 2022.08.23 新增版面分析服务。

* 2022.05.05 新增PP-OCRv3检测和识别模型。

* 2022.03.30 新增PP-Structure和表格识别两种服务。

@@ -59,9 +61,9 @@ pip3 install paddlehub==2.1.0 --upgrade -i https://mirror.baidu.com/pypi/simple

检测模型:./inference/ch_PP-OCRv3_det_infer/

识别模型:./inference/ch_PP-OCRv3_rec_infer/

方向分类器:./inference/ch_ppocr_mobile_v2.0_cls_infer/

-版面分析模型:./inference/layout_infer/

+版面分析模型:./inference/picodet_lcnet_x1_0_fgd_layout_infer/

表格结构识别模型:./inference/ch_ppstructure_mobile_v2.0_SLANet_infer/

-```

+```

**模型路径可在`params.py`中查看和修改。** 更多模型可以从PaddleOCR提供的模型库[PP-OCR](../../doc/doc_ch/models_list.md)和[PP-Structure](../../ppstructure/docs/models_list.md)下载,也可以替换成自己训练转换好的模型。

@@ -87,6 +89,9 @@ hub install deploy/hubserving/structure_table/

# 或,安装PP-Structure服务模块:

hub install deploy/hubserving/structure_system/

+

+# 或,安装版面分析服务模块:

+hub install deploy/hubserving/structure_layout/

```

* 在Windows环境下(文件夹的分隔符为`\`),安装示例如下:

@@ -108,6 +113,9 @@ hub install deploy\hubserving\structure_table\

# 或,安装PP-Structure服务模块:

hub install deploy\hubserving\structure_system\

+

+# 或,安装版面分析服务模块:

+hub install deploy\hubserving\structure_layout\

```

### 2.4 启动服务

@@ -118,7 +126,7 @@ $ hub serving start --modules [Module1==Version1, Module2==Version2, ...] \

--port XXXX \

--use_multiprocess \

--workers \

-```

+```

**参数:**

@@ -168,7 +176,7 @@ $ hub serving start --modules [Module1==Version1, Module2==Version2, ...] \

```shell

export CUDA_VISIBLE_DEVICES=3

hub serving start -c deploy/hubserving/ocr_system/config.json

-```

+```

## 3. 发送预测请求

配置好服务端,可使用以下命令发送预测请求,获取预测结果:

@@ -185,6 +193,7 @@ hub serving start -c deploy/hubserving/ocr_system/config.json

`http://127.0.0.1:8868/predict/ocr_system`

`http://127.0.0.1:8869/predict/structure_table`

`http://127.0.0.1:8870/predict/structure_system`

+`http://127.0.0.1:8870/predict/structure_layout`

- **image_dir**:测试图像路径,可以是单张图片路径,也可以是图像集合目录路径

- **visualize**:是否可视化结果,默认为False

- **output**:可视化结果保存路径,默认为`./hubserving_result`

@@ -203,17 +212,19 @@ hub serving start -c deploy/hubserving/ocr_system/config.json

|text_region|list|文本位置坐标|

|html|str|表格的html字符串|

|regions|list|版面分析+表格识别+OCR的结果,每一项为一个list,包含表示区域坐标的`bbox`,区域类型的`type`和区域结果的`res`三个字段|

+|layout|list|版面分析的结果,每一项一个dict,包含版面区域坐标的`bbox`,区域类型的`label`|

不同模块返回的字段不同,如,文本识别服务模块返回结果不含`text_region`字段,具体信息如下:

-| 字段名/模块名 | ocr_det | ocr_cls | ocr_rec | ocr_system | structure_table | structure_system |

-| --- | --- | --- | --- | --- | --- |--- |

-|angle| | ✔ | | ✔ | ||

-|text| | |✔|✔| | ✔ |

-|confidence| |✔ |✔| | | ✔|

-|text_region| ✔| | |✔ | | ✔|

-|html| | | | |✔ |✔|

-|regions| | | | |✔ |✔ |

+| 字段名/模块名 | ocr_det | ocr_cls | ocr_rec | ocr_system | structure_table | structure_system | Structure_layout |

+| --- | --- | --- | --- | --- | --- | --- | --- |

+|angle| | ✔ | | ✔ | |||

+|text| | |✔|✔| | ✔ | |

+|confidence| |✔ |✔| | | ✔| |

+|text_region| ✔| | |✔ | | ✔| |

+|html| | | | |✔ |✔||

+|regions| | | | |✔ |✔ | |

+|layout| | | | | | | ✔ |

**说明:** 如果需要增加、删除、修改返回字段,可在相应模块的`module.py`文件中进行修改,完整流程参考下一节自定义修改服务模块。

diff --git a/deploy/hubserving/readme_en.md b/deploy/hubserving/readme_en.md

index 06eaaebacb51744844473c0ffe8b189dc545492c..f09fe46417c7567305e5ce05a14be74d33450c31 100755

--- a/deploy/hubserving/readme_en.md

+++ b/deploy/hubserving/readme_en.md

@@ -20,13 +20,14 @@ PaddleOCR provides 2 service deployment methods:

# Service deployment based on PaddleHub Serving

-The hubserving service deployment directory includes six service packages: text detection, text angle class, text recognition, text detection+text angle class+text recognition three-stage series connection, table recognition and PP-Structure. Please select the corresponding service package to install and start service according to your needs. The directory is as follows:

+The hubserving service deployment directory includes seven service packages: text detection, text angle class, text recognition, text detection+text angle class+text recognition three-stage series connection, layout analysis, table recognition and PP-Structure. Please select the corresponding service package to install and start service according to your needs. The directory is as follows:

```

deploy/hubserving/

└─ ocr_det text detection module service package

└─ ocr_cls text angle class module service package

└─ ocr_rec text recognition module service package

└─ ocr_system text detection+text angle class+text recognition three-stage series connection service package

+ └─ structure_layout layout analysis service package

└─ structure_table table recognition service package

└─ structure_system PP-Structure service package

```

@@ -43,6 +44,7 @@ deploy/hubserving/ocr_system/

* 2022.05.05 add PP-OCRv3 text detection and recognition models.

* 2022.03.30 add PP-Structure and table recognition services。

+* 2022.08.23 add layout analysis services。

## 2. Quick start service

@@ -61,7 +63,7 @@ Before installing the service module, you need to prepare the inference model an

text detection model: ./inference/ch_PP-OCRv3_det_infer/

text recognition model: ./inference/ch_PP-OCRv3_rec_infer/

text angle classifier: ./inference/ch_ppocr_mobile_v2.0_cls_infer/

-layout parse model: ./inference/layout_infer/

+layout parse model: ./inference/picodet_lcnet_x1_0_fgd_layout_infer/

tanle recognition: ./inference/ch_ppstructure_mobile_v2.0_SLANet_infer/

```

@@ -89,6 +91,9 @@ hub install deploy/hubserving/structure_table/

# Or install PP-Structure service module

hub install deploy/hubserving/structure_system/

+

+# Or install layout analysis service module

+hub install deploy/hubserving/structure_layout/

```

* On Windows platform, the examples are as follows.

@@ -110,6 +115,9 @@ hub install deploy/hubserving/structure_table/

# Or install PP-Structure service module

hub install deploy\hubserving\structure_system\

+

+# Or install layout analysis service module

+hub install deploy\hubserving\structure_layout\

```

### 2.4 Start service

@@ -190,8 +198,9 @@ For example, if using the configuration file to start the text angle classificat

`http://127.0.0.1:8866/predict/ocr_cls`

`http://127.0.0.1:8867/predict/ocr_rec`

`http://127.0.0.1:8868/predict/ocr_system`

-`http://127.0.0.1:8869/predict/structure_table`

+`http://127.0.0.1:8869/predict/structure_table`

`http://127.0.0.1:8870/predict/structure_system`

+`http://127.0.0.1:8870/predict/structure_layout`

- **image_dir**:Test image path, can be a single image path or an image directory path

- **visualize**:Whether to visualize the results, the default value is False

- **output**:The floder to save Visualization result, default value is `./hubserving_result`

@@ -212,17 +221,19 @@ The returned result is a list. Each item in the list is a dict. The dict may con

|text_region|list|text location coordinates|

|html|str|table html str|

|regions|list|The result of layout analysis + table recognition + OCR, each item is a list, including `bbox` indicating area coordinates, `type` of area type and `res` of area results|

+|layout|list|The result of layout analysis, each item is a dict, including `bbox` indicating area coordinates, `label` of area type|

The fields returned by different modules are different. For example, the results returned by the text recognition service module do not contain `text_region`. The details are as follows:

-| field name/module name | ocr_det | ocr_cls | ocr_rec | ocr_system | structure_table | structure_system |

-| --- | --- | --- | --- | --- | --- |--- |

-|angle| | ✔ | | ✔ | ||

-|text| | |✔|✔| | ✔ |

-|confidence| |✔ |✔| | | ✔|

-|text_region| ✔| | |✔ | | ✔|

-|html| | | | |✔ |✔|

-|regions| | | | |✔ |✔ |

+| field name/module name | ocr_det | ocr_cls | ocr_rec | ocr_system | structure_table | structure_system | structure_layout |

+| --- | --- | --- | --- | --- | --- |--- |--- |

+|angle| | ✔ | | ✔ | || |

+|text| | |✔|✔| | ✔ | |

+|confidence| |✔ |✔| | | ✔| |

+|text_region| ✔| | |✔ | | ✔| |

+|html| | | | |✔ |✔| |

+|regions| | | | |✔ |✔ | |

+|layout| | | | | | |✔ |

**Note:** If you need to add, delete or modify the returned fields, you can modify the file `module.py` of the corresponding module. For the complete process, refer to the user-defined modification service module in the next section.

diff --git a/deploy/hubserving/structure_layout/__init__.py b/deploy/hubserving/structure_layout/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..c747d3e7aeca842933e083dffc01ef1fba3f4e85

--- /dev/null

+++ b/deploy/hubserving/structure_layout/__init__.py

@@ -0,0 +1,13 @@

+# Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

\ No newline at end of file

diff --git a/deploy/hubserving/structure_layout/config.json b/deploy/hubserving/structure_layout/config.json

new file mode 100644

index 0000000000000000000000000000000000000000..bc52c1ab603d5659f90a5ed8a72cdb06638fb9e5

--- /dev/null

+++ b/deploy/hubserving/structure_layout/config.json

@@ -0,0 +1,16 @@

+{

+ "modules_info": {

+ "structure_layout": {

+ "init_args": {

+ "version": "1.0.0",

+ "use_gpu": true

+ },

+ "predict_args": {

+ }

+ }

+ },

+ "port": 8871,

+ "use_multiprocess": false,

+ "workers": 2

+}

+

diff --git a/deploy/hubserving/structure_layout/module.py b/deploy/hubserving/structure_layout/module.py

new file mode 100644

index 0000000000000000000000000000000000000000..7091f123fc0039e4886d8763096952d7c445184c

--- /dev/null

+++ b/deploy/hubserving/structure_layout/module.py

@@ -0,0 +1,143 @@

+# Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import os

+import sys

+sys.path.insert(0, ".")

+import copy

+

+import time

+import paddlehub

+from paddlehub.common.logger import logger

+from paddlehub.module.module import moduleinfo, runnable, serving

+import cv2

+import paddlehub as hub

+

+from tools.infer.utility import base64_to_cv2

+from ppstructure.layout.predict_layout import LayoutPredictor as _LayoutPredictor

+from ppstructure.utility import parse_args

+from deploy.hubserving.structure_layout.params import read_params

+

+

+@moduleinfo(

+ name="structure_layout",

+ version="1.0.0",

+ summary="PP-Structure layout service",

+ author="paddle-dev",

+ author_email="paddle-dev@baidu.com",

+ type="cv/structure_layout")

+class LayoutPredictor(hub.Module):

+ def _initialize(self, use_gpu=False, enable_mkldnn=False):

+ """

+ initialize with the necessary elements

+ """

+ cfg = self.merge_configs()

+ cfg.use_gpu = use_gpu

+ if use_gpu:

+ try:

+ _places = os.environ["CUDA_VISIBLE_DEVICES"]

+ int(_places[0])

+ print("use gpu: ", use_gpu)

+ print("CUDA_VISIBLE_DEVICES: ", _places)

+ cfg.gpu_mem = 8000

+ except:

+ raise RuntimeError(

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES via export CUDA_VISIBLE_DEVICES=cuda_device_id."

+ )

+ cfg.ir_optim = True

+ cfg.enable_mkldnn = enable_mkldnn

+

+ self.layout_predictor = _LayoutPredictor(cfg)

+

+ def merge_configs(self):

+ # deafult cfg

+ backup_argv = copy.deepcopy(sys.argv)

+ sys.argv = sys.argv[:1]

+ cfg = parse_args()

+

+ update_cfg_map = vars(read_params())

+

+ for key in update_cfg_map:

+ cfg.__setattr__(key, update_cfg_map[key])

+

+ sys.argv = copy.deepcopy(backup_argv)

+ return cfg

+

+ def read_images(self, paths=[]):

+ images = []

+ for img_path in paths:

+ assert os.path.isfile(

+ img_path), "The {} isn't a valid file.".format(img_path)

+ img = cv2.imread(img_path)

+ if img is None:

+ logger.info("error in loading image:{}".format(img_path))

+ continue

+ images.append(img)

+ return images

+

+ def predict(self, images=[], paths=[]):

+ """

+ Get the chinese texts in the predicted images.

+ Args:

+ images (list(numpy.ndarray)): images data, shape of each is [H, W, C]. If images not paths

+ paths (list[str]): The paths of images. If paths not images

+ Returns:

+ res (list): The layout results of images.

+ """

+

+ if images != [] and isinstance(images, list) and paths == []:

+ predicted_data = images

+ elif images == [] and isinstance(paths, list) and paths != []:

+ predicted_data = self.read_images(paths)

+ else:

+ raise TypeError("The input data is inconsistent with expectations.")

+

+ assert predicted_data != [], "There is not any image to be predicted. Please check the input data."

+

+ all_results = []

+ for img in predicted_data:

+ if img is None:

+ logger.info("error in loading image")

+ all_results.append([])

+ continue

+ starttime = time.time()

+ res, _ = self.layout_predictor(img)

+ elapse = time.time() - starttime

+ logger.info("Predict time: {}".format(elapse))

+

+ for item in res:

+ item['bbox'] = item['bbox'].tolist()

+ all_results.append({'layout': res})

+ return all_results

+

+ @serving

+ def serving_method(self, images, **kwargs):

+ """

+ Run as a service.

+ """

+ images_decode = [base64_to_cv2(image) for image in images]

+ results = self.predict(images_decode, **kwargs)

+ return results

+

+

+if __name__ == '__main__':

+ layout = LayoutPredictor()

+ layout._initialize()

+ image_path = ['./ppstructure/docs/table/1.png']

+ res = layout.predict(paths=image_path)

+ print(res)

diff --git a/deploy/hubserving/structure_layout/params.py b/deploy/hubserving/structure_layout/params.py

new file mode 100755

index 0000000000000000000000000000000000000000..448b66ac42dac555f084299f525ee9e91ad481d8

--- /dev/null

+++ b/deploy/hubserving/structure_layout/params.py

@@ -0,0 +1,32 @@

+# Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+

+class Config(object):

+ pass

+

+

+def read_params():

+ cfg = Config()

+

+ # params for layout analysis

+ cfg.layout_model_dir = './inference/picodet_lcnet_x1_0_fgd_layout_infer/'

+ cfg.layout_dict_path = './ppocr/utils/dict/layout_dict/layout_publaynet_dict.txt'

+ cfg.layout_score_threshold = 0.5

+ cfg.layout_nms_threshold = 0.5

+ return cfg

diff --git a/doc/doc_ch/algorithm_rec_srn.md b/doc/doc_ch/algorithm_rec_srn.md

index ca7961359eb902fafee959b26d02f324aece233a..dd61a388c7024fabdadec1c120bd3341ed0197cc 100644

--- a/doc/doc_ch/algorithm_rec_srn.md

+++ b/doc/doc_ch/algorithm_rec_srn.md

@@ -78,7 +78,7 @@ python3 tools/export_model.py -c configs/rec/rec_r50_fpn_srn.yml -o Global.pretr

SRN文本识别模型推理,可以执行如下命令:

```

-python3 tools/infer/predict_rec.py --image_dir="./doc/imgs_words/en/word_1.png" --rec_model_dir="./inference/rec_srn/" --rec_image_shape="1,64,256" --rec_char_type="ch" --rec_algorithm="SRN" --rec_char_dict_path=./ppocr/utils/ic15_dict.txt --use_space_char=False

+python3 tools/infer/predict_rec.py --image_dir="./doc/imgs_words/en/word_1.png" --rec_model_dir="./inference/rec_srn/" --rec_image_shape="1,64,256" --rec_algorithm="SRN" --rec_char_dict_path=./ppocr/utils/ic15_dict.txt --use_space_char=False

```

diff --git a/doc/doc_ch/dataset/kie_datasets.md b/doc/doc_ch/dataset/kie_datasets.md

index 7f8d14cbc4ad724621f28c7d6ca1f8c2ac79f097..be5624dbf257150745a79db25f0367ccee339559 100644

--- a/doc/doc_ch/dataset/kie_datasets.md

+++ b/doc/doc_ch/dataset/kie_datasets.md

@@ -1,6 +1,6 @@

# 关键信息抽取数据集

-这里整理了常见的DocVQA数据集,持续更新中,欢迎各位小伙伴贡献数据集~

+这里整理了常见的关键信息抽取数据集,持续更新中,欢迎各位小伙伴贡献数据集~

- [FUNSD数据集](#funsd)

- [XFUND数据集](#xfund)

diff --git a/doc/doc_ch/inference_args.md b/doc/doc_ch/inference_args.md

index fa188ab7c800eaabae8a4ff54413af162dd60e43..36efc6fbf7a6ec62bc700964dc13261fecdb9bd5 100644

--- a/doc/doc_ch/inference_args.md

+++ b/doc/doc_ch/inference_args.md

@@ -15,7 +15,7 @@

| save_crop_res | bool | False | 是否保存OCR的识别文本图像 |

| crop_res_save_dir | str | "./output" | 保存OCR识别出来的文本图像路径 |

| use_mp | bool | False | 是否开启多进程预测 |

-| total_process_num | int | 6 | 开启的进城数,`use_mp`为`True`时生效 |

+| total_process_num | int | 6 | 开启的进程数,`use_mp`为`True`时生效 |

| process_id | int | 0 | 当前进程的id号,无需自己修改 |

| benchmark | bool | False | 是否开启benchmark,对预测速度、显存占用等进行统计 |

| save_log_path | str | "./log_output/" | 开启`benchmark`时,日志结果的保存文件夹 |

@@ -39,10 +39,10 @@

| 参数名称 | 类型 | 默认值 | 含义 |

| :--: | :--: | :--: | :--: |

-| det_algorithm | str | "DB" | 文本检测算法名称,目前支持`DB`, `EAST`, `SAST`, `PSE` |

+| det_algorithm | str | "DB" | 文本检测算法名称,目前支持`DB`, `EAST`, `SAST`, `PSE`, `DB++`, `FCE` |

| det_model_dir | str | xx | 检测inference模型路径 |

| det_limit_side_len | int | 960 | 检测的图像边长限制 |

-| det_limit_type | str | "max" | 检测的变成限制类型,目前支持`min`, `max`,`min`表示保证图像最短边不小于`det_limit_side_len`,`max`表示保证图像最长边不大于`det_limit_side_len` |

+| det_limit_type | str | "max" | 检测的边长限制类型,目前支持`min`和`max`,`min`表示保证图像最短边不小于`det_limit_side_len`,`max`表示保证图像最长边不大于`det_limit_side_len` |

其中,DB算法相关参数如下

@@ -85,9 +85,9 @@ PSE算法相关参数如下

| 参数名称 | 类型 | 默认值 | 含义 |

| :--: | :--: | :--: | :--: |

-| rec_algorithm | str | "CRNN" | 文本识别算法名称,目前支持`CRNN`, `SRN`, `RARE`, `NETR`, `SAR` |

+| rec_algorithm | str | "CRNN" | 文本识别算法名称,目前支持`CRNN`, `SRN`, `RARE`, `NETR`, `SAR`, `ViTSTR`, `ABINet`, `VisionLAN`, `SPIN`, `RobustScanner`, `SVTR`, `SVTR_LCNet` |

| rec_model_dir | str | 无,如果使用识别模型,该项是必填项 | 识别inference模型路径 |

-| rec_image_shape | list | [3, 32, 320] | 识别时的图像尺寸, |

+| rec_image_shape | list | [3, 48, 320] | 识别时的图像尺寸 |

| rec_batch_num | int | 6 | 识别的batch size |

| max_text_length | int | 25 | 识别结果最大长度,在`SRN`中有效 |

| rec_char_dict_path | str | "./ppocr/utils/ppocr_keys_v1.txt" | 识别的字符字典文件 |

diff --git a/doc/doc_ch/inference_ppocr.md b/doc/doc_ch/inference_ppocr.md

index 622ac995d37ce290ee51af06164b0c2aef8b5a14..514f905393984e2189b4c9c920ca4aeb91ac6da1 100644

--- a/doc/doc_ch/inference_ppocr.md

+++ b/doc/doc_ch/inference_ppocr.md

@@ -158,3 +158,5 @@ python3 tools/infer/predict_system.py --image_dir="./doc/imgs/00018069.jpg" --de

执行命令后,识别结果图像如下:

+

+更多关于推理超参数的配置与解释,请参考:[模型推理超参数解释教程](./inference_args.md)。

diff --git a/doc/doc_en/algorithm_en.md b/doc/doc_en/algorithm_en.md

deleted file mode 100644

index c880336b4ad528eab2cce479edf11fce0b43f435..0000000000000000000000000000000000000000

--- a/doc/doc_en/algorithm_en.md

+++ /dev/null

@@ -1,11 +0,0 @@

-# Academic Algorithms and Models

-

-PaddleOCR will add cutting-edge OCR algorithms and models continuously. Check out the supported models and tutorials by clicking the following list:

-

-

-- [text detection algorithms](./algorithm_overview_en.md#11)

-- [text recognition algorithms](./algorithm_overview_en.md#12)

-- [end-to-end algorithms](./algorithm_overview_en.md#2)

-- [table recognition algorithms](./algorithm_overview_en.md#3)

-

-Developers are welcome to contribute more algorithms! Please refer to [add new algorithm](./add_new_algorithm_en.md) guideline.

diff --git a/doc/doc_en/algorithm_overview_en.md b/doc/doc_en/algorithm_overview_en.md

index 3f59bf9c829920fb43fa7f89858b4586ceaac26f..5bf569e3e1649cfabbe196be7e1a55d1caa3bf61 100755

--- a/doc/doc_en/algorithm_overview_en.md

+++ b/doc/doc_en/algorithm_overview_en.md

@@ -7,7 +7,11 @@

- [3. Table Recognition Algorithms](#3)

- [4. Key Information Extraction Algorithms](#4)

-This tutorial lists the OCR algorithms supported by PaddleOCR, as well as the models and metrics of each algorithm on **English public datasets**. It is mainly used for algorithm introduction and algorithm performance comparison. For more models on other datasets including Chinese, please refer to [PP-OCR v2.0 models list](./models_list_en.md).

+This tutorial lists the OCR algorithms supported by PaddleOCR, as well as the models and metrics of each algorithm on **English public datasets**. It is mainly used for algorithm introduction and algorithm performance comparison. For more models on other datasets including Chinese, please refer to [PP-OCRv3 models list](./models_list_en.md).

+

+>>

+Developers are welcome to contribute more algorithms! Please refer to [add new algorithm](./add_new_algorithm_en.md) guideline.

+

diff --git a/doc/doc_en/dataset/kie_datasets_en.md b/doc/doc_en/dataset/kie_datasets_en.md

index 3a8b744fc0b2653aab5c1435996a2ef73dd336e4..7b476f77d0380496d026c448937e59b23ee24c87 100644

--- a/doc/doc_en/dataset/kie_datasets_en.md

+++ b/doc/doc_en/dataset/kie_datasets_en.md

@@ -1,9 +1,10 @@

-## Key Imnformation Extraction dataset

+## Key Information Extraction dataset

+

+Here are the common datasets key information extraction, which are being updated continuously. Welcome to contribute datasets.

-Here are the common DocVQA datasets, which are being updated continuously. Welcome to contribute datasets.

- [FUNSD dataset](#funsd)

- [XFUND dataset](#xfund)

-- [wildreceipt dataset](#wildreceipt数据集)

+- [wildreceipt dataset](#wildreceipt-dataset)

#### 1. FUNSD dataset

@@ -20,7 +21,8 @@ Here are the common DocVQA datasets, which are being updated continuously. Welco

#### 2. XFUND dataset

- **Data source**: https://github.com/doc-analysis/XFUND

-- **Data introduction**: XFUND is a multilingual form comprehension dataset, which contains form data in 7 different languages, and all are manually annotated in the form of key-value pairs. The data for each language contains 199 form data, which are divided into 149 training sets and 50 test sets. Part of the image and the annotation box visualization are shown below:

+- **Data introduction**: XFUND is a multilingual form comprehension dataset, which contains form data in 7 different languages, and all are manually annotated in the form of key-value pairs. The data for each language contains 199 form data, which are divided into 149 training sets and 50 test sets. Part of the image and the annotation box visualization are shown below.

+

diff --git a/doc/doc_en/dataset/layout_datasets_en.md b/doc/doc_en/dataset/layout_datasets_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..54c88609d0f25f65b4878fac96a43de5f1cc3164

--- /dev/null

+++ b/doc/doc_en/dataset/layout_datasets_en.md

@@ -0,0 +1,55 @@

+## Layout Analysis Dataset

+

+Here are the common datasets of layout anlysis, which are being updated continuously. Welcome to contribute datasets.

+

+- [PubLayNet dataset](#publaynet)

+- [CDLA dataset](#CDLA)

+- [TableBank dataset](#TableBank)

+

+

+Most of the layout analysis datasets are object detection datasets. In addition to open source datasets, you can also label or synthesize datasets using tools such as [labelme](https://github.com/wkentaro/labelme) and so on.

+

+

+

+

+#### 1. PubLayNet dataset

+

+- **Data source**: https://github.com/ibm-aur-nlp/PubLayNet

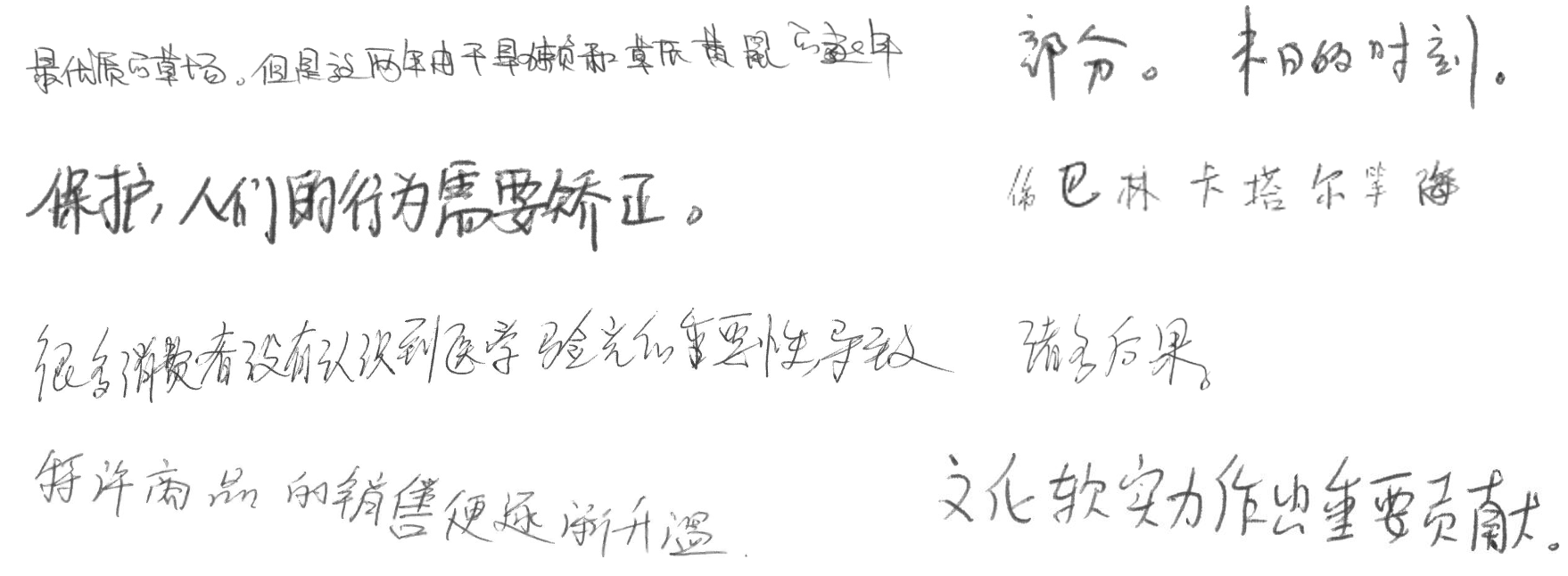

+- **Data introduction**: The PubLayNet dataset contains 350000 training images and 11000 validation images. There are 5 categories in total, namely: `text, title, list, table, figure`. Some images and their annotations as shown below.

+

+

+

+

+

+

+- **Download address**: https://developer.ibm.com/exchanges/data/all/publaynet/

+- **Note**: When using this dataset, you need to follow [CDLA-Permissive](https://cdla.io/permissive-1-0/) license.

+

+

+

+

+#### 2、CDLA数据集

+- **Data source**: https://github.com/buptlihang/CDLA

+- **Data introduction**: CDLA dataset contains 5000 training images and 1000 validation images with 10 categories, which are `Text, Title, Figure, Figure caption, Table, Table caption, Header, Footer, Reference, Equation`. Some images and their annotations as shown below.

+

+

+

+

+

+

+- **Download address**: https://github.com/buptlihang/CDLA

+- **Note**: When you train detection model on CDLA dataset using [PaddleDetection](https://github.com/PaddlePaddle/PaddleDetection/tree/develop), you need to remove the label `__ignore__` and `_background_`.

+

+

+

+#### 3、TableBank dataet

+- **Data source**: https://doc-analysis.github.io/tablebank-page/index.html

+- **Data introduction**: TableBank dataset contains 2 types of document: Latex (187199 training images, 7265 validation images and 5719 testing images) and Word (73383 training images 2735 validation images and 2281 testing images). Some images and their annotations as shown below.

+

+

+

+

+

+

+- **Data source**: https://doc-analysis.github.io/tablebank-page/index.html

+- **Note**: When using this dataset, you need to follow [Apache-2.0](https://github.com/doc-analysis/TableBank/blob/master/LICENSE) license.

diff --git a/doc/doc_en/inference_args_en.md b/doc/doc_en/inference_args_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..f2c99fc8297d47f27a219bf7d8e7f2ea518257f0

--- /dev/null

+++ b/doc/doc_en/inference_args_en.md

@@ -0,0 +1,120 @@

+# PaddleOCR Model Inference Parameter Explanation

+

+When using PaddleOCR for model inference, you can customize the modification parameters to modify the model, data, preprocessing, postprocessing, etc.(parameter file:[utility.py](../../tools/infer/utility.py)),The detailed parameter explanation is as follows:

+

+* Global parameters

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| image_dir | str | None, must be specified explicitly | Image or folder path |

+| vis_font_path | str | "./doc/fonts/simfang.ttf" | font path for visualization |

+| drop_score | float | 0.5 | Results with a recognition score less than this value will be discarded and will not be returned as results |

+| use_pdserving | bool | False | Whether to use Paddle Serving for prediction |

+| warmup | bool | False | Whether to enable warmup, this method can be used when statistical prediction time |

+| draw_img_save_dir | str | "./inference_results" | The saving folder of the system's tandem prediction OCR results |

+| save_crop_res | bool | False | Whether to save the recognized text image for OCR |

+| crop_res_save_dir | str | "./output" | Save the text image path recognized by OCR |

+| use_mp | bool | False | Whether to enable multi-process prediction |

+| total_process_num | int | 6 | The number of processes, which takes effect when `use_mp` is `True` |

+| process_id | int | 0 | The id number of the current process, no need to modify it yourself |

+| benchmark | bool | False | Whether to enable benchmark, and make statistics on prediction speed, memory usage, etc. |

+| save_log_path | str | "./log_output/" | Folder where log results are saved when `benchmark` is enabled |

+| show_log | bool | True | Whether to show the log information in the inference |

+| use_onnx | bool | False | Whether to enable onnx prediction |

+

+

+* Prediction engine related parameters

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| use_gpu | bool | True | Whether to use GPU for prediction |

+| ir_optim | bool | True | Whether to analyze and optimize the calculation graph. The prediction process can be accelerated when `ir_optim` is enabled |

+| use_tensorrt | bool | False | Whether to enable tensorrt |

+| min_subgraph_size | int | 15 | The minimum subgraph size in tensorrt. When the size of the subgraph is greater than this value, it will try to use the trt engine to calculate the subgraph. |

+| precision | str | fp32 | The precision of prediction, supports `fp32`, `fp16`, `int8` |

+| enable_mkldnn | bool | True | Whether to enable mkldnn |

+| cpu_threads | int | 10 | When mkldnn is enabled, the number of threads predicted by the cpu |

+

+* Text detection model related parameters

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| det_algorithm | str | "DB" | Text detection algorithm name, currently supports `DB`, `EAST`, `SAST`, `PSE`, `DB++`, `FCE` |

+| det_model_dir | str | xx | Detection inference model paths |

+| det_limit_side_len | int | 960 | image side length limit |

+| det_limit_type | str | "max" | The side length limit type, currently supports `min`and `max`. `min` means to ensure that the shortest side of the image is not less than `det_limit_side_len`, `max` means to ensure that the longest side of the image is not greater than `det_limit_side_len` |

+

+The relevant parameters of the DB algorithm are as follows

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| det_db_thresh | float | 0.3 | In the probability map output by DB, only pixels with a score greater than this threshold will be considered as text pixels |

+| det_db_box_thresh | float | 0.6 | Within the detection box, when the average score of all pixels is greater than the threshold, the result will be considered as a text area |

+| det_db_unclip_ratio | float | 1.5 | The expansion factor of the `Vatti clipping` algorithm, which is used to expand the text area |

+| max_batch_size | int | 10 | max batch size |

+| use_dilation | bool | False | Whether to inflate the segmentation results to obtain better detection results |

+| det_db_score_mode | str | "fast" | DB detection result score calculation method, supports `fast` and `slow`, `fast` calculates the average score according to all pixels within the bounding rectangle of the polygon, `slow` calculates the average score according to all pixels within the original polygon, The calculation speed is relatively slower, but more accurate. |

+

+The relevant parameters of the EAST algorithm are as follows

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| det_east_score_thresh | float | 0.8 | Threshold for score map in EAST postprocess |

+| det_east_cover_thresh | float | 0.1 | Average score threshold for text boxes in EAST postprocess |

+| det_east_nms_thresh | float | 0.2 | Threshold of nms in EAST postprocess |

+

+The relevant parameters of the SAST algorithm are as follows

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| det_sast_score_thresh | float | 0.5 | Score thresholds in SAST postprocess |

+| det_sast_nms_thresh | float | 0.5 | Thresholding of nms in SAST postprocess |

+| det_sast_polygon | bool | False | Whether polygon detection, curved text scene (such as Total-Text) is set to True |

+

+The relevant parameters of the PSE algorithm are as follows

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| det_pse_thresh | float | 0.0 | Threshold for binarizing the output image |

+| det_pse_box_thresh | float | 0.85 | Threshold for filtering boxes, below this threshold is discarded |

+| det_pse_min_area | float | 16 | The minimum area of the box, below this threshold is discarded |

+| det_pse_box_type | str | "box" | The type of the returned box, box: four point coordinates, poly: all point coordinates of the curved text |

+| det_pse_scale | int | 1 | The ratio of the input image relative to the post-processed image, such as an image of `640*640`, the network output is `160*160`, and when the scale is 2, the shape of the post-processed image is `320*320`. Increasing this value can speed up the post-processing speed, but it will bring about a decrease in accuracy |

+

+* Text recognition model related parameters

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| rec_algorithm | str | "CRNN" | Text recognition algorithm name, currently supports `CRNN`, `SRN`, `RARE`, `NETR`, `SAR`, `ViTSTR`, `ABINet`, `VisionLAN`, `SPIN`, `RobustScanner`, `SVTR`, `SVTR_LCNet` |

+| rec_model_dir | str | None, it is required if using the recognition model | recognition inference model paths |

+| rec_image_shape | list | [3, 48, 320] | Image size at the time of recognition |

+| rec_batch_num | int | 6 | batch size |

+| max_text_length | int | 25 | The maximum length of the recognition result, valid in `SRN` |

+| rec_char_dict_path | str | "./ppocr/utils/ppocr_keys_v1.txt" | character dictionary file |

+| use_space_char | bool | True | Whether to include spaces, if `True`, the `space` character will be added at the end of the character dictionary |

+

+

+* End-to-end text detection and recognition model related parameters

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| e2e_algorithm | str | "PGNet" | End-to-end algorithm name, currently supports `PGNet` |

+| e2e_model_dir | str | None, it is required if using the end-to-end model | end-to-end model inference model path |

+| e2e_limit_side_len | int | 768 | End-to-end input image side length limit |

+| e2e_limit_type | str | "max" | End-to-end side length limit type, currently supports `min` and `max`. `min` means to ensure that the shortest side of the image is not less than `e2e_limit_side_len`, `max` means to ensure that the longest side of the image is not greater than `e2e_limit_side_len` |

+| e2e_pgnet_score_thresh | float | 0.5 | End-to-end score threshold, results below this threshold are discarded |

+| e2e_char_dict_path | str | "./ppocr/utils/ic15_dict.txt" | Recognition dictionary file path |

+| e2e_pgnet_valid_set | str | "totaltext" | The name of the validation set, currently supports `totaltext`, `partvgg`, the post-processing methods corresponding to different data sets are different, and it can be consistent with the training process |

+| e2e_pgnet_mode | str | "fast" | PGNet's detection result score calculation method, supports `fast` and `slow`, `fast` calculates the average score according to all pixels within the bounding rectangle of the polygon, `slow` calculates the average score according to all pixels within the original polygon, The calculation speed is relatively slower, but more accurate. |

+

+

+* Angle classifier model related parameters

+

+| parameters | type | default | implication |

+| :--: | :--: | :--: | :--: |

+| use_angle_cls | bool | False | whether to use an angle classifier |

+| cls_model_dir | str | None, if you need to use, you must specify the path explicitly | angle classifier inference model path |

+| cls_image_shape | list | [3, 48, 192] | prediction shape |

+| label_list | list | ['0', '180'] | The angle value corresponding to the class id |

+| cls_batch_num | int | 6 | batch size |

+| cls_thresh | float | 0.9 | Prediction threshold, when the model prediction result is 180 degrees, and the score is greater than the threshold, the final prediction result is considered to be 180 degrees and needs to be flipped |

diff --git a/doc/doc_en/inference_ppocr_en.md b/doc/doc_en/inference_ppocr_en.md

index 0f57b0ba6b226c19ecb1e0b60afdfa34302b8e78..4c9db51e1d23e5ac05cfcb3ec43748df75c0b36c 100755

--- a/doc/doc_en/inference_ppocr_en.md

+++ b/doc/doc_en/inference_ppocr_en.md

@@ -160,3 +160,5 @@ python3 tools/infer/predict_system.py --image_dir="./doc/imgs/00018069.jpg" --de

After executing the command, the recognition result image is as follows:

+

+For more configuration and explanation of inference parameters, please refer to:[Model Inference Parameters Explained Tutorial](./inference_args_en.md)。

diff --git a/doc/features.png b/doc/features.png

deleted file mode 100644

index 273e4beb74771b723ab732f703863fa2a3a4c21c..0000000000000000000000000000000000000000

Binary files a/doc/features.png and /dev/null differ

diff --git a/doc/features_en.png b/doc/features_en.png

deleted file mode 100644

index 310a1b7e50920304521a5fa68c5c2e2a881d3917..0000000000000000000000000000000000000000

Binary files a/doc/features_en.png and /dev/null differ

diff --git a/paddleocr.py b/paddleocr.py

index f6fb095af34a58cc91b9fd0f22b2e95bf833e010..1a236f2474cf3d5ef1fc6ab61955157bb1837db2 100644

--- a/paddleocr.py

+++ b/paddleocr.py

@@ -286,11 +286,17 @@ MODEL_URLS = {

}

},

'layout': {

- 'ch': {

+ 'en': {

'url':

- 'https://paddleocr.bj.bcebos.com/ppstructure/models/layout/picodet_lcnet_x1_0_layout_infer.tar',

+ 'https://paddleocr.bj.bcebos.com/ppstructure/models/layout/picodet_lcnet_x1_0_fgd_layout_infer.tar',

'dict_path':

'ppocr/utils/dict/layout_dict/layout_publaynet_dict.txt'

+ },

+ 'ch': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/ppstructure/models/layout/picodet_lcnet_x1_0_fgd_layout_cdla_infer.tar',

+ 'dict_path':

+ 'ppocr/utils/dict/layout_dict/layout_cdla_dict.txt'

}

}

}

@@ -556,7 +562,7 @@ class PPStructure(StructureSystem):

params.table_model_dir,

os.path.join(BASE_DIR, 'whl', 'table'), table_model_config['url'])

layout_model_config = get_model_config(

- 'STRUCTURE', params.structure_version, 'layout', 'ch')

+ 'STRUCTURE', params.structure_version, 'layout', lang)

params.layout_model_dir, layout_url = confirm_model_dir_url(

params.layout_model_dir,

os.path.join(BASE_DIR, 'whl', 'layout'), layout_model_config['url'])

@@ -578,7 +584,7 @@ class PPStructure(StructureSystem):

logger.debug(params)

super().__init__(params)

- def __call__(self, img, return_ocr_result_in_table=False):

+ def __call__(self, img, return_ocr_result_in_table=False, img_idx=0):

if isinstance(img, str):

# download net image

if img.startswith('http'):

@@ -596,7 +602,8 @@ class PPStructure(StructureSystem):

if isinstance(img, np.ndarray) and len(img.shape) == 2:

img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)

- res, _ = super().__call__(img, return_ocr_result_in_table)

+ res, _ = super().__call__(

+ img, return_ocr_result_in_table, img_idx=img_idx)

return res

@@ -631,10 +638,54 @@ def main():

for line in result:

logger.info(line)

elif args.type == 'structure':

- result = engine(img_path)

- save_structure_res(result, args.output, img_name)

-

- for item in result:

+ img, flag_gif, flag_pdf = check_and_read(img_path)

+ if not flag_gif and not flag_pdf:

+ img = cv2.imread(img_path)

+

+ if not flag_pdf:

+ if img is None:

+ logger.error("error in loading image:{}".format(image_file))

+ continue

+ img_paths = [[img_path, img]]

+ else:

+ img_paths = []

+ for index, pdf_img in enumerate(img):

+ os.makedirs(

+ os.path.join(args.output, img_name), exist_ok=True)

+ pdf_img_path = os.path.join(

+ args.output, img_name,

+ img_name + '_' + str(index) + '.jpg')

+ cv2.imwrite(pdf_img_path, pdf_img)

+ img_paths.append([pdf_img_path, pdf_img])

+

+ all_res = []

+ for index, (new_img_path, img) in enumerate(img_paths):

+ logger.info('processing {}/{} page:'.format(index + 1,

+ len(img_paths)))

+ new_img_name = os.path.basename(new_img_path).split('.')[0]

+ result = engine(new_img_path, img_idx=index)

+ save_structure_res(result, args.output, img_name, index)

+

+ if args.recovery and result != []:

+ from copy import deepcopy

+ from ppstructure.recovery.recovery_to_doc import sorted_layout_boxes

+ h, w, _ = img.shape

+ result_cp = deepcopy(result)

+ result_sorted = sorted_layout_boxes(result_cp, w)

+ all_res += result_sorted

+

+ if args.recovery and all_res != []:

+ try:

+ from ppstructure.recovery.recovery_to_doc import convert_info_docx

+ convert_info_docx(img, all_res, args.output, img_name,

+ args.save_pdf)

+ except Exception as ex:

+ logger.error(

+ "error in layout recovery image:{}, err msg: {}".format(

+ img_name, ex))

+ continue

+

+ for item in all_res:

item.pop('img')

item.pop('res')

logger.info(item)

diff --git a/ppocr/utils/save_load.py b/ppocr/utils/save_load.py

index f86125521d19342f63a9fcb3bdcaed02cc4c6463..aa65f290c0a5f4f13b3103fb4404815e2ae74a88 100644

--- a/ppocr/utils/save_load.py

+++ b/ppocr/utils/save_load.py

@@ -104,8 +104,9 @@ def load_model(config, model, optimizer=None, model_type='det'):

continue

pre_value = params[key]

if pre_value.dtype == paddle.float16:

- pre_value = pre_value.astype(paddle.float32)

is_float16 = True

+ if pre_value.dtype != value.dtype:

+ pre_value = pre_value.astype(value.dtype)

if list(value.shape) == list(pre_value.shape):

new_state_dict[key] = pre_value

else:

@@ -162,8 +163,9 @@ def load_pretrained_params(model, path):

logger.warning("The pretrained params {} not in model".format(k1))

else:

if params[k1].dtype == paddle.float16:

- params[k1] = params[k1].astype(paddle.float32)

is_float16 = True

+ if params[k1].dtype != state_dict[k1].dtype:

+ params[k1] = params[k1].astype(state_dict[k1].dtype)

if list(state_dict[k1].shape) == list(params[k1].shape):

new_state_dict[k1] = params[k1]

else:

diff --git a/ppstructure/README.md b/ppstructure/README.md

index 66df10b2ec4d52fb743c40893d5fc5aa7d6ab5be..fb3697bc1066262833ee20bcbb8f79833f264f14 100644

--- a/ppstructure/README.md

+++ b/ppstructure/README.md

@@ -1,120 +1,115 @@

English | [简体中文](README_ch.md)

- [1. Introduction](#1-introduction)

-- [2. Update log](#2-update-log)

-- [3. Features](#3-features)

-- [4. Results](#4-results)

- - [4.1 Layout analysis and table recognition](#41-layout-analysis-and-table-recognition)

- - [4.2 KIE](#42-kie)

-- [5. Quick start](#5-quick-start)

-- [6. PP-Structure System](#6-pp-structure-system)

- - [6.1 Layout analysis and table recognition](#61-layout-analysis-and-table-recognition)

- - [6.1.1 Layout analysis](#611-layout-analysis)

- - [6.1.2 Table recognition](#612-table-recognition)

- - [6.2 KIE](#62-kie)

-- [7. Model List](#7-model-list)

- - [7.1 Layout analysis model](#71-layout-analysis-model)

- - [7.2 OCR and table recognition model](#72-ocr-and-table-recognition-model)

- - [7.3 KIE model](#73-kie-model)

+- [2. Features](#2-features)

+- [3. Results](#3-results)

+ - [3.1 Layout analysis and table recognition](#31-layout-analysis-and-table-recognition)

+ - [3.2 Layout Recovery](#32-layout-recovery)

+ - [3.3 KIE](#33-kie)

+- [4. Quick start](#4-quick-start)

+- [5. Model List](#5-model-list)

## 1. Introduction

-PP-Structure is an OCR toolkit that can be used for document analysis and processing with complex structures, designed to help developers better complete document understanding tasks

+PP-Structure is an intelligent document analysis system developed by the PaddleOCR team, which aims to help developers better complete tasks related to document understanding such as layout analysis and table recognition.

-## 2. Update log

-* 2022.02.12 KIE add LayoutLMv2 model。

-* 2021.12.07 add [KIE SER and RE tasks](kie/README.md)。

+The pipeline of PP-Structurev2 system is shown below. The document image first passes through the image direction correction module to identify the direction of the entire image and complete the direction correction. Then, two tasks of layout information analysis and key information extraction can be completed.

-## 3. Features

+- In the layout analysis task, the image first goes through the layout analysis model to divide the image into different areas such as text, table, and figure, and then analyze these areas separately. For example, the table area is sent to the form recognition module for structured recognition, and the text area is sent to the OCR engine for text recognition. Finally, the layout recovery module restores it to a word or pdf file with the same layout as the original image;

+- In the key information extraction task, the OCR engine is first used to extract the text content, and then the SER(semantic entity recognition) module obtains the semantic entities in the image, and finally the RE(relationship extraction) module obtains the correspondence between the semantic entities, thereby extracting the required key information.

+

-The main features of PP-Structure are as follows:

+More technical details: 👉 [PP-Structurev2 Technical Report](docs/PP-Structurev2_introduction.md)

-- Support the layout analysis of documents, divide the documents into 5 types of areas **text, title, table, image and list** (conjunction with Layout-Parser)

-- Support to extract the texts from the text, title, picture and list areas (used in conjunction with PP-OCR)

-- Support to extract excel files from the table areas

-- Support python whl package and command line usage, easy to use

-- Support custom training for layout analysis and table structure tasks

-- Support Document Key Information Extraction (KIE) tasks: Semantic Entity Recognition (SER) and Relation Extraction (RE)

+PP-Structurev2 supports independent use or flexible collocation of each module. For example, you can use layout analysis alone or table recognition alone. Click the corresponding link below to get the tutorial for each independent module:

-## 4. Results

+- [Layout Analysis](layout/README.md)

+- [Table Recognition](table/README.md)

+- [Key Information Extraction](kie/README.md)

+- [Layout Recovery](recovery/README.md)

-### 4.1 Layout analysis and table recognition

+## 2. Features

-

-

-The figure shows the pipeline of layout analysis + table recognition. The image is first divided into four areas of image, text, title and table by layout analysis, and then OCR detection and recognition is performed on the three areas of image, text and title, and the table is performed table recognition, where the image will also be stored for use.

-

-### 4.2 KIE

-

-* SER

-*

- |

----|---

-

-Different colored boxes in the figure represent different categories. For xfun dataset, there are three categories: query, answer and header:

+The main features of PP-Structurev2 are as follows:

+- Support layout analysis of documents in the form of images/pdfs, which can be divided into areas such as **text, titles, tables, figures, formulas, etc.**;

+- Support common Chinese and English **table detection** tasks;

+- Support structured table recognition, and output the final result to **Excel file**;

+- Support multimodal-based Key Information Extraction (KIE) tasks - **Semantic Entity Recognition** (SER) and **Relation Extraction (RE);

+- Support **layout recovery**, that is, restore the document in word or pdf format with the same layout as the original image;

+- Support customized training and multiple inference deployment methods such as python whl package quick start;

+- Connect with the semi-automatic data labeling tool PPOCRLabel, which supports the labeling of layout analysis, table recognition, and SER.

-* Dark purple: header

-* Light purple: query

-* Army green: answer

+## 3. Results

-The corresponding category and OCR recognition results are also marked at the top left of the OCR detection box.

+PP-Structurev2 supports the independent use or flexible collocation of each module. For example, layout analysis can be used alone, or table recognition can be used alone. Only the visualization effects of several representative usage methods are shown here.

+### 3.1 Layout analysis and table recognition

-* RE

-

- |

----|---

+The figure shows the pipeline of layout analysis + table recognition. The image is first divided into four areas of image, text, title and table by layout analysis, and then OCR detection and recognition is performed on the three areas of image, text and title, and the table is performed table recognition, where the image will also be stored for use.

+

+### 3.2 Layout recovery

-In the figure, the red box represents the question, the blue box represents the answer, and the question and answer are connected by green lines. The corresponding category and OCR recognition results are also marked at the top left of the OCR detection box.

+The following figure shows the effect of layout recovery based on the results of layout analysis and table recognition in the previous section.

+

-## 5. Quick start

+### 3.3 KIE

-Start from [Quick Installation](./docs/quickstart.md)

+* SER

-## 6. PP-Structure System

+Different colored boxes in the figure represent different categories.

-### 6.1 Layout analysis and table recognition

+

+

+

-

+

+

+

-In PP-Structure, the image will be divided into 5 types of areas **text, title, image list and table**. For the first 4 types of areas, directly use PP-OCR system to complete the text detection and recognition. For the table area, after the table structuring process, the table in image is converted into an Excel file with the same table style.

+

+

+

-#### 6.1.1 Layout analysis

+

+

+

-Layout analysis classifies image by region, including the use of Python scripts of layout analysis tools, extraction of designated category detection boxes, performance indicators, and custom training layout analysis models. For details, please refer to [document](layout/README.md).

+

+

+

-#### 6.1.2 Table recognition

+* RE

-Table recognition converts table images into excel documents, which include the detection and recognition of table text and the prediction of table structure and cell coordinates. For detailed instructions, please refer to [document](table/README.md)

+In the figure, the red box represents `Question`, the blue box represents `Answer`, and `Question` and `Answer` are connected by green lines.

-### 6.2 KIE

+

+

+

-Multi-modal based Key Information Extraction (KIE) methods include Semantic Entity Recognition (SER) and Relation Extraction (RE) tasks. Based on SER task, text recognition and classification in images can be completed. Based on THE RE task, we can extract the relation of the text content in the image, such as judge the problem pair. For details, please refer to [document](kie/README.md)

+

+

+

-## 7. Model List

+

+

+

-PP-Structure Series Model List (Updating)

+

+

+

-### 7.1 Layout analysis model

+## 4. Quick start

-|model name|description|download|label_map|

-| --- | --- | --- |--- |

-| ppyolov2_r50vd_dcn_365e_publaynet | The layout analysis model trained on the PubLayNet dataset can divide image into 5 types of areas **text, title, table, picture, and list** | [PubLayNet](https://paddle-model-ecology.bj.bcebos.com/model/layout-parser/ppyolov2_r50vd_dcn_365e_publaynet.tar) | {0: "Text", 1: "Title", 2: "List", 3:"Table", 4:"Figure"}|

+Start from [Quick Start](./docs/quickstart_en.md).

-### 7.2 OCR and table recognition model

+## 5. Model List

-|model name|description|model size|download|

-| --- | --- | --- | --- |

-|ch_PP-OCRv3_det| [New] Lightweight model, supporting Chinese, English, multilingual text detection | 3.8M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar)|

-|ch_PP-OCRv3_rec| [New] Lightweight model, supporting Chinese, English, multilingual text recognition | 12.4M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar) |

-|ch_ppstructure_mobile_v2.0_SLANet|Chinese table recognition model based on SLANet|9.3M|[inference model](https://paddleocr.bj.bcebos.com/ppstructure/models/slanet/ch_ppstructure_mobile_v2.0_SLANet_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/ppstructure/models/slanet/ch_ppstructure_mobile_v2.0_SLANet_train.tar) |

+Some tasks need to use both the structured analysis models and the OCR models. For example, the table recognition task needs to use the table recognition model for structured analysis, and the OCR model to recognize the text in the table. Please select the appropriate models according to your specific needs.

-### 7.3 KIE model

+For structural analysis related model downloads, please refer to:

+- [PP-Structure Model Zoo](./docs/models_list_en.md)

-|model name|description|model size|download|

-| --- | --- | --- | --- |

-|ser_LayoutXLM_xfun_zhd|SER model trained on xfun Chinese dataset based on LayoutXLM|1.4G|[inference model coming soon]() / [trained model](https://paddleocr.bj.bcebos.com/pplayout/ser_LayoutXLM_xfun_zh.tar) |

-|re_LayoutXLM_xfun_zh|RE model trained on xfun Chinese dataset based on LayoutXLM|1.4G|[inference model coming soon]() / [trained model](https://paddleocr.bj.bcebos.com/pplayout/re_LayoutXLM_xfun_zh.tar) |

+For OCR related model downloads, please refer to:

+- [PP-OCR Model Zoo](../doc/doc_en/models_list_en.md)

-If you need to use other models, you can download the model in [PPOCR model_list](../doc/doc_en/models_list_en.md) and [PPStructure model_list](./docs/models_list.md)

diff --git a/ppstructure/README_ch.md b/ppstructure/README_ch.md

index 6539002bfe1497853dfa11eb774cf3c453567988..87a9c625b32c32e9c7fffb8ebc9b9fdf3b2130db 100644

--- a/ppstructure/README_ch.md

+++ b/ppstructure/README_ch.md

@@ -21,7 +21,7 @@ PP-Structurev2系统流程图如下所示,文档图像首先经过图像矫正

- 关键信息抽取任务中,首先使用OCR引擎提取文本内容,然后由语义实体识别模块获取图像中的语义实体,最后经关系抽取模块获取语义实体之间的对应关系,从而提取需要的关键信息。

-更多技术细节:👉 [PP-Structurev2技术报告]()

+更多技术细节:👉 [PP-Structurev2技术报告](docs/PP-Structurev2_introduction.md)

PP-Structurev2支持各个模块独立使用或灵活搭配,如,可以单独使用版面分析,或单独使用表格识别,点击下面相应链接获取各个独立模块的使用教程:

@@ -76,6 +76,14 @@ PP-Structurev2支持各个模块独立使用或灵活搭配,如,可以单独

+

+

+

+

+

+

+

+

* RE

图中红色框表示`问题`,蓝色框表示`答案`,`问题`和`答案`之间使用绿色线连接。

@@ -88,6 +96,14 @@ PP-Structurev2支持各个模块独立使用或灵活搭配,如,可以单独

+

+ +

+ +

+  +

+ +

+

+

+  +

+ +

+

+

+ +

+

+

+ +

+ +

+  +

+

+

+ |

+| 手写体识别 | 新增字形支持 | [模型下载](#2) | [中文](./手写文字识别.md)/English |

|

+| 手写体识别 | 新增字形支持 | [模型下载](#2) | [中文](./手写文字识别.md)/English |  |

@@ -42,14 +42,14 @@ PaddleOCR场景应用覆盖通用,制造、金融、交通行业的主要OCR

### 金融

-| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

-| -------------- | ------------------------ | -------------- | ----------------------------------- | ------------------------------------------------------------ |

-| 表单VQA | 多模态通用表单结构化提取 | [模型下载](#2) | [中文](./多模态表单识别.md)/English |

|

@@ -42,14 +42,14 @@ PaddleOCR场景应用覆盖通用,制造、金融、交通行业的主要OCR

### 金融

-| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

-| -------------- | ------------------------ | -------------- | ----------------------------------- | ------------------------------------------------------------ |

-| 表单VQA | 多模态通用表单结构化提取 | [模型下载](#2) | [中文](./多模态表单识别.md)/English |  |

-| 增值税发票 | 尽请期待 | | | |

-| 印章检测与识别 | 端到端弯曲文本识别 | | | |

-| 通用卡证识别 | 通用结构化提取 | | | |

-| 身份证识别 | 结构化提取、图像阴影 | | | |

-| 合同比对 | 密集文本检测、NLP串联 | | | |

+| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

+| -------------- | ----------------------------- | -------------- | ------------------------------------- | ------------------------------------------------------------ |

+| 表单VQA | 多模态通用表单结构化提取 | [模型下载](#2) | [中文](./多模态表单识别.md)/English |

|

-| 增值税发票 | 尽请期待 | | | |

-| 印章检测与识别 | 端到端弯曲文本识别 | | | |

-| 通用卡证识别 | 通用结构化提取 | | | |

-| 身份证识别 | 结构化提取、图像阴影 | | | |

-| 合同比对 | 密集文本检测、NLP串联 | | | |

+| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

+| -------------- | ----------------------------- | -------------- | ------------------------------------- | ------------------------------------------------------------ |

+| 表单VQA | 多模态通用表单结构化提取 | [模型下载](#2) | [中文](./多模态表单识别.md)/English |  |

+| 增值税发票 | 关键信息抽取,SER、RE任务训练 | [模型下载](#2) | [中文](./发票关键信息抽取.md)/English |

|

+| 增值税发票 | 关键信息抽取,SER、RE任务训练 | [模型下载](#2) | [中文](./发票关键信息抽取.md)/English |  |

+| 印章检测与识别 | 端到端弯曲文本识别 | | | |

+| 通用卡证识别 | 通用结构化提取 | | | |

+| 身份证识别 | 结构化提取、图像阴影 | | | |

+| 合同比对 | 密集文本检测、NLP串联 | | | |

diff --git a/applications/README_en.md b/applications/README_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..95c56a1f740faa95e1fe3adeaeb90bfe902f8ed8

--- /dev/null

+++ b/applications/README_en.md

@@ -0,0 +1,79 @@

+English| [简体中文](README.md)

+

+# Application

+

+PaddleOCR scene application covers general, manufacturing, finance, transportation industry of the main OCR vertical applications, on the basis of the general capabilities of PP-OCR, PP-Structure, in the form of notebook to show the use of scene data fine-tuning, model optimization methods, data augmentation and other content, for developers to quickly land OCR applications to provide demonstration and inspiration.

+

+- [Tutorial](#1)

+ - [General](#11)

+ - [Manufacturing](#12)

+ - [Finance](#13)

+ - [Transportation](#14)

+

+- [Model Download](#2)

+

+

+

+## Tutorial

+

+

+

+### General

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ---------------------------------------------- | ---------------- | -------------------- | --------------------------------------- | ------------------------------------------------------------ |

+| High-precision Chineses recognition model SVTR | New model | [Model Download](#2) | [中文](./高精度中文识别模型.md)/English |

|

+| 印章检测与识别 | 端到端弯曲文本识别 | | | |

+| 通用卡证识别 | 通用结构化提取 | | | |

+| 身份证识别 | 结构化提取、图像阴影 | | | |

+| 合同比对 | 密集文本检测、NLP串联 | | | |

diff --git a/applications/README_en.md b/applications/README_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..95c56a1f740faa95e1fe3adeaeb90bfe902f8ed8

--- /dev/null

+++ b/applications/README_en.md

@@ -0,0 +1,79 @@

+English| [简体中文](README.md)

+

+# Application

+

+PaddleOCR scene application covers general, manufacturing, finance, transportation industry of the main OCR vertical applications, on the basis of the general capabilities of PP-OCR, PP-Structure, in the form of notebook to show the use of scene data fine-tuning, model optimization methods, data augmentation and other content, for developers to quickly land OCR applications to provide demonstration and inspiration.

+

+- [Tutorial](#1)

+ - [General](#11)

+ - [Manufacturing](#12)

+ - [Finance](#13)

+ - [Transportation](#14)

+

+- [Model Download](#2)

+

+

+

+## Tutorial

+

+

+

+### General

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ---------------------------------------------- | ---------------- | -------------------- | --------------------------------------- | ------------------------------------------------------------ |

+| High-precision Chineses recognition model SVTR | New model | [Model Download](#2) | [中文](./高精度中文识别模型.md)/English |  |

+| Chinese handwriting recognition | New font support | [Model Download](#2) | [中文](./手写文字识别.md)/English |

|

+| Chinese handwriting recognition | New font support | [Model Download](#2) | [中文](./手写文字识别.md)/English |  |

+

+

+

+### Manufacturing

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ------------------------------ | ------------------------------------------------------------ | -------------------- | ------------------------------------------------------------ | ------------------------------------------------------------ |

+| Digital tube | Digital tube data sythesis, recognition model fine-tuning | [Model Download](#2) | [中文](./光功率计数码管字符识别/光功率计数码管字符识别.md)/English |

|

+

+

+

+### Manufacturing

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ------------------------------ | ------------------------------------------------------------ | -------------------- | ------------------------------------------------------------ | ------------------------------------------------------------ |