Merge pull request #3467 from WenmuZhou/table2

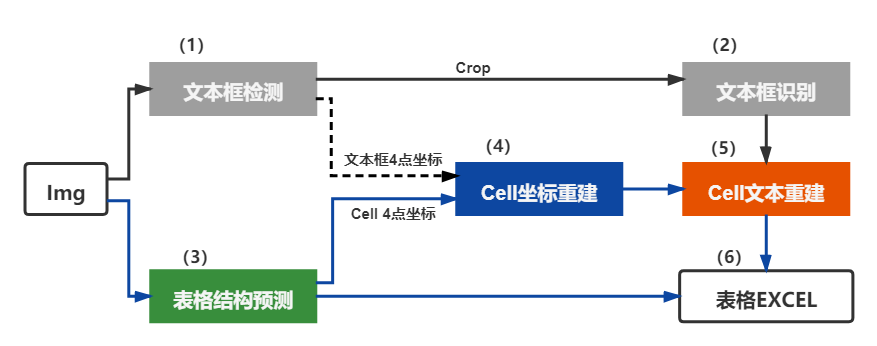

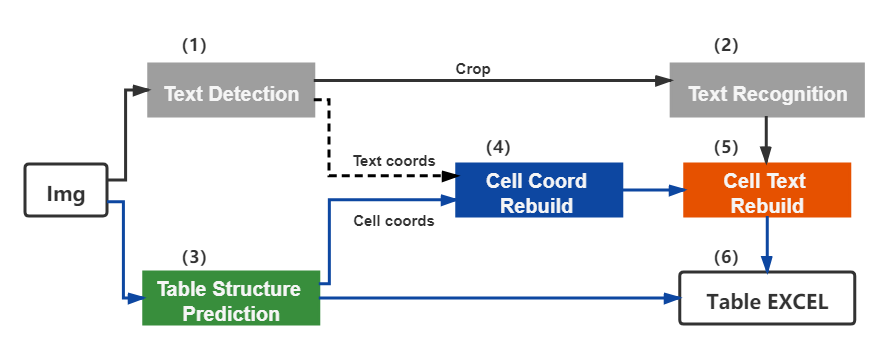

replace image in layoutparse doc


55.4 KB

doc/table/layout.jpg (new file, mode 0 → 100644, 671.5 KB)


ppstructure/layout/README_en.md (new file, mode 0 → 100644)