update

Showing

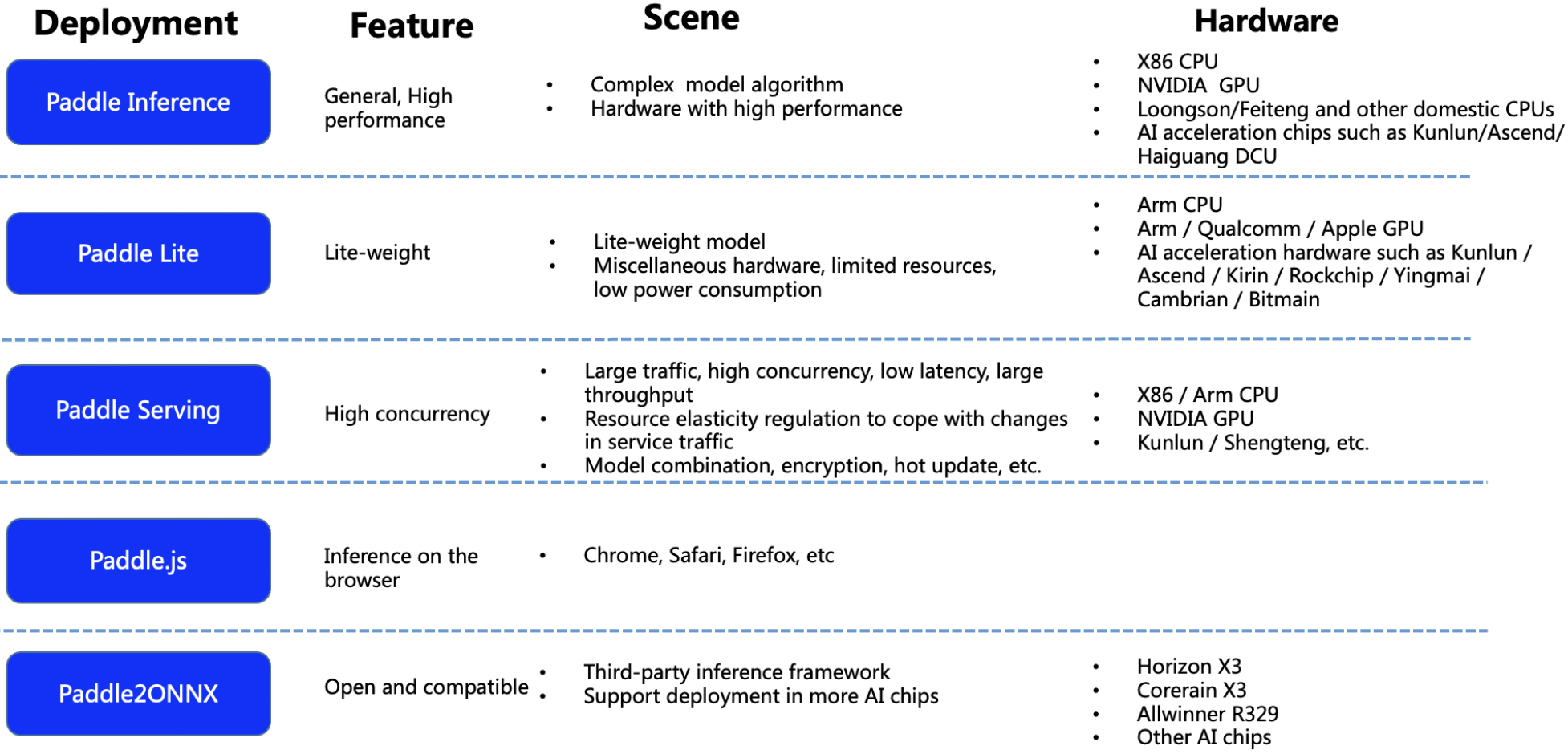

deploy/README.md

0 → 100644

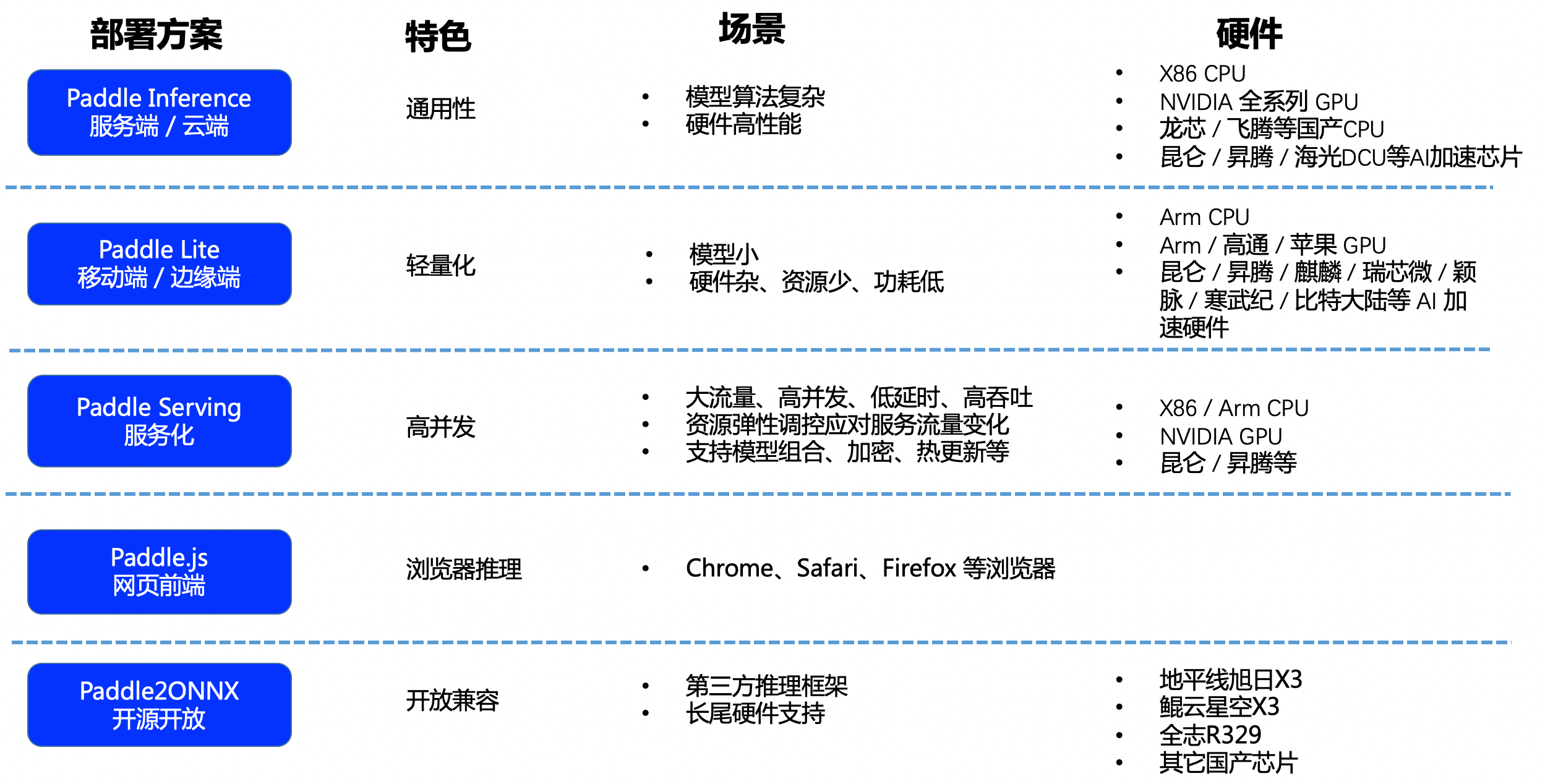

deploy/README_ch.md

0 → 100644

deploy/lite/readme_ch.md

0 → 100644

deploy/lite/readme_en.md

已删除

100644 → 0

deploy/paddle2onnx/readme_ch.md

0 → 100644

deploy/paddlejs/README.md

0 → 100644

deploy/paddlejs/README_ch.md

0 → 100644

deploy/paddlejs/paddlejs_demo.gif

0 → 100644

553.7 KB

deploy/readme_ch.md

已删除

100644 → 0

doc/deployment.png

0 → 100644

992.0 KB

doc/deployment_en.png

0 → 100644

650.2 KB