diff --git a/README_ch.md b/README_ch.md

index f837980498c4b2e2c55a44192b7d3a2c7afb0ba9..e801ce561cb41aafb376f81a3016f0a6b838320d 100755

--- a/README_ch.md

+++ b/README_ch.md

@@ -71,6 +71,8 @@ PaddleOCR旨在打造一套丰富、领先、且实用的OCR工具库,助力

## 《动手学OCR》电子书

- [《动手学OCR》电子书📚](./doc/doc_ch/ocr_book.md)

+## 场景应用

+- PaddleOCR场景应用覆盖通用,制造、金融、交通行业的主要OCR垂类应用,在PP-OCR、PP-Structure的通用能力基础之上,以notebook的形式展示利用场景数据微调、模型优化方法、数据增广等内容,为开发者快速落地OCR应用提供示范与启发。详情可查看[README](./applications)。

## 开源社区

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/PCB\345\255\227\347\254\246\350\257\206\345\210\253.md" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/PCB\345\255\227\347\254\246\350\257\206\345\210\253.md"

index a5052e2897ab9f09a6ed7b747f9fa1198af2a8ab..ee13bacffdb65e6300a034531a527fdca4ed29f9 100644

--- "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/PCB\345\255\227\347\254\246\350\257\206\345\210\253.md"

+++ "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/PCB\345\255\227\347\254\246\350\257\206\345\210\253.md"

@@ -206,7 +206,11 @@ Eval.dataset.transforms.DetResizeForTest: 尺寸

limit_type: 'min'

```

-然后执行评估代码

+如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

+

+

+

+

+

+

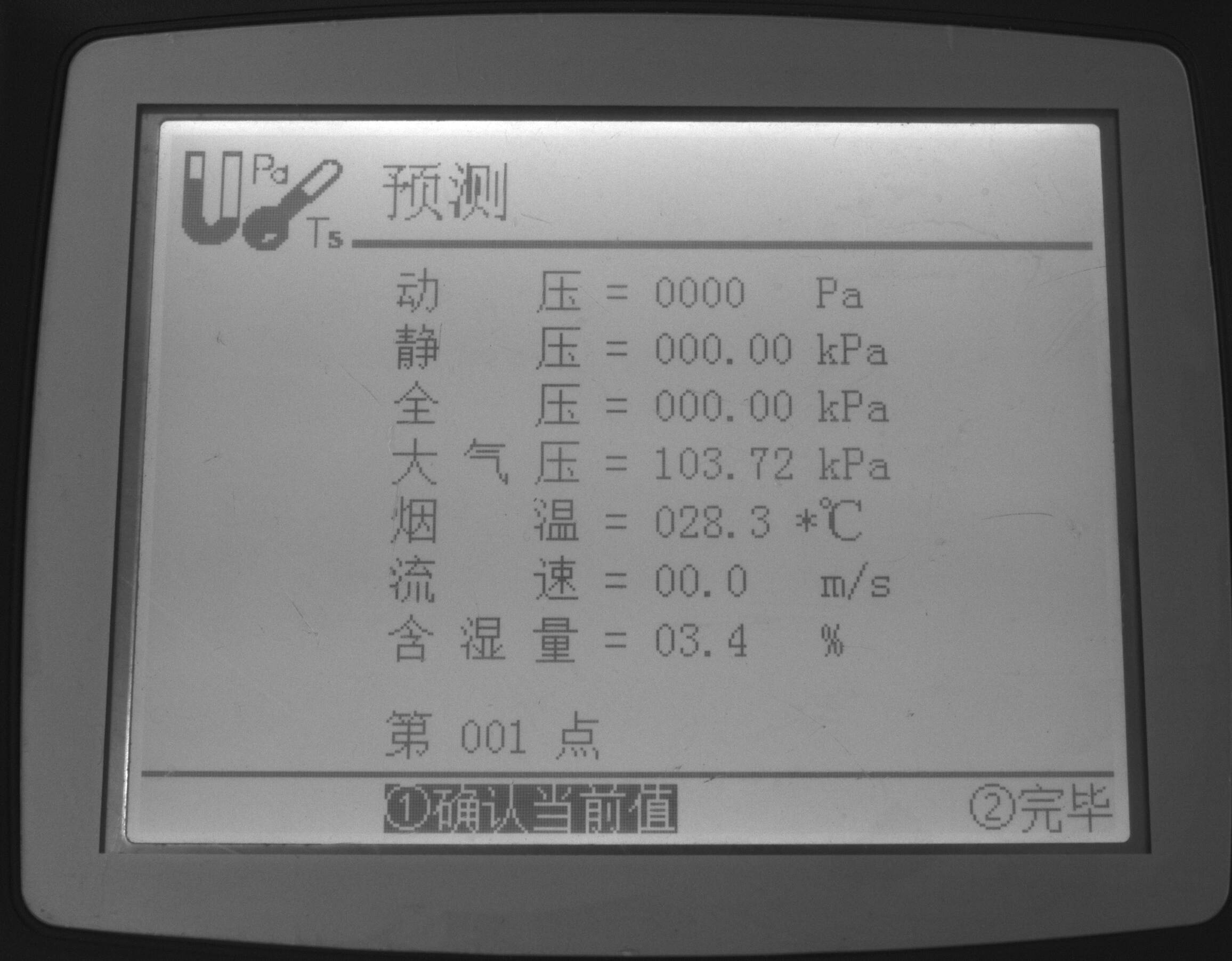

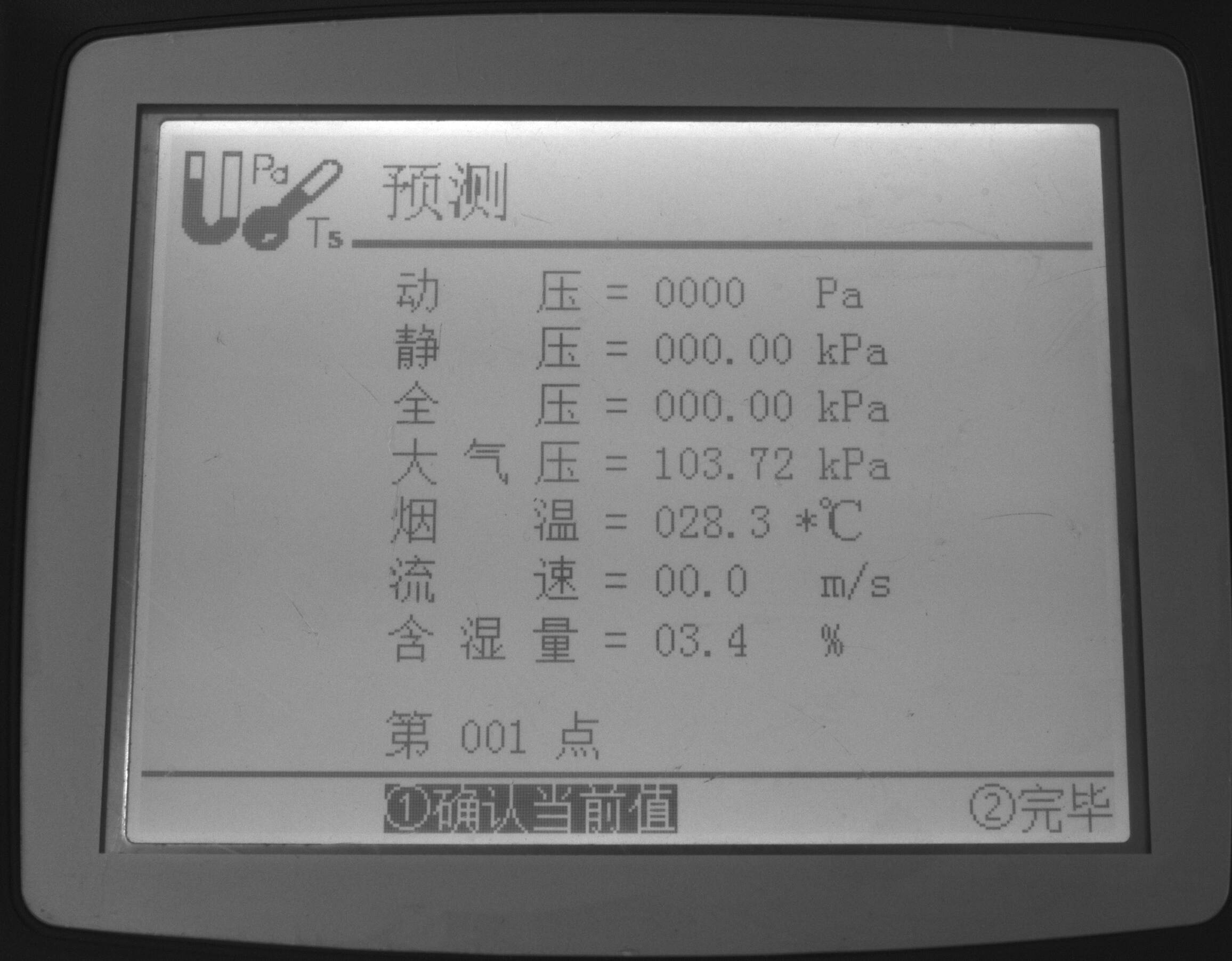

+目前光功率计缺少将数据直接输出的功能,需要人工读数。这一项工作单调重复,如果可以使用机器替代人工,将节约大量成本。针对上述问题,希望通过摄像头拍照->智能读数的方式高效地完成此任务。

+

+为实现智能读数,通常会采取文本检测+文本识别的方案:

+

+第一步,使用文本检测模型定位出光功率计中的数字部分;

+

+第二步,使用文本识别模型获得准确的数字和单位信息。

+

+本项目主要介绍如何完成第二步文本识别部分,包括:真实评估集的建立、训练数据的合成、基于 PP-OCRv3 和 SVTR_Tiny 两个模型进行训练,以及评估和推理。

+

+本项目难点如下:

+

+- 光功率计数码管字符数据较少,难以获取。

+- 数码管中小数点占像素较少,容易漏识别。

+

+针对以上问题, 本例选用 PP-OCRv3 和 SVTR_Tiny 两个高精度模型训练,同时提供了真实数据挖掘案例和数据合成案例。基于 PP-OCRv3 模型,在构建的真实评估集上精度从 52% 提升至 72%,SVTR_Tiny 模型精度可达到 78.9%。

+

+aistudio项目链接: [光功率计数码管字符识别](https://aistudio.baidu.com/aistudio/projectdetail/4049044?contributionType=1)

+

+## 2. PaddleOCR 快速使用

+

+PaddleOCR 旨在打造一套丰富、领先、且实用的OCR工具库,助力开发者训练出更好的模型,并应用落地。

+

+

+

+

+官方提供了适用于通用场景的高精轻量模型,首先使用官方提供的 PP-OCRv3 模型预测图片,验证下当前模型在光功率计场景上的效果。

+

+- 准备环境

+

+```

+python3 -m pip install -U pip

+python3 -m pip install paddleocr

+```

+

+

+- 测试效果

+

+测试图:

+

+

+

+

+```

+paddleocr --lang=ch --det=Fase --image_dir=data

+```

+

+得到如下测试结果:

+

+```

+('.7000', 0.6885431408882141)

+```

+

+发现数字识别较准,然而对负号和小数点识别不准确。 由于PP-OCRv3的训练数据大多为通用场景数据,在特定的场景上效果可能不够好。因此需要基于场景数据进行微调。

+

+下面就主要介绍如何在光功率计(数码管)场景上微调训练。

+

+

+## 3. 开始训练

+

+### 3.1 数据准备

+

+特定的工业场景往往很难获取开源的真实数据集,光功率计也是如此。在实际工业场景中,可以通过摄像头采集的方法收集大量真实数据,本例中重点介绍数据合成方法和真实数据挖掘方法,如何利用有限的数据优化模型精度。

+

+数据集分为两个部分:合成数据,真实数据, 其中合成数据由 text_renderer 工具批量生成得到, 真实数据通过爬虫等方式在百度图片中搜索并使用 PPOCRLabel 标注得到。

+

+

+- 合成数据

+

+本例中数据合成工具使用的是 [text_renderer](https://github.com/Sanster/text_renderer), 该工具可以合成用于文本识别训练的文本行数据:

+

+

+

+

+

+

+```

+export https_proxy=http://172.19.57.45:3128

+git clone https://github.com/oh-my-ocr/text_renderer

+```

+

+```

+import os

+python3 setup.py develop

+python3 -m pip install -r docker/requirements.txt

+python3 main.py \

+ --config example_data/example.py \

+ --dataset img \

+ --num_processes 2 \

+ --log_period 10

+```

+

+给定字体和语料,就可以合成较为丰富样式的文本行数据。 光功率计识别场景,目标是正确识别数码管文本,因此需要收集部分数码管字体,训练语料,用于合成文本识别数据。

+

+将收集好的语料存放在 example_data 路径下:

+

+```

+ln -s ./fonts/DS* text_renderer/example_data/font/

+ln -s ./corpus/digital.txt text_renderer/example_data/text/

+```

+

+修改 text_renderer/example_data/font_list/font_list.txt ,选择需要的字体开始合成:

+

+```

+python3 main.py \

+ --config example_data/digital_example.py \

+ --dataset img \

+ --num_processes 2 \

+ --log_period 10

+```

+

+合成图片会被存在目录 text_renderer/example_data/digital/chn_data 下

+

+查看合成的数据样例:

+

+

+

+

+- 真实数据挖掘

+

+模型训练需要使用真实数据作为评价指标,否则很容易过拟合到简单的合成数据中。没有开源数据的情况下,可以利用部分无标注数据+标注工具获得真实数据。

+

+

+1. 数据搜集

+

+使用[爬虫工具](https://github.com/Joeclinton1/google-images-download.git)获得无标注数据

+

+2. [PPOCRLabel](https://github.com/PaddlePaddle/PaddleOCR/tree/release/2.5/PPOCRLabel) 完成半自动标注

+

+PPOCRLabel是一款适用于OCR领域的半自动化图形标注工具,内置PP-OCR模型对数据自动标注和重新识别。使用Python3和PyQT5编写,支持矩形框标注、表格标注、不规则文本标注、关键信息标注模式,导出格式可直接用于PaddleOCR检测和识别模型的训练。

+

+

+

+

+收集完数据后就可以进行分配了,验证集中一般都是真实数据,训练集中包含合成数据+真实数据。本例中标注了155张图片,其中训练集和验证集的数目为100和55。

+

+

+最终 `data` 文件夹应包含以下几部分:

+

+```

+|-data

+ |- synth_train.txt

+ |- real_train.txt

+ |- real_eval.txt

+ |- synthetic_data

+ |- word_001.png

+ |- word_002.jpg

+ |- word_003.jpg

+ | ...

+ |- real_data

+ |- word_001.png

+ |- word_002.jpg

+ |- word_003.jpg

+ | ...

+ ...

+```

+

+### 3.2 模型选择

+

+本案例提供了2种文本识别模型:PP-OCRv3 识别模型 和 SVTR_Tiny:

+

+[PP-OCRv3 识别模型](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/PP-OCRv3_introduction.md):PP-OCRv3的识别模块是基于文本识别算法SVTR优化。SVTR不再采用RNN结构,通过引入Transformers结构更加有效地挖掘文本行图像的上下文信息,从而提升文本识别能力。并进行了一系列结构改进加速模型预测。

+

+[SVTR_Tiny](https://arxiv.org/abs/2205.00159):SVTR提出了一种用于场景文本识别的单视觉模型,该模型在patch-wise image tokenization框架内,完全摒弃了序列建模,在精度具有竞争力的前提下,模型参数量更少,速度更快。

+

+以上两个策略在自建中文数据集上的精度和速度对比如下:

+

+| ID | 策略 | 模型大小 | 精度 | 预测耗时(CPU + MKLDNN)|

+|-----|-----|--------|----| --- |

+| 01 | PP-OCRv2 | 8M | 74.8% | 8.54ms |

+| 02 | SVTR_Tiny | 21M | 80.1% | 97ms |

+| 03 | SVTR_LCNet(h32) | 12M | 71.9% | 6.6ms |

+| 04 | SVTR_LCNet(h48) | 12M | 73.98% | 7.6ms |

+| 05 | + GTC | 12M | 75.8% | 7.6ms |

+| 06 | + TextConAug | 12M | 76.3% | 7.6ms |

+| 07 | + TextRotNet | 12M | 76.9% | 7.6ms |

+| 08 | + UDML | 12M | 78.4% | 7.6ms |

+| 09 | + UIM | 12M | 79.4% | 7.6ms |

+

+

+### 3.3 开始训练

+

+首先下载 PaddleOCR 代码库

+

+```

+git clone -b release/2.5 https://github.com/PaddlePaddle/PaddleOCR.git

+```

+

+PaddleOCR提供了训练脚本、评估脚本和预测脚本,本节将以 PP-OCRv3 中文识别模型为例:

+

+**Step1:下载预训练模型**

+

+首先下载 pretrain model,您可以下载训练好的模型在自定义数据上进行finetune

+

+```

+cd PaddleOCR/

+# 下载PP-OCRv3 中文预训练模型

+wget -P ./pretrain_models/ https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar

+# 解压模型参数

+cd pretrain_models

+tar -xf ch_PP-OCRv3_rec_train.tar && rm -rf ch_PP-OCRv3_rec_train.tar

+```

+

+**Step2:自定义字典文件**

+

+接下来需要提供一个字典({word_dict_name}.txt),使模型在训练时,可以将所有出现的字符映射为字典的索引。

+

+因此字典需要包含所有希望被正确识别的字符,{word_dict_name}.txt需要写成如下格式,并以 `utf-8` 编码格式保存:

+

+```

+0

+1

+2

+3

+4

+5

+6

+7

+8

+9

+-

+.

+```

+

+word_dict.txt 每行有一个单字,将字符与数字索引映射在一起,“3.14” 将被映射成 [3, 11, 1, 4]

+

+* 内置字典

+

+PaddleOCR内置了一部分字典,可以按需使用。

+

+`ppocr/utils/ppocr_keys_v1.txt` 是一个包含6623个字符的中文字典

+

+`ppocr/utils/ic15_dict.txt` 是一个包含36个字符的英文字典

+

+* 自定义字典

+

+内置字典面向通用场景,具体的工业场景中,可能需要识别特殊字符,或者只需识别某几个字符,此时自定义字典会更提升模型精度。例如在光功率计场景中,需要识别数字和单位。

+

+遍历真实数据标签中的字符,制作字典`digital_dict.txt`如下所示:

+

+```

+-

+.

+0

+1

+2

+3

+4

+5

+6

+7

+8

+9

+B

+E

+F

+H

+L

+N

+T

+W

+d

+k

+m

+n

+o

+z

+```

+

+

+

+

+**Step3:修改配置文件**

+

+为了更好的使用预训练模型,训练推荐使用[ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml)配置文件,并参考下列说明修改配置文件:

+

+以 `ch_PP-OCRv3_rec_distillation.yml` 为例:

+```

+Global:

+ ...

+ # 添加自定义字典,如修改字典请将路径指向新字典

+ character_dict_path: ppocr/utils/dict/digital_dict.txt

+ ...

+ # 识别空格

+ use_space_char: True

+

+

+Optimizer:

+ ...

+ # 添加学习率衰减策略

+ lr:

+ name: Cosine

+ learning_rate: 0.001

+ ...

+

+...

+

+Train:

+ dataset:

+ # 数据集格式,支持LMDBDataSet以及SimpleDataSet

+ name: SimpleDataSet

+ # 数据集路径

+ data_dir: ./data/

+ # 训练集标签文件

+ label_file_list:

+ - ./train_data/digital_img/digital_train.txt #11w

+ - ./train_data/digital_img/real_train.txt #100

+ - ./train_data/digital_img/dbm_img/dbm.txt #3w

+ ratio_list:

+ - 0.3

+ - 1.0

+ - 1.0

+ transforms:

+ ...

+ - RecResizeImg:

+ # 修改 image_shape 以适应长文本

+ image_shape: [3, 48, 320]

+ ...

+ loader:

+ ...

+ # 单卡训练的batch_size

+ batch_size_per_card: 256

+ ...

+

+Eval:

+ dataset:

+ # 数据集格式,支持LMDBDataSet以及SimpleDataSet

+ name: SimpleDataSet

+ # 数据集路径

+ data_dir: ./data

+ # 验证集标签文件

+ label_file_list:

+ - ./train_data/digital_img/real_val.txt

+ transforms:

+ ...

+ - RecResizeImg:

+ # 修改 image_shape 以适应长文本

+ image_shape: [3, 48, 320]

+ ...

+ loader:

+ # 单卡验证的batch_size

+ batch_size_per_card: 256

+ ...

+```

+**注意,训练/预测/评估时的配置文件请务必与训练一致。**

+

+**Step4:启动训练**

+

+*如果您安装的是cpu版本,请将配置文件中的 `use_gpu` 字段修改为false*

+

+```

+# GPU训练 支持单卡,多卡训练

+# 训练数码管数据 训练日志会自动保存为 "{save_model_dir}" 下的train.log

+

+#单卡训练(训练周期长,不建议)

+python3 tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.pretrained_model=./pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy

+

+#多卡训练,通过--gpus参数指定卡号

+python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.pretrained_model=./pretrain_models/en_PP-OCRv3_rec_train/best_accuracy

+```

+

+

+PaddleOCR支持训练和评估交替进行, 可以在 `configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml` 中修改 `eval_batch_step` 设置评估频率,默认每500个iter评估一次。评估过程中默认将最佳acc模型,保存为 `output/ch_PP-OCRv3_rec_distill/best_accuracy` 。

+

+如果验证集很大,测试将会比较耗时,建议减少评估次数,或训练完再进行评估。

+

+### SVTR_Tiny 训练

+

+SVTR_Tiny 训练步骤与上面一致,SVTR支持的配置和模型训练权重可以参考[算法介绍文档](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/algorithm_rec_svtr.md)

+

+**Step1:下载预训练模型**

+

+```

+# 下载 SVTR_Tiny 中文识别预训练模型和配置文件

+wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/rec_svtr_tiny_none_ctc_ch_train.tar

+# 解压模型参数

+tar -xf rec_svtr_tiny_none_ctc_ch_train.tar && rm -rf rec_svtr_tiny_none_ctc_ch_train.tar

+```

+**Step2:自定义字典文件**

+

+字典依然使用自定义的 digital_dict.txt

+

+**Step3:修改配置文件**

+

+配置文件中对应修改字典路径和数据路径

+

+**Step4:启动训练**

+

+```

+## 单卡训练

+python tools/train.py -c rec_svtr_tiny_none_ctc_ch_train/rec_svtr_tiny_6local_6global_stn_ch.yml \

+ -o Global.pretrained_model=./rec_svtr_tiny_none_ctc_ch_train/best_accuracy

+```

+

+### 3.4 验证效果

+

+如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

+

+

+目前光功率计缺少将数据直接输出的功能,需要人工读数。这一项工作单调重复,如果可以使用机器替代人工,将节约大量成本。针对上述问题,希望通过摄像头拍照->智能读数的方式高效地完成此任务。

+

+为实现智能读数,通常会采取文本检测+文本识别的方案:

+

+第一步,使用文本检测模型定位出光功率计中的数字部分;

+

+第二步,使用文本识别模型获得准确的数字和单位信息。

+

+本项目主要介绍如何完成第二步文本识别部分,包括:真实评估集的建立、训练数据的合成、基于 PP-OCRv3 和 SVTR_Tiny 两个模型进行训练,以及评估和推理。

+

+本项目难点如下:

+

+- 光功率计数码管字符数据较少,难以获取。

+- 数码管中小数点占像素较少,容易漏识别。

+

+针对以上问题, 本例选用 PP-OCRv3 和 SVTR_Tiny 两个高精度模型训练,同时提供了真实数据挖掘案例和数据合成案例。基于 PP-OCRv3 模型,在构建的真实评估集上精度从 52% 提升至 72%,SVTR_Tiny 模型精度可达到 78.9%。

+

+aistudio项目链接: [光功率计数码管字符识别](https://aistudio.baidu.com/aistudio/projectdetail/4049044?contributionType=1)

+

+## 2. PaddleOCR 快速使用

+

+PaddleOCR 旨在打造一套丰富、领先、且实用的OCR工具库,助力开发者训练出更好的模型,并应用落地。

+

+

+

+

+官方提供了适用于通用场景的高精轻量模型,首先使用官方提供的 PP-OCRv3 模型预测图片,验证下当前模型在光功率计场景上的效果。

+

+- 准备环境

+

+```

+python3 -m pip install -U pip

+python3 -m pip install paddleocr

+```

+

+

+- 测试效果

+

+测试图:

+

+

+

+

+```

+paddleocr --lang=ch --det=Fase --image_dir=data

+```

+

+得到如下测试结果:

+

+```

+('.7000', 0.6885431408882141)

+```

+

+发现数字识别较准,然而对负号和小数点识别不准确。 由于PP-OCRv3的训练数据大多为通用场景数据,在特定的场景上效果可能不够好。因此需要基于场景数据进行微调。

+

+下面就主要介绍如何在光功率计(数码管)场景上微调训练。

+

+

+## 3. 开始训练

+

+### 3.1 数据准备

+

+特定的工业场景往往很难获取开源的真实数据集,光功率计也是如此。在实际工业场景中,可以通过摄像头采集的方法收集大量真实数据,本例中重点介绍数据合成方法和真实数据挖掘方法,如何利用有限的数据优化模型精度。

+

+数据集分为两个部分:合成数据,真实数据, 其中合成数据由 text_renderer 工具批量生成得到, 真实数据通过爬虫等方式在百度图片中搜索并使用 PPOCRLabel 标注得到。

+

+

+- 合成数据

+

+本例中数据合成工具使用的是 [text_renderer](https://github.com/Sanster/text_renderer), 该工具可以合成用于文本识别训练的文本行数据:

+

+

+

+

+

+

+```

+export https_proxy=http://172.19.57.45:3128

+git clone https://github.com/oh-my-ocr/text_renderer

+```

+

+```

+import os

+python3 setup.py develop

+python3 -m pip install -r docker/requirements.txt

+python3 main.py \

+ --config example_data/example.py \

+ --dataset img \

+ --num_processes 2 \

+ --log_period 10

+```

+

+给定字体和语料,就可以合成较为丰富样式的文本行数据。 光功率计识别场景,目标是正确识别数码管文本,因此需要收集部分数码管字体,训练语料,用于合成文本识别数据。

+

+将收集好的语料存放在 example_data 路径下:

+

+```

+ln -s ./fonts/DS* text_renderer/example_data/font/

+ln -s ./corpus/digital.txt text_renderer/example_data/text/

+```

+

+修改 text_renderer/example_data/font_list/font_list.txt ,选择需要的字体开始合成:

+

+```

+python3 main.py \

+ --config example_data/digital_example.py \

+ --dataset img \

+ --num_processes 2 \

+ --log_period 10

+```

+

+合成图片会被存在目录 text_renderer/example_data/digital/chn_data 下

+

+查看合成的数据样例:

+

+

+

+

+- 真实数据挖掘

+

+模型训练需要使用真实数据作为评价指标,否则很容易过拟合到简单的合成数据中。没有开源数据的情况下,可以利用部分无标注数据+标注工具获得真实数据。

+

+

+1. 数据搜集

+

+使用[爬虫工具](https://github.com/Joeclinton1/google-images-download.git)获得无标注数据

+

+2. [PPOCRLabel](https://github.com/PaddlePaddle/PaddleOCR/tree/release/2.5/PPOCRLabel) 完成半自动标注

+

+PPOCRLabel是一款适用于OCR领域的半自动化图形标注工具,内置PP-OCR模型对数据自动标注和重新识别。使用Python3和PyQT5编写,支持矩形框标注、表格标注、不规则文本标注、关键信息标注模式,导出格式可直接用于PaddleOCR检测和识别模型的训练。

+

+

+

+

+收集完数据后就可以进行分配了,验证集中一般都是真实数据,训练集中包含合成数据+真实数据。本例中标注了155张图片,其中训练集和验证集的数目为100和55。

+

+

+最终 `data` 文件夹应包含以下几部分:

+

+```

+|-data

+ |- synth_train.txt

+ |- real_train.txt

+ |- real_eval.txt

+ |- synthetic_data

+ |- word_001.png

+ |- word_002.jpg

+ |- word_003.jpg

+ | ...

+ |- real_data

+ |- word_001.png

+ |- word_002.jpg

+ |- word_003.jpg

+ | ...

+ ...

+```

+

+### 3.2 模型选择

+

+本案例提供了2种文本识别模型:PP-OCRv3 识别模型 和 SVTR_Tiny:

+

+[PP-OCRv3 识别模型](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/PP-OCRv3_introduction.md):PP-OCRv3的识别模块是基于文本识别算法SVTR优化。SVTR不再采用RNN结构,通过引入Transformers结构更加有效地挖掘文本行图像的上下文信息,从而提升文本识别能力。并进行了一系列结构改进加速模型预测。

+

+[SVTR_Tiny](https://arxiv.org/abs/2205.00159):SVTR提出了一种用于场景文本识别的单视觉模型,该模型在patch-wise image tokenization框架内,完全摒弃了序列建模,在精度具有竞争力的前提下,模型参数量更少,速度更快。

+

+以上两个策略在自建中文数据集上的精度和速度对比如下:

+

+| ID | 策略 | 模型大小 | 精度 | 预测耗时(CPU + MKLDNN)|

+|-----|-----|--------|----| --- |

+| 01 | PP-OCRv2 | 8M | 74.8% | 8.54ms |

+| 02 | SVTR_Tiny | 21M | 80.1% | 97ms |

+| 03 | SVTR_LCNet(h32) | 12M | 71.9% | 6.6ms |

+| 04 | SVTR_LCNet(h48) | 12M | 73.98% | 7.6ms |

+| 05 | + GTC | 12M | 75.8% | 7.6ms |

+| 06 | + TextConAug | 12M | 76.3% | 7.6ms |

+| 07 | + TextRotNet | 12M | 76.9% | 7.6ms |

+| 08 | + UDML | 12M | 78.4% | 7.6ms |

+| 09 | + UIM | 12M | 79.4% | 7.6ms |

+

+

+### 3.3 开始训练

+

+首先下载 PaddleOCR 代码库

+

+```

+git clone -b release/2.5 https://github.com/PaddlePaddle/PaddleOCR.git

+```

+

+PaddleOCR提供了训练脚本、评估脚本和预测脚本,本节将以 PP-OCRv3 中文识别模型为例:

+

+**Step1:下载预训练模型**

+

+首先下载 pretrain model,您可以下载训练好的模型在自定义数据上进行finetune

+

+```

+cd PaddleOCR/

+# 下载PP-OCRv3 中文预训练模型

+wget -P ./pretrain_models/ https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar

+# 解压模型参数

+cd pretrain_models

+tar -xf ch_PP-OCRv3_rec_train.tar && rm -rf ch_PP-OCRv3_rec_train.tar

+```

+

+**Step2:自定义字典文件**

+

+接下来需要提供一个字典({word_dict_name}.txt),使模型在训练时,可以将所有出现的字符映射为字典的索引。

+

+因此字典需要包含所有希望被正确识别的字符,{word_dict_name}.txt需要写成如下格式,并以 `utf-8` 编码格式保存:

+

+```

+0

+1

+2

+3

+4

+5

+6

+7

+8

+9

+-

+.

+```

+

+word_dict.txt 每行有一个单字,将字符与数字索引映射在一起,“3.14” 将被映射成 [3, 11, 1, 4]

+

+* 内置字典

+

+PaddleOCR内置了一部分字典,可以按需使用。

+

+`ppocr/utils/ppocr_keys_v1.txt` 是一个包含6623个字符的中文字典

+

+`ppocr/utils/ic15_dict.txt` 是一个包含36个字符的英文字典

+

+* 自定义字典

+

+内置字典面向通用场景,具体的工业场景中,可能需要识别特殊字符,或者只需识别某几个字符,此时自定义字典会更提升模型精度。例如在光功率计场景中,需要识别数字和单位。

+

+遍历真实数据标签中的字符,制作字典`digital_dict.txt`如下所示:

+

+```

+-

+.

+0

+1

+2

+3

+4

+5

+6

+7

+8

+9

+B

+E

+F

+H

+L

+N

+T

+W

+d

+k

+m

+n

+o

+z

+```

+

+

+

+

+**Step3:修改配置文件**

+

+为了更好的使用预训练模型,训练推荐使用[ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml)配置文件,并参考下列说明修改配置文件:

+

+以 `ch_PP-OCRv3_rec_distillation.yml` 为例:

+```

+Global:

+ ...

+ # 添加自定义字典,如修改字典请将路径指向新字典

+ character_dict_path: ppocr/utils/dict/digital_dict.txt

+ ...

+ # 识别空格

+ use_space_char: True

+

+

+Optimizer:

+ ...

+ # 添加学习率衰减策略

+ lr:

+ name: Cosine

+ learning_rate: 0.001

+ ...

+

+...

+

+Train:

+ dataset:

+ # 数据集格式,支持LMDBDataSet以及SimpleDataSet

+ name: SimpleDataSet

+ # 数据集路径

+ data_dir: ./data/

+ # 训练集标签文件

+ label_file_list:

+ - ./train_data/digital_img/digital_train.txt #11w

+ - ./train_data/digital_img/real_train.txt #100

+ - ./train_data/digital_img/dbm_img/dbm.txt #3w

+ ratio_list:

+ - 0.3

+ - 1.0

+ - 1.0

+ transforms:

+ ...

+ - RecResizeImg:

+ # 修改 image_shape 以适应长文本

+ image_shape: [3, 48, 320]

+ ...

+ loader:

+ ...

+ # 单卡训练的batch_size

+ batch_size_per_card: 256

+ ...

+

+Eval:

+ dataset:

+ # 数据集格式,支持LMDBDataSet以及SimpleDataSet

+ name: SimpleDataSet

+ # 数据集路径

+ data_dir: ./data

+ # 验证集标签文件

+ label_file_list:

+ - ./train_data/digital_img/real_val.txt

+ transforms:

+ ...

+ - RecResizeImg:

+ # 修改 image_shape 以适应长文本

+ image_shape: [3, 48, 320]

+ ...

+ loader:

+ # 单卡验证的batch_size

+ batch_size_per_card: 256

+ ...

+```

+**注意,训练/预测/评估时的配置文件请务必与训练一致。**

+

+**Step4:启动训练**

+

+*如果您安装的是cpu版本,请将配置文件中的 `use_gpu` 字段修改为false*

+

+```

+# GPU训练 支持单卡,多卡训练

+# 训练数码管数据 训练日志会自动保存为 "{save_model_dir}" 下的train.log

+

+#单卡训练(训练周期长,不建议)

+python3 tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.pretrained_model=./pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy

+

+#多卡训练,通过--gpus参数指定卡号

+python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.pretrained_model=./pretrain_models/en_PP-OCRv3_rec_train/best_accuracy

+```

+

+

+PaddleOCR支持训练和评估交替进行, 可以在 `configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml` 中修改 `eval_batch_step` 设置评估频率,默认每500个iter评估一次。评估过程中默认将最佳acc模型,保存为 `output/ch_PP-OCRv3_rec_distill/best_accuracy` 。

+

+如果验证集很大,测试将会比较耗时,建议减少评估次数,或训练完再进行评估。

+

+### SVTR_Tiny 训练

+

+SVTR_Tiny 训练步骤与上面一致,SVTR支持的配置和模型训练权重可以参考[算法介绍文档](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/algorithm_rec_svtr.md)

+

+**Step1:下载预训练模型**

+

+```

+# 下载 SVTR_Tiny 中文识别预训练模型和配置文件

+wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/rec_svtr_tiny_none_ctc_ch_train.tar

+# 解压模型参数

+tar -xf rec_svtr_tiny_none_ctc_ch_train.tar && rm -rf rec_svtr_tiny_none_ctc_ch_train.tar

+```

+**Step2:自定义字典文件**

+

+字典依然使用自定义的 digital_dict.txt

+

+**Step3:修改配置文件**

+

+配置文件中对应修改字典路径和数据路径

+

+**Step4:启动训练**

+

+```

+## 单卡训练

+python tools/train.py -c rec_svtr_tiny_none_ctc_ch_train/rec_svtr_tiny_6local_6global_stn_ch.yml \

+ -o Global.pretrained_model=./rec_svtr_tiny_none_ctc_ch_train/best_accuracy

+```

+

+### 3.4 验证效果

+

+如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

+

+

+

+

+

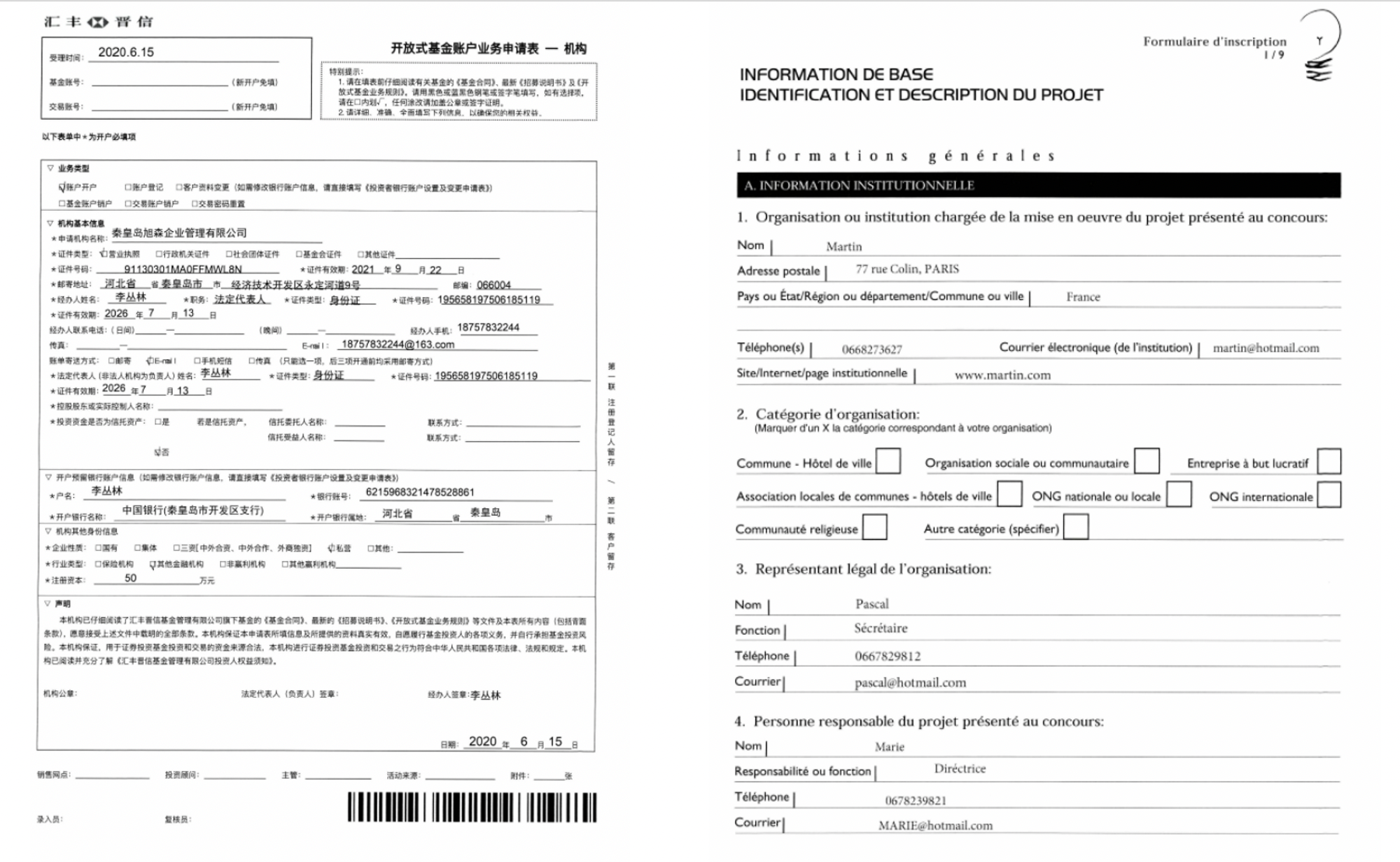

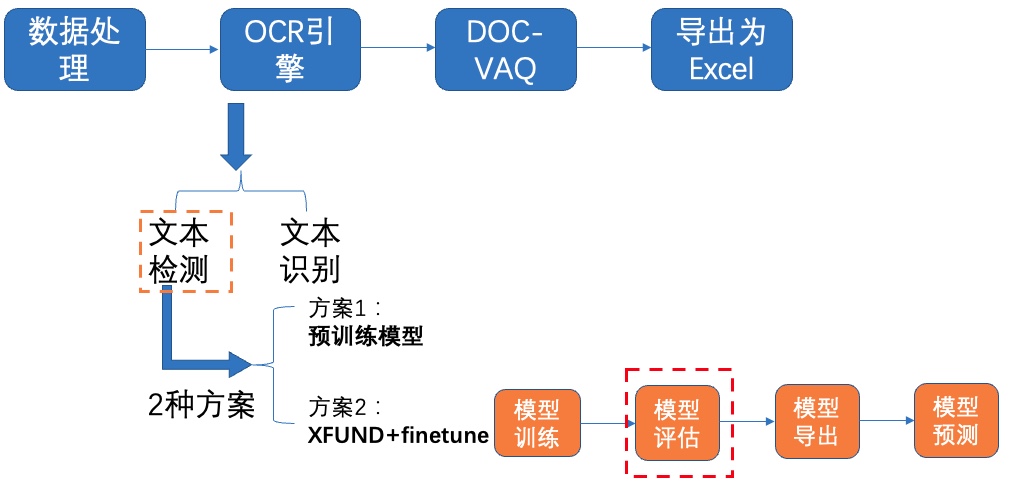

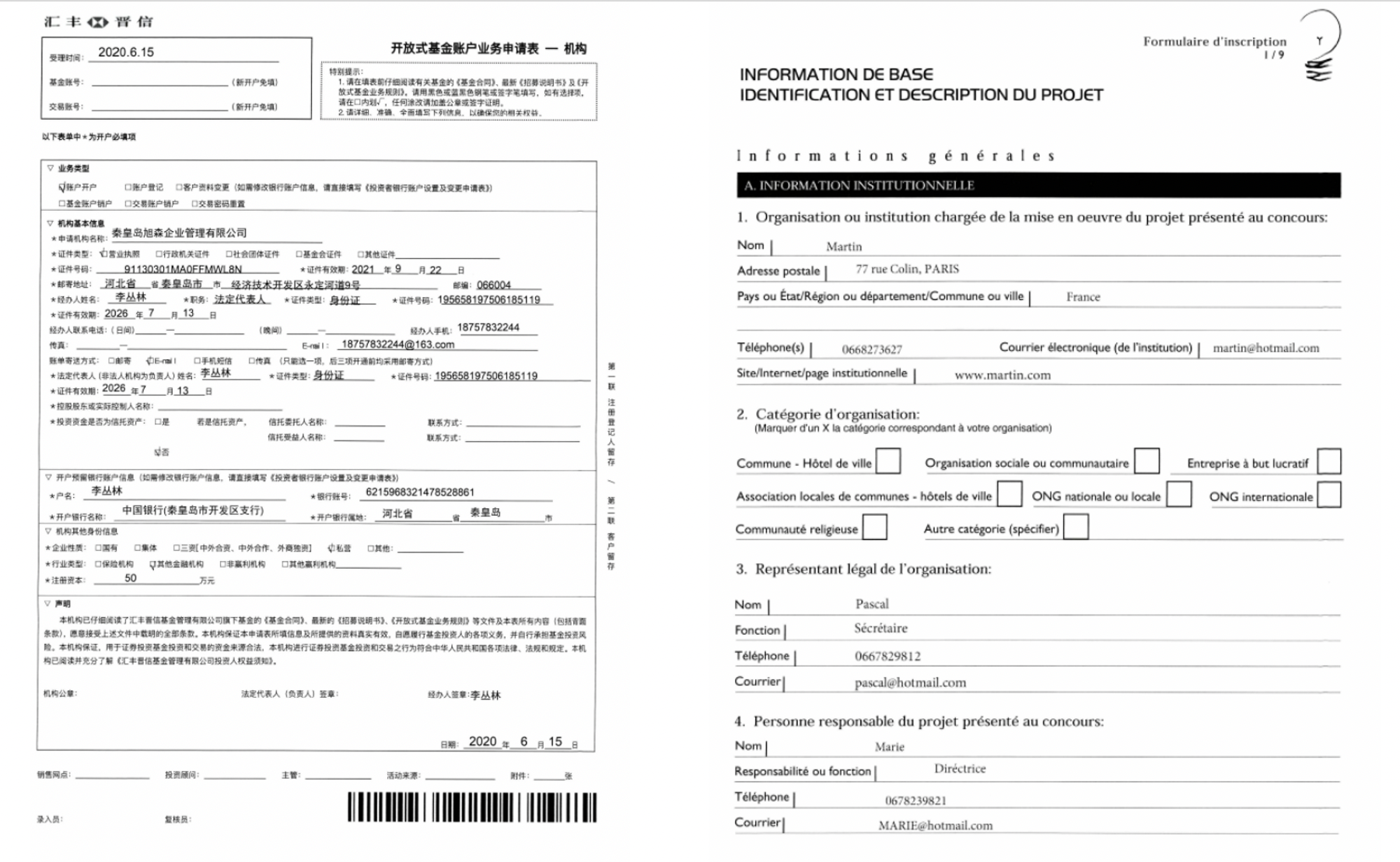

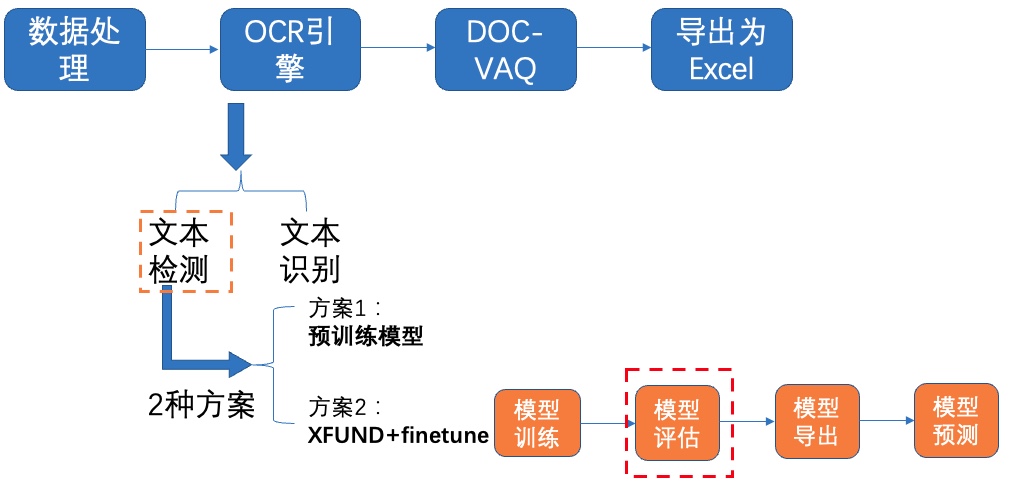

图1 多模态表单识别流程图

-注:欢迎再AIStudio领取免费算力体验线上实训,项目链接: [多模态表单识别](https://aistudio.baidu.com/aistudio/projectdetail/3884375)(配备Tesla V100、A100等高级算力资源)

+注:欢迎再AIStudio领取免费算力体验线上实训,项目链接: [多模态表单识别](https://aistudio.baidu.com/aistudio/projectdetail/3884375?contributionType=1)

-

-

-# 2 安装说明

+## 2 安装说明

下载PaddleOCR源码,上述AIStudio项目中已经帮大家打包好的PaddleOCR(已经修改好配置文件),无需下载解压即可,只需安装依赖环境~

```python

-! unzip -q PaddleOCR.zip

+unzip -q PaddleOCR.zip

```

```python

# 如仍需安装or安装更新,可以执行以下步骤

-# ! git clone https://github.com/PaddlePaddle/PaddleOCR.git -b dygraph

-# ! git clone https://gitee.com/PaddlePaddle/PaddleOCR

+# git clone https://github.com/PaddlePaddle/PaddleOCR.git -b dygraph

+# git clone https://gitee.com/PaddlePaddle/PaddleOCR

```

```python

# 安装依赖包

-! pip install -U pip

-! pip install -r /home/aistudio/PaddleOCR/requirements.txt

-! pip install paddleocr

+pip install -U pip

+pip install -r /home/aistudio/PaddleOCR/requirements.txt

+pip install paddleocr

-! pip install yacs gnureadline paddlenlp==2.2.1

-! pip install xlsxwriter

+pip install yacs gnureadline paddlenlp==2.2.1

+pip install xlsxwriter

```

-# 3 数据准备

+## 3 数据准备

这里使用[XFUN数据集](https://github.com/doc-analysis/XFUND)做为实验数据集。 XFUN数据集是微软提出的一个用于KIE任务的多语言数据集,共包含七个数据集,每个数据集包含149张训练集和50张验证集

@@ -59,7 +86,7 @@

图1 多模态表单识别流程图

-注:欢迎再AIStudio领取免费算力体验线上实训,项目链接: [多模态表单识别](https://aistudio.baidu.com/aistudio/projectdetail/3884375)(配备Tesla V100、A100等高级算力资源)

+注:欢迎再AIStudio领取免费算力体验线上实训,项目链接: [多模态表单识别](https://aistudio.baidu.com/aistudio/projectdetail/3884375?contributionType=1)

-

-

-# 2 安装说明

+## 2 安装说明

下载PaddleOCR源码,上述AIStudio项目中已经帮大家打包好的PaddleOCR(已经修改好配置文件),无需下载解压即可,只需安装依赖环境~

```python

-! unzip -q PaddleOCR.zip

+unzip -q PaddleOCR.zip

```

```python

# 如仍需安装or安装更新,可以执行以下步骤

-# ! git clone https://github.com/PaddlePaddle/PaddleOCR.git -b dygraph

-# ! git clone https://gitee.com/PaddlePaddle/PaddleOCR

+# git clone https://github.com/PaddlePaddle/PaddleOCR.git -b dygraph

+# git clone https://gitee.com/PaddlePaddle/PaddleOCR

```

```python

# 安装依赖包

-! pip install -U pip

-! pip install -r /home/aistudio/PaddleOCR/requirements.txt

-! pip install paddleocr

+pip install -U pip

+pip install -r /home/aistudio/PaddleOCR/requirements.txt

+pip install paddleocr

-! pip install yacs gnureadline paddlenlp==2.2.1

-! pip install xlsxwriter

+pip install yacs gnureadline paddlenlp==2.2.1

+pip install xlsxwriter

```

-# 3 数据准备

+## 3 数据准备

这里使用[XFUN数据集](https://github.com/doc-analysis/XFUND)做为实验数据集。 XFUN数据集是微软提出的一个用于KIE任务的多语言数据集,共包含七个数据集,每个数据集包含149张训练集和50张验证集

@@ -59,7 +86,7 @@

图2 数据集样例,左中文,右法语

-## 3.1 下载处理好的数据集

+### 3.1 下载处理好的数据集

处理好的XFUND中文数据集下载地址:[https://paddleocr.bj.bcebos.com/dataset/XFUND.tar](https://paddleocr.bj.bcebos.com/dataset/XFUND.tar) ,可以运行如下指令完成中文数据集下载和解压。

@@ -69,13 +96,13 @@

```python

-! wget https://paddleocr.bj.bcebos.com/dataset/XFUND.tar

-! tar -xf XFUND.tar

+wget https://paddleocr.bj.bcebos.com/dataset/XFUND.tar

+tar -xf XFUND.tar

# XFUN其他数据集使用下面的代码进行转换

# 代码链接:https://github.com/PaddlePaddle/PaddleOCR/blob/release%2F2.4/ppstructure/vqa/helper/trans_xfun_data.py

# %cd PaddleOCR

-# !python3 ppstructure/vqa/tools/trans_xfun_data.py --ori_gt_path=path/to/json_path --output_path=path/to/save_path

+# python3 ppstructure/vqa/tools/trans_xfun_data.py --ori_gt_path=path/to/json_path --output_path=path/to/save_path

# %cd ../

```

@@ -119,7 +146,7 @@

}

```

-## 3.2 转换为PaddleOCR检测和识别格式

+### 3.2 转换为PaddleOCR检测和识别格式

使用XFUND训练PaddleOCR检测和识别模型,需要将数据集格式改为训练需求的格式。

@@ -147,7 +174,7 @@ train_data/rec/train/word_002.jpg 用科技让复杂的世界更简单

```python

-! unzip -q /home/aistudio/data/data140302/XFUND_ori.zip -d /home/aistudio/data/data140302/

+unzip -q /home/aistudio/data/data140302/XFUND_ori.zip -d /home/aistudio/data/data140302/

```

已经提供转换脚本,执行如下代码即可转换成功:

@@ -155,21 +182,20 @@ train_data/rec/train/word_002.jpg 用科技让复杂的世界更简单

```python

%cd /home/aistudio/

-! python trans_xfund_data.py

+python trans_xfund_data.py

```

-# 4 OCR

+## 4 OCR

选用飞桨OCR开发套件[PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR/blob/dygraph/README_ch.md)中的PP-OCRv2模型进行文本检测和识别。PP-OCRv2在PP-OCR的基础上,进一步在5个方面重点优化,检测模型采用CML协同互学习知识蒸馏策略和CopyPaste数据增广策略;识别模型采用LCNet轻量级骨干网络、UDML 改进知识蒸馏策略和[Enhanced CTC loss](https://github.com/PaddlePaddle/PaddleOCR/blob/dygraph/doc/doc_ch/enhanced_ctc_loss.md)损失函数改进,进一步在推理速度和预测效果上取得明显提升。更多细节请参考PP-OCRv2[技术报告](https://arxiv.org/abs/2109.03144)。

-

-## 4.1 文本检测

+### 4.1 文本检测

我们使用2种方案进行训练、评估:

- **PP-OCRv2中英文超轻量检测预训练模型**

- **XFUND数据集+fine-tune**

-### **4.1.1 方案1:预训练模型**

+#### 4.1.1 方案1:预训练模型

**1)下载预训练模型**

@@ -195,8 +221,8 @@ PaddleOCR已经提供了PP-OCR系列模型,部分模型展示如下表所示

```python

%cd /home/aistudio/PaddleOCR/pretrain/

-! wget https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_distill_train.tar

-! tar -xf ch_PP-OCRv2_det_distill_train.tar && rm -rf ch_PP-OCRv2_det_distill_train.tar

+wget https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_distill_train.tar

+tar -xf ch_PP-OCRv2_det_distill_train.tar && rm -rf ch_PP-OCRv2_det_distill_train.tar

% cd ..

```

@@ -226,7 +252,7 @@ Eval.dataset.label_file_list:指向验证集标注文件

```python

%cd /home/aistudio/PaddleOCR

-! python tools/eval.py \

+python tools/eval.py \

-c configs/det/ch_PP-OCRv2/ch_PP-OCRv2_det_distill.yml \

-o Global.checkpoints="./pretrain_models/ch_PP-OCRv2_det_distill_train/best_accuracy"

```

@@ -237,9 +263,9 @@ Eval.dataset.label_file_list:指向验证集标注文件

| -------- | -------- |

| PP-OCRv2中英文超轻量检测预训练模型 | 77.26% |

-使用文本检测预训练模型在XFUND验证集上评估,达到77%左右,充分说明ppocr提供的预训练模型有一定的泛化能力。

+使用文本检测预训练模型在XFUND验证集上评估,达到77%左右,充分说明ppocr提供的预训练模型具有泛化能力。

-### **4.1.2 方案2:XFUND数据集+fine-tune**

+#### 4.1.2 方案2:XFUND数据集+fine-tune

PaddleOCR提供的蒸馏预训练模型包含了多个模型的参数,我们提取Student模型的参数,在XFUND数据集上进行finetune,可以参考如下代码:

@@ -281,7 +307,7 @@ Eval.dataset.transforms.DetResizeForTest:评估尺寸,添加如下参数

```python

-! CUDA_VISIBLE_DEVICES=0 python tools/train.py \

+CUDA_VISIBLE_DEVICES=0 python tools/train.py \

-c configs/det/ch_PP-OCRv2/ch_PP-OCRv2_det_student.yml

```

@@ -290,12 +316,18 @@ Eval.dataset.transforms.DetResizeForTest:评估尺寸,添加如下参数

图2 数据集样例,左中文,右法语

-## 3.1 下载处理好的数据集

+### 3.1 下载处理好的数据集

处理好的XFUND中文数据集下载地址:[https://paddleocr.bj.bcebos.com/dataset/XFUND.tar](https://paddleocr.bj.bcebos.com/dataset/XFUND.tar) ,可以运行如下指令完成中文数据集下载和解压。

@@ -69,13 +96,13 @@

```python

-! wget https://paddleocr.bj.bcebos.com/dataset/XFUND.tar

-! tar -xf XFUND.tar

+wget https://paddleocr.bj.bcebos.com/dataset/XFUND.tar

+tar -xf XFUND.tar

# XFUN其他数据集使用下面的代码进行转换

# 代码链接:https://github.com/PaddlePaddle/PaddleOCR/blob/release%2F2.4/ppstructure/vqa/helper/trans_xfun_data.py

# %cd PaddleOCR

-# !python3 ppstructure/vqa/tools/trans_xfun_data.py --ori_gt_path=path/to/json_path --output_path=path/to/save_path

+# python3 ppstructure/vqa/tools/trans_xfun_data.py --ori_gt_path=path/to/json_path --output_path=path/to/save_path

# %cd ../

```

@@ -119,7 +146,7 @@

}

```

-## 3.2 转换为PaddleOCR检测和识别格式

+### 3.2 转换为PaddleOCR检测和识别格式

使用XFUND训练PaddleOCR检测和识别模型,需要将数据集格式改为训练需求的格式。

@@ -147,7 +174,7 @@ train_data/rec/train/word_002.jpg 用科技让复杂的世界更简单

```python

-! unzip -q /home/aistudio/data/data140302/XFUND_ori.zip -d /home/aistudio/data/data140302/

+unzip -q /home/aistudio/data/data140302/XFUND_ori.zip -d /home/aistudio/data/data140302/

```

已经提供转换脚本,执行如下代码即可转换成功:

@@ -155,21 +182,20 @@ train_data/rec/train/word_002.jpg 用科技让复杂的世界更简单

```python

%cd /home/aistudio/

-! python trans_xfund_data.py

+python trans_xfund_data.py

```

-# 4 OCR

+## 4 OCR

选用飞桨OCR开发套件[PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR/blob/dygraph/README_ch.md)中的PP-OCRv2模型进行文本检测和识别。PP-OCRv2在PP-OCR的基础上,进一步在5个方面重点优化,检测模型采用CML协同互学习知识蒸馏策略和CopyPaste数据增广策略;识别模型采用LCNet轻量级骨干网络、UDML 改进知识蒸馏策略和[Enhanced CTC loss](https://github.com/PaddlePaddle/PaddleOCR/blob/dygraph/doc/doc_ch/enhanced_ctc_loss.md)损失函数改进,进一步在推理速度和预测效果上取得明显提升。更多细节请参考PP-OCRv2[技术报告](https://arxiv.org/abs/2109.03144)。

-

-## 4.1 文本检测

+### 4.1 文本检测

我们使用2种方案进行训练、评估:

- **PP-OCRv2中英文超轻量检测预训练模型**

- **XFUND数据集+fine-tune**

-### **4.1.1 方案1:预训练模型**

+#### 4.1.1 方案1:预训练模型

**1)下载预训练模型**

@@ -195,8 +221,8 @@ PaddleOCR已经提供了PP-OCR系列模型,部分模型展示如下表所示

```python

%cd /home/aistudio/PaddleOCR/pretrain/

-! wget https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_distill_train.tar

-! tar -xf ch_PP-OCRv2_det_distill_train.tar && rm -rf ch_PP-OCRv2_det_distill_train.tar

+wget https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_distill_train.tar

+tar -xf ch_PP-OCRv2_det_distill_train.tar && rm -rf ch_PP-OCRv2_det_distill_train.tar

% cd ..

```

@@ -226,7 +252,7 @@ Eval.dataset.label_file_list:指向验证集标注文件

```python

%cd /home/aistudio/PaddleOCR

-! python tools/eval.py \

+python tools/eval.py \

-c configs/det/ch_PP-OCRv2/ch_PP-OCRv2_det_distill.yml \

-o Global.checkpoints="./pretrain_models/ch_PP-OCRv2_det_distill_train/best_accuracy"

```

@@ -237,9 +263,9 @@ Eval.dataset.label_file_list:指向验证集标注文件

| -------- | -------- |

| PP-OCRv2中英文超轻量检测预训练模型 | 77.26% |

-使用文本检测预训练模型在XFUND验证集上评估,达到77%左右,充分说明ppocr提供的预训练模型有一定的泛化能力。

+使用文本检测预训练模型在XFUND验证集上评估,达到77%左右,充分说明ppocr提供的预训练模型具有泛化能力。

-### **4.1.2 方案2:XFUND数据集+fine-tune**

+#### 4.1.2 方案2:XFUND数据集+fine-tune

PaddleOCR提供的蒸馏预训练模型包含了多个模型的参数,我们提取Student模型的参数,在XFUND数据集上进行finetune,可以参考如下代码:

@@ -281,7 +307,7 @@ Eval.dataset.transforms.DetResizeForTest:评估尺寸,添加如下参数

```python

-! CUDA_VISIBLE_DEVICES=0 python tools/train.py \

+CUDA_VISIBLE_DEVICES=0 python tools/train.py \

-c configs/det/ch_PP-OCRv2/ch_PP-OCRv2_det_student.yml

```

@@ -290,12 +316,18 @@ Eval.dataset.transforms.DetResizeForTest:评估尺寸,添加如下参数

图8 文本检测方案2-模型评估

-使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/ch_db_mv3-student1600-finetune/best_accuracy`,[模型下载地址](https://paddleocr.bj.bcebos.com/fanliku/sheet_recognition/ch_db_mv3-student1600-finetune.zip)

+使用训练好的模型进行评估,更新模型路径`Global.checkpoints`。如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

+

+

图8 文本检测方案2-模型评估

-使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/ch_db_mv3-student1600-finetune/best_accuracy`,[模型下载地址](https://paddleocr.bj.bcebos.com/fanliku/sheet_recognition/ch_db_mv3-student1600-finetune.zip)

+使用训练好的模型进行评估,更新模型路径`Global.checkpoints`。如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

+

+

+

+

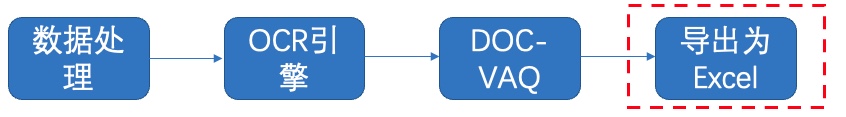

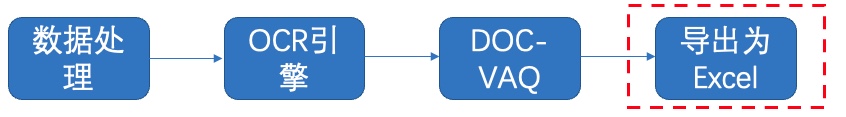

图27 导出Excel

@@ -859,7 +879,7 @@ with open('output/re/infer_results.txt', 'r', encoding='utf-8') as fin:

workbook.close()

```

-# 更多资源

+## 更多资源

- 更多深度学习知识、产业案例、面试宝典等,请参考:[awesome-DeepLearning](https://github.com/paddlepaddle/awesome-DeepLearning)

@@ -869,7 +889,7 @@ workbook.close()

- 飞桨框架相关资料,请参考:[飞桨深度学习平台](https://www.paddlepaddle.org.cn/?fr=paddleEdu_aistudio)

-# 参考链接

+## 参考链接

- LayoutXLM: Multimodal Pre-training for Multilingual Visually-rich Document Understanding, https://arxiv.org/pdf/2104.08836.pdf

diff --git "a/applications/\346\266\262\346\231\266\345\261\217\350\257\273\346\225\260\350\257\206\345\210\253.md" "b/applications/\346\266\262\346\231\266\345\261\217\350\257\273\346\225\260\350\257\206\345\210\253.md"

new file mode 100644

index 0000000000000000000000000000000000000000..ff2fb2cb4812f4f8366605b3c26af5b9aaaa290e

--- /dev/null

+++ "b/applications/\346\266\262\346\231\266\345\261\217\350\257\273\346\225\260\350\257\206\345\210\253.md"

@@ -0,0 +1,616 @@

+# 基于PP-OCRv3的液晶屏读数识别

+

+- [1. 项目背景及意义](#1-项目背景及意义)

+- [2. 项目内容](#2-项目内容)

+- [3. 安装环境](#3-安装环境)

+- [4. 文字检测](#4-文字检测)

+ - [4.1 PP-OCRv3检测算法介绍](#41-PP-OCRv3检测算法介绍)

+ - [4.2 数据准备](#42-数据准备)

+ - [4.3 模型训练](#43-模型训练)

+ - [4.3.1 预训练模型直接评估](#431-预训练模型直接评估)

+ - [4.3.2 预训练模型直接finetune](#432-预训练模型直接finetune)

+ - [4.3.3 基于预训练模型Finetune_student模型](#433-基于预训练模型Finetune_student模型)

+ - [4.3.4 基于预训练模型Finetune_teacher模型](#434-基于预训练模型Finetune_teacher模型)

+ - [4.3.5 采用CML蒸馏进一步提升student模型精度](#435-采用CML蒸馏进一步提升student模型精度)

+ - [4.3.6 模型导出推理](#436-4.3.6-模型导出推理)

+- [5. 文字识别](#5-文字识别)

+ - [5.1 PP-OCRv3识别算法介绍](#51-PP-OCRv3识别算法介绍)

+ - [5.2 数据准备](#52-数据准备)

+ - [5.3 模型训练](#53-模型训练)

+ - [5.4 模型导出推理](#54-模型导出推理)

+- [6. 系统串联](#6-系统串联)

+ - [6.1 后处理](#61-后处理)

+- [7. PaddleServing部署](#7-PaddleServing部署)

+

+

+## 1. 项目背景及意义

+目前光学字符识别(OCR)技术在我们的生活当中被广泛使用,但是大多数模型在通用场景下的准确性还有待提高,针对于此我们借助飞桨提供的PaddleOCR套件较容易的实现了在垂类场景下的应用。

+

+该项目以国家质量基础(NQI)为准绳,充分利用大数据、云计算、物联网等高新技术,构建覆盖计量端、实验室端、数据端和硬件端的完整计量解决方案,解决传统计量校准中存在的难题,拓宽计量检测服务体系和服务领域;解决无数传接口或数传接口不统一、不公开的计量设备,以及计量设备所处的环境比较恶劣,不适合人工读取数据。通过OCR技术实现远程计量,引领计量行业向智慧计量转型和发展。

+

+## 2. 项目内容

+本项目基于PaddleOCR开源套件,以PP-OCRv3检测和识别模型为基础,针对液晶屏读数识别场景进行优化。

+

+Aistudio项目链接:[OCR液晶屏读数识别](https://aistudio.baidu.com/aistudio/projectdetail/4080130)

+

+## 3. 安装环境

+

+```python

+# 首先git官方的PaddleOCR项目,安装需要的依赖

+# 第一次运行打开该注释

+# git clone https://gitee.com/PaddlePaddle/PaddleOCR.git

+cd PaddleOCR

+pip install -r requirements.txt

+```

+

+## 4. 文字检测

+文本检测的任务是定位出输入图像中的文字区域。近年来学术界关于文本检测的研究非常丰富,一类方法将文本检测视为目标检测中的一个特定场景,基于通用目标检测算法进行改进适配,如TextBoxes[1]基于一阶段目标检测器SSD[2]算法,调整目标框使之适合极端长宽比的文本行,CTPN[3]则是基于Faster RCNN[4]架构改进而来。但是文本检测与目标检测在目标信息以及任务本身上仍存在一些区别,如文本一般长宽比较大,往往呈“条状”,文本行之间可能比较密集,弯曲文本等,因此又衍生了很多专用于文本检测的算法。本项目基于PP-OCRv3算法进行优化。

+

+### 4.1 PP-OCRv3检测算法介绍

+PP-OCRv3检测模型是对PP-OCRv2中的CML(Collaborative Mutual Learning) 协同互学习文本检测蒸馏策略进行了升级。如下图所示,CML的核心思想结合了①传统的Teacher指导Student的标准蒸馏与 ②Students网络之间的DML互学习,可以让Students网络互学习的同时,Teacher网络予以指导。PP-OCRv3分别针对教师模型和学生模型进行进一步效果优化。其中,在对教师模型优化时,提出了大感受野的PAN结构LK-PAN和引入了DML(Deep Mutual Learning)蒸馏策略;在对学生模型优化时,提出了残差注意力机制的FPN结构RSE-FPN。

+

+

+详细优化策略描述请参考[PP-OCRv3优化策略](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/PP-OCRv3_introduction.md#2)

+

+### 4.2 数据准备

+[计量设备屏幕字符检测数据集](https://aistudio.baidu.com/aistudio/datasetdetail/127845)数据来源于实际项目中各种计量设备的数显屏,以及在网上搜集的一些其他数显屏,包含训练集755张,测试集355张。

+

+```python

+# 在PaddleOCR下创建新的文件夹train_data

+mkdir train_data

+# 下载数据集并解压到指定路径下

+unzip icdar2015.zip -d train_data

+```

+

+```python

+# 随机查看文字检测数据集图片

+from PIL import Image

+import matplotlib.pyplot as plt

+import numpy as np

+import os

+

+

+train = './train_data/icdar2015/text_localization/test'

+# 从指定目录中选取一张图片

+def get_one_image(train):

+ plt.figure()

+ files = os.listdir(train)

+ n = len(files)

+ ind = np.random.randint(0,n)

+ img_dir = os.path.join(train,files[ind])

+ image = Image.open(img_dir)

+ plt.imshow(image)

+ plt.show()

+ image = image.resize([208, 208])

+

+get_one_image(train)

+```

+

+

+### 4.3 模型训练

+

+#### 4.3.1 预训练模型直接评估

+下载我们需要的PP-OCRv3检测预训练模型,更多选择请自行选择其他的[文字检测模型](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/models_list.md#1-%E6%96%87%E6%9C%AC%E6%A3%80%E6%B5%8B%E6%A8%A1%E5%9E%8B)

+

+```python

+#使用该指令下载需要的预训练模型

+wget -P ./pretrained_models/ https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar

+# 解压预训练模型文件

+tar -xf ./pretrained_models/ch_PP-OCRv3_det_distill_train.tar -C pretrained_models

+```

+

+在训练之前,我们可以直接使用下面命令来评估预训练模型的效果:

+

+```python

+# 评估预训练模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model="./pretrained_models/ch_PP-OCRv3_det_distill_train/best_accuracy"

+```

+

+结果如下:

+

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+

+#### 4.3.2 预训练模型直接finetune

+##### 修改配置文件

+我们使用configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml,主要修改训练轮数和学习率参相关参数,设置预训练模型路径,设置数据集路径。 另外,batch_size可根据自己机器显存大小进行调整。 具体修改如下几个地方:

+```

+epoch:100

+save_epoch_step:10

+eval_batch_step:[0, 50]

+save_model_dir: ./output/ch_PP-OCR_v3_det/

+pretrained_model: ./pretrained_models/ch_PP-OCRv3_det_distill_train/best_accuracy

+learning_rate: 0.00025

+num_workers: 0 # 如果单卡训练,建议将Train和Eval的loader部分的num_workers设置为0,否则会出现`/dev/shm insufficient`的报错

+```

+

+##### 开始训练

+使用我们上面修改的配置文件configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml,训练命令如下:

+

+```python

+# 开始训练模型

+python tools/train.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model=./pretrained_models/ch_PP-OCRv3_det_distill_train/best_accuracy

+```

+

+评估训练好的模型:

+

+```python

+# 评估训练好的模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model="./output/ch_PP-OCR_v3_det/best_accuracy"

+```

+

+结果如下:

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+| 1 | PP-OCRv3中英文超轻量检测预训练模型fintune |65.2%|

+

+#### 4.3.3 基于预训练模型Finetune_student模型

+

+我们使用configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml,主要修改训练轮数和学习率参相关参数,设置预训练模型路径,设置数据集路径。 另外,batch_size可根据自己机器显存大小进行调整。 具体修改如下几个地方:

+```

+epoch:100

+save_epoch_step:10

+eval_batch_step:[0, 50]

+save_model_dir: ./output/ch_PP-OCR_v3_det_student/

+pretrained_model: ./pretrained_models/ch_PP-OCRv3_det_distill_train/student

+learning_rate: 0.00025

+num_workers: 0 # 如果单卡训练,建议将Train和Eval的loader部分的num_workers设置为0,否则会出现`/dev/shm insufficient`的报错

+```

+

+训练命令如下:

+

+```python

+python tools/train.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o Global.pretrained_model=./pretrained_models/ch_PP-OCRv3_det_distill_train/student

+```

+

+评估训练好的模型:

+

+```python

+# 评估训练好的模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o Global.pretrained_model="./output/ch_PP-OCR_v3_det_student/best_accuracy"

+```

+

+结果如下:

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+| 1 | PP-OCRv3中英文超轻量检测预训练模型fintune |65.2%|

+| 2 | PP-OCRv3中英文超轻量检测预训练模型fintune学生模型 |80.0%|

+

+#### 4.3.4 基于预训练模型Finetune_teacher模型

+

+首先需要从提供的预训练模型best_accuracy.pdparams中提取teacher参数,组合成适合dml训练的初始化模型,提取代码如下:

+

+```python

+cd ./pretrained_models/

+# transform teacher params in best_accuracy.pdparams into teacher_dml.paramers

+import paddle

+

+# load pretrained model

+all_params = paddle.load("ch_PP-OCRv3_det_distill_train/best_accuracy.pdparams")

+# print(all_params.keys())

+

+# keep teacher params

+t_params = {key[len("Teacher."):]: all_params[key] for key in all_params if "Teacher." in key}

+

+# print(t_params.keys())

+

+s_params = {"Student." + key: t_params[key] for key in t_params}

+s2_params = {"Student2." + key: t_params[key] for key in t_params}

+s_params = {**s_params, **s2_params}

+# print(s_params.keys())

+

+paddle.save(s_params, "ch_PP-OCRv3_det_distill_train/teacher_dml.pdparams")

+

+```

+

+我们使用configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_dml.yml,主要修改训练轮数和学习率参相关参数,设置预训练模型路径,设置数据集路径。 另外,batch_size可根据自己机器显存大小进行调整。 具体修改如下几个地方:

+```

+epoch:100

+save_epoch_step:10

+eval_batch_step:[0, 50]

+save_model_dir: ./output/ch_PP-OCR_v3_det_teacher/

+pretrained_model: ./pretrained_models/ch_PP-OCRv3_det_distill_train/teacher_dml

+learning_rate: 0.00025

+num_workers: 0 # 如果单卡训练,建议将Train和Eval的loader部分的num_workers设置为0,否则会出现`/dev/shm insufficient`的报错

+```

+

+训练命令如下:

+

+```python

+python tools/train.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_dml.yml -o Global.pretrained_model=./pretrained_models/ch_PP-OCRv3_det_distill_train/teacher_dml

+```

+

+评估训练好的模型:

+

+```python

+# 评估训练好的模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_dml.yml -o Global.pretrained_model="./output/ch_PP-OCR_v3_det_teacher/best_accuracy"

+```

+

+结果如下:

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+| 1 | PP-OCRv3中英文超轻量检测预训练模型fintune |65.2%|

+| 2 | PP-OCRv3中英文超轻量检测预训练模型fintune学生模型 |80.0%|

+| 3 | PP-OCRv3中英文超轻量检测预训练模型fintune教师模型 |84.8%|

+

+#### 4.3.5 采用CML蒸馏进一步提升student模型精度

+

+需要从4.3.3和4.3.4训练得到的best_accuracy.pdparams中提取各自代表student和teacher的参数,组合成适合cml训练的初始化模型,提取代码如下:

+

+```python

+# transform teacher params and student parameters into cml model

+import paddle

+

+all_params = paddle.load("./pretrained_models/ch_PP-OCRv3_det_distill_train/best_accuracy.pdparams")

+# print(all_params.keys())

+

+t_params = paddle.load("./output/ch_PP-OCR_v3_det_teacher/best_accuracy.pdparams")

+# print(t_params.keys())

+

+s_params = paddle.load("./output/ch_PP-OCR_v3_det_student/best_accuracy.pdparams")

+# print(s_params.keys())

+

+for key in all_params:

+ # teacher is OK

+ if "Teacher." in key:

+ new_key = key.replace("Teacher", "Student")

+ #print("{} >> {}\n".format(key, new_key))

+ assert all_params[key].shape == t_params[new_key].shape

+ all_params[key] = t_params[new_key]

+

+ if "Student." in key:

+ new_key = key.replace("Student.", "")

+ #print("{} >> {}\n".format(key, new_key))

+ assert all_params[key].shape == s_params[new_key].shape

+ all_params[key] = s_params[new_key]

+

+ if "Student2." in key:

+ new_key = key.replace("Student2.", "")

+ print("{} >> {}\n".format(key, new_key))

+ assert all_params[key].shape == s_params[new_key].shape

+ all_params[key] = s_params[new_key]

+

+paddle.save(all_params, "./pretrained_models/ch_PP-OCRv3_det_distill_train/teacher_cml_student.pdparams")

+```

+

+训练命令如下:

+

+```python

+python tools/train.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model=./pretrained_models/ch_PP-OCRv3_det_distill_train/teacher_cml_student Global.save_model_dir=./output/ch_PP-OCR_v3_det_finetune/

+```

+

+评估训练好的模型:

+

+```python

+# 评估训练好的模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model="./output/ch_PP-OCR_v3_det_finetune/best_accuracy"

+```

+

+结果如下:

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+| 1 | PP-OCRv3中英文超轻量检测预训练模型fintune |65.2%|

+| 2 | PP-OCRv3中英文超轻量检测预训练模型fintune学生模型 |80.0%|

+| 3 | PP-OCRv3中英文超轻量检测预训练模型fintune教师模型 |84.8%|

+| 4 | 基于2和3训练好的模型fintune |82.7%|

+

+如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

+

图27 导出Excel

@@ -859,7 +879,7 @@ with open('output/re/infer_results.txt', 'r', encoding='utf-8') as fin:

workbook.close()

```

-# 更多资源

+## 更多资源

- 更多深度学习知识、产业案例、面试宝典等,请参考:[awesome-DeepLearning](https://github.com/paddlepaddle/awesome-DeepLearning)

@@ -869,7 +889,7 @@ workbook.close()

- 飞桨框架相关资料,请参考:[飞桨深度学习平台](https://www.paddlepaddle.org.cn/?fr=paddleEdu_aistudio)

-# 参考链接

+## 参考链接

- LayoutXLM: Multimodal Pre-training for Multilingual Visually-rich Document Understanding, https://arxiv.org/pdf/2104.08836.pdf

diff --git "a/applications/\346\266\262\346\231\266\345\261\217\350\257\273\346\225\260\350\257\206\345\210\253.md" "b/applications/\346\266\262\346\231\266\345\261\217\350\257\273\346\225\260\350\257\206\345\210\253.md"

new file mode 100644

index 0000000000000000000000000000000000000000..ff2fb2cb4812f4f8366605b3c26af5b9aaaa290e

--- /dev/null

+++ "b/applications/\346\266\262\346\231\266\345\261\217\350\257\273\346\225\260\350\257\206\345\210\253.md"

@@ -0,0 +1,616 @@

+# 基于PP-OCRv3的液晶屏读数识别

+

+- [1. 项目背景及意义](#1-项目背景及意义)

+- [2. 项目内容](#2-项目内容)

+- [3. 安装环境](#3-安装环境)

+- [4. 文字检测](#4-文字检测)

+ - [4.1 PP-OCRv3检测算法介绍](#41-PP-OCRv3检测算法介绍)

+ - [4.2 数据准备](#42-数据准备)

+ - [4.3 模型训练](#43-模型训练)

+ - [4.3.1 预训练模型直接评估](#431-预训练模型直接评估)

+ - [4.3.2 预训练模型直接finetune](#432-预训练模型直接finetune)

+ - [4.3.3 基于预训练模型Finetune_student模型](#433-基于预训练模型Finetune_student模型)

+ - [4.3.4 基于预训练模型Finetune_teacher模型](#434-基于预训练模型Finetune_teacher模型)

+ - [4.3.5 采用CML蒸馏进一步提升student模型精度](#435-采用CML蒸馏进一步提升student模型精度)

+ - [4.3.6 模型导出推理](#436-4.3.6-模型导出推理)

+- [5. 文字识别](#5-文字识别)

+ - [5.1 PP-OCRv3识别算法介绍](#51-PP-OCRv3识别算法介绍)

+ - [5.2 数据准备](#52-数据准备)

+ - [5.3 模型训练](#53-模型训练)

+ - [5.4 模型导出推理](#54-模型导出推理)

+- [6. 系统串联](#6-系统串联)

+ - [6.1 后处理](#61-后处理)

+- [7. PaddleServing部署](#7-PaddleServing部署)

+

+

+## 1. 项目背景及意义

+目前光学字符识别(OCR)技术在我们的生活当中被广泛使用,但是大多数模型在通用场景下的准确性还有待提高,针对于此我们借助飞桨提供的PaddleOCR套件较容易的实现了在垂类场景下的应用。

+

+该项目以国家质量基础(NQI)为准绳,充分利用大数据、云计算、物联网等高新技术,构建覆盖计量端、实验室端、数据端和硬件端的完整计量解决方案,解决传统计量校准中存在的难题,拓宽计量检测服务体系和服务领域;解决无数传接口或数传接口不统一、不公开的计量设备,以及计量设备所处的环境比较恶劣,不适合人工读取数据。通过OCR技术实现远程计量,引领计量行业向智慧计量转型和发展。

+

+## 2. 项目内容

+本项目基于PaddleOCR开源套件,以PP-OCRv3检测和识别模型为基础,针对液晶屏读数识别场景进行优化。

+

+Aistudio项目链接:[OCR液晶屏读数识别](https://aistudio.baidu.com/aistudio/projectdetail/4080130)

+

+## 3. 安装环境

+

+```python

+# 首先git官方的PaddleOCR项目,安装需要的依赖

+# 第一次运行打开该注释

+# git clone https://gitee.com/PaddlePaddle/PaddleOCR.git

+cd PaddleOCR

+pip install -r requirements.txt

+```

+

+## 4. 文字检测

+文本检测的任务是定位出输入图像中的文字区域。近年来学术界关于文本检测的研究非常丰富,一类方法将文本检测视为目标检测中的一个特定场景,基于通用目标检测算法进行改进适配,如TextBoxes[1]基于一阶段目标检测器SSD[2]算法,调整目标框使之适合极端长宽比的文本行,CTPN[3]则是基于Faster RCNN[4]架构改进而来。但是文本检测与目标检测在目标信息以及任务本身上仍存在一些区别,如文本一般长宽比较大,往往呈“条状”,文本行之间可能比较密集,弯曲文本等,因此又衍生了很多专用于文本检测的算法。本项目基于PP-OCRv3算法进行优化。

+

+### 4.1 PP-OCRv3检测算法介绍

+PP-OCRv3检测模型是对PP-OCRv2中的CML(Collaborative Mutual Learning) 协同互学习文本检测蒸馏策略进行了升级。如下图所示,CML的核心思想结合了①传统的Teacher指导Student的标准蒸馏与 ②Students网络之间的DML互学习,可以让Students网络互学习的同时,Teacher网络予以指导。PP-OCRv3分别针对教师模型和学生模型进行进一步效果优化。其中,在对教师模型优化时,提出了大感受野的PAN结构LK-PAN和引入了DML(Deep Mutual Learning)蒸馏策略;在对学生模型优化时,提出了残差注意力机制的FPN结构RSE-FPN。

+

+

+详细优化策略描述请参考[PP-OCRv3优化策略](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/PP-OCRv3_introduction.md#2)

+

+### 4.2 数据准备

+[计量设备屏幕字符检测数据集](https://aistudio.baidu.com/aistudio/datasetdetail/127845)数据来源于实际项目中各种计量设备的数显屏,以及在网上搜集的一些其他数显屏,包含训练集755张,测试集355张。

+

+```python

+# 在PaddleOCR下创建新的文件夹train_data

+mkdir train_data

+# 下载数据集并解压到指定路径下

+unzip icdar2015.zip -d train_data

+```

+

+```python

+# 随机查看文字检测数据集图片

+from PIL import Image

+import matplotlib.pyplot as plt

+import numpy as np

+import os

+

+

+train = './train_data/icdar2015/text_localization/test'

+# 从指定目录中选取一张图片

+def get_one_image(train):

+ plt.figure()

+ files = os.listdir(train)

+ n = len(files)

+ ind = np.random.randint(0,n)

+ img_dir = os.path.join(train,files[ind])

+ image = Image.open(img_dir)

+ plt.imshow(image)

+ plt.show()

+ image = image.resize([208, 208])

+

+get_one_image(train)

+```

+

+

+### 4.3 模型训练

+

+#### 4.3.1 预训练模型直接评估

+下载我们需要的PP-OCRv3检测预训练模型,更多选择请自行选择其他的[文字检测模型](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/models_list.md#1-%E6%96%87%E6%9C%AC%E6%A3%80%E6%B5%8B%E6%A8%A1%E5%9E%8B)

+

+```python

+#使用该指令下载需要的预训练模型

+wget -P ./pretrained_models/ https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar

+# 解压预训练模型文件

+tar -xf ./pretrained_models/ch_PP-OCRv3_det_distill_train.tar -C pretrained_models

+```

+

+在训练之前,我们可以直接使用下面命令来评估预训练模型的效果:

+

+```python

+# 评估预训练模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model="./pretrained_models/ch_PP-OCRv3_det_distill_train/best_accuracy"

+```

+

+结果如下:

+

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+

+#### 4.3.2 预训练模型直接finetune

+##### 修改配置文件

+我们使用configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml,主要修改训练轮数和学习率参相关参数,设置预训练模型路径,设置数据集路径。 另外,batch_size可根据自己机器显存大小进行调整。 具体修改如下几个地方:

+```

+epoch:100

+save_epoch_step:10

+eval_batch_step:[0, 50]

+save_model_dir: ./output/ch_PP-OCR_v3_det/

+pretrained_model: ./pretrained_models/ch_PP-OCRv3_det_distill_train/best_accuracy

+learning_rate: 0.00025

+num_workers: 0 # 如果单卡训练,建议将Train和Eval的loader部分的num_workers设置为0,否则会出现`/dev/shm insufficient`的报错

+```

+

+##### 开始训练

+使用我们上面修改的配置文件configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml,训练命令如下:

+

+```python

+# 开始训练模型

+python tools/train.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model=./pretrained_models/ch_PP-OCRv3_det_distill_train/best_accuracy

+```

+

+评估训练好的模型:

+

+```python

+# 评估训练好的模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model="./output/ch_PP-OCR_v3_det/best_accuracy"

+```

+

+结果如下:

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+| 1 | PP-OCRv3中英文超轻量检测预训练模型fintune |65.2%|

+

+#### 4.3.3 基于预训练模型Finetune_student模型

+

+我们使用configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml,主要修改训练轮数和学习率参相关参数,设置预训练模型路径,设置数据集路径。 另外,batch_size可根据自己机器显存大小进行调整。 具体修改如下几个地方:

+```

+epoch:100

+save_epoch_step:10

+eval_batch_step:[0, 50]

+save_model_dir: ./output/ch_PP-OCR_v3_det_student/

+pretrained_model: ./pretrained_models/ch_PP-OCRv3_det_distill_train/student

+learning_rate: 0.00025

+num_workers: 0 # 如果单卡训练,建议将Train和Eval的loader部分的num_workers设置为0,否则会出现`/dev/shm insufficient`的报错

+```

+

+训练命令如下:

+

+```python

+python tools/train.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o Global.pretrained_model=./pretrained_models/ch_PP-OCRv3_det_distill_train/student

+```

+

+评估训练好的模型:

+

+```python

+# 评估训练好的模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o Global.pretrained_model="./output/ch_PP-OCR_v3_det_student/best_accuracy"

+```

+

+结果如下:

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+| 1 | PP-OCRv3中英文超轻量检测预训练模型fintune |65.2%|

+| 2 | PP-OCRv3中英文超轻量检测预训练模型fintune学生模型 |80.0%|

+

+#### 4.3.4 基于预训练模型Finetune_teacher模型

+

+首先需要从提供的预训练模型best_accuracy.pdparams中提取teacher参数,组合成适合dml训练的初始化模型,提取代码如下:

+

+```python

+cd ./pretrained_models/

+# transform teacher params in best_accuracy.pdparams into teacher_dml.paramers

+import paddle

+

+# load pretrained model

+all_params = paddle.load("ch_PP-OCRv3_det_distill_train/best_accuracy.pdparams")

+# print(all_params.keys())

+

+# keep teacher params

+t_params = {key[len("Teacher."):]: all_params[key] for key in all_params if "Teacher." in key}

+

+# print(t_params.keys())

+

+s_params = {"Student." + key: t_params[key] for key in t_params}

+s2_params = {"Student2." + key: t_params[key] for key in t_params}

+s_params = {**s_params, **s2_params}

+# print(s_params.keys())

+

+paddle.save(s_params, "ch_PP-OCRv3_det_distill_train/teacher_dml.pdparams")

+

+```

+

+我们使用configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_dml.yml,主要修改训练轮数和学习率参相关参数,设置预训练模型路径,设置数据集路径。 另外,batch_size可根据自己机器显存大小进行调整。 具体修改如下几个地方:

+```

+epoch:100

+save_epoch_step:10

+eval_batch_step:[0, 50]

+save_model_dir: ./output/ch_PP-OCR_v3_det_teacher/

+pretrained_model: ./pretrained_models/ch_PP-OCRv3_det_distill_train/teacher_dml

+learning_rate: 0.00025

+num_workers: 0 # 如果单卡训练,建议将Train和Eval的loader部分的num_workers设置为0,否则会出现`/dev/shm insufficient`的报错

+```

+

+训练命令如下:

+

+```python

+python tools/train.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_dml.yml -o Global.pretrained_model=./pretrained_models/ch_PP-OCRv3_det_distill_train/teacher_dml

+```

+

+评估训练好的模型:

+

+```python

+# 评估训练好的模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_dml.yml -o Global.pretrained_model="./output/ch_PP-OCR_v3_det_teacher/best_accuracy"

+```

+

+结果如下:

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+| 1 | PP-OCRv3中英文超轻量检测预训练模型fintune |65.2%|

+| 2 | PP-OCRv3中英文超轻量检测预训练模型fintune学生模型 |80.0%|

+| 3 | PP-OCRv3中英文超轻量检测预训练模型fintune教师模型 |84.8%|

+

+#### 4.3.5 采用CML蒸馏进一步提升student模型精度

+

+需要从4.3.3和4.3.4训练得到的best_accuracy.pdparams中提取各自代表student和teacher的参数,组合成适合cml训练的初始化模型,提取代码如下:

+

+```python

+# transform teacher params and student parameters into cml model

+import paddle

+

+all_params = paddle.load("./pretrained_models/ch_PP-OCRv3_det_distill_train/best_accuracy.pdparams")

+# print(all_params.keys())

+

+t_params = paddle.load("./output/ch_PP-OCR_v3_det_teacher/best_accuracy.pdparams")

+# print(t_params.keys())

+

+s_params = paddle.load("./output/ch_PP-OCR_v3_det_student/best_accuracy.pdparams")

+# print(s_params.keys())

+

+for key in all_params:

+ # teacher is OK

+ if "Teacher." in key:

+ new_key = key.replace("Teacher", "Student")

+ #print("{} >> {}\n".format(key, new_key))

+ assert all_params[key].shape == t_params[new_key].shape

+ all_params[key] = t_params[new_key]

+

+ if "Student." in key:

+ new_key = key.replace("Student.", "")

+ #print("{} >> {}\n".format(key, new_key))

+ assert all_params[key].shape == s_params[new_key].shape

+ all_params[key] = s_params[new_key]

+

+ if "Student2." in key:

+ new_key = key.replace("Student2.", "")

+ print("{} >> {}\n".format(key, new_key))

+ assert all_params[key].shape == s_params[new_key].shape

+ all_params[key] = s_params[new_key]

+

+paddle.save(all_params, "./pretrained_models/ch_PP-OCRv3_det_distill_train/teacher_cml_student.pdparams")

+```

+

+训练命令如下:

+

+```python

+python tools/train.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model=./pretrained_models/ch_PP-OCRv3_det_distill_train/teacher_cml_student Global.save_model_dir=./output/ch_PP-OCR_v3_det_finetune/

+```

+

+评估训练好的模型:

+

+```python

+# 评估训练好的模型

+python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml -o Global.pretrained_model="./output/ch_PP-OCR_v3_det_finetune/best_accuracy"

+```

+

+结果如下:

+| | 方案 |hmeans|

+|---|---------------------------|---|

+| 0 | PP-OCRv3中英文超轻量检测预训练模型直接预测 |47.5%|

+| 1 | PP-OCRv3中英文超轻量检测预训练模型fintune |65.2%|

+| 2 | PP-OCRv3中英文超轻量检测预训练模型fintune学生模型 |80.0%|

+| 3 | PP-OCRv3中英文超轻量检测预训练模型fintune教师模型 |84.8%|

+| 4 | 基于2和3训练好的模型fintune |82.7%|

+

+如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

+

+

+

+

+

+

+

+

+

', ''] + dict_character

- return dict_character

-

-

class CTCLabelEncode(BaseRecLabelEncode):

""" Convert between text-label and text-index """

@@ -290,15 +259,26 @@ class E2ELabelEncodeTrain(object):

class KieLabelEncode(object):

- def __init__(self, character_dict_path, norm=10, directed=False, **kwargs):

+ def __init__(self,

+ character_dict_path,

+ class_path,

+ norm=10,

+ directed=False,

+ **kwargs):

super(KieLabelEncode, self).__init__()

self.dict = dict({'': 0})

+ self.label2classid_map = dict()

with open(character_dict_path, 'r', encoding='utf-8') as fr:

idx = 1

for line in fr:

char = line.strip()

self.dict[char] = idx

idx += 1

+ with open(class_path, "r") as fin:

+ lines = fin.readlines()

+ for idx, line in enumerate(lines):

+ line = line.strip("\n")

+ self.label2classid_map[line] = idx

self.norm = norm

self.directed = directed

@@ -439,7 +419,7 @@ class KieLabelEncode(object):

text_ind = [self.dict[c] for c in text if c in self.dict]

text_inds.append(text_ind)

if 'label' in ann.keys():

- labels.append(ann['label'])

+ labels.append(self.label2classid_map[ann['label']])

elif 'key_cls' in ann.keys():

labels.append(ann['key_cls'])

else:

@@ -907,15 +887,16 @@ class VQATokenLabelEncode(object):

for info in ocr_info:

if train_re:

# for re

- if len(info["text"]) == 0:

+ if len(info["transcription"]) == 0:

empty_entity.add(info["id"])

continue

id2label[info["id"]] = info["label"]

relations.extend([tuple(sorted(l)) for l in info["linking"]])

# smooth_box

+ info["bbox"] = self.trans_poly_to_bbox(info["points"])

bbox = self._smooth_box(info["bbox"], height, width)

- text = info["text"]

+ text = info["transcription"]

encode_res = self.tokenizer.encode(

text, pad_to_max_seq_len=False, return_attention_mask=True)

@@ -931,7 +912,7 @@ class VQATokenLabelEncode(object):

label = info['label']

gt_label = self._parse_label(label, encode_res)

- # construct entities for re

+# construct entities for re

if train_re:

if gt_label[0] != self.label2id_map["O"]:

entity_id_to_index_map[info["id"]] = len(entities)

@@ -975,29 +956,29 @@ class VQATokenLabelEncode(object):

data['entity_id_to_index_map'] = entity_id_to_index_map

return data

- def _load_ocr_info(self, data):

- def trans_poly_to_bbox(poly):

- x1 = np.min([p[0] for p in poly])

- x2 = np.max([p[0] for p in poly])

- y1 = np.min([p[1] for p in poly])

- y2 = np.max([p[1] for p in poly])

- return [x1, y1, x2, y2]

+ def trans_poly_to_bbox(self, poly):

+ x1 = np.min([p[0] for p in poly])

+ x2 = np.max([p[0] for p in poly])

+ y1 = np.min([p[1] for p in poly])

+ y2 = np.max([p[1] for p in poly])

+ return [x1, y1, x2, y2]

+ def _load_ocr_info(self, data):

if self.infer_mode:

ocr_result = self.ocr_engine.ocr(data['image'], cls=False)

ocr_info = []

for res in ocr_result:

ocr_info.append({

- "text": res[1][0],

- "bbox": trans_poly_to_bbox(res[0]),

- "poly": res[0],

+ "transcription": res[1][0],

+ "bbox": self.trans_poly_to_bbox(res[0]),

+ "points": res[0],

})

return ocr_info

else:

info = data['label']

# read text info

info_dict = json.loads(info)

- return info_dict["ocr_info"]

+ return info_dict

def _smooth_box(self, bbox, height, width):

bbox[0] = int(bbox[0] * 1000.0 / width)

@@ -1008,7 +989,7 @@ class VQATokenLabelEncode(object):

def _parse_label(self, label, encode_res):

gt_label = []

- if label.lower() == "other":

+ if label.lower() in ["other", "others", "ignore"]:

gt_label.extend([0] * len(encode_res["input_ids"]))

else:

gt_label.append(self.label2id_map[("b-" + label).upper()])

@@ -1046,3 +1027,99 @@ class MultiLabelEncode(BaseRecLabelEncode):

data_out['label_sar'] = sar['label']

data_out['length'] = ctc['length']

return data_out

+

+

+class NRTRLabelEncode(BaseRecLabelEncode):

+ """ Convert between text-label and text-index """

+

+ def __init__(self,

+ max_text_length,

+ character_dict_path=None,

+ use_space_char=False,

+ **kwargs):

+

+ super(NRTRLabelEncode, self).__init__(

+ max_text_length, character_dict_path, use_space_char)

+

+ def __call__(self, data):

+ text = data['label']

+ text = self.encode(text)

+ if text is None:

+ return None

+ if len(text) >= self.max_text_len - 1:

+ return None

+ data['length'] = np.array(len(text))

+ text.insert(0, 2)

+ text.append(3)

+ text = text + [0] * (self.max_text_len - len(text))

+ data['label'] = np.array(text)

+ return data

+

+ def add_special_char(self, dict_character):

+ dict_character = ['blank', '', '', ''] + dict_character

+ return dict_character

+

+

+class ViTSTRLabelEncode(BaseRecLabelEncode):

+ """ Convert between text-label and text-index """

+

+ def __init__(self,

+ max_text_length,

+ character_dict_path=None,

+ use_space_char=False,

+ ignore_index=0,

+ **kwargs):

+

+ super(ViTSTRLabelEncode, self).__init__(

+ max_text_length, character_dict_path, use_space_char)

+ self.ignore_index = ignore_index

+

+ def __call__(self, data):

+ text = data['label']

+ text = self.encode(text)

+ if text is None:

+ return None

+ if len(text) >= self.max_text_len:

+ return None

+ data['length'] = np.array(len(text))

+ text.insert(0, self.ignore_index)

+ text.append(1)

+ text = text + [self.ignore_index] * (self.max_text_len + 2 - len(text))

+ data['label'] = np.array(text)

+ return data

+

+ def add_special_char(self, dict_character):

+ dict_character = ['', ''] + dict_character

+ return dict_character

+

+

+class ABINetLabelEncode(BaseRecLabelEncode):

+ """ Convert between text-label and text-index """

+

+ def __init__(self,

+ max_text_length,

+ character_dict_path=None,

+ use_space_char=False,

+ ignore_index=100,

+ **kwargs):

+

+ super(ABINetLabelEncode, self).__init__(

+ max_text_length, character_dict_path, use_space_char)

+ self.ignore_index = ignore_index

+

+ def __call__(self, data):

+ text = data['label']

+ text = self.encode(text)

+ if text is None:

+ return None

+ if len(text) >= self.max_text_len:

+ return None

+ data['length'] = np.array(len(text))

+ text.append(0)

+ text = text + [self.ignore_index] * (self.max_text_len + 1 - len(text))

+ data['label'] = np.array(text)

+ return data

+

+ def add_special_char(self, dict_character):

+ dict_character = [''] + dict_character

+ return dict_character

diff --git a/ppocr/data/imaug/operators.py b/ppocr/data/imaug/operators.py

index 070fb7afa0b366c016edadb2b734ba5a6798743a..04cc2848fb4d25baaf553c6eda235ddb0e86511f 100644

--- a/ppocr/data/imaug/operators.py

+++ b/ppocr/data/imaug/operators.py

@@ -67,39 +67,6 @@ class DecodeImage(object):

return data

-class NRTRDecodeImage(object):

- """ decode image """

-

- def __init__(self, img_mode='RGB', channel_first=False, **kwargs):

- self.img_mode = img_mode

- self.channel_first = channel_first

-

- def __call__(self, data):

- img = data['image']

- if six.PY2:

- assert type(img) is str and len(

- img) > 0, "invalid input 'img' in DecodeImage"

- else:

- assert type(img) is bytes and len(

- img) > 0, "invalid input 'img' in DecodeImage"

- img = np.frombuffer(img, dtype='uint8')

-

- img = cv2.imdecode(img, 1)

-

- if img is None:

- return None

- if self.img_mode == 'GRAY':

- img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)

- elif self.img_mode == 'RGB':

- assert img.shape[2] == 3, 'invalid shape of image[%s]' % (img.shape)

- img = img[:, :, ::-1]

- img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

- if self.channel_first:

- img = img.transpose((2, 0, 1))

- data['image'] = img

- return data

-

-

class NormalizeImage(object):

""" normalize image such as substract mean, divide std

"""

diff --git a/ppocr/data/imaug/rec_img_aug.py b/ppocr/data/imaug/rec_img_aug.py

index 32de2b3fc36757fee954de41686d09999ce4c640..26773d0a516dfb0877453c7a5c8c8a2b5da92045 100644

--- a/ppocr/data/imaug/rec_img_aug.py

+++ b/ppocr/data/imaug/rec_img_aug.py

@@ -19,6 +19,8 @@ import random

import copy

from PIL import Image

from .text_image_aug import tia_perspective, tia_stretch, tia_distort

+from .abinet_aug import CVGeometry, CVDeterioration, CVColorJitter

+from paddle.vision.transforms import Compose

class RecAug(object):

@@ -94,6 +96,36 @@ class BaseDataAugmentation(object):

return data

+class ABINetRecAug(object):

+ def __init__(self,

+ geometry_p=0.5,

+ deterioration_p=0.25,

+ colorjitter_p=0.25,

+ **kwargs):

+ self.transforms = Compose([

+ CVGeometry(

+ degrees=45,

+ translate=(0.0, 0.0),

+ scale=(0.5, 2.),

+ shear=(45, 15),

+ distortion=0.5,

+ p=geometry_p), CVDeterioration(

+ var=20, degrees=6, factor=4, p=deterioration_p),

+ CVColorJitter(

+ brightness=0.5,

+ contrast=0.5,

+ saturation=0.5,

+ hue=0.1,

+ p=colorjitter_p)

+ ])

+

+ def __call__(self, data):

+ img = data['image']

+ img = self.transforms(img)

+ data['image'] = img

+ return data

+

+

class RecConAug(object):

def __init__(self,

prob=0.5,

@@ -148,46 +180,6 @@ class ClsResizeImg(object):

return data

-class NRTRRecResizeImg(object):

- def __init__(self, image_shape, resize_type, padding=False, **kwargs):

- self.image_shape = image_shape

- self.resize_type = resize_type

- self.padding = padding

-

- def __call__(self, data):

- img = data['image']

- img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

- image_shape = self.image_shape

- if self.padding:

- imgC, imgH, imgW = image_shape

- # todo: change to 0 and modified image shape

- h = img.shape[0]

- w = img.shape[1]

- ratio = w / float(h)

- if math.ceil(imgH * ratio) > imgW:

- resized_w = imgW

- else:

- resized_w = int(math.ceil(imgH * ratio))

- resized_image = cv2.resize(img, (resized_w, imgH))

- norm_img = np.expand_dims(resized_image, -1)

- norm_img = norm_img.transpose((2, 0, 1))

- resized_image = norm_img.astype(np.float32) / 128. - 1.

- padding_im = np.zeros((imgC, imgH, imgW), dtype=np.float32)

- padding_im[:, :, 0:resized_w] = resized_image

- data['image'] = padding_im

- return data

- if self.resize_type == 'PIL':

- image_pil = Image.fromarray(np.uint8(img))

- img = image_pil.resize(self.image_shape, Image.ANTIALIAS)

- img = np.array(img)

- if self.resize_type == 'OpenCV':

- img = cv2.resize(img, self.image_shape)

- norm_img = np.expand_dims(img, -1)

- norm_img = norm_img.transpose((2, 0, 1))

- data['image'] = norm_img.astype(np.float32) / 128. - 1.

- return data

-

-

class RecResizeImg(object):

def __init__(self,

image_shape,

@@ -268,6 +260,84 @@ class PRENResizeImg(object):

return data

+class GrayRecResizeImg(object):

+ def __init__(self,

+ image_shape,

+ resize_type,

+ inter_type='Image.ANTIALIAS',

+ scale=True,

+ padding=False,

+ **kwargs):

+ self.image_shape = image_shape

+ self.resize_type = resize_type

+ self.padding = padding

+ self.inter_type = eval(inter_type)

+ self.scale = scale

+

+ def __call__(self, data):

+ img = data['image']

+ img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

+ image_shape = self.image_shape

+ if self.padding:

+ imgC, imgH, imgW = image_shape

+ # todo: change to 0 and modified image shape

+ h = img.shape[0]

+ w = img.shape[1]

+ ratio = w / float(h)

+ if math.ceil(imgH * ratio) > imgW:

+ resized_w = imgW

+ else:

+ resized_w = int(math.ceil(imgH * ratio))

+ resized_image = cv2.resize(img, (resized_w, imgH))

+ norm_img = np.expand_dims(resized_image, -1)

+ norm_img = norm_img.transpose((2, 0, 1))

+ resized_image = norm_img.astype(np.float32) / 128. - 1.

+ padding_im = np.zeros((imgC, imgH, imgW), dtype=np.float32)

+ padding_im[:, :, 0:resized_w] = resized_image

+ data['image'] = padding_im

+ return data

+ if self.resize_type == 'PIL':

+ image_pil = Image.fromarray(np.uint8(img))

+ img = image_pil.resize(self.image_shape, self.inter_type)

+ img = np.array(img)

+ if self.resize_type == 'OpenCV':

+ img = cv2.resize(img, self.image_shape)

+ norm_img = np.expand_dims(img, -1)

+ norm_img = norm_img.transpose((2, 0, 1))

+ if self.scale:

+ data['image'] = norm_img.astype(np.float32) / 128. - 1.

+ else:

+ data['image'] = norm_img.astype(np.float32) / 255.

+ return data

+

+

+class ABINetRecResizeImg(object):

+ def __init__(self, image_shape, **kwargs):

+ self.image_shape = image_shape

+

+ def __call__(self, data):

+ img = data['image']

+ norm_img, valid_ratio = resize_norm_img_abinet(img, self.image_shape)

+ data['image'] = norm_img

+ data['valid_ratio'] = valid_ratio

+ return data

+

+

+class SVTRRecResizeImg(object):

+ def __init__(self, image_shape, padding=True, **kwargs):

+ self.image_shape = image_shape

+ self.padding = padding

+

+ def __call__(self, data):

+ img = data['image']

+

+ norm_img, valid_ratio = resize_norm_img(img, self.image_shape,

+ self.padding)

+ data['image'] = norm_img

+ data['valid_ratio'] = valid_ratio

+ return data

+

+

def resize_norm_img_sar(img, image_shape, width_downsample_ratio=0.25):

imgC, imgH, imgW_min, imgW_max = image_shape

h = img.shape[0]

@@ -386,6 +456,26 @@ def resize_norm_img_srn(img, image_shape):

return np.reshape(img_black, (c, row, col)).astype(np.float32)

+def resize_norm_img_abinet(img, image_shape):

+ imgC, imgH, imgW = image_shape

+

+ resized_image = cv2.resize(

+ img, (imgW, imgH), interpolation=cv2.INTER_LINEAR)

+ resized_w = imgW

+ resized_image = resized_image.astype('float32')

+ resized_image = resized_image / 255.

+

+ mean = np.array([0.485, 0.456, 0.406])

+ std = np.array([0.229, 0.224, 0.225])

+ resized_image = (

+ resized_image - mean[None, None, ...]) / std[None, None, ...]

+ resized_image = resized_image.transpose((2, 0, 1))

+ resized_image = resized_image.astype('float32')

+

+ valid_ratio = min(1.0, float(resized_w / imgW))

+ return resized_image, valid_ratio

+

+

def srn_other_inputs(image_shape, num_heads, max_text_length):

imgC, imgH, imgW = image_shape

diff --git a/ppocr/data/imaug/vqa/__init__.py b/ppocr/data/imaug/vqa/__init__.py

index a5025e7985198e7ee40d6c92d8e1814eb1797032..bde175115536a3f644750260082204fe5f10dc05 100644

--- a/ppocr/data/imaug/vqa/__init__.py

+++ b/ppocr/data/imaug/vqa/__init__.py

@@ -13,7 +13,12 @@

# limitations under the License.

from .token import VQATokenPad, VQASerTokenChunk, VQAReTokenChunk, VQAReTokenRelation

+from .augment import DistortBBox

__all__ = [

- 'VQATokenPad', 'VQASerTokenChunk', 'VQAReTokenChunk', 'VQAReTokenRelation'

+ 'VQATokenPad',

+ 'VQASerTokenChunk',

+ 'VQAReTokenChunk',

+ 'VQAReTokenRelation',

+ 'DistortBBox',

]

diff --git a/ppocr/data/imaug/vqa/augment.py b/ppocr/data/imaug/vqa/augment.py

new file mode 100644

index 0000000000000000000000000000000000000000..fcdc9685e9855c3a2d8e9f6f5add270f95f15a6c

--- /dev/null

+++ b/ppocr/data/imaug/vqa/augment.py

@@ -0,0 +1,37 @@

+# copyright (c) 2022 PaddlePaddle Authors. All Rights Reserve.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import os

+import sys

+import numpy as np

+import random

+

+

+class DistortBBox:

+ def __init__(self, prob=0.5, max_scale=1, **kwargs):

+ """Random distort bbox

+ """

+ self.prob = prob

+ self.max_scale = max_scale

+

+ def __call__(self, data):

+ if random.random() > self.prob:

+ return data

+ bbox = np.array(data['bbox'])

+ rnd_scale = (np.random.rand(*bbox.shape) - 0.5) * 2 * self.max_scale

+ bbox = np.round(bbox + rnd_scale).astype(bbox.dtype)

+ data['bbox'] = np.clip(data['bbox'], 0, 1000)

+ data['bbox'] = bbox.tolist()

+ sys.stdout.flush()

+ return data

diff --git a/ppocr/losses/__init__.py b/ppocr/losses/__init__.py

index de8419b7c1cf6a30ab7195a1cbcbb10a5e52642d..7bea87f62f335a9a47c881d4bc789ce34aaa734a 100755

--- a/ppocr/losses/__init__.py

+++ b/ppocr/losses/__init__.py

@@ -30,7 +30,7 @@ from .det_fce_loss import FCELoss

from .rec_ctc_loss import CTCLoss

from .rec_att_loss import AttentionLoss

from .rec_srn_loss import SRNLoss

-from .rec_nrtr_loss import NRTRLoss

+from .rec_ce_loss import CELoss

from .rec_sar_loss import SARLoss

from .rec_aster_loss import AsterLoss

from .rec_pren_loss import PRENLoss

@@ -60,7 +60,7 @@ def build_loss(config):