Merge branch 'develop' into core_inference_example

Showing

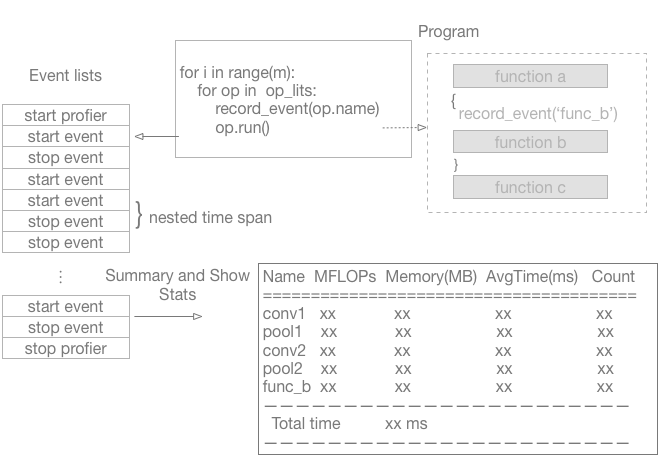

- `doc/design/images/profiler.png` (mode 0 → 100644, 49.9 KB)
- `doc/design/profiler.md` (mode 0 → 100644)
- `paddle/platform/profiler.cc` (mode 0 → 100644)
- `paddle/platform/profiler.h` (mode 0 → 100644)
- `paddle/platform/profiler_test.cc` (mode 0 → 100644)