diff --git a/configs/det/det_r50_vd_sast_icdar15.yml b/configs/det/det_r50_vd_sast_icdar15.yml

index c24cae90132c68d662e9edb7a7975e358fb40d9c..c90327b22b9c73111c997e84cfdd47d0721ee5b9 100755

--- a/configs/det/det_r50_vd_sast_icdar15.yml

+++ b/configs/det/det_r50_vd_sast_icdar15.yml

@@ -14,12 +14,13 @@ Global:

load_static_weights: True

cal_metric_during_train: False

pretrained_model: ./pretrain_models/ResNet50_vd_ssld_pretrained/

- checkpoints:

+ checkpoints:

save_inference_dir:

use_visualdl: False

- infer_img:

+ infer_img:

save_res_path: ./output/sast_r50_vd_ic15/predicts_sast.txt

+

Architecture:

model_type: det

algorithm: SAST

diff --git a/configs/e2e/e2e_r50_vd_pg.yml b/configs/e2e/e2e_r50_vd_pg.yml

new file mode 100644

index 0000000000000000000000000000000000000000..0a232f7a4f3b9ca214bbc6fd1840cec186c027e4

--- /dev/null

+++ b/configs/e2e/e2e_r50_vd_pg.yml

@@ -0,0 +1,114 @@

+Global:

+ use_gpu: True

+ epoch_num: 600

+ log_smooth_window: 20

+ print_batch_step: 10

+ save_model_dir: ./output/pgnet_r50_vd_totaltext/

+ save_epoch_step: 10

+ # evaluation is run every 0 iterationss after the 1000th iteration

+ eval_batch_step: [ 0, 1000 ]

+ # 1. If pretrained_model is saved in static mode, such as classification pretrained model

+ # from static branch, load_static_weights must be set as True.

+ # 2. If you want to finetune the pretrained models we provide in the docs,

+ # you should set load_static_weights as False.

+ load_static_weights: False

+ cal_metric_during_train: False

+ pretrained_model:

+ checkpoints:

+ save_inference_dir:

+ use_visualdl: False

+ infer_img:

+ valid_set: totaltext # two mode: totaltext valid curved words, partvgg valid non-curved words

+ save_res_path: ./output/pgnet_r50_vd_totaltext/predicts_pgnet.txt

+ character_dict_path: ppocr/utils/ic15_dict.txt

+ character_type: EN

+ max_text_length: 50 # the max length in seq

+ max_text_nums: 30 # the max seq nums in a pic

+ tcl_len: 64

+

+Architecture:

+ model_type: e2e

+ algorithm: PGNet

+ Transform:

+ Backbone:

+ name: ResNet

+ layers: 50

+ Neck:

+ name: PGFPN

+ Head:

+ name: PGHead

+

+Loss:

+ name: PGLoss

+ tcl_bs: 64

+ max_text_length: 50 # the same as Global: max_text_length

+ max_text_nums: 30 # the same as Global:max_text_nums

+ pad_num: 36 # the length of dict for pad

+

+Optimizer:

+ name: Adam

+ beta1: 0.9

+ beta2: 0.999

+ lr:

+ learning_rate: 0.001

+ regularizer:

+ name: 'L2'

+ factor: 0

+

+

+PostProcess:

+ name: PGPostProcess

+ score_thresh: 0.5

+Metric:

+ name: E2EMetric

+ character_dict_path: ppocr/utils/ic15_dict.txt

+ main_indicator: f_score_e2e

+

+Train:

+ dataset:

+ name: PGDataSet

+ label_file_list: [.././train_data/total_text/train/]

+ ratio_list: [1.0]

+ data_format: icdar #two data format: icdar/textnet

+ transforms:

+ - DecodeImage: # load image

+ img_mode: BGR

+ channel_first: False

+ - PGProcessTrain:

+ batch_size: 14 # same as loader: batch_size_per_card

+ min_crop_size: 24

+ min_text_size: 4

+ max_text_size: 512

+ - KeepKeys:

+ keep_keys: [ 'images', 'tcl_maps', 'tcl_label_maps', 'border_maps','direction_maps', 'training_masks', 'label_list', 'pos_list', 'pos_mask' ] # dataloader will return list in this order

+ loader:

+ shuffle: True

+ drop_last: True

+ batch_size_per_card: 14

+ num_workers: 16

+

+Eval:

+ dataset:

+ name: PGDataSet

+ data_dir: ./train_data/

+ label_file_list: [./train_data/total_text/test/]

+ transforms:

+ - DecodeImage: # load image

+ img_mode: RGB

+ channel_first: False

+ - E2ELabelEncode:

+ - E2EResizeForTest:

+ max_side_len: 768

+ - NormalizeImage:

+ scale: 1./255.

+ mean: [ 0.485, 0.456, 0.406 ]

+ std: [ 0.229, 0.224, 0.225 ]

+ order: 'hwc'

+ - ToCHWImage:

+ - KeepKeys:

+ keep_keys: [ 'image', 'shape', 'polys', 'strs', 'tags' ]

+ loader:

+ shuffle: False

+ drop_last: False

+ batch_size_per_card: 1 # must be 1

+ num_workers: 2

\ No newline at end of file

diff --git a/doc/doc_ch/inference.md b/doc/doc_ch/inference.md

index 7968b355ea936d465b3c173c0fcdb3e08f12f16e..1288d90692e154220b8ceb22cd7b6d98f53d3efb 100755

--- a/doc/doc_ch/inference.md

+++ b/doc/doc_ch/inference.md

@@ -12,7 +12,8 @@ inference 模型(`paddle.jit.save`保存的模型)

- [一、训练模型转inference模型](#训练模型转inference模型)

- [检测模型转inference模型](#检测模型转inference模型)

- [识别模型转inference模型](#识别模型转inference模型)

- - [方向分类模型转inference模型](#方向分类模型转inference模型)

+ - [方向分类模型转inference模型](#方向分类模型转inference模型)

+ - [端到端模型转inference模型](#端到端模型转inference模型)

- [二、文本检测模型推理](#文本检测模型推理)

- [1. 超轻量中文检测模型推理](#超轻量中文检测模型推理)

@@ -27,10 +28,13 @@ inference 模型(`paddle.jit.save`保存的模型)

- [4. 自定义文本识别字典的推理](#自定义文本识别字典的推理)

- [5. 多语言模型的推理](#多语言模型的推理)

-- [四、方向分类模型推理](#方向识别模型推理)

+- [四、端到端模型推理](#端到端模型推理)

+ - [1. PGNet端到端模型推理](#PGNet端到端模型推理)

+

+- [五、方向分类模型推理](#方向识别模型推理)

- [1. 方向分类模型推理](#方向分类模型推理)

-- [五、文本检测、方向分类和文字识别串联推理](#文本检测、方向分类和文字识别串联推理)

+- [六、文本检测、方向分类和文字识别串联推理](#文本检测、方向分类和文字识别串联推理)

- [1. 超轻量中文OCR模型推理](#超轻量中文OCR模型推理)

- [2. 其他模型推理](#其他模型推理)

@@ -118,6 +122,32 @@ python3 tools/export_model.py -c configs/cls/cls_mv3.yml -o Global.pretrained_mo

├── inference.pdiparams.info # 分类inference模型的参数信息,可忽略

└── inference.pdmodel # 分类inference模型的program文件

```

+

+### 端到端模型转inference模型

+

+下载端到端模型:

+```

+wget -P ./ch_lite/ https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar && tar xf ./ch_lite/ch_ppocr_mobile_v2.0_cls_train.tar -C ./ch_lite/

+```

+

+端到端模型转inference模型与检测的方式相同,如下:

+```

+# -c 后面设置训练算法的yml配置文件

+# -o 配置可选参数

+# Global.pretrained_model 参数设置待转换的训练模型地址,不用添加文件后缀 .pdmodel,.pdopt或.pdparams。

+# Global.load_static_weights 参数需要设置为 False。

+# Global.save_inference_dir参数设置转换的模型将保存的地址。

+

+python3 tools/export_model.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.pretrained_model=./ch_lite/ch_ppocr_mobile_v2.0_cls_train/best_accuracy Global.load_static_weights=False Global.save_inference_dir=./inference/e2e/

+```

+

+转换成功后,在目录下有三个文件:

+```

+/inference/e2e/

+ ├── inference.pdiparams # 分类inference模型的参数文件

+ ├── inference.pdiparams.info # 分类inference模型的参数信息,可忽略

+ └── inference.pdmodel # 分类inference模型的program文件

+```

## 二、文本检测模型推理

@@ -332,8 +362,38 @@ python3 tools/infer/predict_rec.py --image_dir="./doc/imgs_words/korean/1.jpg" -

Predicts of ./doc/imgs_words/korean/1.jpg:('바탕으로', 0.9948904)

```

+

+## 四、端到端模型推理

+

+端到端模型推理,默认使用PGNet模型的配置参数。当不使用PGNet模型时,在推理时,需要通过传入相应的参数进行算法适配,细节参考下文。

+

+### 1. PGNet端到端模型推理

+#### (1). 四边形文本检测模型(ICDAR2015)

+首先将PGNet端到端训练过程中保存的模型,转换成inference model。以基于Resnet50_vd骨干网络,在ICDAR2015英文数据集训练的模型为例([模型下载地址](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/en_server_pgnetA.tar)),可以使用如下命令进行转换:

+```

+python3 tools/export_model.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.pretrained_model=./en_server_pgnetA/iter_epoch_450 Global.load_static_weights=False Global.save_inference_dir=./inference/e2e

+```

+**PGNet端到端模型推理,需要设置参数`--e2e_algorithm="PGNet"`**,可以执行如下命令:

+```

+python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img_10.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=False

+```

+可视化文本检测结果默认保存到`./inference_results`文件夹里面,结果文件的名称前缀为'e2e_res'。结果示例如下:

+

+

+

+#### (2). 弯曲文本检测模型(Total-Text)

+和四边形文本检测模型共用一个推理模型

+**PGNet端到端模型推理,需要设置参数`--e2e_algorithm="PGNet"`,同时,还需要增加参数`--e2e_pgnet_polygon=True`,**可以执行如下命令:

+```

+python3.7 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True

+```

+可视化文本端到端结果默认保存到`./inference_results`文件夹里面,结果文件的名称前缀为'e2e_res'。结果示例如下:

+

+

+

+

-## 四、方向分类模型推理

+## 五、方向分类模型推理

下面将介绍方向分类模型推理。

@@ -358,7 +418,7 @@ Predicts of ./doc/imgs_words/ch/word_4.jpg:['0', 0.9999982]

```

-## 五、文本检测、方向分类和文字识别串联推理

+## 六、文本检测、方向分类和文字识别串联推理

### 1. 超轻量中文OCR模型推理

diff --git a/doc/doc_ch/multi_languages.md b/doc/doc_ch/multi_languages.md

new file mode 100644

index 0000000000000000000000000000000000000000..a8f7c2b77f64285e0edfbd22c248e84f0bb84d42

--- /dev/null

+++ b/doc/doc_ch/multi_languages.md

@@ -0,0 +1,284 @@

+# 多语言模型

+

+**近期更新**

+

+- 2021.4.9 支持**80种**语言的检测和识别

+- 2021.4.9 支持**轻量高精度**英文模型检测识别

+

+- [1 安装](#安装)

+ - [1.1 paddle 安装](#paddle安装)

+ - [1.2 paddleocr package 安装](#paddleocr_package_安装)

+

+- [2 快速使用](#快速使用)

+ - [2.1 命令行运行](#命令行运行)

+ - [2.1.1 整图预测](#bash_检测+识别)

+ - [2.1.2 识别预测](#bash_识别)

+ - [2.1.3 检测预测](#bash_检测)

+ - [2.2 python 脚本运行](#python_脚本运行)

+ - [2.2.1 整图预测](#python_检测+识别)

+ - [2.2.2 识别预测](#python_识别)

+ - [2.2.3 检测预测](#python_检测)

+- [3 自定义训练](#自定义训练)

+- [4 支持语种及缩写](#语种缩写)

+

+

+## 1 安装

+

+

+### 1.1 paddle 安装

+```

+# cpu

+pip install paddlepaddle

+

+# gpu

+pip instll paddlepaddle-gpu

+```

+

+

+### 1.2 paddleocr package 安装

+

+

+pip 安装

+```

+pip install "paddleocr>=2.0.4" # 推荐使用2.0.4版本

+```

+本地构建并安装

+```

+python3 setup.py bdist_wheel

+pip3 install dist/paddleocr-x.x.x-py3-none-any.whl # x.x.x是paddleocr的版本号

+```

+

+

+## 2 快速使用

+

+

+### 2.1 命令行运行

+

+查看帮助信息

+

+```

+paddleocr -h

+```

+

+* 整图预测(检测+识别)

+

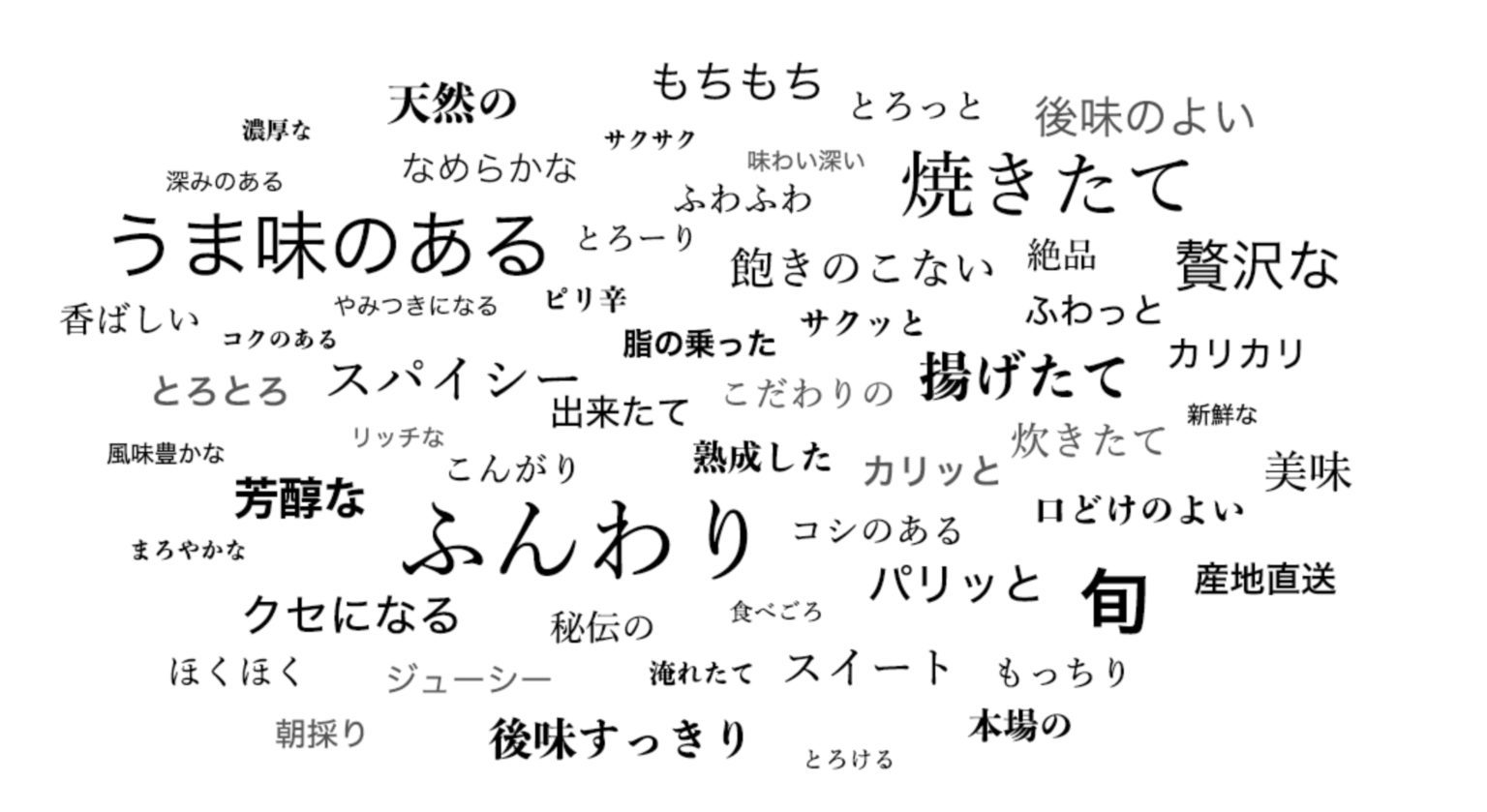

+Paddleocr目前支持80个语种,可以通过修改--lang参数进行切换,具体支持的[语种](#语种缩写)可查看表格。

+

+``` bash

+

+paddleocr --image_dir doc/imgs/japan_2.jpg --lang=japan

+```

+

+

+结果是一个list,每个item包含了文本框,文字和识别置信度

+```text

+[[[671.0, 60.0], [847.0, 63.0], [847.0, 104.0], [671.0, 102.0]], ('もちもち', 0.9993342)]

+[[[394.0, 82.0], [536.0, 77.0], [538.0, 127.0], [396.0, 132.0]], ('天然の', 0.9919842)]

+[[[880.0, 89.0], [1014.0, 93.0], [1013.0, 127.0], [879.0, 124.0]], ('とろっと', 0.9976762)]

+[[[1067.0, 101.0], [1294.0, 101.0], [1294.0, 138.0], [1067.0, 138.0]], ('後味のよい', 0.9988712)]

+......

+```

+

+* 识别预测

+

+```bash

+paddleocr --image_dir doc/imgs_words/japan/1.jpg --det false --lang=japan

+```

+

+

+

+结果是一个tuple,返回识别结果和识别置信度

+

+```text

+('したがって', 0.99965394)

+```

+

+* 检测预测

+

+```

+paddleocr --image_dir PaddleOCR/doc/imgs/11.jpg --rec false

+```

+

+结果是一个list,每个item只包含文本框

+

+```

+[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

+[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

+[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

+......

+```

+

+

+### 2.2 python 脚本运行

+

+ppocr 也支持在python脚本中运行,便于嵌入到您自己的代码中:

+

+* 整图预测(检测+识别)

+

+```

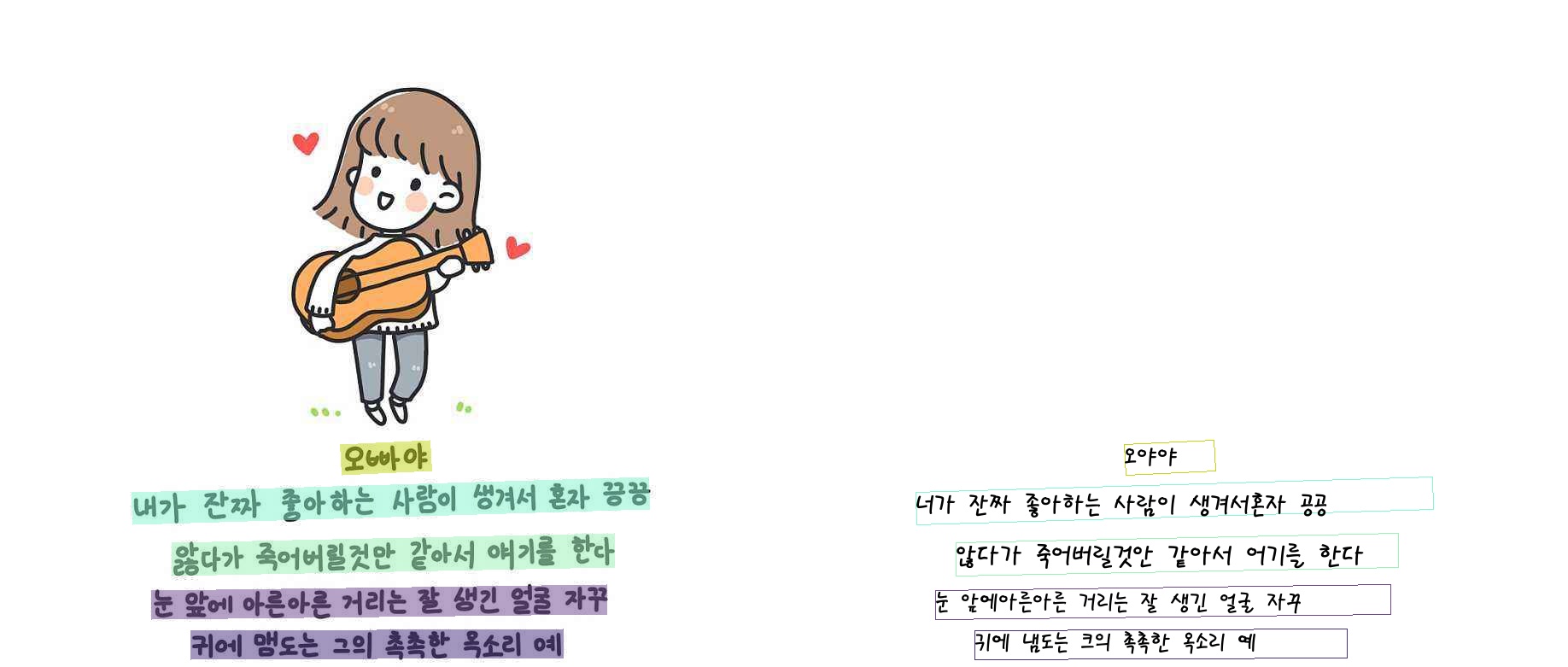

+from paddleocr import PaddleOCR, draw_ocr

+

+# 同样也是通过修改 lang 参数切换语种

+ocr = PaddleOCR(lang="korean") # 首次执行会自动下载模型文件

+img_path = 'doc/imgs/korean_1.jpg '

+result = ocr.ocr(img_path)

+# 打印检测框和识别结果

+for line in result:

+ print(line)

+

+# 可视化

+from PIL import Image

+image = Image.open(img_path).convert('RGB')

+boxes = [line[0] for line in result]

+txts = [line[1][0] for line in result]

+scores = [line[1][1] for line in result]

+im_show = draw_ocr(image, boxes, txts, scores, font_path='/path/to/PaddleOCR/doc/korean.ttf')

+im_show = Image.fromarray(im_show)

+im_show.save('result.jpg')

+```

+

+结果可视化:

+

+

+

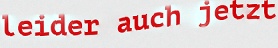

+* 识别预测

+

+```

+from paddleocr import PaddleOCR

+ocr = PaddleOCR(lang="german")

+img_path = 'PaddleOCR/doc/imgs_words/german/1.jpg'

+result = ocr.ocr(img_path, det=False, cls=True)

+for line in result:

+ print(line)

+```

+

+

+

+结果是一个tuple,只包含识别结果和识别置信度

+

+```

+('leider auch jetzt', 0.97538936)

+```

+

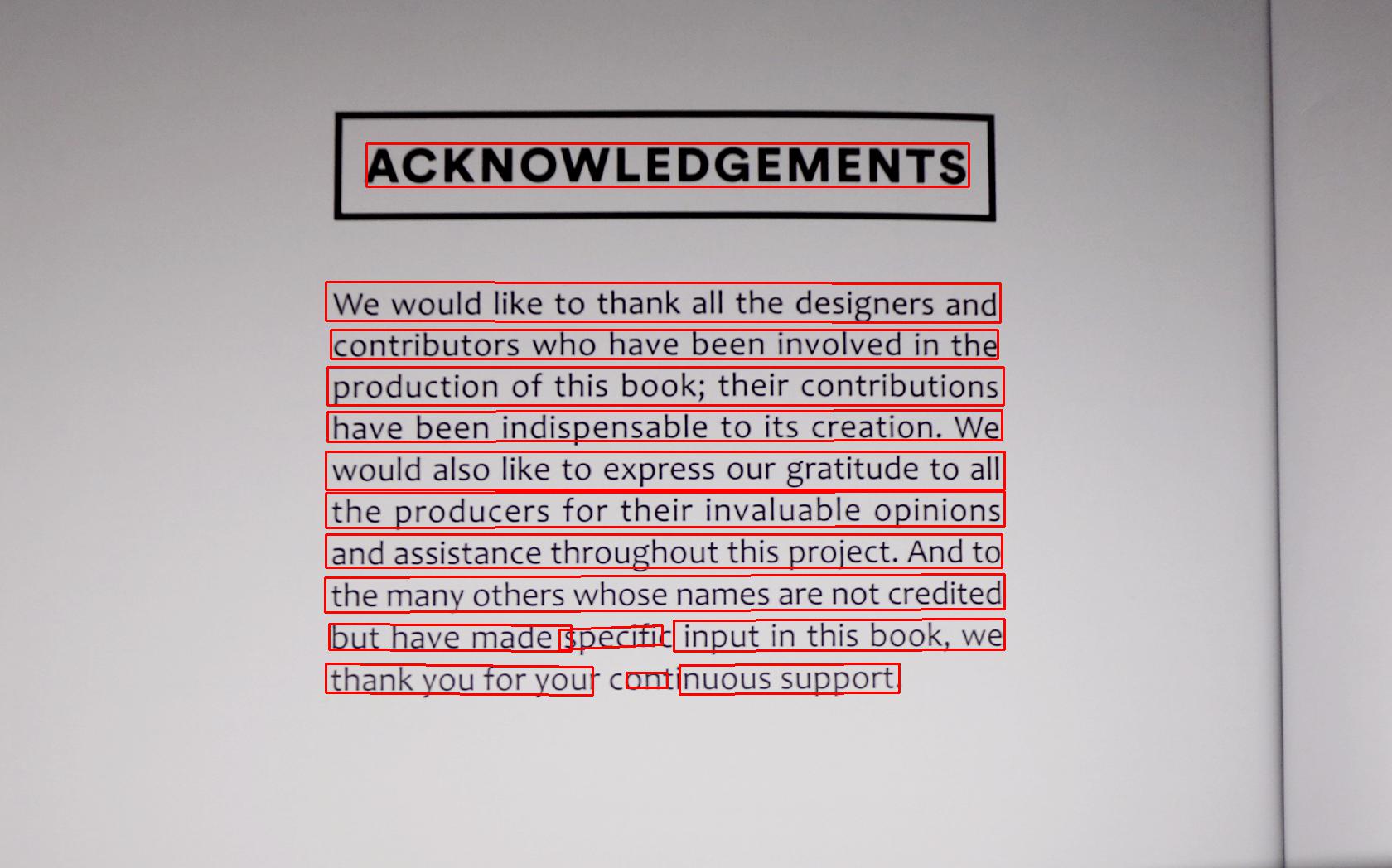

+* 检测预测

+

+```python

+from paddleocr import PaddleOCR, draw_ocr

+ocr = PaddleOCR() # need to run only once to download and load model into memory

+img_path = 'PaddleOCR/doc/imgs_en/img_12.jpg'

+result = ocr.ocr(img_path, rec=False)

+for line in result:

+ print(line)

+

+# 显示结果

+from PIL import Image

+

+image = Image.open(img_path).convert('RGB')

+im_show = draw_ocr(image, result, txts=None, scores=None, font_path='/path/to/PaddleOCR/doc/fonts/simfang.ttf')

+im_show = Image.fromarray(im_show)

+im_show.save('result.jpg')

+```

+结果是一个list,每个item只包含文本框

+```bash

+[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

+[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

+[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

+......

+```

+

+结果可视化 :

+

+

+ppocr 还支持方向分类, 更多使用方式请参考:[whl包使用说明](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.0/doc/doc_ch/whl.md)。

+

+

+## 3 自定义训练

+

+ppocr 支持使用自己的数据进行自定义训练或finetune, 其中识别模型可以参考 [法语配置文件](../../configs/rec/multi_language/rec_french_lite_train.yml)

+修改训练数据路径、字典等参数。

+

+具体数据准备、训练过程可参考:[文本检测](../doc_ch/detection.md)、[文本识别](../doc_ch/recognition.md),更多功能如预测部署、

+数据标注等功能可以阅读完整的[文档教程](../../README_ch.md)。

+

+

+## 4 支持语种及缩写

+

+| 语种 | 描述 | 缩写 |

+| --- | --- | --- |

+|中文|chinese and english|ch|

+|英文|english|en|

+|法文|french|fr|

+|德文|german|german|

+|日文|japan|japan|

+|韩文|korean|korean|

+|中文繁体|chinese traditional |ch_tra|

+|意大利文| Italian |it|

+|西班牙文|Spanish |es|

+|葡萄牙文| Portuguese|pt|

+|俄罗斯文|Russia|ru|

+|阿拉伯文|Arabic|ar|

+|印地文|Hindi|hi|

+|维吾尔|Uyghur|ug|

+|波斯文|Persian|fa|

+|乌尔都文|Urdu|ur|

+|塞尔维亚文(latin)| Serbian(latin) |rs_latin|

+|欧西坦文|Occitan |oc|

+|马拉地文|Marathi|mr|

+|尼泊尔文|Nepali|ne|

+|塞尔维亚文(cyrillic)|Serbian(cyrillic)|rs_cyrillic|

+|保加利亚文|Bulgarian |bg|

+|乌克兰文|Ukranian|uk|

+|白俄罗斯文|Belarusian|be|

+|泰卢固文|Telugu |te|

+|卡纳达文|Kannada |kn|

+|泰米尔文|Tamil |ta|

+|南非荷兰文 |Afrikaans |af|

+|阿塞拜疆文 |Azerbaijani |az|

+|波斯尼亚文|Bosnian|bs|

+|捷克文|Czech|cs|

+|威尔士文 |Welsh |cy|

+|丹麦文 |Danish|da|

+|爱沙尼亚文 |Estonian |et|

+|爱尔兰文 |Irish |ga|

+|克罗地亚文|Croatian |hr|

+|匈牙利文|Hungarian |hu|

+|印尼文|Indonesian|id|

+|冰岛文 |Icelandic|is|

+|库尔德文 |Kurdish|ku|

+|立陶宛文|Lithuanian |lt|

+|拉脱维亚文 |Latvian |lv|

+|毛利文|Maori|mi|

+|马来文 |Malay|ms|

+|马耳他文 |Maltese |mt|

+|荷兰文 |Dutch |nl|

+|挪威文 |Norwegian |no|

+|波兰文|Polish |pl|

+| 罗马尼亚文|Romanian |ro|

+| 斯洛伐克文|Slovak |sk|

+| 斯洛文尼亚文|Slovenian |sl|

+| 阿尔巴尼亚文|Albanian |sq|

+| 瑞典文|Swedish |sv|

+| 西瓦希里文|Swahili |sw|

+| 塔加洛文|Tagalog |tl|

+| 土耳其文|Turkish |tr|

+| 乌兹别克文|Uzbek |uz|

+| 越南文|Vietnamese |vi|

+| 蒙古文|Mongolian |mn|

+| 阿巴扎文|Abaza |abq|

+| 阿迪赫文|Adyghe |ady|

+| 卡巴丹文|Kabardian |kbd|

+| 阿瓦尔文|Avar |ava|

+| 达尔瓦文|Dargwa |dar|

+| 因古什文|Ingush |inh|

+| 拉克文|Lak |lbe|

+| 莱兹甘文|Lezghian |lez|

+|塔巴萨兰文 |Tabassaran |tab|

+| 比尔哈文|Bihari |bh|

+| 迈蒂利文|Maithili |mai|

+| 昂加文|Angika |ang|

+| 孟加拉文|Bhojpuri |bho|

+| 摩揭陀文 |Magahi |mah|

+| 那格浦尔文|Nagpur |sck|

+| 尼瓦尔文|Newari |new|

+| 保加利亚文 |Goan Konkani|gom|

+| 沙特阿拉伯文|Saudi Arabia|sa|

diff --git a/doc/doc_ch/pgnet.md b/doc/doc_ch/pgnet.md

index abe4122dfe4b5de618ff449582827eae264a7275..165b4bb44b6a151f8433952186bc07adc38b3763 100644

--- a/doc/doc_ch/pgnet.md

+++ b/doc/doc_ch/pgnet.md

@@ -1,14 +1,9 @@

-

-# 端对端OCR算法-PGNet

-

-----

# 端对端OCR算法-PGNet

- [一、简介](#简介)

- [二、环境配置](#环境配置)

- [三、快速使用](#快速使用)

- [四、模型训练、评估、推理](#快速训练)

-

## 一、简介

OCR算法可以分为两阶段算法和端对端的算法。二阶段OCR算法一般分为两个部分,文本检测和文本识别算法,文件检测算法从图像中得到文本行的检测框,然后识别算法去识别文本框中的内容。而端对端OCR算法可以在一个算法中完成文字检测和文字识别,其基本思想是设计一个同时具有检测单元和识别模块的模型,共享其中两者的CNN特征,并联合训练。由于一个算法即可完成文字识别,端对端模型更小,速度更快。

@@ -62,13 +57,12 @@ python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/im

# 如果想使用CPU进行预测,需设置use_gpu参数为False

python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True --use_gpu=False

```

-

### 可视化结果

可视化文本检测结果默认保存到./inference_results文件夹里面,结果文件的名称前缀为'e2e_res'。结果示例如下:

-## 四、快速训练

+## 四、模型训练、评估、推理

本节以totaltext数据集为例,介绍PaddleOCR中端到端模型的训练、评估与测试。

### 准备数据

@@ -103,7 +97,6 @@ wget -P ./pretrain_models/ https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/tr

└─ best_accuracy.states

└─ best_accuracy.pdparams

```

-

*如果您安装的是cpu版本,请将配置文件中的 `use_gpu` 字段修改为false*

```shell

@@ -121,8 +114,13 @@ python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/

python3 tools/train.py -c configs/e2e/e2e_r50_vd_pg.yml -o Optimizer.base_lr=0.0001

```

+#### 断点训练

+如果训练程序中断,如果希望加载训练中断的模型从而恢复训练,可以通过指定Global.checkpoints指定要加载的模型路径:

+```shell

+python3 tools/train.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.checkpoints=./your/trained/model

+```

-### 模型评估

+**注意**:`Global.checkpoints`的优先级高于`Global.pretrain_weights`的优先级,即同时指定两个参数时,优先加载`Global.checkpoints`指定的模型,如果`Global.checkpoints`指定的模型路径有误,会加载`Global.pretrain_weights`指定的模型。

PaddleOCR计算三个OCR端到端相关的指标,分别是:Precision、Recall、Hmean。

diff --git a/doc/imgs_results/whl/12_det.jpg b/doc/imgs_results/whl/12_det.jpg

index 1d5ccf2a6b5d3fa9516560e0cb2646ad6b917da6..71627f0b8db8fdc6e1bf0c4601f0311160d3164d 100644

Binary files a/doc/imgs_results/whl/12_det.jpg and b/doc/imgs_results/whl/12_det.jpg differ

diff --git a/paddleocr.py b/paddleocr.py

index c3741b264503534ef3e64531c2576273d8ccfd11..47e1267ac40effbe8b4ab80723c66eb5378be179 100644

--- a/paddleocr.py

+++ b/paddleocr.py

@@ -66,6 +66,46 @@ model_urls = {

'url':

'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/japan_mobile_v2.0_rec_infer.tar',

'dict_path': './ppocr/utils/dict/japan_dict.txt'

+ },

+ 'chinese_cht': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/chinese_cht_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/chinese_cht_dict.txt'

+ },

+ 'ta': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ta_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/ta_dict.txt'

+ },

+ 'te': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/te_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/te_dict.txt'

+ },

+ 'ka': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ka_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/ka_dict.txt'

+ },

+ 'latin': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/latin_ppocr_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/latin_dict.txt'

+ },

+ 'arabic': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/arabic_ppocr_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/arabic_dict.txt'

+ },

+ 'cyrillic': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/cyrillic_ppocr_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/cyrillic_dict.txt'

+ },

+ 'devanagari': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/devanagari_ppocr_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/devanagari_dict.txt'

}

},

'cls':

@@ -233,6 +273,29 @@ class PaddleOCR(predict_system.TextSystem):

postprocess_params.__dict__.update(**kwargs)

self.use_angle_cls = postprocess_params.use_angle_cls

lang = postprocess_params.lang

+ latin_lang = [

+ 'af', 'az', 'bs', 'cs', 'cy', 'da', 'de', 'en', 'es', 'et', 'fr',

+ 'ga', 'hr', 'hu', 'id', 'is', 'it', 'ku', 'la', 'lt', 'lv', 'mi',

+ 'ms', 'mt', 'nl', 'no', 'oc', 'pi', 'pl', 'pt', 'ro', 'rs_latin',

+ 'sk', 'sl', 'sq', 'sv', 'sw', 'tl', 'tr', 'uz', 'vi'

+ ]

+ arabic_lang = ['ar', 'fa', 'ug', 'ur']

+ cyrillic_lang = [

+ 'ru', 'rs_cyrillic', 'be', 'bg', 'uk', 'mn', 'abq', 'ady', 'kbd',

+ 'ava', 'dar', 'inh', 'che', 'lbe', 'lez', 'tab'

+ ]

+ devanagari_lang = [

+ 'hi', 'mr', 'ne', 'bh', 'mai', 'ang', 'bho', 'mah', 'sck', 'new',

+ 'gom', 'sa', 'bgc'

+ ]

+ if lang in latin_lang:

+ lang = "latin"

+ elif lang in arabic_lang:

+ lang = "arabic"

+ elif lang in cyrillic_lang:

+ lang = "cyrillic"

+ elif lang in devanagari_lang:

+ lang = "devanagari"

assert lang in model_urls[

'rec'], 'param lang must in {}, but got {}'.format(

model_urls['rec'].keys(), lang)

diff --git a/ppocr/data/__init__.py b/ppocr/data/__init__.py

index 7cb50d7a62aa3f24811e517768e0635ac7b7321a..728b8317f54687ee76b519cba18f4d7807493821 100644

--- a/ppocr/data/__init__.py

+++ b/ppocr/data/__init__.py

@@ -34,6 +34,7 @@ import paddle.distributed as dist

from ppocr.data.imaug import transform, create_operators

from ppocr.data.simple_dataset import SimpleDataSet

from ppocr.data.lmdb_dataset import LMDBDataSet

+from ppocr.data.pgnet_dataset import PGDataSet

__all__ = ['build_dataloader', 'transform', 'create_operators']

@@ -54,7 +55,7 @@ signal.signal(signal.SIGTERM, term_mp)

def build_dataloader(config, mode, device, logger, seed=None):

config = copy.deepcopy(config)

- support_dict = ['SimpleDataSet', 'LMDBDataSet']

+ support_dict = ['SimpleDataSet', 'LMDBDataSet', 'PGDataSet']

module_name = config[mode]['dataset']['name']

assert module_name in support_dict, Exception(

'DataSet only support {}'.format(support_dict))

@@ -72,14 +73,14 @@ def build_dataloader(config, mode, device, logger, seed=None):

else:

use_shared_memory = True

if mode == "Train":

- #Distribute data to multiple cards

+ # Distribute data to multiple cards

batch_sampler = DistributedBatchSampler(

dataset=dataset,

batch_size=batch_size,

shuffle=shuffle,

drop_last=drop_last)

else:

- #Distribute data to single card

+ # Distribute data to single card

batch_sampler = BatchSampler(

dataset=dataset,

batch_size=batch_size,

diff --git a/ppocr/data/imaug/__init__.py b/ppocr/data/imaug/__init__.py

index 250ac75e7683df2353d9fad02ef42b9e133681d3..a808fd586b0676751da1ee31d379179b026fd51d 100644

--- a/ppocr/data/imaug/__init__.py

+++ b/ppocr/data/imaug/__init__.py

@@ -28,6 +28,7 @@ from .label_ops import *

from .east_process import *

from .sast_process import *

+from .pg_process import *

def transform(data, ops=None):

diff --git a/ppocr/data/imaug/label_ops.py b/ppocr/data/imaug/label_ops.py

index 7a32d870bfc7f532896ce6b11aac5508a6369993..47e0cbf07d8bd8b6ad838fa2d211345c65a6751a 100644

--- a/ppocr/data/imaug/label_ops.py

+++ b/ppocr/data/imaug/label_ops.py

@@ -187,6 +187,34 @@ class CTCLabelEncode(BaseRecLabelEncode):

return dict_character

+class E2ELabelEncode(BaseRecLabelEncode):

+ def __init__(self,

+ max_text_length,

+ character_dict_path=None,

+ character_type='EN',

+ use_space_char=False,

+ **kwargs):

+ super(E2ELabelEncode,

+ self).__init__(max_text_length, character_dict_path,

+ character_type, use_space_char)

+ self.pad_num = len(self.dict) # the length to pad

+

+ def __call__(self, data):

+ text_label_index_list, temp_text = [], []

+ texts = data['strs']

+ for text in texts:

+ text = text.lower()

+ temp_text = []

+ for c_ in text:

+ if c_ in self.dict:

+ temp_text.append(self.dict[c_])

+ temp_text = temp_text + [self.pad_num] * (self.max_text_len -

+ len(temp_text))

+ text_label_index_list.append(temp_text)

+ data['strs'] = np.array(text_label_index_list)

+ return data

+

+

class AttnLabelEncode(BaseRecLabelEncode):

""" Convert between text-label and text-index """

diff --git a/ppocr/data/imaug/operators.py b/ppocr/data/imaug/operators.py

index eacfdf3b243af5b9051ad726ced6edacddff45ed..9c48b09647527cf718113ea1b5df152ff7befa04 100644

--- a/ppocr/data/imaug/operators.py

+++ b/ppocr/data/imaug/operators.py

@@ -197,7 +197,6 @@ class DetResizeForTest(object):

sys.exit(0)

ratio_h = resize_h / float(h)

ratio_w = resize_w / float(w)

- # return img, np.array([h, w])

return img, [ratio_h, ratio_w]

def resize_image_type2(self, img):

@@ -206,7 +205,6 @@ class DetResizeForTest(object):

resize_w = w

resize_h = h

- # Fix the longer side

if resize_h > resize_w:

ratio = float(self.resize_long) / resize_h

else:

@@ -223,3 +221,72 @@ class DetResizeForTest(object):

ratio_w = resize_w / float(w)

return img, [ratio_h, ratio_w]

+

+

+class E2EResizeForTest(object):

+ def __init__(self, **kwargs):

+ super(E2EResizeForTest, self).__init__()

+ self.max_side_len = kwargs['max_side_len']

+ self.valid_set = kwargs['valid_set']

+

+ def __call__(self, data):

+ img = data['image']

+ src_h, src_w, _ = img.shape

+ if self.valid_set == 'totaltext':

+ im_resized, [ratio_h, ratio_w] = self.resize_image_for_totaltext(

+ img, max_side_len=self.max_side_len)

+ else:

+ im_resized, (ratio_h, ratio_w) = self.resize_image(

+ img, max_side_len=self.max_side_len)

+ data['image'] = im_resized

+ data['shape'] = np.array([src_h, src_w, ratio_h, ratio_w])

+ return data

+

+ def resize_image_for_totaltext(self, im, max_side_len=512):

+

+ h, w, _ = im.shape

+ resize_w = w

+ resize_h = h

+ ratio = 1.25

+ if h * ratio > max_side_len:

+ ratio = float(max_side_len) / resize_h

+ resize_h = int(resize_h * ratio)

+ resize_w = int(resize_w * ratio)

+

+ max_stride = 128

+ resize_h = (resize_h + max_stride - 1) // max_stride * max_stride

+ resize_w = (resize_w + max_stride - 1) // max_stride * max_stride

+ im = cv2.resize(im, (int(resize_w), int(resize_h)))

+ ratio_h = resize_h / float(h)

+ ratio_w = resize_w / float(w)

+ return im, (ratio_h, ratio_w)

+

+ def resize_image(self, im, max_side_len=512):

+ """

+ resize image to a size multiple of max_stride which is required by the network

+ :param im: the resized image

+ :param max_side_len: limit of max image size to avoid out of memory in gpu

+ :return: the resized image and the resize ratio

+ """

+ h, w, _ = im.shape

+

+ resize_w = w

+ resize_h = h

+

+ # Fix the longer side

+ if resize_h > resize_w:

+ ratio = float(max_side_len) / resize_h

+ else:

+ ratio = float(max_side_len) / resize_w

+

+ resize_h = int(resize_h * ratio)

+ resize_w = int(resize_w * ratio)

+

+ max_stride = 128

+ resize_h = (resize_h + max_stride - 1) // max_stride * max_stride

+ resize_w = (resize_w + max_stride - 1) // max_stride * max_stride

+ im = cv2.resize(im, (int(resize_w), int(resize_h)))

+ ratio_h = resize_h / float(h)

+ ratio_w = resize_w / float(w)

+

+ return im, (ratio_h, ratio_w)

diff --git a/ppocr/data/imaug/pg_process.py b/ppocr/data/imaug/pg_process.py

new file mode 100644

index 0000000000000000000000000000000000000000..0c9439d7a274af27ca8d296d5e737bafdec3bd1f

--- /dev/null

+++ b/ppocr/data/imaug/pg_process.py

@@ -0,0 +1,906 @@

+# copyright (c) 2021 PaddlePaddle Authors. All Rights Reserve.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import math

+import cv2

+import numpy as np

+

+__all__ = ['PGProcessTrain']

+

+

+class PGProcessTrain(object):

+ def __init__(self,

+ character_dict_path,

+ max_text_length,

+ max_text_nums,

+ tcl_len,

+ batch_size=14,

+ min_crop_size=24,

+ min_text_size=4,

+ max_text_size=512,

+ **kwargs):

+ self.tcl_len = tcl_len

+ self.max_text_length = max_text_length

+ self.max_text_nums = max_text_nums

+ self.batch_size = batch_size

+ self.min_crop_size = min_crop_size

+ self.min_text_size = min_text_size

+ self.max_text_size = max_text_size

+ self.Lexicon_Table = self.get_dict(character_dict_path)

+ self.pad_num = len(self.Lexicon_Table)

+ self.img_id = 0

+

+ def get_dict(self, character_dict_path):

+ character_str = ""

+ with open(character_dict_path, "rb") as fin:

+ lines = fin.readlines()

+ for line in lines:

+ line = line.decode('utf-8').strip("\n").strip("\r\n")

+ character_str += line

+ dict_character = list(character_str)

+ return dict_character

+

+ def quad_area(self, poly):

+ """

+ compute area of a polygon

+ :param poly:

+ :return:

+ """

+ edge = [(poly[1][0] - poly[0][0]) * (poly[1][1] + poly[0][1]),

+ (poly[2][0] - poly[1][0]) * (poly[2][1] + poly[1][1]),

+ (poly[3][0] - poly[2][0]) * (poly[3][1] + poly[2][1]),

+ (poly[0][0] - poly[3][0]) * (poly[0][1] + poly[3][1])]

+ return np.sum(edge) / 2.

+

+ def gen_quad_from_poly(self, poly):

+ """

+ Generate min area quad from poly.

+ """

+ point_num = poly.shape[0]

+ min_area_quad = np.zeros((4, 2), dtype=np.float32)

+ rect = cv2.minAreaRect(poly.astype(

+ np.int32)) # (center (x,y), (width, height), angle of rotation)

+ box = np.array(cv2.boxPoints(rect))

+

+ first_point_idx = 0

+ min_dist = 1e4

+ for i in range(4):

+ dist = np.linalg.norm(box[(i + 0) % 4] - poly[0]) + \

+ np.linalg.norm(box[(i + 1) % 4] - poly[point_num // 2 - 1]) + \

+ np.linalg.norm(box[(i + 2) % 4] - poly[point_num // 2]) + \

+ np.linalg.norm(box[(i + 3) % 4] - poly[-1])

+ if dist < min_dist:

+ min_dist = dist

+ first_point_idx = i

+ for i in range(4):

+ min_area_quad[i] = box[(first_point_idx + i) % 4]

+

+ return min_area_quad

+

+ def check_and_validate_polys(self, polys, tags, xxx_todo_changeme):

+ """

+ check so that the text poly is in the same direction,

+ and also filter some invalid polygons

+ :param polys:

+ :param tags:

+ :return:

+ """

+ (h, w) = xxx_todo_changeme

+ if polys.shape[0] == 0:

+ return polys, np.array([]), np.array([])

+ polys[:, :, 0] = np.clip(polys[:, :, 0], 0, w - 1)

+ polys[:, :, 1] = np.clip(polys[:, :, 1], 0, h - 1)

+

+ validated_polys = []

+ validated_tags = []

+ hv_tags = []

+ for poly, tag in zip(polys, tags):

+ quad = self.gen_quad_from_poly(poly)

+ p_area = self.quad_area(quad)

+ if abs(p_area) < 1:

+ print('invalid poly')

+ continue

+ if p_area > 0:

+ if tag == False:

+ print('poly in wrong direction')

+ tag = True # reversed cases should be ignore

+ poly = poly[(0, 15, 14, 13, 12, 11, 10, 9, 8, 7, 6, 5, 4, 3, 2,

+ 1), :]

+ quad = quad[(0, 3, 2, 1), :]

+

+ len_w = np.linalg.norm(quad[0] - quad[1]) + np.linalg.norm(quad[3] -

+ quad[2])

+ len_h = np.linalg.norm(quad[0] - quad[3]) + np.linalg.norm(quad[1] -

+ quad[2])

+ hv_tag = 1

+

+ if len_w * 2.0 < len_h:

+ hv_tag = 0

+

+ validated_polys.append(poly)

+ validated_tags.append(tag)

+ hv_tags.append(hv_tag)

+ return np.array(validated_polys), np.array(validated_tags), np.array(

+ hv_tags)

+

+ def crop_area(self,

+ im,

+ polys,

+ tags,

+ hv_tags,

+ txts,

+ crop_background=False,

+ max_tries=25):

+ """

+ make random crop from the input image

+ :param im:

+ :param polys: [b,4,2]

+ :param tags:

+ :param crop_background:

+ :param max_tries: 50 -> 25

+ :return:

+ """

+ h, w, _ = im.shape

+ pad_h = h // 10

+ pad_w = w // 10

+ h_array = np.zeros((h + pad_h * 2), dtype=np.int32)

+ w_array = np.zeros((w + pad_w * 2), dtype=np.int32)

+ for poly in polys:

+ poly = np.round(poly, decimals=0).astype(np.int32)

+ minx = np.min(poly[:, 0])

+ maxx = np.max(poly[:, 0])

+ w_array[minx + pad_w:maxx + pad_w] = 1

+ miny = np.min(poly[:, 1])

+ maxy = np.max(poly[:, 1])

+ h_array[miny + pad_h:maxy + pad_h] = 1

+ # ensure the cropped area not across a text

+ h_axis = np.where(h_array == 0)[0]

+ w_axis = np.where(w_array == 0)[0]

+ if len(h_axis) == 0 or len(w_axis) == 0:

+ return im, polys, tags, hv_tags, txts

+ for i in range(max_tries):

+ xx = np.random.choice(w_axis, size=2)

+ xmin = np.min(xx) - pad_w

+ xmax = np.max(xx) - pad_w

+ xmin = np.clip(xmin, 0, w - 1)

+ xmax = np.clip(xmax, 0, w - 1)

+ yy = np.random.choice(h_axis, size=2)

+ ymin = np.min(yy) - pad_h

+ ymax = np.max(yy) - pad_h

+ ymin = np.clip(ymin, 0, h - 1)

+ ymax = np.clip(ymax, 0, h - 1)

+ if xmax - xmin < self.min_crop_size or \

+ ymax - ymin < self.min_crop_size:

+ continue

+ if polys.shape[0] != 0:

+ poly_axis_in_area = (polys[:, :, 0] >= xmin) & (polys[:, :, 0] <= xmax) \

+ & (polys[:, :, 1] >= ymin) & (polys[:, :, 1] <= ymax)

+ selected_polys = np.where(

+ np.sum(poly_axis_in_area, axis=1) == 4)[0]

+ else:

+ selected_polys = []

+ if len(selected_polys) == 0:

+ # no text in this area

+ if crop_background:

+ txts_tmp = []

+ for selected_poly in selected_polys:

+ txts_tmp.append(txts[selected_poly])

+ txts = txts_tmp

+ return im[ymin: ymax + 1, xmin: xmax + 1, :], \

+ polys[selected_polys], tags[selected_polys], hv_tags[selected_polys], txts

+ else:

+ continue

+ im = im[ymin:ymax + 1, xmin:xmax + 1, :]

+ polys = polys[selected_polys]

+ tags = tags[selected_polys]

+ hv_tags = hv_tags[selected_polys]

+ txts_tmp = []

+ for selected_poly in selected_polys:

+ txts_tmp.append(txts[selected_poly])

+ txts = txts_tmp

+ polys[:, :, 0] -= xmin

+ polys[:, :, 1] -= ymin

+ return im, polys, tags, hv_tags, txts

+

+ return im, polys, tags, hv_tags, txts

+

+ def fit_and_gather_tcl_points_v2(self,

+ min_area_quad,

+ poly,

+ max_h,

+ max_w,

+ fixed_point_num=64,

+ img_id=0,

+ reference_height=3):

+ """

+ Find the center point of poly as key_points, then fit and gather.

+ """

+ key_point_xys = []

+ point_num = poly.shape[0]

+ for idx in range(point_num // 2):

+ center_point = (poly[idx] + poly[point_num - 1 - idx]) / 2.0

+ key_point_xys.append(center_point)

+

+ tmp_image = np.zeros(

+ shape=(

+ max_h,

+ max_w, ), dtype='float32')

+ cv2.polylines(tmp_image, [np.array(key_point_xys).astype('int32')],

+ False, 1.0)

+ ys, xs = np.where(tmp_image > 0)

+ xy_text = np.array(list(zip(xs, ys)), dtype='float32')

+

+ left_center_pt = (

+ (min_area_quad[0] - min_area_quad[1]) / 2.0).reshape(1, 2)

+ right_center_pt = (

+ (min_area_quad[1] - min_area_quad[2]) / 2.0).reshape(1, 2)

+ proj_unit_vec = (right_center_pt - left_center_pt) / (

+ np.linalg.norm(right_center_pt - left_center_pt) + 1e-6)

+ proj_unit_vec_tile = np.tile(proj_unit_vec,

+ (xy_text.shape[0], 1)) # (n, 2)

+ left_center_pt_tile = np.tile(left_center_pt,

+ (xy_text.shape[0], 1)) # (n, 2)

+ xy_text_to_left_center = xy_text - left_center_pt_tile

+ proj_value = np.sum(xy_text_to_left_center * proj_unit_vec_tile, axis=1)

+ xy_text = xy_text[np.argsort(proj_value)]

+

+ # convert to np and keep the num of point not greater then fixed_point_num

+ pos_info = np.array(xy_text).reshape(-1, 2)[:, ::-1] # xy-> yx

+ point_num = len(pos_info)

+ if point_num > fixed_point_num:

+ keep_ids = [

+ int((point_num * 1.0 / fixed_point_num) * x)

+ for x in range(fixed_point_num)

+ ]

+ pos_info = pos_info[keep_ids, :]

+

+ keep = int(min(len(pos_info), fixed_point_num))

+ if np.random.rand() < 0.2 and reference_height >= 3:

+ dl = (np.random.rand(keep) - 0.5) * reference_height * 0.3

+ random_float = np.array([1, 0]).reshape([1, 2]) * dl.reshape(

+ [keep, 1])

+ pos_info += random_float

+ pos_info[:, 0] = np.clip(pos_info[:, 0], 0, max_h - 1)

+ pos_info[:, 1] = np.clip(pos_info[:, 1], 0, max_w - 1)

+

+ # padding to fixed length

+ pos_l = np.zeros((self.tcl_len, 3), dtype=np.int32)

+ pos_l[:, 0] = np.ones((self.tcl_len, )) * img_id

+ pos_m = np.zeros((self.tcl_len, 1), dtype=np.float32)

+ pos_l[:keep, 1:] = np.round(pos_info).astype(np.int32)

+ pos_m[:keep] = 1.0

+ return pos_l, pos_m

+

+ def generate_direction_map(self, poly_quads, n_char, direction_map):

+ """

+ """

+ width_list = []

+ height_list = []

+ for quad in poly_quads:

+ quad_w = (np.linalg.norm(quad[0] - quad[1]) +

+ np.linalg.norm(quad[2] - quad[3])) / 2.0

+ quad_h = (np.linalg.norm(quad[0] - quad[3]) +

+ np.linalg.norm(quad[2] - quad[1])) / 2.0

+ width_list.append(quad_w)

+ height_list.append(quad_h)

+ norm_width = max(sum(width_list) / n_char, 1.0)

+ average_height = max(sum(height_list) / len(height_list), 1.0)

+ k = 1

+ for quad in poly_quads:

+ direct_vector_full = (

+ (quad[1] + quad[2]) - (quad[0] + quad[3])) / 2.0

+ direct_vector = direct_vector_full / (

+ np.linalg.norm(direct_vector_full) + 1e-6) * norm_width

+ direction_label = tuple(

+ map(float,

+ [direct_vector[0], direct_vector[1], 1.0 / average_height]))

+ cv2.fillPoly(direction_map,

+ quad.round().astype(np.int32)[np.newaxis, :, :],

+ direction_label)

+ k += 1

+ return direction_map

+

+ def calculate_average_height(self, poly_quads):

+ """

+ """

+ height_list = []

+ for quad in poly_quads:

+ quad_h = (np.linalg.norm(quad[0] - quad[3]) +

+ np.linalg.norm(quad[2] - quad[1])) / 2.0

+ height_list.append(quad_h)

+ average_height = max(sum(height_list) / len(height_list), 1.0)

+ return average_height

+

+ def generate_tcl_ctc_label(self,

+ h,

+ w,

+ polys,

+ tags,

+ text_strs,

+ ds_ratio,

+ tcl_ratio=0.3,

+ shrink_ratio_of_width=0.15):

+ """

+ Generate polygon.

+ """

+ score_map_big = np.zeros(

+ (

+ h,

+ w, ), dtype=np.float32)

+ h, w = int(h * ds_ratio), int(w * ds_ratio)

+ polys = polys * ds_ratio

+

+ score_map = np.zeros(

+ (

+ h,

+ w, ), dtype=np.float32)

+ score_label_map = np.zeros(

+ (

+ h,

+ w, ), dtype=np.float32)

+ tbo_map = np.zeros((h, w, 5), dtype=np.float32)

+ training_mask = np.ones(

+ (

+ h,

+ w, ), dtype=np.float32)

+ direction_map = np.ones((h, w, 3)) * np.array([0, 0, 1]).reshape(

+ [1, 1, 3]).astype(np.float32)

+

+ label_idx = 0

+ score_label_map_text_label_list = []

+ pos_list, pos_mask, label_list = [], [], []

+ for poly_idx, poly_tag in enumerate(zip(polys, tags)):

+ poly = poly_tag[0]

+ tag = poly_tag[1]

+

+ # generate min_area_quad

+ min_area_quad, center_point = self.gen_min_area_quad_from_poly(poly)

+ min_area_quad_h = 0.5 * (

+ np.linalg.norm(min_area_quad[0] - min_area_quad[3]) +

+ np.linalg.norm(min_area_quad[1] - min_area_quad[2]))

+ min_area_quad_w = 0.5 * (

+ np.linalg.norm(min_area_quad[0] - min_area_quad[1]) +

+ np.linalg.norm(min_area_quad[2] - min_area_quad[3]))

+

+ if min(min_area_quad_h, min_area_quad_w) < self.min_text_size * ds_ratio \

+ or min(min_area_quad_h, min_area_quad_w) > self.max_text_size * ds_ratio:

+ continue

+

+ if tag:

+ cv2.fillPoly(training_mask,

+ poly.astype(np.int32)[np.newaxis, :, :], 0.15)

+ else:

+ text_label = text_strs[poly_idx]

+ text_label = self.prepare_text_label(text_label,

+ self.Lexicon_Table)

+

+ text_label_index_list = [[self.Lexicon_Table.index(c_)]

+ for c_ in text_label

+ if c_ in self.Lexicon_Table]

+ if len(text_label_index_list) < 1:

+ continue

+

+ tcl_poly = self.poly2tcl(poly, tcl_ratio)

+ tcl_quads = self.poly2quads(tcl_poly)

+ poly_quads = self.poly2quads(poly)

+

+ stcl_quads, quad_index = self.shrink_poly_along_width(

+ tcl_quads,

+ shrink_ratio_of_width=shrink_ratio_of_width,

+ expand_height_ratio=1.0 / tcl_ratio)

+

+ cv2.fillPoly(score_map,

+ np.round(stcl_quads).astype(np.int32), 1.0)

+ cv2.fillPoly(score_map_big,

+ np.round(stcl_quads / ds_ratio).astype(np.int32),

+ 1.0)

+

+ for idx, quad in enumerate(stcl_quads):

+ quad_mask = np.zeros((h, w), dtype=np.float32)

+ quad_mask = cv2.fillPoly(

+ quad_mask,

+ np.round(quad[np.newaxis, :, :]).astype(np.int32), 1.0)

+ tbo_map = self.gen_quad_tbo(poly_quads[quad_index[idx]],

+ quad_mask, tbo_map)

+

+ # score label map and score_label_map_text_label_list for refine

+ if label_idx == 0:

+ text_pos_list_ = [[len(self.Lexicon_Table)], ]

+ score_label_map_text_label_list.append(text_pos_list_)

+

+ label_idx += 1

+ cv2.fillPoly(score_label_map,

+ np.round(poly_quads).astype(np.int32), label_idx)

+ score_label_map_text_label_list.append(text_label_index_list)

+

+ # direction info, fix-me

+ n_char = len(text_label_index_list)

+ direction_map = self.generate_direction_map(poly_quads, n_char,

+ direction_map)

+

+ # pos info

+ average_shrink_height = self.calculate_average_height(

+ stcl_quads)

+ pos_l, pos_m = self.fit_and_gather_tcl_points_v2(

+ min_area_quad,

+ poly,

+ max_h=h,

+ max_w=w,

+ fixed_point_num=64,

+ img_id=self.img_id,

+ reference_height=average_shrink_height)

+

+ label_l = text_label_index_list

+ if len(text_label_index_list) < 2:

+ continue

+

+ pos_list.append(pos_l)

+ pos_mask.append(pos_m)

+ label_list.append(label_l)

+

+ # use big score_map for smooth tcl lines

+ score_map_big_resized = cv2.resize(

+ score_map_big, dsize=None, fx=ds_ratio, fy=ds_ratio)

+ score_map = np.array(score_map_big_resized > 1e-3, dtype='float32')

+

+ return score_map, score_label_map, tbo_map, direction_map, training_mask, \

+ pos_list, pos_mask, label_list, score_label_map_text_label_list

+

+ def adjust_point(self, poly):

+ """

+ adjust point order.

+ """

+ point_num = poly.shape[0]

+ if point_num == 4:

+ len_1 = np.linalg.norm(poly[0] - poly[1])

+ len_2 = np.linalg.norm(poly[1] - poly[2])

+ len_3 = np.linalg.norm(poly[2] - poly[3])

+ len_4 = np.linalg.norm(poly[3] - poly[0])

+

+ if (len_1 + len_3) * 1.5 < (len_2 + len_4):

+ poly = poly[[1, 2, 3, 0], :]

+

+ elif point_num > 4:

+ vector_1 = poly[0] - poly[1]

+ vector_2 = poly[1] - poly[2]

+ cos_theta = np.dot(vector_1, vector_2) / (

+ np.linalg.norm(vector_1) * np.linalg.norm(vector_2) + 1e-6)

+ theta = np.arccos(np.round(cos_theta, decimals=4))

+

+ if abs(theta) > (70 / 180 * math.pi):

+ index = list(range(1, point_num)) + [0]

+ poly = poly[np.array(index), :]

+ return poly

+

+ def gen_min_area_quad_from_poly(self, poly):

+ """

+ Generate min area quad from poly.

+ """

+ point_num = poly.shape[0]

+ min_area_quad = np.zeros((4, 2), dtype=np.float32)

+ if point_num == 4:

+ min_area_quad = poly

+ center_point = np.sum(poly, axis=0) / 4

+ else:

+ rect = cv2.minAreaRect(poly.astype(

+ np.int32)) # (center (x,y), (width, height), angle of rotation)

+ center_point = rect[0]

+ box = np.array(cv2.boxPoints(rect))

+

+ first_point_idx = 0

+ min_dist = 1e4

+ for i in range(4):

+ dist = np.linalg.norm(box[(i + 0) % 4] - poly[0]) + \

+ np.linalg.norm(box[(i + 1) % 4] - poly[point_num // 2 - 1]) + \

+ np.linalg.norm(box[(i + 2) % 4] - poly[point_num // 2]) + \

+ np.linalg.norm(box[(i + 3) % 4] - poly[-1])

+ if dist < min_dist:

+ min_dist = dist

+ first_point_idx = i

+

+ for i in range(4):

+ min_area_quad[i] = box[(first_point_idx + i) % 4]

+

+ return min_area_quad, center_point

+

+ def shrink_quad_along_width(self,

+ quad,

+ begin_width_ratio=0.,

+ end_width_ratio=1.):

+ """

+ Generate shrink_quad_along_width.

+ """

+ ratio_pair = np.array(

+ [[begin_width_ratio], [end_width_ratio]], dtype=np.float32)

+ p0_1 = quad[0] + (quad[1] - quad[0]) * ratio_pair

+ p3_2 = quad[3] + (quad[2] - quad[3]) * ratio_pair

+ return np.array([p0_1[0], p0_1[1], p3_2[1], p3_2[0]])

+

+ def shrink_poly_along_width(self,

+ quads,

+ shrink_ratio_of_width,

+ expand_height_ratio=1.0):

+ """

+ shrink poly with given length.

+ """

+ upper_edge_list = []

+

+ def get_cut_info(edge_len_list, cut_len):

+ for idx, edge_len in enumerate(edge_len_list):

+ cut_len -= edge_len

+ if cut_len <= 0.000001:

+ ratio = (cut_len + edge_len_list[idx]) / edge_len_list[idx]

+ return idx, ratio

+

+ for quad in quads:

+ upper_edge_len = np.linalg.norm(quad[0] - quad[1])

+ upper_edge_list.append(upper_edge_len)

+

+ # length of left edge and right edge.

+ left_length = np.linalg.norm(quads[0][0] - quads[0][

+ 3]) * expand_height_ratio

+ right_length = np.linalg.norm(quads[-1][1] - quads[-1][

+ 2]) * expand_height_ratio

+

+ shrink_length = min(left_length, right_length,

+ sum(upper_edge_list)) * shrink_ratio_of_width

+ # shrinking length

+ upper_len_left = shrink_length

+ upper_len_right = sum(upper_edge_list) - shrink_length

+

+ left_idx, left_ratio = get_cut_info(upper_edge_list, upper_len_left)

+ left_quad = self.shrink_quad_along_width(

+ quads[left_idx], begin_width_ratio=left_ratio, end_width_ratio=1)

+ right_idx, right_ratio = get_cut_info(upper_edge_list, upper_len_right)

+ right_quad = self.shrink_quad_along_width(

+ quads[right_idx], begin_width_ratio=0, end_width_ratio=right_ratio)

+

+ out_quad_list = []

+ if left_idx == right_idx:

+ out_quad_list.append(

+ [left_quad[0], right_quad[1], right_quad[2], left_quad[3]])

+ else:

+ out_quad_list.append(left_quad)

+ for idx in range(left_idx + 1, right_idx):

+ out_quad_list.append(quads[idx])

+ out_quad_list.append(right_quad)

+

+ return np.array(out_quad_list), list(range(left_idx, right_idx + 1))

+

+ def prepare_text_label(self, label_str, Lexicon_Table):

+ """

+ Prepare text lablel by given Lexicon_Table.

+ """

+ if len(Lexicon_Table) == 36:

+ return label_str.lower()

+ else:

+ return label_str

+

+ def vector_angle(self, A, B):

+ """

+ Calculate the angle between vector AB and x-axis positive direction.

+ """

+ AB = np.array([B[1] - A[1], B[0] - A[0]])

+ return np.arctan2(*AB)

+

+ def theta_line_cross_point(self, theta, point):

+ """

+ Calculate the line through given point and angle in ax + by + c =0 form.

+ """

+ x, y = point

+ cos = np.cos(theta)

+ sin = np.sin(theta)

+ return [sin, -cos, cos * y - sin * x]

+

+ def line_cross_two_point(self, A, B):

+ """

+ Calculate the line through given point A and B in ax + by + c =0 form.

+ """

+ angle = self.vector_angle(A, B)

+ return self.theta_line_cross_point(angle, A)

+

+ def average_angle(self, poly):

+ """

+ Calculate the average angle between left and right edge in given poly.

+ """

+ p0, p1, p2, p3 = poly

+ angle30 = self.vector_angle(p3, p0)

+ angle21 = self.vector_angle(p2, p1)

+ return (angle30 + angle21) / 2

+

+ def line_cross_point(self, line1, line2):

+ """

+ line1 and line2 in 0=ax+by+c form, compute the cross point of line1 and line2

+ """

+ a1, b1, c1 = line1

+ a2, b2, c2 = line2

+ d = a1 * b2 - a2 * b1

+

+ if d == 0:

+ print('Cross point does not exist')

+ return np.array([0, 0], dtype=np.float32)

+ else:

+ x = (b1 * c2 - b2 * c1) / d

+ y = (a2 * c1 - a1 * c2) / d

+

+ return np.array([x, y], dtype=np.float32)

+

+ def quad2tcl(self, poly, ratio):

+ """

+ Generate center line by poly clock-wise point. (4, 2)

+ """

+ ratio_pair = np.array(

+ [[0.5 - ratio / 2], [0.5 + ratio / 2]], dtype=np.float32)

+ p0_3 = poly[0] + (poly[3] - poly[0]) * ratio_pair

+ p1_2 = poly[1] + (poly[2] - poly[1]) * ratio_pair

+ return np.array([p0_3[0], p1_2[0], p1_2[1], p0_3[1]])

+

+ def poly2tcl(self, poly, ratio):

+ """

+ Generate center line by poly clock-wise point.

+ """

+ ratio_pair = np.array(

+ [[0.5 - ratio / 2], [0.5 + ratio / 2]], dtype=np.float32)

+ tcl_poly = np.zeros_like(poly)

+ point_num = poly.shape[0]

+

+ for idx in range(point_num // 2):

+ point_pair = poly[idx] + (poly[point_num - 1 - idx] - poly[idx]

+ ) * ratio_pair

+ tcl_poly[idx] = point_pair[0]

+ tcl_poly[point_num - 1 - idx] = point_pair[1]

+ return tcl_poly

+

+ def gen_quad_tbo(self, quad, tcl_mask, tbo_map):

+ """

+ Generate tbo_map for give quad.

+ """

+ # upper and lower line function: ax + by + c = 0;

+ up_line = self.line_cross_two_point(quad[0], quad[1])

+ lower_line = self.line_cross_two_point(quad[3], quad[2])

+

+ quad_h = 0.5 * (np.linalg.norm(quad[0] - quad[3]) +

+ np.linalg.norm(quad[1] - quad[2]))

+ quad_w = 0.5 * (np.linalg.norm(quad[0] - quad[1]) +

+ np.linalg.norm(quad[2] - quad[3]))

+

+ # average angle of left and right line.

+ angle = self.average_angle(quad)

+

+ xy_in_poly = np.argwhere(tcl_mask == 1)

+ for y, x in xy_in_poly:

+ point = (x, y)

+ line = self.theta_line_cross_point(angle, point)

+ cross_point_upper = self.line_cross_point(up_line, line)

+ cross_point_lower = self.line_cross_point(lower_line, line)

+ ##FIX, offset reverse

+ upper_offset_x, upper_offset_y = cross_point_upper - point

+ lower_offset_x, lower_offset_y = cross_point_lower - point

+ tbo_map[y, x, 0] = upper_offset_y

+ tbo_map[y, x, 1] = upper_offset_x

+ tbo_map[y, x, 2] = lower_offset_y

+ tbo_map[y, x, 3] = lower_offset_x

+ tbo_map[y, x, 4] = 1.0 / max(min(quad_h, quad_w), 1.0) * 2

+ return tbo_map

+

+ def poly2quads(self, poly):

+ """

+ Split poly into quads.

+ """

+ quad_list = []

+ point_num = poly.shape[0]

+

+ # point pair

+ point_pair_list = []

+ for idx in range(point_num // 2):

+ point_pair = [poly[idx], poly[point_num - 1 - idx]]

+ point_pair_list.append(point_pair)

+

+ quad_num = point_num // 2 - 1

+ for idx in range(quad_num):

+ # reshape and adjust to clock-wise

+ quad_list.append((np.array(point_pair_list)[[idx, idx + 1]]

+ ).reshape(4, 2)[[0, 2, 3, 1]])

+

+ return np.array(quad_list)

+

+ def rotate_im_poly(self, im, text_polys):

+ """

+ rotate image with 90 / 180 / 270 degre

+ """

+ im_w, im_h = im.shape[1], im.shape[0]

+ dst_im = im.copy()

+ dst_polys = []

+ rand_degree_ratio = np.random.rand()

+ rand_degree_cnt = 1

+ if rand_degree_ratio > 0.5:

+ rand_degree_cnt = 3

+ for i in range(rand_degree_cnt):

+ dst_im = np.rot90(dst_im)

+ rot_degree = -90 * rand_degree_cnt

+ rot_angle = rot_degree * math.pi / 180.0

+ n_poly = text_polys.shape[0]

+ cx, cy = 0.5 * im_w, 0.5 * im_h

+ ncx, ncy = 0.5 * dst_im.shape[1], 0.5 * dst_im.shape[0]

+ for i in range(n_poly):

+ wordBB = text_polys[i]

+ poly = []

+ for j in range(4): # 16->4

+ sx, sy = wordBB[j][0], wordBB[j][1]

+ dx = math.cos(rot_angle) * (sx - cx) - math.sin(rot_angle) * (

+ sy - cy) + ncx

+ dy = math.sin(rot_angle) * (sx - cx) + math.cos(rot_angle) * (

+ sy - cy) + ncy

+ poly.append([dx, dy])

+ dst_polys.append(poly)

+ return dst_im, np.array(dst_polys, dtype=np.float32)

+

+ def __call__(self, data):

+ input_size = 512

+ im = data['image']

+ text_polys = data['polys']

+ text_tags = data['tags']

+ text_strs = data['strs']

+ h, w, _ = im.shape

+ text_polys, text_tags, hv_tags = self.check_and_validate_polys(

+ text_polys, text_tags, (h, w))

+ if text_polys.shape[0] <= 0:

+ return None

+ # set aspect ratio and keep area fix

+ asp_scales = np.arange(1.0, 1.55, 0.1)

+ asp_scale = np.random.choice(asp_scales)

+ if np.random.rand() < 0.5:

+ asp_scale = 1.0 / asp_scale

+ asp_scale = math.sqrt(asp_scale)

+

+ asp_wx = asp_scale

+ asp_hy = 1.0 / asp_scale

+ im = cv2.resize(im, dsize=None, fx=asp_wx, fy=asp_hy)

+ text_polys[:, :, 0] *= asp_wx

+ text_polys[:, :, 1] *= asp_hy

+

+ h, w, _ = im.shape

+ if max(h, w) > 2048:

+ rd_scale = 2048.0 / max(h, w)

+ im = cv2.resize(im, dsize=None, fx=rd_scale, fy=rd_scale)

+ text_polys *= rd_scale

+ h, w, _ = im.shape

+ if min(h, w) < 16:

+ return None

+

+ # no background

+ im, text_polys, text_tags, hv_tags, text_strs = self.crop_area(

+ im,

+ text_polys,

+ text_tags,

+ hv_tags,

+ text_strs,

+ crop_background=False)

+

+ if text_polys.shape[0] == 0:

+ return None

+ # # continue for all ignore case

+ if np.sum((text_tags * 1.0)) >= text_tags.size:

+ return None

+ new_h, new_w, _ = im.shape

+ if (new_h is None) or (new_w is None):

+ return None

+ # resize image

+ std_ratio = float(input_size) / max(new_w, new_h)

+ rand_scales = np.array(

+ [0.25, 0.375, 0.5, 0.625, 0.75, 0.875, 1.0, 1.0, 1.0, 1.0, 1.0])

+ rz_scale = std_ratio * np.random.choice(rand_scales)

+ im = cv2.resize(im, dsize=None, fx=rz_scale, fy=rz_scale)

+ text_polys[:, :, 0] *= rz_scale

+ text_polys[:, :, 1] *= rz_scale

+

+ # add gaussian blur

+ if np.random.rand() < 0.1 * 0.5:

+ ks = np.random.permutation(5)[0] + 1

+ ks = int(ks / 2) * 2 + 1

+ im = cv2.GaussianBlur(im, ksize=(ks, ks), sigmaX=0, sigmaY=0)

+ # add brighter

+ if np.random.rand() < 0.1 * 0.5:

+ im = im * (1.0 + np.random.rand() * 0.5)

+ im = np.clip(im, 0.0, 255.0)

+ # add darker

+ if np.random.rand() < 0.1 * 0.5:

+ im = im * (1.0 - np.random.rand() * 0.5)

+ im = np.clip(im, 0.0, 255.0)

+

+ # Padding the im to [input_size, input_size]

+ new_h, new_w, _ = im.shape

+ if min(new_w, new_h) < input_size * 0.5:

+ return None

+ im_padded = np.ones((input_size, input_size, 3), dtype=np.float32)

+ im_padded[:, :, 2] = 0.485 * 255

+ im_padded[:, :, 1] = 0.456 * 255

+ im_padded[:, :, 0] = 0.406 * 255

+

+ # Random the start position

+ del_h = input_size - new_h

+ del_w = input_size - new_w

+ sh, sw = 0, 0

+ if del_h > 1:

+ sh = int(np.random.rand() * del_h)

+ if del_w > 1:

+ sw = int(np.random.rand() * del_w)

+

+ # Padding

+ im_padded[sh:sh + new_h, sw:sw + new_w, :] = im.copy()

+ text_polys[:, :, 0] += sw

+ text_polys[:, :, 1] += sh

+

+ score_map, score_label_map, border_map, direction_map, training_mask, \

+ pos_list, pos_mask, label_list, score_label_map_text_label = self.generate_tcl_ctc_label(input_size,

+ input_size,

+ text_polys,

+ text_tags,

+ text_strs, 0.25)

+ if len(label_list) <= 0: # eliminate negative samples

+ return None

+ pos_list_temp = np.zeros([64, 3])

+ pos_mask_temp = np.zeros([64, 1])

+ label_list_temp = np.zeros([self.max_text_length, 1]) + self.pad_num

+

+ for i, label in enumerate(label_list):

+ n = len(label)

+ if n > self.max_text_length:

+ label_list[i] = label[:self.max_text_length]

+ continue

+ while n < self.max_text_length:

+ label.append([self.pad_num])

+ n += 1

+

+ for i in range(len(label_list)):

+ label_list[i] = np.array(label_list[i])

+

+ if len(pos_list) <= 0 or len(pos_list) > self.max_text_nums:

+ return None

+ for __ in range(self.max_text_nums - len(pos_list), 0, -1):

+ pos_list.append(pos_list_temp)

+ pos_mask.append(pos_mask_temp)

+ label_list.append(label_list_temp)

+

+ if self.img_id == self.batch_size - 1:

+ self.img_id = 0

+ else:

+ self.img_id += 1

+

+ im_padded[:, :, 2] -= 0.485 * 255

+ im_padded[:, :, 1] -= 0.456 * 255

+ im_padded[:, :, 0] -= 0.406 * 255

+ im_padded[:, :, 2] /= (255.0 * 0.229)

+ im_padded[:, :, 1] /= (255.0 * 0.224)

+ im_padded[:, :, 0] /= (255.0 * 0.225)

+ im_padded = im_padded.transpose((2, 0, 1))

+ images = im_padded[::-1, :, :]

+ tcl_maps = score_map[np.newaxis, :, :]

+ tcl_label_maps = score_label_map[np.newaxis, :, :]

+ border_maps = border_map.transpose((2, 0, 1))

+ direction_maps = direction_map.transpose((2, 0, 1))

+ training_masks = training_mask[np.newaxis, :, :]

+ pos_list = np.array(pos_list)

+ pos_mask = np.array(pos_mask)

+ label_list = np.array(label_list)

+ data['images'] = images

+ data['tcl_maps'] = tcl_maps

+ data['tcl_label_maps'] = tcl_label_maps

+ data['border_maps'] = border_maps

+ data['direction_maps'] = direction_maps

+ data['training_masks'] = training_masks

+ data['label_list'] = label_list

+ data['pos_list'] = pos_list

+ data['pos_mask'] = pos_mask

+ return data

diff --git a/ppocr/data/pgnet_dataset.py b/ppocr/data/pgnet_dataset.py

new file mode 100644

index 0000000000000000000000000000000000000000..ae0638350ad02f10202a67bc6cd531daf742f984

--- /dev/null

+++ b/ppocr/data/pgnet_dataset.py

@@ -0,0 +1,175 @@

+# copyright (c) 2021 PaddlePaddle Authors. All Rights Reserve.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import numpy as np

+import os

+from paddle.io import Dataset

+from .imaug import transform, create_operators

+import random

+

+

+class PGDataSet(Dataset):

+ def __init__(self, config, mode, logger, seed=None):

+ super(PGDataSet, self).__init__()

+

+ self.logger = logger

+ self.seed = seed

+ self.mode = mode

+ global_config = config['Global']

+ dataset_config = config[mode]['dataset']

+ loader_config = config[mode]['loader']

+

+ label_file_list = dataset_config.pop('label_file_list')

+ data_source_num = len(label_file_list)

+ ratio_list = dataset_config.get("ratio_list", [1.0])

+ if isinstance(ratio_list, (float, int)):

+ ratio_list = [float(ratio_list)] * int(data_source_num)

+ self.data_format = dataset_config.get('data_format', 'icdar')

+ assert len(

+ ratio_list

+ ) == data_source_num, "The length of ratio_list should be the same as the file_list."

+ self.do_shuffle = loader_config['shuffle']

+

+ logger.info("Initialize indexs of datasets:%s" % label_file_list)

+ self.data_lines = self.get_image_info_list(label_file_list, ratio_list,

+ self.data_format)

+ self.data_idx_order_list = list(range(len(self.data_lines)))

+ if mode.lower() == "train":

+ self.shuffle_data_random()

+

+ self.ops = create_operators(dataset_config['transforms'], global_config)

+

+ def shuffle_data_random(self):

+ if self.do_shuffle:

+ random.seed(self.seed)

+ random.shuffle(self.data_lines)

+ return

+

+ def extract_polys(self, poly_txt_path):

+ """

+ Read text_polys, txt_tags, txts from give txt file.

+ """

+ text_polys, txt_tags, txts = [], [], []

+ with open(poly_txt_path) as f:

+ for line in f.readlines():

+ poly_str, txt = line.strip().split('\t')

+ poly = list(map(float, poly_str.split(',')))

+ if self.mode.lower() == "eval":

+ while len(poly) < 100:

+ poly.append(-1)

+ text_polys.append(

+ np.array(

+ poly, dtype=np.float32).reshape(-1, 2))

+ txts.append(txt)

+ txt_tags.append(txt == '###')

+

+ return np.array(list(map(np.array, text_polys))), \

+ np.array(txt_tags, dtype=np.bool), txts

+

+ def extract_info_textnet(self, im_fn, img_dir=''):

+ """

+ Extract information from line in textnet format.

+ """

+ info_list = im_fn.split('\t')

+ img_path = ''

+ for ext in [

+ 'jpg', 'bmp', 'png', 'jpeg', 'rgb', 'tif', 'tiff', 'gif', 'JPG'

+ ]:

+ if os.path.exists(os.path.join(img_dir, info_list[0] + "." + ext)):

+ img_path = os.path.join(img_dir, info_list[0] + "." + ext)

+ break

+

+ if img_path == '':

+ print('Image {0} NOT found in {1}, and it will be ignored.'.format(

+ info_list[0], img_dir))

+

+ nBox = (len(info_list) - 1) // 9

+ wordBBs, txts, txt_tags = [], [], []

+ for n in range(0, nBox):

+ wordBB = list(map(float, info_list[n * 9 + 1:(n + 1) * 9]))

+ txt = info_list[(n + 1) * 9]

+ wordBBs.append([[wordBB[0], wordBB[1]], [wordBB[2], wordBB[3]],

+ [wordBB[4], wordBB[5]], [wordBB[6], wordBB[7]]])

+ txts.append(txt)

+ if txt == '###':

+ txt_tags.append(True)

+ else:

+ txt_tags.append(False)

+ return img_path, np.array(wordBBs, dtype=np.float32), txt_tags, txts

+

+ def get_image_info_list(self, file_list, ratio_list, data_format='textnet'):

+ if isinstance(file_list, str):

+ file_list = [file_list]

+ data_lines = []

+ for idx, data_source in enumerate(file_list):

+ image_files = []

+ if data_format == 'icdar':

+ image_files = [(data_source, x) for x in

+ os.listdir(os.path.join(data_source, 'rgb'))

+ if x.split('.')[-1] in [

+ 'jpg', 'bmp', 'png', 'jpeg', 'rgb', 'tif',

+ 'tiff', 'gif', 'JPG'

+ ]]

+ elif data_format == 'textnet':

+ with open(data_source) as f:

+ image_files = [(data_source, x.strip())

+ for x in f.readlines()]

+ else:

+ print("Unrecognized data format...")

+ exit(-1)

+ random.seed(self.seed)

+ image_files = random.sample(

+ image_files, round(len(image_files) * ratio_list[idx]))

+ data_lines.extend(image_files)

+ return data_lines

+

+ def __getitem__(self, idx):

+ file_idx = self.data_idx_order_list[idx]

+ data_path, data_line = self.data_lines[file_idx]

+ try:

+ if self.data_format == 'icdar':

+ im_path = os.path.join(data_path, 'rgb', data_line)

+ if self.mode.lower() == "eval":

+ poly_path = os.path.join(data_path, 'poly_gt',

+ data_line.split('.')[0] + '.txt')

+ else:

+ poly_path = os.path.join(data_path, 'poly',

+ data_line.split('.')[0] + '.txt')

+ text_polys, text_tags, text_strs = self.extract_polys(poly_path)

+ else:

+ image_dir = os.path.join(os.path.dirname(data_path), 'image')

+ im_path, text_polys, text_tags, text_strs = self.extract_info_textnet(

+ data_line, image_dir)

+

+ data = {

+ 'img_path': im_path,

+ 'polys': text_polys,

+ 'tags': text_tags,

+ 'strs': text_strs

+ }

+ with open(data['img_path'], 'rb') as f:

+ img = f.read()

+ data['image'] = img

+ outs = transform(data, self.ops)

+

+ except Exception as e:

+ self.logger.error(

+ "When parsing line {}, error happened with msg: {}".format(

+ self.data_idx_order_list[idx], e))

+ outs = None

+ if outs is None:

+ return self.__getitem__(np.random.randint(self.__len__()))

+ return outs

+

+ def __len__(self):

+ return len(self.data_idx_order_list)

diff --git a/ppocr/losses/__init__.py b/ppocr/losses/__init__.py

index 3881abf7741b8be78306bd070afb11df15606327..223ae6b1da996478ac607e29dd37173ca51d9903 100755

--- a/ppocr/losses/__init__.py

+++ b/ppocr/losses/__init__.py

@@ -29,10 +29,11 @@ def build_loss(config):

# cls loss

from .cls_loss import ClsLoss

+ # e2e loss

+ from .e2e_pg_loss import PGLoss

support_dict = [

'DBLoss', 'EASTLoss', 'SASTLoss', 'CTCLoss', 'ClsLoss', 'AttentionLoss',

- 'SRNLoss'

- ]

+ 'SRNLoss', 'PGLoss']

config = copy.deepcopy(config)

module_name = config.pop('name')

diff --git a/ppocr/losses/e2e_pg_loss.py b/ppocr/losses/e2e_pg_loss.py

new file mode 100644

index 0000000000000000000000000000000000000000..10a8ed0aa907123b155976ba498426604f23c2b0

--- /dev/null

+++ b/ppocr/losses/e2e_pg_loss.py

@@ -0,0 +1,140 @@

+# copyright (c) 2021 PaddlePaddle Authors. All Rights Reserve.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+from paddle import nn

+import paddle

+

+from .det_basic_loss import DiceLoss

+from ppocr.utils.e2e_utils.extract_batchsize import pre_process

+

+

+class PGLoss(nn.Layer):

+ def __init__(self,

+ tcl_bs,

+ max_text_length,

+ max_text_nums,

+ pad_num,

+ eps=1e-6,

+ **kwargs):

+ super(PGLoss, self).__init__()

+ self.tcl_bs = tcl_bs

+ self.max_text_nums = max_text_nums

+ self.max_text_length = max_text_length

+ self.pad_num = pad_num

+ self.dice_loss = DiceLoss(eps=eps)

+

+ def border_loss(self, f_border, l_border, l_score, l_mask):

+ l_border_split, l_border_norm = paddle.tensor.split(

+ l_border, num_or_sections=[4, 1], axis=1)

+ f_border_split = f_border

+ b, c, h, w = l_border_norm.shape

+ l_border_norm_split = paddle.expand(

+ x=l_border_norm, shape=[b, 4 * c, h, w])

+ b, c, h, w = l_score.shape

+ l_border_score = paddle.expand(x=l_score, shape=[b, 4 * c, h, w])

+ b, c, h, w = l_mask.shape

+ l_border_mask = paddle.expand(x=l_mask, shape=[b, 4 * c, h, w])

+ border_diff = l_border_split - f_border_split

+ abs_border_diff = paddle.abs(border_diff)

+ border_sign = abs_border_diff < 1.0

+ border_sign = paddle.cast(border_sign, dtype='float32')

+ border_sign.stop_gradient = True

+ border_in_loss = 0.5 * abs_border_diff * abs_border_diff * border_sign + \

+ (abs_border_diff - 0.5) * (1.0 - border_sign)

+ border_out_loss = l_border_norm_split * border_in_loss

+ border_loss = paddle.sum(border_out_loss * l_border_score * l_border_mask) / \

+ (paddle.sum(l_border_score * l_border_mask) + 1e-5)

+ return border_loss

+

+ def direction_loss(self, f_direction, l_direction, l_score, l_mask):

+ l_direction_split, l_direction_norm = paddle.tensor.split(

+ l_direction, num_or_sections=[2, 1], axis=1)

+ f_direction_split = f_direction

+ b, c, h, w = l_direction_norm.shape

+ l_direction_norm_split = paddle.expand(

+ x=l_direction_norm, shape=[b, 2 * c, h, w])

+ b, c, h, w = l_score.shape

+ l_direction_score = paddle.expand(x=l_score, shape=[b, 2 * c, h, w])

+ b, c, h, w = l_mask.shape

+ l_direction_mask = paddle.expand(x=l_mask, shape=[b, 2 * c, h, w])

+ direction_diff = l_direction_split - f_direction_split

+ abs_direction_diff = paddle.abs(direction_diff)

+ direction_sign = abs_direction_diff < 1.0

+ direction_sign = paddle.cast(direction_sign, dtype='float32')

+ direction_sign.stop_gradient = True

+ direction_in_loss = 0.5 * abs_direction_diff * abs_direction_diff * direction_sign + \

+ (abs_direction_diff - 0.5) * (1.0 - direction_sign)

+ direction_out_loss = l_direction_norm_split * direction_in_loss

+ direction_loss = paddle.sum(direction_out_loss * l_direction_score * l_direction_mask) / \

+ (paddle.sum(l_direction_score * l_direction_mask) + 1e-5)

+ return direction_loss

+

+ def ctcloss(self, f_char, tcl_pos, tcl_mask, tcl_label, label_t):

+ f_char = paddle.transpose(f_char, [0, 2, 3, 1])

+ tcl_pos = paddle.reshape(tcl_pos, [-1, 3])

+ tcl_pos = paddle.cast(tcl_pos, dtype=int)

+ f_tcl_char = paddle.gather_nd(f_char, tcl_pos)

+ f_tcl_char = paddle.reshape(f_tcl_char,

+ [-1, 64, 37]) # len(Lexicon_Table)+1

+ f_tcl_char_fg, f_tcl_char_bg = paddle.split(f_tcl_char, [36, 1], axis=2)

+ f_tcl_char_bg = f_tcl_char_bg * tcl_mask + (1.0 - tcl_mask) * 20.0

+ b, c, l = tcl_mask.shape

+ tcl_mask_fg = paddle.expand(x=tcl_mask, shape=[b, c, 36 * l])

+ tcl_mask_fg.stop_gradient = True

+ f_tcl_char_fg = f_tcl_char_fg * tcl_mask_fg + (1.0 - tcl_mask_fg) * (

+ -20.0)

+ f_tcl_char_mask = paddle.concat([f_tcl_char_fg, f_tcl_char_bg], axis=2)

+ f_tcl_char_ld = paddle.transpose(f_tcl_char_mask, (1, 0, 2))

+ N, B, _ = f_tcl_char_ld.shape

+ input_lengths = paddle.to_tensor([N] * B, dtype='int64')

+ cost = paddle.nn.functional.ctc_loss(

+ log_probs=f_tcl_char_ld,

+ labels=tcl_label,

+ input_lengths=input_lengths,

+ label_lengths=label_t,

+ blank=self.pad_num,

+ reduction='none')

+ cost = cost.mean()

+ return cost

+

+ def forward(self, predicts, labels):

+ images, tcl_maps, tcl_label_maps, border_maps \

+ , direction_maps, training_masks, label_list, pos_list, pos_mask = labels

+ # for all the batch_size

+ pos_list, pos_mask, label_list, label_t = pre_process(

+ label_list, pos_list, pos_mask, self.max_text_length,

+ self.max_text_nums, self.pad_num, self.tcl_bs)

+

+ f_score, f_border, f_direction, f_char = predicts['f_score'], predicts['f_border'], predicts['f_direction'], \

+ predicts['f_char']

+ score_loss = self.dice_loss(f_score, tcl_maps, training_masks)

+ border_loss = self.border_loss(f_border, border_maps, tcl_maps,

+ training_masks)

+ direction_loss = self.direction_loss(f_direction, direction_maps,

+ tcl_maps, training_masks)

+ ctc_loss = self.ctcloss(f_char, pos_list, pos_mask, label_list, label_t)

+ loss_all = score_loss + border_loss + direction_loss + 5 * ctc_loss

+

+ losses = {

+ 'loss': loss_all,

+ "score_loss": score_loss,

+ "border_loss": border_loss,

+ "direction_loss": direction_loss,

+ "ctc_loss": ctc_loss

+ }

+ return losses

diff --git a/ppocr/metrics/__init__.py b/ppocr/metrics/__init__.py

index a0e7d91207277d5c1696d99473f6bc5f685591fc..f913010dbd994633d3df1cf996abb994d246a11a 100644

--- a/ppocr/metrics/__init__.py

+++ b/ppocr/metrics/__init__.py

@@ -26,8 +26,9 @@ def build_metric(config):

from .det_metric import DetMetric

from .rec_metric import RecMetric

from .cls_metric import ClsMetric

+ from .e2e_metric import E2EMetric

- support_dict = ['DetMetric', 'RecMetric', 'ClsMetric']

+ support_dict = ['DetMetric', 'RecMetric', 'ClsMetric', 'E2EMetric']

config = copy.deepcopy(config)

module_name = config.pop('name')

diff --git a/ppocr/metrics/e2e_metric.py b/ppocr/metrics/e2e_metric.py

new file mode 100644

index 0000000000000000000000000000000000000000..684d77421c659d4150ea4a28a99b4ae43d678b69

--- /dev/null

+++ b/ppocr/metrics/e2e_metric.py

@@ -0,0 +1,81 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+__all__ = ['E2EMetric']

+

+from ppocr.utils.e2e_metric.Deteval import get_socre, combine_results

+from ppocr.utils.e2e_utils.extract_textpoint import get_dict

+

+

+class E2EMetric(object):

+ def __init__(self,

+ character_dict_path,

+ main_indicator='f_score_e2e',

+ **kwargs):

+ self.label_list = get_dict(character_dict_path)

+ self.max_index = len(self.label_list)

+ self.main_indicator = main_indicator

+ self.reset()

+