diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/PCB\345\255\227\347\254\246\350\257\206\345\210\253.md" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/PCB\345\255\227\347\254\246\350\257\206\345\210\253.md"

new file mode 100644

index 0000000000000000000000000000000000000000..a5052e2897ab9f09a6ed7b747f9fa1198af2a8ab

--- /dev/null

+++ "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/PCB\345\255\227\347\254\246\350\257\206\345\210\253.md"

@@ -0,0 +1,648 @@

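+# PCB Character Recognition Based on PP-OCRv3
+
+- [1. Project Introduction](#1-project-introduction)
+- [2. Installation](#2-installation)
+- [3. Data Preparation](#3-data-preparation)
+- [4. Text Detection](#4-text-detection)
+  - [4.1 Direct Evaluation with the Pretrained Model](#41-direct-evaluation-with-the-pretrained-model)
+  - [4.2 Pretrained Model with a Padded Validation Set](#42-pretrained-model-with-a-padded-validation-set)
+  - [4.3 Pretrained Model with Fine-tuning](#43-pretrained-model-with-fine-tuning)
+- [5. Text Recognition](#5-text-recognition)
+  - [5.1 Direct Evaluation with the Pretrained Model](#51-direct-evaluation-with-the-pretrained-model)
+  - [5.2 Three Fine-tuning Schemes](#52-three-fine-tuning-schemes)
+- [6. Model Export](#6-model-export)
+- [7. End-to-End Evaluation](#7-end-to-end-evaluation)
+- [8. Jetson Deployment](#8-jetson-deployment)
+- [9. Summary](#9-summary)
+- [More Resources](#more-resources)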

+

+# 1. 项目介绍

+

+印刷电路板(PCB)是电子产品中的核心器件,对于板件质量的测试与监控是生产中必不可少的环节。在一些场景中,通过PCB中信号灯颜色和文字组合可以定位PCB局部模块质量问题,PCB文字识别中存在如下难点:

+

+- 裁剪出的PCB图片宽高比例较小

+- 文字区域整体面积也较小

+- 包含垂直、水平多种方向文本

+

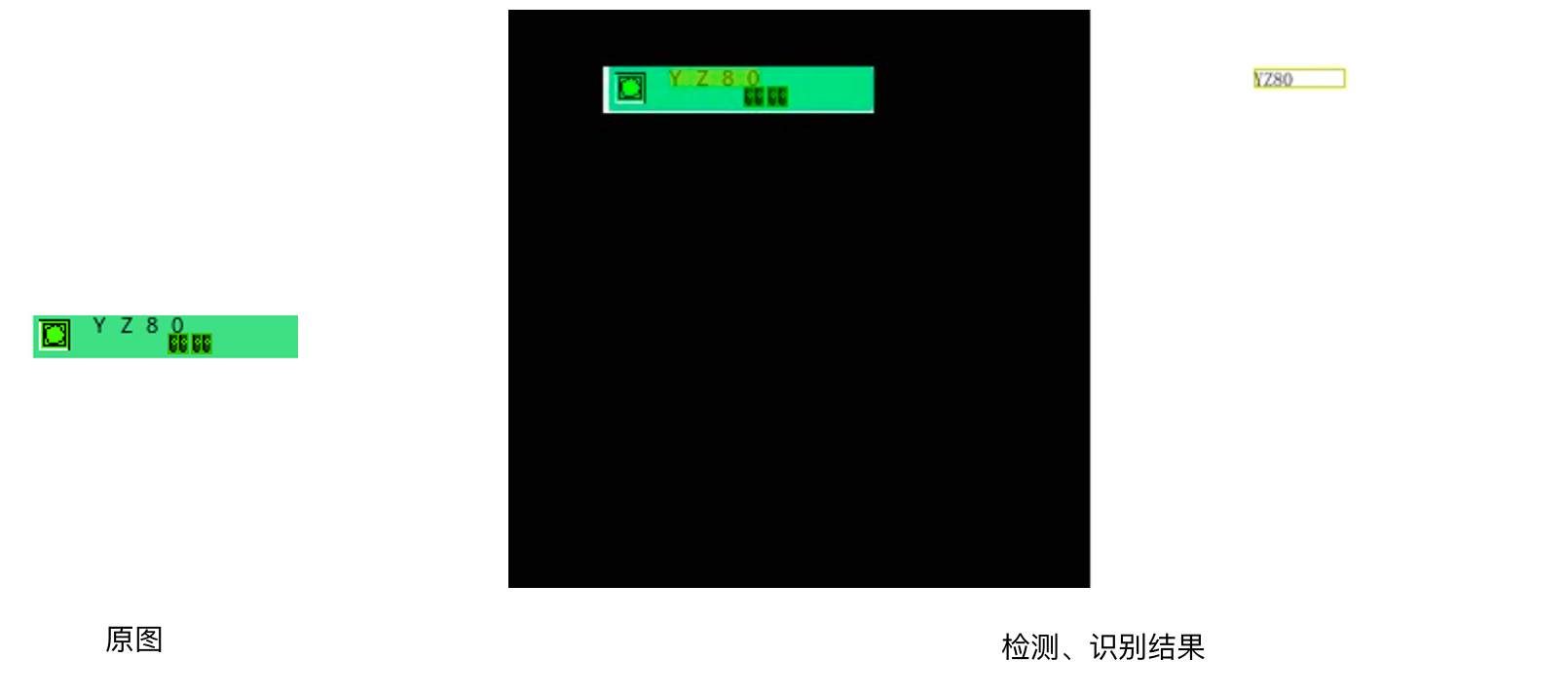

+针对本场景,PaddleOCR基于全新的PP-OCRv3通过合成数据、微调以及其他场景适配方法完成小字符文本识别任务,满足企业上线要求。PCB检测、识别效果如 **图1** 所示:

+

+

+图1 PCB检测识别效果

+

+注:欢迎在AIStudio领取免费算力体验线上实训,项目链接: [基于PP-OCRv3实现PCB字符识别](https://aistudio.baidu.com/aistudio/projectdetail/4008973)

+

+# 2. Installation
+
+Download the PaddleOCR source code and install its dependencies.
+
+```bash
+# Run this if PaddleOCR still needs to be installed or updated
+git clone https://github.com/PaddlePaddle/PaddleOCR.git
+# Alternatively, clone from Gitee:
+# git clone https://gitee.com/PaddlePaddle/PaddleOCR
+```
+
+```bash
+# Install the dependencies
+pip install -r /home/aistudio/PaddleOCR/requirements.txt
+```

+

+# 3. 数据准备

+

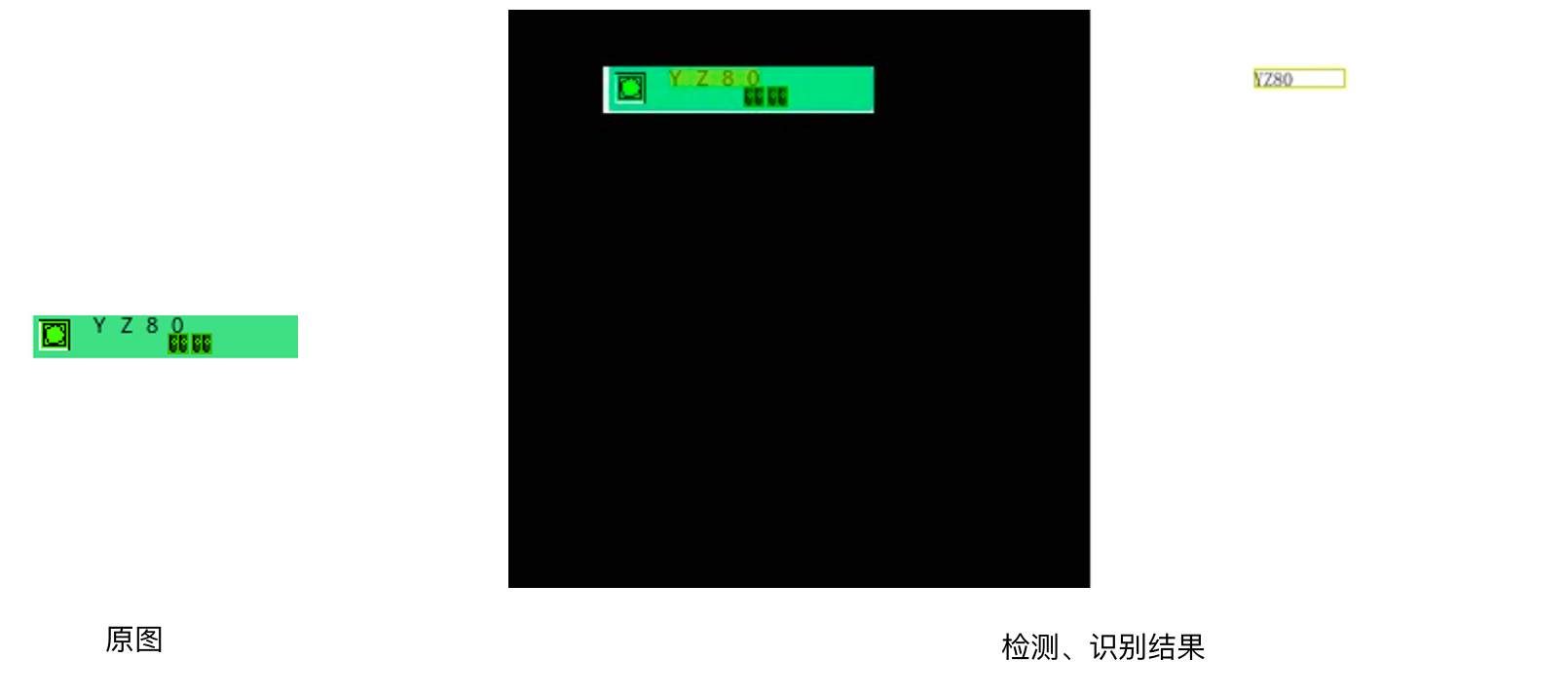

+我们通过图片合成工具生成 **图2** 所示的PCB图片,整图只有高25、宽150左右、文字区域高9、宽45左右,包含垂直和水平2种方向的文本:

+

+

+图2 数据集示例

+

+暂时不开源生成的PCB数据集,但是通过更换背景,通过如下代码生成数据即可:

+

+```

+cd gen_data

+python3 gen.py --num_img=10

+```

+

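+Parameters of the generation script:
+
+```
+num_img: number of images to generate
+font_min_size, font_max_size: minimum and maximum font size
+bg_path: directory of background images for the text region
+det_bg_path: directory of background images for the whole image
+fonts_path: font directory
+corpus_path: corpus directory
+output_dir: directory where the generated images are saved
+```
+
+To make it easy to run through the experiments, we provide **100 images** with the same size and text, as shown in **Figure 3**. Extract the dataset with the following command:
+
+Figure 3: Example from the dataset provided with this tutorial
+
+```bash
+tar xf ./data/data148165/dataset.tar -C ./
+```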

+

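+When generating the dataset, the labels must be written in the formats required for detection and recognition training:
+
+- **Text detection**
+
+The annotation file uses the following format, with the two fields separated by '\t':
+
+```
+" image file name    json.dumps-encoded image annotation"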

+ch4_test_images/img_61.jpg [{"transcription": "MASA", "points": [[310, 104], [416, 141], [418, 216], [312, 179]]}, {...}]

+```

+

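+Before json.dumps encoding, the image annotation is a list of dicts. In each dict, `points` holds the (x, y) coordinates of the four corners of the text box, listed clockwise starting from the top-left corner, and `transcription` holds the text in that box. ***If `transcription` is "###", the box is invalid and is skipped during training.***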

+
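To sanity-check a detection label file before training, each line can be split on its first tab and the JSON part decoded. The helper below is a hypothetical sketch, not part of PaddleOCR, that follows the format described above. It drops `###` boxes so the remaining boxes are easy to inspect. As far as we understand, PaddleOCR's own label encoder keeps such boxes as ignored regions instead.

```python
import json


def parse_det_label_line(line):
    """Split one detection label line into (image_path, [(text, points), ...]).

    Boxes whose transcription is "###" are treated as invalid and dropped.
    """
    image_path, annotation = line.rstrip("\n").split("\t", 1)
    boxes = []
    for item in json.loads(annotation):
        points = item["points"]
        # Each box must have four (x, y) corners, clockwise from the top-left one
        if len(points) != 4 or any(len(p) != 2 for p in points):
            raise ValueError(f"expected 4 (x, y) points, got {points!r}")
        if item["transcription"] == "###":
            continue
        boxes.append((item["transcription"], points))
    return image_path, boxes


line = ('imgs/0000.jpg\t[{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}, '
        '{"transcription": "###", "points": [[0, 0], [5, 0], [5, 5], [0, 5]]}]')
path, boxes = parse_det_label_line(line)
print(path, boxes)
```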

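+- **Text recognition**
+
+The annotation file uses the following format. By default, the image path and the image label in the txt file must be separated by '\t'. Any other separator will cause errors during training.
+
+```
+" image file name    image label "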

+

+train_data/rec/train/word_001.jpg 简单可依赖

+train_data/rec/train/word_002.jpg 用科技让复杂的世界更简单

+...

+```

+
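A small checker can catch separator mistakes before they surface as training errors. The function below is a hypothetical helper, not part of PaddleOCR, and assumes the default '\t' delimiter described above. It accepts spaces inside the label and rejects lines that do not split into exactly a path and a label.

```python
def parse_rec_label_line(line, delimiter="\t"):
    """Split one recognition label line into (image_path, label).

    Raises ValueError when the line does not contain exactly one delimiter,
    e.g. when spaces were used instead of a tab.
    """
    parts = line.rstrip("\n").split(delimiter)
    if len(parts) != 2 or not parts[0] or not parts[1]:
        raise ValueError(f"expected 'path{delimiter}label', got {line!r}")
    return parts[0], parts[1]


print(parse_rec_label_line("rec_imgs/0020.jpg\tE295\n"))
try:
    parse_rec_label_line("rec_imgs/0021.jpg E295")
except ValueError as err:
    print("bad line:", err)
```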

+

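+# 4. Text Detection
+
+We use the PP-OCRv3 models from [PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR), the PaddlePaddle OCR toolkit, for text detection and recognition. PP-OCRv3 upgrades the detection and recognition models in 9 aspects in total:
+
+- The PP-OCRv3 detection model upgrades the CML (Collaborative Mutual Learning) text detection distillation strategy of PP-OCRv2 and further optimizes both the teacher and the student models. For the teacher model, it introduces LK-PAN, a PAN structure with a large receptive field, and adopts the DML distillation strategy. For the student model, it introduces RSE-FPN, an FPN structure with a residual attention mechanism.
+
+- The PP-OCRv3 recognition module is optimized from the SVTR text recognition algorithm. SVTR drops the RNN structure and introduces a Transformer structure to mine the context of text-line images more effectively, which improves recognition. PP-OCRv3 speeds up the model and improves accuracy in 6 ways: the lightweight recognition network SVTR_LCNet, a training strategy in which an attention loss guides the CTC loss, TextConAug (a data augmentation strategy that mines textual context), a TextRotNet self-supervised pretrained model, the UDML unified mutual learning strategy, and UIM, a scheme for mining unlabeled data.
+
+For more details, see the PP-OCRv3 [technical report](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/PP-OCRv3_introduction.md).
+
+We train and evaluate the detection model with **3 schemes**:
+
+- **Direct evaluation of the PP-OCRv3 English ultra-lightweight detection pretrained model**
+- PP-OCRv3 English ultra-lightweight detection pretrained model + **padded validation set**, evaluated directly
+- PP-OCRv3 English ultra-lightweight detection pretrained model + **fine-tuning**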

+

+## **4.1 Direct Evaluation with the Pretrained Model**
+
+We first evaluate the pretrained model provided by PaddleOCR on the validation set. If the metrics already meet the requirements, the pretrained model can be used as is, and no training is needed.
+
+The steps for direct evaluation are as follows:
+
+**1) Download the pretrained model**
+
+PaddleOCR provides the PP-OCR model series. Some of the models are listed in the table below:
+
+| Description | Model name | Recommended scenario | Detection model | Direction classifier | Recognition model |
+| --- | --- | --- | --- | --- | --- |
+| Chinese and English ultra-lightweight PP-OCRv3 model (16.2M) | ch_PP-OCRv3_xx | Mobile & server | [inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar) |
+| English ultra-lightweight PP-OCRv3 model (13.4M) | en_PP-OCRv3_xx | Mobile & server | [inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_distill_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_train.tar) |
+| Chinese and English ultra-lightweight PP-OCRv2 model (13.0M) | ch_PP-OCRv2_xx | Mobile & server | [inference model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_distill_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_train.tar) |
+| Chinese and English ultra-lightweight PP-OCR mobile model (9.4M) | ch_ppocr_mobile_v2.0_xx | Mobile & server | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_det_infer.tar) / [pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_det_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_rec_infer.tar) / [pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_rec_pre.tar) |
+| Chinese and English general PP-OCR server model (143.4M) | ch_ppocr_server_v2.0_xx | Server | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_infer.tar) / [pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_infer.tar) / [pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_pre.tar) |

+

+For more models (including multilingual ones), see [PP-OCR series model downloads](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/models_list.md).
+
+Here we use the PP-OCRv3 English ultra-lightweight detection model. Download and extract the pretrained model:
+
+```bash
+# To use a different model, just update the download link and the extraction command
+cd /home/aistudio/PaddleOCR
+mkdir -p pretrain_models
+cd pretrain_models
+# Download the English pretrained model
+wget https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_distill_train.tar
+tar xf en_PP-OCRv3_det_distill_train.tar && rm -rf en_PP-OCRv3_det_distill_train.tar
+cd ..
+```

+

+**Model evaluation**
+
+First, modify the following fields in the configuration file `configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml`:
+```
+Eval.dataset.data_dir: validation image directory, '/home/aistudio/dataset'
+Eval.dataset.label_file_list: validation label file, '/home/aistudio/dataset/det_gt_val.txt'
+Eval.dataset.transforms.DetResizeForTest: resize settings
+  limit_side_len: 48
+  limit_type: 'min'
+```
+
+Then evaluate on the validation set:
+
+```bash
+cd /home/aistudio/PaddleOCR
+python tools/eval.py \
+    -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml \
+    -o Global.checkpoints="./pretrain_models/en_PP-OCRv3_det_distill_train/best_accuracy"
+```

+
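Why does a `limit_side_len` of only 48 matter? With `limit_type: 'min'`, `DetResizeForTest` enlarges the image until its shorter side reaches `limit_side_len`, then rounds both sides to multiples of 32. The sketch below reproduces that rule as we read it in PaddleOCR's `ppocr/data/imaug/operators.py`, covering only the 'min' and 'max' branches. Treat it as an illustration and check the source of your PaddleOCR version.

```python
def det_resize_shape(h, w, limit_side_len, limit_type="min"):
    """Return (resize_h, resize_w) for an h x w image, following our reading of DetResizeForTest."""
    if limit_type == "max":
        # Shrink only if the longer side exceeds the limit
        ratio = limit_side_len / max(h, w) if max(h, w) > limit_side_len else 1.0
    elif limit_type == "min":
        # Enlarge only if the shorter side is below the limit
        ratio = limit_side_len / min(h, w) if min(h, w) < limit_side_len else 1.0
    else:
        raise ValueError(f"unsupported limit_type: {limit_type!r}")
    resize_h = int(h * ratio)
    resize_w = int(w * ratio)
    # Both sides are rounded to a multiple of 32, with 32 as the minimum
    resize_h = max(int(round(resize_h / 32) * 32), 32)
    resize_w = max(int(round(resize_w / 32) * 32), 32)
    return resize_h, resize_w


print(det_resize_shape(25, 150, limit_side_len=48))                      # a raw PCB image
print(det_resize_shape(25, 150, limit_side_len=960, limit_type="max"))   # for comparison
```

For a PCB image of about 25 x 150 pixels, the 'min' rule gives the detector 64 x 288 pixels, whereas a 'max' limit of 960 leaves it at only 32 x 160.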

+## **4.2 预训练模型+验证集padding直接评估**

+

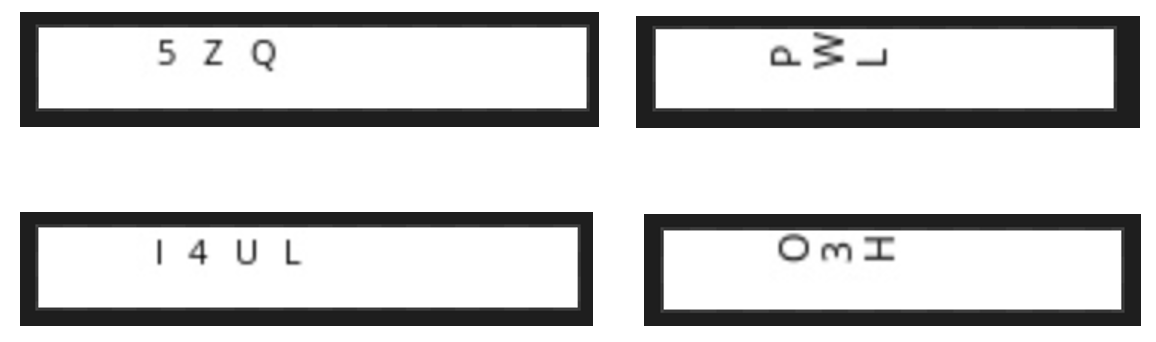

+考虑到PCB图片比较小,宽度只有25左右、高度只有140-170左右,我们在原图的基础上进行padding,再进行检测评估,padding前后效果对比如 **图4** 所示:

+

+

+图4 padding前后对比图

+

+将图片都padding到300*300大小,因为坐标信息发生了变化,我们同时要修改标注文件,在`/home/aistudio/dataset`目录里也提供了padding之后的图片,大家也可以尝试训练和评估:

+
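The tutorial does not say where the original image is placed on the 300*300 canvas or which fill color is used. The sketch below assumes the image is centered on a black canvas, so every label point moves by the same (left, top) offset. All function names are hypothetical, and Pillow is only needed for `pad_image`.

```python
def pad_offsets(img_w, img_h, target=300):
    """(left, top) offset that centers an img_w x img_h image on a target x target canvas."""
    if img_w > target or img_h > target:
        raise ValueError("image is larger than the padded canvas")
    return (target - img_w) // 2, (target - img_h) // 2


def shift_points(points, left, top):
    """Move every (x, y) corner of a text box by the padding offset."""
    return [[x + left, y + top] for x, y in points]


def pad_image(path_in, path_out, left, top, target=300, fill=(0, 0, 0)):
    """Paste the image onto a target x target canvas (requires Pillow)."""
    from PIL import Image  # imported lazily so the label helpers work without Pillow
    img = Image.open(path_in).convert("RGB")
    canvas = Image.new("RGB", (target, target), fill)
    canvas.paste(img, (left, top))
    canvas.save(path_out)


left, top = pad_offsets(150, 25)
print(left, top)
print(shift_points([[38, 3], [83, 3], [83, 12], [38, 12]], left, top))
```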

+As before, modify the following fields in the configuration file `configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml`:
+```
+Eval.dataset.data_dir: validation image directory, '/home/aistudio/dataset'
+Eval.dataset.label_file_list: validation label file, '/home/aistudio/dataset/det_gt_padding_val.txt'
+Eval.dataset.transforms.DetResizeForTest: resize settings
+  limit_side_len: 1100
+  limit_type: 'min'
+```
+
+Then run the evaluation:
+
+```bash
+cd /home/aistudio/PaddleOCR
+python tools/eval.py \
+    -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml \
+    -o Global.checkpoints="./pretrain_models/en_PP-OCRv3_det_distill_train/best_accuracy"
+```

+

+## **4.3 Pretrained Model with Fine-tuning**
+
+Starting from the pretrained model, we fine-tune and evaluate on 1,500 generated images, with 1,200 for training and 300 for validation. Modify the following fields in the configuration file `configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml`:
+```
+Global.epoch_num: set to 1 here so the pipeline runs quickly; adjust it to your data size in practice
+Global.save_model_dir: model output directory
+Global.pretrained_model: pretrained model path, './pretrain_models/en_PP-OCRv3_det_distill_train/student.pdparams'
+Optimizer.lr.learning_rate: learning rate, set to 0.0005 in this experiment
+Train.dataset.data_dir: training image directory, '/home/aistudio/dataset'
+Train.dataset.label_file_list: training label file, '/home/aistudio/dataset/det_gt_train.txt'
+Train.dataset.transforms.EastRandomCropData.size: change the training size to [480,64]
+Eval.dataset.data_dir: validation image directory, '/home/aistudio/dataset/'
+Eval.dataset.label_file_list: validation label file, '/home/aistudio/dataset/det_gt_val.txt'
+Eval.dataset.transforms.DetResizeForTest: evaluation size; add the following parameters
+  limit_side_len: 64
+  limit_type: 'min'
+```
+Run the following command to start training:
+
+```bash
+cd /home/aistudio/PaddleOCR/
+python tools/train.py \
+    -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml
+```

+
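Instead of editing the YAML file, the scalar and list fields above can also be overridden on the command line with `-o key=value`. This tutorial already uses the same mechanism for `Global.checkpoints`. The helper below is a hypothetical sketch that only builds the argument list. As far as we know, entries inside the `transforms` lists, such as `EastRandomCropData.size` and `DetResizeForTest`, cannot be addressed this way, so they still need to be edited in the YAML file.

```python
import json


def build_train_cmd(config, overrides):
    """Build a tools/train.py command that overrides config fields with -o key=value."""
    cmd = ["python3", "tools/train.py", "-c", config]
    if overrides:
        cmd.append("-o")
        for key, value in overrides.items():
            # Lists are written as JSON-style literals, which YAML also parses as lists
            text = json.dumps(value) if isinstance(value, (list, dict)) else str(value)
            cmd.append(f"{key}={text}")
    return cmd


cmd = build_train_cmd(
    "configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml",
    {
        "Global.epoch_num": 1,
        "Global.pretrained_model": "./pretrain_models/en_PP-OCRv3_det_distill_train/student.pdparams",
        "Optimizer.lr.learning_rate": 0.0005,
        "Train.dataset.data_dir": "/home/aistudio/dataset",
        "Train.dataset.label_file_list": ["/home/aistudio/dataset/det_gt_train.txt"],
    },
)
print(" ".join(cmd))
# To launch training from the PaddleOCR root directory:
# import subprocess; subprocess.run(cmd, check=True)
```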

+**Model evaluation**
+
+Evaluate the trained model, updating the model path `Global.checkpoints`:
+
+```bash
+cd /home/aistudio/PaddleOCR/
+python3 tools/eval.py \
+    -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml \
+    -o Global.checkpoints="./output/ch_PP-OCR_V3_det/latest"
+```

+

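+Evaluating the trained models gives the following metrics:
+
+| No. | Scheme | hmean | Improvement | Analysis |
+| -------- | -------- | -------- | -------- | -------- |
+| 1 | PP-OCRv3 English ultra-lightweight detection pretrained model | 64.64% | - | The provided pretrained model already generalizes to some extent |
+| 2 | PP-OCRv3 English ultra-lightweight detection pretrained model + padded validation set | 72.13% | +7.5% | Padding improves detection on small images |
+| 3 | PP-OCRv3 English ultra-lightweight detection pretrained model + fine-tuning | 100% | +27.9% | Fine-tuning improves results in domain-specific scenarios |
+
+```
+Note: all results above were obtained by training and evaluating on 1,500 images (1,200 for training, 300 for testing). AI Studio provides only 100 images, so your metrics may differ. This is expected, as long as the strategies work and the trends are the same.
+```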

+

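+# 5. Text Recognition
+
+We train and evaluate with the following 4 schemes:
+
+- **Scheme 1**: **Direct evaluation of the PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model**
+- **Scheme 2**: PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + **fine-tuning**
+- **Scheme 3**: PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + fine-tuning + **public general recognition datasets**
+- **Scheme 4**: PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + fine-tuning + **more PCB images**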

+

+

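+## **5.1 Direct Evaluation with the Pretrained Model**
+
+As with the detection model, we first evaluate the recognition pretrained model provided by PaddleOCR on the PCB validation set.
+
+The steps for direct evaluation are as follows:
+
+**1) Download the pretrained model**
+
+We use the PP-OCRv3 Chinese-English ultra-lightweight text recognition model. Download and extract the pretrained model:
+
+```bash
+# To use a different model, just update the download link and the extraction command
+cd /home/aistudio/PaddleOCR/pretrain_models/
+wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar
+tar xf ch_PP-OCRv3_rec_train.tar && rm -rf ch_PP-OCRv3_rec_train.tar
+cd ..
+```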

+

+**Model evaluation**
+
+First, modify the following fields in the configuration file `configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml`:
+
+```
+Metric.ignore_space: True (ignore spaces)
+Eval.dataset.data_dir: validation image directory, '/home/aistudio/dataset'
+Eval.dataset.label_file_list: validation label file, '/home/aistudio/dataset/rec_gt_val.txt'
+```
+
+Evaluate with the downloaded pretrained model:
+
+```bash
+cd /home/aistudio/PaddleOCR
+python3 tools/eval.py \
+    -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml \
+    -o Global.checkpoints=pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy
+```

+
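`Metric.ignore_space: True` makes the accuracy comparison ignore spaces, which suits PCB labels that contain no meaningful spaces. The sketch below shows exact-match accuracy with and without that option. It is a simplified stand-in written for illustration. As we understand it, PaddleOCR's `RecMetric` also reports a normalized edit distance and adds a small epsilon to the denominator.

```python
def exact_match_accuracy(preds, labels, ignore_space=True):
    """Fraction of predictions that exactly match their labels.

    With ignore_space=True, spaces are removed from both strings before comparing.
    """
    if len(preds) != len(labels):
        raise ValueError("preds and labels must have the same length")
    if not labels:
        return 0.0
    correct = 0
    for pred, label in zip(preds, labels):
        if ignore_space:
            pred, label = pred.replace(" ", ""), label.replace(" ", "")
        correct += pred == label
    return correct / len(labels)


preds = ["E 295", "5ZQ", "I4UL"]
labels = ["E295", "5ZQ", "I4U1"]
print(exact_match_accuracy(preds, labels))                      # 2 of 3 match
print(exact_match_accuracy(preds, labels, ignore_space=False))  # 1 of 3 match
```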

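+## **5.2 Three Fine-tuning Schemes**
+
+Schemes 2, 3 and 4 are trained and evaluated in the same way. We therefore introduce each scheme first, and then look at which parameters are shared and which differ.
+
+**Schemes:**
+
+1) **Scheme 2**: pretrained model + **fine-tuning**
+
+- Fine-tune from the pretrained model on 1,500 PCB images, with 1,200 for training and 300 for validation.
+
+2) **Scheme 3**: pretrained model + fine-tuning + **public general recognition datasets**
+
+- When recognition data is scarce, consider adding public general recognition datasets. Starting from scheme 2, add public datasets such as LSVT and RCTW.
+
+3) **Scheme 4**: pretrained model + fine-tuning + **more PCB images**
+
+- If enough real-scenario data can be collected, adding data improves the model. Starting from scheme 2, increase the number of PCB images to about 20,000.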

+

+**参数修改:**

+

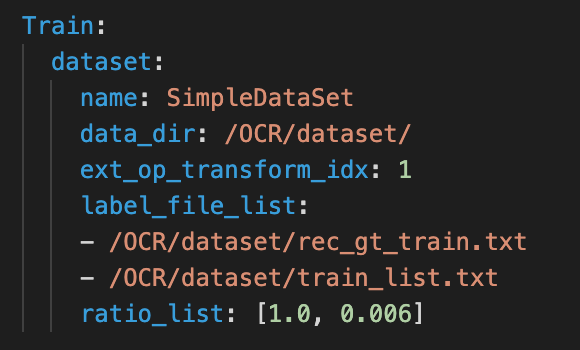

+接着我们看需要修改的参数,以上方案均需要修改配置文件`configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml`的参数,**修改一次即可**:

+

+```

+Global.pretrained_model:指向预训练模型路径,'pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy'

+Optimizer.lr.values:学习率,本实验设置为0.0005

+Train.loader.batch_size_per_card: batch size,默认128,因为数据量小于128,因此我们设置为8,数据量大可以按默认的训练

+Eval.loader.batch_size_per_card: batch size,默认128,设置为4

+Metric.ignore_space: 忽略空格,本实验设置为True

+```

+

+**更换不同的方案**每次需要修改的参数:

+```

+Global.epoch_num: 这里设置为1,方便快速跑通,实际中根据数据量调整该值

+Global.save_model_dir:指向模型保存路径

+Train.dataset.data_dir:指向训练集图片存放目录

+Train.dataset.label_file_list:指向训练集标注文件

+Eval.dataset.data_dir:指向验证集图片存放目录

+Eval.dataset.label_file_list:指向验证集标注文件

+```

+

+同时**方案3**修改以下参数

+```

+Eval.dataset.label_file_list:添加公开通用识别数据标注文件

+Eval.dataset.ratio_list:数据和公开通用识别数据每次采样比例,按实际修改即可

+```

+如 **图5** 所示:

+

+图5 添加公开通用识别数据配置文件示例

+

+
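`ratio_list` gives one sampling ratio per entry of `label_file_list`. As we understand PaddleOCR's `SimpleDataSet`, each label file then contributes about `len(lines) * ratio` randomly sampled lines when the training set is built. The sketch below imitates that mixing with hypothetical data: 1,200 PCB lines at ratio 1.0 and 30,000 public-dataset lines at ratio 0.1. Check `ppocr/data/simple_dataset.py` in your PaddleOCR version for the exact behavior.

```python
import random


def mix_label_lines(label_lists, ratio_list, seed=None):
    """Combine several label files' lines, keeping round(len * ratio) sampled lines from each."""
    if len(label_lists) != len(ratio_list):
        raise ValueError("need one ratio per label file")
    rng = random.Random(seed)
    mixed = []
    for lines, ratio in zip(label_lists, ratio_list):
        # Sample without replacement, so each line appears at most once
        mixed.extend(rng.sample(lines, round(len(lines) * ratio)))
    return mixed


pcb = [f"rec_imgs/{i:04d}.jpg\tPCB{i}" for i in range(1200)]          # hypothetical PCB labels
public = [f"public/{i:06d}.jpg\ttext{i}" for i in range(30000)]       # hypothetical public labels
mixed = mix_label_lines([pcb, public], [1.0, 0.1], seed=0)
print(len(mixed))  # 1200 + 3000
```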

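+We extract the parameters of the Student model and fine-tune them on the PCB dataset, for example with the following code:
+
+```python
+import paddle
+
+# Load the distillation pretrained model (teacher + student weights)
+all_params = paddle.load("./pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy.pdparams")
+# Inspect the parameter keys; each key starts with the name of its sub-model
+print(all_params.keys())
+# Extract the student weights and strip the prefix. The prefix is the student's name under
+# Architecture.Models in ch_PP-OCRv3_rec_distillation.yml ("Student" in the released config)
+prefix = "Student."
+s_params = {key[len(prefix):]: value for key, value in all_params.items() if key.startswith(prefix)}
+assert s_params, f"no keys start with {prefix!r}; check the keys printed above"
+# Inspect the student parameter keys
+print(s_params.keys())
+# Save
+paddle.save(s_params, "./pretrain_models/ch_PP-OCRv3_rec_train/student.pdparams")
+```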

+

+After modifying the parameters, start training for **each scheme** with the following command:
+
+```bash
+cd /home/aistudio/PaddleOCR/
+python3 tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml
+```
+
+Evaluate the trained model, updating the model path `Global.checkpoints`:
+
+```bash
+cd /home/aistudio/PaddleOCR/
+python3 tools/eval.py \
+    -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml \
+    -o Global.checkpoints=./output/rec_ppocr_v3/latest
+```

+

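+Evaluation metrics of all schemes:
+
+| No. | Scheme | acc | Improvement | Analysis |
+| -------- | -------- | -------- | -------- | -------- |
+| 1 | PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model, direct evaluation | 46.67% | - | The provided pretrained model already generalizes to some extent |
+| 2 | PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + fine-tuning | 42.02% | -4.6% | With too little data, fine-tuning performs worse than the pretrained model (tuning the hyperparameters may help) |
+| 3 | PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + fine-tuning + public general recognition datasets | 77% | +30% | When data is scarce, consider adding public data for training |
+| 4 | PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + fine-tuning + more PCB images | 99.99% | +23% | If more data can be obtained, increasing the data volume improves results |
+
+```
+Note: the results above were obtained by training and evaluating on 1,500 images (1,200 for training, 300 for testing), on 20,000 images, and with public general recognition datasets added. AI Studio provides only 100 images, so your metrics may differ. This is expected, as long as the strategies work and the trends are the same.
+```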

+
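The Improvement column is an absolute difference in percentage points, but the rows do not share one baseline. The +30% of scheme 3 matches its gap to scheme 1 (77 - 46.67), not to scheme 2. The +23% of scheme 4 matches its gap to scheme 3. The short script below recomputes these differences from the table values:

```python
# Accuracy (%) of the four recognition schemes, copied from the table above
acc = {1: 46.67, 2: 42.02, 3: 77.00, 4: 99.99}


def gain(new, baseline):
    """Absolute change in percentage points between two schemes, rounded to two decimals."""
    return round(acc[new] - acc[baseline], 2)


print(gain(2, 1))  # scheme 2 vs scheme 1
print(gain(3, 1))  # scheme 3 vs scheme 1
print(gain(3, 2))  # scheme 3 vs scheme 2
print(gain(4, 3))  # scheme 4 vs scheme 3
```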

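+# 6. Model Export
+
+An inference model (a model saved with `paddle.jit.save`) is a frozen model that stores both the model structure and the model parameters in files after training, and it is mostly used for prediction and deployment. The models saved during training are checkpoints, which store only the parameters and are mostly used to resume training. Compared with checkpoints, an inference model also stores the model structure, which makes it faster and more flexible for deployment and accelerated inference and better suited to integration into real systems.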

+
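You can see the difference on disk. As far as we know, with Paddle 2.x `tools/export_model.py` writes `inference.pdmodel` (structure) and `inference.pdiparams` (weights). Training checkpoints such as `latest` or `best_accuracy` are saved as `.pdparams`, `.pdopt` and `.states` files. The hypothetical helper below tells the two apart by file names only and does not load anything.

```python
import os
import tempfile

# File names we expect in each kind of model directory (assumed from PaddleOCR's
# usual output naming; adjust if your version differs)
INFERENCE_FILES = ("inference.pdmodel", "inference.pdiparams")
CHECKPOINT_SUFFIXES = (".pdparams", ".pdopt")


def describe_model_dir(path):
    """Return 'inference', 'checkpoint', or 'unknown' for a model directory."""
    names = set(os.listdir(path))
    if all(name in names for name in INFERENCE_FILES):
        return "inference"
    if any(name.endswith(suffix) for name in names for suffix in CHECKPOINT_SUFFIXES):
        return "checkpoint"
    return "unknown"


with tempfile.TemporaryDirectory() as inf_dir, tempfile.TemporaryDirectory() as ckpt_dir:
    # Create empty placeholder files that mimic each directory layout
    for name in ("inference.pdmodel", "inference.pdiparams", "inference.pdiparams.info"):
        open(os.path.join(inf_dir, name), "w").close()
    for name in ("latest.pdparams", "latest.pdopt", "latest.states"):
        open(os.path.join(ckpt_dir, name), "w").close()
    kinds = (describe_model_dir(inf_dir), describe_model_dir(ckpt_dir))
print(kinds)
```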

+

+```bash
+# Export the detection model
+python3 tools/export_model.py \
+    -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml \
+    -o Global.pretrained_model="./output/ch_PP-OCR_V3_det/latest" \
+       Global.save_inference_dir="./inference_model/ch_PP-OCR_V3_det/"
+```
+
+The model above was trained for only 1 epoch, so we use the best trained model, stored in `/home/aistudio/best_models/`, for prediction. Download and extract it as follows:
+
+```bash
+mkdir -p /home/aistudio/best_models/
+cd /home/aistudio/best_models/
+wget https://paddleocr.bj.bcebos.com/fanliku/PCB/det_ppocr_v3_en_infer_PCB.tar
+tar xf /home/aistudio/best_models/det_ppocr_v3_en_infer_PCB.tar -C /home/aistudio/PaddleOCR/pretrain_models/
+```

+

+

+```bash
+# Inference with the detection inference model
+cd /home/aistudio/PaddleOCR/
+python3 tools/infer/predict_det.py \
+    --image_dir="/home/aistudio/dataset/imgs/0000.jpg" \
+    --det_algorithm="DB" \
+    --det_model_dir="./pretrain_models/det_ppocr_v3_en_infer_PCB/" \
+    --det_limit_side_len=48 \
+    --det_limit_type='min' \
+    --det_db_unclip_ratio=2.5 \
+    --use_gpu=True
+```
+
+The results are saved in the `inference_results` directory. The detection result is shown below:
+
+Figure 6: Detection result
+
+Similarly, export the recognition model and run inference.

+

+```bash
+# Export the recognition model
+python3 tools/export_model.py \
+    -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml \
+    -o Global.pretrained_model="./output/rec_ppocr_v3/latest" \
+       Global.save_inference_dir="./inference_model/rec_ppocr_v3/"
+```
+
+As with the detection model, the recognition model was trained for only 1 epoch, so we use the best trained model, stored in `/home/aistudio/best_models/`, for prediction. Download and extract it as follows:
+
+```bash
+mkdir -p /home/aistudio/best_models/
+cd /home/aistudio/best_models/
+wget https://paddleocr.bj.bcebos.com/fanliku/PCB/rec_ppocr_v3_ch_infer_PCB.tar
+tar xf /home/aistudio/best_models/rec_ppocr_v3_ch_infer_PCB.tar -C /home/aistudio/PaddleOCR/pretrain_models/
+```

+

+

+```bash
+# Inference with the recognition inference model
+cd /home/aistudio/PaddleOCR/
+python3 tools/infer/predict_rec.py \
+    --image_dir="../test_imgs/0000_rec.jpg" \
+    --rec_model_dir="./pretrain_models/rec_ppocr_v3_ch_infer_PCB" \
+    --rec_image_shape="3, 48, 320" \
+    --use_space_char=False \
+    --use_gpu=True
+```
+
+```bash
+# Inference with the detection + recognition inference models
+cd /home/aistudio/PaddleOCR/
+python3 tools/infer/predict_system.py \
+    --image_dir="../test_imgs/0000.jpg" \
+    --det_model_dir="./pretrain_models/det_ppocr_v3_en_infer_PCB" \
+    --det_limit_side_len=48 \
+    --det_limit_type='min' \
+    --det_db_unclip_ratio=2.5 \
+    --rec_model_dir="./pretrain_models/rec_ppocr_v3_ch_infer_PCB" \
+    --rec_image_shape="3, 48, 320" \
+    --draw_img_save_dir=./det_rec_infer/ \
+    --use_space_char=False \
+    --use_angle_cls=False \
+    --use_gpu=True
+```
+
+The end-to-end prediction results are saved in the `det_rec_infer` folder, as shown below:
+
+Figure 7: Detection + recognition results

+

+# 7. End-to-End Evaluation
+
+This section explains how to compute end-to-end metrics for text detection plus text recognition. There are three main steps:
+
+1) First, run `tools/infer/predict_system.py` with `image_dir` set to the folder of images to evaluate, and save the results:
+
+```bash
+# Inference with the detection + recognition inference models
+python3 tools/infer/predict_system.py \
+    --image_dir="../dataset/imgs/" \
+    --det_model_dir="./pretrain_models/det_ppocr_v3_en_infer_PCB" \
+    --det_limit_side_len=48 \
+    --det_limit_type='min' \
+    --det_db_unclip_ratio=2.5 \
+    --rec_model_dir="./pretrain_models/rec_ppocr_v3_ch_infer_PCB" \
+    --rec_image_shape="3, 48, 320" \
+    --draw_img_save_dir=./det_rec_infer/ \
+    --use_space_char=False \
+    --use_angle_cls=False \
+    --use_gpu=True
+```

+

+The text detection and recognition visualizations are saved in the `det_rec_infer/` directory, and the predictions are saved in `det_rec_infer/system_results.txt` in the following format: `0018.jpg [{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}]`
+
+2) Next, convert the data saved in step 1 into the format required for end-to-end evaluation. In `tools/end2end/convert_ppocr_label.py`, edit the calls to the `convert_label` function to set the input label path, the mode and the output directory, so that both the ground-truth labels and the prediction results are converted:
+```python
+ppocr_label_gt = "/home/aistudio/dataset/det_gt_val.txt"
+convert_label(ppocr_label_gt, "gt", "./save_gt_label/")
+
+ppocr_label_pred = "/home/aistudio/PaddleOCR/det_rec_infer/system_results.txt"
+convert_label(ppocr_label_pred, "pred", "./save_PPOCRV2_infer/")
+```
+
+Run `convert_ppocr_label.py`:
+
+```bash
+python3 tools/end2end/convert_ppocr_label.py
+```
+
+This produces the following directories:
+```
+├── ./save_gt_label/
+├── ./save_PPOCRV2_infer/
+```
+
+3) Finally, run `tools/end2end/eval_end2end.py` to compute the end-to-end metrics:
+
+```bash
+pip install editdistance
+python3 tools/end2end/eval_end2end.py ./save_gt_label/ ./save_PPOCRV2_infer/
+```

+

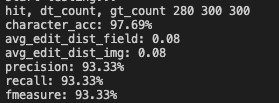

+使用`预训练模型+fine-tune'检测模型`、`预训练模型 + 2W张PCB图片funetune`识别模型,在300张PCB图片上评估得到如下结果,fmeasure为主要关注的指标:

+

+图8 端到端评估指标

+

+```

+注: 使用上述命令不能跑出该结果,因为数据集不相同,可以更换为自己训练好的模型,按上述流程运行

+```

+
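`eval_end2end.py` reports precision, recall and fmeasure. Assuming the usual definitions, fmeasure is the harmonic mean of precision (matched predictions divided by all predictions) and recall (matched predictions divided by all ground-truth boxes). The sketch below only shows that arithmetic on hypothetical counts. The rule that decides whether a prediction counts as matched (box overlap and text comparison) lives inside `tools/end2end/eval_end2end.py`.

```python
def prf(num_matched, num_pred, num_gt):
    """Precision, recall and their harmonic mean (fmeasure) from match counts."""
    precision = num_matched / num_pred if num_pred else 0.0
    recall = num_matched / num_gt if num_gt else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    fmeasure = 2 * precision * recall / (precision + recall)
    return precision, recall, fmeasure


# Hypothetical counts, not the numbers behind Figure 8
p, r, f = prf(num_matched=270, num_pred=290, num_gt=300)
print(round(p, 4), round(r, 4), round(f, 4))
```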

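+# 8. Jetson Deployment
+
+Deploying the model on a Jetson Nano takes only the following simple steps:
+
+**1. Prepare the environment on the Jetson Nano board:**
+
+* Install PaddlePaddle
+
+* Download PaddleOCR and install its dependencies
+
+**2. Run prediction**
+
+* Download the inference models to the Jetson
+
+* Run detection, recognition, or the combined pipeline
+
+For the detailed procedure, see the [deployment guide](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/deploy/Jetson/readme_ch.md).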

+

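+# 9. Summary
+
+For detection, we evaluated three schemes with the PP-OCRv3 pretrained model on the PCB dataset: direct evaluation, a padded validation set, and fine-tuning. For recognition, we evaluated four schemes with the PP-OCRv3 pretrained model: direct evaluation, fine-tuning, adding public general recognition datasets, and adding more PCB images. The metrics compare as follows:
+
+* Detection
+
+| No. | Scheme | hmean | Improvement | Analysis |
+| ---- | -------------------------------------------------------- | ------ | -------- | ------------------------------------- |
+| 1 | PP-OCRv3 English ultra-lightweight detection pretrained model, direct evaluation | 64.64% | - | The provided pretrained model already generalizes to some extent |
+| 2 | PP-OCRv3 English ultra-lightweight detection pretrained model + padded validation set, direct evaluation | 72.13% | +7.5% | Padding improves detection on small images |
+| 3 | PP-OCRv3 English ultra-lightweight detection pretrained model + fine-tuning | 100% | +27.9% | Fine-tuning improves results in domain-specific scenarios |
+
+* Recognition
+
+| No. | Scheme | acc | Improvement | Analysis |
+| ---- | ------------------------------------------------------------ | ------ | -------- | ------------------------------------------------------------ |
+| 1 | PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model, direct evaluation | 46.67% | - | The provided pretrained model already generalizes to some extent |
+| 2 | PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + fine-tuning | 42.02% | -4.6% | With too little data, fine-tuning performs worse than the pretrained model (tuning the hyperparameters may help) |
+| 3 | PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + fine-tuning + public general recognition datasets | 77% | +30% | When data is scarce, consider adding public data for training |
+| 4 | PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + fine-tuning + more PCB images | 99.99% | +23% | If more data can be obtained, increasing the data volume improves results |
+
+* End-to-end
+
+| det | rec | fmeasure |
+| --------------------------------------------- | ------------------------------------------------------------ | -------- |
+| PP-OCRv3 English ultra-lightweight detection pretrained model + fine-tuning | PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + fine-tuning + more PCB images | 93.3% |
+
+*Conclusion*
+
+Without fine-tuning, the PP-OCRv3 detection model already reaches an hmean of 64.64% on the PCB dataset, which shows that it generalizes. Padding the validation set raises hmean by 7.5 percentage points, so padding is an effective way to improve detection when images are small. Fine-tuning improves detection dramatically, to 100%.
+
+Comparing schemes 1 and 2 of the PP-OCRv3 recognition model shows that when data is insufficient, the pretrained model can outperform the fine-tuned one. It is therefore worth evaluating the pretrained model directly first. To improve further with limited data, adding public general recognition datasets raised recognition accuracy by 30 percentage points, which is very effective. Finally, if enough real-scenario data can be collected, increasing the data volume improves the model further, reaching 99.99% accuracy.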

+

+# More Resources
+
+- For more deep learning knowledge, industry case studies and interview guides, see [awesome-DeepLearning](https://github.com/paddlepaddle/awesome-DeepLearning)
+
+- For more PaddleOCR tutorials, see [PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR/tree/dygraph)
+
+- For PaddlePaddle framework resources, see the [PaddlePaddle deep learning platform](https://www.paddlepaddle.org.cn/?fr=paddleEdu_aistudio)
+
+# References
+
+* Data generation code base: https://github.com/zcswdt/Color_OCR_image_generator

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/background/bg.jpg" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/background/bg.jpg"

new file mode 100644

index 0000000000000000000000000000000000000000..3cb6eab819c3b7d4f68590d2cdc9d36351590197

Binary files /dev/null and "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/background/bg.jpg" differ

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/corpus/text.txt" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/corpus/text.txt"

new file mode 100644

index 0000000000000000000000000000000000000000..8b8cb793ef6755bf00ed8c154e24638b07a519c2

--- /dev/null

+++ "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/corpus/text.txt"

@@ -0,0 +1,30 @@

+5ZQ

+I4UL

+PWL

+SNOG

+ZL02

+1C30

+O3H

+YHRS

+N03S

+1U5Y

+JTK

+EN4F

+YKJ

+DWNH

+R42W

+X0V

+4OF5

+08AM

+Y93S

+GWE2

+0KR

+9U2A

+DBQ

+Y6J

+ROZ

+K06

+KIEY

+NZQJ

+UN1B

+6X4

\ No newline at end of file

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/1.png" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/1.png"

new file mode 100644

index 0000000000000000000000000000000000000000..8a49eaa6862113044e05d17e32941a0a20911426

Binary files /dev/null and "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/1.png" differ

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/2.png" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/2.png"

new file mode 100644

index 0000000000000000000000000000000000000000..c3fcc0c92826b97b5f6abd970f1a0580eede0f5d

Binary files /dev/null and "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/2.png" differ

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/gen.py" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/gen.py"

new file mode 100644

index 0000000000000000000000000000000000000000..4c768067f998b6b4bbe0b2f5982f46a3f01fc872

--- /dev/null

+++ "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/gen.py"

@@ -0,0 +1,261 @@

+# copyright (c) 2020 PaddlePaddle Authors. All Rights Reserve.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""

+This code is refer from:

+https://github.com/zcswdt/Color_OCR_image_generator

+"""

+import os

+import random

+from PIL import Image, ImageDraw, ImageFont

+import json

+import argparse

+

+

+def get_char_lines(txt_root_path):

+ """

+ desc:get corpus line

+ """

+ txt_files = os.listdir(txt_root_path)

+ char_lines = []

+ for txt in txt_files:

+ f = open(os.path.join(txt_root_path, txt), mode='r', encoding='utf-8')

+ lines = f.readlines()

+ f.close()

+ for line in lines:

+ char_lines.append(line.strip())

+ return char_lines

+

+

+def get_horizontal_text_picture(image_file, chars, fonts_list, cf):

+ """

+ desc:gen horizontal text picture

+ """

+ img = Image.open(image_file)

+ if img.mode != 'RGB':

+ img = img.convert('RGB')

+ img_w, img_h = img.size

+

+ # random choice font

+ font_path = random.choice(fonts_list)

+ # random choice font size

+ font_size = random.randint(cf.font_min_size, cf.font_max_size)

+ font = ImageFont.truetype(font_path, font_size)

+

+ ch_w = []

+ ch_h = []

+ for ch in chars:

+ wt, ht = font.getsize(ch)

+ ch_w.append(wt)

+ ch_h.append(ht)

+ f_w = sum(ch_w)

+ f_h = max(ch_h)

+

+ # add space

+ char_space_width = max(ch_w)

+ f_w += (char_space_width * (len(chars) - 1))

+

+ x1 = random.randint(0, img_w - f_w)

+ y1 = random.randint(0, img_h - f_h)

+ x2 = x1 + f_w

+ y2 = y1 + f_h

+

+ crop_y1 = y1

+ crop_x1 = x1

+ crop_y2 = y2

+ crop_x2 = x2

+

+ best_color = (0, 0, 0)

+ draw = ImageDraw.Draw(img)

+ for i, ch in enumerate(chars):

+ draw.text((x1, y1), ch, best_color, font=font)

+ x1 += (ch_w[i] + char_space_width)

+ crop_img = img.crop((crop_x1, crop_y1, crop_x2, crop_y2))

+ return crop_img, chars

+

+

+def get_vertical_text_picture(image_file, chars, fonts_list, cf):

+ """

+ desc:gen vertical text picture

+ """

+ img = Image.open(image_file)

+ if img.mode != 'RGB':

+ img = img.convert('RGB')

+ img_w, img_h = img.size

+ # random choice font

+ font_path = random.choice(fonts_list)

+ # random choice font size

+ font_size = random.randint(cf.font_min_size, cf.font_max_size)

+ font = ImageFont.truetype(font_path, font_size)

+

+ ch_w = []

+ ch_h = []

+ for ch in chars:

+ wt, ht = font.getsize(ch)

+ ch_w.append(wt)

+ ch_h.append(ht)

+ f_w = max(ch_w)

+ f_h = sum(ch_h)

+

+ x1 = random.randint(0, img_w - f_w)

+ y1 = random.randint(0, img_h - f_h)

+ x2 = x1 + f_w

+ y2 = y1 + f_h

+

+ crop_y1 = y1

+ crop_x1 = x1

+ crop_y2 = y2

+ crop_x2 = x2

+

+ best_color = (0, 0, 0)

+ draw = ImageDraw.Draw(img)

+ i = 0

+ for ch in chars:

+ draw.text((x1, y1), ch, best_color, font=font)

+ y1 = y1 + ch_h[i]

+ i = i + 1

+ crop_img = img.crop((crop_x1, crop_y1, crop_x2, crop_y2))

+ crop_img = crop_img.transpose(Image.ROTATE_90)

+ return crop_img, chars

+

+

+def get_fonts(fonts_path):

+ """

+ desc: get all fonts

+ """

+ font_files = os.listdir(fonts_path)

+ fonts_list=[]

+ for font_file in font_files:

+ font_path=os.path.join(fonts_path, font_file)

+ fonts_list.append(font_path)

+ return fonts_list

+

+if __name__ == '__main__':

+ parser = argparse.ArgumentParser()

+ parser.add_argument('--num_img', type=int, default=30, help="Number of images to generate")

+ parser.add_argument('--font_min_size', type=int, default=11)

+ parser.add_argument('--font_max_size', type=int, default=12,

+ help="Help adjust the size of the generated text and the size of the picture")

+ parser.add_argument('--bg_path', type=str, default='./background',

+ help='The generated text pictures will be pasted onto the pictures of this folder')

+ parser.add_argument('--det_bg_path', type=str, default='./det_background',

+ help='The generated text pictures will use the pictures of this folder as the background')

+ parser.add_argument('--fonts_path', type=str, default='../../StyleText/fonts',

+ help='The font used to generate the picture')

+ parser.add_argument('--corpus_path', type=str, default='./corpus',

+ help='The corpus used to generate the text picture')

+ parser.add_argument('--output_dir', type=str, default='./output/', help='Images save dir')

+

+

+ cf = parser.parse_args()

+ # save path

+ if not os.path.exists(cf.output_dir):

+ os.mkdir(cf.output_dir)

+

+ # get corpus

+ txt_root_path = cf.corpus_path

+ char_lines = get_char_lines(txt_root_path=txt_root_path)

+

+ # get all fonts

+ fonts_path = cf.fonts_path

+ fonts_list = get_fonts(fonts_path)

+

+ # rec bg

+ img_root_path = cf.bg_path

+ imnames=os.listdir(img_root_path)

+

+ # det bg

+ det_bg_path = cf.det_bg_path

+ bg_pics = os.listdir(det_bg_path)

+

+ # OCR det files

+ det_val_file = open(cf.output_dir + 'det_gt_val.txt', 'w', encoding='utf-8')

+ det_train_file = open(cf.output_dir + 'det_gt_train.txt', 'w', encoding='utf-8')

+ # det imgs

+ det_save_dir = 'imgs/'

+ if not os.path.exists(cf.output_dir + det_save_dir):

+ os.mkdir(cf.output_dir + det_save_dir)

+ det_val_save_dir = 'imgs_val/'

+ if not os.path.exists(cf.output_dir + det_val_save_dir):

+ os.mkdir(cf.output_dir + det_val_save_dir)

+

+ # OCR rec files

+ rec_val_file = open(cf.output_dir + 'rec_gt_val.txt', 'w', encoding='utf-8')

+ rec_train_file = open(cf.output_dir + 'rec_gt_train.txt', 'w', encoding='utf-8')

+ # rec imgs

+ rec_save_dir = 'rec_imgs/'

+ if not os.path.exists(cf.output_dir + rec_save_dir):

+ os.mkdir(cf.output_dir + rec_save_dir)

+ rec_val_save_dir = 'rec_imgs_val/'

+ if not os.path.exists(cf.output_dir + rec_val_save_dir):

+ os.mkdir(cf.output_dir + rec_val_save_dir)

+

+

+ val_ratio = cf.num_img * 0.2 # val dataset ratio

+

+ print('start generating...')

+ for i in range(0, cf.num_img):

+ imname = random.choice(imnames)

+ img_path = os.path.join(img_root_path, imname)

+

+ rnd = random.random()

+ # gen horizontal text picture

+ if rnd < 0.5:

+ gen_img, chars = get_horizontal_text_picture(img_path, char_lines[i], fonts_list, cf)

+ ori_w, ori_h = gen_img.size

+ gen_img = gen_img.crop((0, 3, ori_w, ori_h))

+ # gen vertical text picture

+ else:

+ gen_img, chars = get_vertical_text_picture(img_path, char_lines[i], fonts_list, cf)

+ ori_w, ori_h = gen_img.size

+ gen_img = gen_img.crop((3, 0, ori_w, ori_h))

+

+ ori_w, ori_h = gen_img.size

+

+ # rec imgs

+ save_img_name = str(i).zfill(4) + '.jpg'

+ if i < val_ratio:

+ save_dir = os.path.join(rec_val_save_dir, save_img_name)

+ line = save_dir + '\t' + char_lines[i] + '\n'

+ rec_val_file.write(line)

+ else:

+ save_dir = os.path.join(rec_save_dir, save_img_name)

+ line = save_dir + '\t' + char_lines[i] + '\n'

+ rec_train_file.write(line)

+ gen_img.save(cf.output_dir + save_dir, quality = 95, subsampling=0)

+

+ # det img

+ # random choice bg

+ bg_pic = random.sample(bg_pics, 1)[0]

+ det_img = Image.open(os.path.join(det_bg_path, bg_pic))

+ # the PCB position is fixed, modify it according to your own scenario

+ if bg_pic == '1.png':

+ x1 = 38

+ y1 = 3

+ else:

+ x1 = 34

+ y1 = 1

+

+ det_img.paste(gen_img, (x1, y1))

+ # text pos

+ chars_pos = [[x1, y1], [x1 + ori_w, y1], [x1 + ori_w, y1 + ori_h], [x1, y1 + ori_h]]

+ label = [{"transcription":char_lines[i], "points":chars_pos}]

+ if i < val_ratio:

+ save_dir = os.path.join(det_val_save_dir, save_img_name)

+ det_val_file.write(save_dir + '\t' + json.dumps(

+ label, ensure_ascii=False) + '\n')

+ else:

+ save_dir = os.path.join(det_save_dir, save_img_name)

+ det_train_file.write(save_dir + '\t' + json.dumps(

+ label, ensure_ascii=False) + '\n')

+ det_img.save(cf.output_dir + save_dir, quality = 95, subsampling=0)
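The generator above writes two kinds of label lines. Detection labels are `image_path\t<JSON list of {"transcription", "points"}>` and recognition labels are `image_path\ttext`, and indices below 20% of `num_img` go to the validation split. Here is a minimal sketch of that format. It assumes the same four-corner box order used in gen.py (top-left, top-right, bottom-right, bottom-left). The helper names are hypothetical.

```python
import json


def make_det_line(img_path, text, x1, y1, w, h):
    """Build one detection label line: path, tab, JSON list of boxes."""
    # Corners clockwise from top-left, matching chars_pos in gen.py
    points = [[x1, y1], [x1 + w, y1], [x1 + w, y1 + h], [x1, y1 + h]]
    label = [{"transcription": text, "points": points}]
    return img_path + "\t" + json.dumps(label, ensure_ascii=False) + "\n"


def make_rec_line(img_path, text):
    """Build one recognition label line: path, tab, transcription."""
    return img_path + "\t" + text + "\n"


def is_val(i, num_img, val_fraction=0.2):
    """Index i goes to the validation split if it is in the first 20%."""
    return i < num_img * val_fraction


print(make_det_line("imgs_val/0000.jpg", "R12", 38, 3, 45, 9), end="")
print(make_rec_line("rec_imgs/0005.jpg", "ABC"), end="")
```

Setting `ensure_ascii=False` keeps non-ASCII transcriptions readable in the label file, as gen.py does.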

diff --git "a/applications/\350\275\273\351\207\217\347\272\247\350\275\246\347\211\214\350\257\206\345\210\253.md" "b/applications/\350\275\273\351\207\217\347\272\247\350\275\246\347\211\214\350\257\206\345\210\253.md"

index 31b1b427db107dd191363838de7604bd099c10ac..7012c7f4bb022e58972c521452de0aade0fdb436 100644

--- "a/applications/\350\275\273\351\207\217\347\272\247\350\275\246\347\211\214\350\257\206\345\210\253.md"

+++ "b/applications/\350\275\273\351\207\217\347\272\247\350\275\246\347\211\214\350\257\206\345\210\253.md"

@@ -249,7 +249,7 @@ tar -xf ch_PP-OCRv3_det_distill_train.tar

cd /home/aistudio/PaddleOCR

```

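-After the pretrained model is downloaded, we use the [ch_PP-OCRv3_det_student.yml](../configs/chepai/ch_PP-OCRv3_det_student.yml) config file for the following experiments. Before evaluation, some fields in the config file need to be set as follows:

+After the pretrained model is downloaded, we use the [ch_PP-OCRv3_det_student.yml](../configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml) config file for the following experiments. Before evaluation, some fields in the config file need to be set as follows:

1. Model storage and training:

1. Global.pretrained_model: path to the PP-OCRv3 text detection pretrained model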

@@ -787,12 +787,12 @@ python tools/infer/predict_system.py \

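- On-device deployment

-For on-device deployment we use C++ inference based on Paddle Lite. Paddle Lite is PaddlePaddle's lightweight inference engine: it provides efficient inference on mobile and IoT devices, integrates a wide range of cross-platform hardware, and offers a lightweight solution for on-device deployment. For details, see the [PaddleOCR Lite tutorial](../dygraph/deploy/lite/readme_ch.md)

+For on-device deployment we use C++ inference based on Paddle Lite. Paddle Lite is PaddlePaddle's lightweight inference engine: it provides efficient inference on mobile and IoT devices, integrates a wide range of cross-platform hardware, and offers a lightweight solution for on-device deployment. For details, see the [PaddleOCR Lite tutorial](../deploy/lite/readme_ch.md)

### 4.5 Summary of experiments

-Using the PP-OCRv3 Chinese-English ultra-lightweight pretrained models, we ran three experiments on the license plate dataset (direct evaluation, fine-tuning, and fine-tuning plus quantization) and measured speed following the [PaddleOCR Lite tutorial](https://github.com/PaddlePaddle/PaddleOCR/blob/dygraph/deploy/lite/readme_ch.md). The metrics are compared below:

+Using the PP-OCRv3 Chinese-English ultra-lightweight pretrained models, we ran three experiments on the license plate dataset (direct evaluation, fine-tuning, and fine-tuning plus quantization) and measured speed following the [PaddleOCR Lite tutorial](../deploy/lite/readme_ch.md). The metrics are compared below:

- Detection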

diff --git a/deploy/cpp_infer/src/ocr_rec.cpp b/deploy/cpp_infer/src/ocr_rec.cpp

index 3438d4074e9a4ebb60a9ee6b0d9673c99c08df38..0f90ddfab4872f97829da081e64cb7437e72493a 100644

--- a/deploy/cpp_infer/src/ocr_rec.cpp

+++ b/deploy/cpp_infer/src/ocr_rec.cpp

@@ -83,7 +83,7 @@ void CRNNRecognizer::Run(std::vector img_list,

int out_num = std::accumulate(predict_shape.begin(), predict_shape.end(), 1,

std::multiplies());

predict_batch.resize(out_num);

-

+ // predict_batch holds the softmax output of the last FC layer

output_t->CopyToCpu(predict_batch.data());

auto inference_end = std::chrono::steady_clock::now();

inference_diff += inference_end - inference_start;

@@ -98,9 +98,11 @@ void CRNNRecognizer::Run(std::vector img_list,

float max_value = 0.0f;

for (int n = 0; n < predict_shape[1]; n++) {

+ // get idx

argmax_idx = int(Utility::argmax(

&predict_batch[(m * predict_shape[1] + n) * predict_shape[2]],

&predict_batch[(m * predict_shape[1] + n + 1) * predict_shape[2]]));

+ // get score

max_value = float(*std::max_element(

&predict_batch[(m * predict_shape[1] + n) * predict_shape[2]],

&predict_batch[(m * predict_shape[1] + n + 1) * predict_shape[2]]));
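For every time step, the commented lines above take the argmax index and its probability from the softmax output. The sketch below shows in Python the greedy CTC decoding that usually follows. It assumes index 0 is the CTC blank, consecutive repeats are merged, and the text score is the mean probability of the kept characters. The function name and the toy charset are hypothetical, and this is not the C++ code itself.

```python
def ctc_greedy_decode(probs, charset, blank=0):
    """Greedy CTC decoding over a T x C list of per-step probabilities.

    Returns (text, mean score of the kept characters).
    """
    chars = []
    scores = []
    last_idx = -1
    for step in probs:
        # argmax index and its probability for this time step
        idx = max(range(len(step)), key=step.__getitem__)
        score = step[idx]
        # skip blanks and merge consecutive repeats of the same index
        if idx != blank and idx != last_idx:
            chars.append(charset[idx])
            scores.append(score)
        last_idx = idx
    mean_score = sum(scores) / len(scores) if scores else 0.0
    return "".join(chars), mean_score


charset = ["<blank>", "A", "B"]
probs = [[0.1, 0.8, 0.1], [0.1, 0.7, 0.2], [0.9, 0.05, 0.05],
         [0.2, 0.1, 0.7], [0.1, 0.6, 0.3]]
print(ctc_greedy_decode(probs, charset))
```

The blank step between the two `A` predictions is what allows a genuinely repeated character to survive the merge.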

diff --git a/deploy/pdserving/README.md b/deploy/pdserving/README.md

index 55e03c4c2654f336ed942ae03e61e88b61940006..83329a11cdb57fea003a800fe9ca73791da9f6da 100644

--- a/deploy/pdserving/README.md

+++ b/deploy/pdserving/README.md

@@ -136,7 +136,7 @@ The recognition model is the same.

2. Run the following command to start the service.

```

# Start the service and save the running log in log.txt

- python3 web_service.py &>log.txt &

+ python3 web_service.py --config=config.yml &>log.txt &

```

After the service is successfully started, a log similar to the following will be printed in log.txt

@@ -217,7 +217,7 @@ The C++ service deployment is the same as python in the environment setup and da

2. Run the following command to start the service.

```

# Start the service and save the running log in log.txt

- python3 -m paddle_serving_server.serve --model ppocr_det_v3_serving ppocr_rec_v3_serving --op GeneralDetectionOp GeneralInferOp --port 9293 &>log.txt &

+ python3 -m paddle_serving_server.serve --model ppocr_det_v3_serving ppocr_rec_v3_serving --op GeneralDetectionOp GeneralInferOp --port 8181 &>log.txt &

```

After the service is successfully started, a log similar to the following will be printed in log.txt

diff --git a/deploy/pdserving/README_CN.md b/deploy/pdserving/README_CN.md

index 0891611db5f39d322473354f7d988b10afa78cbd..ab05b766e32bc8435d2c0568e848190bf6761173 100644

--- a/deploy/pdserving/README_CN.md

+++ b/deploy/pdserving/README_CN.md

@@ -135,7 +135,7 @@ python3 -m paddle_serving_client.convert --dirname ./ch_PP-OCRv3_rec_infer/ \

2. Run the following command to start the service:

```

# Start the service and save the running log in log.txt

- python3 web_service.py &>log.txt &

+ python3 web_service.py --config=config.yml &>log.txt &

```

After the service starts successfully, a log similar to the following is printed in log.txt

@@ -230,7 +230,7 @@ cp -rf general_detection_op.cpp Serving/core/general-server/op

```

# Start the service and save the running log in log.txt

- python3 -m paddle_serving_server.serve --model ppocr_det_v3_serving ppocr_rec_v3_serving --op GeneralDetectionOp GeneralInferOp --port 9293 &>log.txt &

+ python3 -m paddle_serving_server.serve --model ppocr_det_v3_serving ppocr_rec_v3_serving --op GeneralDetectionOp GeneralInferOp --port 8181 &>log.txt &

```

After the service starts successfully, a log similar to the following is printed in log.txt

diff --git a/deploy/pdserving/ocr_cpp_client.py b/deploy/pdserving/ocr_cpp_client.py

index 7f9333dd858aad5440ff256d501cf1e5d2f5fb1f..3aaf03155953ce2129fd548deef15033e91e9a09 100755

--- a/deploy/pdserving/ocr_cpp_client.py

+++ b/deploy/pdserving/ocr_cpp_client.py

@@ -22,15 +22,16 @@ import cv2

from paddle_serving_app.reader import Sequential, URL2Image, ResizeByFactor

from paddle_serving_app.reader import Div, Normalize, Transpose

from ocr_reader import OCRReader

+import codecs

client = Client()

# TODO:load_client need to load more than one client model.

# this need to figure out some details.

client.load_client_config(sys.argv[1:])

-client.connect(["127.0.0.1:9293"])

+client.connect(["127.0.0.1:8181"])

import paddle

-test_img_dir = "../../doc/imgs/"

+test_img_dir = "../../doc/imgs/1.jpg"

ocr_reader = OCRReader(char_dict_path="../../ppocr/utils/ppocr_keys_v1.txt")

@@ -40,14 +41,43 @@ def cv2_to_base64(image):

'utf8') #data.tostring()).decode('utf8')

-for img_file in os.listdir(test_img_dir):

- with open(os.path.join(test_img_dir, img_file), 'rb') as file:

+def _check_image_file(path):

+ img_end = {'jpg', 'bmp', 'png', 'jpeg', 'rgb', 'tif', 'tiff', 'gif'}

+ return any([path.lower().endswith(e) for e in img_end])

+

+

+test_img_list = []

+if os.path.isfile(test_img_dir) and _check_image_file(test_img_dir):

+ test_img_list.append(test_img_dir)

+elif os.path.isdir(test_img_dir):

+ for single_file in os.listdir(test_img_dir):

+ file_path = os.path.join(test_img_dir, single_file)

+ if os.path.isfile(file_path) and _check_image_file(file_path):

+ test_img_list.append(file_path)

+if len(test_img_list) == 0:

+ raise Exception("no image files found in {}".format(test_img_dir))

+

+for img_file in test_img_list:

+ with open(img_file, 'rb') as file:

image_data = file.read()

image = cv2_to_base64(image_data)

res_list = []

fetch_map = client.predict(feed={"x": image}, fetch=[], batch=True)

- one_batch_res = ocr_reader.postprocess(fetch_map, with_score=True)

- for res in one_batch_res:

- res_list.append(res[0])

- res = {"res": str(res_list)}

- print(res)

+ if fetch_map is None:

+ print('no results')

+ else:

+ if "text" in fetch_map:

+ for x in fetch_map["text"]:

+ x = codecs.encode(x)

+ words = base64.b64decode(x).decode('utf-8')

+ res_list.append(words)

+ else:

+ try:

+ one_batch_res = ocr_reader.postprocess(

+ fetch_map, with_score=True)

+ for res in one_batch_res:

+ res_list.append(res[0])

+ except Exception:

+ print('no results')

+ res = {"res": str(res_list)}

+ print(res)
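In the new client branch, entries under `fetch_map["text"]` are treated as base64-encoded UTF-8 strings: each one is encoded to bytes with `codecs.encode` and passed to `base64.b64decode`. A minimal standalone sketch of that decoding step, assuming entries arrive as either `str` or `bytes`; `decode_text_field` is a hypothetical helper name:

```python
import base64


def decode_text_field(encoded_items):
    """Decode base64-encoded UTF-8 recognition results into Python strings."""
    words = []
    for item in encoded_items:
        if isinstance(item, str):
            # b64decode needs ASCII bytes (or an ASCII-only str)
            item = item.encode("ascii")
        words.append(base64.b64decode(item).decode("utf-8"))
    return words


sample = [base64.b64encode("你好".encode("utf-8")).decode("ascii"),
          base64.b64encode(b"PCB")]
print(decode_text_field(sample))
```

If the server returns plain text rather than base64, `b64decode` will fail or produce garbage. This is why the client above keeps a fallback that calls `ocr_reader.postprocess` when the `text` field is absent.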

diff --git a/deploy/pdserving/ocr_reader.py b/deploy/pdserving/ocr_reader.py

index 75f0f3d5c3aea488f82ec01a72e20310663d565b..d488cc0920391eded6c08945597b5c938b7c7a42 100644

--- a/deploy/pdserving/ocr_reader.py

+++ b/deploy/pdserving/ocr_reader.py

@@ -339,7 +339,7 @@ class CharacterOps(object):

class OCRReader(object):

def __init__(self,

algorithm="CRNN",

- image_shape=[3, 32, 320],

+ image_shape=[3, 48, 320],

char_type="ch",

batch_num=1,

char_dict_path="./ppocr_keys_v1.txt"):

@@ -356,7 +356,7 @@ class OCRReader(object):

def resize_norm_img(self, img, max_wh_ratio):

imgC, imgH, imgW = self.rec_image_shape

if self.character_type == "ch":

- imgW = int(32 * max_wh_ratio)

+ imgW = int(imgH * max_wh_ratio)

h = img.shape[0]

w = img.shape[1]

ratio = w / float(h)

@@ -377,7 +377,7 @@ class OCRReader(object):

def preprocess(self, img_list):

img_num = len(img_list)

norm_img_batch = []

- max_wh_ratio = 0

+ max_wh_ratio = 320/48.

for ino in range(img_num):

h, w = img_list[ino].shape[0:2]

wh_ratio = w * 1.0 / h
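With `image_shape` now `[3, 48, 320]`, the padded canvas width becomes `int(imgH * max_wh_ratio)`, and `max_wh_ratio` starts at `320/48.` instead of 0. The sketch below computes the canvas width and the aspect-preserving resized width for each image. It assumes that the remaining loop in `preprocess` (not shown in this hunk) takes the maximum of `max_wh_ratio` and each image's `w / h`, and that the resized width is capped at the canvas width. Both function names are hypothetical.

```python
import math


def batch_max_ratio(sizes, base_ratio=320 / 48.0):
    """sizes: list of (h, w). Start from the 320/48 floor and keep the widest ratio."""
    ratio = base_ratio
    for h, w in sizes:
        ratio = max(ratio, w / float(h))
    return ratio


def resized_width(h, w, max_wh_ratio, img_h=48):
    """Return (resized width, padded canvas width) for one image in the batch."""
    canvas_w = int(img_h * max_wh_ratio)              # shared by the whole batch
    want_w = int(math.ceil(img_h * w / float(h)))     # keeps the aspect ratio
    return min(want_w, canvas_w), canvas_w


r = batch_max_ratio([(32, 100), (32, 64)])
print(resized_width(32, 100, r))   # short crops still get a 320-px canvas
```

The 320/48 floor keeps short text lines, such as the small PCB crops, from being squeezed onto a canvas narrower than the model's nominal 320-pixel width.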

diff --git a/deploy/pdserving/pipeline_http_client.py b/deploy/pdserving/pipeline_http_client.py

index a1226a9469cc96090603f57f5e44f9dad1d64b5f..0a86a6398701ef6715afdd9643cb9cb8ccbb58b2 100644

--- a/deploy/pdserving/pipeline_http_client.py

+++ b/deploy/pdserving/pipeline_http_client.py

@@ -36,11 +36,27 @@ def cv2_to_base64(image):

return base64.b64encode(image).decode('utf8')

+def _check_image_file(path):

+ img_end = {'jpg', 'bmp', 'png', 'jpeg', 'rgb', 'tif', 'tiff', 'gif'}

+ return any([path.lower().endswith(e) for e in img_end])

+

+

url = "http://127.0.0.1:9998/ocr/prediction"

test_img_dir = args.image_dir

-for idx, img_file in enumerate(os.listdir(test_img_dir)):

- with open(os.path.join(test_img_dir, img_file), 'rb') as file:

+test_img_list = []

+if os.path.isfile(test_img_dir) and _check_image_file(test_img_dir):

+ test_img_list.append(test_img_dir)

+elif os.path.isdir(test_img_dir):

+ for single_file in os.listdir(test_img_dir):

+ file_path = os.path.join(test_img_dir, single_file)

+ if os.path.isfile(file_path) and _check_image_file(file_path):

+ test_img_list.append(file_path)

+if len(test_img_list) == 0:

+ raise Exception("no image files found in {}".format(test_img_dir))

+

+for idx, img_file in enumerate(test_img_list):

+ with open(img_file, 'rb') as file:

image_data1 = file.read()

# print file name

print('{}{}{}'.format('*' * 10, img_file, '*' * 10))

@@ -70,4 +86,4 @@ for idx, img_file in enumerate(os.listdir(test_img_dir)):

print(

"For details about error message, see PipelineServingLogs/pipeline.log"

)

-print("==> total number of test imgs: ", len(os.listdir(test_img_dir)))

+print("==> total number of test imgs: ", len(test_img_list))
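Both clients now accept either a single image file or a directory. `_check_image_file` tests `path.lower().endswith(e)` without a leading dot, so a name such as `c_jpg` also passes. Here is a minimal alternative sketch that compares the real extension via `os.path.splitext`, assuming the same extension set. The names `IMG_EXTS`, `is_image_file`, and `collect_images` are hypothetical.

```python
import os

IMG_EXTS = {".jpg", ".jpeg", ".png", ".bmp", ".rgb", ".tif", ".tiff", ".gif"}


def is_image_file(path):
    """True if the file extension (case-insensitive) is a known image type."""
    return os.path.splitext(path)[1].lower() in IMG_EXTS


def collect_images(path):
    """Return [path] for a single image, or the sorted images in a directory."""
    if os.path.isfile(path) and is_image_file(path):
        return [path]
    images = []
    if os.path.isdir(path):
        for name in sorted(os.listdir(path)):
            full = os.path.join(path, name)
            if os.path.isfile(full) and is_image_file(full):
                images.append(full)
    if not images:
        raise FileNotFoundError("no image files found in {}".format(path))
    return images
```

Sorting the directory listing makes the request order reproducible across runs. `os.listdir` alone returns entries in arbitrary order.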

diff --git a/deploy/pdserving/serving_client_conf.prototxt b/deploy/pdserving/serving_client_conf.prototxt

new file mode 100644

index 0000000000000000000000000000000000000000..33960540a2ea1a7a1e37bda07ab62197a54e272d

--- /dev/null

+++ b/deploy/pdserving/serving_client_conf.prototxt

@@ -0,0 +1,16 @@

+feed_var {

+ name: "x"

+ alias_name: "x"

+ is_lod_tensor: false

+ feed_type: 20

+ shape: 1

+}

+fetch_var {

+ name: "save_infer_model/scale_0.tmp_1"

+ alias_name: "save_infer_model/scale_0.tmp_1"

+ is_lod_tensor: false

+ fetch_type: 1

+ shape: 1

+ shape: 640

+ shape: 640

+}

diff --git a/deploy/pdserving/web_service.py b/deploy/pdserving/web_service.py

index f05806ce030238144568a3ca137798a9132027e4..b6fadb91d59d6a27c6ba8ba80459f5acc6aa3516 100644

--- a/deploy/pdserving/web_service.py

+++ b/deploy/pdserving/web_service.py

@@ -19,7 +19,7 @@ import copy

import cv2

import base64

# from paddle_serving_app.reader import OCRReader

-from ocr_reader import OCRReader, DetResizeForTest

+from ocr_reader import OCRReader, DetResizeForTest, ArgsParser

from paddle_serving_app.reader import Sequential, ResizeByFactor

from paddle_serving_app.reader import Div, Normalize, Transpose

from paddle_serving_app.reader import DBPostProcess, FilterBoxes, GetRotateCropImage, SortedBoxes

@@ -63,7 +63,6 @@ class DetOp(Op):

dt_boxes_list = self.post_func(det_out, [ratio_list])

dt_boxes = self.filter_func(dt_boxes_list[0], [self.ori_h, self.ori_w])

out_dict = {"dt_boxes": dt_boxes, "image": self.raw_im}

-

return out_dict, None, ""

@@ -86,7 +85,7 @@ class RecOp(Op):

dt_boxes = copy.deepcopy(self.dt_list)

feed_list = []

img_list = []

- max_wh_ratio = 0

+ max_wh_ratio = 320 / 48.

## Many mini-batchs, the type of feed_data is list.

max_batch_size = 6 # len(dt_boxes)

@@ -150,7 +149,8 @@ class RecOp(Op):

for i in range(dt_num):

text = rec_list[i]

dt_box = self.dt_list[i]

- result_list.append([text, dt_box.tolist()])

+ if text[1] >= 0.5:

+ result_list.append([text, dt_box.tolist()])

res = {"result": str(result_list)}

return res, None, ""

@@ -163,5 +163,6 @@ class OcrService(WebService):

uci_service = OcrService(name="ocr")

-uci_service.prepare_pipeline_config("config.yml")

+FLAGS = ArgsParser().parse_args()

+uci_service.prepare_pipeline_config(yml_dict=FLAGS.conf_dict)

uci_service.run_service()
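`RecOp` now returns only results whose recognition score `text[1]` is at least 0.5, the same value as the `drop_score` default used by the Python inference tools. Here is a minimal standalone sketch of that filter. It assumes each recognition result is a `(text, score)` pair aligned with its detected box. `filter_by_score` is a hypothetical name.

```python
def filter_by_score(rec_list, boxes, drop_score=0.5):
    """Keep [(text, score), box] pairs whose score is at least drop_score."""
    results = []
    for (text, score), box in zip(rec_list, boxes):
        if score >= drop_score:
            results.append([(text, score), box])
    return results


box = [[0, 0], [45, 0], [45, 9], [0, 9]]
rec = [("R12", 0.93), ("?", 0.31), ("C7", 0.5)]
print(filter_by_score(rec, [box] * 3))
```

Exposing the threshold as a parameter makes it easy to tune for a given scenario, whereas the service code hard-codes 0.5.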

diff --git a/doc/doc_ch/FAQ.md b/doc/doc_ch/FAQ.md

index 2dad829284806e199801ff3ac55d2e760c3e2583..a4437b8b783ca28fed60bb0a9648191e7ec4d0db 100644

--- a/doc/doc_ch/FAQ.md

+++ b/doc/doc_ch/FAQ.md

@@ -720,6 +720,13 @@ C++TensorRT预测需要使用支持TRT的预测库并在编译时打开[-DWITH_T

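Note: TensorRT 6.1.0.5 or later is recommended.

+#### Q: Why does the number of images predicted together affect the recognition model's accuracy?

+**A**: At inference time the recognition model uses batch_size=6 by default, and all images in a batch are padded to a common width. If the widths of the images vary a lot, this can affect prediction quality. If you run into this problem, set the recognition batch size to 1 at inference time with the following command: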

+

+```

+python3 tools/infer/predict_rec.py --image_dir="./doc/imgs_words/ch/word_4.jpg" --rec_model_dir="./ch_PP-OCRv3_rec_infer/" --rec_batch_num=1

+```

+

### 2.13 Inference deployment
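The following rough sketch shows why the batch size matters. It assumes each batch is padded to the width of its widest image, with a floor of 320/48 on the width-to-height ratio and a recognition height of 48. Under that assumption, a short crop that shares a batch with a long one is placed on a much wider canvas than it would get on its own. `canvas_widths` is a hypothetical helper, not a PaddleOCR function.

```python
def canvas_widths(ratios, batch_size, img_h=48, base_ratio=320 / 48.0):
    """Padded canvas width for each image, given per-image w/h ratios."""
    widths = []
    for start in range(0, len(ratios), batch_size):
        batch = ratios[start:start + batch_size]
        # every image in the batch shares the widest ratio (with a floor)
        max_ratio = max([base_ratio] + batch)
        widths.extend([int(img_h * max_ratio)] * len(batch))
    return widths


ratios = [2.0, 10.0]           # a short crop and a long text line
print(canvas_widths(ratios, batch_size=2))
print(canvas_widths(ratios, batch_size=1))
```

With `--rec_batch_num=1`, each image gets its own canvas width, so results no longer depend on which other images happen to share the batch.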

diff --git a/doc/doc_ch/dataset/layout_datasets.md b/doc/doc_ch/dataset/layout_datasets.md

index e7055b4e607aae358a9ec1e93f3640b2b68ea4a1..728a9be5fdd33a78482adb1e705afea7117a3037 100644

--- a/doc/doc_ch/dataset/layout_datasets.md

+++ b/doc/doc_ch/dataset/layout_datasets.md

@@ -15,8 +15,8 @@

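- **Data description**: The PubLayNet training set contains 350,000 images and the validation set contains 11,000 images, covering 5 categories: `text, title, list, table, figure`. Some images and their annotated boxes are visualized below.

- **Download link**: https://developer.ibm.com/exchanges/data/all/publaynet/

@@ -30,8 +30,8 @@

- **Data description**: The CDLA training set contains 5,000 images and the validation set contains 1,000 images, covering 10 categories: `Text, Title, Figure, Figure caption, Table, Table caption, Header, Footer, Reference, Equation`. Some images and their annotated boxes are visualized below.

- **Download link**: https://github.com/buptlihang/CDLA

@@ -45,8 +45,8 @@

- **Data description**: The TableBank dataset contains two types of documents: LaTeX (187,199 training, 7,265 validation, and 5,719 test images) and Word (73,383 training, 2,735 validation, and 2,281 test images). It contains only 1 category, `Table`. Some images and their annotated boxes are visualized below.

- **Download link**: https://doc-analysis.github.io/tablebank-page/index.html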

diff --git a/doc/doc_ch/detection.md b/doc/doc_ch/detection.md

index 8a71b75c249b794e7ecda0ad14dc8cd2f07447e0..2cf0732219ac9cd2309ae24896d7de1499986461 100644

--- a/doc/doc_ch/detection.md

+++ b/doc/doc_ch/detection.md

@@ -13,6 +13,7 @@

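- [2.5 Distributed training](#25-分布式训练)

- [2.6 Knowledge distillation training](#26-知识蒸馏训练)

- [2.7 Other training environments](#27-其他训练环境)

+ - [2.8 Model fine-tuning](#28-模型微调)

- [3. Model evaluation and prediction](#3-模型评估与预测)

- [3.1 Metric evaluation](#31-指标评估)

- [3.2 Test detection results](#32-测试检测效果)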

@@ -141,7 +142,8 @@ python3 tools/train.py -c configs/det/det_mv3_db.yml \

Global.use_amp=True Global.scale_loss=1024.0 Global.use_dynamic_loss_scaling=True

```

-

+

+

## 2.5 Distributed training

For multi-machine, multi-GPU training, set the machine IP addresses with the `--ips` parameter and the GPU IDs with the `--gpus` parameter:

@@ -151,7 +153,7 @@ python3 -m paddle.distributed.launch --ips="xx.xx.xx.xx,xx.xx.xx.xx" --gpus '0,1

-o Global.pretrained_model=./pretrain_models/MobileNetV3_large_x0_5_pretrained

```

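-**Note:** When training on multiple machines with multiple GPUs, replace the ips value in the command above with the addresses of your machines; the machines must be able to ping each other. In addition, the training command must be launched separately on each machine. Use `ifconfig` to view a machine's IP address.

+**Note:** (1) When training on multiple machines with multiple GPUs, replace the ips value in the command above with the addresses of your machines; the machines must be able to ping each other. (2) The training command must be launched separately on each machine; use `ifconfig` to view a machine's IP address. (3) For more information, such as the performance benefits of distributed training, see the [distributed training tutorial](./distributed_training.md).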

@@ -177,6 +179,13 @@ Windows平台只支持`单卡`的训练与预测,指定GPU进行训练`set CUD

- Linux DCU

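To run on DCU devices, set the environment variable `export HIP_VISIBLE_DEVICES=0,1,2,3`; all other training, evaluation, and prediction commands are the same as for Linux GPU.

+

+

+## 2.8 Model fine-tuning

+

+In practice, we recommend loading the official pretrained models and fine-tuning them on your own dataset. For how to fine-tune detection models, see the [fine-tuning tutorial](./finetune.md).

+

+

# 3. Model evaluation and prediction

@@ -196,6 +205,7 @@ python3 tools/eval.py -c configs/det/det_mv3_db.yml -o Global.checkpoints="{pat

## 3.2 Test detection results

Test the detection result on a single image: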

+

```shell

python3 tools/infer_det.py -c configs/det/det_mv3_db.yml -o Global.infer_img="./doc/imgs_en/img_10.jpg" Global.pretrained_model="./output/det_db/best_accuracy"

```

@@ -226,14 +236,19 @@ python3 tools/export_model.py -c configs/det/det_mv3_db.yml -o Global.pretrained

```

Prediction with the DB detection inference model:

+

```shell

python3 tools/infer/predict_det.py --det_algorithm="DB" --det_model_dir="./output/det_db_inference/" --image_dir="./doc/imgs/" --use_gpu=True

```

For other detection models such as EAST, change the det_algorithm parameter to EAST (the default is DB):

+

```shell

python3 tools/infer/predict_det.py --det_algorithm="EAST" --det_model_dir="./output/det_db_inference/" --image_dir="./doc/imgs/" --use_gpu=True

```

+For more on configuring and interpreting inference hyperparameters, see the [inference hyperparameter tutorial](./inference_args.md).

+

+

# 5. FAQ

diff --git a/doc/doc_ch/distributed_training.md b/doc/doc_ch/distributed_training.md

index e0251b21ea1157084e4e1b1d77429264d452aa20..6afa4a5b9f77ce238cb18fcb4160e49f7b465369 100644

--- a/doc/doc_ch/distributed_training.md

+++ b/doc/doc_ch/distributed_training.md

@@ -41,11 +41,16 @@ python3 -m paddle.distributed.launch \

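## Performance test

-* Training on a public recognition dataset of 260k images (LSVT, RCTW, MTWI) on 1 machine with 8 P40 GPUs and on 2 machines with 8 P40 GPUs each, the final training times are as follows.

+* On 2 machines with 8 P40 GPUs each, training on a public recognition dataset of 260k images (LSVT, RCTW, MTWI), the final training times are as follows.

-| Model | Config file | Number of machines | GPUs per machine | Training time | Recognition acc | Speedup |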

-| :----------------------: | :------------: | :------------: | :---------------: | :----------: | :-----------: | :-----------: |

-| CRNN | configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml | 1 | 8 | 60h | 66.7% | - |

-| CRNN | configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml | 2 | 8 | 40h | 67.0% | 150% |

+| Model | Config | Accuracy | Training time (1 machine × 8 GPUs) | Training time (2 machines × 8 GPUs) | Speedup |

+|------|-----|--------|--------|--------|-----|

+| CRNN | [rec_chinese_lite_train_v2.0.yml](../../configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml) | 67.0% | 2.50d | 1.67d | **1.5** |

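-As can be seen, with no loss of accuracy the training time drops from 60h to 40h; the speedup reaches 60h/40h = 150%, and the efficiency is 60h/(40h*2) = 75%.

+

+* On 4 machines with 8 V100 GPUs each, training on the full dataset, the final training times are as follows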

+

+

+| Model | Config | Accuracy | Training time (1 machine × 8 GPUs) | Training time (4 machines × 8 GPUs) | Speedup |

+|------|-----|--------|--------|--------|-----|

+| SVTR | [ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml) | 74.0% | 10d | 2.84d | **3.5** |
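The speedup column is the single-machine time divided by the multi-machine time. Parallel efficiency is that speedup divided by the number of machines, which is how the removed line derives 60h/(40h*2) = 75%. Here is a small sketch that reproduces the table values. The function name is hypothetical.

```python
def speedup_and_efficiency(t_single, t_multi, num_machines):
    """Speedup = t_single / t_multi; efficiency = speedup / num_machines."""
    speedup = t_single / t_multi
    return speedup, speedup / num_machines


for name, t1, tn, n in [("CRNN", 2.50, 1.67, 2), ("SVTR", 10.0, 2.84, 4)]:
    s, e = speedup_and_efficiency(t1, tn, n)
    print("{}: speedup {:.1f}, efficiency {:.0%}".format(name, s, e))
```

By this measure, the 4-machine SVTR run (about 88%) scales more efficiently than the 2-machine CRNN run (about 75%).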

diff --git a/doc/doc_ch/inference_args.md b/doc/doc_ch/inference_args.md

new file mode 100644

index 0000000000000000000000000000000000000000..fa188ab7c800eaabae8a4ff54413af162dd60e43

--- /dev/null

+++ b/doc/doc_ch/inference_args.md

@@ -0,0 +1,120 @@

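+# PaddleOCR Inference Parameters

+

+When running inference with PaddleOCR, you can customize parameters to change the model, data, preprocessing, post-processing, and so on (parameter file: [utility.py](../../tools/infer/utility.py)). The parameters are explained in detail below.

+

+* Global information

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| image_dir | str | None; must be specified explicitly | Path to an image or a folder of images |

+| vis_font_path | str | "./doc/fonts/simfang.ttf" | Font path used for visualization |

+| drop_score | float | 0.5 | Results with a recognition score below this value are discarded and not returned |

+| use_pdserving | bool | False | Whether to use Paddle Serving for prediction |

+| warmup | bool | False | Whether to enable warmup; useful when measuring inference time |

+| draw_img_save_dir | str | "./inference_results" | Folder where the end-to-end OCR system saves its results |

+| save_crop_res | bool | False | Whether to save the cropped text images used for recognition |

+| crop_res_save_dir | str | "./output" | Folder where the cropped text images are saved |

+| use_mp | bool | False | Whether to enable multi-process prediction |

+| total_process_num | int | 6 | Number of processes to launch; takes effect when `use_mp` is `True` |

+| process_id | int | 0 | ID of the current process; no need to change it |

+| benchmark | bool | False | Whether to enable benchmarking, which collects statistics such as inference speed and GPU memory usage |

+| save_log_path | str | "./log_output/" | Folder where logs are saved when `benchmark` is enabled |

+| show_log | bool | True | Whether to print logs during prediction |

+| use_onnx | bool | False | Whether to run prediction with ONNX |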

+

+

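+* Inference engine

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| use_gpu | bool | True | Whether to use the GPU for prediction |

+| ir_optim | bool | True | Whether to analyze and optimize the computation graph; enabling it speeds up prediction |

+| use_tensorrt | bool | False | Whether to enable TensorRT |

+| min_subgraph_size | int | 15 | Minimum subgraph size for TensorRT; a subgraph is run with the TRT engine only when its size is larger than this value |

+| precision | str | fp32 | Prediction precision; supports `fp32`, `fp16`, and `int8` |

+| enable_mkldnn | bool | True | Whether to enable MKL-DNN |

+| cpu_threads | int | 10 | Number of CPU threads for prediction when MKL-DNN is enabled |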

+

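+* Text detection model

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| det_algorithm | str | "DB" | Name of the text detection algorithm; currently supports `DB`, `EAST`, `SAST`, and `PSE` |

+| det_model_dir | str | xx | Path to the detection inference model |

+| det_limit_side_len | int | 960 | Side-length limit for the detection input image |

+| det_limit_type | str | "max" | Type of side-length limit for detection; currently supports `min` and `max`. `min` ensures the shortest side of the image is not less than `det_limit_side_len`; `max` ensures the longest side is not greater than `det_limit_side_len` |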

+

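+The DB-specific parameters are as follows:

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| det_db_thresh | float | 0.3 | In the probability map output by DB, only pixels with a score above this threshold are treated as text pixels |

+| det_db_box_thresh | float | 0.6 | A detected box is kept as a text region only when the average score of all pixels inside it is above this threshold |

+| det_db_unclip_ratio | float | 1.5 | Expansion ratio of the `Vatti clipping` algorithm, which is used to expand the text regions |

+| max_batch_size | int | 10 | Batch size for prediction |

+| use_dilation | bool | False | Whether to dilate the segmentation result for better detection |

+| det_db_score_mode | str | "fast" | How DB computes the score of a detected box; supports `fast` and `slow`. `fast` averages all pixels inside the bounding rectangle of the polygon, while `slow` averages all pixels inside the original polygon, which is somewhat slower but more accurate. |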

+

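+The EAST-specific parameters are as follows:

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| det_east_score_thresh | float | 0.8 | Score map threshold in EAST post-processing |

+| det_east_cover_thresh | float | 0.1 | Average score threshold for text boxes in EAST post-processing |

+| det_east_nms_thresh | float | 0.2 | NMS threshold in EAST post-processing |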

+

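+The SAST-specific parameters are as follows:

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| det_sast_score_thresh | float | 0.5 | Score threshold in SAST post-processing |

+| det_sast_nms_thresh | float | 0.5 | NMS threshold in SAST post-processing |

+| det_sast_polygon | bool | False | Whether to detect polygons; set to True for curved-text scenarios (e.g. Total-Text) |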

+

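+The PSE-specific parameters are as follows:

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| det_pse_thresh | float | 0.0 | Threshold for binarizing the output map |

+| det_pse_box_thresh | float | 0.85 | Threshold for filtering boxes; boxes below it are discarded |

+| det_pse_min_area | float | 16 | Minimum box area; boxes smaller than this are discarded |

+| det_pse_box_type | str | "box" | Type of the returned boxes: `box` returns four-point coordinates, `poly` returns all point coordinates of curved text |

+| det_pse_scale | int | 1 | Ratio of the input image size to the size of the map fed to post-processing. For example, for a `640*640` input the network outputs `160*160`; with scale 2, the map fed to post-processing is `320*320`. Increasing this value speeds up post-processing but reduces accuracy |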

+

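+* Text recognition model

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| rec_algorithm | str | "CRNN" | Name of the text recognition algorithm; currently supports `CRNN`, `SRN`, `RARE`, `NRTR`, and `SAR` |

+| rec_model_dir | str | None; required when a recognition model is used | Path to the recognition inference model |

+| rec_image_shape | list | [3, 32, 320] | Input image shape for recognition |

+| rec_batch_num | int | 6 | Batch size for recognition |

+| max_text_length | int | 25 | Maximum length of the recognition result; takes effect for `SRN` |

+| rec_char_dict_path | str | "./ppocr/utils/ppocr_keys_v1.txt" | Character dictionary file for recognition |

+| use_space_char | bool | True | Whether to include the space character; if `True`, a space is appended to the end of the character dictionary |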

+

+

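+* End-to-end text detection and recognition model

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| e2e_algorithm | str | "PGNet" | Name of the end-to-end algorithm; currently supports `PGNet` |

+| e2e_model_dir | str | None; required when an end-to-end model is used | Path to the end-to-end inference model |

+| e2e_limit_side_len | int | 768 | Side-length limit for the end-to-end input image |

+| e2e_limit_type | str | "max" | Type of side-length limit for end-to-end inference; currently supports `min` and `max`. `min` ensures the shortest side of the image is not less than `e2e_limit_side_len`; `max` ensures the longest side is not greater than `e2e_limit_side_len` |

+| e2e_pgnet_score_thresh | float | 0.5 | End-to-end score threshold; results below it are discarded |

+| e2e_char_dict_path | str | "./ppocr/utils/ic15_dict.txt" | Path to the recognition dictionary file |

+| e2e_pgnet_valid_set | str | "totaltext" | Name of the validation set; currently supports `totaltext` and `partvgg`. Different datasets use different post-processing; keep it consistent with training |

+| e2e_pgnet_mode | str | "fast" | How PGNet computes detection scores; supports `fast` and `slow`. `fast` averages all pixels inside the bounding rectangle of the polygon, while `slow` averages all pixels inside the original polygon, which is somewhat slower but more accurate. |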

+

+

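+* Text direction classifier model

+

+| Parameter | Type | Default | Description |

+| :--: | :--: | :--: | :--: |

+| use_angle_cls | bool | False | Whether to use the direction classifier |

+| cls_model_dir | str | None; must be specified explicitly if used | Path to the direction classifier inference model |

+| cls_image_shape | list | [3, 48, 192] | Input image shape for prediction |

+| label_list | list | ['0', '180'] | Angle corresponding to each class id |

+| cls_batch_num | int | 6 | Batch size for direction classifier prediction |

+| cls_thresh | float | 0.9 | Prediction threshold: when the model predicts 180 degrees with a score above this threshold, the final result is 180 degrees and the image is flipped |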
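The `det_limit_side_len` / `det_limit_type` pair controls how the detection input is rescaled. Here is a minimal sketch of that rule as described in the table. It also assumes each side is then rounded to a multiple of 32 with a minimum of 32, since DB-style backbones downsample by 32. Treat the rounding step as an assumption rather than a documented guarantee. `det_resize_shape` is a hypothetical helper name.

```python
def det_resize_shape(h, w, limit_side_len=960, limit_type="max"):
    """Target (h, w) for the detection input under the side-length limit."""
    if limit_type == "max":
        # shrink only if the longest side exceeds the limit
        ratio = limit_side_len / float(max(h, w)) if max(h, w) > limit_side_len else 1.0
    elif limit_type == "min":
        # enlarge only if the shortest side is below the limit
        ratio = limit_side_len / float(min(h, w)) if min(h, w) < limit_side_len else 1.0
    else:
        raise ValueError("limit_type must be 'max' or 'min'")
    resize_h, resize_w = int(h * ratio), int(w * ratio)
    # assumed: round each side to a multiple of 32, at least 32
    resize_h = max(int(round(resize_h / 32.0) * 32), 32)
    resize_w = max(int(round(resize_w / 32.0) * 32), 32)
    return resize_h, resize_w


print(det_resize_shape(1920, 1080))               # large photo, "max" limit
print(det_resize_shape(25, 150))                  # tiny PCB-sized crop
print(det_resize_shape(100, 200, 736, "min"))     # upscale short side to 736
```

For very small inputs such as 25×150 crops, the `max` mode leaves the scale unchanged, while the `min` mode enlarges the image so that its short side reaches the limit.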

diff --git a/doc/doc_ch/ppocr_introduction.md b/doc/doc_ch/ppocr_introduction.md

index 59de124e2ab855d0b4abb90d0a356aefd6db586d..bd62087c8b212098cba6b0ee1cbaf413ab23015f 100644

--- a/doc/doc_ch/ppocr_introduction.md

+++ b/doc/doc_ch/ppocr_introduction.md

@@ -30,11 +30,11 @@ PP-OCR系统pipeline如下:

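The PP-OCR system is under continuous iteration; two versions, PP-OCR and PP-OCRv2, have been released so far:

-PP-OCR applies 19 effective strategies across 8 aspects (backbone selection and adjustment, prediction head design, data augmentation, learning-rate schedule, regularization parameter selection, use of pretrained models, and automatic model pruning and quantization) to tune and slim down the models of each module (green boxes), yielding an ultra-lightweight Chinese-English OCR system of 3.5M in total and a 2.8M English-and-digit OCR system. For more details, see the PP-OCR technical solution https://arxiv.org/abs/2009.09941

+PP-OCR applies 19 effective strategies across 8 aspects (backbone selection and adjustment, prediction head design, data augmentation, learning-rate schedule, regularization parameter selection, use of pretrained models, and automatic model pruning and quantization) to tune and slim down the models of each module (green boxes), yielding an ultra-lightweight Chinese-English OCR system of 3.5M in total and a 2.8M English-and-digit OCR system. For more details, see the [PP-OCR technical report](https://arxiv.org/abs/2009.09941).

#### PP-OCRv2

-Building on PP-OCR, PP-OCRv2 further optimizes 5 key aspects: the detection model adopts the CML collaborative mutual learning distillation strategy and the CopyPaste data augmentation strategy; the recognition model adopts the lightweight LCNet backbone, the improved UDML distillation strategy, and the improved [Enhanced CTC loss](./enhanced_ctc_loss.md) loss function (red boxes in the figure above), bringing clear gains in both inference speed and accuracy. For more details, see the PP-OCRv2 [technical report](https://arxiv.org/abs/2109.03144).

+Building on PP-OCR, PP-OCRv2 further optimizes 5 key aspects: the detection model adopts the CML collaborative mutual learning distillation strategy and the CopyPaste data augmentation strategy; the recognition model adopts the lightweight LCNet backbone, the improved UDML distillation strategy, and the improved [Enhanced CTC loss](./enhanced_ctc_loss.md) loss function (red boxes in the figure above), bringing clear gains in both inference speed and accuracy. For more details, see the [PP-OCRv2 technical report](https://arxiv.org/abs/2109.03144).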

#### PP-OCRv3

@@ -48,7 +48,7 @@ PP-OCRv3系统pipeline如下:

-For more details, see the PP-OCRv3 [technical report](./PP-OCRv3_introduction.md).

+For more details, see the [PP-OCRv3 technical report](https://arxiv.org/abs/2206.03001v2) 👉[concise Chinese version](./PP-OCRv3_introduction.md)

diff --git a/doc/doc_ch/recognition.md b/doc/doc_ch/recognition.md

index 8457df69ff4c09b196b0f0f91271a92344217d75..acf09f7bdecf41adfeee26efde6afaff8db7a41e 100644

--- a/doc/doc_ch/recognition.md

+++ b/doc/doc_ch/recognition.md

@@ -18,6 +18,7 @@

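- [2.6. Knowledge distillation training](#26-知识蒸馏训练)

- [2.7. Multi-language model training](#27-多语言模型训练)

- [2.8. Other training environments](#28-其他训练环境)

+ - [2.9. Model fine-tuning](#29-模型微调)

- [3. Model evaluation and prediction](#3-模型评估与预测)

- [3.1. Metric evaluation](#31-指标评估)

- [3.2. Test recognition results](#32-测试识别效果)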

@@ -217,6 +218,30 @@ python3 tools/train.py -c configs/rec/PP-OCRv3/en_PP-OCRv3_rec.yml -o Global.pre

python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/rec/PP-OCRv3/en_PP-OCRv3_rec.yml -o Global.pretrained_model=./pretrain_models/en_PP-OCRv3_rec_train/best_accuracy

```

+After training starts normally, you will see log output like the following:

+

+```

+[2022/02/22 07:58:05] root INFO: epoch: [1/800], iter: 10, lr: 0.000000, loss: 0.754281, acc: 0.000000, norm_edit_dis: 0.000008, reader_cost: 0.55541 s, batch_cost: 0.91654 s, samples: 1408, ips: 153.62133

+[2022/02/22 07:58:13] root INFO: epoch: [1/800], iter: 20, lr: 0.000001, loss: 0.924677, acc: 0.000000, norm_edit_dis: 0.000008, reader_cost: 0.00236 s, batch_cost: 0.28528 s, samples: 1280, ips: 448.68599

+[2022/02/22 07:58:23] root INFO: epoch: [1/800], iter: 30, lr: 0.000002, loss: 0.967231, acc: 0.000000, norm_edit_dis: 0.000008, reader_cost: 0.14527 s, batch_cost: 0.42714 s, samples: 1280, ips: 299.66507

+[2022/02/22 07:58:31] root INFO: epoch: [1/800], iter: 40, lr: 0.000003, loss: 0.895318, acc: 0.000000, norm_edit_dis: 0.000008, reader_cost: 0.00173 s, batch_cost: 0.27719 s, samples: 1280, ips: 461.77252

+```

+

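+The following fields are printed in the log:

+

+| Field | Meaning |

+| :----: | :------: |

+| epoch | Current epoch |

+| iter | Current iteration |

+| lr | Current learning rate |

+| loss | Current loss value |

+| acc | Accuracy of the current batch |

+| norm_edit_dis | Normalized edit distance of the current batch |

+| reader_cost | Average data loading time per iteration since the previous log line |

+| batch_cost | Average total time per iteration since the previous log line |

+| samples | Number of samples processed since the previous log line |

+| ips | Number of images processed per second |

+

PaddleOCR supports alternating training and evaluation. You can set the evaluation frequency by modifying `eval_batch_step` in `configs/rec/PP-OCRv3/en_PP-OCRv3_rec.yml`; by default, evaluation runs every 500 iterations. During evaluation, the model with the best acc is saved as `output/en_PP-OCRv3_rec/best_accuracy` by default.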

@@ -363,7 +388,7 @@ python3 -m paddle.distributed.launch --ips="xx.xx.xx.xx,xx.xx.xx.xx" --gpus '0,1

-o Global.pretrained_model=./pretrain_models/en_PP-OCRv3_rec_train/best_accuracy

```

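-**Note:** When training on multiple machines with multiple GPUs, replace the ips value in the command above with the addresses of your machines; the machines must be able to ping each other. In addition, the training command must be launched separately on each machine. Use `ifconfig` to view a machine's IP address.

+**Note:** (1) When training on multiple machines with multiple GPUs, replace the ips value in the command above with the addresses of your machines; the machines must be able to ping each other. (2) The training command must be launched separately on each machine; use `ifconfig` to view a machine's IP address. (3) For more information, such as the performance benefits of distributed training, see the [distributed training tutorial](./distributed_training.md).

## 2.6. Knowledge distillation training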

@@ -438,6 +463,11 @@ Windows平台只支持`单卡`的训练与预测,指定GPU进行训练`set CUD

- Linux DCU

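To run on DCU devices, set the environment variable `export HIP_VISIBLE_DEVICES=0,1,2,3`; all other training, evaluation, and prediction commands are the same as for Linux GPU.

+## 2.9 Model fine-tuning

+

+In practice, we recommend loading the official pretrained models and fine-tuning them on your own dataset. For how to fine-tune recognition models, see the [fine-tuning tutorial](./finetune.md).

+

+

# 3. Model evaluation and prediction

## 3.1. Metric evaluation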

@@ -540,12 +570,13 @@ inference/en_PP-OCRv3_rec/

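- Inference with a custom model

- If you modified the text dictionary during training, specify the dictionary path with `--rec_char_dict_path` when predicting with the inference model

+ If you modified the text dictionary during training, specify the dictionary path with `--rec_char_dict_path` when predicting with the inference model. For more on configuring and interpreting inference hyperparameters, see the [inference hyperparameter tutorial](./inference_args.md).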

```

python3 tools/infer/predict_rec.py --image_dir="./doc/imgs_words_en/word_336.png" --rec_model_dir="./your inference model" --rec_image_shape="3, 48, 320" --rec_char_dict_path="your text dict path"

```

+

# 5. FAQ

Q1: Why do prediction results differ after converting the trained model to an inference model?
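The training log lines shown earlier in this file appear consistent with `ips ≈ samples / (batch_cost × 10)`. In other words, `samples` counts the samples since the previous log line, and `batch_cost` is a per-iteration average over that interval. The sketch below parses such a line and checks that relation. It assumes a logging interval of 10 iterations (`Global.print_batch_step`), and the helper names are hypothetical.

```python
import re

# "key: number" pairs such as "loss: 0.924677" or "samples: 1280"
FIELD_RE = re.compile(r"(\w+): ([0-9.]+)")
EPOCH_RE = re.compile(r"epoch: \[(\d+)/(\d+)\]")


def parse_train_log(line):
    """Extract numeric fields (and epoch progress) from one training log line."""
    fields = {key: float(value) for key, value in FIELD_RE.findall(line)}
    m = EPOCH_RE.search(line)
    if m:
        fields["epoch"] = int(m.group(1))
        fields["total_epochs"] = int(m.group(2))
    return fields


def ips_consistent(fields, print_batch_step=10, rel_tol=1e-3):
    """Check ips against samples / (average batch_cost * iterations per log)."""
    expected = fields["samples"] / (fields["batch_cost"] * print_batch_step)
    return abs(expected - fields["ips"]) <= rel_tol * fields["ips"]


line = ("[2022/02/22 07:58:13] root INFO: epoch: [1/800], iter: 20, lr: 0.000001, "
        "loss: 0.924677, acc: 0.000000, norm_edit_dis: 0.000008, "
        "reader_cost: 0.00236 s, batch_cost: 0.28528 s, samples: 1280, ips: 448.68599")
fields = parse_train_log(line)
print(fields["iter"], fields["samples"], ips_consistent(fields))
```

Parsing logs this way makes it easy to plot loss or throughput over time without extra tooling.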

diff --git a/doc/doc_en/detection_en.md b/doc/doc_en/detection_en.md

index 76e0f8509b92dfaae62dce7ba2b4b73d39da1600..f85bf585cb66332d90de8d66ed315cb04ece7636 100644

--- a/doc/doc_en/detection_en.md

+++ b/doc/doc_en/detection_en.md

@@ -159,7 +159,7 @@ python3 -m paddle.distributed.launch --ips="xx.xx.xx.xx,xx.xx.xx.xx" --gpus '0,1

-o Global.pretrained_model=./pretrain_models/MobileNetV3_large_x0_5_pretrained

```

-**Note:** When using multi-machine, multi-GPU training, replace the ips value in the command above with the addresses of your machines; the machines need to be able to ping each other. In addition, training needs to be launched separately on each machine. The command to view a machine's IP address is `ifconfig`.

+**Note:** (1) When using multi-machine, multi-GPU training, replace the ips value in the command above with the addresses of your machines; the machines need to be able to ping each other. (2) Training needs to be launched separately on each machine. The command to view a machine's IP address is `ifconfig`. (3) For more details about the distributed training speedup ratio, please refer to the [Distributed Training Tutorial](./distributed_training_en.md).

### 2.6 Training with knowledge distillation

diff --git a/doc/doc_en/distributed_training.md b/doc/doc_en/distributed_training_en.md

similarity index 70%

rename from doc/doc_en/distributed_training.md

rename to doc/doc_en/distributed_training_en.md

index 2822ee5e4ea52720a458e4060d8a09be7b98846b..5a219ed2b494d6239096ff634dfdc702c4be9419 100644

--- a/doc/doc_en/distributed_training.md

+++ b/doc/doc_en/distributed_training_en.md

@@ -40,11 +40,17 @@ python3 -m paddle.distributed.launch \

## Performance comparison

-* Based on a public recognition dataset of 260k images (LSVT, RCTW, MTWI), training on a single machine with 8 P40 GPUs and on two machines with 8 P40 GPUs each, the final time consumption is as follows.

+* On two machines with 8 P40 GPUs each, the final training time and speedup ratio on a public recognition dataset (LSVT, RCTW, MTWI) of 260k images are as follows.

-| Model | Config file | Number of machines | Number of GPUs per machine | Training time | Recognition acc | Speedup ratio |

-| :-------: | :------------: | :----------------: | :----------------------------: | :------------------: | :--------------: | :-----------: |

-| CRNN | configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml | 1 | 8 | 60h | 66.7% | - |

-| CRNN | configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml | 2 | 8 | 40h | 67.0% | 150% |

-It can be seen that the training time is shortened from 60h to 40h, the speedup ratio can reach 150% (60h / 40h), and the efficiency is 75% (60h / (40h * 2)).

+| Model | Config file | Recognition acc | Training time (1 machine × 8 GPUs) | Training time (2 machines × 8 GPUs) | Speedup ratio |

+|------|-----|--------|--------|--------|-----|

+| CRNN | [rec_chinese_lite_train_v2.0.yml](../../configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml) | 67.0% | 2.50d | 1.67d | **1.5** |

+

+

+* On four machines with 8 V100 GPUs each, the training time and speedup ratio on the full dataset are as follows.

+

+

+| Model | Config file | Recognition acc | Training time (1 machine × 8 GPUs) | Training time (4 machines × 8 GPUs) | Speedup ratio |

+|------|-----|--------|--------|--------|-----|

+| SVTR | [ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml) | 74.0% | 10d | 2.84d | **3.5** |
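The removed text defined the speedup ratio as single-machine time divided by multi-machine time (60h / 40h = 1.5). It defined efficiency as that ratio divided by the number of machines (60h / (40h × 2) = 75%). The new tables keep only the speedup column. The small helper below applies the same two definitions to every row. Its name is hypothetical and it is not part of PaddleOCR. By this definition, the four-machine V100 run works out to roughly 88% efficiency.

```python
def speedup_and_efficiency(t_single: float, t_multi: float, n_machines: int) -> tuple[float, float]:
    """Speedup = single-machine time / multi-machine time; efficiency = speedup / number of machines."""
    speedup = t_single / t_multi
    return speedup, speedup / n_machines


# (single-machine time, multi-machine time, number of machines), taken from the tables above
runs = {
    "CRNN, 2 x 8 P40 (hours, removed table)": (60, 40, 2),
    "CRNN, 2 x 8 P40 (days)": (2.50, 1.67, 2),
    "SVTR, 4 x 8 V100 (days)": (10, 2.84, 4),
}
for name, (t1, tn, n) in runs.items():
    s, e = speedup_and_efficiency(t1, tn, n)
    print(f"{name}: speedup {s:.2f}, efficiency {e:.0%}")
```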

diff --git a/doc/doc_en/ppocr_introduction_en.md b/doc/doc_en/ppocr_introduction_en.md

index b13d7f9bf1915de4bbbbec7b384d278e1d7ab8b4..d28ccb3529a46bdf0d3fd1d1c81f14137d10f2ea 100644

--- a/doc/doc_en/ppocr_introduction_en.md

+++ b/doc/doc_en/ppocr_introduction_en.md

@@ -29,10 +29,10 @@ PP-OCR pipeline is as follows:

PP-OCR system is in continuous optimization. At present, PP-OCR and PP-OCRv2 have been released:

-PP-OCR adopts 19 effective strategies from 8 aspects including backbone network selection and adjustment, prediction head design, data augmentation, learning rate transformation strategy, regularization parameter selection, pre-training model use, and automatic model tailoring and quantization to optimize and slim down the models of each module (as shown in the green box above). The final results are an ultra-lightweight Chinese and English OCR model with an overall size of 3.5M and a 2.8M English digital OCR model. For more details, please refer to the PP-OCR technical article (https://arxiv.org/abs/2009.09941).

+PP-OCR adopts 19 effective strategies from 8 aspects to optimize and slim down the models of each module (as shown in the green box above). These aspects are backbone network selection and adjustment, prediction head design, data augmentation, learning rate schedule, regularization parameter selection, use of pre-trained models, and automatic model pruning and quantization. The result is an ultra-lightweight Chinese and English OCR model with a total size of 3.5M, plus a 2.8M English and digit OCR model. For more details, please refer to the [PP-OCR technical report](https://arxiv.org/abs/2009.09941).

#### PP-OCRv2

-On the basis of PP-OCR, PP-OCRv2 is further optimized in five aspects. The detection model adopts CML(Collaborative Mutual Learning) knowledge distillation strategy and CopyPaste data expansion strategy. The recognition model adopts LCNet lightweight backbone network, U-DML knowledge distillation strategy and enhanced CTC loss function improvement (as shown in the red box above), which further improves the inference speed and prediction effect. For more details, please refer to the technical report of PP-OCRv2 (https://arxiv.org/abs/2109.03144).

+Building on PP-OCR, PP-OCRv2 is further optimized in five aspects. The detection model adopts the CML (Collaborative Mutual Learning) knowledge distillation strategy and the CopyPaste data augmentation strategy. The recognition model adopts the lightweight LCNet backbone, the U-DML knowledge distillation strategy, and an improved Enhanced CTC loss (as shown in the red box above). Together these further improve inference speed and prediction accuracy. For more details, please refer to the [PP-OCRv2 technical report](https://arxiv.org/abs/2109.03144).

#### PP-OCRv3

@@ -46,7 +46,7 @@ PP-OCRv3 pipeline is as follows:

-For more details, please refer to the PP-OCRv3 [technical report](./PP-OCRv3_introduction.md).

+For more details, please refer to the [PP-OCRv3 technical report](https://arxiv.org/abs/2206.03001v2) 👉 [concise Chinese version](./PP-OCRv3_introduction.md)

diff --git a/doc/doc_ch/recognition.md b/doc/doc_ch/recognition.md

index 8457df69ff4c09b196b0f0f91271a92344217d75..acf09f7bdecf41adfeee26efde6afaff8db7a41e 100644

--- a/doc/doc_ch/recognition.md

+++ b/doc/doc_ch/recognition.md

@@ -18,6 +18,7 @@

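- [2.6. Knowledge distillation training](#26-知识蒸馏训练)

- [2.7. Multilingual model training](#27-多语言模型训练)

- [2.8. Other training environments](#28-其他训练环境)

+ - [2.9. Model fine-tuning](#29-模型微调)

- [3. Model evaluation and prediction](#3-模型评估与预测)

- [3.1. Metric evaluation](#31-指标评估)

- [3.2. Testing recognition results](#32-测试识别效果)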

@@ -217,6 +218,30 @@ python3 tools/train.py -c configs/rec/PP-OCRv3/en_PP-OCRv3_rec.yml -o Global.pre

python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/rec/PP-OCRv3/en_PP-OCRv3_rec.yml -o Global.pretrained_model=./pretrain_models/en_PP-OCRv3_rec_train/best_accuracy

```

+After training starts successfully, you will see log output like the following:

+

+```

+[2022/02/22 07:58:05] root INFO: epoch: [1/800], iter: 10, lr: 0.000000, loss: 0.754281, acc: 0.000000, norm_edit_dis: 0.000008, reader_cost: 0.55541 s, batch_cost: 0.91654 s, samples: 1408, ips: 153.62133

+[2022/02/22 07:58:13] root INFO: epoch: [1/800], iter: 20, lr: 0.000001, loss: 0.924677, acc: 0.000000, norm_edit_dis: 0.000008, reader_cost: 0.00236 s, batch_cost: 0.28528 s, samples: 1280, ips: 448.68599

+[2022/02/22 07:58:23] root INFO: epoch: [1/800], iter: 30, lr: 0.000002, loss: 0.967231, acc: 0.000000, norm_edit_dis: 0.000008, reader_cost: 0.14527 s, batch_cost: 0.42714 s, samples: 1280, ips: 299.66507

+[2022/02/22 07:58:31] root INFO: epoch: [1/800], iter: 40, lr: 0.000003, loss: 0.895318, acc: 0.000000, norm_edit_dis: 0.000008, reader_cost: 0.00173 s, batch_cost: 0.27719 s, samples: 1280, ips: 461.77252

+```
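Over a long run, it helps to turn these log lines into structured records, for example to plot loss or throughput. The sketch below assumes exactly the line format shown above: a logger prefix ending in `INFO: `, followed by comma-separated `key: value` pairs. `parse_train_log` is a hypothetical helper, not part of PaddleOCR.

```python
def parse_train_log(line: str) -> dict:
    """Parse one training-log line (format as in the sample log above) into a dict."""
    _, sep, payload = line.strip().partition("INFO: ")
    if not sep:
        raise ValueError("not a training log line: " + line)
    fields = {}
    for item in payload.split(", "):
        key, _, value = item.partition(": ")
        if key == "epoch":
            # "[1/800]" -> current epoch and total number of epochs
            cur, total = value.strip("[]").split("/")
            fields["epoch"], fields["epoch_total"] = int(cur), int(total)
        elif key in ("iter", "samples"):
            fields[key] = int(value)
        else:
            # Timing fields carry a trailing " s" unit; keep only the number
            fields[key] = float(value.split()[0])
    return fields


sample = ("[2022/02/22 07:58:31] root INFO: epoch: [1/800], iter: 40, lr: 0.000003, "
          "loss: 0.895318, acc: 0.000000, norm_edit_dis: 0.000008, reader_cost: 0.00173 s, "
          "batch_cost: 0.27719 s, samples: 1280, ips: 461.77252")
record = parse_train_log(sample)
print(record["iter"], record["loss"], record["ips"])  # 40 0.895318 461.77252
```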

+

+The log automatically prints the following fields:

+

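+| Field | Meaning |

+| :----: | :------: |

+| epoch | Current epoch |

+| iter | Current iteration |

+| lr | Current learning rate |

+| loss | Current loss value |

+| acc | Accuracy of the current batch |

+| norm_edit_dis | Normalized edit distance of the current batch |

+| reader_cost | Data loading time of the current batch |

+| batch_cost | Total time of the current batch |

+| samples | Number of samples in the current batch |

+| ips | Number of images processed per second |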
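The `norm_edit_dis` field is easy to misread as a raw edit distance. As we understand PaddleOCR's `RecMetric` (in `ppocr/metrics/rec_metric.py`), it is reported as 1 minus the mean normalized Levenshtein distance over the batch. That makes it a similarity score, where 1.0 means every prediction matches its label. This reading fits the sample log above, where the value sits near 0 while acc is also 0.

The pure-Python sketch below reproduces that calculation under this assumption. It omits options such as ignoring spaces. Please verify it against the metric file in your PaddleOCR version. The function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance with unit cost for insert, delete and substitute."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free when equal)
        prev = cur
    return prev[-1]


def batch_metrics(preds: list[str], labels: list[str]) -> dict:
    """acc and norm_edit_dis for one non-empty batch (assumed to mirror RecMetric)."""
    if not preds:
        raise ValueError("empty batch")
    n = len(preds)
    correct = sum(p == t for p, t in zip(preds, labels))
    dist = 0.0
    for p, t in zip(preds, labels):
        longest = max(len(p), len(t))
        # normalized distance in [0, 1]; two empty strings count as identical
        dist += levenshtein(p, t) / longest if longest else 0.0
    return {"acc": correct / n, "norm_edit_dis": 1 - dist / n}
```

With `batch_metrics(["PCB01", "A12"], ["PCB01", "A13"])`, acc is 0.5. However, `norm_edit_dis` is about 0.83, because the wrong prediction differs by only one character out of three. This is why the field can rise steadily before acc does.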

+

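PaddleOCR supports alternating training and evaluation. You can set the evaluation frequency through `eval_batch_step` in `configs/rec/PP-OCRv3/en_PP-OCRv3_rec.yml`; by default, evaluation runs every 500 iterations. During evaluation, the model with the best acc is saved as `output/en_PP-OCRv3_rec/best_accuracy` by default.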

@@ -363,7 +388,7 @@ python3 -m paddle.distributed.launch --ips="xx.xx.xx.xx,xx.xx.xx.xx" --gpus '0,1

-o Global.pretrained_model=./pretrain_models/en_PP-OCRv3_rec_train/best_accuracy

```

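-**Note:** When using multi-machine, multi-GPU training, replace the ips value in the command above with the addresses of your machines, and the machines must be able to ping each other. In addition, the training command must be launched separately on each machine. The command to view a machine's IP address is `ifconfig`.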

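+**Note:** (1) For multi-machine, multi-GPU training, replace the `ips` value in the command above with the addresses of your machines; the machines must be able to ping each other. (2) The training command must be launched separately on each machine; you can check a machine's IP address with `ifconfig`. (3) For more information on the performance benefits of distributed training, please refer to the [Distributed Training Tutorial](./distributed_training.md).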

## 2.6. Knowledge distillation training

@@ -438,6 +463,11 @@ The Windows platform only supports `single-GPU` training and prediction; to specify a GPU for training, `set CUD

- Linux DCU

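To run on DCU devices, set the environment variable `export HIP_VISIBLE_DEVICES=0,1,2,3`. All other training, evaluation, and prediction commands are exactly the same as on Linux GPU.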

+## 2.9. Model fine-tuning

+

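+In practice, we recommend loading an official pre-trained model and fine-tuning it on your own dataset. For how to fine-tune recognition models, please refer to the [Model Fine-tuning Tutorial](./finetune.md).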

+

+

# 3. Model evaluation and prediction

## 3.1. Metric evaluation

@@ -540,12 +570,13 @@ inference/en_PP-OCRv3_rec/

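- Custom model inference

- If the text dictionary was modified during training, specify the dictionary path with `--rec_char_dict_path` when predicting with the inference model

+ If the text dictionary was modified during training, specify the dictionary path with `--rec_char_dict_path` when predicting with the inference model. For more on configuring and interpreting inference hyperparameters, please refer to the [Inference Hyperparameter Tutorial](./inference_args.md).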

```

python3 tools/infer/predict_rec.py --image_dir="./doc/imgs_words_en/word_336.png" --rec_model_dir="./your inference model" --rec_image_shape="3, 48, 320" --rec_char_dict_path="your text dict path"

```
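A custom dictionary must contain every character the model is expected to output. The inference run must also use the same file, in the same order, that the model was trained with, because character indices are derived from it. The minimal sketch below derives such a dictionary from a training label file. It assumes the common PaddleOCR label layout `image_path<TAB>text` and a dictionary file with one character per line. `build_char_dict` and any file paths are hypothetical. Spaces are skipped here on the assumption that PaddleOCR's separate `use_space_char` setting handles them.

```python
from pathlib import Path


def build_char_dict(label_file: str, dict_file: str) -> list[str]:
    """Collect every character in a recognition label file and write one character per line.

    Assumes each line looks like "image_path<TAB>text"; lines without a tab are ignored.
    """
    chars = set()
    with open(label_file, encoding="utf-8") as f:
        for line in f:
            _, sep, text = line.rstrip("\r\n").partition("\t")
            if sep:
                chars.update(ch for ch in text if ch != " ")  # spaces handled separately (assumption)
    ordered = sorted(chars)  # deterministic order, so rebuilding gives the same indices
    Path(dict_file).write_text("\n".join(ordered) + "\n", encoding="utf-8")
    return ordered
```

The resulting file is what you would pass to `--rec_char_dict_path`. If the dictionary is rebuilt from a different label file, its order may change, and the model should then be retrained rather than reused.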

+

# 5. FAQ

Q1: Why are the prediction results inconsistent after converting the trained model to an inference model?