Merge pull request #4615 from cuicheng01/dygraph

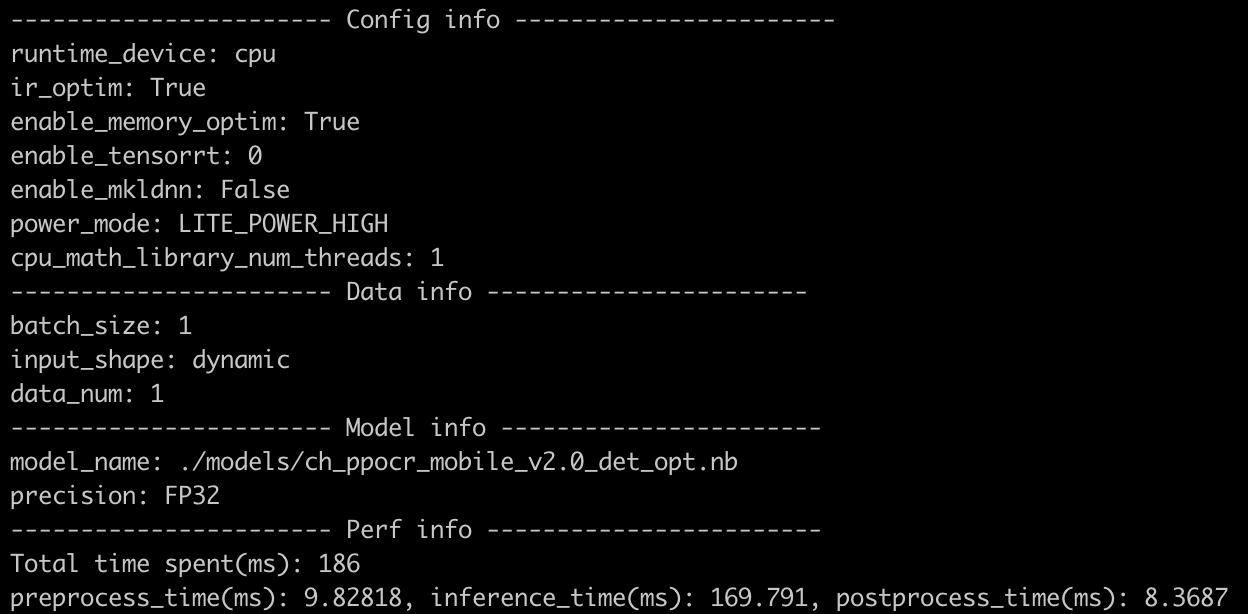

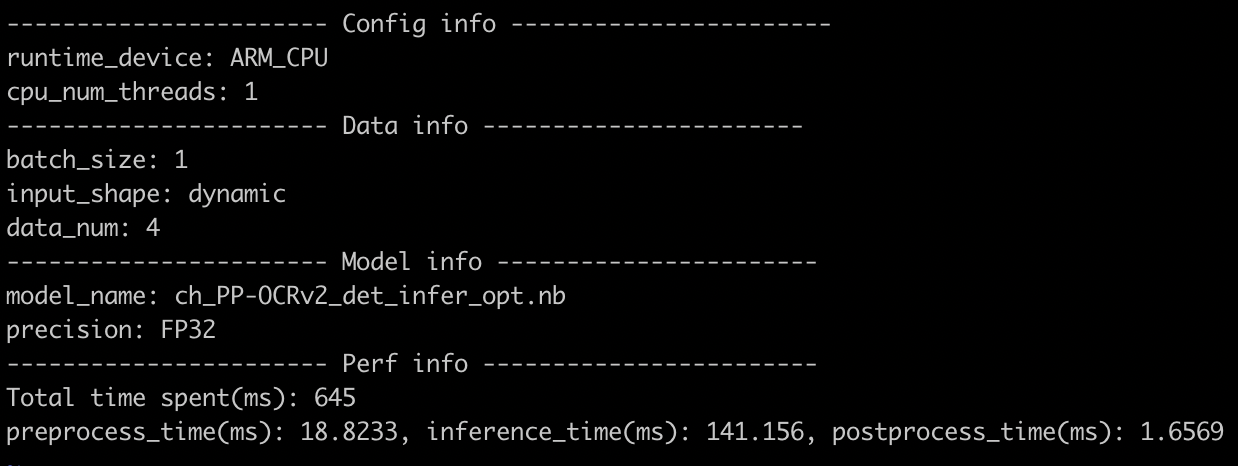

update tipc lite demo

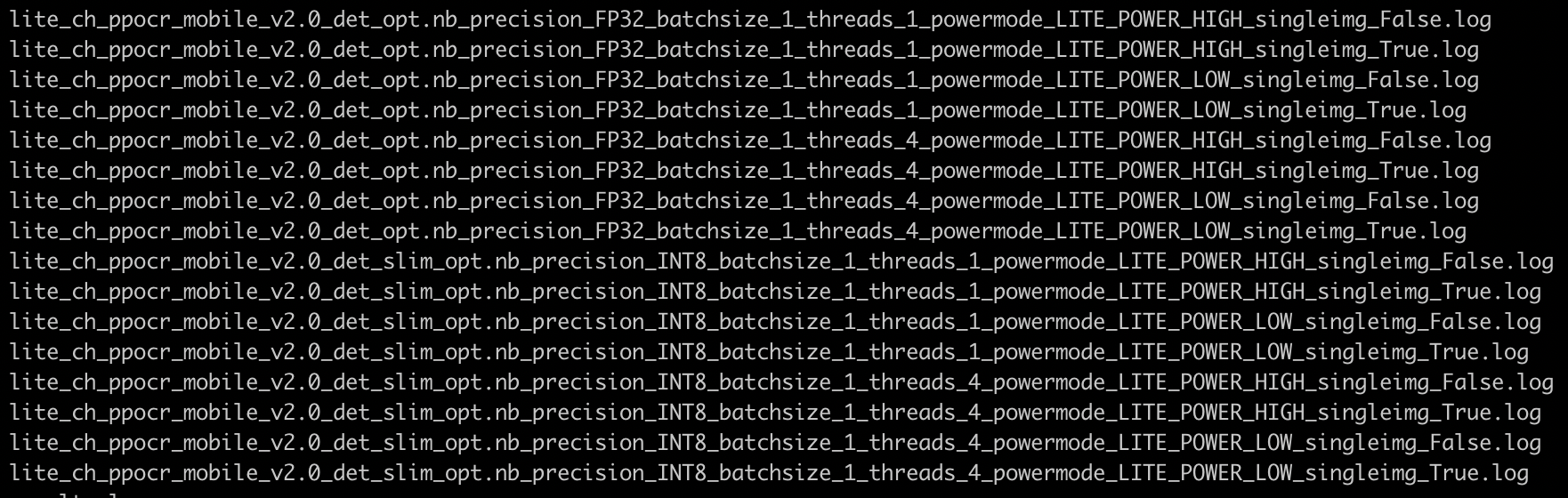

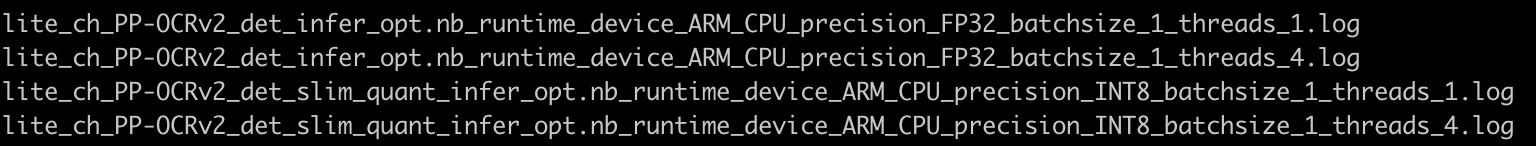

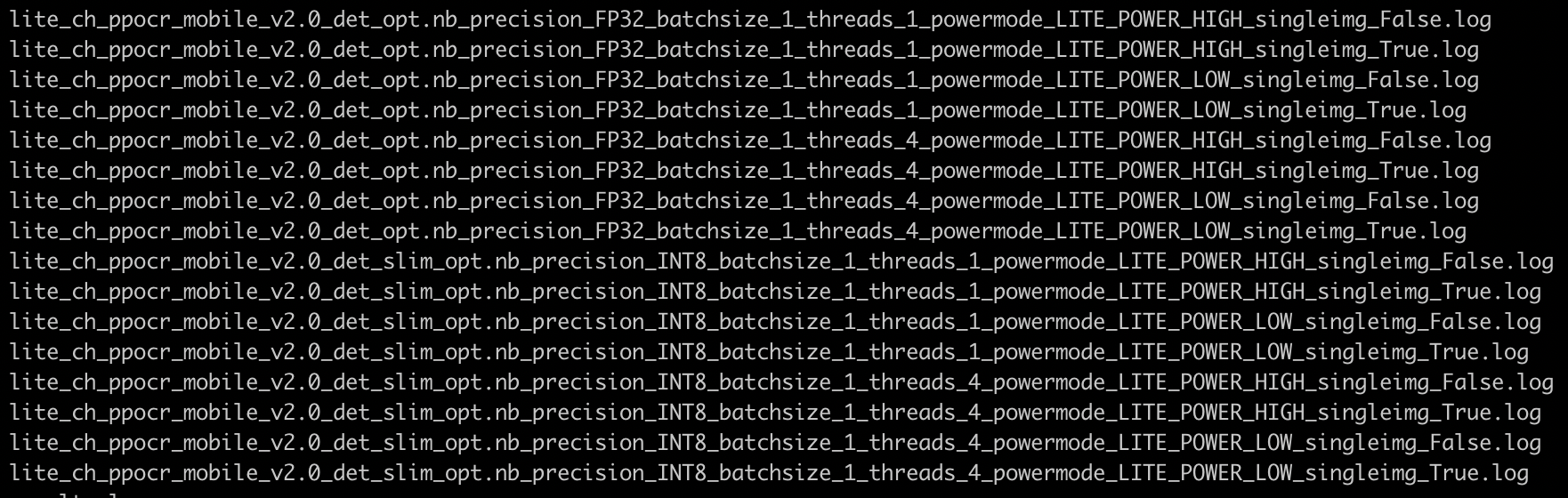

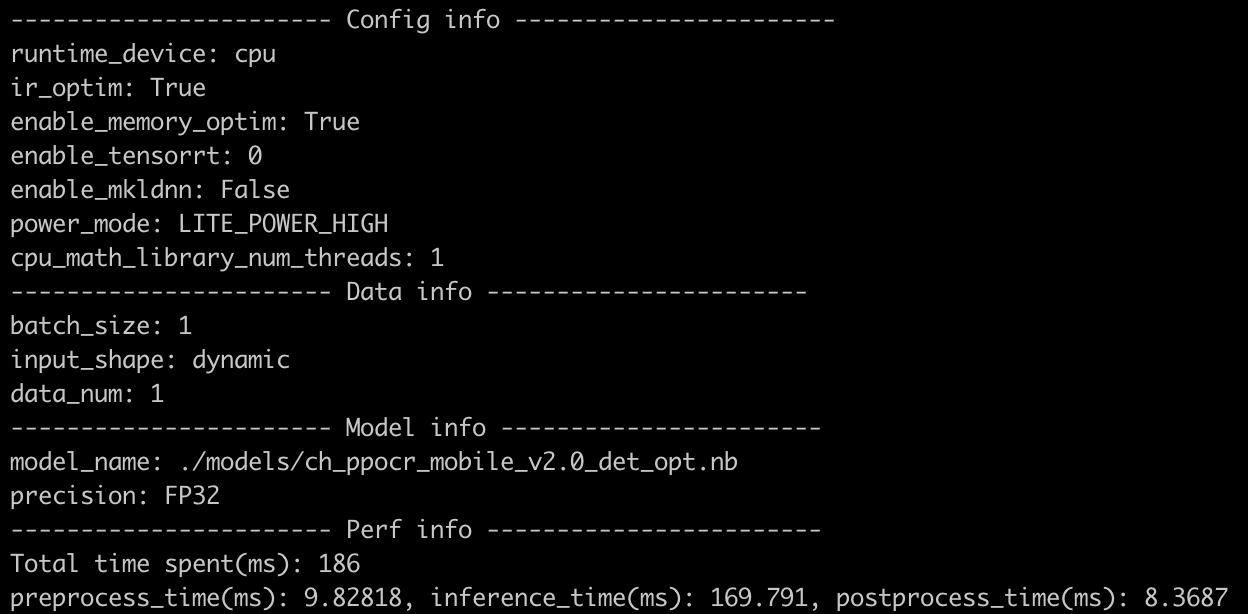

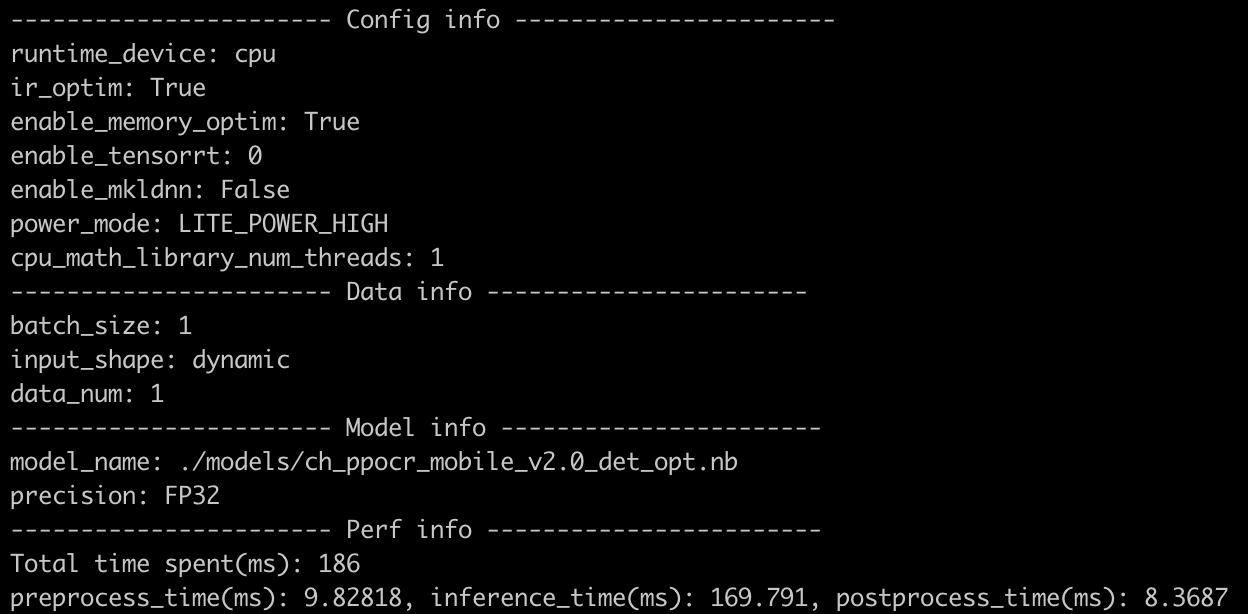

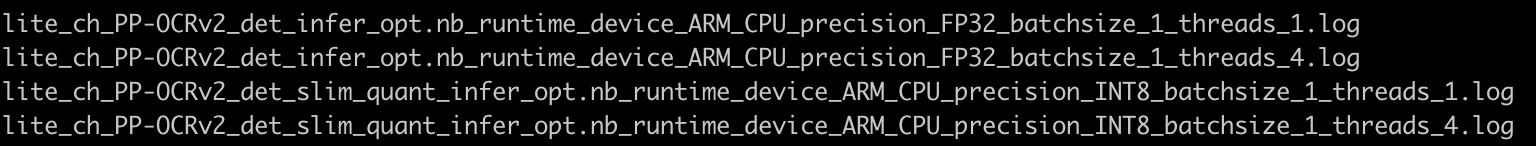

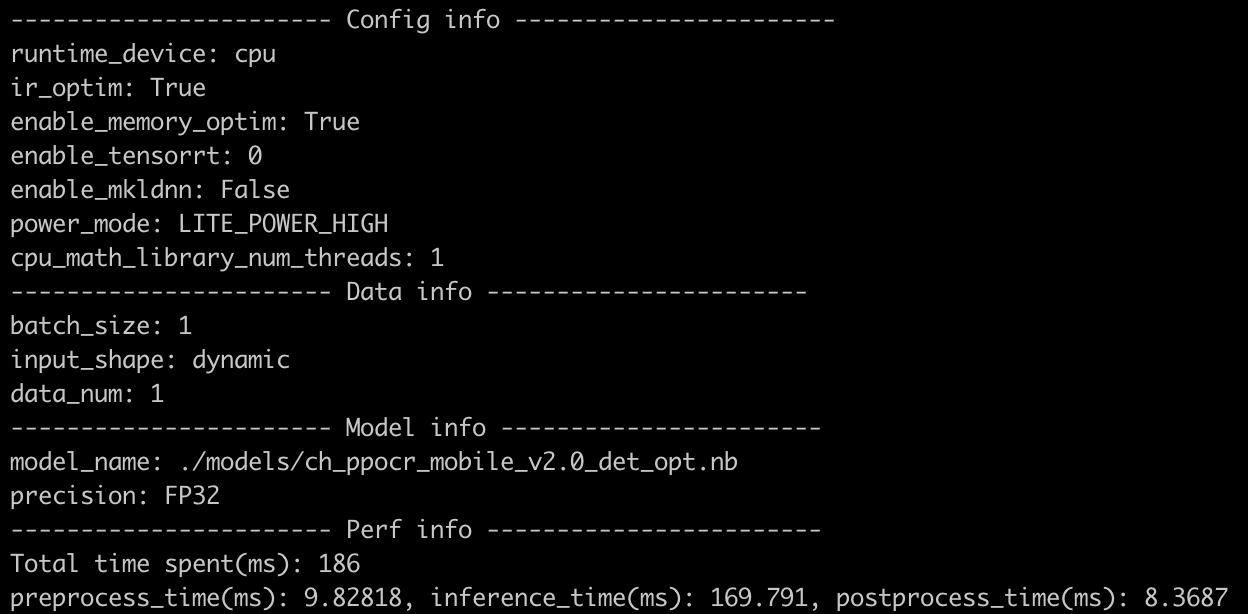

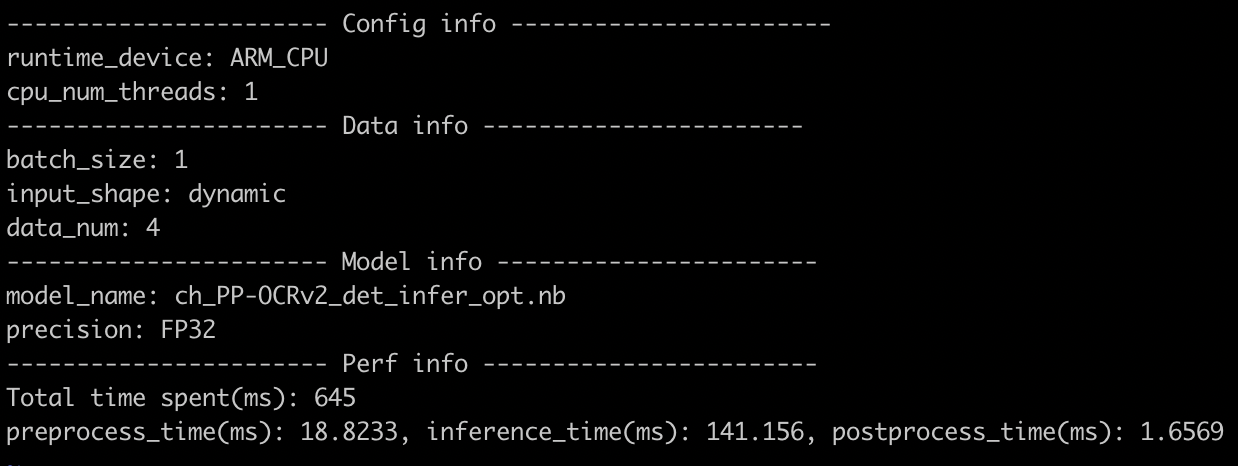

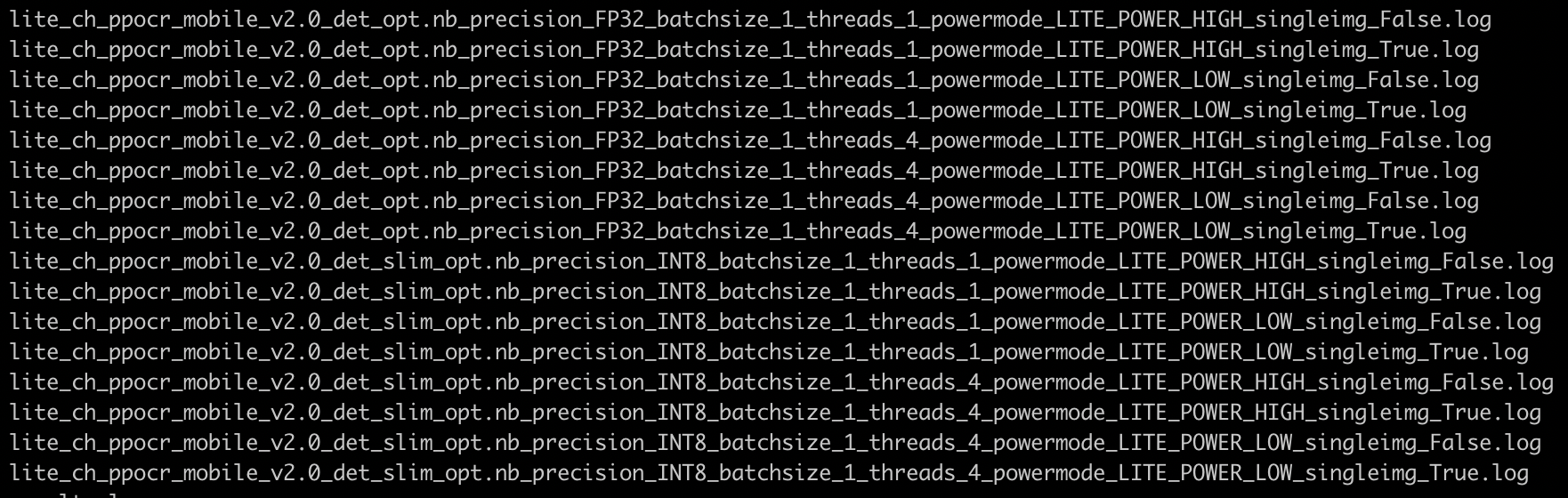

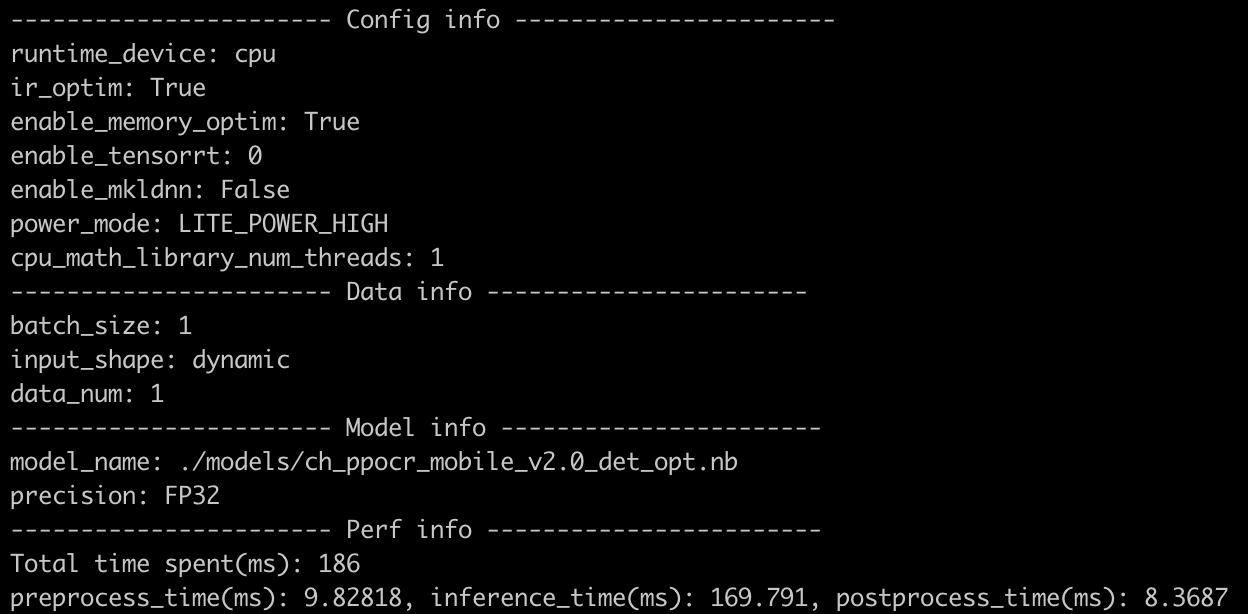

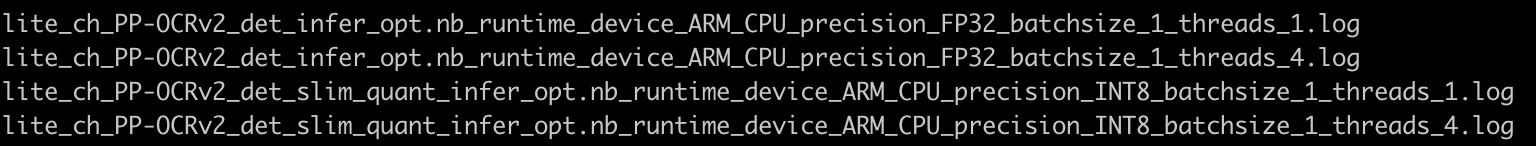

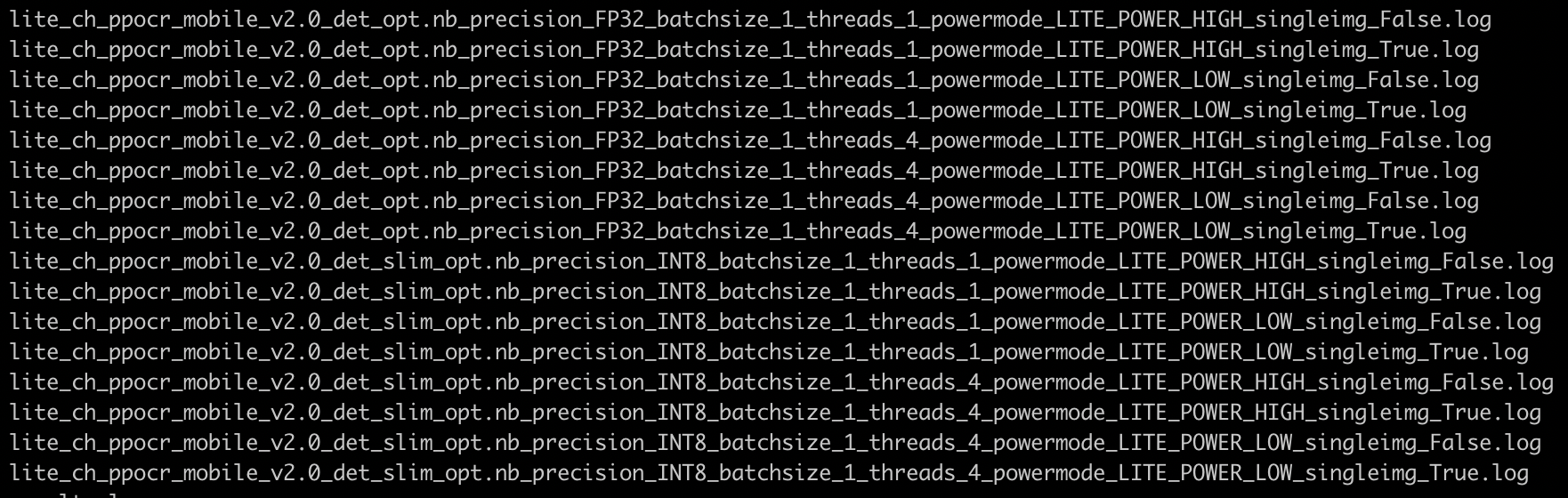

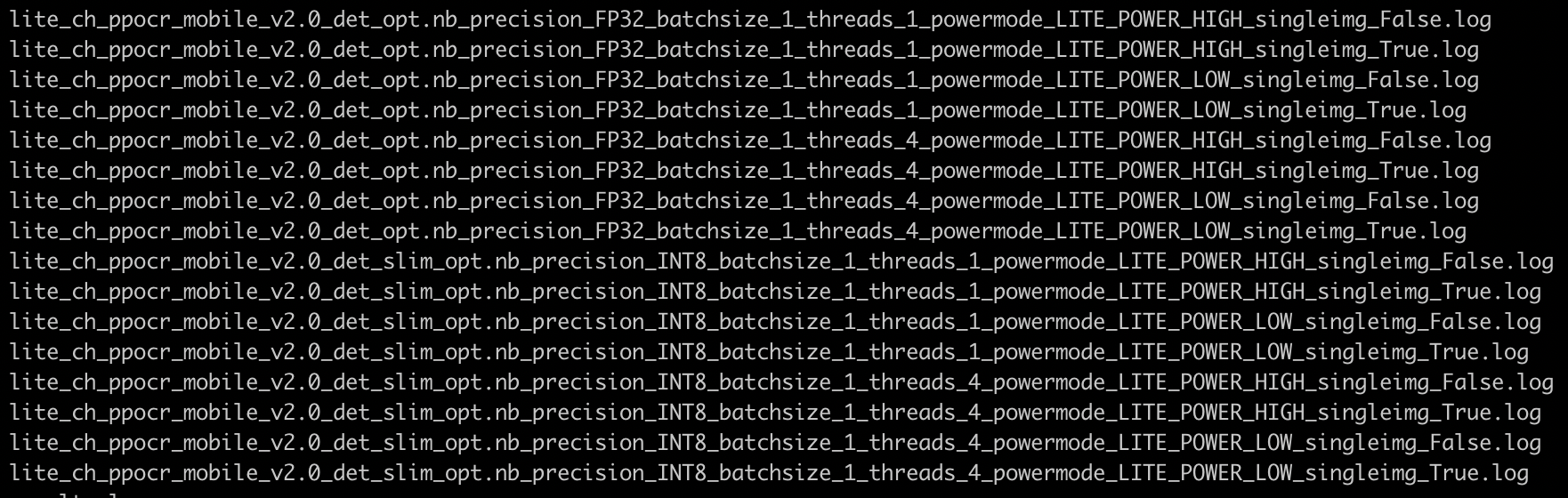

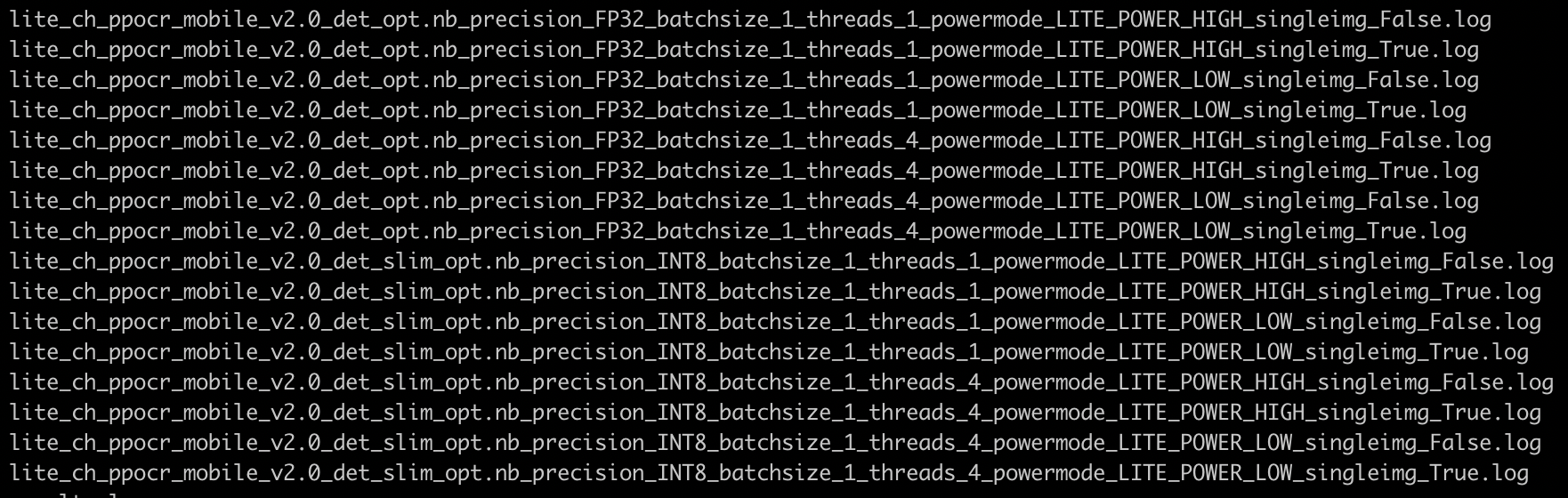

test_tipc/prepare_lite.sh

0 → 100644

289.9 KB

209.8 KB

775.5 KB

168.7 KB