diff --git a/README_ch.md b/README_ch.md

index c9f71fa28727dc0a1ea4cd9677dcb859a9735ce7..9de3110531ae10004e3b29497a9baeb5fb6fc449 100755

--- a/README_ch.md

+++ b/README_ch.md

@@ -126,11 +126,7 @@ PaddleOCR旨在打造一套丰富、领先、且实用的OCR工具库,助力

- [文本识别算法](./doc/doc_ch/algorithm_overview.md#12-%E6%96%87%E6%9C%AC%E8%AF%86%E5%88%AB%E7%AE%97%E6%B3%95)

- [端到端算法](./doc/doc_ch/algorithm_overview.md#2-%E6%96%87%E6%9C%AC%E8%AF%86%E5%88%AB%E7%AE%97%E6%B3%95)

- [使用PaddleOCR架构添加新算法](./doc/doc_ch/add_new_algorithm.md)

-- [场景应用](./doc/doc_ch/application.md)

- - [金融场景(表单/票据等)]()

- - [工业场景(电表度数/车牌等)]()

- - [教育场景(手写体/公式等)]()

- - [医疗场景(化验单等)]()

+- [场景应用](./applications)

- 数据标注与合成

- [半自动标注工具PPOCRLabel](./PPOCRLabel/README_ch.md)

- [数据合成工具Style-Text](./StyleText/README_ch.md)

diff --git "a/applications/\345\244\232\346\250\241\346\200\201\350\241\250\345\215\225\350\257\206\345\210\253.md" "b/applications/\345\244\232\346\250\241\346\200\201\350\241\250\345\215\225\350\257\206\345\210\253.md"

index e64a22e169482ae51cadf8b25d75c5d98651e80b..d47bbe77045d502d82a5a8d8b8eca685963e6380 100644

--- "a/applications/\345\244\232\346\250\241\346\200\201\350\241\250\345\215\225\350\257\206\345\210\253.md"

+++ "b/applications/\345\244\232\346\250\241\346\200\201\350\241\250\345\215\225\350\257\206\345\210\253.md"

@@ -16,14 +16,14 @@

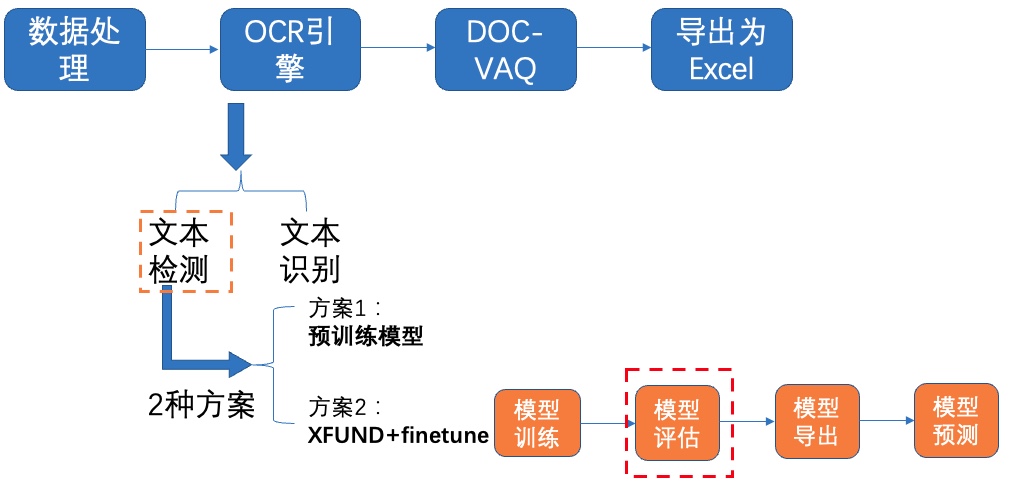

图1 多模态表单识别流程图

-注:欢迎再AIStudio领取免费算力体验线上实训,项目链接: [多模态表单识别](https://aistudio.baidu.com/aistudio/projectdetail/3815918)(配备Tesla V100、A100等高级算力资源)

+注:欢迎再AIStudio领取免费算力体验线上实训,项目链接: [多模态表单识别](https://aistudio.baidu.com/aistudio/projectdetail/3884375)(配备Tesla V100、A100等高级算力资源)

# 2 安装说明

-下载PaddleOCR源码,本项目中已经帮大家打包好的PaddleOCR(已经修改好配置文件),无需下载解压即可,只需安装依赖环境~

+下载PaddleOCR源码,上述AIStudio项目中已经帮大家打包好的PaddleOCR(已经修改好配置文件),无需下载解压即可,只需安装依赖环境~

```python

@@ -33,7 +33,7 @@

```python

# 如仍需安装or安装更新,可以执行以下步骤

-! git clone https://github.com/PaddlePaddle/PaddleOCR.git -b dygraph

+# ! git clone https://github.com/PaddlePaddle/PaddleOCR.git -b dygraph

# ! git clone https://gitee.com/PaddlePaddle/PaddleOCR

```

@@ -290,7 +290,7 @@ Eval.dataset.transforms.DetResizeForTest:评估尺寸,添加如下参数

图1 多模态表单识别流程图

-注:欢迎再AIStudio领取免费算力体验线上实训,项目链接: [多模态表单识别](https://aistudio.baidu.com/aistudio/projectdetail/3815918)(配备Tesla V100、A100等高级算力资源)

+注:欢迎再AIStudio领取免费算力体验线上实训,项目链接: [多模态表单识别](https://aistudio.baidu.com/aistudio/projectdetail/3884375)(配备Tesla V100、A100等高级算力资源)

# 2 安装说明

-下载PaddleOCR源码,本项目中已经帮大家打包好的PaddleOCR(已经修改好配置文件),无需下载解压即可,只需安装依赖环境~

+下载PaddleOCR源码,上述AIStudio项目中已经帮大家打包好的PaddleOCR(已经修改好配置文件),无需下载解压即可,只需安装依赖环境~

```python

@@ -33,7 +33,7 @@

```python

# 如仍需安装or安装更新,可以执行以下步骤

-! git clone https://github.com/PaddlePaddle/PaddleOCR.git -b dygraph

+# ! git clone https://github.com/PaddlePaddle/PaddleOCR.git -b dygraph

# ! git clone https://gitee.com/PaddlePaddle/PaddleOCR

```

@@ -290,7 +290,7 @@ Eval.dataset.transforms.DetResizeForTest:评估尺寸,添加如下参数

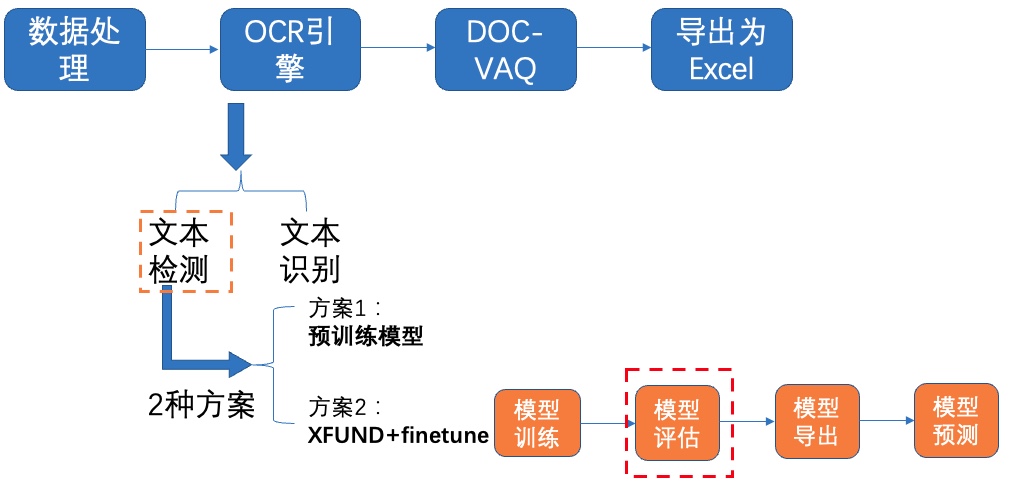

图8 文本检测方案2-模型评估

-使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/ch_db_mv3-student1600-finetune/best_accuracy`

+使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/ch_db_mv3-student1600-finetune/best_accuracy`,[模型下载地址](https://paddleocr.bj.bcebos.com/fanliku/sheet_recognition/ch_db_mv3-student1600-finetune.zip)

```python

@@ -538,7 +538,7 @@ Train.dataset.ratio_list:动态采样

图16 文本识别方案3-模型评估

-使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/rec_mobile_pp-OCRv2-student-readldata/best_accuracy`

+使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/rec_mobile_pp-OCRv2-student-readldata/best_accuracy`,[模型下载地址](https://paddleocr.bj.bcebos.com/fanliku/sheet_recognition/rec_mobile_pp-OCRv2-student-realdata.zip)

```python

diff --git a/doc/doc_ch/application.md b/doc/doc_ch/application.md

deleted file mode 100644

index 6dd465f9e71951bfbc1f749b0ca93d66cbfeb220..0000000000000000000000000000000000000000

--- a/doc/doc_ch/application.md

+++ /dev/null

@@ -1 +0,0 @@

-# 场景应用

\ No newline at end of file

diff --git a/doc/doc_en/ocr_book_en.md b/doc/doc_en/ocr_book_en.md

index 91a759cbc2be4fca9d1ec698ea3774a9f1ebeeda..b0455fe61afe8ae456f224e57d346b1fed553eb4 100644

--- a/doc/doc_en/ocr_book_en.md

+++ b/doc/doc_en/ocr_book_en.md

@@ -1,6 +1,6 @@

# E-book: *Dive Into OCR*

-"Dive Into OCR" is a textbook that combines OCR theory and practice, written by the PaddleOCR team, Chen Zhineng, a Junior Research Fellow at Fudan University, Huang Wenhui, a senior expert in the field of vision at China Mobile Research Institute, and other industry-university-research colleagues, as well as OCR developers. The main features are as follows:

+"Dive Into OCR" is a textbook that combines OCR theory and practice, written by the PaddleOCR team, Chen Zhineng, a Pre-tenure Professor at Fudan University, Huang Wenhui, a senior expert in the field of vision at China Mobile Research Institute, and other industry-university-research colleagues, as well as OCR developers. The main features are as follows:

- OCR full-stack technology covering text detection, recognition and document analysis

- Closely integrate theory and practice, cross the code implementation gap, and supporting instructional videos

diff --git a/paddleocr.py b/paddleocr.py

index f7871db6470c75db82e8251dff5361c099c4adda..9eff2d0f36bc2b092191abd891426088a4b76ded 100644

--- a/paddleocr.py

+++ b/paddleocr.py

@@ -47,8 +47,8 @@ __all__ = [

]

SUPPORT_DET_MODEL = ['DB']

-VERSION = '2.5.0.1'

-SUPPORT_REC_MODEL = ['CRNN']

+VERSION = '2.5.0.2'

+SUPPORT_REC_MODEL = ['CRNN', 'SVTR_LCNet']

BASE_DIR = os.path.expanduser("~/.paddleocr/")

DEFAULT_OCR_MODEL_VERSION = 'PP-OCRv3'

diff --git a/ppstructure/docs/table/recovery.jpg b/ppstructure/docs/table/recovery.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..bee2e2fb3499ec4b348e2b2f1475a87c9c562190

Binary files /dev/null and b/ppstructure/docs/table/recovery.jpg differ

diff --git a/ppstructure/predict_system.py b/ppstructure/predict_system.py

index 7f18fcdf8e6b57be6e129f3271f5bb583f4da616..b0ede5f3a1b88df6efed53d7ca33a696bc7a7fff 100644

--- a/ppstructure/predict_system.py

+++ b/ppstructure/predict_system.py

@@ -23,6 +23,7 @@ sys.path.append(os.path.abspath(os.path.join(__dir__, '..')))

os.environ["FLAGS_allocator_strategy"] = 'auto_growth'

import cv2

import json

+import numpy as np

import time

import logging

from copy import deepcopy

@@ -33,6 +34,7 @@ from ppocr.utils.logging import get_logger

from tools.infer.predict_system import TextSystem

from ppstructure.table.predict_table import TableSystem, to_excel

from ppstructure.utility import parse_args, draw_structure_result

+from ppstructure.recovery.docx import convert_info_docx

logger = get_logger()

@@ -104,7 +106,12 @@ class StructureSystem(object):

return_ocr_result_in_table)

else:

if self.text_system is not None:

- filter_boxes, filter_rec_res = self.text_system(roi_img)

+ if args.recovery:

+ wht_im = np.ones(ori_im.shape, dtype=ori_im.dtype)

+ wht_im[y1:y2, x1:x2, :] = roi_img

+ filter_boxes, filter_rec_res = self.text_system(wht_im)

+ else:

+ filter_boxes, filter_rec_res = self.text_system(roi_img)

# remove style char

style_token = [

'

图8 文本检测方案2-模型评估

-使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/ch_db_mv3-student1600-finetune/best_accuracy`

+使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/ch_db_mv3-student1600-finetune/best_accuracy`,[模型下载地址](https://paddleocr.bj.bcebos.com/fanliku/sheet_recognition/ch_db_mv3-student1600-finetune.zip)

```python

@@ -538,7 +538,7 @@ Train.dataset.ratio_list:动态采样

图16 文本识别方案3-模型评估

-使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/rec_mobile_pp-OCRv2-student-readldata/best_accuracy`

+使用训练好的模型进行评估,更新模型路径`Global.checkpoints`,这里为大家提供训练好的模型`./pretrain/rec_mobile_pp-OCRv2-student-readldata/best_accuracy`,[模型下载地址](https://paddleocr.bj.bcebos.com/fanliku/sheet_recognition/rec_mobile_pp-OCRv2-student-realdata.zip)

```python

diff --git a/doc/doc_ch/application.md b/doc/doc_ch/application.md

deleted file mode 100644

index 6dd465f9e71951bfbc1f749b0ca93d66cbfeb220..0000000000000000000000000000000000000000

--- a/doc/doc_ch/application.md

+++ /dev/null

@@ -1 +0,0 @@

-# 场景应用

\ No newline at end of file

diff --git a/doc/doc_en/ocr_book_en.md b/doc/doc_en/ocr_book_en.md

index 91a759cbc2be4fca9d1ec698ea3774a9f1ebeeda..b0455fe61afe8ae456f224e57d346b1fed553eb4 100644

--- a/doc/doc_en/ocr_book_en.md

+++ b/doc/doc_en/ocr_book_en.md

@@ -1,6 +1,6 @@

# E-book: *Dive Into OCR*

-"Dive Into OCR" is a textbook that combines OCR theory and practice, written by the PaddleOCR team, Chen Zhineng, a Junior Research Fellow at Fudan University, Huang Wenhui, a senior expert in the field of vision at China Mobile Research Institute, and other industry-university-research colleagues, as well as OCR developers. The main features are as follows:

+"Dive Into OCR" is a textbook that combines OCR theory and practice, written by the PaddleOCR team, Chen Zhineng, a Pre-tenure Professor at Fudan University, Huang Wenhui, a senior expert in the field of vision at China Mobile Research Institute, and other industry-university-research colleagues, as well as OCR developers. The main features are as follows:

- OCR full-stack technology covering text detection, recognition and document analysis

- Closely integrate theory and practice, cross the code implementation gap, and supporting instructional videos

diff --git a/paddleocr.py b/paddleocr.py

index f7871db6470c75db82e8251dff5361c099c4adda..9eff2d0f36bc2b092191abd891426088a4b76ded 100644

--- a/paddleocr.py

+++ b/paddleocr.py

@@ -47,8 +47,8 @@ __all__ = [

]

SUPPORT_DET_MODEL = ['DB']

-VERSION = '2.5.0.1'

-SUPPORT_REC_MODEL = ['CRNN']

+VERSION = '2.5.0.2'

+SUPPORT_REC_MODEL = ['CRNN', 'SVTR_LCNet']

BASE_DIR = os.path.expanduser("~/.paddleocr/")

DEFAULT_OCR_MODEL_VERSION = 'PP-OCRv3'

diff --git a/ppstructure/docs/table/recovery.jpg b/ppstructure/docs/table/recovery.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..bee2e2fb3499ec4b348e2b2f1475a87c9c562190

Binary files /dev/null and b/ppstructure/docs/table/recovery.jpg differ

diff --git a/ppstructure/predict_system.py b/ppstructure/predict_system.py

index 7f18fcdf8e6b57be6e129f3271f5bb583f4da616..b0ede5f3a1b88df6efed53d7ca33a696bc7a7fff 100644

--- a/ppstructure/predict_system.py

+++ b/ppstructure/predict_system.py

@@ -23,6 +23,7 @@ sys.path.append(os.path.abspath(os.path.join(__dir__, '..')))

os.environ["FLAGS_allocator_strategy"] = 'auto_growth'

import cv2

import json

+import numpy as np

import time

import logging

from copy import deepcopy

@@ -33,6 +34,7 @@ from ppocr.utils.logging import get_logger

from tools.infer.predict_system import TextSystem

from ppstructure.table.predict_table import TableSystem, to_excel

from ppstructure.utility import parse_args, draw_structure_result

+from ppstructure.recovery.docx import convert_info_docx

logger = get_logger()

@@ -104,7 +106,12 @@ class StructureSystem(object):

return_ocr_result_in_table)

else:

if self.text_system is not None:

- filter_boxes, filter_rec_res = self.text_system(roi_img)

+ if args.recovery:

+ wht_im = np.ones(ori_im.shape, dtype=ori_im.dtype)

+ wht_im[y1:y2, x1:x2, :] = roi_img

+ filter_boxes, filter_rec_res = self.text_system(wht_im)

+ else:

+ filter_boxes, filter_rec_res = self.text_system(roi_img)

# remove style char

style_token = [

'', '', '', '', '',

@@ -118,7 +125,8 @@ class StructureSystem(object):

for token in style_token:

if token in rec_str:

rec_str = rec_str.replace(token, '')

- box += [x1, y1]

+ if not args.recovery:

+ box += [x1, y1]

res.append({

'text': rec_str,

'confidence': float(rec_conf),

@@ -192,6 +200,8 @@ def main(args):

# img_save_path = os.path.join(save_folder, img_name + '.jpg')

cv2.imwrite(img_save_path, draw_img)

logger.info('result save to {}'.format(img_save_path))

+ if args.recovery:

+ convert_info_docx(img, res, save_folder, img_name)

elapse = time.time() - starttime

logger.info("Predict time : {:.3f}s".format(elapse))

diff --git a/ppstructure/recovery/README.md b/ppstructure/recovery/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..883dbef3e829dfa213644b610af1ca279dac8641

--- /dev/null

+++ b/ppstructure/recovery/README.md

@@ -0,0 +1,86 @@

+English | [简体中文](README_ch.md)

+

+- [Getting Started](#getting-started)

+ - [1. Introduction](#1)

+ - [2. Install](#2)

+ - [2.1 Installation dependencies](#2.1)

+ - [2.2 Install PaddleOCR](#2.2)

+ - [3. Quick Start](#3)

+

+

+

+## 1. Introduction

+

+Layout recovery means that after OCR recognition, the content is still arranged like the original document pictures, and the paragraphs are output to word document in the same order.

+

+Layout recovery combines [layout analysis](../layout/README.md)、[table recognition](../table/README.md) to better recover images, tables, titles, etc.

+The following figure shows the result:

+

+

+

+

+

+

+

+ +

+