Merge branch 'develop' of https://github.com/PaddlePaddle/Paddle into image_v2

cmake/cross_compiling/host.cmake

0 → 100644

demo/mnist/light_mnist.py

0 → 100644

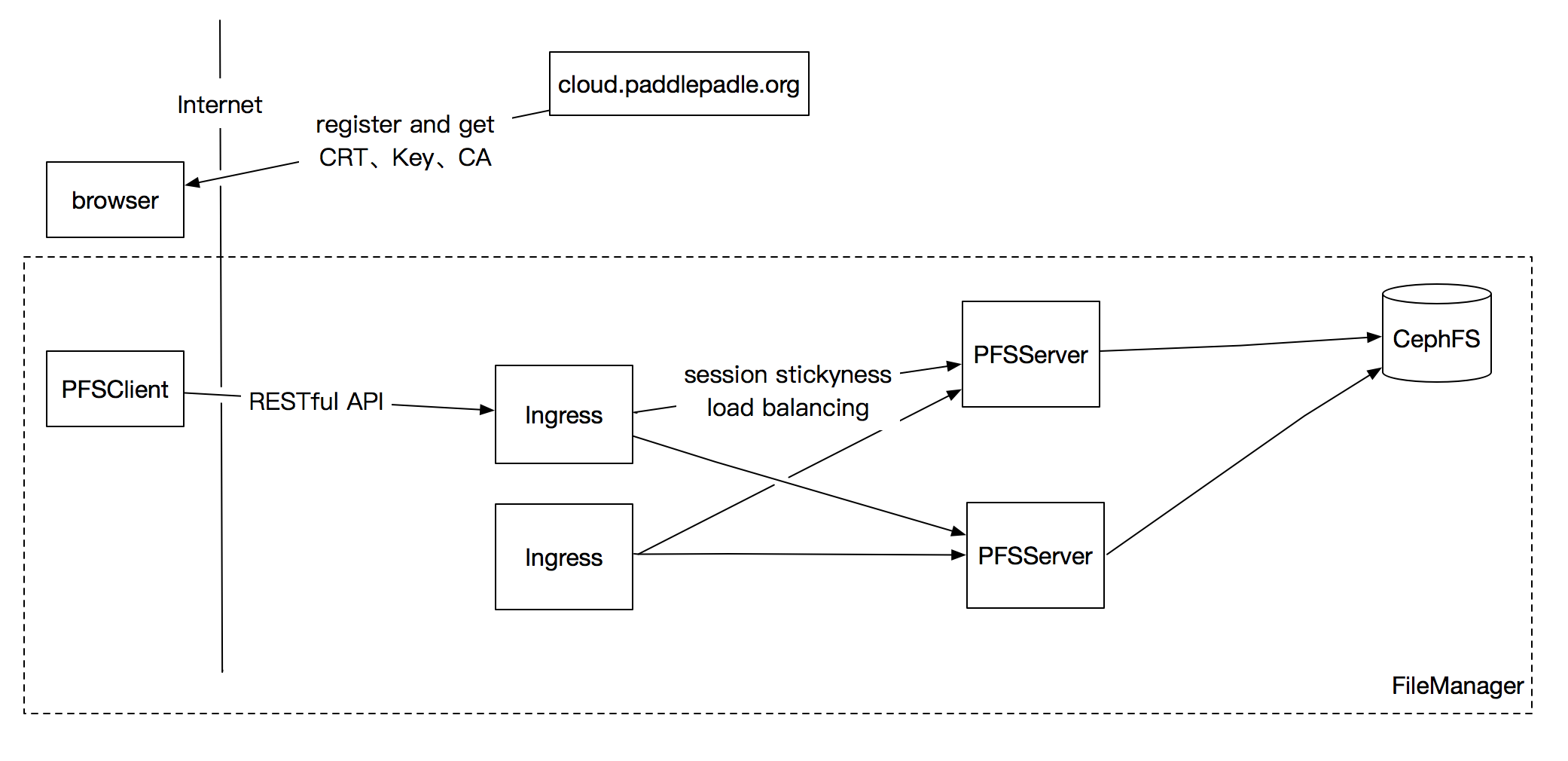

doc/design/file_manager/README.md

0 → 100644

File added

141.7 KB