Merge pull request #15 from frankwhzhang/master

fix model for new version



File moved

models/multitask/mmoe/data/run.sh

File mode changed: 0 → 100644


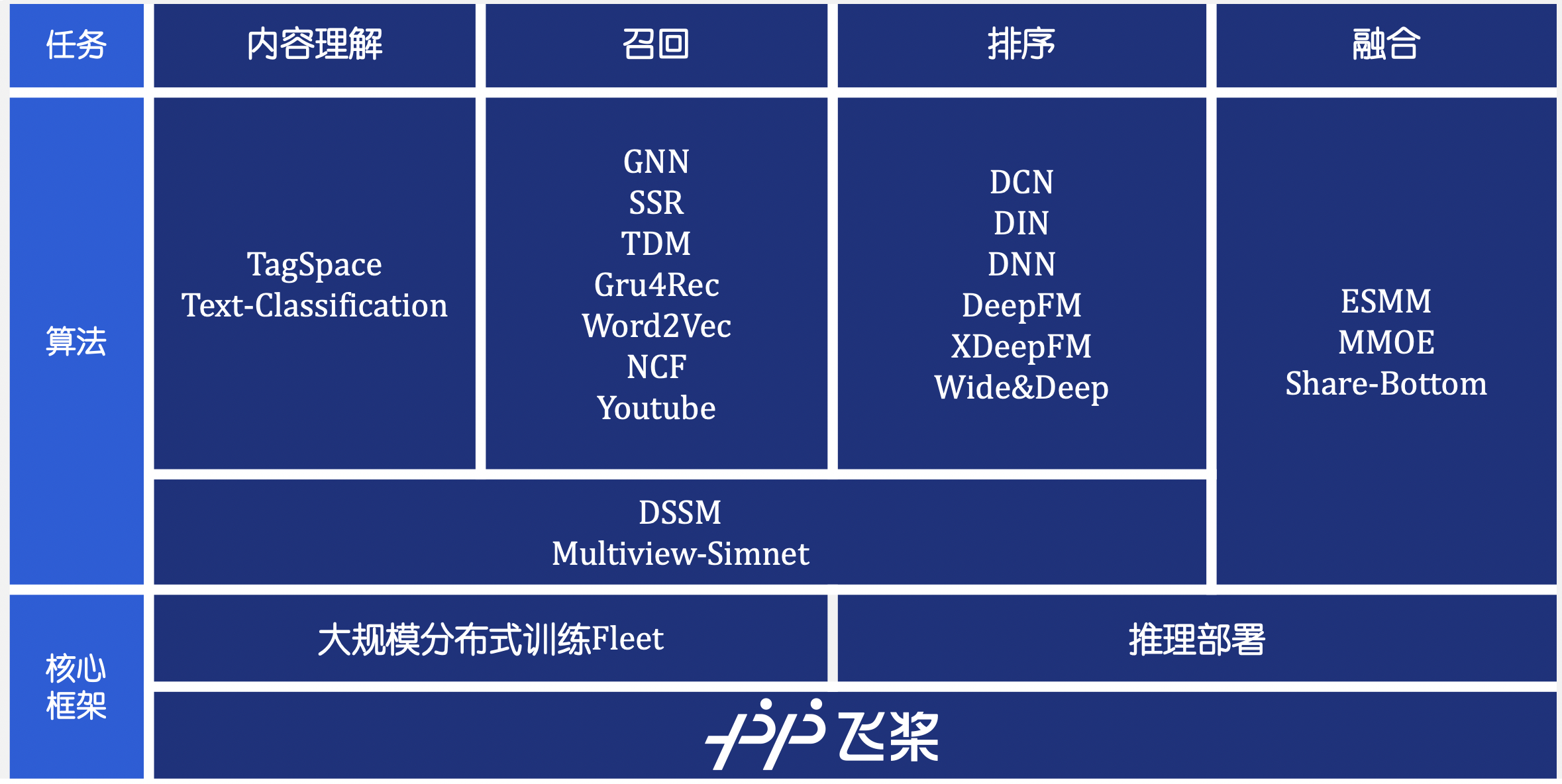

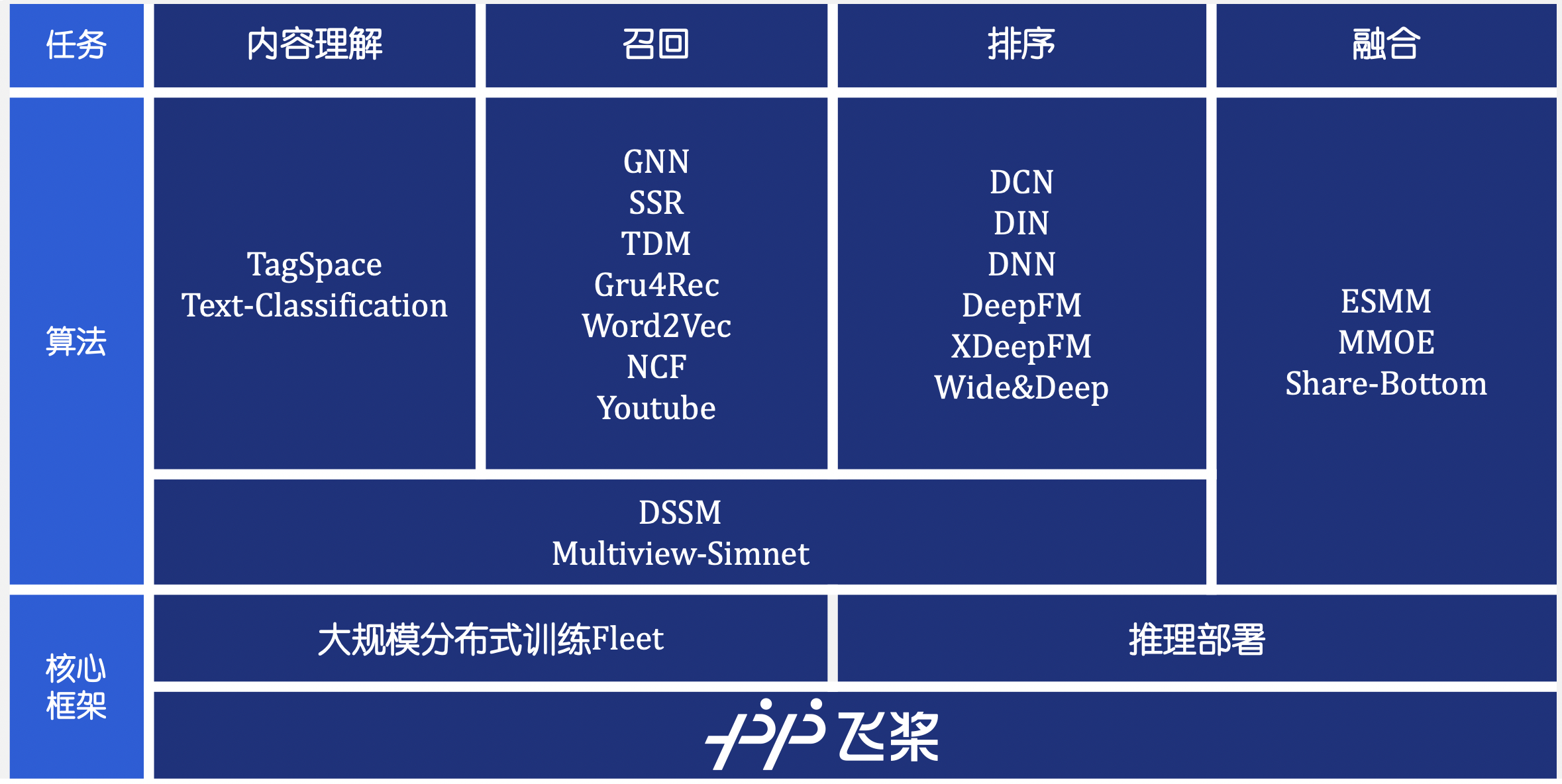

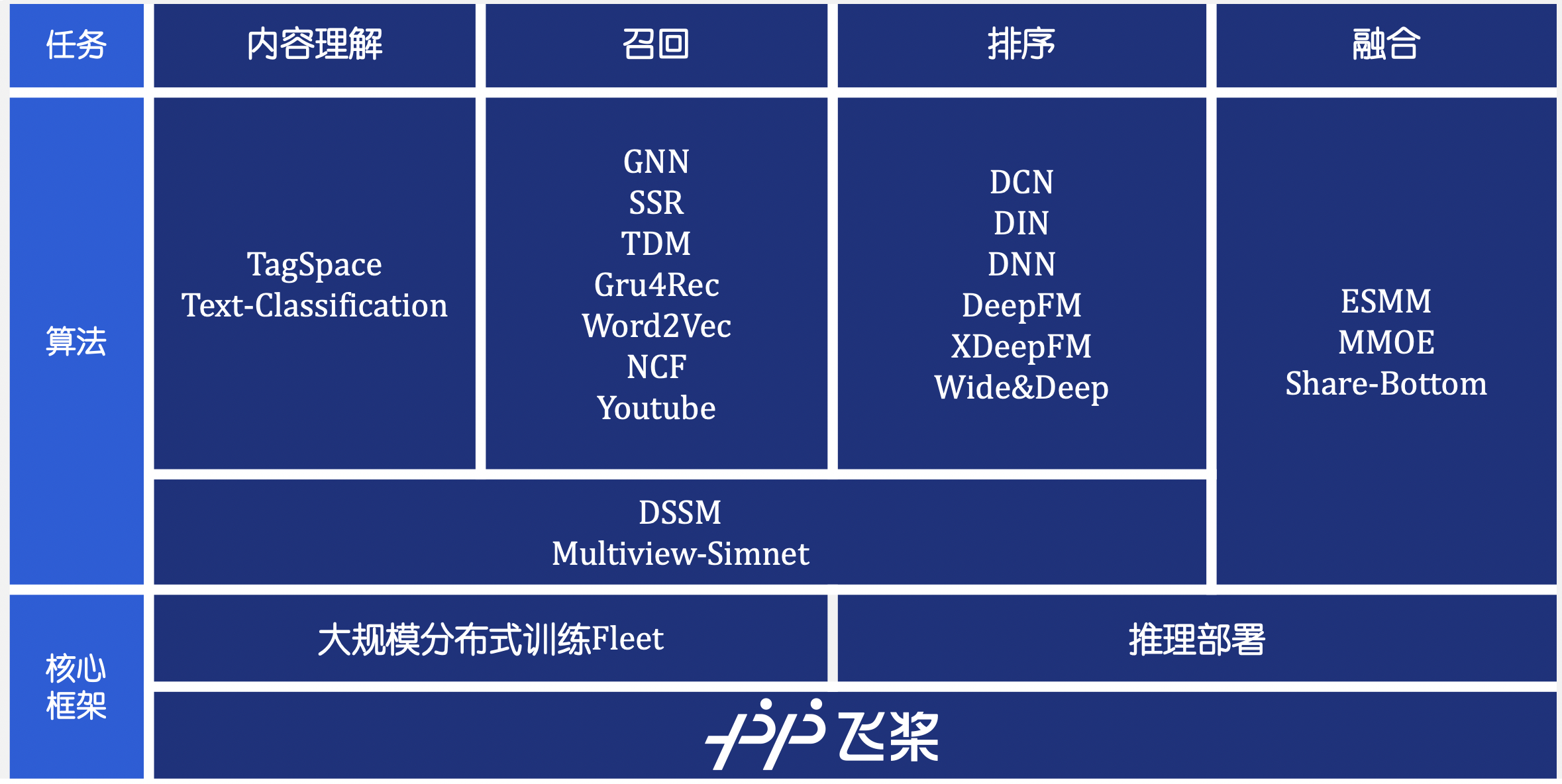

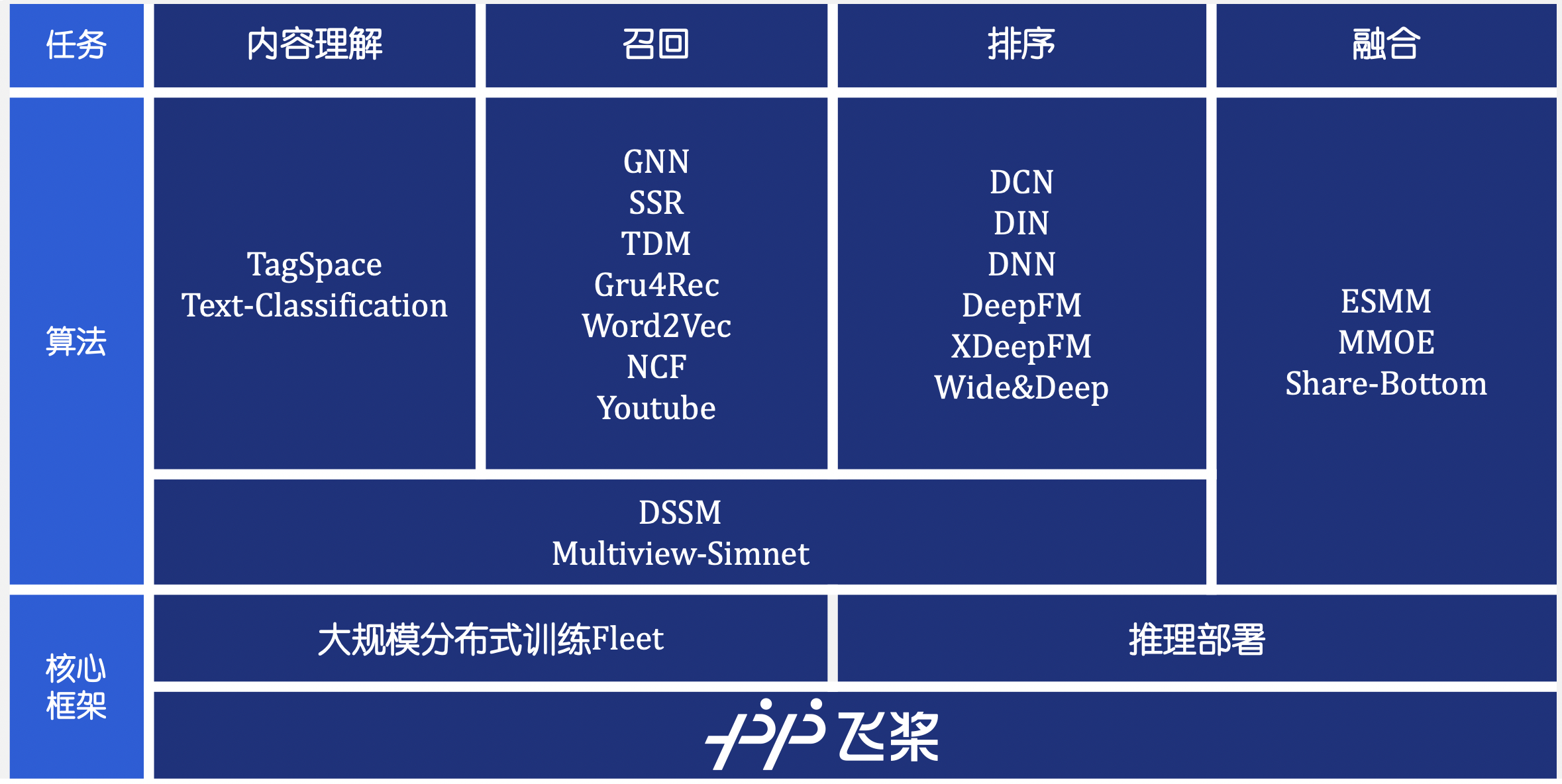

698.6 KB

217.7 KB