Merge branch 'develop' of https://github.com/PaddlePaddle/Paddle into dev_add_random_crop_op

Showing

benchmark/cluster/README.md

已删除

100644 → 0

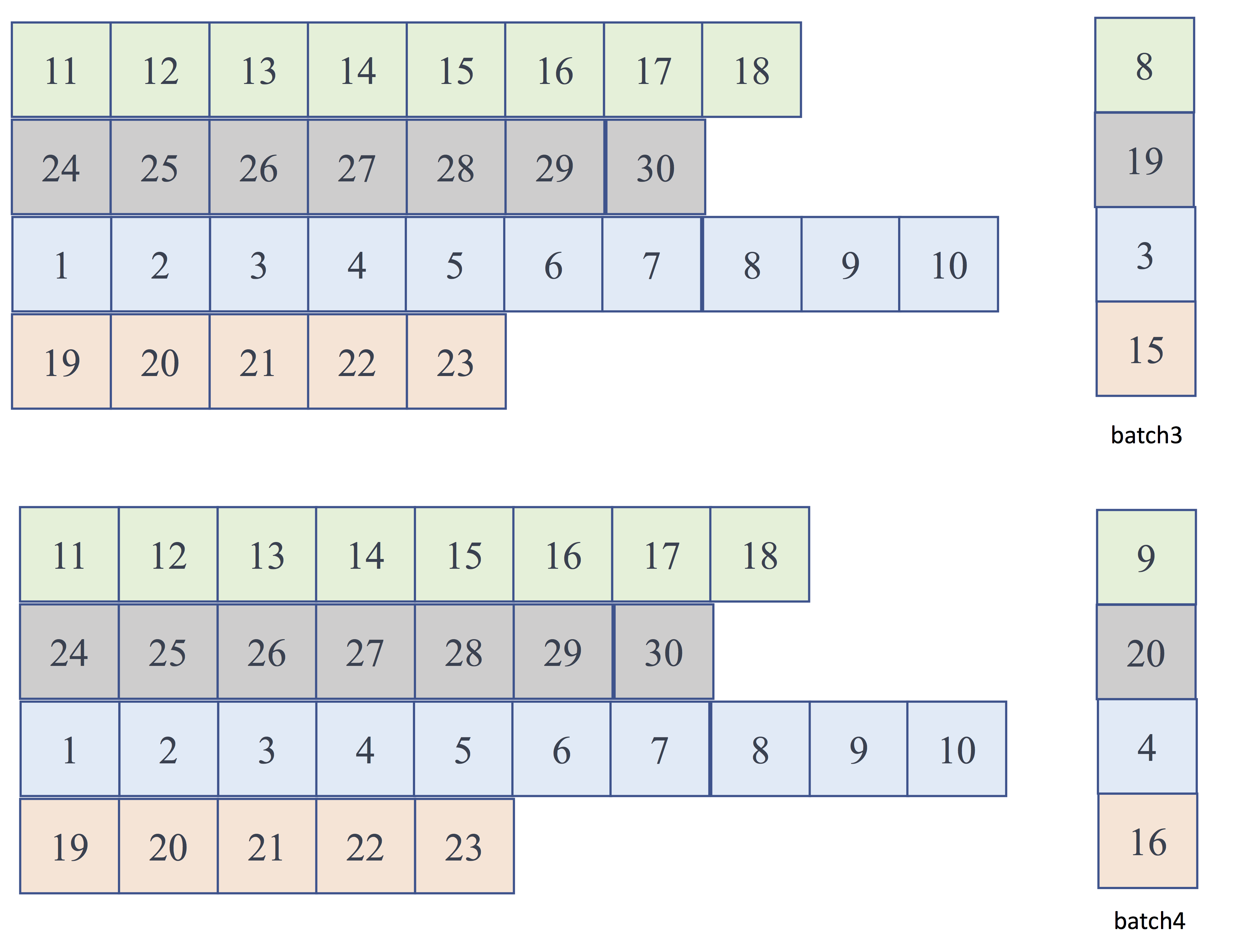

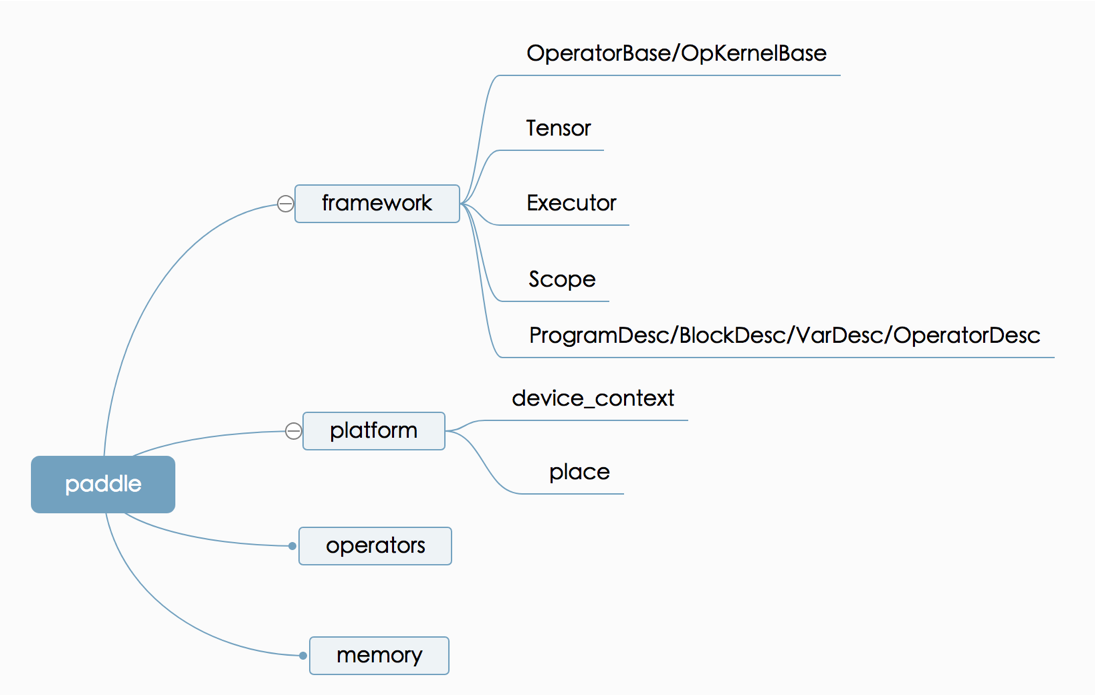

doc/fluid/images/1.png

0 → 100644

147.5 KB

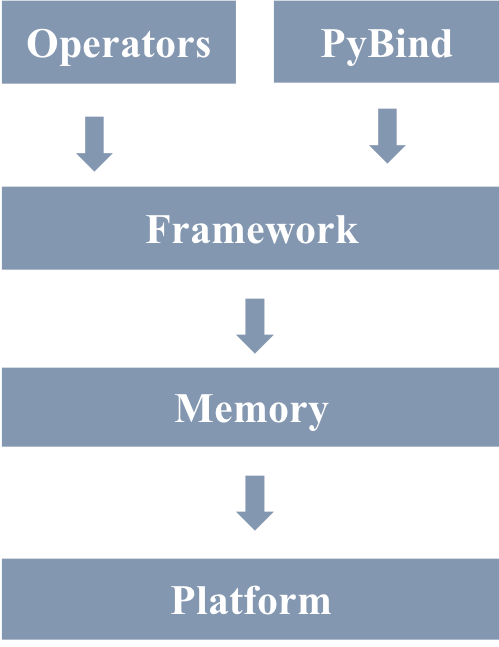

doc/fluid/images/2.png

0 → 100644

420.3 KB

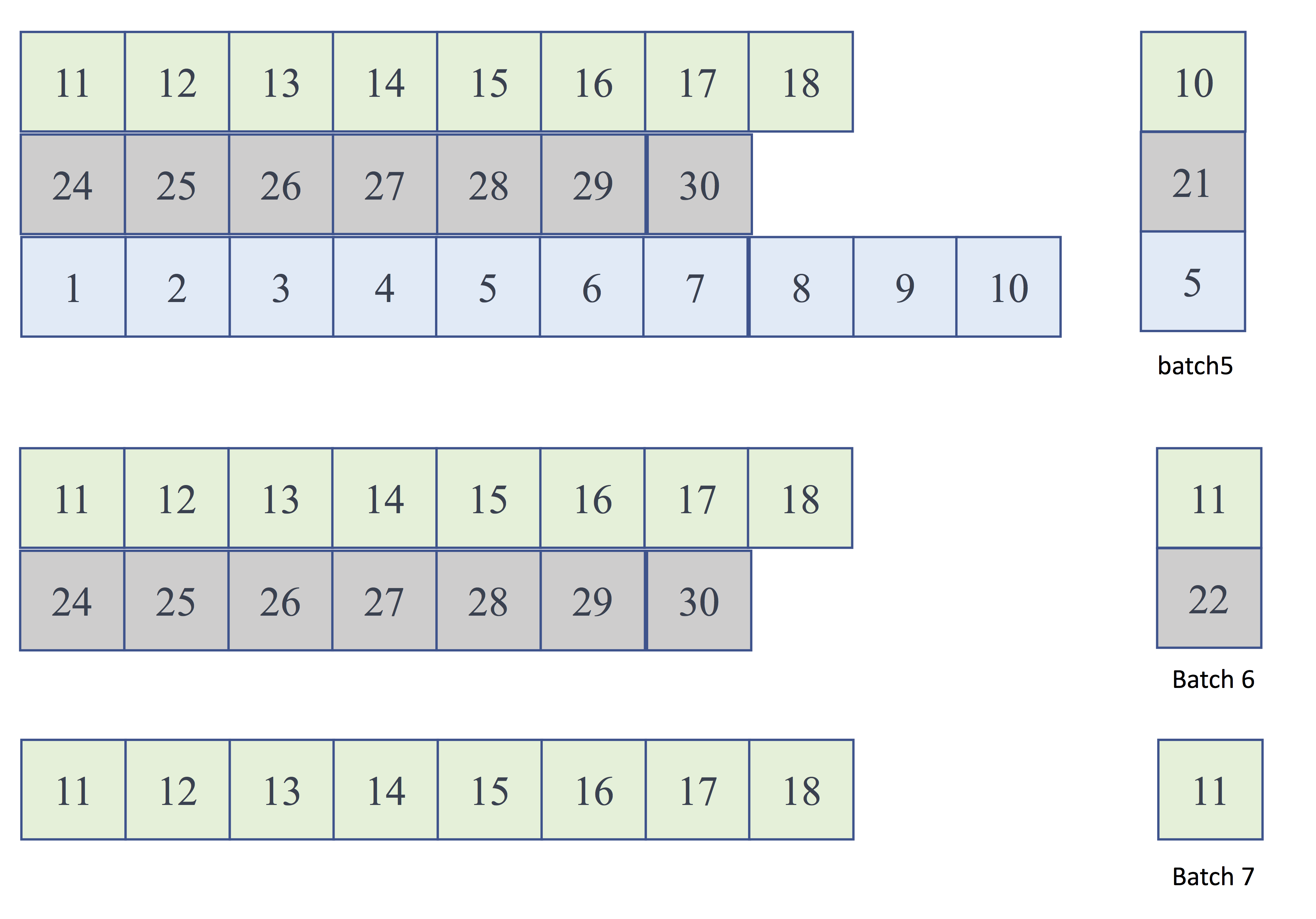

doc/fluid/images/3.png

0 → 100644

416.8 KB

doc/fluid/images/4.png

0 → 100644

359.9 KB

doc/fluid/images/LoDTensor.png

0 → 100644

109.1 KB

121.8 KB

doc/fluid/images/executor.png

0 → 100644

188.7 KB

188.3 KB

122.5 KB

28.2 KB

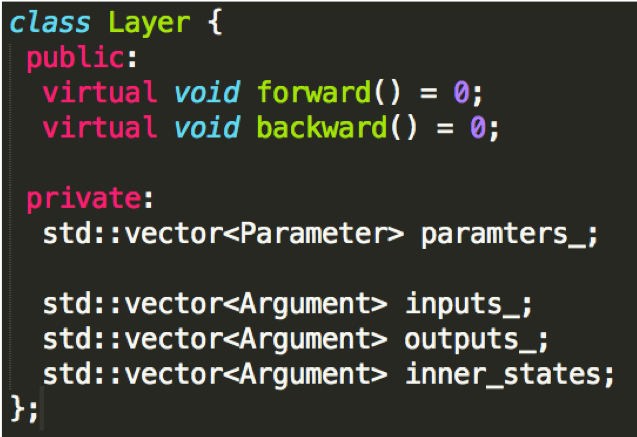

doc/fluid/images/layer.png

0 → 100644

122.3 KB

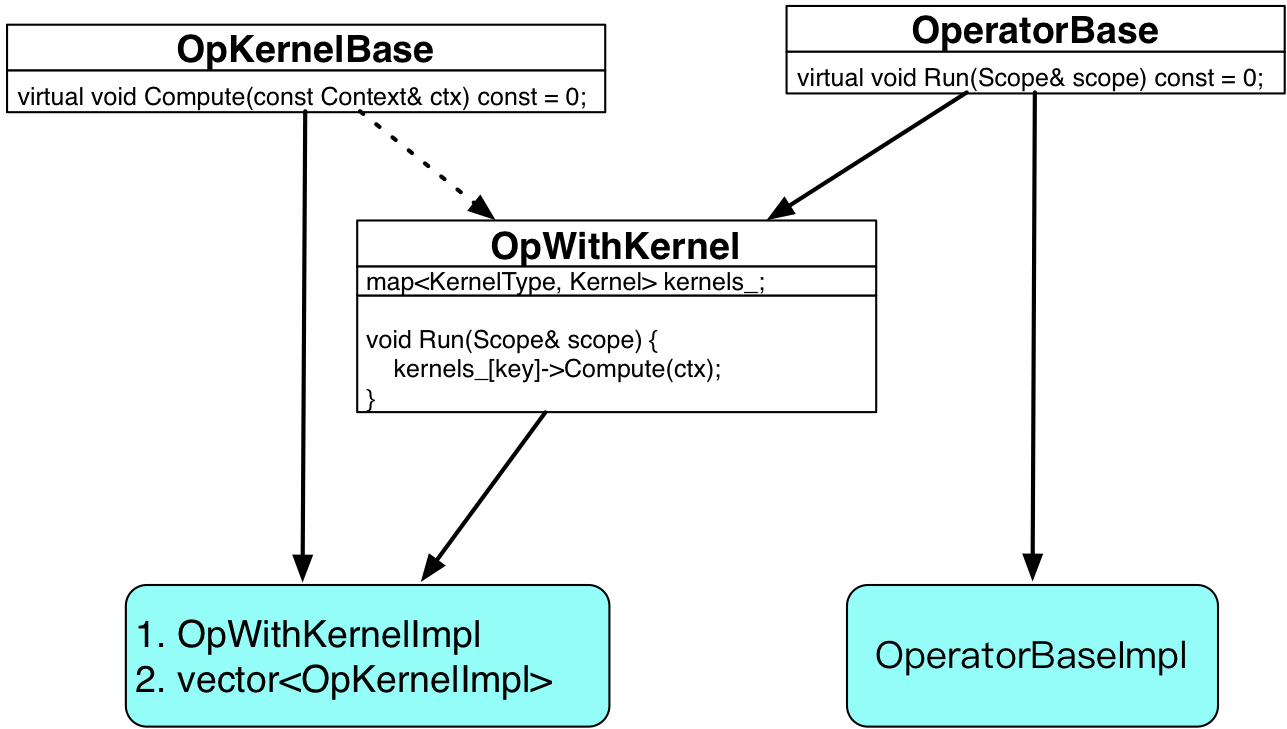

doc/fluid/images/operator1.png

0 → 100644

108.9 KB

doc/fluid/images/operator2.png

0 → 100644

137.7 KB

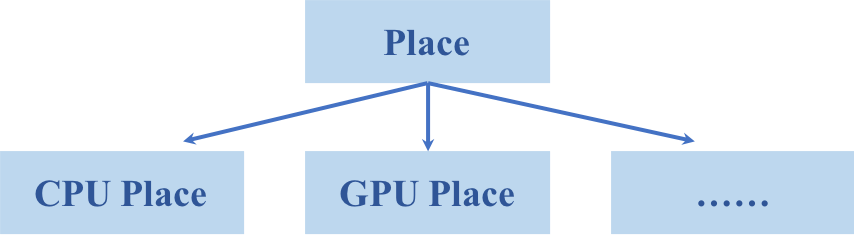

doc/fluid/images/place.png

0 → 100644

18.8 KB

91.3 KB

43.7 KB

41.6 KB

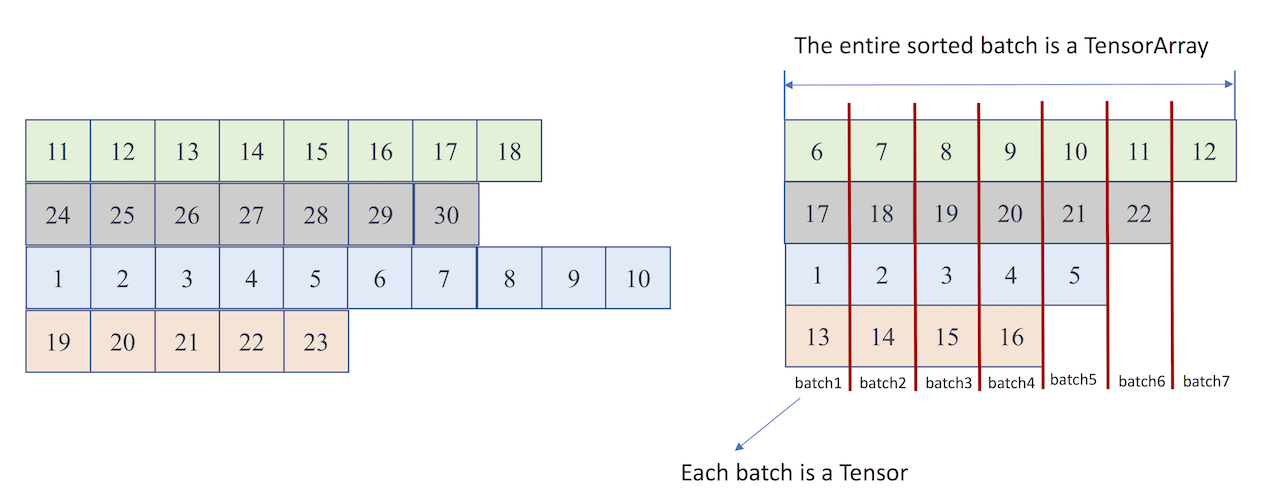

doc/fluid/images/raw_input.png

0 → 100644

341.5 KB

121.0 KB

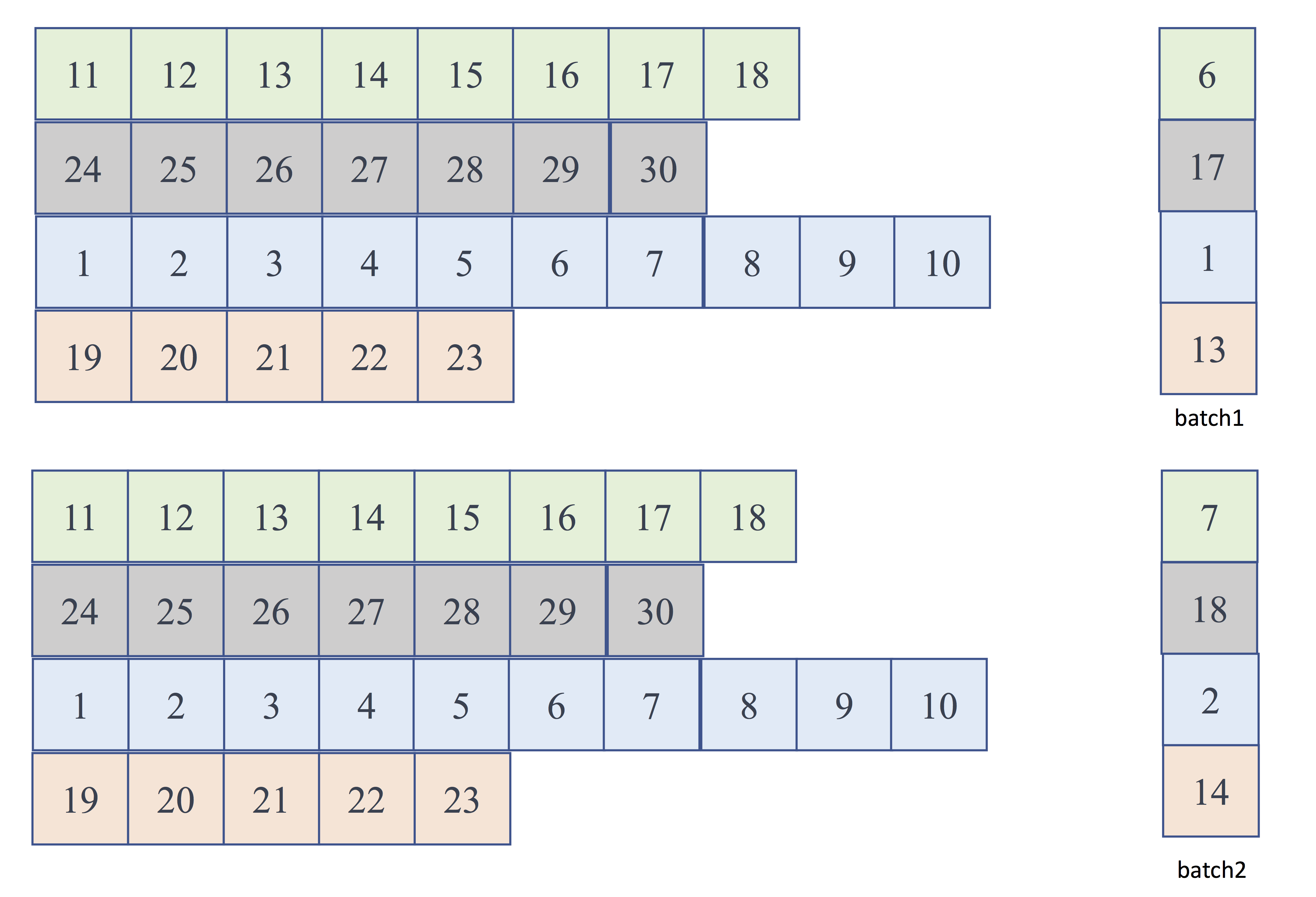

doc/fluid/images/sorted_input.png

0 → 100644

268.6 KB

doc/fluid/images/transpiler.png

0 → 100644

53.4 KB

95.7 KB

paddle/contrib/CMakeLists.txt

0 → 100644

paddle/scripts/docker/test.sh

已删除

100755 → 0

文件已删除