Figure 1. Architecture of Deep Speech 2 Network.

We do not have to stick to this 2-3-7-1-1-1 depth \[[2](#references)\]. Similar networks with different depths might also work well. In \[[1](#references)\], the authors use a different depth (e.g. 2-2-3-1-1-1) for their final experiments.

Key ingredients of the layers:

- **Data Layers**:
  - Frame sequences of the audio **spectrogram** (computed with FFT).
  - Token sequences of the **transcription** text (labels).
  - These two types of sequences do not have the same length, so a CTC-loss layer is required.
- **2D Convolution Layers**:
  - Not only temporal convolution, but also **frequency convolution**. Like a 2D image convolution, but with one variable-length dimension (the temporal dimension).
  - Striding is applied only in the first convolution layer.
  - No pooling in any convolution layer.
- **Uni-directional RNNs**:
  - Uni-directional + row convolution: for low-latency inference.
  - Bi-directional without row convolution: if inference latency is not a concern.
- **Row convolution**:
  - Looks only a few steps ahead into the features, instead of looking at the whole sequence as bi-directional RNNs do.
  - Not necessary with bi-directional RNNs.
  - "**Row**" means the convolution is done within each frequency dimension (row), and convolution kernels are not shared across rows.
- **Batch Normalization Layers**:
  - Added to all of the layers above (except the data and loss layers).
  - Sequence-wise normalization for RNNs: BatchNorm is applied only to the input-state projection, not to the state-state projection, for efficiency.
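
To make the sequence-wise normalization point concrete, here is a minimal pure-Python sketch. It assumes a single scalar feature per frame and a plain ReLU recurrence. The names `sequence_wise_batch_norm` and `rnn_forward` are hypothetical and are not part of the PaddlePaddle API. Statistics are computed over every time step of every sequence in the mini-batch, and only the input-state projection is normalized.

```python
import math


def sequence_wise_batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over all time steps of all sequences in a batch.

    batch: list of sequences (lists of floats); lengths may differ.
    """
    values = [x for seq in batch for x in seq]
    mean = sum(values) / len(values)
    var = sum((x - mean) ** 2 for x in values) / len(values)
    scale = gamma / math.sqrt(var + eps)
    return [[(x - mean) * scale + beta for x in seq] for seq in batch]


def rnn_forward(batch, w_in, w_rec):
    """Toy scalar RNN: h_t = relu(BN(w_in * x_t) + w_rec * h_{t-1})."""
    # Input-state projection: no dependency on h, so it can be computed
    # and normalized for all time steps at once.
    projected = [[w_in * x for x in seq] for seq in batch]
    normalized = sequence_wise_batch_norm(projected)

    outputs = []
    for seq in normalized:
        h = 0.0
        states = []
        for z in seq:
            # State-state projection (w_rec * h) is left un-normalized.
            h = max(0.0, z + w_rec * h)
            states.append(h)
        outputs.append(states)
    return outputs


if __name__ == "__main__":
    demo_batch = [[1.0, 2.0, 3.0], [4.0]]
    print(sequence_wise_batch_norm(demo_batch))
    print(rnn_forward(demo_batch, w_in=0.5, w_rec=0.1))
```

Because the normalized term does not depend on the recurrent state, it can be computed once per mini-batch outside the time loop, which is one way to read the efficiency argument above.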

Required Components | PaddlePaddle Support | Need to Develop

:------------------------------------- | :-------------------------------------- | :-----------------------

Data Layer I (Spectrogram) | Not supported yet. | TBD (Task 3)

Data Layer II (Transcription) | `paddle.data_type.integer_value_sequence` | -

2D Convolution Layer | `paddle.layer.image_conv_layer` | -

DataType Converter (vec2seq) | `paddle.layer.block_expand` | -

Bi-/Uni-directional RNNs | `paddle.layer.recurrent_group` | -

Row Convolution Layer | Not supported yet. | TBD (Task 4)

CTC-loss Layer | `paddle.layer.warp_ctc` | -

Batch Normalization Layer | `paddle.layer.batch_norm` | -

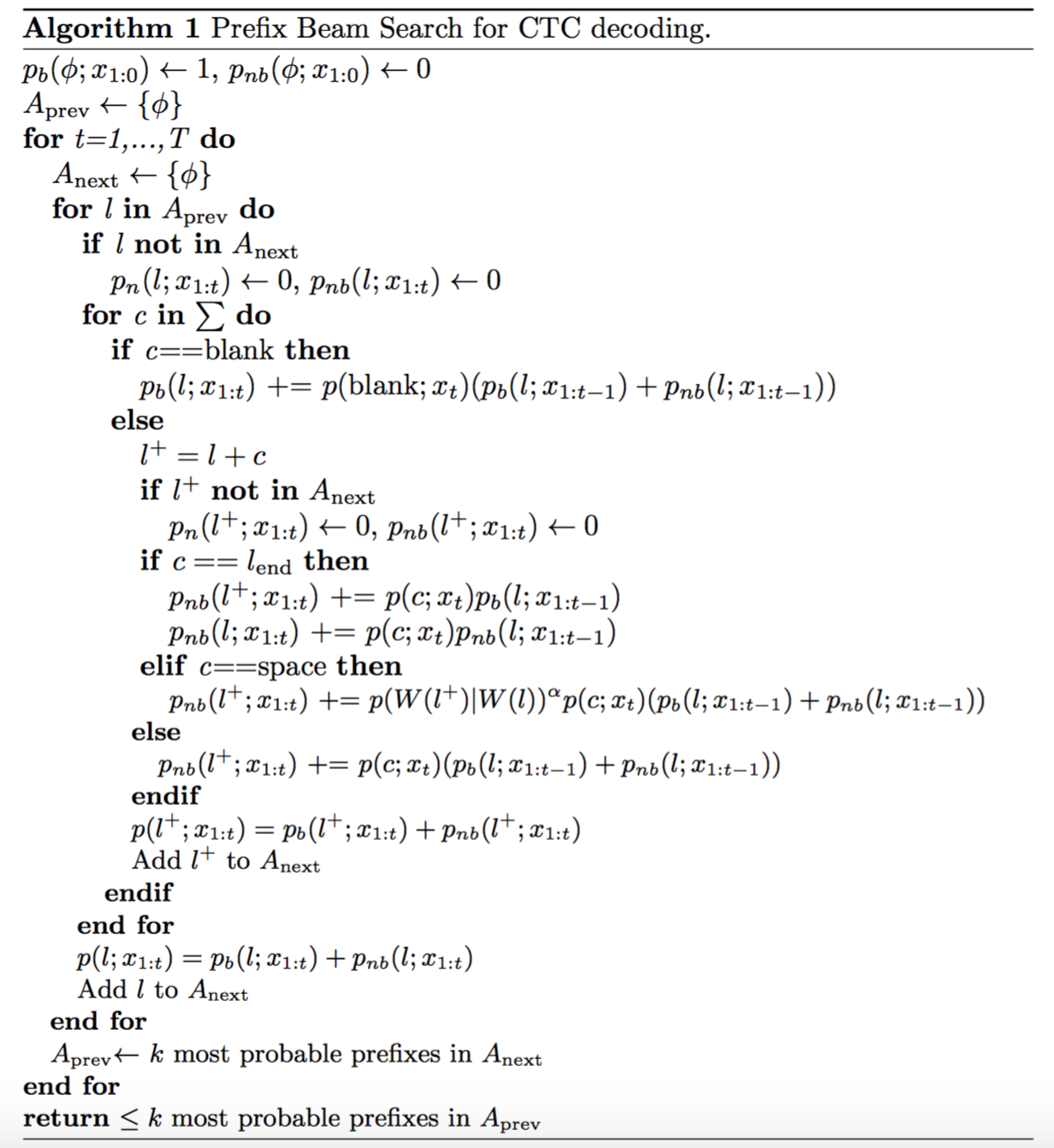

CTC-Beam search | Not supported yet. | TBD (Task 6)

### Row Convolution

TODO by Assignees
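
As a placeholder illustration only, the operation described in the layer overview can be sketched in pure Python. This is not the planned PaddlePaddle layer: the function name `row_convolution`, the list-of-lists layout, and zero padding past the end of the sequence are all assumptions. Each feature row `i` has its own kernel of length `tau + 1` and combines the current frame with the next `tau` frames.

```python
def row_convolution(features, weights):
    """Look-ahead convolution applied independently to each feature row.

    features: T x d list of lists (T time steps, d feature rows).
    weights:  d x (tau + 1) list of lists, one kernel per feature row.
    Returns a T x d list where
        out[t][i] = sum_j weights[i][j] * features[t + j][i]
    and frames past the end of the sequence count as zero.
    """
    num_steps = len(features)
    output = []
    for t in range(num_steps):
        row = []
        for i, kernel in enumerate(weights):
            acc = 0.0
            for j, w in enumerate(kernel):
                if t + j < num_steps:  # zero padding for future frames
                    acc += w * features[t + j][i]
            row.append(acc)
        output.append(row)
    return output


if __name__ == "__main__":
    # Three time steps, two feature rows, one step of future context.
    feats = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
    kernels = [[1.0, 0.5], [0.0, 1.0]]
    print(row_convolution(feats, kernels))
```

In this example the second kernel `[0.0, 1.0]` simply copies the next frame of its own row, which shows that no information crosses between rows.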

### Beam Search with CTC and LM