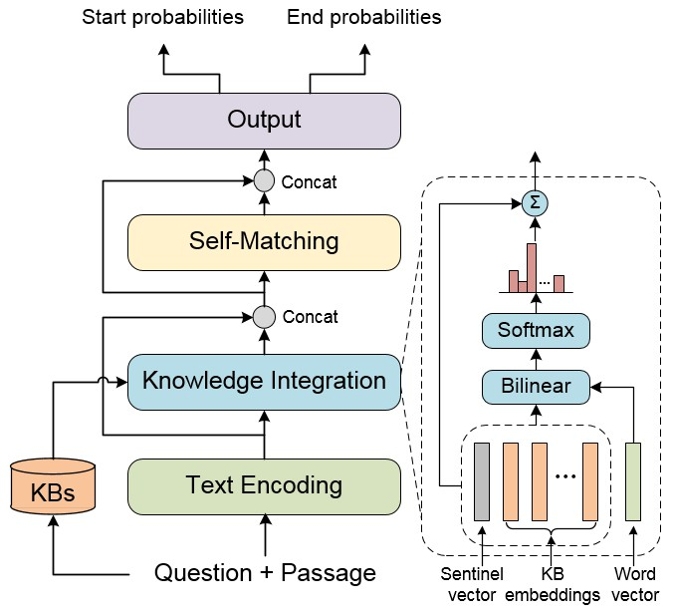

Add the workspace of ACL2019-KTNET into PaddleNLP Research Version (#3244)

* add readme for KTNET
* update readme
* update readme
* update readme
* update readme of KTNET
* update readme of KTNET
* add source files for KTNET
* update files for KTNET
* update files for KTNET
* update draft of readme for KTNET
* modified scripts for KTNET
* fix typos in readme.md for KTNET
* update scripts for KTNET
* update scripts for KTNET
* update readme for KTNET
* edit two-staged training scripts for KTNET
* add details in the readme of KTNET
* fix typos in the readme of KTNET
* added eval scripts for KTNET
* rename folders for KTNET
* add copyright in the code and add links in readme for KTNET
* add the remaining download link for KTNET
* add md5sum for KTNET
* final version for KTNET
