Update SSD documentation (#987)

* Refine documentation and code.

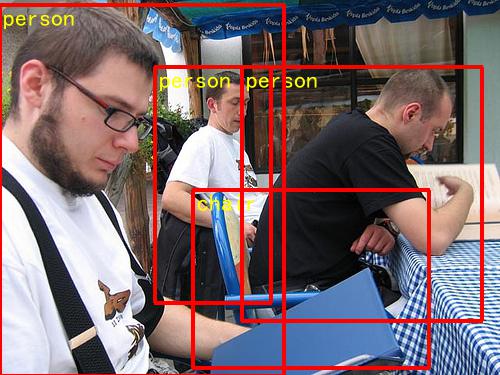

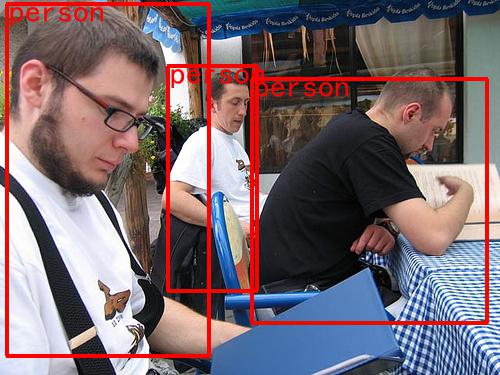

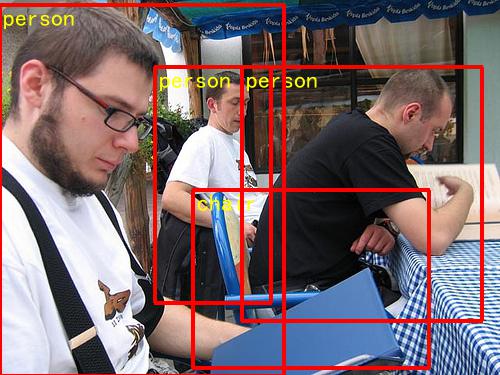

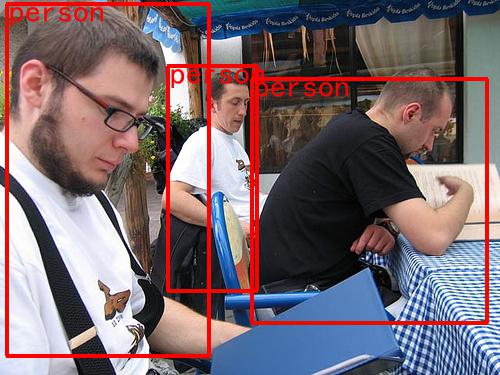

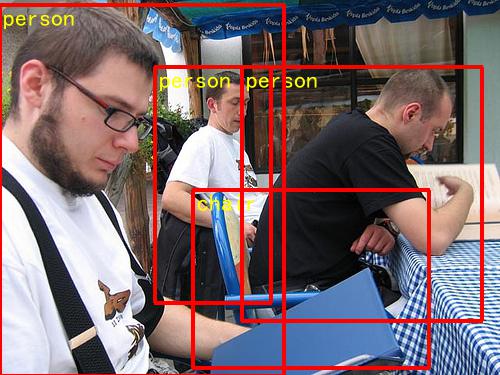