Merge branch 'develop' into web-service

Showing

core/general-server/op/general_dist_kv_infer_op.cpp

100755 → 100644

doc/INFERNCE_TO_SERVING.md

0 → 100644

doc/INFERNCE_TO_SERVING_CN.md

0 → 100644

doc/NEW_WEB_SERVICE.md

0 → 100644

doc/NEW_WEB_SERVICE_CN.md

0 → 100644

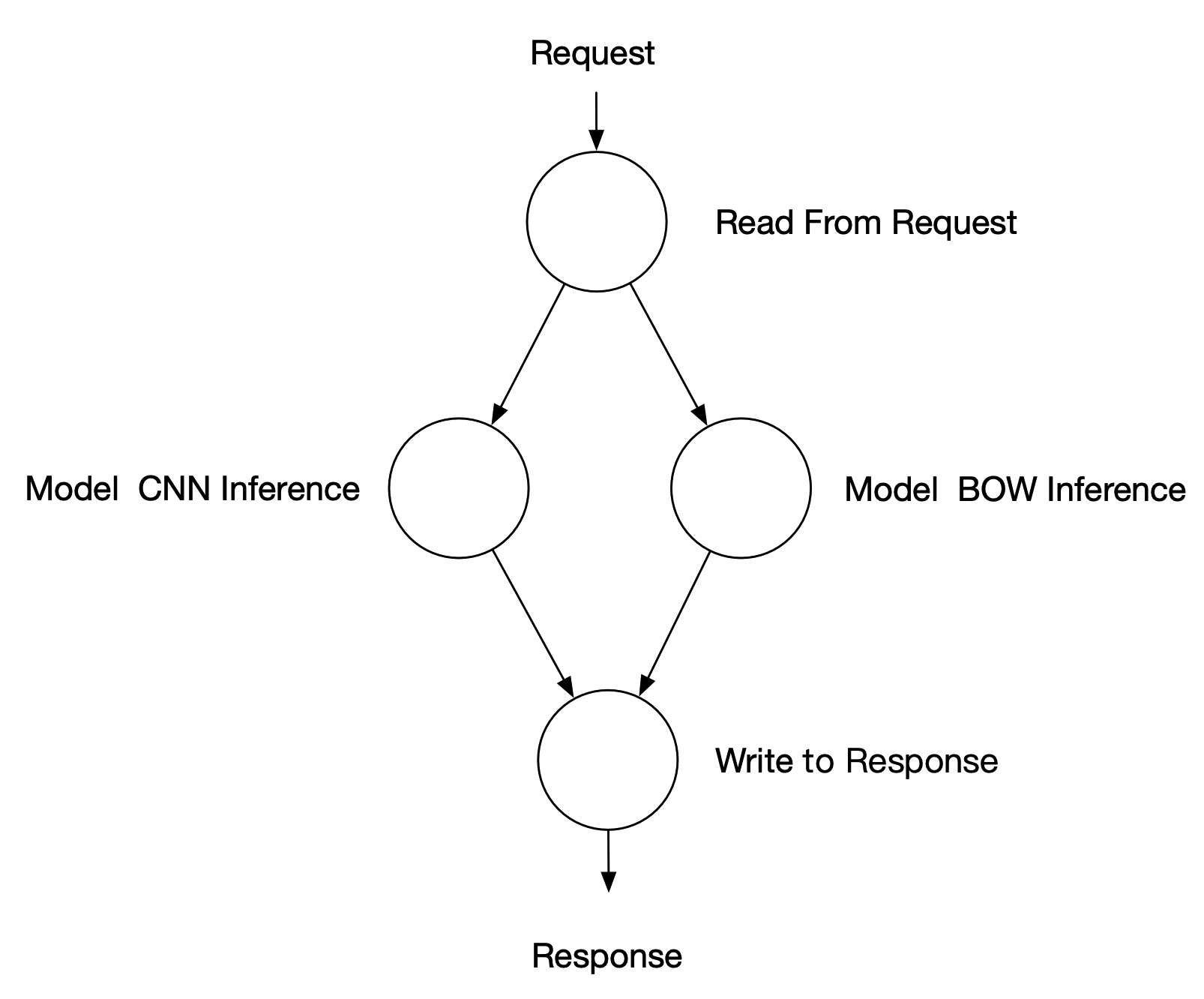

doc/complex_dag.png

0 → 100644

396.8 KB

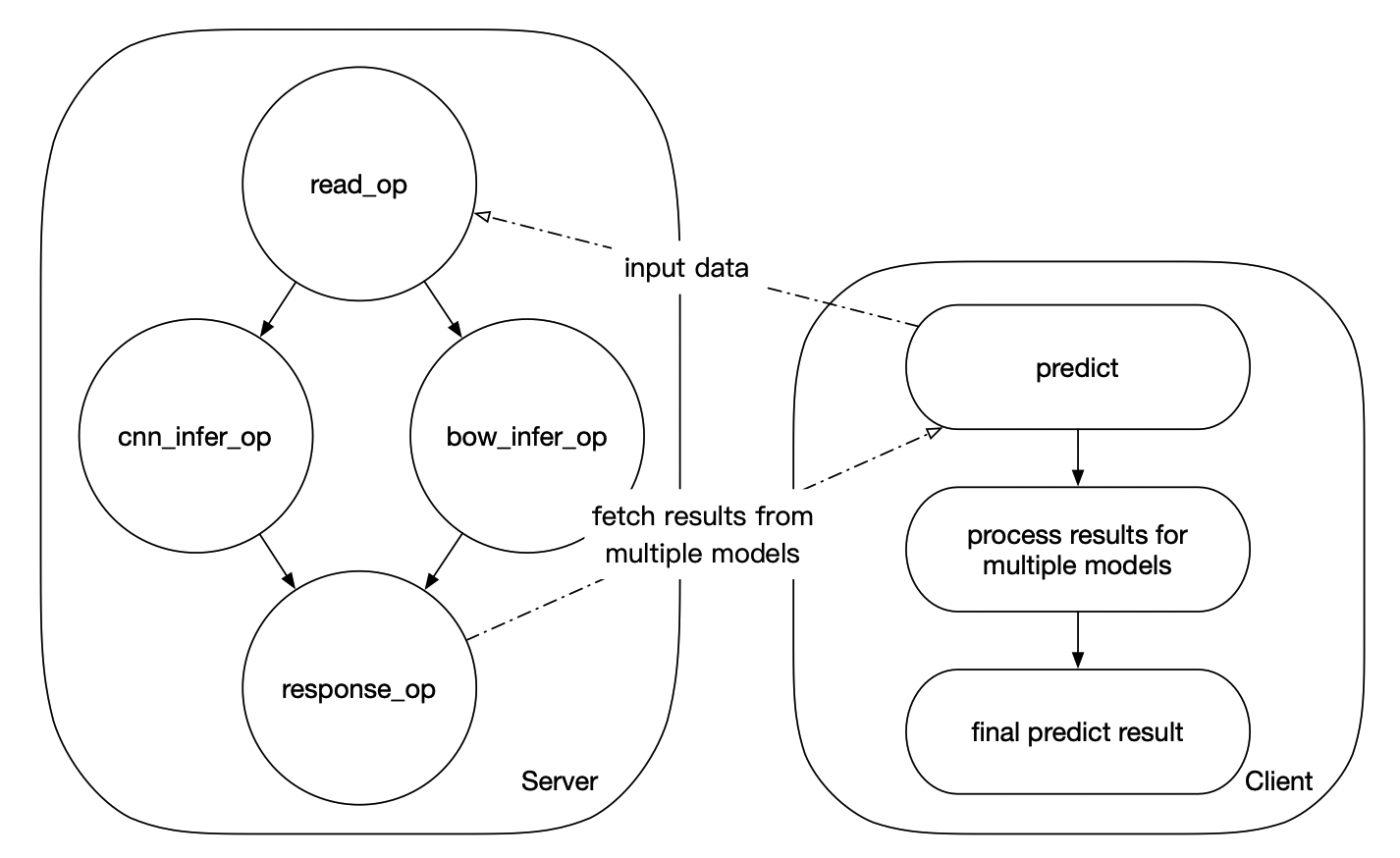

doc/model_ensemble_example.png

0 → 100644

341.4 KB

135.1 KB

148.2 KB