Merge branch 'v0.6.0' of https://github.com/PaddlePaddle/Serving into v0.6.0

doc/SERVING_AUTH_DOCKER.md

0 → 100644

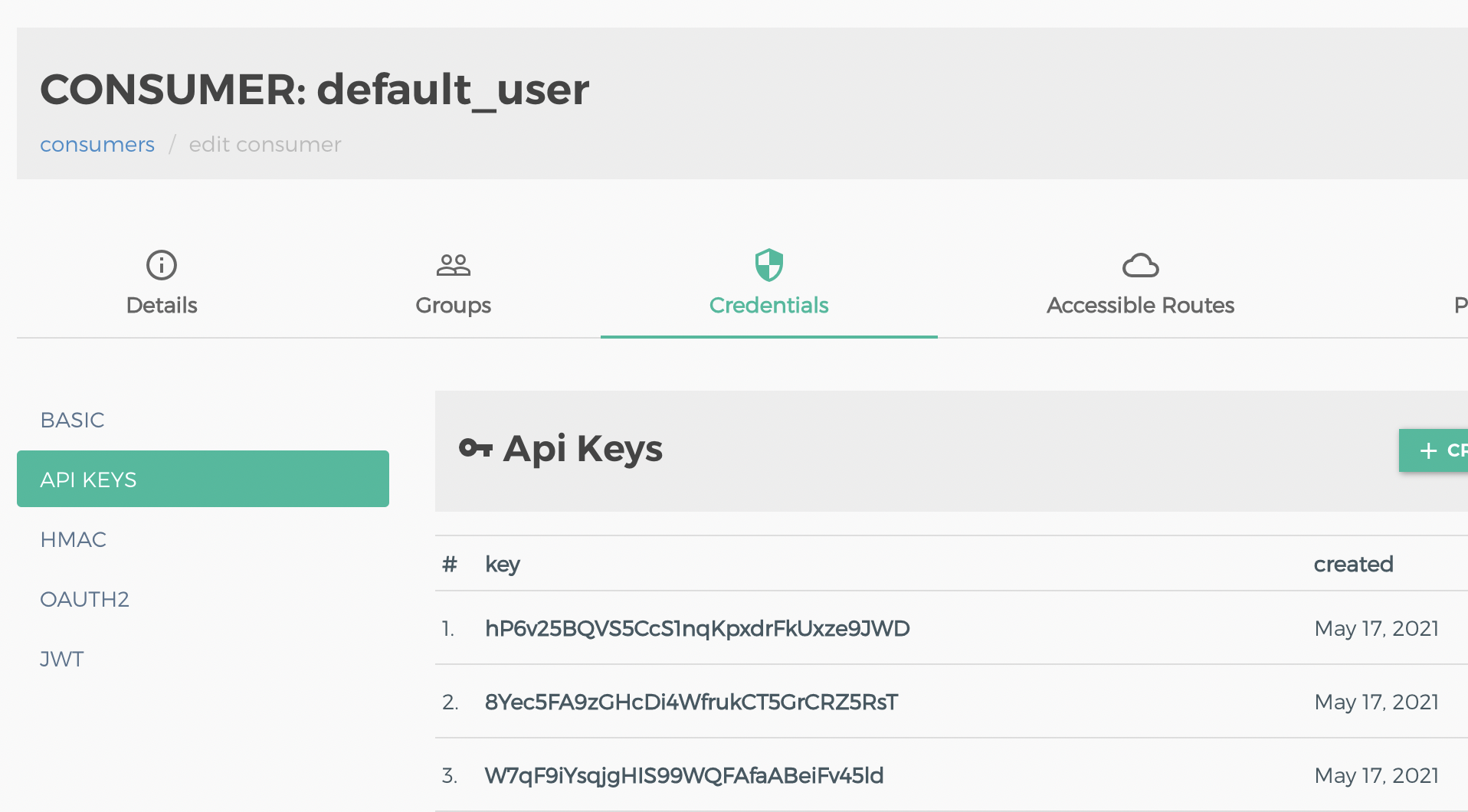

doc/kong-api_keys.png

0 → 100644

369.3 KB

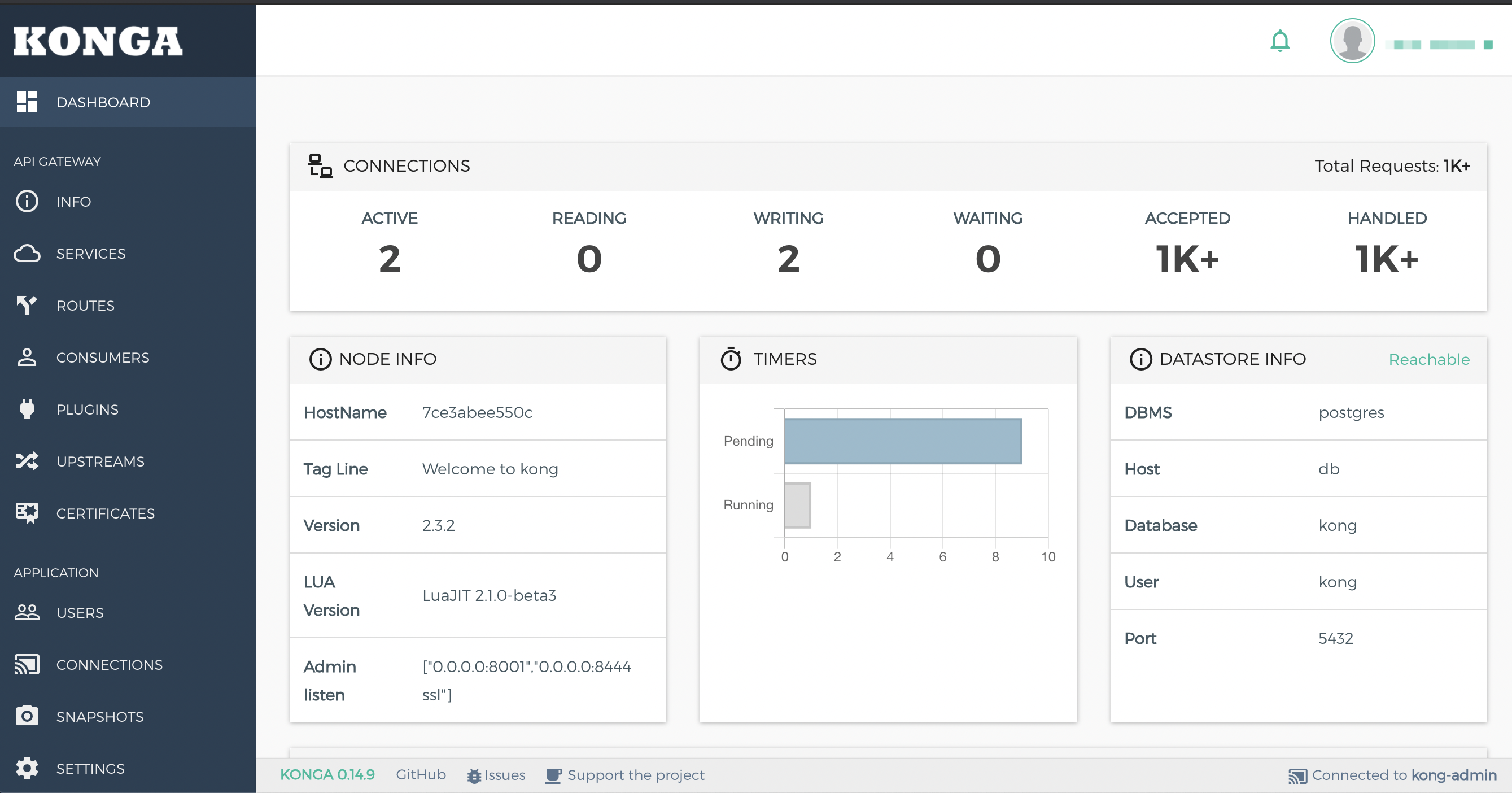

doc/kong-dashboard.png

0 → 100644

881.6 KB

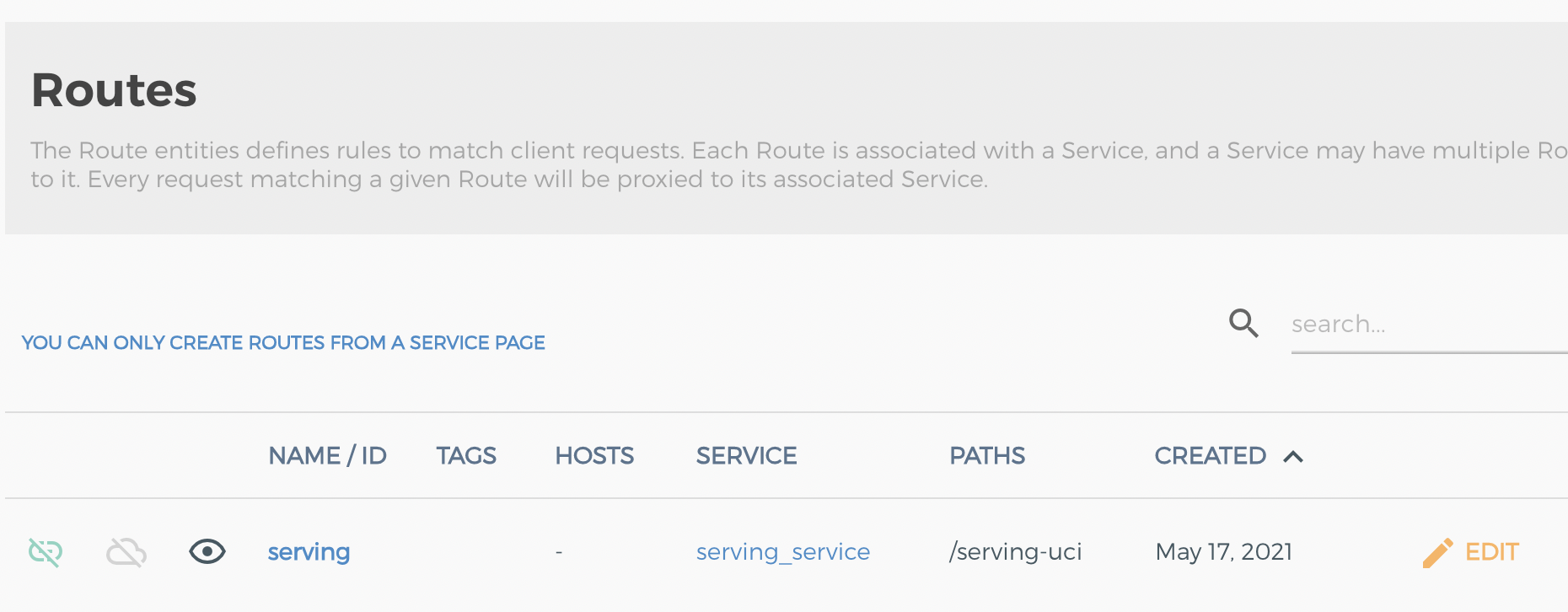

doc/kong-routes.png

0 → 100644

275.8 KB

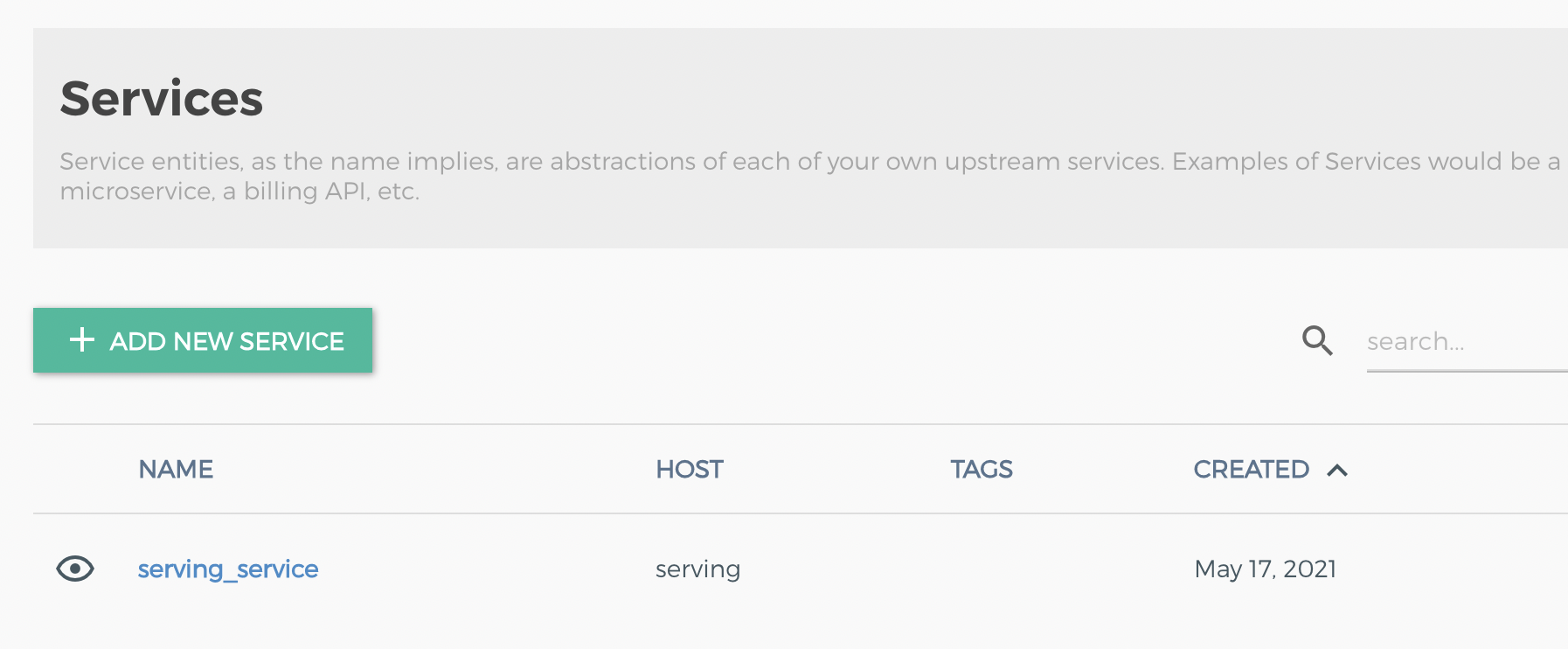

doc/kong-services.png

0 → 100644

245.6 KB

38.8 KB

tools/auth/key-auth-k8s.yaml

0 → 100644

tools/auth/kong-consumer-k8s.yaml

0 → 100644

tools/auth/kong-ingress-k8s.yaml

0 → 100644

tools/auth/kong-ingress.yaml

0 → 100644

tools/auth/serving-demo-k8s.yaml

0 → 100644