Merge from github

cmake/cuda.cmake

0 → 100644

demo-client/data/images/val.txt

0 → 100644

demo-client/src/ximage_press.cpp

0 → 100644

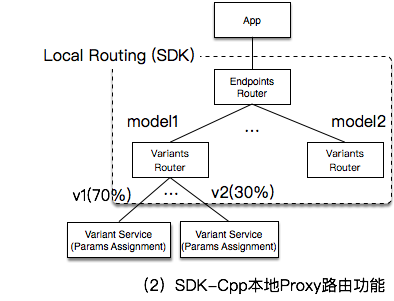

doc/client-side-proxy.png

0 → 100755

19.8 KB

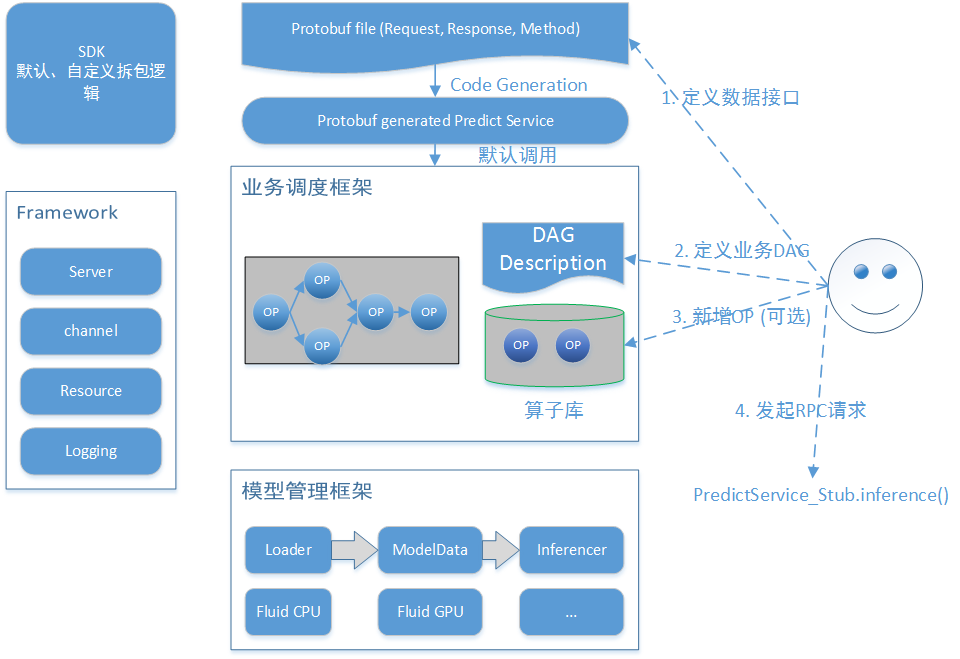

doc/framework.png

0 → 100755

75.0 KB

doc/multi-service.png

0 → 100755

18.3 KB

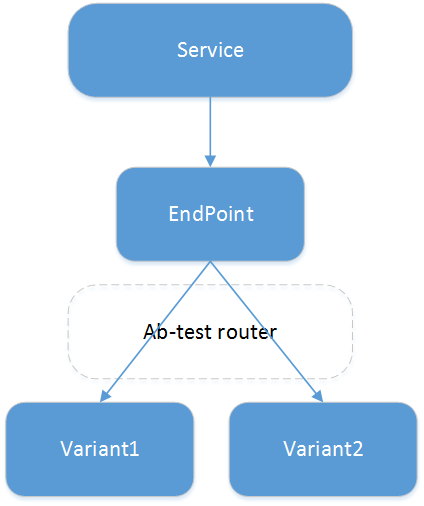

doc/multi-variants.png

0 → 100755

17.9 KB

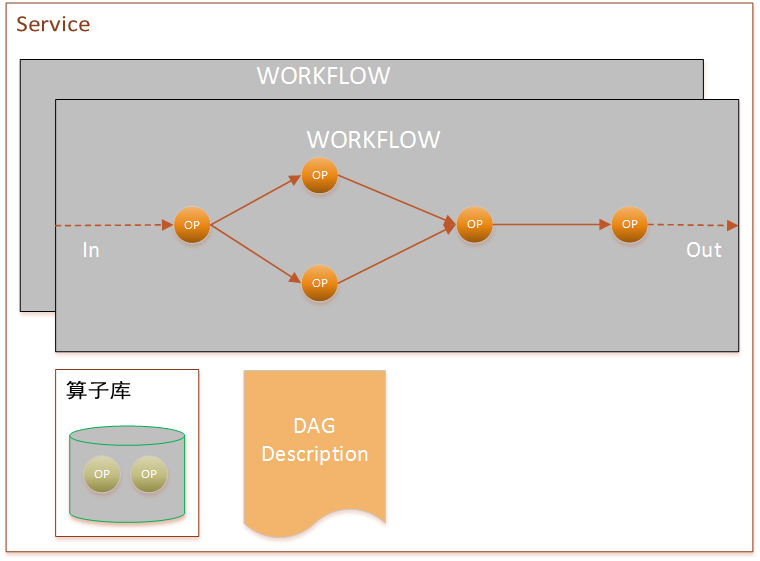

doc/predict-service.png

0 → 100755

30.8 KB

doc/server-side.png

0 → 100755

32.0 KB