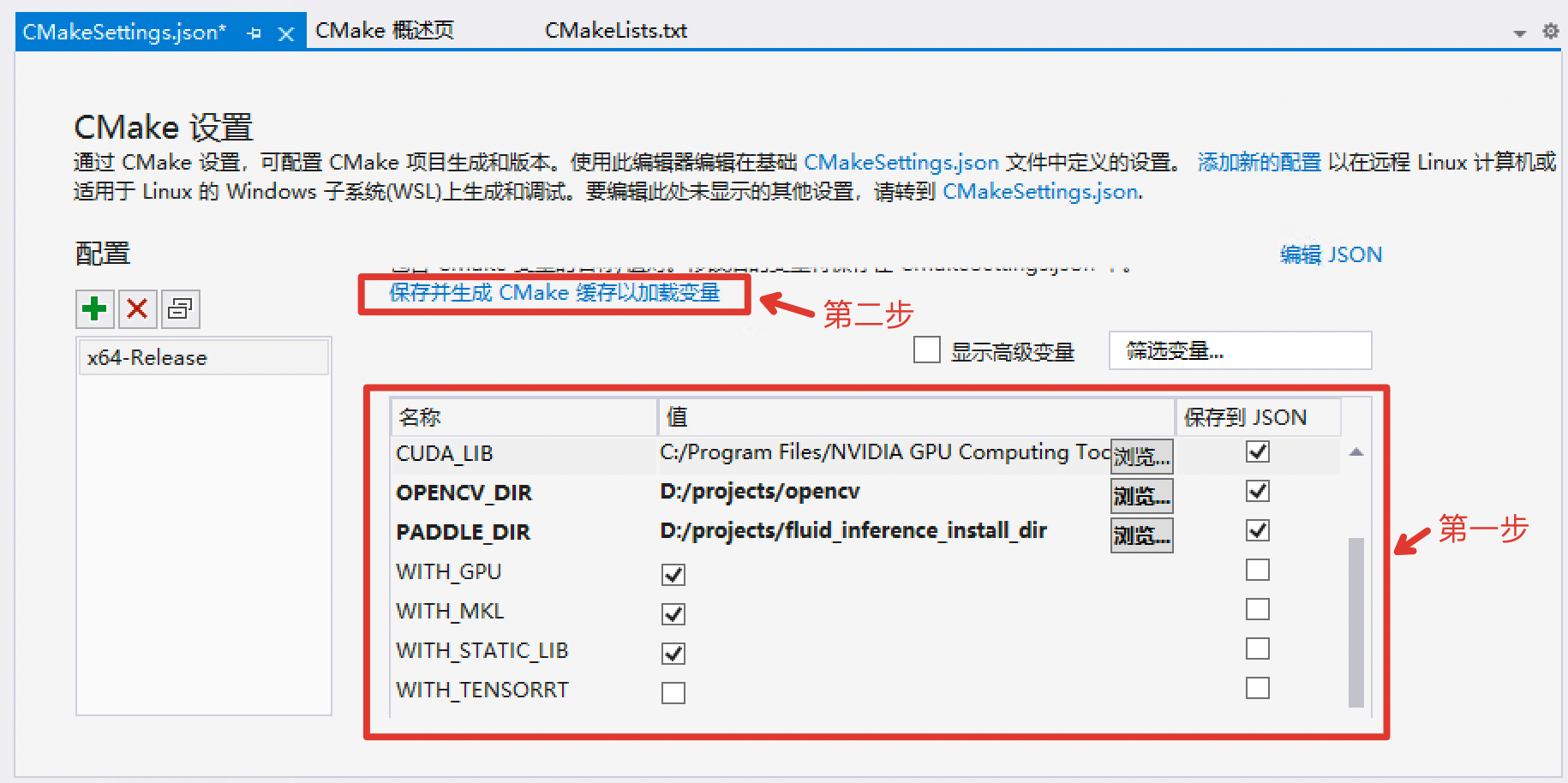

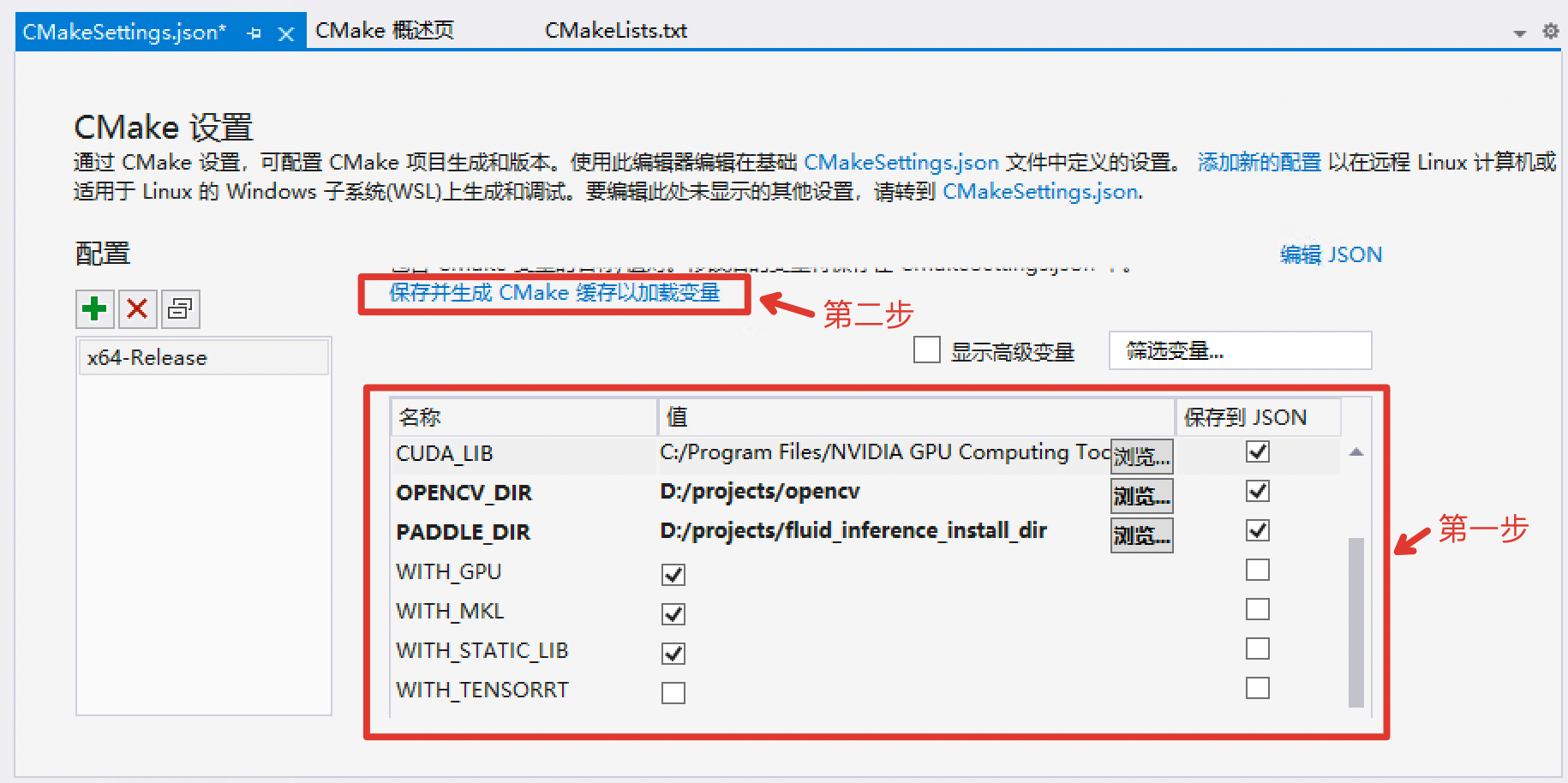

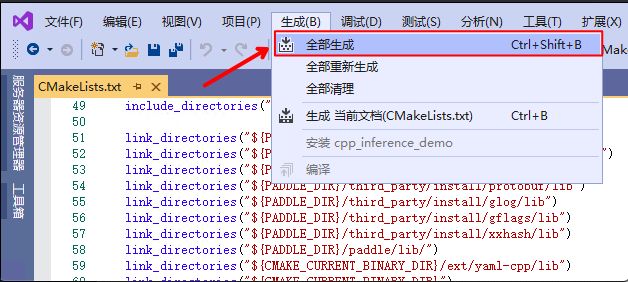

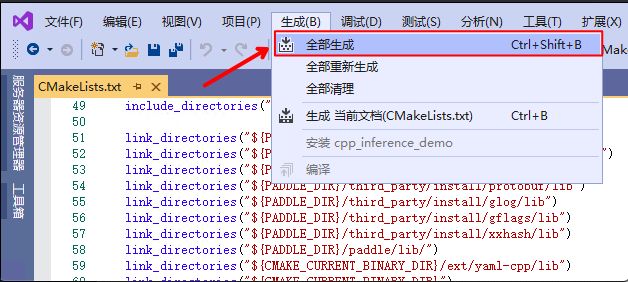

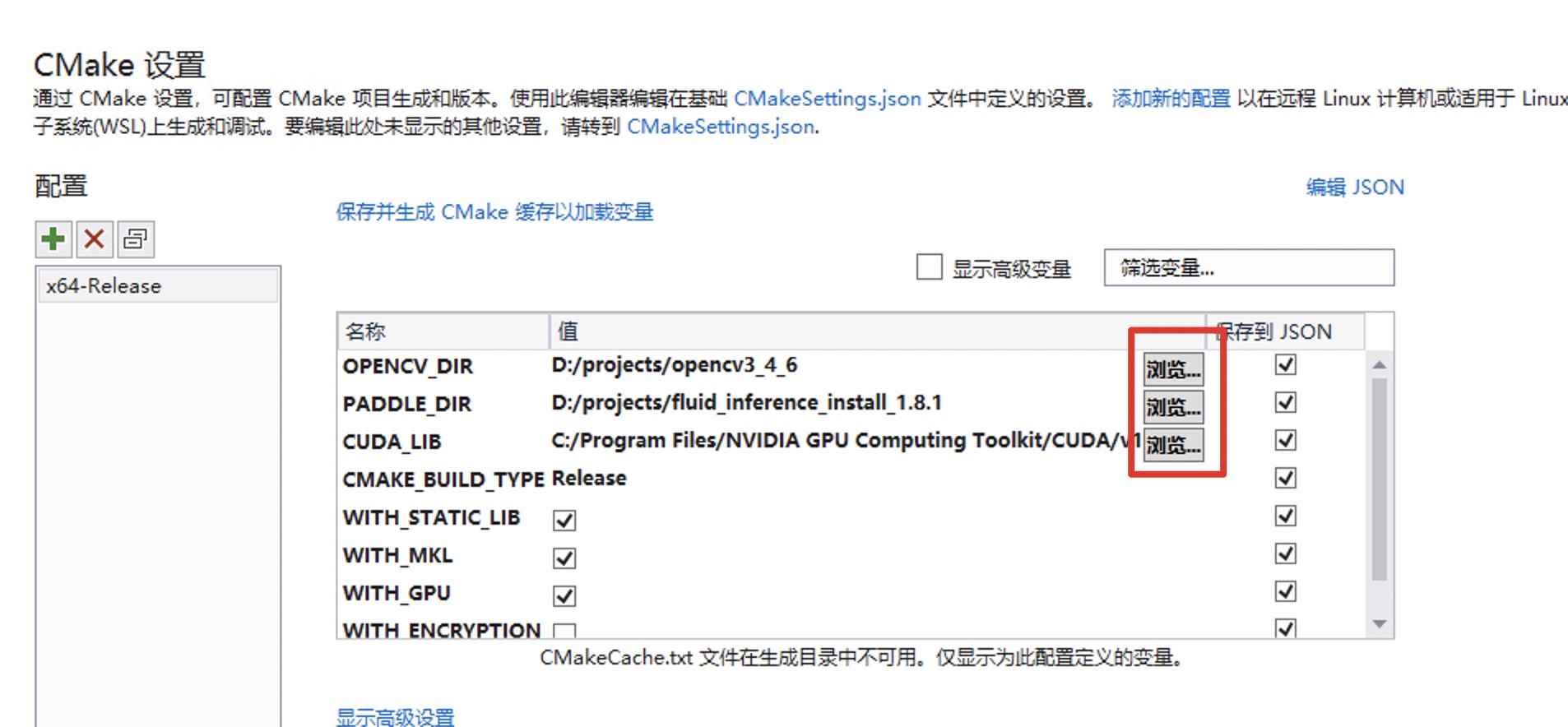

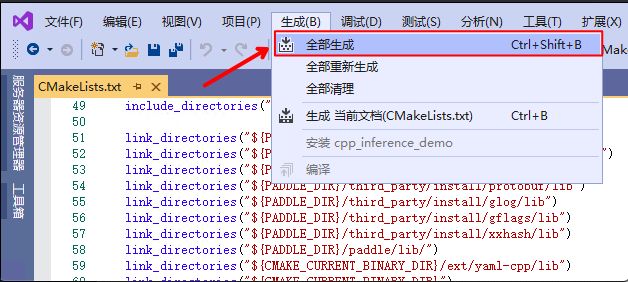

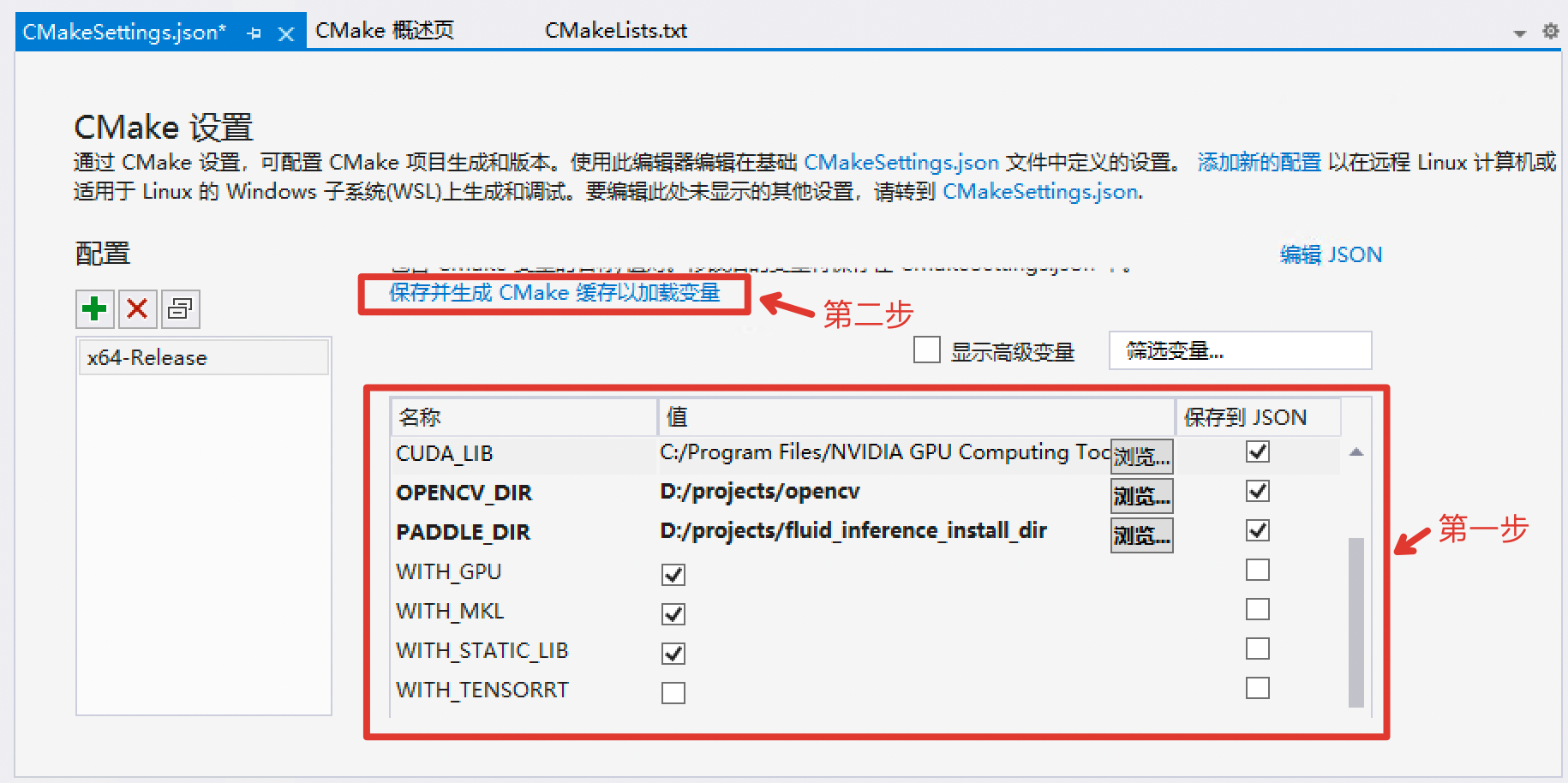

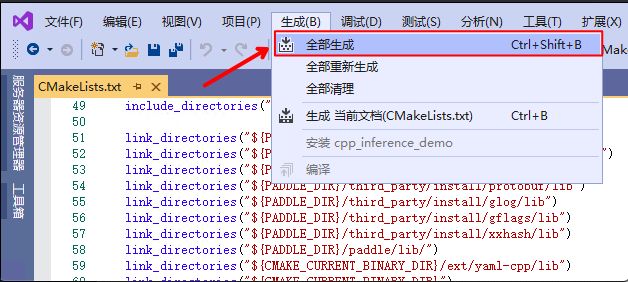

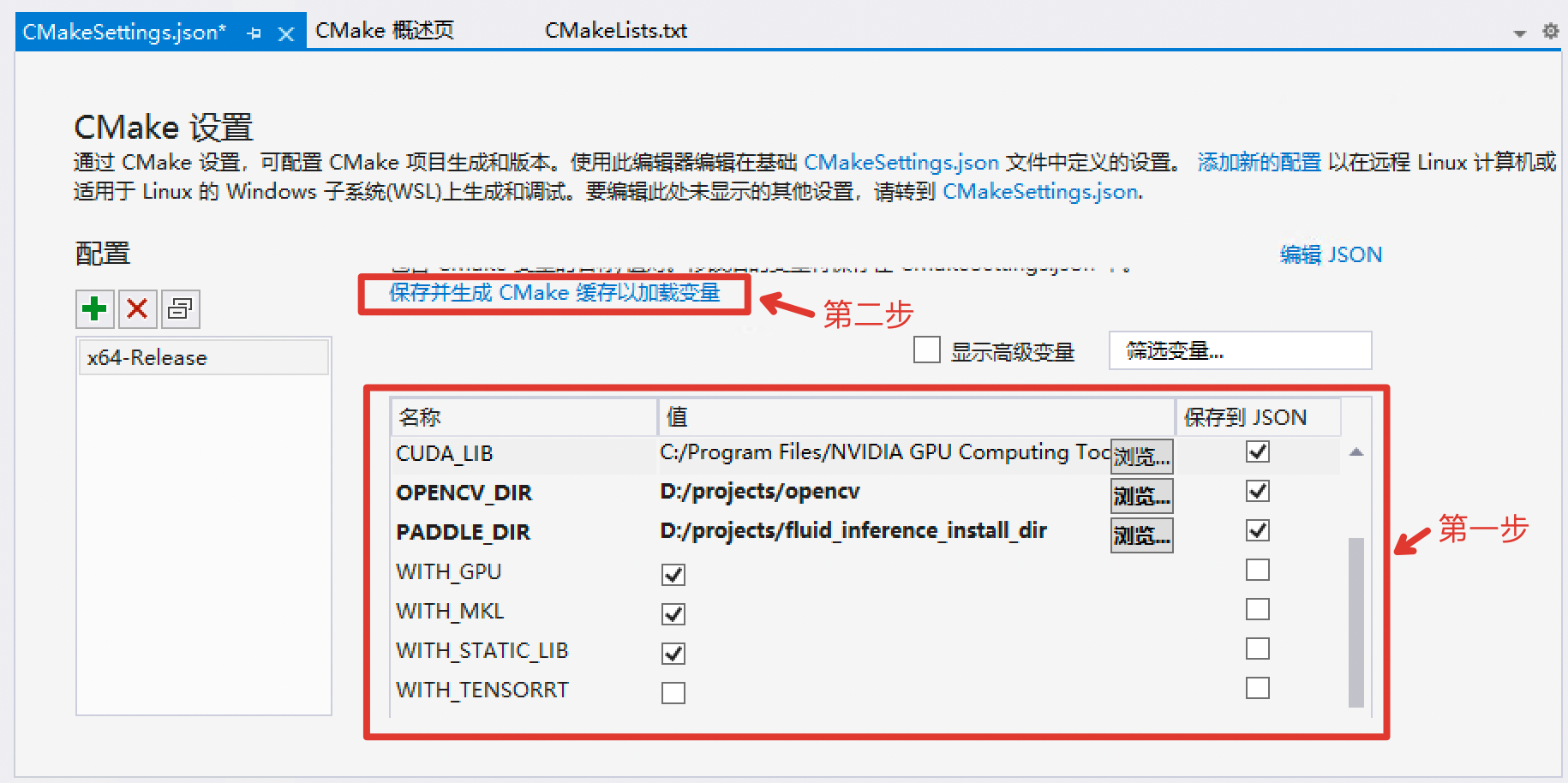

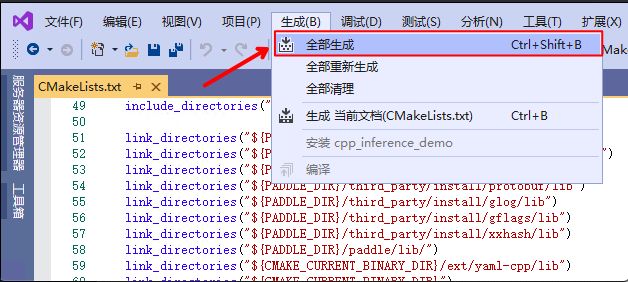

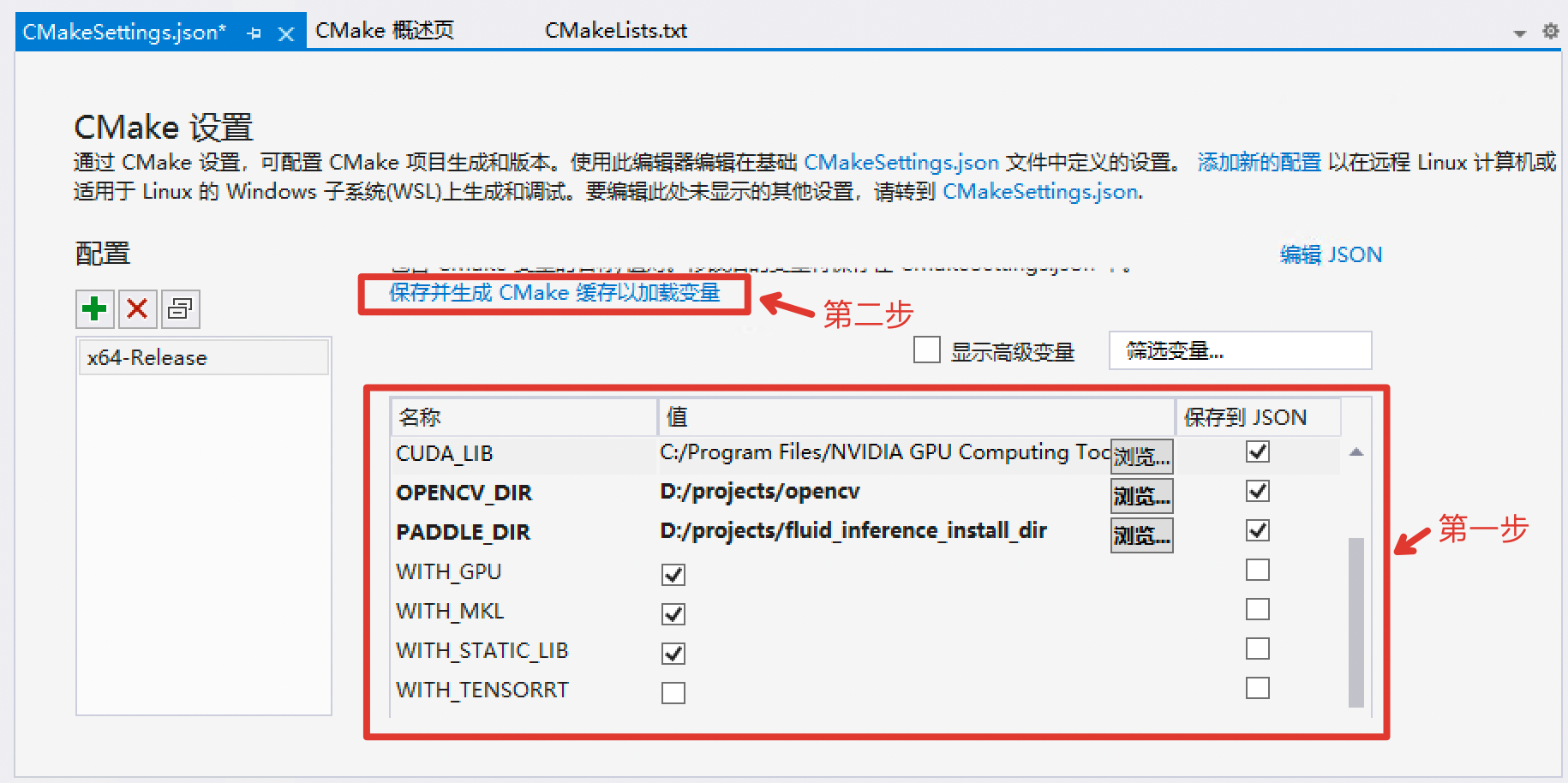

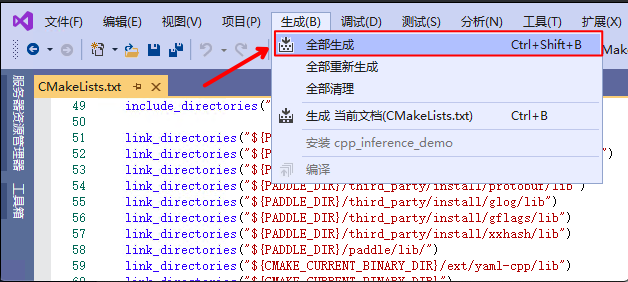

Add Batch Predict and Fix Windows Secure Deployment

Add batch prediction and comment in every header file

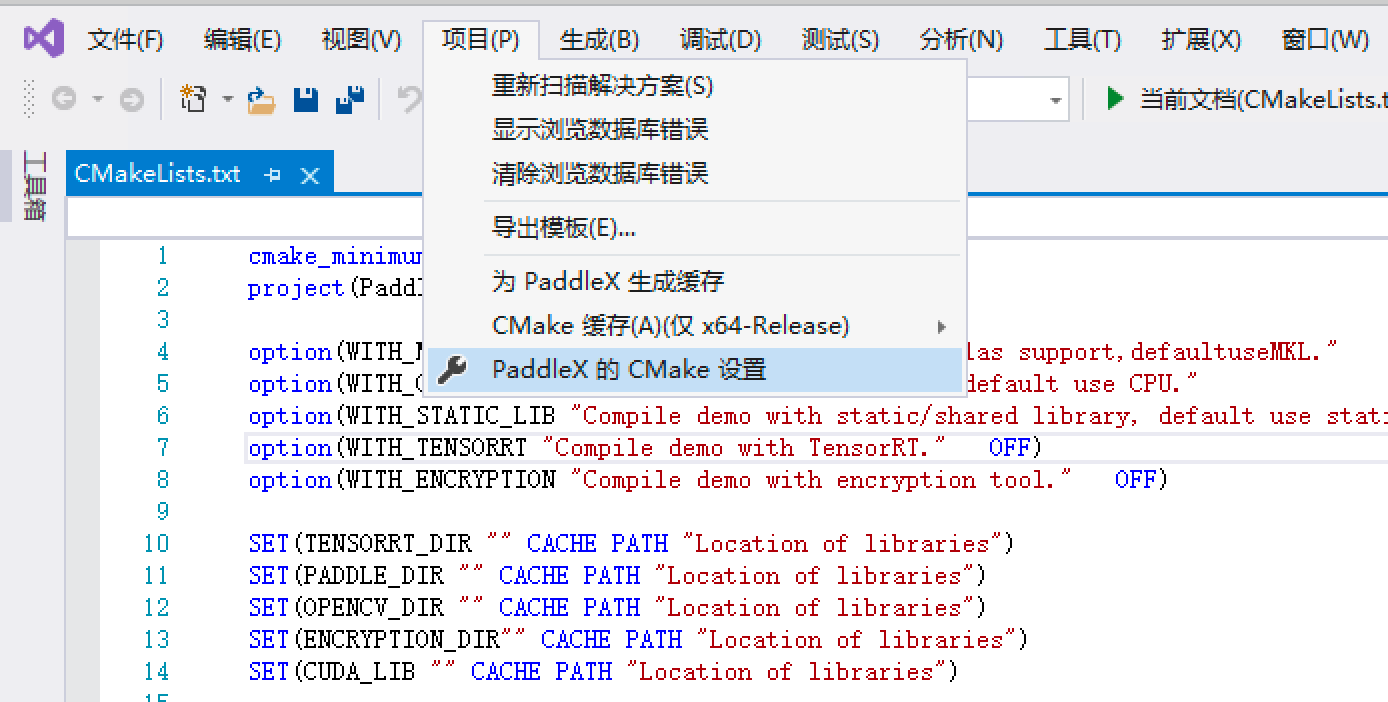

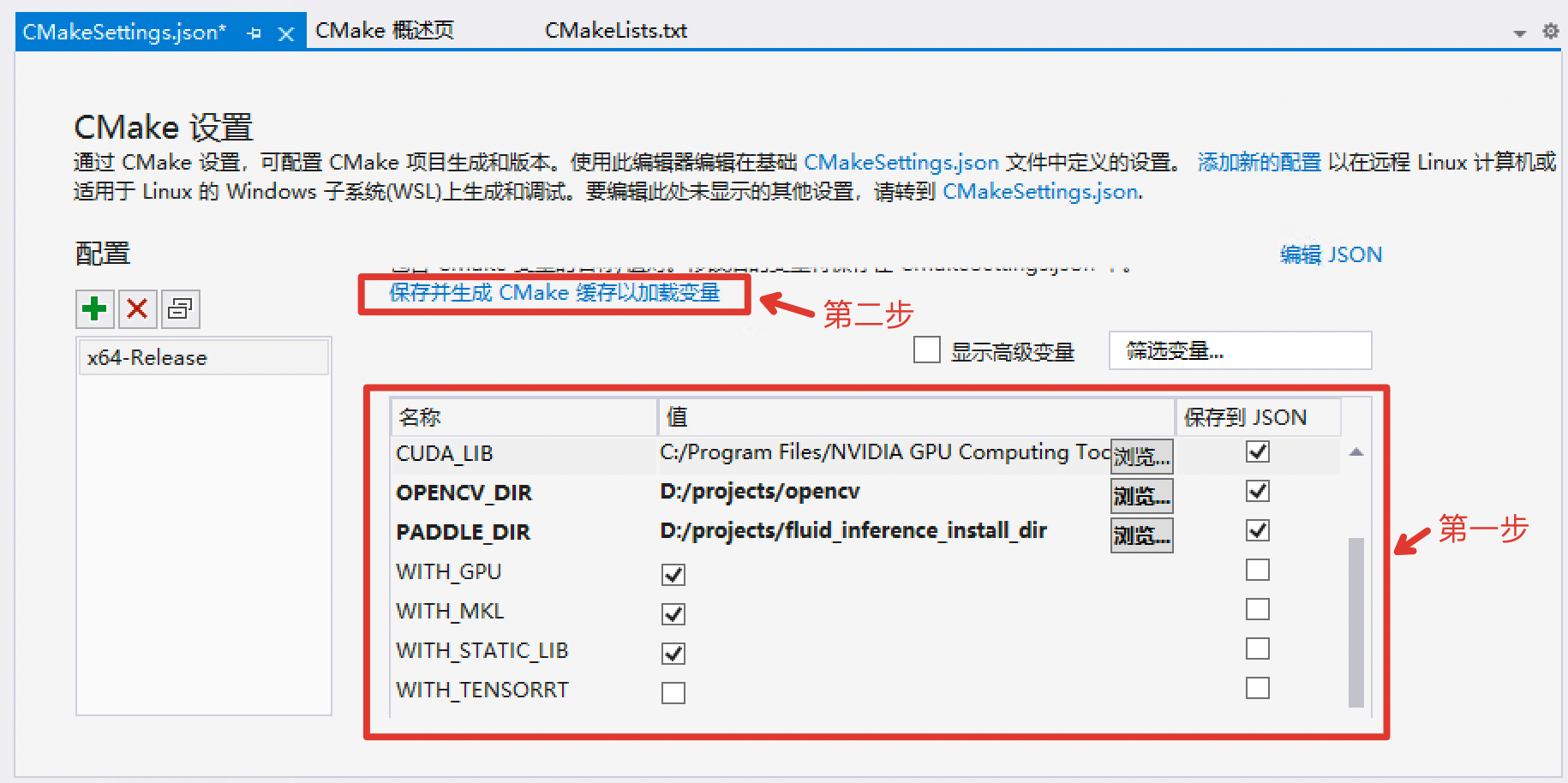

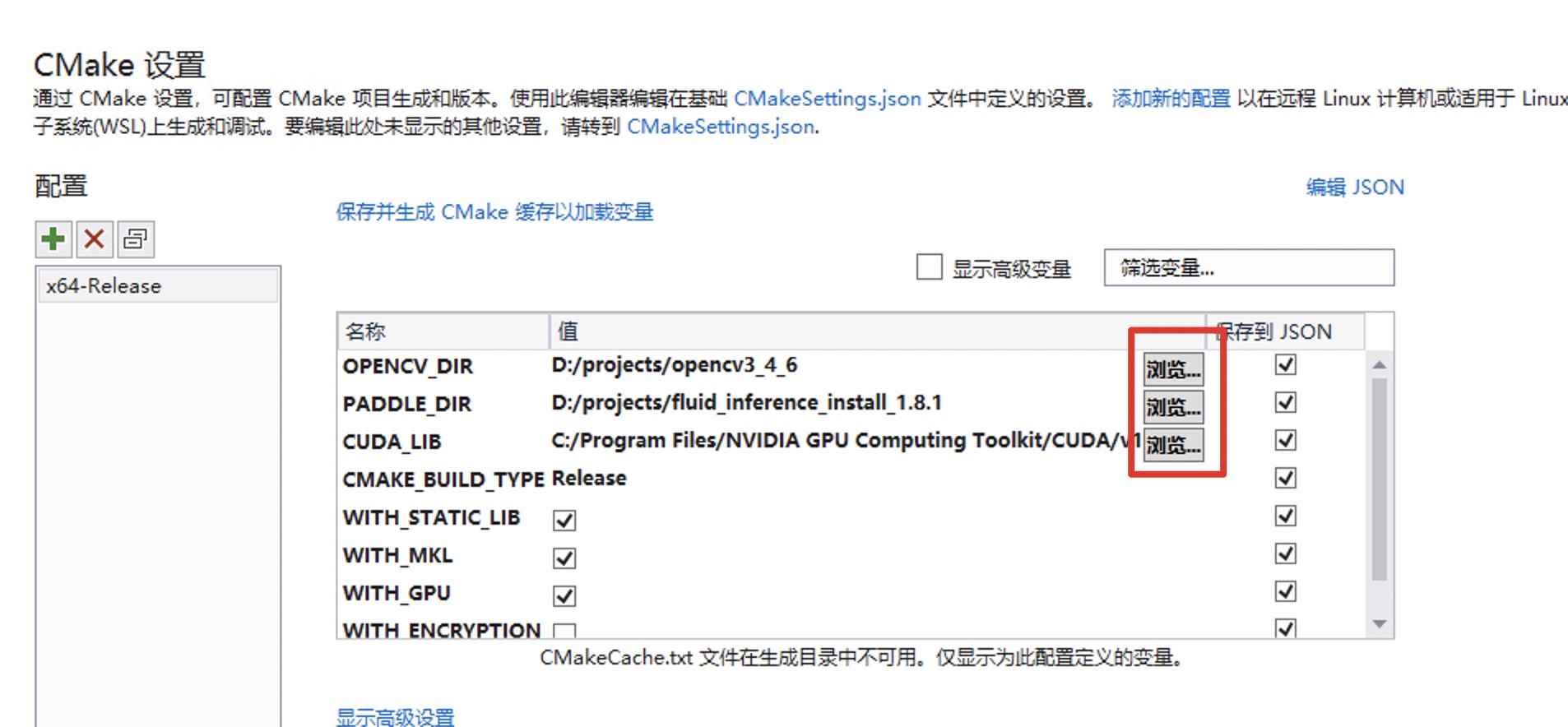

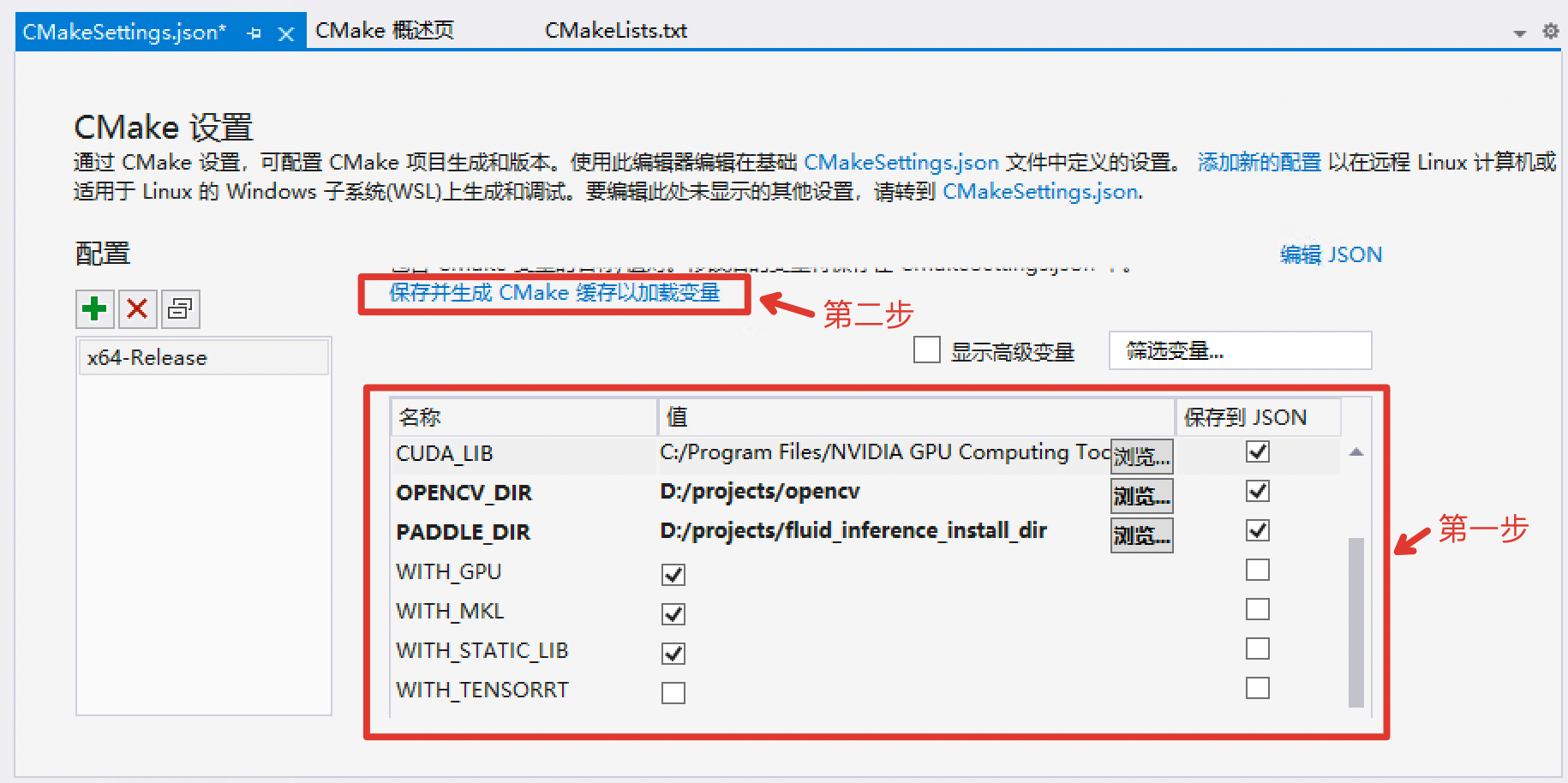

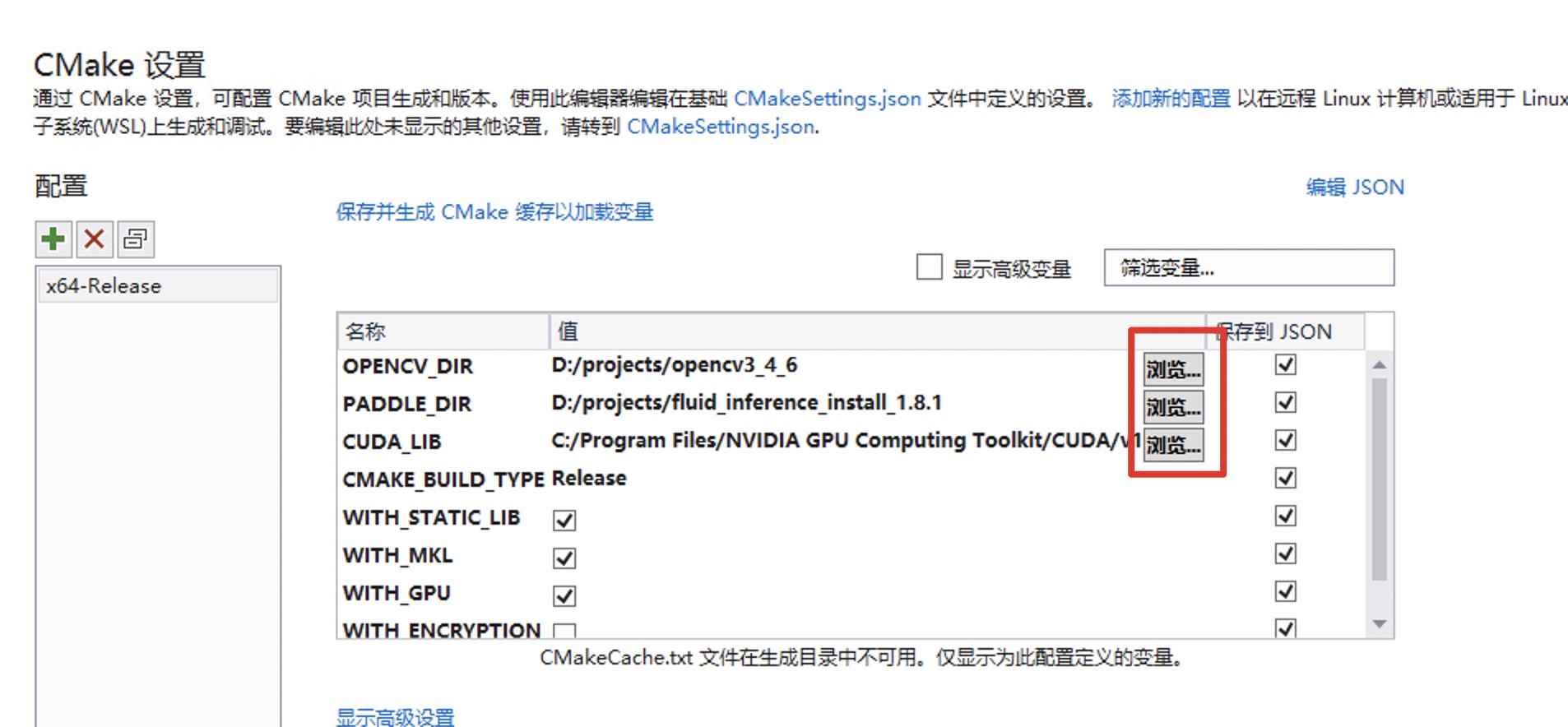

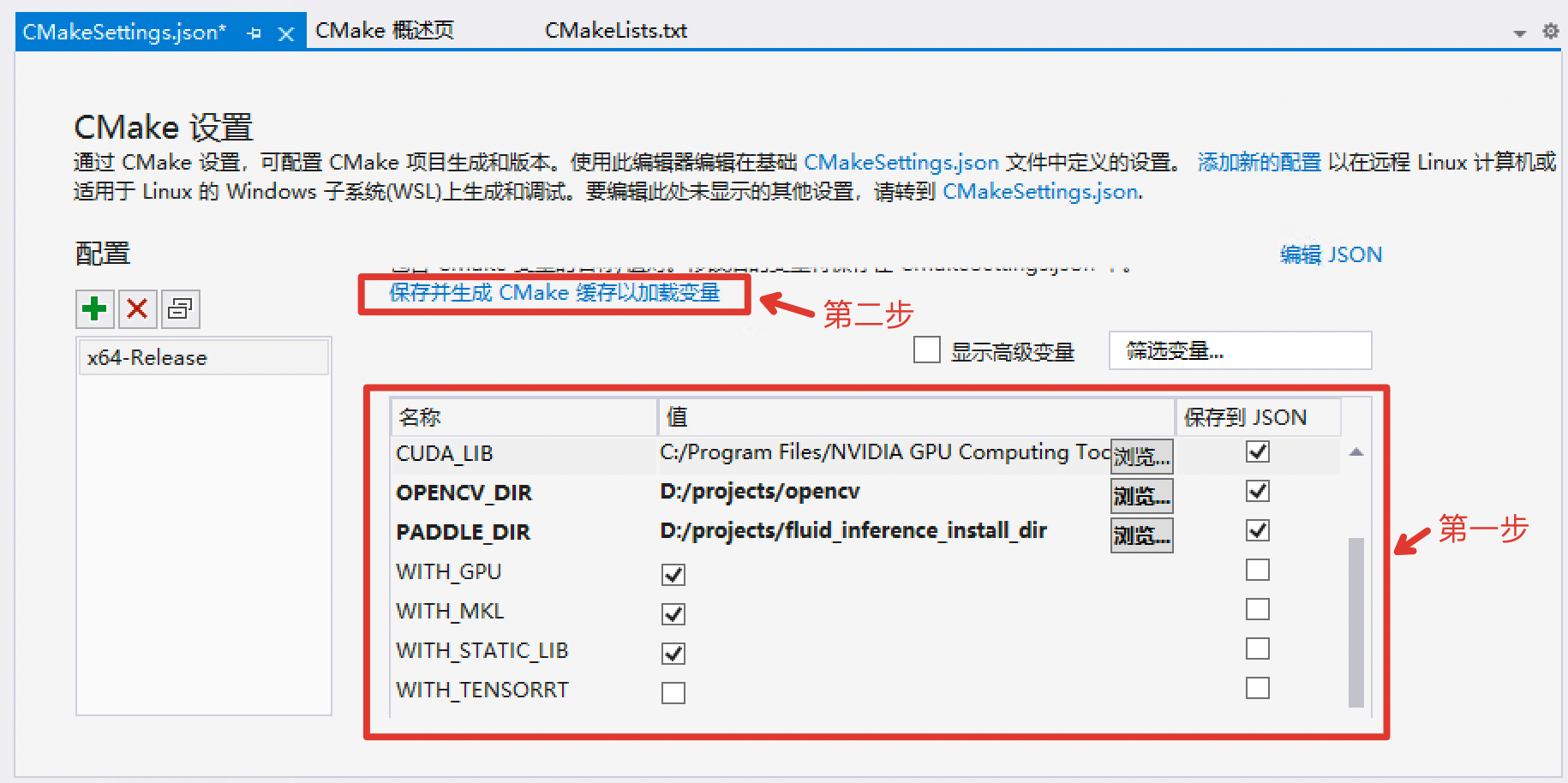

