| 序号 | +任务 | +模型 | +压缩策略[3][4] | +精度(自建中文数据集) | +耗时[1](ms) | +整体耗时[2](ms) | +加速比 | +整体模型大小(M) | +压缩比例 | +下载链接 | +

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | +检测 | +MobileNetV3_DB | +无 | +61.7 | +224 | +375 | +- | +8.6 | +- | ++ |

| 识别 | +MobileNetV3_CRNN | +无 | +62.0 | +9.52 | ++ | |||||

| 1 | +检测 | +SlimTextDet | +PACT量化训练 | +62.1 | +195 | +348 | +8% | +2.8 | +67.82% | ++ |

| 识别 | +SlimTextRec | +PACT量化训练 | +61.48 | +8.6 | ++ | |||||

| 2 | +检测 | +SlimTextDet_quat_pruning | +剪裁+PACT量化训练 | +60.86 | +142 | +288 | +30% | +2.8 | +67.82% | ++ |

| 识别 | +SlimTextRec | +PACT量化训练 | +61.48 | +8.6 | ++ | |||||

| 3 | +检测 | +SlimTextDet_pruning | +剪裁 | +61.57 | +138 | +295 | +27% | +2.9 | +66.28% | ++ |

| 识别 | +SlimTextRec | +PACT量化训练 | +61.48 | +8.6 | ++ |

+

+

+

+

+

+

-

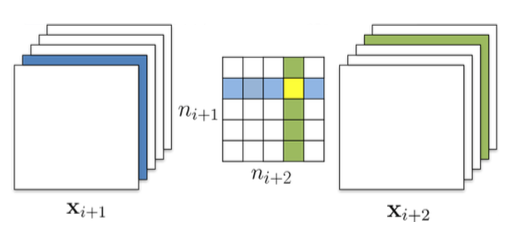

-图1:量化前的模型结构

-

-

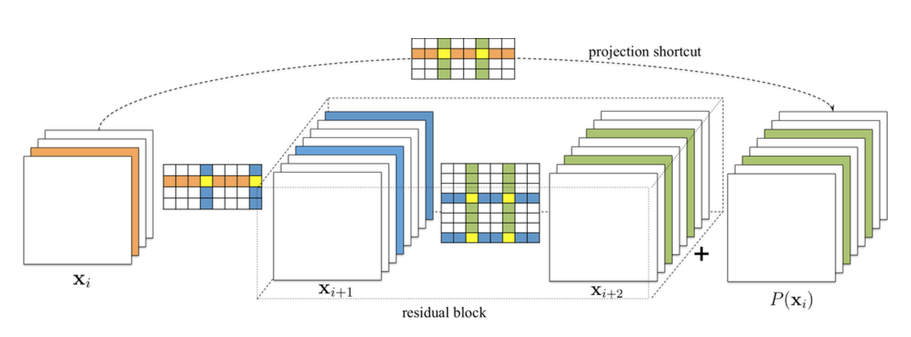

-图2: 量化后的模型结构

-

+ MobileNetV2's architecture can reference: [code](https://github.com/PaddlePaddle/models/blob/develop/PaddleCV/image_classification/models/mobilenet_v2.py#L29), [paper](https://arxiv.org/abs/1801.04381) + +2. MobileNetV1Space

+ MobilNetV1's architecture can reference: [code](https://github.com/PaddlePaddle/models/blob/develop/PaddleCV/image_classification/models/mobilenet_v1.py#L29), [paper](https://arxiv.org/abs/1704.04861) + +3. ResNetSpace

+ ResNetSpace's architecture can reference: [code](https://github.com/PaddlePaddle/models/blob/develop/PaddleCV/image_classification/models/resnet.py#L30), [paper](https://arxiv.org/pdf/1512.03385.pdf) + + +#### Based on block from different model: +1. MobileNetV1BlockSpace

+ MobileNetV1Block's architecture can reference: [code](https://github.com/PaddlePaddle/models/blob/develop/PaddleCV/image_classification/models/mobilenet_v1.py#L173) + +2. MobileNetV2BlockSpace

+ MobileNetV2Block's architecture can reference: [code](https://github.com/PaddlePaddle/models/blob/develop/PaddleCV/image_classification/models/mobilenet_v2.py#L174) + +3. ResNetBlockSpace

+ ResNetBlock's architecture can reference: [code](https://github.com/PaddlePaddle/models/blob/develop/PaddleCV/image_classification/models/resnet.py#L148) + +4. InceptionABlockSpace

+ InceptionABlock's architecture can reference: [code](https://github.com/PaddlePaddle/models/blob/develop/PaddleCV/image_classification/models/inception_v4.py#L140) + +5. InceptionCBlockSpace

+ InceptionCBlock's architecture can reference: [code](https://github.com/PaddlePaddle/models/blob/develop/PaddleCV/image_classification/models/inception_v4.py#L291) + + +## How to use search space +1. Only need to specify the name of search space if use the space based on origin model architecture, such as configs for class SANAS is [('MobileNetV2Space')] if you want to use origin MobileNetV2 as search space. +2. Use search space paddleslim.nas provided based on block:

+ 2.1 Use `input_size`, `output_size` and `block_num` to construct search space, such as configs for class SANAS is ('MobileNetV2BlockSpace', {'input_size': 224, 'output_size': 32, 'block_num': 10})].

+ 2.2 Use `block_mask` to construct search space, such as configs for class SANAS is [('MobileNetV2BlockSpace', {'block_mask': [0, 1, 1, 1, 1, 0, 1, 0]})]. + +## How to write yourself search space +If you want to write yourself search space, you need to inherit base class named SearchSpaceBase and overwrite following functions: