diff --git a/contrib/README.md b/contrib/README.md

index 0361532b7f5bc5d271690734018c2a91aa20ea66..0ff80a5778fc18d183348ac753c7a4ae84698cef 100644

--- a/contrib/README.md

+++ b/contrib/README.md

@@ -22,7 +22,7 @@ CVPR 19 Look into Person (LIP) 单人人像分割比赛冠军模型,详见[ACE

### 4. 运行

-**NOTE:** 运行该模型需要需至少2.5G显存

+**NOTE:** 运行该模型需要2G左右显存

使用GPU预测

```

diff --git a/contrib/infer.py b/contrib/infer.py

index 866718f6495f7cd2a2938a9cfefc63d60c316807..8f939c8455cd3868120781a7a8d96ace0ff772b1 100644

--- a/contrib/infer.py

+++ b/contrib/infer.py

@@ -118,10 +118,10 @@ def infer():

output_im.putpalette(palette)

output_im.save(result_path)

- if idx % 100 == 0:

- print('%d processd' % (idx))

+ if (idx + 1) % 100 == 0:

+ print('%d processd' % (idx + 1))

- print('%d processd done' % (idx))

+ print('%d processd done' % (idx + 1))

return 0

diff --git a/docs/annotation/README.md b/docs/annotation/README.md

index 57c53b51da5d4474341b67cb57cf4a9c59067a5d..13a98e823eef195cef2acc457e7fa6df41bb9834 100644

--- a/docs/annotation/README.md

+++ b/docs/annotation/README.md

@@ -11,7 +11,7 @@

打开终端输入`labelme`会出现LableMe的交互界面,可以先预览`LabelMe`给出的已标注好的图片,再开始标注自定义数据集。

-

+

图1 LableMe交互界面的示意图

-

+

图2 已标注图片的示意图

-

+

图3 标注单个目标的示意图

-

+

图5 LableMe产出的真值文件的示意图

-

+

图6 训练所需的数据集目录的结构示意图

-

+

图7 训练所需的数据集各目录的内容示意图

@@ -27,4 +27,4 @@ DATALOADER Group存放所有与数据加载相关的配置

256

-

\ No newline at end of file

+

diff --git a/docs/data_prepare.md b/docs/data_prepare.md

index f518845f96167207aa9c9922692806a8375be443..b1564124001a0598810cbb5b513852546919e583 100644

--- a/docs/data_prepare.md

+++ b/docs/data_prepare.md

@@ -45,7 +45,7 @@ PaddleSeg采用通用的文件列表方式组织训练集、验证集和测试

```

-其中`[SEP]`是文件路径分割符,可以在`DATASET.SEPRATOR`配置项中修改, 默认为空格。

+其中`[SEP]`是文件路径分割符,可以在`DATASET.SEPARATOR`配置项中修改, 默认为空格。

**注意事项**

diff --git a/docs/model_zoo.md b/docs/model_zoo.md

index 5f5c0180193c8010aec3cf784f025dadee6d86e2..03f61ade79b69cfd9f57d7ed8ece5ae874c1d196 100644

--- a/docs/model_zoo.md

+++ b/docs/model_zoo.md

@@ -40,5 +40,5 @@ train数据集合为Cityscapes 训练集合,测试为Cityscapes的验证集合

|---|---|---|---|---|---|---|

| DeepLabv3+/MobileNetv2/bn | Cityscapes |MODEL.MODEL_NAME: deeplabv3p

MODEL.DEEPLAB.BACKBONE: mobilenet

MODEL.DEEPLAB.DEPTH_MULTIPLIER: 1.0

MODEL.DEEPLAB.ENCODER_WITH_ASPP: False

MODEL.DEEPLAB.ENABLE_DECODER: False

MODEL.DEFAULT_NORM_TYPE: bn|[mobilenet_cityscapes.tgz](https://paddleseg.bj.bcebos.com/models/mobilenet_cityscapes.tgz) |16|false| 0.698|

| DeepLabv3+/Xception65/gn | Cityscapes |MODEL.MODEL_NAME: deeplabv3p

MODEL.DEEPLAB.BACKBONE: xception_65

MODEL.DEFAULT_NORM_TYPE: gn | [deeplabv3p_xception65_cityscapes.tgz](https://paddleseg.bj.bcebos.com/models/deeplabv3p_xception65_cityscapes.tgz) |16|false| 0.7804 |

-| DeepLabv3+/Xception65/bn | Cityscapes | MODEL.MODEL_NAME: deeplabv3p

MODEL.DEEPLAB.BACKBONE: xception_65

MODEL.DEFAULT_NORM_TYPE: bn| [Xception65_deeplab_cityscapes.tgz](https://paddleseg.bj.bcebos.com/models/Xception65_deeplab_cityscapes.tgz) | 16 | false | 0.7715 |

-| ICNet/bn | Cityscapes | MODEL.MODEL_NAME: icnet

MODEL.DEFAULT_NORM_TYPE: bn | [icnet_cityscapes.tgz](https://paddleseg.bj.bcebos.com/models/icnet_cityscapes.tgz) |16|false| 0.6854 |

+| DeepLabv3+/Xception65/bn | Cityscapes | MODEL.MODEL_NAME: deeplabv3p

MODEL.DEEPLAB.BACKBONE: xception_65

MODEL.DEFAULT_NORM_TYPE: bn| [Xception65_deeplab_cityscapes.tgz](https://paddleseg.bj.bcebos.com/models/xception65_bn_cityscapes.tgz) | 16 | false | 0.7715 |

+| ICNet/bn | Cityscapes | MODEL.MODEL_NAME: icnet

MODEL.DEFAULT_NORM_TYPE: bn | [icnet_cityscapes.tgz](https://paddleseg.bj.bcebos.com/models/icnet6831.tar.gz) |16|false| 0.6831 |

diff --git a/inference/CMakeLists.txt b/inference/CMakeLists.txt

index 4480161b4557c4ce507f93b41596b0e887c4f21d..994befc87be458ecab679c637d17cfd6239019fb 100644

--- a/inference/CMakeLists.txt

+++ b/inference/CMakeLists.txt

@@ -36,7 +36,7 @@ if (NOT DEFINED OPENCV_DIR OR ${OPENCV_DIR} STREQUAL "")

endif()

include_directories("${CMAKE_SOURCE_DIR}/")

-include_directories("${CMAKE_CURRENT_BINARY_DIR}/ext/yaml-cpp/src/yaml-cpp/include")

+include_directories("${CMAKE_CURRENT_BINARY_DIR}/ext/yaml-cpp/src/ext-yaml-cpp/include")

include_directories("${PADDLE_DIR}/")

include_directories("${PADDLE_DIR}/third_party/install/protobuf/include")

include_directories("${PADDLE_DIR}/third_party/install/glog/include")

@@ -82,7 +82,7 @@ if (WIN32)

add_definitions(-DSTATIC_LIB)

endif()

else()

- set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++14")

+ set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -o2 -std=c++11")

set(CMAKE_STATIC_LIBRARY_PREFIX "")

endif()

@@ -160,14 +160,13 @@ if (NOT WIN32)

set(EXTERNAL_LIB "-lrt -ldl -lpthread")

set(DEPS ${DEPS}

${MATH_LIB} ${MKLDNN_LIB}

- glog gflags protobuf snappystream snappy z xxhash

+ glog gflags protobuf yaml-cpp snappystream snappy z xxhash

${EXTERNAL_LIB})

else()

set(DEPS ${DEPS}

${MATH_LIB} ${MKLDNN_LIB}

opencv_world346 glog libyaml-cppmt gflags_static libprotobuf snappy zlibstatic xxhash snappystream ${EXTERNAL_LIB})

set(DEPS ${DEPS} libcmt shlwapi)

- set(DEPS ${DEPS} ${YAML_CPP_LIBRARY})

endif(NOT WIN32)

if(WITH_GPU)

@@ -206,13 +205,17 @@ ADD_LIBRARY(libpaddleseg_inference STATIC ${PADDLESEG_INFERENCE_SRCS})

target_link_libraries(libpaddleseg_inference ${DEPS})

add_executable(demo demo.cpp)

-ADD_DEPENDENCIES(libpaddleseg_inference yaml-cpp)

-ADD_DEPENDENCIES(demo yaml-cpp libpaddleseg_inference)

+ADD_DEPENDENCIES(libpaddleseg_inference ext-yaml-cpp)

+ADD_DEPENDENCIES(demo ext-yaml-cpp libpaddleseg_inference)

target_link_libraries(demo ${DEPS} libpaddleseg_inference)

-

-add_custom_command(TARGET demo POST_BUILD

+if (WIN32)

+ add_custom_command(TARGET demo POST_BUILD

COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.dll ./mklml.dll

COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.dll ./libiomp5md.dll

- COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mkldnn/bin/mkldnn.dll ./mkldnn.dll

- )

\ No newline at end of file

+ COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mkldnn/lib/mkldnn.dll ./mkldnn.dll

+ COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.dll ./release/mklml.dll

+ COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.dll ./release/libiomp5md.dll

+ COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mkldnn/lib/mkldnn.dll ./mkldnn.dll

+ )

+endif()

diff --git a/inference/README.md b/inference/README.md

index e11ffb72e9d515c0302078d32dc4e7b09b4df9c3..a29a45b54de496399809081a3dd4bd0c8cbde929 100644

--- a/inference/README.md

+++ b/inference/README.md

@@ -4,132 +4,133 @@

本目录提供一个跨平台的图像分割模型的C++预测部署方案,用户通过一定的配置,加上少量的代码,即可把模型集成到自己的服务中,完成图像分割的任务。

-主要设计的目标包括以下三点:

-- 跨平台,支持在 windows和Linux完成编译、开发和部署

+主要设计的目标包括以下四点:

+- 跨平台,支持在 windows 和 Linux 完成编译、开发和部署

- 支持主流图像分割任务,用户通过少量配置即可加载模型完成常见预测任务,比如人像分割等

- 可扩展性,支持用户针对新模型开发自己特殊的数据预处理、后处理等逻辑

-

+- 高性能,除了`PaddlePaddle`自身带来的性能优势,我们还针对图像分割的特点对关键步骤进行了性能优化

## 主要目录和文件

-| 文件 | 作用 |

-|-------|----------|

-| CMakeList.txt | cmake 编译配置文件 |

-| external-cmake| 依赖的外部项目 cmake (目前仅有yaml-cpp)|

-| demo.cpp | 示例C++代码,演示加载模型完成预测任务 |

-| predictor | 加载模型并预测的类代码|

-| preprocess |数据预处理相关的类代码|

-| utils | 一些基础公共函数|

-| images/humanseg | 样例人像分割模型的测试图片目录|

-| conf/humanseg.yaml | 示例人像分割模型配置|

-| tools/visualize.py | 预测结果彩色可视化脚本 |

-

-## Windows平台编译

-

-### 前置条件

-* Visual Studio 2015+

-* CUDA 8.0 / CUDA 9.0 + CuDNN 7

-* CMake 3.0+

-

-我们分别在 `Visual Studio 2015` 和 `Visual Studio 2019 Community` 两个版本下做了测试.

-

-**下面所有示例,以根目录为 `D:\`演示**

-

-### Step1: 下载代码

-

-1. `git clone http://gitlab.baidu.com/Paddle/PaddleSeg.git`

-2. 拷贝 `D:\PaddleSeg\inference\` 目录到 `D:\PaddleDeploy`下

-

-目录`D:\PaddleDeploy\inference` 目录包含了`CMakelist.txt`以及代码等项目文件.

-

-

-

-### Step2: 下载PaddlePaddle预测库fluid_inference

-根据Windows环境,下载相应版本的PaddlePaddle预测库,并解压到`D:\PaddleDeploy\`目录

-

-| CUDA | GPU | 下载地址 |

-|------|------|--------|

-| 8.0 | Yes | [fluid_inference.zip](https://bj.bcebos.com/v1/paddleseg/fluid_inference_win.zip) |

-| 9.0 | Yes | [fluid_inference_cuda90.zip](https://paddleseg.bj.bcebos.com/fluid_inference_cuda9_cudnn7.zip) |

-

-`D:\PaddleDeploy\fluid_inference`目录包含内容为:

-```bash

-paddle # paddle核心目录

-third_party # paddle 第三方依赖

-version.txt # 编译的版本信息

```

+inference

+├── demo.cpp # 演示加载模型、读入数据、完成预测任务C++代码

+|

+├── conf

+│ └── humanseg.yaml # 示例人像分割模型配置

+├── images

+│ └── humanseg # 示例人像分割模型测试图片目录

+├── tools

+│ └── visualize.py # 示例人像分割模型结果可视化脚本

+├── docs

+| ├── linux_build.md # Linux 编译指南

+| ├── windows_vs2015_build.md # windows VS2015编译指南

+│ └── windows_vs2019_build.md # Windows VS2019编译指南

+|

+├── utils # 一些基础公共函数

+|

+├── preprocess # 数据预处理相关代码

+|

+├── predictor # 模型加载和预测相关代码

+|

+├── CMakeList.txt # cmake编译入口文件

+|

+└── external-cmake # 依赖的外部项目cmake(目前仅有yaml-cpp)

+```

-### Step3: 安装配置OpenCV

+## 编译

+支持在`Windows`和`Linux`平台编译和使用:

+- [Linux 编译指南](./docs/linux_build.md)

+- [Windows 使用 Visual Studio 2019 Community 编译指南](./docs/windows_vs2019_build.md)

+- [Windows 使用 Visual Studio 2015 编译指南](./docs/windows_vs2015_build.md)

-1. 在OpenCV官网下载适用于Windows平台的3.4.6版本, [下载地址](https://sourceforge.net/projects/opencvlibrary/files/3.4.6/opencv-3.4.6-vc14_vc15.exe/download)

-2. 运行下载的可执行文件,将OpenCV解压至指定目录,如`D:\PaddleDeploy\opencv`

-3. 配置环境变量,如下流程所示

- 1. 我的电脑->属性->高级系统设置->环境变量

- 2. 在系统变量中找到Path(如没有,自行创建),并双击编辑

- 3. 新建,将opencv路径填入并保存,如`D:\PaddleDeploy\opencv\build\x64\vc14\bin`

+`Windows`上推荐使用最新的`Visual Studio 2019 Community`直接编译`CMake`项目。

-### Step4: 以VS2015为例编译代码

+## 预测并可视化结果

-以下命令需根据自己系统中各相关依赖的路径进行修改

+完成编译后,便生成了需要的可执行文件和链接库,然后执行以下步骤:

-* 调用VS2015, 请根据实际VS安装路径进行调整,打开cmd命令行工具执行以下命令

-* 其他vs版本,请查找到对应版本的`vcvarsall.bat`路径,替换本命令即可

+### 1. 下载模型文件

+我们提供了一个人像分割模型示例用于测试,点击右侧地址下载:[示例模型下载地址](https://paddleseg.bj.bcebos.com/inference_model/deeplabv3p_xception65_humanseg.tgz)

+下载并解压,解压后目录结构如下:

```

-call "C:\Program Files (x86)\Microsoft Visual Studio 14.0\VC\vcvarsall.bat" amd64

+deeplabv3p_xception65_humanseg

+├── __model__ # 模型文件

+|

+└── __params__ # 参数文件

```

-

-* CMAKE编译工程

- * PADDLE_DIR: fluid_inference预测库目录

- * CUDA_LIB: CUDA动态库目录, 请根据实际安装情况调整

- * OPENCV_DIR: OpenCV解压目录

-

-```

-# 创建CMake的build目录

-D:

-cd PaddleDeploy\inference

-mkdir build

-cd build

-D:\PaddleDeploy\inference\build> cmake .. -G "Visual Studio 14 2015 Win64" -DWITH_GPU=ON -DPADDLE_DIR=D:\PaddleDeploy\fluid_inference -DCUDA_LIB=D:\PaddleDeploy\cudalib\v8.0\lib\x64 -DOPENCV_DIR=D:\PaddleDeploy\opencv -T host=x64

+解压后把上述目录拷贝到合适的路径:

+

+**假设**`Windows`系统上,我们模型和参数文件所在路径为`D:\projects\models\deeplabv3p_xception65_humanseg`。

+

+**假设**`Linux`上对应的路径则为`/root/projects/models/deeplabv3p_xception65_humanseg`。

+

+

+### 2. 修改配置

+源代码的`conf`目录下提供了示例人像分割模型的配置文件`humanseg.yaml`, 相关的字段含义和说明如下:

+```yaml

+DEPLOY:

+ # 是否使用GPU预测

+ USE_GPU: 1

+ # 模型和参数文件所在目录路径

+ MODEL_PATH: "/root/projects/models/deeplabv3p_xception65_humanseg"

+ # 模型文件名

+ MODEL_FILENAME: "__model__"

+ # 参数文件名

+ PARAMS_FILENAME: "__params__"

+ # 预测图片的的标准输入尺寸,输入尺寸不一致会做resize

+ EVAL_CROP_SIZE: (513, 513)

+ # 均值

+ MEAN: [104.008, 116.669, 122.675]

+ # 方差

+ STD: [1.0, 1.0, 1.0]

+ # 图片类型, rgb 或者 rgba

+ IMAGE_TYPE: "rgb"

+ # 分类类型数

+ NUM_CLASSES: 2

+ # 图片通道数

+ CHANNELS : 3

+ # 预处理方式,目前提供图像分割的通用处理类SegPreProcessor

+ PRE_PROCESSOR: "SegPreProcessor"

+ # 预测模式,支持 NATIVE 和 ANALYSIS

+ PREDICTOR_MODE: "ANALYSIS"

+ # 每次预测的 batch_size

+ BATCH_SIZE : 3

```

+修改字段`MODEL_PATH`的值为你在**上一步**下载并解压的模型文件所放置的目录即可。

-这里的`cmake`参数`-G`, 可以根据自己的`VS`版本调整,具体请参考[cmake文档](https://cmake.org/cmake/help/v3.15/manual/cmake-generators.7.html)

-* 生成可执行文件

+### 3. 执行预测

-```

-D:\PaddleDeploy\inference\build> msbuild /m /p:Configuration=Release cpp_inference_demo.sln

-```

-

-### Step5: 预测及可视化

-

-上步骤中编译生成的可执行文件和相关动态链接库并保存在build/Release目录下,可通过Windows命令行直接调用。

-可下载并解压示例模型进行测试,点击下载示例的人像分割模型[下载地址](https://paddleseg.bj.bcebos.com/inference_model/deeplabv3p_xception65_humanseg.tgz)

-

-假设解压至 `D:\PaddleDeploy\models\deeplabv3p_xception65_humanseg` ,执行以下命令:

+在终端中切换到生成的可执行文件所在目录为当前目录(Windows系统为`cmd`)。

+`Linux` 系统中执行以下命令:

+```shell

+./demo --conf=/root/projects/PaddleSeg/inference/conf/humanseg.yaml --input_dir=/root/projects/PaddleSeg/inference/images/humanseg/

```

-cd Release

-D:\PaddleDeploy\inference\build\Release> demo.exe --conf=D:\\PaddleDeploy\\inference\\conf\\humanseg.yaml --input_dir=D:\\PaddleDeploy\\inference\\images\humanseg\\

+`Windows` 中执行以下命令:

+```shell

+D:\projects\PaddleSeg\inference\build\Release>demo.exe --conf=D:\\projects\\PaddleSeg\\inference\\conf\\humanseg.yaml --input_dir=D:\\projects\\PaddleSeg\\inference\\images\humanseg\\

```

+

预测使用的两个命令参数说明如下:

| 参数 | 含义 |

|-------|----------|

-| conf | 模型配置的yaml文件路径 |

+| conf | 模型配置的Yaml文件路径 |

| input_dir | 需要预测的图片目录 |

-**配置文件**的样例以及字段注释说明请参考: [conf/humanseg.yaml](./conf/humanseg.yaml)

-样例程序会扫描input_dir目录下的所有图片,并生成对应的预测结果图片。

+配置文件说明请参考上一步,样例程序会扫描input_dir目录下的所有图片,并生成对应的预测结果图片:

文件`demo.jpg`预测的结果存储在`demo_jpg.png`中,可视化结果在`demo_jpg_scoremap.png`中, 原始尺寸的预测结果在`demo_jpg_recover.png`中。

输入原图

-

+

输出预测结果

-

+

diff --git a/inference/conf/humanseg.yaml b/inference/conf/humanseg.yaml

index cb5d2f165bfa3601cfec213e754d702b2e0c32ff..537005e18bffe3b62456d873bba12ba01d572291 100644

--- a/inference/conf/humanseg.yaml

+++ b/inference/conf/humanseg.yaml

@@ -1,6 +1,6 @@

DEPLOY:

USE_GPU: 1

- MODEL_PATH: "C:\\PaddleDeploy\\models\\deeplabv3p_xception65_humanseg"

+ MODEL_PATH: "/root/projects/models/deeplabv3p_xception65_humanseg"

MODEL_NAME: "unet"

MODEL_FILENAME: "__model__"

PARAMS_FILENAME: "__params__"

@@ -11,5 +11,5 @@ DEPLOY:

NUM_CLASSES: 2

CHANNELS : 3

PRE_PROCESSOR: "SegPreProcessor"

- PREDICTOR_MODE: "ANALYSIS"

- BATCH_SIZE : 3

\ No newline at end of file

+ PREDICTOR_MODE: "NATIVE"

+ BATCH_SIZE : 3

diff --git a/inference/demo.cpp b/inference/demo.cpp

index 763e01b4ed163b7ac02800112a2faa114074bd12..657d4f4244069d0a59c4dee7827d047a375f2741 100644

--- a/inference/demo.cpp

+++ b/inference/demo.cpp

@@ -21,7 +21,6 @@ int main(int argc, char** argv) {

// 2. get all the images with extension '.jpeg' at input_dir

auto imgs = PaddleSolution::utils::get_directory_images(FLAGS_input_dir, ".jpeg|.jpg");

-

// 3. predict

predictor.predict(imgs);

return 0;

diff --git a/inference/docs/linux_build.md b/inference/docs/linux_build.md

new file mode 100644

index 0000000000000000000000000000000000000000..53742c97005d85225c85ec33432d8e5fef02c9df

--- /dev/null

+++ b/inference/docs/linux_build.md

@@ -0,0 +1,69 @@

+# Linux平台 编译指南

+

+## 说明

+本文档在 `Linux`平台使用`GCC 4.8.5` 和 `GCC 4.9.4`测试过,如果需要使用更高G++版本编译使用,则需要重新编译Paddle预测库,请参考: [从源码编译Paddle预测库](https://www.paddlepaddle.org.cn/documentation/docs/zh/develop/advanced_usage/deploy/inference/build_and_install_lib_cn.html#id15)。

+

+## 前置条件

+* G++ 4.8.2 ~ 4.9.4

+* CUDA 8.0/ CUDA 9.0

+* CMake 3.0+

+

+请确保系统已经安装好上述基本软件,**下面所有示例以工作目录为 `/root/projects/`演示**。

+

+### Step1: 下载代码

+

+1. `mkdir -p /root/projects/ && cd /root/projects`

+2. `git clone http://gitlab.baidu.com/Paddle/PaddleSeg.git`

+

+`C++`预测代码在`/root/projects/projects/PaddleSeg/inference` 目录,该目录不依赖任何`PaddleSeg`下其他目录。

+

+

+### Step2: 下载PaddlePaddle C++ 预测库 fluid_inference

+

+目前仅支持`CUDA 8` 和 `CUDA 9`,请点击 [PaddlePaddle预测库下载地址](https://www.paddlepaddle.org.cn/documentation/docs/zh/develop/advanced_usage/deploy/inference/build_and_install_lib_cn.html)下载对应的版本。

+

+

+下载并解压后`/root/projects/fluid_inference`目录包含内容为:

+```

+fluid_inference

+├── paddle # paddle核心库和头文件

+|

+├── third_party # 第三方依赖库和头文件

+|

+└── version.txt # 版本和编译信息

+```

+

+### Step3: 安装配置OpenCV

+```shell

+# 0. 切换到/root/projects目录

+cd /root/projects

+# 1. 下载OpenCV3.4.6版本源代码

+wget -c https://paddleseg.bj.bcebos.com/inference/opencv-3.4.6.zip

+# 2. 解压

+unzip opencv-3.4.6.zip && cd opencv-3.4.6

+# 3. 创建build目录并编译, 这里安装到/usr/local/opencv3目录

+mkdir build && cd build

+cmake .. -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX=/usr/local/opencv3

+make -j4

+make install

+```

+

+### Step4: 编译

+```shell

+cd /root/projects/PaddleSeg/inference

+mkdir build && cd build

+cmake .. -DWITH_GPU=ON -DPADDLE_DIR=/root/projects/fluid_inference -DCUDA_LIB=/usr/local/cuda/lib64/ -DOPENCV_DIR=/usr/local/opencv3/ -DCUDNN_LIB=/usr/local/cuda/lib64/

+make

+```

+

+### Step5: 预测及可视化

+

+执行命令:

+

+```

+./demo --conf=/path/to/your/conf --input_dir=/path/to/your/input/data/directory

+```

+

+更详细说明请参考ReadMe文档: [预测和可视化部分](../ReadMe.md)

+

+

diff --git a/inference/docs/windows_vs2015_build.md b/inference/docs/windows_vs2015_build.md

new file mode 100644

index 0000000000000000000000000000000000000000..b57167aeb977be8b8003fb57bf5d12a4824c2059

--- /dev/null

+++ b/inference/docs/windows_vs2015_build.md

@@ -0,0 +1,98 @@

+# Windows平台使用 Visual Studio 2015 编译指南

+

+本文档步骤,我们同时在`Visual Studio 2015` 和 `Visual Studio 2019 Community` 两个版本进行了测试,我们推荐使用[`Visual Studio 2019`直接编译`CMake`项目](./windows_vs2019_build.md)。

+

+

+## 前置条件

+* Visual Studio 2015

+* CUDA 8.0/ CUDA 9.0

+* CMake 3.0+

+

+请确保系统已经安装好上述基本软件,**下面所有示例以工作目录为 `D:\projects`演示**。

+

+### Step1: 下载代码

+

+1. 打开`cmd`, 执行 `cd /d D:\projects`

+2. `git clone http://gitlab.baidu.com/Paddle/PaddleSeg.git`

+

+`C++`预测库代码在`D:\projects\PaddleSeg\inference` 目录,该目录不依赖任何`PaddleSeg`下其他目录。

+

+

+### Step2: 下载PaddlePaddle C++ 预测库 fluid_inference

+

+根据Windows环境,下载相应版本的PaddlePaddle预测库,并解压到`D:\projects\`目录

+

+| CUDA | GPU | 下载地址 |

+|------|------|--------|

+| 8.0 | Yes | [fluid_inference.zip](https://bj.bcebos.com/v1/paddleseg/fluid_inference_win.zip) |

+| 9.0 | Yes | [fluid_inference_cuda90.zip](https://paddleseg.bj.bcebos.com/fluid_inference_cuda9_cudnn7.zip) |

+

+解压后`D:\projects\fluid_inference`目录包含内容为:

+```

+fluid_inference

+├── paddle # paddle核心库和头文件

+|

+├── third_party # 第三方依赖库和头文件

+|

+└── version.txt # 版本和编译信息

+```

+

+### Step3: 安装配置OpenCV

+

+1. 在OpenCV官网下载适用于Windows平台的3.4.6版本, [下载地址](https://sourceforge.net/projects/opencvlibrary/files/3.4.6/opencv-3.4.6-vc14_vc15.exe/download)

+2. 运行下载的可执行文件,将OpenCV解压至指定目录,如`D:\PaddleDeploy\opencv`

+3. 配置环境变量,如下流程所示

+ - 我的电脑->属性->高级系统设置->环境变量

+ - 在系统变量中找到Path(如没有,自行创建),并双击编辑

+ - 新建,将opencv路径填入并保存,如`D:\PaddleDeploy\opencv\build\x64\vc14\bin`

+

+### Step4: 以VS2015为例编译代码

+

+以下命令需根据自己系统中各相关依赖的路径进行修改

+

+* 调用VS2015, 请根据实际VS安装路径进行调整,打开cmd命令行工具执行以下命令

+* 其他vs版本(比如vs2019),请查找到对应版本的`vcvarsall.bat`路径,替换本命令即可

+

+```

+call "C:\Program Files (x86)\Microsoft Visual Studio 14.0\VC\vcvarsall.bat" amd64

+```

+

+* CMAKE编译工程

+ * PADDLE_DIR: fluid_inference预测库路径

+ * CUDA_LIB: CUDA动态库目录, 请根据实际安装情况调整

+ * OPENCV_DIR: OpenCV解压目录

+

+```

+# 切换到预测库所在目录

+cd /d D:\projects\PaddleSeg\inference\

+# 创建构建目录, 重新构建只需要删除该目录即可

+mkdir build

+cd build

+# cmake构建VS项目

+D:\projects\PaddleSeg\inference\build> cmake .. -G "Visual Studio 14 2015 Win64" -DWITH_GPU=ON -DPADDLE_DIR=D:\projects\fluid_inference -DCUDA_LIB=D:\projects\cudalib\v8.0\lib\x64 -DOPENCV_DIR=D:\projects\opencv -T host=x64

+```

+

+这里的`cmake`参数`-G`, 表示生成对应的VS版本的工程,可以根据自己的`VS`版本调整,具体请参考[cmake文档](https://cmake.org/cmake/help/v3.15/manual/cmake-generators.7.html)

+

+* 生成可执行文件

+

+```

+D:\projects\PaddleSeg\inference\build> msbuild /m /p:Configuration=Release cpp_inference_demo.sln

+```

+

+### Step5: 预测及可视化

+

+上述`Visual Studio 2015`编译产出的可执行文件在`build\release`目录下,切换到该目录:

+```

+cd /d D:\projects\PaddleSeg\inference\build\release

+```

+

+之后执行命令:

+

+```

+demo.exe --conf=/path/to/your/conf --input_dir=/path/to/your/input/data/directory

+```

+

+更详细说明请参考ReadMe文档: [预测和可视化部分](../README.md)

+

+

diff --git a/inference/docs/windows_vs2019_build.md b/inference/docs/windows_vs2019_build.md

new file mode 100644

index 0000000000000000000000000000000000000000..b60201f7c7c16330fe50b4eb0f51019c4ec01f71

--- /dev/null

+++ b/inference/docs/windows_vs2019_build.md

@@ -0,0 +1,101 @@

+# Visual Studio 2019 Community CMake 编译指南

+

+Windows 平台下,我们使用`Visual Studio 2015` 和 `Visual Studio 2019 Community` 进行了测试。微软从`Visual Studio 2017`开始即支持直接管理`CMake`跨平台编译项目,但是直到`2019`才提供了稳定和完全的支持,所以如果你想使用CMake管理项目编译构建,我们推荐你使用`Visual Studio 2019`环境下构建。

+

+你也可以使用和`VS2015`一样,通过把`CMake`项目转化成`VS`项目来编译,其中**有差别的部分**在文档中我们有说明,请参考:[使用Visual Studio 2015 编译指南](./windows_vs2015_build.md)

+

+## 前置条件

+* Visual Studio 2019

+* CUDA 8.0/ CUDA 9.0

+* CMake 3.0+

+

+请确保系统已经安装好上述基本软件,我们使用的是`VS2019`的社区版。

+

+**下面所有示例以工作目录为 `D:\projects`演示**。

+

+### Step1: 下载代码

+

+1. 点击下载源代码:[下载地址](https://github.com/PaddlePaddle/PaddleSeg/archive/master.zip)

+2. 解压,解压后目录重命名为`PaddleSeg`

+

+以下代码目录路径为`D:\projects\PaddleSeg` 为例。

+

+

+### Step2: 下载PaddlePaddle C++ 预测库 fluid_inference

+

+根据Windows环境,下载相应版本的PaddlePaddle预测库,并解压到`D:\projects\`目录

+

+| CUDA | GPU | 下载地址 |

+|------|------|--------|

+| 8.0 | Yes | [fluid_inference.zip](https://bj.bcebos.com/v1/paddleseg/fluid_inference_win.zip) |

+| 9.0 | Yes | [fluid_inference_cuda90.zip](https://paddleseg.bj.bcebos.com/fluid_inference_cuda9_cudnn7.zip) |

+

+解压后`D:\projects\fluid_inference`目录包含内容为:

+```

+fluid_inference

+├── paddle # paddle核心库和头文件

+|

+├── third_party # 第三方依赖库和头文件

+|

+└── version.txt # 版本和编译信息

+```

+**注意:** `CUDA90`版本解压后目录名称为`fluid_inference_cuda90`。

+

+### Step3: 安装配置OpenCV

+

+1. 在OpenCV官网下载适用于Windows平台的3.4.6版本, [下载地址](https://sourceforge.net/projects/opencvlibrary/files/3.4.6/opencv-3.4.6-vc14_vc15.exe/download)

+2. 运行下载的可执行文件,将OpenCV解压至指定目录,如`D:\projects\opencv`

+3. 配置环境变量,如下流程所示

+ - 我的电脑->属性->高级系统设置->环境变量

+ - 在系统变量中找到Path(如没有,自行创建),并双击编辑

+ - 新建,将opencv路径填入并保存,如`D:\projects\opencv\build\x64\vc14\bin`

+

+### Step4: 使用Visual Studio 2019直接编译CMake

+

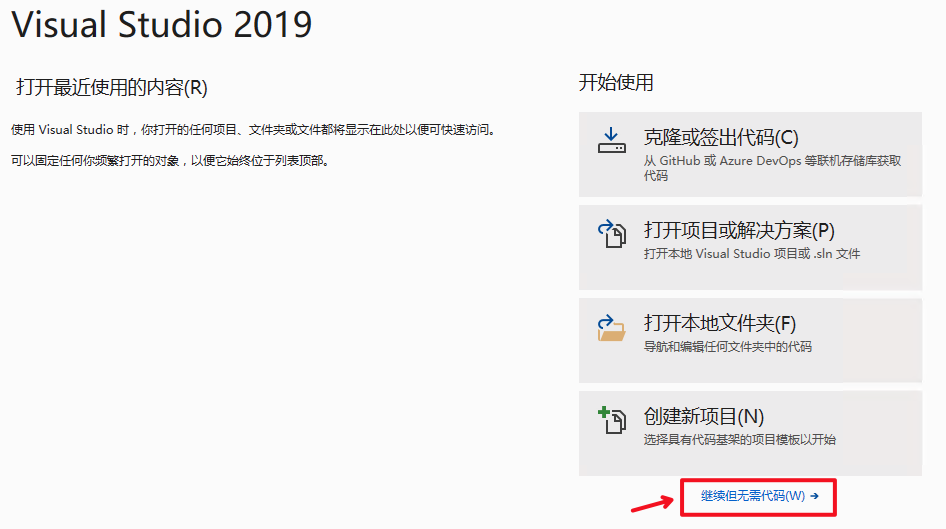

+1. 打开Visual Studio 2019 Community,点击`继续但无需代码`

+

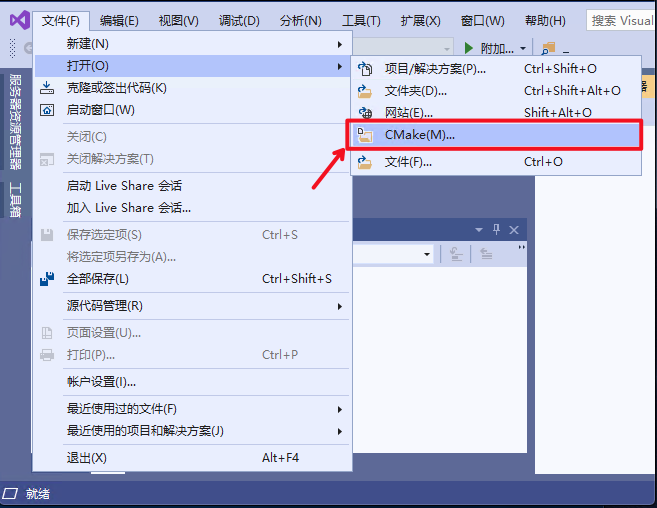

+2. 点击: `文件`->`打开`->`CMake`

+

+

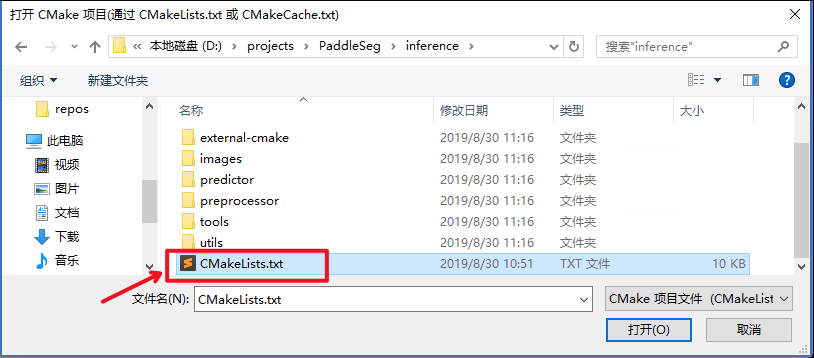

+选择项目代码所在路径,并打开`CMakeList.txt`:

+

+

+

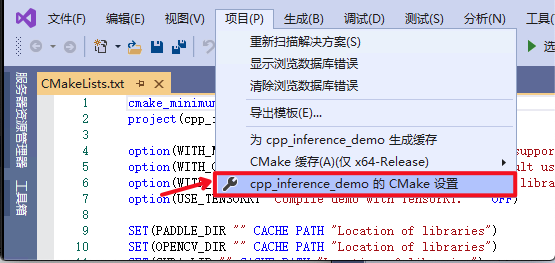

+3. 点击:`项目`->`cpp_inference_demo的CMake设置`

+

+

+

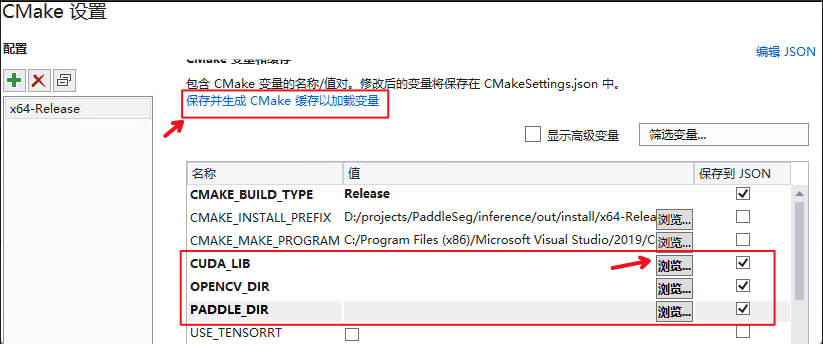

+4. 点击`浏览`,分别设置编译选项指定`CUDA`、`OpenCV`、`Paddle预测库`的路径

+

+

+

+三个编译参数的含义说明如下:

+

+| 参数名 | 含义 |

+| ---- | ---- |

+| CUDA_LIB | cuda的库路径 |

+| OPENCV_DIR | OpenCV的安装路径, |

+| PADDLE_DIR | Paddle预测库的路径 |

+

+**设置完成后**, 点击上图中`保存并生成CMake缓存以加载变量`。

+

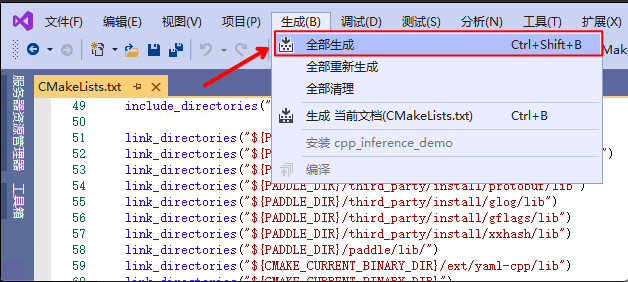

+5. 点击`生成`->`全部生成`

+

+

+

+

+### Step5: 预测及可视化

+

+上述`Visual Studio 2019`编译产出的可执行文件在`out\build\x64-Release`目录下,打开`cmd`,并切换到该目录:

+

+```

+cd /d D:\projects\PaddleSeg\inference\out\x64-Release

+```

+

+之后执行命令:

+

+```

+demo.exe --conf=/path/to/your/conf --input_dir=/path/to/your/input/data/directory

+```

+

+更详细说明请参考ReadMe文档: [预测和可视化部分](../ReadMe.md)

diff --git a/inference/external-cmake/yaml-cpp.cmake b/inference/external-cmake/yaml-cpp.cmake

index fefa1826876e5c25eef6367b6ca3bf49f72d7c10..15fa2674e00d85f1db7bbdfdceeebadaf0eabf5a 100644

--- a/inference/external-cmake/yaml-cpp.cmake

+++ b/inference/external-cmake/yaml-cpp.cmake

@@ -6,7 +6,7 @@ include(ExternalProject)

message("${CMAKE_BUILD_TYPE}")

ExternalProject_Add(

- yaml-cpp

+ ext-yaml-cpp

GIT_REPOSITORY https://github.com/jbeder/yaml-cpp.git

GIT_TAG e0e01d53c27ffee6c86153fa41e7f5e57d3e5c90

CMAKE_ARGS

@@ -26,4 +26,4 @@ ExternalProject_Add(

# Disable install step

INSTALL_COMMAND ""

LOG_DOWNLOAD ON

-)

\ No newline at end of file

+)

diff --git a/inference/images/humanseg/1.jpg b/inference/images/humanseg/1.jpg

deleted file mode 100644

index 33572fe5fcb36962e9b49cfcf7f9c888490226c1..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/1.jpg and /dev/null differ

diff --git a/inference/images/humanseg/10.jpg b/inference/images/humanseg/10.jpg

deleted file mode 100644

index 735d588935c618a4753f577b0411ca698de11030..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/10.jpg and /dev/null differ

diff --git a/inference/images/humanseg/11.jpg b/inference/images/humanseg/11.jpg

deleted file mode 100644

index 54ebeecf1c5374282cf1c5f927f655cdaafb03fe..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/11.jpg and /dev/null differ

diff --git a/inference/images/humanseg/12.jpg b/inference/images/humanseg/12.jpg

deleted file mode 100644

index 01c08457da7c8b077971b1801a4d209b2fbaee5a..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/12.jpg and /dev/null differ

diff --git a/inference/images/humanseg/13.jpg b/inference/images/humanseg/13.jpg

deleted file mode 100644

index bc989ebc693cb9b7cb2b8b78557e5d644b25712f..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/13.jpg and /dev/null differ

diff --git a/inference/images/humanseg/14.jpg b/inference/images/humanseg/14.jpg

deleted file mode 100644

index d5dbead2d253cca159028375f1a2c2dcae78a97f..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/14.jpg and /dev/null differ

diff --git a/inference/images/humanseg/2.jpg b/inference/images/humanseg/2.jpg

deleted file mode 100644

index b6e07945118facd5e40d4aa768394b3a3ef21201..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/2.jpg and /dev/null differ

diff --git a/inference/images/humanseg/3.jpg b/inference/images/humanseg/3.jpg

deleted file mode 100644

index 74a5e6e5841b3483dd5598a5390c13830fdc7b0e..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/3.jpg and /dev/null differ

diff --git a/inference/images/humanseg/4.jpg b/inference/images/humanseg/4.jpg

deleted file mode 100644

index 640fdf9167cd703b450dd4ad24d220ad4ea431a4..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/4.jpg and /dev/null differ

diff --git a/inference/images/humanseg/5.jpg b/inference/images/humanseg/5.jpg

deleted file mode 100644

index f6878129705b31f467729b06b176555cf6f956db..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/5.jpg and /dev/null differ

diff --git a/inference/images/humanseg/6.jpg b/inference/images/humanseg/6.jpg

deleted file mode 100644

index 3d10c0d1ea47987153f7c51e6b992d1dfe35d3ae..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/6.jpg and /dev/null differ

diff --git a/inference/images/humanseg/7.jpg b/inference/images/humanseg/7.jpg

deleted file mode 100644

index 41a99436a4d1cccdaf57e09646f1e40aca285a6b..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/7.jpg and /dev/null differ

diff --git a/inference/images/humanseg/8.jpg b/inference/images/humanseg/8.jpg

deleted file mode 100644

index 4f86baf95d9eb6b5849b84e58a7e2d47a18c8949..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/8.jpg and /dev/null differ

diff --git a/inference/images/humanseg/9.jpg b/inference/images/humanseg/9.jpg

deleted file mode 100644

index 9e4a6268ffc9377f729dcb391b1efef70139545c..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/9.jpg and /dev/null differ

diff --git a/inference/images/humanseg/demo.jpg b/inference/images/humanseg/demo.jpg

deleted file mode 100644

index 77b9a93e69db37e825c6e0c092636f9e4b3b5c33..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/demo.jpg and /dev/null differ

diff --git a/inference/images/humanseg/demo1.jpeg b/inference/images/humanseg/demo1.jpeg

new file mode 100644

index 0000000000000000000000000000000000000000..de231b52c7f0dc0848dea2bcf297cc8d908559ca

Binary files /dev/null and b/inference/images/humanseg/demo1.jpeg differ

diff --git a/inference/images/humanseg/demo2.jpeg b/inference/images/humanseg/demo2.jpeg

new file mode 100644

index 0000000000000000000000000000000000000000..c3919623107a4edc8210739d0f05930215f8cda6

Binary files /dev/null and b/inference/images/humanseg/demo2.jpeg differ

diff --git a/inference/images/humanseg/demo2_jpeg_recover.png b/inference/images/humanseg/demo2_jpeg_recover.png

new file mode 100644

index 0000000000000000000000000000000000000000..534bbd9443b1a93c65ce1ce7137a03a0ee0f864b

Binary files /dev/null and b/inference/images/humanseg/demo2_jpeg_recover.png differ

diff --git a/inference/images/humanseg/demo3.jpeg b/inference/images/humanseg/demo3.jpeg

new file mode 100644

index 0000000000000000000000000000000000000000..c02b837749065111d75a908d4f1182a593cdb143

Binary files /dev/null and b/inference/images/humanseg/demo3.jpeg differ

diff --git a/inference/images/humanseg/demo_jpg_recover.png b/inference/images/humanseg/demo_jpg_recover.png

deleted file mode 100644

index e28f06e30d5a6ea7373c5ff64c55b1d040f73a64..0000000000000000000000000000000000000000

Binary files a/inference/images/humanseg/demo_jpg_recover.png and /dev/null differ

diff --git a/inference/predictor/seg_predictor.cpp b/inference/predictor/seg_predictor.cpp

index 4b6e44e6f6662dbc67efe3db289e5a2f119b8f0c..ee32d75561e5d93fa11c7013d1a4a9f845dc9919 100644

--- a/inference/predictor/seg_predictor.cpp

+++ b/inference/predictor/seg_predictor.cpp

@@ -125,6 +125,10 @@ namespace PaddleSolution {

int Predictor::native_predict(const std::vector& imgs)

{

+ if (imgs.size() == 0) {

+ LOG(ERROR) << "No image found";

+ return -1;

+ }

int config_batch_size = _model_config._batch_size;

int channels = _model_config._channels;

@@ -205,6 +209,11 @@ namespace PaddleSolution {

int Predictor::analysis_predict(const std::vector& imgs) {

+ if (imgs.size() == 0) {

+ LOG(ERROR) << "No image found";

+ return -1;

+ }

+

int config_batch_size = _model_config._batch_size;

int channels = _model_config._channels;

int eval_width = _model_config._resize[0];

diff --git a/inference/utils/seg_conf_parser.h b/inference/utils/seg_conf_parser.h

index b2a2b4160217433b553540129e1075640822a9dc..078d04f3eb9dcd1763f69a8eb770c0853f9f1b24 100644

--- a/inference/utils/seg_conf_parser.h

+++ b/inference/utils/seg_conf_parser.h

@@ -28,7 +28,6 @@ namespace PaddleSolution {

_channels = 0;

_use_gpu = 0;

_batch_size = 1;

- _model_name.clear();

_model_file_name.clear();

_model_path.clear();

_param_file_name.clear();

@@ -57,7 +56,7 @@ namespace PaddleSolution {

}

bool load_config(const std::string& conf_file) {

-

+

reset();

YAML::Node config = YAML::LoadFile(conf_file);

@@ -79,8 +78,6 @@ namespace PaddleSolution {

_img_type = config["DEPLOY"]["IMAGE_TYPE"].as();

// 5. get class number

_class_num = config["DEPLOY"]["NUM_CLASSES"].as();

- // 6. get model_name

- _model_name = config["DEPLOY"]["MODEL_NAME"].as();

// 7. set model path

_model_path = config["DEPLOY"]["MODEL_PATH"].as();

// 8. get model file_name

@@ -101,7 +98,7 @@ namespace PaddleSolution {

}

void debug() const {

-

+

std::cout << "EVAL_CROP_SIZE: (" << _resize[0] << ", " << _resize[1] << ")" << std::endl;

std::cout << "MEAN: [";

@@ -129,7 +126,6 @@ namespace PaddleSolution {

std::cout << "DEPLOY.NUM_CLASSES: " << _class_num << std::endl;

std::cout << "DEPLOY.CHANNELS: " << _channels << std::endl;

std::cout << "DEPLOY.MODEL_PATH: " << _model_path << std::endl;

- std::cout << "DEPLOY.MODEL_NAME: " << _model_name << std::endl;

std::cout << "DEPLOY.MODEL_FILENAME: " << _model_file_name << std::endl;

std::cout << "DEPLOY.PARAMS_FILENAME: " << _param_file_name << std::endl;

std::cout << "DEPLOY.PRE_PROCESSOR: " << _pre_processor << std::endl;

@@ -152,8 +148,6 @@ namespace PaddleSolution {

int _channels;

// DEPLOY.MODEL_PATH

std::string _model_path;

- // DEPLOY.MODEL_NAME

- std::string _model_name;

// DEPLOY.MODEL_FILENAME

std::string _model_file_name;

// DEPLOY.PARAMS_FILENAME

diff --git a/inference/utils/utils.h b/inference/utils/utils.h

index e9c62b5778aa77e427ec5653d056035ee4028bd9..e349618a28282257b01ac44d661f292850cc19b9 100644

--- a/inference/utils/utils.h

+++ b/inference/utils/utils.h

@@ -3,7 +3,13 @@

#include

#include

#include

+

+#ifdef _WIN32

#include

+#else

+#include

+#include

+#endif

namespace PaddleSolution {

namespace utils {

@@ -14,7 +20,31 @@ namespace PaddleSolution {

#endif

return dir + seperator + path;

}

+ #ifndef _WIN32

+ // scan a directory and get all files with input extensions

+ inline std::vector get_directory_images(const std::string& path, const std::string& exts)

+ {

+ std::vector imgs;

+ struct dirent *entry;

+ DIR *dir = opendir(path.c_str());

+ if (dir == NULL) {

+ closedir(dir);

+ return imgs;

+ }

+ while ((entry = readdir(dir)) != NULL) {

+ std::string item = entry->d_name;

+ auto ext = strrchr(entry->d_name, '.');

+ if (!ext || std::string(ext) == "." || std::string(ext) == "..") {

+ continue;

+ }

+ if (exts.find(ext) != std::string::npos) {

+ imgs.push_back(path_join(path, entry->d_name));

+ }

+ }

+ return imgs;

+ }

+ #else

// scan a directory and get all files with input extensions

inline std::vector get_directory_images(const std::string& path, const std::string& exts)

{

@@ -28,5 +58,6 @@ namespace PaddleSolution {

}

return imgs;

}

+ #endif

}

}

diff --git a/pdseg/reader.py b/pdseg/reader.py

index 98a0eb0f4d5a1a51f6425bc049e46f6e741c765a..b4ebb4eba753b91aaa619c012611b7cab0614841 100644

--- a/pdseg/reader.py

+++ b/pdseg/reader.py

@@ -106,19 +106,21 @@ class SegDataset(object):

def batch(self, reader, batch_size, is_test=False, drop_last=False):

def batch_reader(is_test=False, drop_last=drop_last):

if is_test:

- imgs, img_names, valid_shapes, org_shapes = [], [], [], []

- for img, img_name, valid_shape, org_shape in reader():

+ imgs, grts, img_names, valid_shapes, org_shapes = [], [], [], [], []

+ for img, grt, img_name, valid_shape, org_shape in reader():

imgs.append(img)

+ grts.append(grt)

img_names.append(img_name)

valid_shapes.append(valid_shape)

org_shapes.append(org_shape)

if len(imgs) == batch_size:

- yield np.array(imgs), img_names, np.array(

- valid_shapes), np.array(org_shapes)

- imgs, img_names, valid_shapes, org_shapes = [], [], [], []

+ yield np.array(imgs), np.array(

+ grts), img_names, np.array(valid_shapes), np.array(

+ org_shapes)

+ imgs, grts, img_names, valid_shapes, org_shapes = [], [], [], [], []

if not drop_last and len(imgs) > 0:

- yield np.array(imgs), img_names, np.array(

+ yield np.array(imgs), np.array(grts), img_names, np.array(

valid_shapes), np.array(org_shapes)

else:

imgs, labs, ignore = [], [], []

@@ -146,93 +148,64 @@ class SegDataset(object):

# reserver alpha channel

cv2_imread_flag = cv2.IMREAD_UNCHANGED

- if mode == ModelPhase.TRAIN or mode == ModelPhase.EVAL:

- parts = line.strip().split(cfg.DATASET.SEPARATOR)

- if len(parts) != 2:

+ parts = line.strip().split(cfg.DATASET.SEPARATOR)

+ if len(parts) != 2:

+ if mode == ModelPhase.TRAIN or mode == ModelPhase.EVAL:

raise Exception("File list format incorrect! It should be"

" image_name{}label_name\\n".format(

cfg.DATASET.SEPARATOR))

+ img_name, grt_name = parts[0], None

+ else:

img_name, grt_name = parts[0], parts[1]

- img_path = os.path.join(src_dir, img_name)

- grt_path = os.path.join(src_dir, grt_name)

- img = cv2_imread(img_path, cv2_imread_flag)

+ img_path = os.path.join(src_dir, img_name)

+ img = cv2_imread(img_path, cv2_imread_flag)

+

+ if grt_name is not None:

+ grt_path = os.path.join(src_dir, grt_name)

grt = cv2_imread(grt_path, cv2.IMREAD_GRAYSCALE)

+ else:

+ grt = None

- if img is None or grt is None:

- raise Exception(

- "Empty image, src_dir: {}, img: {} & lab: {}".format(

- src_dir, img_path, grt_path))

+ if img is None:

+ raise Exception(

+ "Empty image, src_dir: {}, img: {} & lab: {}".format(

+ src_dir, img_path, grt_path))

- img_height = img.shape[0]

- img_width = img.shape[1]

+ img_height = img.shape[0]

+ img_width = img.shape[1]

+

+ if grt is not None:

grt_height = grt.shape[0]

grt_width = grt.shape[1]

if img_height != grt_height or img_width != grt_width:

raise Exception(

"source img and label img must has the same size")

-

- if len(img.shape) < 3:

- img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)

-

- img_channels = img.shape[2]

- if img_channels < 3:

- raise Exception(

- "PaddleSeg only supports gray, rgb or rgba image")

- if img_channels != cfg.DATASET.DATA_DIM:

- raise Exception(

- "Input image channel({}) is not match cfg.DATASET.DATA_DIM({}), img_name={}"

- .format(img_channels, cfg.DATASET.DATADIM, img_name))

- if img_channels != len(cfg.MEAN):

- raise Exception(

- "img name {}, img chns {} mean size {}, size unequal".

- format(img_name, img_channels, len(cfg.MEAN)))

- if img_channels != len(cfg.STD):

- raise Exception(

- "img name {}, img chns {} std size {}, size unequal".format(

- img_name, img_channels, len(cfg.STD)))

-

- # visualization mode

- elif mode == ModelPhase.VISUAL:

- if cfg.DATASET.SEPARATOR in line:

- parts = line.strip().split(cfg.DATASET.SEPARATOR)

- img_name = parts[0]

- else:

- img_name = line.strip()

-

- img_path = os.path.join(src_dir, img_name)

- img = cv2_imread(img_path, cv2_imread_flag)

-

- if img is None:

- raise Exception("empty image, src_dir:{}, img: {}".format(

- src_dir, img_name))

-

- # Convert grayscale image to BGR 3 channel image

- if len(img.shape) < 3:

- img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)

-

- img_height = img.shape[0]

- img_width = img.shape[1]

- img_channels = img.shape[2]

-

- if img_channels < 3:

- raise Exception("this repo only recept gray, rgb or rgba image")

- if img_channels != cfg.DATASET.DATA_DIM:

- raise Exception("data dim must equal to image channels")

- if img_channels != len(cfg.MEAN):

- raise Exception(

- "img name {}, img chns {} mean size {}, size unequal".

- format(img_name, img_channels, len(cfg.MEAN)))

- if img_channels != len(cfg.STD):

+ else:

+ if mode == ModelPhase.TRAIN or mode == ModelPhase.EVAL:

raise Exception(

- "img name {}, img chns {} std size {}, size unequal".format(

- img_name, img_channels, len(cfg.STD)))

+ "Empty image, src_dir: {}, img: {} & lab: {}".format(

+ src_dir, img_path, grt_path))

- grt = None

- grt_name = None

- else:

- raise ValueError("mode error: {}".format(mode))

+ if len(img.shape) < 3:

+ img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)

+

+ img_channels = img.shape[2]

+ if img_channels < 3:

+ raise Exception("PaddleSeg only supports gray, rgb or rgba image")

+ if img_channels != cfg.DATASET.DATA_DIM:

+ raise Exception(

+ "Input image channel({}) is not match cfg.DATASET.DATA_DIM({}), img_name={}"

+ .format(img_channels, cfg.DATASET.DATADIM, img_name))

+ if img_channels != len(cfg.MEAN):

+ raise Exception(

+ "img name {}, img chns {} mean size {}, size unequal".format(

+ img_name, img_channels, len(cfg.MEAN)))

+ if img_channels != len(cfg.STD):

+ raise Exception(

+ "img name {}, img chns {} std size {}, size unequal".format(

+ img_name, img_channels, len(cfg.STD)))

return img, grt, img_name, grt_name

@@ -329,4 +302,4 @@ class SegDataset(object):

elif ModelPhase.is_eval(mode):

return (img, grt, ignore)

elif ModelPhase.is_visual(mode):

- return (img, img_name, valid_shape, org_shape)

+ return (img, grt, img_name, valid_shape, org_shape)

diff --git a/pdseg/vis.py b/pdseg/vis.py

index 0d7eb79893aaa263d0999f2a6ec3df3fbaf97c36..727edae1f9555badff040e7501253980d10dc3df 100644

--- a/pdseg/vis.py

+++ b/pdseg/vis.py

@@ -171,7 +171,7 @@ def visualize(cfg,

fetch_list = [pred.name]

test_reader = dataset.batch(dataset.generator, batch_size=1, is_test=True)

img_cnt = 0

- for imgs, img_names, valid_shapes, org_shapes in test_reader:

+ for imgs, grts, img_names, valid_shapes, org_shapes in test_reader:

pred_shape = (imgs.shape[2], imgs.shape[3])

pred, = exe.run(

program=test_prog,

@@ -185,6 +185,7 @@ def visualize(cfg,

# Add more comments

res_map = np.squeeze(pred[i, :, :, :]).astype(np.uint8)

img_name = img_names[i]

+ grt = grts[i]

res_shape = (res_map.shape[0], res_map.shape[1])

if res_shape[0] != pred_shape[0] or res_shape[1] != pred_shape[1]:

res_map = cv2.resize(

@@ -196,6 +197,11 @@ def visualize(cfg,

res_map, (org_shape[1], org_shape[0]),

interpolation=cv2.INTER_NEAREST)

+ if grt is not None:

+ grt = cv2.resize(

+ grt, (org_shape[1], org_shape[0]),

+ interpolation=cv2.INTER_NEAREST)

+

png_fn = to_png_fn(img_names[i])

if also_save_raw_results:

raw_fn = os.path.join(raw_save_dir, png_fn)

@@ -209,6 +215,8 @@ def visualize(cfg,

makedirs(dirname)

pred_mask = colorize(res_map, org_shapes[i], color_map)

+ if grt is not None:

+ grt = colorize(grt, org_shapes[i], color_map)

cv2.imwrite(vis_fn, pred_mask)

img_cnt += 1

@@ -233,7 +241,13 @@ def visualize(cfg,

img,

epoch,

dataformats='HWC')

- #TODO: add ground truth (label) images

+ #add ground truth (label) images

+ if grt is not None:

+ log_writer.add_image(

+ "Label/{}".format(img_names[i]),

+ grt[..., ::-1],

+ epoch,

+ dataformats='HWC')

# If in local_test mode, only visualize 5 images just for testing

# procedure

diff --git a/serving/COMPILE_GUIDE.md b/serving/COMPILE_GUIDE.md

index bc415682d932303d21c376c39527babbf1b29e58..a3aeea9c9e0200642f3b97dd74c283311f65ca67 100644

--- a/serving/COMPILE_GUIDE.md

+++ b/serving/COMPILE_GUIDE.md

@@ -1,95 +1,7 @@

# 源码编译安装及搭建服务流程

-本文将介绍源码编译安装以及在服务搭建流程。

+本文将介绍源码编译安装以及在服务搭建流程。编译前确保PaddleServing的依赖项安装完毕。依赖安装教程请前往[PaddleSegServing 依赖安装](./README.md).

-## 1. 系统依赖项

-

-依赖项 | 验证过的版本

- -- | --

-Linux | Centos 6.10 / 7

-CMake | 3.0+

-GCC | 4.8.2/5.4.0

-Python| 2.7

-GO编译器| 1.9.2

-openssl| 1.0.1+

-bzip2 | 1.0.6+

-

-如果需要使用GPU预测,还需安装以下几个依赖库

-

- GPU库 | 验证过的版本

- -- | --

-CUDA | 9.2

-cuDNN | 7.1.4

-nccl | 2.4.7

-

-

-## 2. 安装依赖项

-

-以下流程在百度云CentOS7.5+CUDA9.2环境下进行。

-### 2.1. 安装openssl、Go编译器以及bzip2

-

-```bash

-yum -y install openssl openssl-devel golang bzip2-libs bzip2-devel

-```

-

-### 2.2. 安装GPU预测的依赖项(如果需要使用GPU预测,必须执行此步骤)

-#### 2.2.1. 安装配置CUDA9.2以及cuDNN 7.1.4

-该百度云机器已经安装CUDA以及cuDNN,仅需复制相关头文件与链接库

-

-```bash

-# 看情况确定是否需要安装 cudnn

-# 进入 cudnn 根目录

-cd /home/work/cudnn/cudnn7.1.4

-# 拷贝头文件

-cp include/cudnn.h /usr/local/cuda/include/

-# 拷贝链接库

-cp lib64/libcudnn* /usr/local/cuda/lib64/

-# 修改头文件、链接库访问权限

-chmod a+r /usr/local/cuda/include/cudnn.h /usr/local/cuda/lib64/libcudnn*

-```

-

-#### 2.2.2. 安装nccl库

-

-```bash

-# 下载文件 nccl-repo-rhel7-2.4.7-ga-cuda9.2-1-1.x86_64.rpm

-wget -c https://paddlehub.bj.bcebos.com/serving/nccl-repo-rhel7-2.4.7-ga-cuda9.2-1-1.x86_64.rpm

-# 安装nccl的repo

-rpm -i nccl-repo-rhel7-2.4.7-ga-cuda9.2-1-1.x86_64.rpm

-# 更新索引

-yum -y update

-# 安装包

-yum -y install libnccl-2.4.7-1+cuda9.2 libnccl-devel-2.4.7-1+cuda9.2 libnccl-static-2.4.7-1+cuda9.2

-```

-

-### 2.3. 安装 cmake 3.15

-如果机器没有安装cmake或者已安装cmake的版本低于3.0,请执行以下步骤

-

-```bash

-# 如果原来的已经安装低于3.0版本的cmake,请先卸载原有低版本 cmake

-yum -y remove cmake

-# 下载源代码并解压

-wget -c https://github.com/Kitware/CMake/releases/download/v3.15.0/cmake-3.15.0.tar.gz

-tar xvfz cmake-3.15.0.tar.gz

-# 编译cmake

-cd cmake-3.15.0

-./configure

-make -j4

-# 安装并检查cmake版本

-make install

-cmake --version

-# 在cmake-3.15.0目录中,将相应的头文件目录(curl目录,为PaddleServing的依赖头文件目录)拷贝到系统include目录下

-cp -r Utilities/cmcurl/include/curl/ /usr/include/

-```

-

-### 2.4. 为依赖库增加相应的软连接

-

- 现在Linux系统中大部分链接库的名称都以版本号作为后缀,如libcurl.so.4.3.0。这种命名方式最大的问题是,CMakeList.txt中find_library命令是无法识别使用这种命名方式的链接库,会导致CMake时候出错。由于本项目是用CMake构建,所以务必保证相应的链接库以 .so 或 .a为后缀命名。解决这个问题最简单的方式就是用创建一个软连接指向相应的链接库。在百度云的机器中,只有curl库的命名方式有问题。所以命令如下:(如果是其他库,解决方法也类似):

-

-```bash

-ln -s /usr/lib64/libcurl.so.4.3.0 /usr/lib64/libcurl.so

-```

-

-

-### 2.5. 编译安装PaddleServing

+## 1. 编译安装PaddleServing

下列步骤介绍CPU版本以及GPU版本的PaddleServing编译安装过程。

```bash

@@ -134,7 +46,7 @@ serving

└── tools

```

-### 2.6. 安装PaddleSegServing

+## 2. 安装PaddleSegServing

```bash

# Step 1. 在~目录下下载PaddleSeg代码

@@ -243,4 +155,4 @@ make install

可参考[预编译安装流程](./README.md)中2.2.2节。可执行文件在该目录下:~/serving/build/output/demo/seg-serving/bin/。

### 3.4 运行客户端程序进行测试。

-可参考[预编译安装流程](./README.md)中2.2.3节。

+可参考[预编译安装流程](./README.md)中2.2.3节。

\ No newline at end of file

diff --git a/serving/README.md b/serving/README.md

index 826b53efc1bfe790114ce0f57309be0e3953a324..71a1e6f442728cbb8791ca65b9406b070ecfe049 100644

--- a/serving/README.md

+++ b/serving/README.md

@@ -1,25 +1,105 @@

-# PaddleSeg Serving

+# PaddleSegServing

## 1.简介

PaddleSegServing是基于PaddleSeg开发的实时图像分割服务的企业级解决方案。用户仅需关注模型本身,无需理解模型模型的加载、预测以及GPU/CPU资源的并发调度等细节操作,通过设置不同的参数配置,即可根据自身的业务需求定制化不同图像分割服务。目前,PaddleSegServing支持人脸分割、城市道路分割、宠物外形分割模型。本文将通过一个人脸分割服务的搭建示例,展示PaddleSeg服务通用的搭建流程。

## 2.预编译版本安装及搭建服务流程

-### 2.1. 下载预编译的PaddleSegServing

+运行PaddleSegServing需要依赖其他的链接库,请保证在下载安装前系统环境已经具有相应的依赖项。

+安装以及搭建服务的流程均在Centos和Ubuntu系统上验证。以下是Centos系统上的搭建流程,Ubuntu版本的依赖项安装流程介绍在[Ubuntu系统下依赖项的安装教程](UBUNTU.md)。

+

+### 2.1. 系统依赖项

+依赖项 | 验证过的版本

+ -- | --

+Linux | Centos 6.10 / 7, Ubuntu16.07

+CMake | 3.0+

+GCC | 4.8.2

+Python| 2.7

+GO编译器| 1.9.2

+openssl| 1.0.1+

+bzip2 | 1.0.6+

+

+如果需要使用GPU预测,还需安装以下几个依赖库

+

+ GPU库 | 验证过的版本

+ -- | --

+CUDA | 9.2

+cuDNN | 7.1.4

+nccl | 2.4.7

+

+### 2.2. 安装依赖项

+

+#### 2.2.1. 安装openssl、Go编译器以及bzip2

+

+```bash

+yum -y install openssl openssl-devel golang bzip2-libs bzip2-devel

+```

+#### 2.2.2. 安装GPU预测的依赖项(如果需要使用GPU预测,必须执行此步骤)

+#### 2.2.2.1. 安装配置CUDA 9.2以及cuDNN 7.1.4

+请确保正确安装CUDA 9.2以及cuDNN 7.1.4. 以下为安装CUDA和cuDNN的官方教程。

+

+```bash

+安装CUDA教程: https://developer.nvidia.com/cuda-90-download-archive?target_os=Linux&target_arch=x86_64&target_distro=CentOS&target_version=7&target_type=rpmnetwork

+

+安装cuDNN教程: https://docs.nvidia.com/deeplearning/sdk/cudnn-install/index.html

+```

+

+#### 2.2.2.2. 安装nccl库(如果已安装nccl 2.4.7请忽略该步骤)

+

+```bash

+# 下载文件 nccl-repo-rhel7-2.4.7-ga-cuda9.2-1-1.x86_64.rpm

+wget -c https://paddlehub.bj.bcebos.com/serving/nccl-repo-rhel7-2.4.7-ga-cuda9.2-1-1.x86_64.rpm

+# 安装nccl的repo

+rpm -i nccl-repo-rhel7-2.4.7-ga-cuda9.2-1-1.x86_64.rpm

+# 更新索引

+yum -y update

+# 安装包

+yum -y install libnccl-2.4.7-1+cuda9.2 libnccl-devel-2.4.7-1+cuda9.2 libnccl-static-2.4.7-1+cuda9.2

+```

+

+### 2.2.3. 安装 cmake 3.15

+如果机器没有安装cmake或者已安装cmake的版本低于3.0,请执行以下步骤

+

+```bash

+# 如果原来的已经安装低于3.0版本的cmake,请先卸载原有低版本 cmake

+yum -y remove cmake

+# 下载源代码并解压

+wget -c https://github.com/Kitware/CMake/releases/download/v3.15.0/cmake-3.15.0.tar.gz

+tar xvfz cmake-3.15.0.tar.gz

+# 编译cmake

+cd cmake-3.15.0

+./configure

+make -j4

+# 安装并检查cmake版本

+make install

+cmake --version

+# 在cmake-3.15.0目录中,将相应的头文件目录(curl目录,为PaddleServing的依赖头文件目录)拷贝到系统include目录下

+cp -r Utilities/cmcurl/include/curl/ /usr/include/

+```

+

+### 2.2.4. 为依赖库增加相应的软连接

+

+ 现在Linux系统中大部分链接库的名称都以版本号作为后缀,如libcurl.so.4.3.0。这种命名方式最大的问题是,CMakeList.txt中find_library命令是无法识别使用这种命名方式的链接库,会导致CMake时候出错。由于本项目是用CMake构建,所以务必保证相应的链接库以 .so 或 .a为后缀命名。解决这个问题最简单的方式就是用创建一个软连接指向相应的链接库。在百度云的机器中,只有curl库的命名方式有问题。所以命令如下:(如果是其他库,解决方法也类似):

+

+```bash

+ln -s /usr/lib64/libcurl.so.4.3.0 /usr/lib64/libcurl.so

+```

+

+### 2.3. 下载预编译的PaddleSegServing

预编译版本在Centos7.6系统下编译,如果想快速体验PaddleSegServing,可在此系统下下载预编译版本进行安装。预编译版本有两个,一个是针对有GPU的机器,推荐安装GPU版本PaddleSegServing。另一个是CPU版本PaddleServing,针对无GPU的机器。

-#### 2.1.1. 下载并解压GPU版本PaddleSegServing

+#### 2.3.1. 下载并解压GPU版本PaddleSegServing

```bash

cd ~

-wget -c XXXX/PaddleSegServing.centos7.6_cuda9.2_gpu.tar.gz

-tar xvfz PaddleSegServing.centos7.6_cuda9.2_gpu.tar.gz

+wget -c --no-check-certificate https://paddleseg.bj.bcebos.com/serving/paddle_seg_serving_centos7.6_gpu_cuda9.2.tar.gz

+tar xvfz PaddleSegServing.centos7.6_cuda9.2_gpu.tar.gz seg-serving

```

-#### 2.1.2. 下载并解压CPU版本PaddleSegServing

+#### 2.3.2. 下载并解压CPU版本PaddleSegServing

```bash

cd ~

-wget -c XXXX/PaddleSegServing.centos7.6_cuda9.2_cpu.tar.gz

-tar xvfz PaddleSegServing.centos7.6_cuda9.2_gpu.tar.gz

+wget -c --no-check-certificate https://paddleseg.bj.bcebos.com/serving/paddle_seg_serving_centos7.6_cpu.tar.gz

+tar xvfz PaddleSegServing.centos7.6_cuda9.2_gpu.tar.gz seg-serving

```

解压后的PaddleSegServing目录如下。

@@ -36,13 +116,22 @@ tar xvfz PaddleSegServing.centos7.6_cuda9.2_gpu.tar.gz

└── log

```

-### 2.2. 运行PaddleSegServing

+### 2.4 安装动态库

+把 libiomp5.so, libmklml_gnu.so, libmklml_intel.so拷贝到/usr/lib。

+

+```bash

+cd seg-serving/bin/

+cp libiomp5.so libmklml_gnu.so libmklml_intel.so /usr/lib

+```

+

+

+### 2.5. 运行PaddleSegServing

本节将介绍如何运行以及测试PaddleSegServing。

-#### 2.2.1. 搭建人脸分割服务

+#### 2.5.1. 搭建人脸分割服务

搭建人脸分割服务只需完成一些配置文件的编写即可,其他分割服务的搭建流程类似。

-##### 2.2.1.1. 下载人脸分割模型文件,并将其复制到相应目录。

+#### 2.5.1.1. 下载人脸分割模型文件,并将其复制到相应目录。

```bash

# 下载人脸分割模型

wget -c https://paddleseg.bj.bcebos.com/inference_model/deeplabv3p_xception65_humanseg.tgz

@@ -52,11 +141,7 @@ cp -r deeplabv3p_xception65_humanseg seg-serving/bin/data/model/paddle/fluid

```

-##### 2.2.1.2. 配置参数文件

-

-参数文件如,PaddleSegServing仅新增一个配置文件seg_conf.yaml,用来指定具体分割模型的一些参数,如均值、方差、图像尺寸等。该配置文件可在gflags.conf中通过--seg_conf_file指定。

-

-其他配置文件的字段解释可参考以下链接:https://github.com/PaddlePaddle/Serving/blob/develop/doc/SERVING_CONFIGURE.md (TODO:介绍seg_conf.yaml中每个字段的含义)

+#### 2.5.1.2. 配置参数文件。参数文件如下。PaddleSegServing仅新增一个配置文件seg_conf.yaml,用来指定具体分割模型的一些参数,如均值、方差、图像尺寸等。该配置文件可在gflags.conf中通过--seg_conf_file指定。其他配置文件的字段解释可参考以下链接:https://github.com/PaddlePaddle/Serving/blob/develop/doc/SERVING_CONFIGURE.md

```bash

conf/

@@ -68,7 +153,25 @@ conf/

└── workflow.prototxt

```

-#### 2.2.2 运行服务端程序

+以下为seg_conf.yaml文件内容以及每一个配置项的内容。

+

+```bash

+%YAML:1.0

+# 输入到模型的图像的尺寸。会将任意图片resize到513*513尺寸的图像,再放入模型进行推测。

+SIZE: [513, 513]

+# 均值

+MEAN: [104.008, 116.669, 122.675]

+# 方差

+STD: [1.0, 1.0, 1.0]

+# 通道数

+CHANNELS: 3

+# 类别数量

+CLASS_NUM: 2

+# 加载的模型的名称,需要与model_toolkit.prototxt中对应模型的名称保持一致。

+MODEL_NAME: "human_segmentation"

+```

+

+#### 2.5.2 运行服务端程序

```bash

# 1. 设置环境变量

@@ -77,17 +180,44 @@ export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/lib64:$LD_LIBRARY_PATH

cd ~/serving/build/output/demo/seg-serving/bin/

./seg-serving

```

-#### 2.2.3.运行客户端程序进行测试 (建议在windows、mac测试,可直接查看分割后的图像)

+#### 2.5.3.运行客户端程序

+以下为PaddleSeg的目录结构,客户端在PaddleSeg/serving/tools目录。

-客户端程序是用Python3编写的,代码简洁易懂,可以通过运行客户端验证服务的正确性以及性能表现。

+```bash

+PaddleSeg

+├── configs

+├── contrib

+├── dataset

+├── docs

+├── inference

+├── pdseg

+├── README.md

+├── requirements.txt

+├── scripts

+├── serving

+│ ├── COMPILE_GUIDE.md

+│ ├── imgs

+│ ├── README.md

+│ ├── requirements.txt # 客户端程序依赖的包

+│ ├── seg-serving

+│ ├── tools # 客户端目录

+│ │ ├── images # 测试的图像目录,可放置jpg格式或其他三通道格式的图像,以jpg或jpeg作为文件后缀名

+│ │ │ ├── 1.jpg

+│ │ │ ├── 2.jpg

+│ │ │ └── 3.jpg

+│ │ └── image_seg_client.py # 客户端测试代码

+│ └── UBUNTU.md

+├── test

+└── test.md

+```

+客户端程序使用Python3编写,通过下载requirements.txt中的python依赖包(`pip3 install -r requirements.txt`),用户可以在Windows、Mac、Linux等平台上正常运行该客户端,测试的图像放在PaddleSeg/serving/tools/images目录,用户可以根据自己需要把其他三通道格式的图片放置到该目录下进行测试。从服务端返回的结果图像保存在PaddleSeg/serving/tools目录下。

```bash

-# 使用Python3.6,需要安装opencv-python、requests、numpy包(建议安装anaconda)

cd tools

vim image_seg_client.py (修改IMAGE_SEG_URL变量,改成服务端的ip地址)

python3.6 image_seg_client.py

-# 当前目录下可以看到生成出分割结果的图片。

+# 当前目录可以看到生成出分割结果的图片。

```

## 3. 源码编译安装及搭建服务流程 (可选)

-源码编译安装时间较长,一般推荐在centos7.6下安装预编译版本进行使用。如果您系统版本非centos7.6或者您想进行二次开发,请点击以下链接查看[源码编译安装流程](./COMPILE_GUIDE.md)。

+源码编译安装时间较长,一般推荐在centos7.6下安装预编译版本进行使用。如果您系统版本非centos7.6或者您想进行二次开发,请点击以下链接查看[源码编译安装流程](./COMPILE_GUIDE.md)。

\ No newline at end of file

diff --git a/serving/UBUNTU.md b/serving/UBUNTU.md

new file mode 100644

index 0000000000000000000000000000000000000000..608a5ce9b53587595aed7c21f245cd36f09515a7

--- /dev/null

+++ b/serving/UBUNTU.md

@@ -0,0 +1,76 @@

+# Ubuntu系统下依赖项的安装教程

+运行PaddleSegServing需要系统安装一些依赖库。在不同发行版本的Linux系统下,安装依赖项的具体命令略有不同,以下介绍在Ubuntu 16.07下安装依赖项的方法。

+

+## 1. 安装ssl、go、python、bzip2、crypto.

+

+```bash

+sudo apt-get install golang-1.10 python2.7 libssl1.0.0 libssl-dev libssl-doc libcrypto++-dev libcrypto++-doc libcrypto++-utils libbz2-1.0 libbz2-dev

+```

+

+## 2. 为ssl、crypto、curl链接库添加软连接

+

+```bash

+ln -s /lib/x86_64-linux-gnu/libssl.so.1.0.0 /usr/lib/x86_64-linux-gnu/libssl.so

+ln -s /lib/x86_64-linux-gnu/libcrypto.so.1.0.0 /usr/lib/x86_64-linux-gnu/libcrypto.so.10

+ln -s /usr/lib/x86_64-linux-gnu/libcurl.so.4.4.0 /usr/lib/x86_64-linux-gnu/libcurl.so

+```

+

+## 3. 安装GPU依赖项(如果需要使用GPU预测,必须执行此步骤)

+### 3.1. 安装配置CUDA 9.2以及cuDNN 7.1.4

+方法与[预编译安装流程](README.md) 2.2.2.1节一样。

+

+### 3.2. 安装nccl库(如果已安装nccl 2.4.7请忽略该步骤)

+

+```bash

+# 下载nccl相关的deb包

+wget -c --no-check-certificate https://paddleseg.bj.bcebos.com/serving/nccl-repo-ubuntu1604-2.4.8-ga-cuda9.2_1-1_amd64.deb

+sudo apt-key add /var/nccl-repo-2.4.8-ga-cuda9.2/7fa2af80.pub

+# 安装deb包

+sudo dpkg -i nccl-repo-ubuntu1604-2.4.8-ga-cuda9.2_1-1_amd64.deb

+# 更新索引

+sudo apt update

+# 安装nccl库

+sudo apt-get install libnccl2 libnccl-dev

+```

+

+## 4. 安装cmake 3.15

+如果机器没有安装cmake或者已安装cmake的版本低于3.0,请执行以下步骤

+

+```bash

+# 如果原来的已经安装低于3.0版本的cmake,请先卸载原有低版本 cmake

+sudo apt-get autoremove cmake

+```

+其余安装cmake的流程请参考以下链接[预编译安装流程](README.md) 2.2.3节。

+

+## 5. 安装PaddleSegServing

+### 5.1. 下载并解压GPU版本PaddleSegServing

+

+```bash

+cd ~

+wget -c --no-check-certificate https://paddleseg.bj.bcebos.com/serving/paddle_seg_serving_ubuntu16.07_gpu_cuda9.2.tar.gz

+tar xvfz PaddleSegServing.ubuntu16.07_cuda9.2_gpu.tar.gz seg-serving

+```

+

+### 5.2. 下载并解压CPU版本PaddleSegServing

+

+```bash

+cd ~

+wget -c --no-check-certificate https://paddleseg.bj.bcebos.com/serving%2Fpaddle_seg_serving_ubuntu16.07_cpu.tar.gz

+tar xvfz PaddleSegServing.ubuntu16.07_cuda9.2_gpu.tar.gz seg-serving

+```

+

+## 6. gcc版本问题

+在Ubuntu 16.07系统中,默认的gcc版本为5.4.0。而目前PaddleSegServing仅支持gcc 4.8编译,所以如果测试的机器gcc版本为5.4,请先进行降级(无需卸载原有的gcc)。

+

+```bash

+# 安装gcc 4.8

+sudo apt-get install gcc-4.8

+# 查看是否成功安装gcc4.8

+ls /usr/bin/gcc*

+# 设置gcc4.8的优先级,使其能被gcc命令优先连接gcc4.8

+sudo update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-4.8 100

+# 查看设置结果(非必须)

+sudo update-alternatives --config gcc

+```

+

+

diff --git a/serving/seg-serving/conf/gflags.conf b/serving/seg-serving/conf/gflags.conf

index 87318f8c08db1ea7897630cb5bf964b0bf98ced2..1c49f42918e1c8fac32985cf880a67963a877dee 100644

--- a/serving/seg-serving/conf/gflags.conf

+++ b/serving/seg-serving/conf/gflags.conf

@@ -1,2 +1,5 @@

--enable_model_toolkit

--seg_conf_file=./conf/seg_conf.yaml

+--num_threads=1

+--bthread_min_concurrency=4

+--bthread_concurrency=4

diff --git a/serving/seg-serving/conf/model_toolkit.prototxt b/serving/seg-serving/conf/model_toolkit.prototxt

index 0cf63dffc9c9375f30f531a0c92a15df174182e8..9bc44c7e5a8974f0561526296f0faeb7f14f5df7 100644

--- a/serving/seg-serving/conf/model_toolkit.prototxt

+++ b/serving/seg-serving/conf/model_toolkit.prototxt

@@ -1,6 +1,6 @@

engines {

name: "human_segmentation"

- type: "FLUID_GPU_NATIVE"

+ type: "FLUID_GPU_ANALYSIS"

reloadable_meta: "./data/model/paddle/fluid_time_file"

reloadable_type: "timestamp_ne"

model_data_path: "./data/model/paddle/fluid/deeplabv3p_xception65_humanseg"

diff --git a/serving/seg-serving/op/image_seg_op.cpp b/serving/seg-serving/op/image_seg_op.cpp

index 77314705c8383c814bef61df0625db5ac0ccf3d3..0473b51582b529a694d2faab40865c795da492ea 100644

--- a/serving/seg-serving/op/image_seg_op.cpp

+++ b/serving/seg-serving/op/image_seg_op.cpp

@@ -128,16 +128,30 @@ int ImageSegOp::inference() {

mask_raw[di] = label;

}

+ //cv::Mat mask_mat = cv::Mat(height, width, CV_32FC1);

cv::Mat mask_mat = cv::Mat(height, width, CV_8UC1);

- mask_mat.data = mask_raw.data();

+ //scoremap

+ // mask_mat.data = reinterpret_cast(data + out_size);

+ //mask_mat.data = mask_raw.data();

+ std::vector temp_mat(out_size, 0);

+ for(int i = 0; i < out_size; ++i){

+ temp_mat[i] = 255 * data[i + out_size];

+ }

+ mask_mat.data = temp_mat.data();

+

cv::Mat mask_temp_mat((*height_vec)[si], (*width_vec)[si], mask_mat.type());

//Size(cols, rows)

cv::resize(mask_mat, mask_temp_mat, mask_temp_mat.size());

- // cv::resize(mask_mat, mask_temp_mat, cv::Size((*width_vec)[si], (*height_vec)[si]));

-

+//debug

+ //for(int i = 0; i < (*height_vec)[si]; ++i){

+ // for(int j = 0; j < (*width_vec)[si]; ++j) {

+ // std::cout << mask_temp_mat.at(i, j) << " ";

+ // }

+ // std::cout << std::endl;

+ //}

std::vector mat_buff;

cv::imencode(".png", mask_temp_mat, mat_buff);

- ins->set_mask(mat_buff.data(), mat_buff.size());

+ ins->set_mask(reinterpret_cast(mat_buff.data()), mat_buff.size());

}

// release out tensor object resource

diff --git a/serving/seg-serving/op/reader_op.cpp b/serving/seg-serving/op/reader_op.cpp

index d7a630e077df95341b14cec51ca3db4761211644..b825d81bae728f4fe5bc0d7982995f3ae00326fa 100644

--- a/serving/seg-serving/op/reader_op.cpp

+++ b/serving/seg-serving/op/reader_op.cpp

@@ -103,7 +103,8 @@ int ReaderOp::inference() {

const ImageSegReqItem& ins = req->instances(si);

// read dense image from request bytes

const char* binary = ins.image_binary().c_str();

- size_t length = ins.image_length();

+ //size_t length = ins.image_length();

+ size_t length = ins.image_binary().length();

if (length == 0) {

LOG(ERROR) << "Empty image, length is 0";

return -1;

diff --git a/serving/seg-serving/op/write_json_op.cpp b/serving/seg-serving/op/write_json_op.cpp

index 17a4dc3c5dd11a80457d2f58aadb7ef65d2e2305..fa80d61da46aa7ed05c361c26a64a8e58d24457f 100644

--- a/serving/seg-serving/op/write_json_op.cpp

+++ b/serving/seg-serving/op/write_json_op.cpp

@@ -50,7 +50,7 @@ int WriteJsonOp::inference() {

std::string err_string;

uint32_t batch_size = seg_out->item_size();

LOG(INFO) << "batch_size = " << batch_size;

- LOG(INFO) << seg_out->ShortDebugString();

+// LOG(INFO) << seg_out->ShortDebugString();

for (uint32_t si = 0; si < batch_size; si++) {

ResponseItem* ins = res->add_prediction();

//LOG(INFO) << "Original image width = " << seg_out->width(si) << ", height = " << seg_out->height(si);

@@ -59,6 +59,7 @@ int WriteJsonOp::inference() {

return -1;

}

std::string* text = ins->mutable_info();

+ LOG(INFO) << seg_out->item(si).ShortDebugString();

if (!ProtoMessageToJson(seg_out->item(si), text, &err_string)) {

LOG(ERROR) << "Failed convert message["

<< seg_out->item(si).ShortDebugString()

diff --git a/serving/tools/image_seg_client.py b/serving/tools/image_seg_client.py

index caef60fb75a5c11ec06557ac7bd9bb883eea4688..a537feccb3993e4067ebec83662fe63e8c1d2d76 100644

--- a/serving/tools/image_seg_client.py

+++ b/serving/tools/image_seg_client.py

@@ -1,6 +1,5 @@

# coding: utf-8

-import sys

-

+import os

import cv2

import requests

import json

@@ -8,115 +7,96 @@ import base64

import numpy as np

import time

import threading

+import re

#分割服务的地址

-#IMAGE_SEG_URL = 'http://yq01-gpu-151-23-00.epc:8010/ImageSegService/inference'

-#IMAGE_SEG_URL = 'http://106.12.25.202:8010/ImageSegService/inference'

-IMAGE_SEG_URL = 'http://180.76.118.53:8010/ImageSegService/inference'

-

-# 请求预测服务

-# input_img 要预测的图片列表

-def get_item_json(input_img):

- with open(input_img, mode="rb") as fp:

- # 使用 http 协议请求服务时, 请使用 base64 编码发送图片

- item_binary_b64 = str(base64.b64encode(fp.read()), 'utf-8')

- item_size = len(item_binary_b64)

- item_json = {

- "image_length": item_size,

- "image_binary": item_binary_b64

- }

- return item_json

-

-

-def request_predictor_server(input_img_list, dir_name):

- data = {"instances" : [get_item_json(dir_name + input_img) for input_img in input_img_list]}

- response = requests.post(IMAGE_SEG_URL, data=json.dumps(data))

- try:

- response = json.loads(response.text)

- prediction_list = response["prediction"]

- mask_response_list = [mask_response["info"] for mask_response in prediction_list]

- mask_raw_list = [json.loads(mask_response)["mask"] for mask_response in mask_response_list]

- except Exception as err:

- print ("Exception[%s], server_message[%s]" % (str(err), response.text))

- return None

- # 使用 json 协议回复的包也是 base64 编码过的

- mask_binary_list = [base64.b64decode(mask_raw) for mask_raw in mask_raw_list]

- m = [np.fromstring(mask_binary, np.uint8) for mask_binary in mask_binary_list]

- return m

-

-# 对预测结果进行可视化

-# input_raw_mask 是server返回的预测结果

-# output_img 是可视化结果存储路径

-def visualization(mask_mat, output_img):

- # ColorMap for visualization more clearly

- color_map = [[128, 64, 128],

- [244, 35, 231],

- [69, 69, 69],

- [102, 102, 156],

- [190, 153, 153],

- [153, 153, 153],

- [250, 170, 29],

- [219, 219, 0],

- [106, 142, 35],

- [152, 250, 152],

- [69, 129, 180],

- [219, 19, 60],

- [255, 0, 0],

- [0, 0, 142],

- [0, 0, 69],

- [0, 60, 100],

- [0, 79, 100],

- [0, 0, 230],

- [119, 10, 32]]

-

- im = cv2.imdecode(mask_mat, 1)

- w, h, c = im.shape

- im2 = cv2.resize(im, (w, h))

- im = im2

- for i in range(0, h):

- for j in range(0, w):

- im[i, j] = color_map[im[i, j, 0]]

- cv2.imwrite(output_img, im)

-

-

-#benchmark test

-def benchmark_test(batch_size, img_list):

- start = time.time()

- total_size = len(img_list)

- for i in range(0, total_size, batch_size):

- mask_mat_list = request_predictor_server(img_list[i : np.min([i + batch_size, total_size])], "images/")

- # 将获得的mask matrix转换成可视化图像,并在当前目录下保存为图像文件

- # 如果进行压测,可以把这句话注释掉

- # for j in range(len(mask_mat_list)):

- # visualization(mask_mat_list[j], img_list[j + i])

- latency = time.time() - start

- print("batch size = %d, total latency = %f s" % (batch_size, latency))

-

+IMAGE_SEG_URL = 'http://xxx.xxx.xxx.xxx:8010/ImageSegService/inference'

class ClientThread(threading.Thread):

- def __init__(self, thread_id, batch_size):

+ def __init__(self, thread_id, image_data_repo):

threading.Thread.__init__(self)

self.__thread_id = thread_id

- self.__batch_size = batch_size

+ self.__image_data_repo = image_data_repo

def run(self):

- self.request_image_seg_service(3)

+ self.__request_image_seg_service()

- def request_image_seg_service(self, imgs_num):

- total_size = imgs_num

- img_list = [str(i + 1) + ".jpg" for i in range(total_size)]

- # batch_size_list = [2**i for i in range(0, 4)]

+ def __request_image_seg_service(self):

# 持续发送150个请求

- batch_size_list = [self.__batch_size] * 150

- i = 1

- for batch_size in batch_size_list:

+ for i in range(1, 151):

print("Epoch %d, thread %d" % (i, self.__thread_id))

- i += 1

- benchmark_test(batch_size, img_list)

-

+ self.__benchmark_test()

+

+ # benchmark test

+ def __benchmark_test(self):

+ start = time.time()

+ for image_filename in self.__image_data_repo:

+ mask_mat_list = self.__request_predictor_server(image_filename)

+ input_img = self.__image_data_repo.get_image_matrix(image_filename)

+ # 将获得的mask matrix转换成可视化图像,并在当前目录下保存为图像文件

+ # 如果进行压测,可以把这句话注释掉

+ for j in range(len(mask_mat_list)):

+ self.__visualization(mask_mat_list[j], image_filename, 2, input_img)

+ latency = time.time() - start

+ print("total latency = %f s" % (latency))

+

+ # 对预测结果进行可视化

+ # input_raw_mask 是server返回的预测结果

+ # output_img 是可视化结果存储路径

+ def __visualization(self, mask_mat, output_img, num_cls, input_img):

+ # ColorMap for visualization more clearly

+ n = num_cls

+ color_map = []

+ for j in range(n):

+ lab = j

+ a = b = c = 0

+ color_map.append([a, b, c])

+ i = 0

+ while lab:

+ color_map[j][0] |= (((lab >> 0) & 1) << (7 - i))

+ color_map[j][1] |= (((lab >> 1) & 1) << (7 - i))

+ color_map[j][2] |= (((lab >> 2) & 1) << (7 - i))

+ i += 1

+ lab >>= 3

+ im = cv2.imdecode(mask_mat, 1)

+ w, h, c = im.shape

+ im2 = cv2.resize(im, (w, h))

+ im = im2

+ # I = aF + (1-a)B

+ a = im / 255.0

+ im = a * input_img + (1 - a) * [255, 255, 255]

+ cv2.imwrite(output_img, im)

+

+ def __request_predictor_server(self, input_img):

+ data = {"instances": [self.__get_item_json(input_img)]}

+ response = requests.post(IMAGE_SEG_URL, data=json.dumps(data))

+ try:

+ response = json.loads(response.text)

+ prediction_list = response["prediction"]

+ mask_response_list = [mask_response["info"] for mask_response in prediction_list]

+ mask_raw_list = [json.loads(mask_response)["mask"] for mask_response in mask_response_list]

+ except Exception as err:

+ print("Exception[%s], server_message[%s]" % (str(err), response.text))

+ return None

+ # 使用 json 协议回复的包也是 base64 编码过的

+ mask_binary_list = [base64.b64decode(mask_raw) for mask_raw in mask_raw_list]

+ m = [np.fromstring(mask_binary, np.uint8) for mask_binary in mask_binary_list]

+ return m

+

+ # 请求预测服务

+ # input_img 要预测的图片列表

+ def __get_item_json(self, input_img):

+ # 使用 http 协议请求服务时, 请使用 base64 编码发送图片

+ item_binary_b64 = str(base64.b64encode(self.__image_data_repo.get_image_binary(input_img)), 'utf-8')

+ item_size = len(item_binary_b64)

+ item_json = {

+ "image_length": item_size,

+ "image_binary": item_binary_b64

+ }

+ return item_json

-def create_thread_pool(thread_num, batch_size):

- return [ClientThread(i + 1, batch_size) for i in range(thread_num)]

+def create_thread_pool(thread_num, image_data_repo):

+ return [ClientThread(i + 1, image_data_repo) for i in range(thread_num)]

def run_threads(thread_pool):

@@ -126,7 +106,35 @@ def run_threads(thread_pool):

for thread in thread_pool:

thread.join()

+class ImageDataRepo:

+ def __init__(self, dir_name):

+ print("Loading images data...")

+ self.__data = {}

+ pattern = re.compile(".+\.(jpg|jpeg)", re.I)

+ if os.path.isdir(dir_name):

+ for image_filename in os.listdir(dir_name):

+ if pattern.match(image_filename):

+ full_path = os.path.join(dir_name, image_filename)

+ fp = open(full_path, mode="rb")

+ image_binary_data = fp.read()

+ image_mat_data = cv2.imread(full_path)

+ self.__data[image_filename] = (image_binary_data, image_mat_data)

+ else:

+ raise Exception("Please use directory to initialize");

+ print("Finish loading.")

+ def __iter__(self):

+ for filename in self.__data:

+ yield filename

+

+ def get_image_binary(self, image_name):

+ return self.__data[image_name][0]

+

+ def get_image_matrix(self, image_name):

+ return self.__data[image_name][1]

+

+

if __name__ == "__main__":

- thread_pool = create_thread_pool(thread_num=2, batch_size=1)

+ #preprocess

+ IDR = ImageDataRepo("images")

+ thread_pool = create_thread_pool(thread_num=1, image_data_repo=IDR)

run_threads(thread_pool)

-

diff --git a/test/test_utils.py b/test/test_utils.py

index e35c0326766ce395d66b5e6e9c726c4dbcf4f512..a5070de68872a2894e9bdd2186c6f302302af725 100644

--- a/test/test_utils.py

+++ b/test/test_utils.py

@@ -20,6 +20,7 @@ import sys

import tarfile

import zipfile

import platform

+import functools

lasttime = time.time()

FLUSH_INTERVAL = 0.1

@@ -78,8 +79,10 @@ def _uncompress_file(filepath, extrapath, delete_file, print_progress):

if filepath.endswith("zip"):

handler = _uncompress_file_zip

- else:

+ elif filepath.endswith("tgz"):

handler = _uncompress_file_tar

+ else:

+ handler = functools.partial(_uncompress_file_tar, mode="r")

for total_num, index in handler(filepath, extrapath):

if print_progress:

@@ -104,8 +107,8 @@ def _uncompress_file_zip(filepath, extrapath):

yield total_num, index

-def _uncompress_file_tar(filepath, extrapath):

- files = tarfile.open(filepath, "r:gz")

+def _uncompress_file_tar(filepath, extrapath, mode="r:gz"):

+ files = tarfile.open(filepath, mode)

filelist = files.getnames()

total_num = len(filelist)

for index, file in enumerate(filelist):

+

+

+

+

+

+

+

+

-

-  +

+  +

+

+

+

+

+

+

+