Merge branch 'master' into multiview-simnet

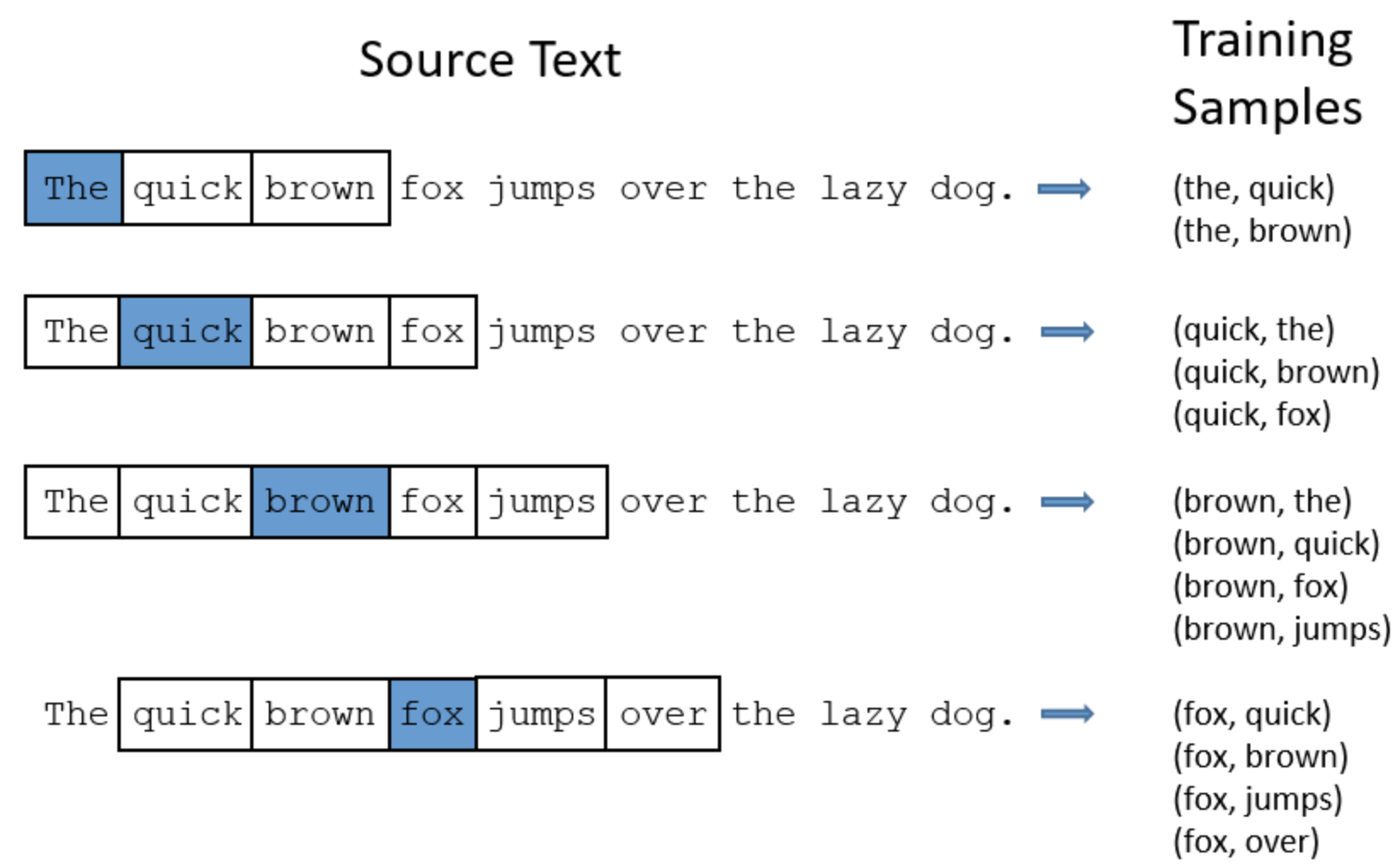

doc/imgs/w2v_train.png

0 → 100644

223.3 KB

models/recall/word2vec/README.md

0 → 100644

models/recall/word2vec/infer.py

0 → 100644

models/recall/word2vec/utils.py

0 → 100644