Merge branch 'master' into multiview-simnet

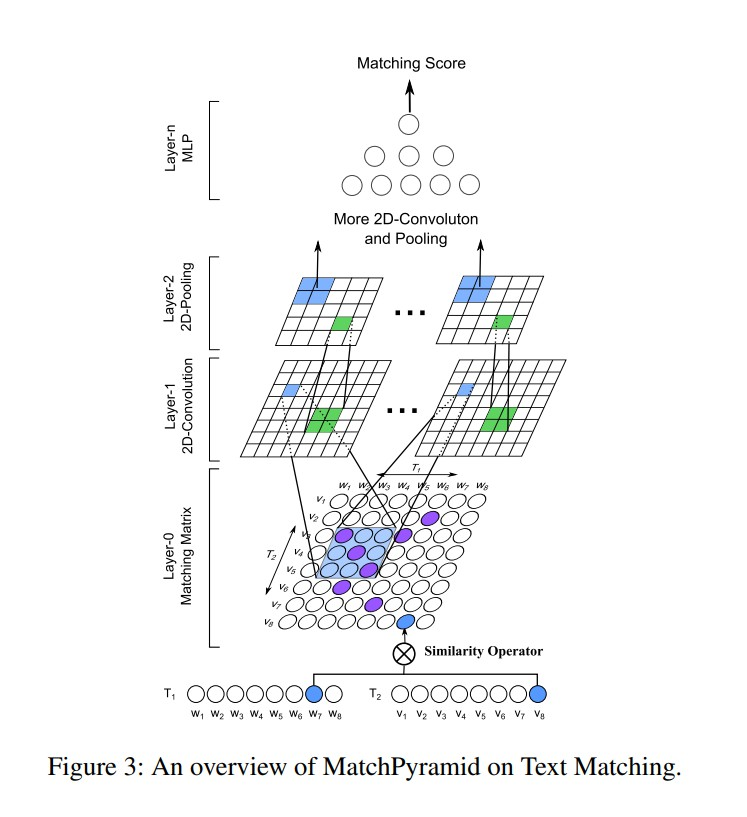

doc/imgs/match-pyramid.png

0 → 100644

219.2 KB

The source diff could not be displayed because it is too large. You can view the blob instead.

models/match/dssm/readme.md

0 → 100644

models/match/dssm/run.sh

0 → 100644

models/match/dssm/transform.py

0 → 100644