add ncf youtube

Showing

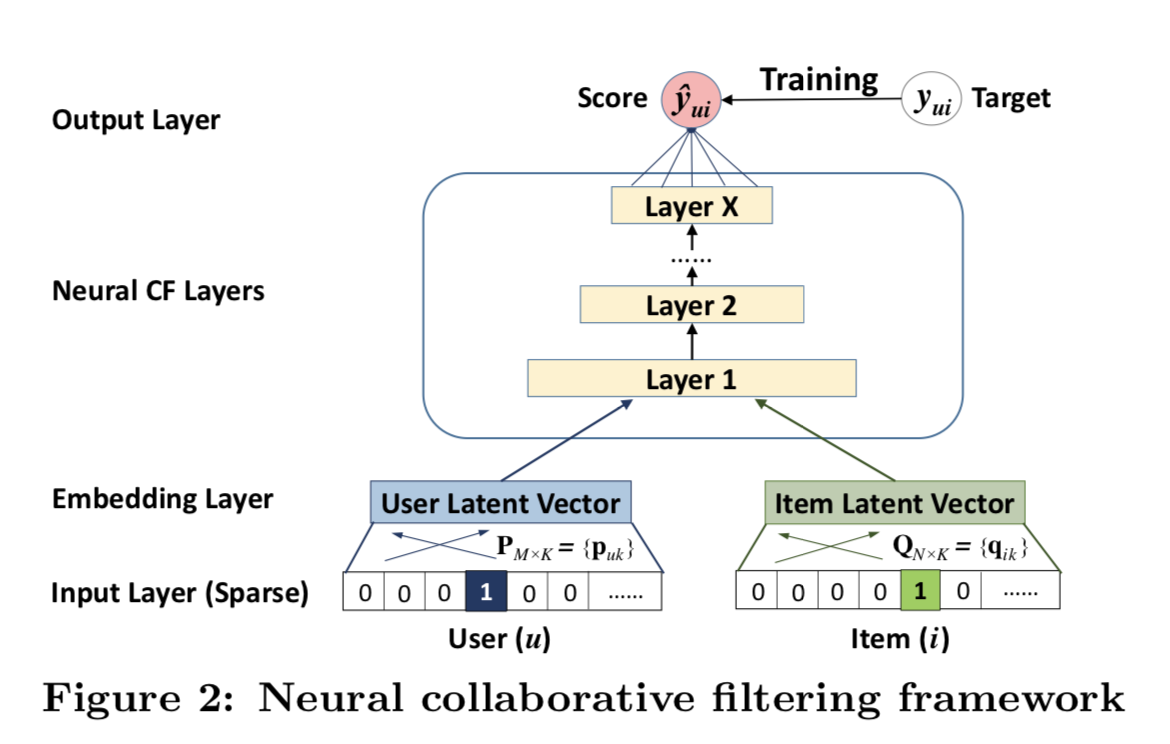

doc/imgs/ncf.png

0 → 100644

148.0 KB

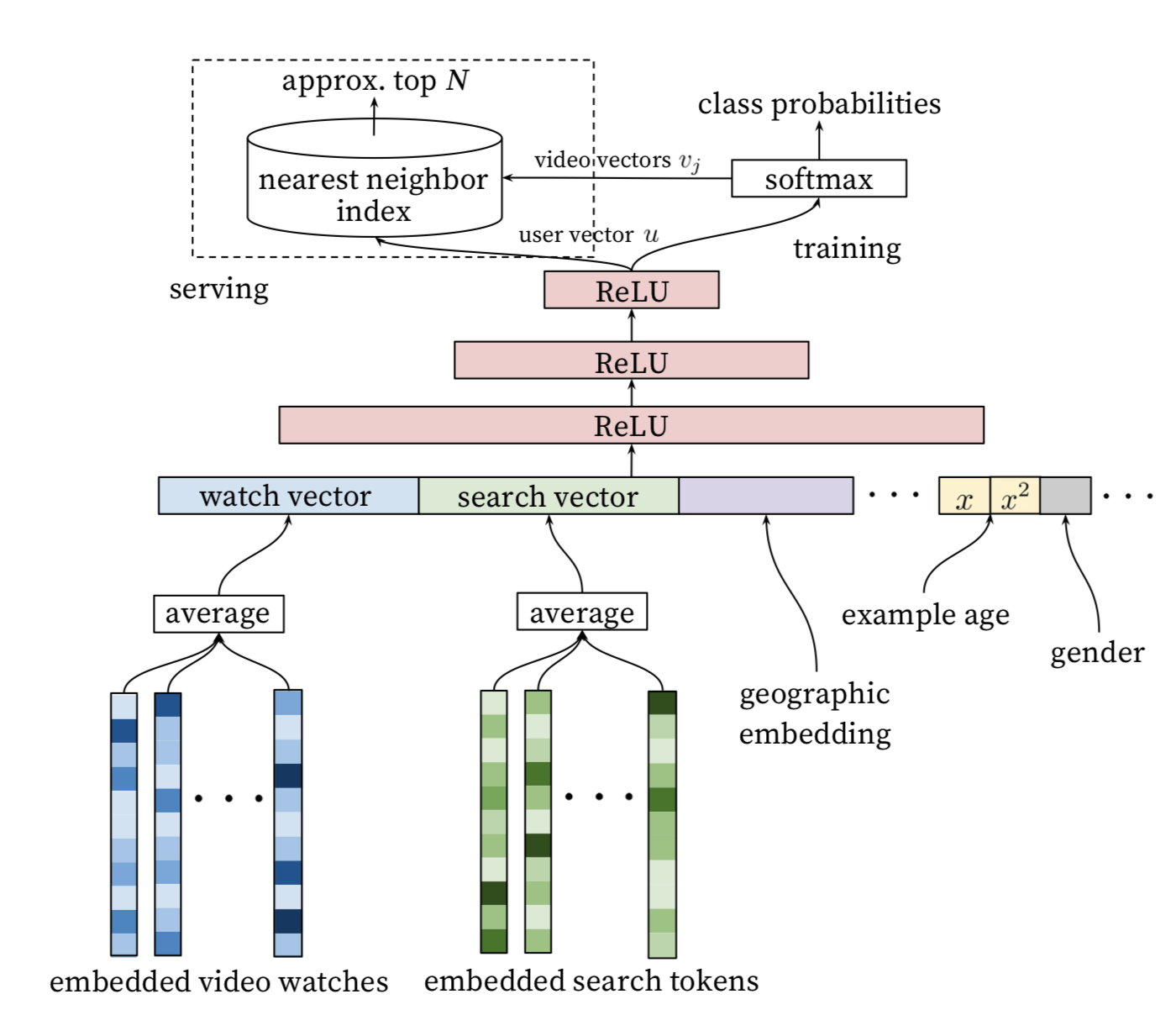

doc/imgs/youtube_dnn.png

0 → 100644

214.9 KB

models/recall/ncf/__init__.py

0 → 100755

models/recall/ncf/config.yaml

0 → 100644

models/recall/ncf/model.py

0 → 100644