Merge branch 'rarfile' of https://github.com/123malin/PaddleRec into rarfile

Showing

README_CN.md

已删除

100644 → 0

README_EN.md

0 → 100644

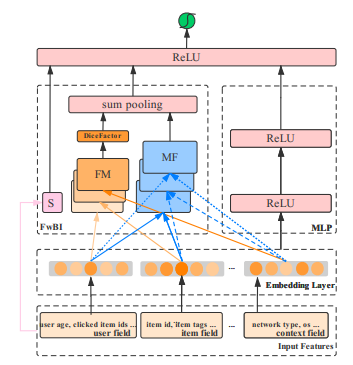

doc/imgs/flen.png

0 → 100644

36.1 KB

models/rank/AutoInt/__init__.py

0 → 100755

models/rank/AutoInt/config.yaml

0 → 100755

models/rank/AutoInt/model.py

0 → 100755

models/rank/BST/__init__.py

0 → 100755

models/rank/BST/config.yaml

0 → 100755

models/rank/BST/data/remap_id.py

0 → 100755

models/rank/BST/model.py

0 → 100755

models/rank/dnn/backend.yaml

0 → 100644

models/rank/flen/README.md

0 → 100644

models/rank/flen/__init__.py

0 → 100644

models/rank/flen/config.yaml

0 → 100644

models/rank/flen/data/run.sh

0 → 100644

models/rank/flen/model.py

0 → 100644

setup.cfg

0 → 100644