English | [简体中文](README_ch.md)

------------------------------------------------------------------------------------------

## Introduction

PaddleOCR aims to create multilingual, awesome, leading, and practical OCR tools that help users train better models and apply them in practice.

## Notice

PaddleOCR supports both the dynamic graph and static graph programming paradigms:

- Dynamic graph: dygraph branch (default), **supported by paddle 2.0.0 ([installation](./doc/doc_en/installation_en.md))**

- Static graph: develop branch

**Recent updates**

- The PaddleOCR R&D team will share the released tools with developers in a live stream at 20:15 on August 4th: [Live Address](https://live.bilibili.com/21689802).

- 2021.8.3: Released PaddleOCR v2.2, which adds a new structured document analysis toolkit, [PP-Structure](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.2/ppstructure/README.md), supporting layout analysis and table recognition (one-click export of table images to Excel files).

- 2021.4.8: Released the end-to-end text recognition algorithm [PGNet](https://www.aaai.org/AAAI21Papers/AAAI-2885.WangP.pdf), published at AAAI 2021 (see the tutorial [here](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.1/doc/doc_en/pgnet_en.md)); released multilingual recognition [models](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.1/doc/doc_en/multi_languages_en.md) supporting more than 80 languages; in particular, the performance of the [English recognition model](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.1/doc/doc_en/models_list_en.md#English) was optimized.

- 2021.1.21: Added 25+ multilingual recognition models ([models list](./doc/doc_en/models_list_en.md)), including English, Chinese, German, French, Japanese, Spanish, Portuguese, Russian, Arabic, and more. Models for more languages will continue to be added ([Develop Plan](https://github.com/PaddlePaddle/PaddleOCR/issues/1048)).

- 2020.12.15: Released the data synthesis tool [Style-Text](./StyleText/README.md), which makes it easy to synthesize a large number of images similar to a target scene image.

- 2020.11.25: Released a new data annotation tool, [PPOCRLabel](./PPOCRLabel/README.md), which improves labeling efficiency. The labeling results can be used directly to train the PP-OCR system.

- 2020.9.22: Published the PP-OCR technical report: https://arxiv.org/abs/2009.09941

- [more](./doc/doc_en/update_en.md)

## Features

- PP-OCR series of high-quality pre-trained models, with results comparable to commercial solutions

- Ultra-lightweight ppocr_mobile series models: detection (3.0M) + direction classifier (1.4M) + recognition (5.0M) = 9.4M

- General ppocr_server series models: detection (47.1M) + direction classifier (1.4M) + recognition (94.9M) = 143.4M

- Supports recognition of Chinese, English, and digits, as well as vertical text and long text

- Supports multilingual recognition: Korean, Japanese, German, French

- Rich toolkits for OCR-related tasks

- Semi-automatic data annotation tool, i.e., PPOCRLabel: supports fast and efficient data annotation

- Data synthesis tool, i.e., Style-Text: easily synthesizes a large number of images similar to the target scene image

- Supports user-defined training and provides a rich set of inference and deployment solutions

- Supports pip installation; easy to use

- Supports Linux, Windows, macOS, and other systems
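The totals quoted above are simply the sums of the three per-module sizes (both series use the same 1.4M direction classifier). A quick Python check, with the sizes copied from the list; the dictionary and function names are illustrative only:

```python
# Per-module model sizes (in M, as quoted in the Features list).
MOBILE = {"detection": 3.0, "direction_classifier": 1.4, "recognition": 5.0}
SERVER = {"detection": 47.1, "direction_classifier": 1.4, "recognition": 94.9}


def total_size(modules):
    """Sum the module sizes, rounded to one decimal to hide float noise."""
    return round(sum(modules.values()), 1)


print(total_size(MOBILE))  # 9.4
print(total_size(SERVER))  # 143.4
```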

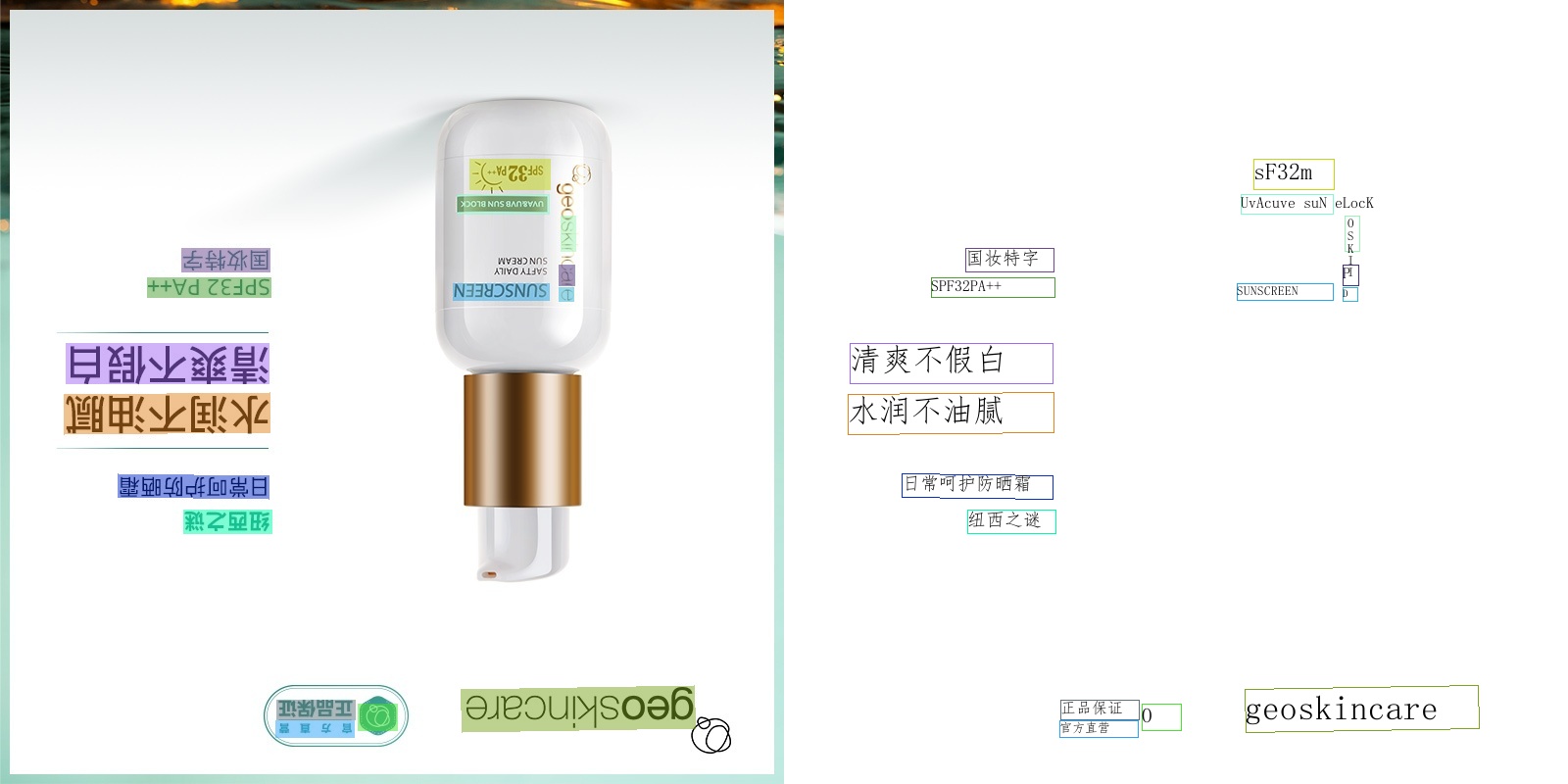

## Visualization

The pictures above show visualizations of the general ppocr_server model's results. For more examples, please see [More visualizations](./doc/doc_en/visualization_en.md).

## Community

- Scan the QR code below with WeChat to join the official technical discussion group. We look forward to your participation.

## Quick Experience

You can also quickly try the ultra-lightweight OCR online: [Online Experience](https://www.paddlepaddle.org.cn/hub/scene/ocr)

Mobile demo (based on EasyEdge and Paddle-Lite, supports iOS and Android): [Sign in to the website to obtain the QR code for installing the App](https://ai.baidu.com/easyedge/app/openSource?from=paddlelite)

Alternatively, you can scan the QR code below to install the App (**Android only**).

- [**OCR Quick Start**](./doc/doc_en/quickstart_en.md)

## PP-OCR 2.0 series model list (updated on Dec 15)

**Note**: Compared with [models 1.1](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/doc/doc_en/models_list_en.md), which were trained with the static graph programming paradigm, models 2.0 are trained with the dynamic graph and achieve similar performance.

| Model introduction | Model name | Recommended scene | Detection model | Direction classifier | Recognition model |
| --- | --- | --- | --- | --- | --- |
| Chinese and English ultra-lightweight OCR model (9.4M) | ch_ppocr_mobile_v2.0_xx | Mobile & server |[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_det_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_det_train.tar)|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) |[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_rec_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_rec_pre.tar) |
| Chinese and English general OCR model (143.4M) | ch_ppocr_server_v2.0_xx | Server |[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_train.tar) |[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) |[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_pre.tar) |
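The download links in the table follow a regular naming pattern, so they can be built and fetched programmatically. The sketch below assumes that pattern, which is inferred only from the two rows above; other models (for example the multilingual ones) may live under different paths. The helpers `model_url` and `download_and_extract` are hypothetical, not part of PaddleOCR, and only the Python standard library is used.

```python
import tarfile
import urllib.request
from pathlib import Path

# Base URL shared by every link in the table above.
BASE_URL = "https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch"

# Archive suffix per (module, kind), inferred from the table. Note that the
# recognition pre-trained archives end in "_pre" rather than "_train".
SUFFIXES = {
    ("det", "inference"): "det_infer",
    ("det", "pretrained"): "det_train",
    ("cls", "inference"): "cls_infer",
    ("cls", "pretrained"): "cls_train",
    ("rec", "inference"): "rec_infer",
    ("rec", "pretrained"): "rec_pre",
}


def model_url(series, module, kind="inference"):
    """Build a download URL, e.g. series='ch_ppocr_server_v2.0', module='det'."""
    # Both rows link to the same mobile direction classifier.
    if module == "cls":
        series = "ch_ppocr_mobile_v2.0"
    return f"{BASE_URL}/{series}_{SUFFIXES[(module, kind)]}.tar"


def download_and_extract(url, dest="./inference"):
    """Download a .tar archive into dest and unpack it there."""
    dest_dir = Path(dest)
    dest_dir.mkdir(parents=True, exist_ok=True)
    archive = dest_dir / url.rsplit("/", 1)[-1]
    urllib.request.urlretrieve(url, archive)
    with tarfile.open(archive) as tar:
        tar.extractall(dest_dir)
    return dest_dir
```

For example, `download_and_extract(model_url("ch_ppocr_server_v2.0", "rec"))` would fetch the server recognition inference model and unpack it into `./inference`.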

For more model downloads (including multiple languages), please refer to [PP-OCR v2.0 series model downloads](./doc/doc_en/models_list_en.md).

For a new language request, please refer to [Guideline for new language requests](#guideline-for-new-language-requests).

## Tutorials

- [Quick Start](./doc/doc_en/quickstart_en.md)

- [PaddleOCR Overview and Installation](./doc/doc_en/paddleOCR_overview_en.md)

- PP-OCR Industrial Application: from Training to Deployment

- [PP-OCR Model and Configuration](./doc/doc_en/models_and_config_en.md)

- [PP-OCR Model Download](./doc/doc_en/models_list_en.md)

- [Yml Configuration](./doc/doc_en/config_en.md)

- [Python Inference](./doc/doc_en/inference_en.md)

- [PP-OCR Training](./doc/doc_en/training_en.md)

- [Text Detection](./doc/doc_en/detection_en.md)

- [Text Recognition](./doc/doc_en/recognition_en.md)

- [Direction Classification](./doc/doc_en/angle_class_en.md)

- Inference and Deployment

- [Python Inference](./doc/doc_en/inference_en.md)

- [C++ Inference](./deploy/cpp_infer/readme_en.md)

- [Serving](./deploy/pdserving/README.md)

- [Mobile](./deploy/lite/readme_en.md)

- [Benchmark](./doc/doc_en/benchmark_en.md)

- [PP-Structure: Information Extraction](./ppstructure/README.md)

- [Layout Parser](./ppstructure/layout/README.md)

- [Table Recognition](./ppstructure/table/README.md)

- Academic Research

- [Two-stage Algorithm](./doc/doc_en/algorithm_overview_en.md)

- [PGNet Algorithm](./doc/doc_en/algorithm_overview_en.md)

- Data Annotation and Synthesis

- [Semi-automatic Annotation Tool: PPOCRLabel](./PPOCRLabel/README.md)

- [Data Synthesis Tool: Style-Text](./StyleText/README.md)

- [Other Data Annotation Tools](./doc/doc_en/data_annotation_en.md)

- [Other Data Synthesis Tools](./doc/doc_en/data_synthesis_en.md)

- Datasets

- [General OCR Datasets (Chinese/English)](./doc/doc_en/datasets_en.md)

- [Handwritten OCR Datasets (Chinese)](./doc/doc_en/handwritten_datasets_en.md)

- [Various OCR Datasets (Multilingual)](./doc/doc_en/vertical_and_multilingual_datasets_en.md)

- [Visualization](#Visualization)

- [New language requests](#guideline-for-new-language-requests)

- [FAQ](./doc/doc_en/FAQ_en.md)

- [Community](#Community)

- [References](./doc/doc_en/reference_en.md)

- [License](#LICENSE)

- [Contribution](#CONTRIBUTION)

## PP-OCR Pipeline

PP-OCR is a practical ultra-lightweight OCR system. It is mainly composed of three parts: DB text detection [2], detection box rectification, and CRNN text recognition [7]. The system adopts 19 effective strategies from 8 aspects, including backbone network selection and adjustment, prediction head design, data augmentation, learning rate scheduling, regularization parameter selection, use of pre-trained models, and automatic model pruning and quantization, to optimize and slim down the models of each module. The result is an ultra-lightweight Chinese and English OCR model with an overall size of 3.5M, and an English and digit OCR model of 2.8M. For more details, please refer to the PP-OCR technical report (https://arxiv.org/abs/2009.09941). In addition, the FPGM Pruner [8] and PACT quantization [9] are implemented based on [PaddleSlim](https://github.com/PaddlePaddle/PaddleSlim).
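The three-part structure described above can be sketched as a simple chain of stages. This is a minimal illustration, not PaddleOCR's API: every stage function (`detect`, `crop`, `is_upside_down`, `rotate_180`, `recognize`) is a hypothetical placeholder that you would back with the real DB detector, box rectification, direction classifier, and CRNN recognizer. Boxes are simplified to axis-aligned tuples, and the direction classifier is assumed to decide only whether a cropped text line is upside down.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence, Tuple

# Hypothetical axis-aligned box (x0, y0, x1, y1); real detectors return
# quadrilaterals that are rectified before recognition.
Box = Tuple[int, int, int, int]


@dataclass
class TextLine:
    box: Box
    text: str


def ppocr_style_pipeline(
    image: Any,
    detect: Callable[[Any], Sequence[Box]],  # stage 1: text detection (e.g. DB)
    crop: Callable[[Any, Box], Any],  # stage 2a: crop/rectify one detected box
    is_upside_down: Callable[[Any], bool],  # stage 2b: direction classifier
    rotate_180: Callable[[Any], Any],
    recognize: Callable[[Any], str],  # stage 3: text recognition (e.g. CRNN)
) -> List[TextLine]:
    """Run detection, box correction, and recognition on one image."""
    results = []
    for box in detect(image):
        patch = crop(image, box)
        # Flip patches the classifier marks as rotated by 180 degrees.
        if is_upside_down(patch):
            patch = rotate_180(patch)
        results.append(TextLine(box, recognize(patch)))
    return results
```

Keeping each stage as an injected function mirrors the modular design described above: any one module (for example the recognizer) can be swapped for a lighter or heavier model without touching the others.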

## Visualization [more](./doc/doc_en/visualization_en.md)

- Chinese OCR model

- English OCR model

- Multilingual OCR model

## Guideline for new language requests

If you want to request support for a new language, please submit a PR containing the following two files:

1. In the folder [ppocr/utils/dict](./ppocr/utils/dict), submit a dictionary file named `{language}_dict.txt` that contains a list of all characters. Please see the other files in that folder for format examples.

2. In the folder [ppocr/utils/corpus](./ppocr/utils/corpus), submit a corpus file named `{language}_corpus.txt` that contains a list of words in your language.

At least 50,000 words per language are probably needed; the more, the better.

If your language has unique elements, please let us know in advance by any means, such as useful links or Wikipedia pages.

For more details, please refer to [Multilingual OCR Development Plan](https://github.com/PaddlePaddle/PaddleOCR/issues/1048).
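The two files described in this guideline can be generated from a word list with a short script. This is a minimal sketch assuming one word per line in the corpus file and one character per line in the dictionary file; please confirm the exact format against the existing files in `ppocr/utils/dict` before submitting. `build_language_files` is a hypothetical helper, not part of PaddleOCR.

```python
from pathlib import Path

MIN_WORDS = 50000  # rough lower bound suggested in the guideline


def build_language_files(language, words, out_dir="."):
    """Write {language}_corpus.txt and {language}_dict.txt into out_dir.

    Assumes one word per line in the corpus and one character per line in
    the dictionary. Returns the sorted list of unique characters.
    """
    # Drop surrounding whitespace and empty entries.
    words = [w.strip() for w in words if w.strip()]
    # Every distinct character that appears in the corpus.
    chars = sorted({ch for w in words for ch in w})
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    (out / f"{language}_corpus.txt").write_text("\n".join(words) + "\n", encoding="utf-8")
    (out / f"{language}_dict.txt").write_text("\n".join(chars) + "\n", encoding="utf-8")
    if len(words) < MIN_WORDS:
        print(f"warning: only {len(words)} words; at least {MIN_WORDS} are recommended")
    return chars
```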

## License

This project is released under the Apache 2.0 license.

## Contribution

We welcome all contributions to PaddleOCR and greatly appreciate your feedback.

- Many thanks to [Khanh Tran](https://github.com/xxxpsyduck) and [Karl Horky](https://github.com/karlhorky) for contributing and revising the English documentation.

- Many thanks to [zhangxin](https://github.com/ZhangXinNan) for contributing the new visualization function, adding .gitignore, and removing the need to set PYTHONPATH manually.

- Many thanks to [lyl120117](https://github.com/lyl120117) for contributing the code for printing the network structure.

- Thanks to [xiangyubo](https://github.com/xiangyubo) for contributing the handwritten Chinese OCR datasets.

- Thanks to [authorfu](https://github.com/authorfu) for contributing the Android demo and [xiadeye](https://github.com/xiadeye) for contributing the iOS demo.

- Thanks to [BeyondYourself](https://github.com/BeyondYourself) for many great suggestions and for simplifying parts of the code style.

- Thanks to [tangmq](https://gitee.com/tangmq) for contributing Dockerized deployment services to PaddleOCR and supporting the rapid release of callable RESTful API services.

- Thanks to [lijinhan](https://github.com/lijinhan) for contributing a new way, i.e., Java Spring Boot, to send requests to the Hubserving deployment.

- Thanks to [Mejans](https://github.com/Mejans) for contributing the Occitan corpus and character set.

- Thanks to [LKKlein](https://github.com/LKKlein) for contributing a new deployment package written in the Go programming language.

- Thanks to [Evezerest](https://github.com/Evezerest), [ninetailskim](https://github.com/ninetailskim), [edencfc](https://github.com/edencfc), [BeyondYourself](https://github.com/BeyondYourself) and [1084667371](https://github.com/1084667371) for contributing a new data annotation tool, i.e., PPOCRLabel.