@@ -100,7 +100,7 @@ Considering that the features of some channels will be suppressed if the convolu

The recognition module of PP-OCRv3 is optimized based on the text recognition algorithm [SVTR](https://arxiv.org/abs/2205.00159). RNN is abandoned in SVTR, and the context information of the text line image is more effectively mined by introducing the Transformers structure, thereby improving the text recognition ability.

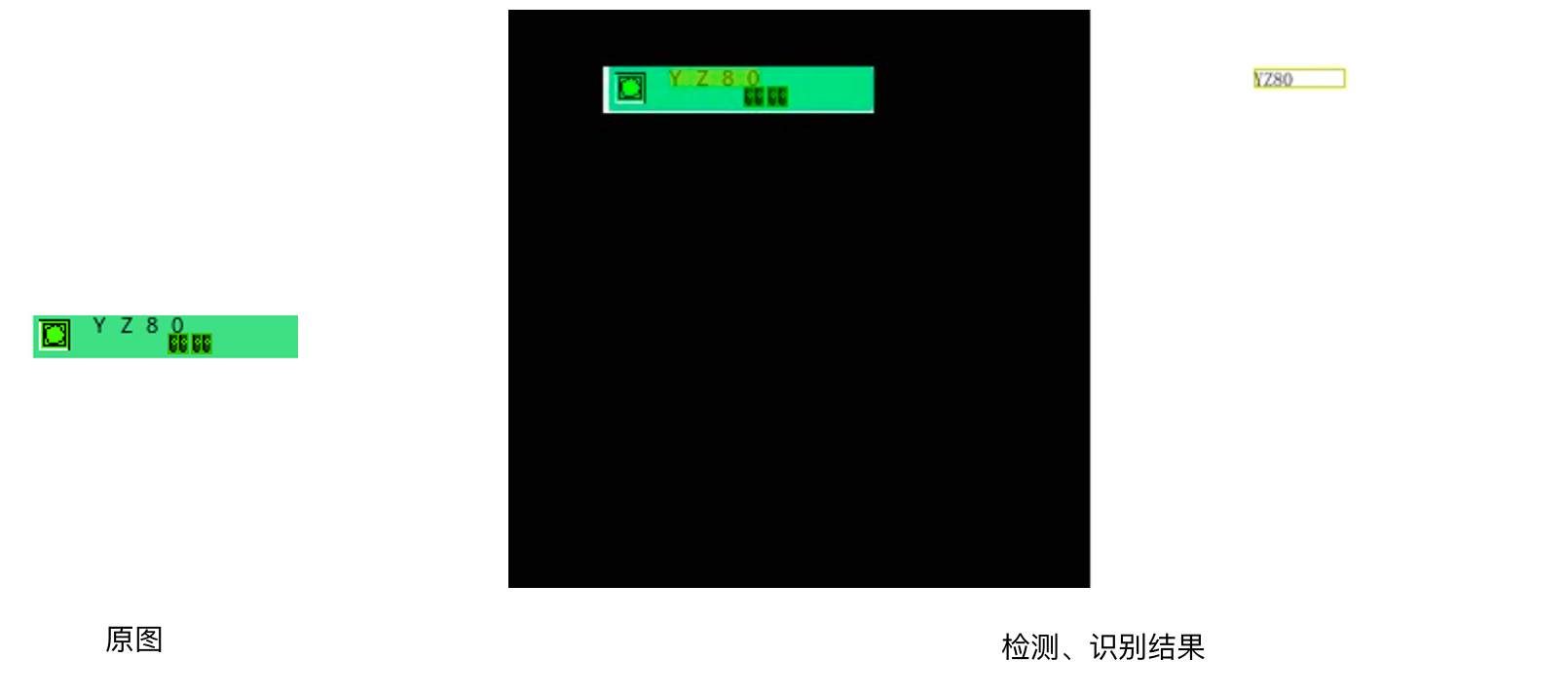

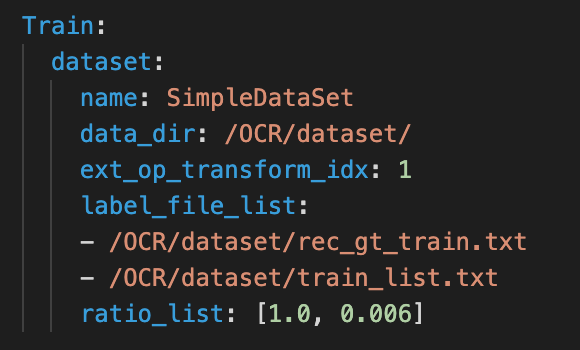

-The recognition accuracy of SVTR_inty outperforms PP-OCRv2 recognition model by 5.3%, while the prediction speed nearly 11 times slower. It takes nearly 100ms to predict a text line on CPU. Therefore, as shown in the figure below, PP-OCRv3 adopts the following six optimization strategies to accelerate the recognition model.

+The recognition accuracy of SVTR_tiny outperforms the PP-OCRv2 recognition model by 5.3%, but its prediction speed is nearly 11 times slower: it takes nearly 100ms to predict a text line on CPU. Therefore, as shown in the figure below, PP-OCRv3 adopts the following six optimization strategies to accelerate the recognition model.

diff --git a/doc/doc_en/algorithm_det_east_en.md b/doc/doc_en/algorithm_det_east_en.md

index 3955809a49a595aa59717bafcfbb23146ae96bd2..07c434a9b162d9d373f5f357522cbd752be1afc1 100644

--- a/doc/doc_en/algorithm_det_east_en.md

+++ b/doc/doc_en/algorithm_det_east_en.md

@@ -40,7 +40,7 @@ Please prepare your environment referring to [prepare the environment](./environ

The above EAST model is trained using the ICDAR2015 text detection public dataset. For the download of the dataset, please refer to [ocr_datasets](./dataset/ocr_datasets_en.md).

-After the data download is complete, please refer to [Text Detection Training Tutorial](./detection.md) for training. PaddleOCR has modularized the code structure, so that you only need to **replace the configuration file** to train different detection models.

+After the data download is complete, please refer to [Text Detection Training Tutorial](./detection_en.md) for training. PaddleOCR has modularized the code structure, so that you only need to **replace the configuration file** to train different detection models.

diff --git a/doc/doc_en/algorithm_det_fcenet_en.md b/doc/doc_en/algorithm_det_fcenet_en.md

index e15fb9a07ede3296d3de83c134457194d4639a1c..f3c51a91a486342b86828167de5c1b386b42cc66 100644

--- a/doc/doc_en/algorithm_det_fcenet_en.md

+++ b/doc/doc_en/algorithm_det_fcenet_en.md

@@ -37,7 +37,7 @@ Please prepare your environment referring to [prepare the environment](./environ

The above FCE model is trained using the CTW1500 text detection public dataset. For the download of the dataset, please refer to [ocr_datasets](./dataset/ocr_datasets_en.md).

-After the data download is complete, please refer to [Text Detection Training Tutorial](./detection.md) for training. PaddleOCR has modularized the code structure, so that you only need to **replace the configuration file** to train different detection models.

+After the data download is complete, please refer to [Text Detection Training Tutorial](./detection_en.md) for training. PaddleOCR has modularized the code structure, so that you only need to **replace the configuration file** to train different detection models.

## 4. Inference and Deployment

diff --git a/doc/doc_en/algorithm_det_psenet_en.md b/doc/doc_en/algorithm_det_psenet_en.md

index d4cb3ea7d1e82a3f9c261c6e44cd6df6b0f6bf1e..3977a156ace3beb899e105bc381e27af6e825d6a 100644

--- a/doc/doc_en/algorithm_det_psenet_en.md

+++ b/doc/doc_en/algorithm_det_psenet_en.md

@@ -39,7 +39,7 @@ Please prepare your environment referring to [prepare the environment](./environ

The above PSE model is trained using the ICDAR2015 text detection public dataset. For the download of the dataset, please refer to [ocr_datasets](./dataset/ocr_datasets_en.md).

-After the data download is complete, please refer to [Text Detection Training Tutorial](./detection.md) for training. PaddleOCR has modularized the code structure, so that you only need to **replace the configuration file** to train different detection models.

+After the data download is complete, please refer to [Text Detection Training Tutorial](./detection_en.md) for training. PaddleOCR has modularized the code structure, so that you only need to **replace the configuration file** to train different detection models.

## 4. Inference and Deployment

diff --git a/doc/doc_en/algorithm_e2e_pgnet_en.md b/doc/doc_en/algorithm_e2e_pgnet_en.md

index c7cb3221ccfd897e2fd9062a828c2fe0ceb42024..ab74c57bc3d4d97852641cd708a2dceea5732ba7 100644

--- a/doc/doc_en/algorithm_e2e_pgnet_en.md

+++ b/doc/doc_en/algorithm_e2e_pgnet_en.md

@@ -36,7 +36,7 @@ The results of detection and recognition are as follows:

## 2. Environment Configuration

-Please refer to [Operation Environment Preparation](./environment_en.md) to configure PaddleOCR operating environment first, refer to [PaddleOCR Overview and Project Clone](./paddleOCR_overview_en.md) to clone the project

+Please refer to [Operation Environment Preparation](./environment_en.md) to configure the PaddleOCR operating environment first, then refer to [Project Clone](./clone_en.md) to clone the project.

## 3. Quick Use

diff --git a/doc/doc_en/algorithm_overview_en.md b/doc/doc_en/algorithm_overview_en.md

index 18c9cd7d51bdf0129245afca8a759afab5d9d589..28aca7c0d171008156104fbcc786707538fd49ef 100755

--- a/doc/doc_en/algorithm_overview_en.md

+++ b/doc/doc_en/algorithm_overview_en.md

@@ -41,6 +41,12 @@ On Total-Text dataset, the text detection result is as follows:

| --- | --- | --- | --- | --- | --- |

|SAST|ResNet50_vd|89.63%|78.44%|83.66%|[trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/det_r50_vd_sast_totaltext_v2.0_train.tar)|

+On CTW1500 dataset, the text detection result is as follows:

+

+|Model|Backbone|Precision|Recall|Hmean| Download link|

+| --- | --- | --- | --- | --- |---|

+|FCE|ResNet50_dcn|88.39%|82.18%|85.27%| [trained model](https://paddleocr.bj.bcebos.com/contribution/det_r50_dcn_fce_ctw_v2.0_train.tar) |

+
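For reference, the Hmean column in these detection tables is conventionally the harmonic mean of precision and recall. The following is a minimal sketch of that arithmetic, assuming this standard definition; the helper name `hmean` is hypothetical and not a PaddleOCR function. It is checked against the SAST row above.

```python
def hmean(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# SAST on Total-Text, values taken from the table above.
print(round(hmean(89.63, 78.44), 2))  # 83.66
```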

**Note:** Additional data, like icdar2013, icdar2017, COCO-Text, ArT, was added to the model training of SAST. Download English public dataset in organized format used by PaddleOCR from:

* [Baidu Drive](https://pan.baidu.com/s/12cPnZcVuV1zn5DOd4mqjVw) (download code: 2bpi).

* [Google Drive](https://drive.google.com/drive/folders/1ll2-XEVyCQLpJjawLDiRlvo_i4BqHCJe?usp=sharing)

@@ -59,6 +65,8 @@ Supported text recognition algorithms (Click the link to get the tutorial):

- [x] [SAR](./algorithm_rec_sar_en.md)

- [x] [SEED](./algorithm_rec_seed_en.md)

- [x] [SVTR](./algorithm_rec_svtr_en.md)

+- [x] [ViTSTR](./algorithm_rec_vitstr_en.md)

+- [x] [ABINet](./algorithm_rec_abinet_en.md)

Refer to [DTRB](https://arxiv.org/abs/1904.01906), the training and evaluation result of these above text recognition (using MJSynth and SynthText for training, evaluate on IIIT, SVT, IC03, IC13, IC15, SVTP, CUTE) is as follow:

@@ -77,7 +85,8 @@ Refer to [DTRB](https://arxiv.org/abs/1904.01906), the training and evaluation r

|SAR|Resnet31| 87.20% | rec_r31_sar | [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.1/rec/rec_r31_sar_train.tar) |

|SEED|Aster_Resnet| 85.35% | rec_resnet_stn_bilstm_att | [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.1/rec/rec_resnet_stn_bilstm_att.tar) |

|SVTR|SVTR-Tiny| 89.25% | rec_svtr_tiny_none_ctc_en | [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/rec_svtr_tiny_none_ctc_en_train.tar) |

-

+|ViTSTR|ViTSTR| 79.82% | rec_vitstr_none_ce_en | [trained model](https://paddleocr.bj.bcebos.com/rec_vitstr_none_none_train.tar) |

+|ABINet|Resnet45| 90.75% | rec_r45_abinet_en | [trained model](https://paddleocr.bj.bcebos.com/rec_r45_abinet_train.tar) |

diff --git a/doc/doc_en/algorithm_rec_abinet_en.md b/doc/doc_en/algorithm_rec_abinet_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..767ca65f6411a7bc071ccafacc09d12bc160e6b6

--- /dev/null

+++ b/doc/doc_en/algorithm_rec_abinet_en.md

@@ -0,0 +1,136 @@

+# ABINet

+

+- [1. Introduction](#1)

+- [2. Environment](#2)

+- [3. Model Training / Evaluation / Prediction](#3)

+ - [3.1 Training](#3-1)

+ - [3.2 Evaluation](#3-2)

+ - [3.3 Prediction](#3-3)

+- [4. Inference and Deployment](#4)

+ - [4.1 Python Inference](#4-1)

+ - [4.2 C++ Inference](#4-2)

+ - [4.3 Serving](#4-3)

+ - [4.4 More](#4-4)

+- [5. FAQ](#5)

+

+

+## 1. Introduction

+

+Paper:

+> [ABINet: Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition](https://openaccess.thecvf.com/content/CVPR2021/papers/Fang_Read_Like_Humans_Autonomous_Bidirectional_and_Iterative_Language_Modeling_for_CVPR_2021_paper.pdf)

+> Shancheng Fang and Hongtao Xie and Yuxin Wang and Zhendong Mao and Yongdong Zhang

+> CVPR, 2021

+

+Using the MJSynth and SynthText text recognition datasets for training, and evaluating on the IIIT, SVT, IC03, IC13, IC15, SVTP, and CUTE datasets, the algorithm reproduction results are as follows:

+

+|Model|Backbone|config|Acc|Download link|

+| --- | --- | --- | --- | --- |

+|ABINet|ResNet45|[rec_r45_abinet.yml](../../configs/rec/rec_r45_abinet.yml)|90.75%|[pretrained & trained model](https://paddleocr.bj.bcebos.com/rec_r45_abinet_train.tar)|

+

+

+## 2. Environment

+Please refer to ["Environment Preparation"](./environment_en.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone_en.md) to clone the project code.

+

+

+

+## 3. Model Training / Evaluation / Prediction

+

+Please refer to [Text Recognition Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

+

+Training:

+

+Specifically, after the data preparation is completed, the training can be started. The training command is as follows:

+

+```

+#Single GPU training (long training period, not recommended)

+python3 tools/train.py -c configs/rec/rec_r45_abinet.yml

+

+#Multi GPU training, specify the gpu number through the --gpus parameter

+python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/rec/rec_r45_abinet.yml

+```

+

+Evaluation:

+

+```

+# GPU evaluation

+python3 -m paddle.distributed.launch --gpus '0' tools/eval.py -c configs/rec/rec_r45_abinet.yml -o Global.pretrained_model={path/to/weights}/best_accuracy

+```

+

+Prediction:

+

+```

+# The configuration file used for prediction must match the training

+python3 tools/infer_rec.py -c configs/rec/rec_r45_abinet.yml -o Global.infer_img='./doc/imgs_words_en/word_10.png' Global.pretrained_model=./rec_r45_abinet_train/best_accuracy

+```

+

+

+## 4. Inference and Deployment

+

+

+### 4.1 Python Inference

+First, convert the model saved during ABINet text recognition training into an inference model ([Model download link](https://paddleocr.bj.bcebos.com/rec_r45_abinet_train.tar)). You can use the following command to convert:

+

+```

+python3 tools/export_model.py -c configs/rec/rec_r45_abinet.yml -o Global.pretrained_model=./rec_r45_abinet_train/best_accuracy Global.save_inference_dir=./inference/rec_r45_abinet

+```

+

+**Note:**

+- If you trained the model on your own dataset and modified the dictionary file, remember to set `character_dict_path` in the configuration file to the modified dictionary file.

+- If you modified the input size during training, please modify the `infer_shape` corresponding to ABINet in the `tools/export_model.py` file.

+

+After the conversion is successful, there are three files in the directory:

+```

+/inference/rec_r45_abinet/

+ ├── inference.pdiparams

+ ├── inference.pdiparams.info

+ └── inference.pdmodel

+```
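A quick way to confirm that the export produced everything is to check for the three files listed above. This is a minimal sketch; the helper name `missing_inference_files` is hypothetical and not part of PaddleOCR.

```python
from pathlib import Path
from typing import List

# File names taken from the directory listing above.
EXPECTED_FILES = (
    "inference.pdiparams",
    "inference.pdiparams.info",
    "inference.pdmodel",
)


def missing_inference_files(model_dir: str) -> List[str]:
    """Return the expected inference files that are absent from model_dir."""
    root = Path(model_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_inference_files("./inference/rec_r45_abinet")
    print("export looks complete" if not missing else f"missing files: {missing}")
```

An empty list means all three files are present, so the directory can be passed to `--rec_model_dir`.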

+

+

+For ABINet text recognition model inference, the following commands can be executed:

+

+```

+python3 tools/infer/predict_rec.py --image_dir='./doc/imgs_words_en/word_10.png' --rec_model_dir='./inference/rec_r45_abinet/' --rec_algorithm='ABINet' --rec_image_shape='3,32,128' --rec_char_dict_path='./ppocr/utils/ic15_dict.txt'

+```

+

+

+

+After executing the command, the prediction result (recognized text and score) of the image above is printed to the screen.

+The result is as follows:

+```shell

+Predicts of ./doc/imgs_words_en/word_10.png:('pain', 0.9999995231628418)

+```
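If the printed result needs to be consumed by a script, the line can be parsed back into a path, text, and score. This is a minimal sketch, assuming the output keeps exactly the `Predicts of <path>:(<text>, <score>)` form shown above; the helper `parse_prediction` is hypothetical.

```python
import ast
import re

# Matches "Predicts of <path>:(<text>, <score>)".
_PATTERN = re.compile(r"^Predicts of (?P<path>.+?):(?P<result>\(.*\))\s*$")


def parse_prediction(line: str):
    """Split one 'Predicts of ...' line into (image_path, text, score)."""
    match = _PATTERN.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognized prediction line: {line!r}")
    # The result part is a Python tuple literal, so literal_eval can read it safely.
    text, score = ast.literal_eval(match.group("result"))
    return match.group("path"), text, float(score)


print(parse_prediction(
    "Predicts of ./doc/imgs_words_en/word_10.png:('pain', 0.9999995231628418)"
))
```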

+

+

+### 4.2 C++ Inference

+

+Not supported

+

+

+### 4.3 Serving

+

+Not supported

+

+

+### 4.4 More

+

+Not supported

+

+

+## 5. FAQ

+

+1. Note that the MJSynth and SynthText datasets come from [ABINet repo](https://github.com/FangShancheng/ABINet).

+2. We fine-tune from the pre-trained model provided by the ABINet authors.

+

+## Citation

+

+```bibtex

+@inproceedings{Fang2021ABINet,

+ title = {ABINet: Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition},

+ author = {Shancheng Fang and Hongtao Xie and Yuxin Wang and Zhendong Mao and Yongdong Zhang},

+ booktitle = {CVPR},

+ year = {2021},

+ url = {https://arxiv.org/abs/2103.06495},

+ pages = {7098-7107}

+}

+```

diff --git a/doc/doc_en/algorithm_rec_aster_en.md b/doc/doc_en/algorithm_rec_aster_en.md

index 1540681a19f94160e221c37173510395d0fd407f..b949cb5b37c985cc55a2aa01ea5ae4096946bb05 100644

--- a/doc/doc_en/algorithm_rec_aster_en.md

+++ b/doc/doc_en/algorithm_rec_aster_en.md

@@ -33,13 +33,13 @@ Using MJSynth and SynthText two text recognition datasets for training, and eval

## 2. Environment

-Please refer to ["Environment Preparation"](./environment.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone.md) to clone the project code.

+Please refer to ["Environment Preparation"](./environment_en.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone_en.md) to clone the project code.

## 3. Model Training / Evaluation / Prediction

-Please refer to [Text Recognition Tutorial](./recognition.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

+Please refer to [Text Recognition Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

Training:

diff --git a/doc/doc_en/algorithm_rec_crnn_en.md b/doc/doc_en/algorithm_rec_crnn_en.md

index 571569ee445d756ca7bdfeea6d5f960187a5a666..8548c2fa625b713d7e7e278506ff5c46713303ed 100644

--- a/doc/doc_en/algorithm_rec_crnn_en.md

+++ b/doc/doc_en/algorithm_rec_crnn_en.md

@@ -33,13 +33,13 @@ Using MJSynth and SynthText two text recognition datasets for training, and eval

## 2. Environment

-Please refer to ["Environment Preparation"](./environment.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone.md) to clone the project code.

+Please refer to ["Environment Preparation"](./environment_en.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone_en.md) to clone the project code.

## 3. Model Training / Evaluation / Prediction

-Please refer to [Text Recognition Tutorial](./recognition.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

+Please refer to [Text Recognition Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

Training:

diff --git a/doc/doc_en/algorithm_rec_nrtr_en.md b/doc/doc_en/algorithm_rec_nrtr_en.md

index 3f8fd0adee900cf889d70e8b78fb1122d54c7d08..309d7ab123065f15a599a1220ab3f39afffb9b60 100644

--- a/doc/doc_en/algorithm_rec_nrtr_en.md

+++ b/doc/doc_en/algorithm_rec_nrtr_en.md

@@ -12,6 +12,7 @@

- [4.3 Serving](#4-3)

- [4.4 More](#4-4)

- [5. FAQ](#5)

+- [6. Release Note](#6)

## 1. Introduction

@@ -25,17 +26,17 @@ Using MJSynth and SynthText two text recognition datasets for training, and eval

|Model|Backbone|config|Acc|Download link|

| --- | --- | --- | --- | --- |

-|NRTR|MTB|[rec_mtb_nrtr.yml](../../configs/rec/rec_mtb_nrtr.yml)|84.21%|[train model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/rec_mtb_nrtr_train.tar)|

+|NRTR|MTB|[rec_mtb_nrtr.yml](../../configs/rec/rec_mtb_nrtr.yml)|84.21%|[trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/rec_mtb_nrtr_train.tar)|

## 2. Environment

-Please refer to ["Environment Preparation"](./environment.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone.md) to clone the project code.

+Please refer to ["Environment Preparation"](./environment_en.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone_en.md) to clone the project code.

## 3. Model Training / Evaluation / Prediction

-Please refer to [Text Recognition Tutorial](./recognition.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

+Please refer to [Text Recognition Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

Training:

@@ -98,7 +99,7 @@ python3 tools/infer/predict_rec.py --image_dir='./doc/imgs_words_en/word_10.png'

After executing the command, the prediction result (recognized text and score) of the image above is printed to the screen, an example is as follows:

The result is as follows:

```shell

-Predicts of ./doc/imgs_words_en/word_10.png:('pain', 0.9265879392623901)

+Predicts of ./doc/imgs_words_en/word_10.png:('pain', 0.9465042352676392)

```

@@ -121,12 +122,146 @@ Not supported

1. In the `NRTR` paper, Beam search is used to decode characters, but the speed is slow. Beam search is not used by default here, and greedy search is used to decode characters.

+

+## 6. Release Note

+

+1. The release/2.6 version updates the NRTR code structure. The new version of NRTR can load the model parameters of the old version (release/2.5 and before), and you may use the following code to convert the old version model parameters to the new version model parameters:

+

+```python

+

+import numpy as np

+import paddle

+

+# `model` is assumed to be an already-built new-version (release/2.6) NRTR network.

+params = paddle.load('path/' + '.pdparams')  # the old version parameters

+state_dict = model.state_dict()  # the new version model parameters

+new_state_dict = {}

+

+for k1, v1 in state_dict.items():

+    k = k1

+    # Encoder self-attention: fuse the old conv1/conv2/conv3 kernels into one qkv weight.

+    if 'encoder' in k and 'self_attn' in k and 'qkv' in k and 'weight' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        q = params[k_para.replace('qkv', 'conv1')].transpose((1, 0, 2, 3))

+        k = params[k_para.replace('qkv', 'conv2')].transpose((1, 0, 2, 3))

+        v = params[k_para.replace('qkv', 'conv3')].transpose((1, 0, 2, 3))

+        new_state_dict[k1] = np.concatenate([q[:, :, 0, 0], k[:, :, 0, 0], v[:, :, 0, 0]], -1)

+

+    elif 'encoder' in k and 'self_attn' in k and 'qkv' in k and 'bias' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        q = params[k_para.replace('qkv', 'conv1')]

+        k = params[k_para.replace('qkv', 'conv2')]

+        v = params[k_para.replace('qkv', 'conv3')]

+        new_state_dict[k1] = np.concatenate([q, k, v], -1)

+

+    elif 'encoder' in k and 'self_attn' in k and 'out_proj' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        new_state_dict[k1] = params[k_para]

+

+    elif 'encoder' in k and 'norm3' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        new_state_dict[k1] = params[k_para.replace('norm3', 'norm2')]

+

+    elif 'encoder' in k and 'norm1' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        new_state_dict[k1] = params[k_para]

+

+    # Decoder self-attention: same fusion as in the encoder.

+    elif 'decoder' in k and 'self_attn' in k and 'qkv' in k and 'weight' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        q = params[k_para.replace('qkv', 'conv1')].transpose((1, 0, 2, 3))

+        k = params[k_para.replace('qkv', 'conv2')].transpose((1, 0, 2, 3))

+        v = params[k_para.replace('qkv', 'conv3')].transpose((1, 0, 2, 3))

+        new_state_dict[k1] = np.concatenate([q[:, :, 0, 0], k[:, :, 0, 0], v[:, :, 0, 0]], -1)

+

+    elif 'decoder' in k and 'self_attn' in k and 'qkv' in k and 'bias' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        q = params[k_para.replace('qkv', 'conv1')]

+        k = params[k_para.replace('qkv', 'conv2')]

+        v = params[k_para.replace('qkv', 'conv3')]

+        new_state_dict[k1] = np.concatenate([q, k, v], -1)

+

+    elif 'decoder' in k and 'self_attn' in k and 'out_proj' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        new_state_dict[k1] = params[k_para]

+

+    # Decoder cross-attention: the old name is multihead_attn; q and kv are stored separately.

+    elif 'decoder' in k and 'cross_attn' in k and 'q' in k and 'weight' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        k_para = k_para.replace('cross_attn', 'multihead_attn')

+        q = params[k_para.replace('q', 'conv1')].transpose((1, 0, 2, 3))

+        new_state_dict[k1] = q[:, :, 0, 0]

+

+    elif 'decoder' in k and 'cross_attn' in k and 'q' in k and 'bias' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        k_para = k_para.replace('cross_attn', 'multihead_attn')

+        q = params[k_para.replace('q', 'conv1')]

+        new_state_dict[k1] = q

+

+    elif 'decoder' in k and 'cross_attn' in k and 'kv' in k and 'weight' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        k_para = k_para.replace('cross_attn', 'multihead_attn')

+        k = params[k_para.replace('kv', 'conv2')].transpose((1, 0, 2, 3))

+        v = params[k_para.replace('kv', 'conv3')].transpose((1, 0, 2, 3))

+        new_state_dict[k1] = np.concatenate([k[:, :, 0, 0], v[:, :, 0, 0]], -1)

+

+    elif 'decoder' in k and 'cross_attn' in k and 'kv' in k and 'bias' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        k_para = k_para.replace('cross_attn', 'multihead_attn')

+        k = params[k_para.replace('kv', 'conv2')]

+        v = params[k_para.replace('kv', 'conv3')]

+        new_state_dict[k1] = np.concatenate([k, v], -1)

+

+    elif 'decoder' in k and 'cross_attn' in k and 'out_proj' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        k_para = k_para.replace('cross_attn', 'multihead_attn')

+        new_state_dict[k1] = params[k_para]

+

+    elif 'decoder' in k and 'norm' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        new_state_dict[k1] = params[k_para]

+

+    # Feed-forward layers: the old conv kernels become the new fc weights.

+    elif 'mlp' in k and 'weight' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        k_para = k_para.replace('fc', 'conv')

+        k_para = k_para.replace('mlp.', '')

+        w = params[k_para].transpose((1, 0, 2, 3))

+        new_state_dict[k1] = w[:, :, 0, 0]

+

+    elif 'mlp' in k and 'bias' in k:

+        k_para = k[:13] + 'layers.' + k[13:]

+        k_para = k_para.replace('fc', 'conv')

+        k_para = k_para.replace('mlp.', '')

+        w = params[k_para]

+        new_state_dict[k1] = w

+

+    else:

+        new_state_dict[k1] = params[k1]

+

+    # Report any parameter whose converted shape does not match the new model.

+    if list(new_state_dict[k1].shape) != list(v1.shape):

+        print(k1)

+

+# Final check: print missing keys (1) and shape mismatches (2).

+for k, v1 in state_dict.items():

+    if k not in new_state_dict.keys():

+        print(1, k)

+    elif list(new_state_dict[k].shape) != list(v1.shape):

+        print(2, k)

+

+model.set_state_dict(new_state_dict)

+paddle.save(model.state_dict(), 'nrtrnew_from_old_params.pdparams')

+

+```

+

+2. The new version has a clean code structure and improved inference speed compared with the old version.
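The weight branches in the script above all follow one pattern: the old parameters are indexed as 4-D kernels of shape `[out, in, 1, 1]` (presumably 1x1 convolutions), while the new model expects a single fused matrix of shape `[in, 3 * out]`. Below is a minimal NumPy sketch of that reshaping, using random stand-in arrays rather than real checkpoints. The function name `fuse_qkv` and the width `dim = 4` are illustrative assumptions.

```python
import numpy as np


def fuse_qkv(conv_q, conv_k, conv_v):
    """Fuse three kernels of shape [out, in, 1, 1] into one [in, 3 * out] matrix."""
    mats = [w.transpose((1, 0, 2, 3))[:, :, 0, 0] for w in (conv_q, conv_k, conv_v)]
    return np.concatenate(mats, axis=-1)


dim = 4  # illustrative width, not the real NRTR hidden size
rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((dim, dim, 1, 1)) for _ in range(3))
fused = fuse_qkv(q, k, v)
print(fused.shape)  # (4, 12)

# A 1x1 convolution at a single position equals a matrix product with the
# transposed kernel, so the fused weight reproduces the three old projections.
x = rng.standard_normal((1, dim))
expected = np.concatenate([x @ w[:, :, 0, 0].T for w in (q, k, v)], axis=-1)
assert np.allclose(x @ fused, expected)
```

The final assertion is the useful part: it checks that the converted weight computes the same projections as the old layout, which is a stronger guarantee than the shape comparison alone.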

+

## Citation

```bibtex

@article{Sheng2019NRTR,

title = {NRTR: A No-Recurrence Sequence-to-Sequence Model For Scene Text Recognition},

- author = {Fenfen Sheng and Zhineng Chen andBo Xu},

+ author = {Fenfen Sheng and Zhineng Chen and Bo Xu},

booktitle = {ICDAR},

year = {2019},

url = {http://arxiv.org/abs/1806.00926},

diff --git a/doc/doc_en/algorithm_rec_sar_en.md b/doc/doc_en/algorithm_rec_sar_en.md

index 8c1e6dbbfa5cc05da4d7423a535c6db74cf8f4c3..24b87c10c3b2839909392bf3de0e0c850112fcdc 100644

--- a/doc/doc_en/algorithm_rec_sar_en.md

+++ b/doc/doc_en/algorithm_rec_sar_en.md

@@ -31,13 +31,13 @@ Note:In addition to using the two text recognition datasets MJSynth and SynthTex

## 2. Environment

-Please refer to ["Environment Preparation"](./environment.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone.md) to clone the project code.

+Please refer to ["Environment Preparation"](./environment_en.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone_en.md) to clone the project code.

## 3. Model Training / Evaluation / Prediction

-Please refer to [Text Recognition Tutorial](./recognition.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

+Please refer to [Text Recognition Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

Training:

diff --git a/doc/doc_en/algorithm_rec_seed_en.md b/doc/doc_en/algorithm_rec_seed_en.md

index 21679f42fd6302228804db49d731f9b69ec692b2..f8d7ae6d3f34ab8a4f510c88002b22dbce7a10e8 100644

--- a/doc/doc_en/algorithm_rec_seed_en.md

+++ b/doc/doc_en/algorithm_rec_seed_en.md

@@ -31,13 +31,13 @@ Using MJSynth and SynthText two text recognition datasets for training, and eval

## 2. Environment

-Please refer to ["Environment Preparation"](./environment.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone.md) to clone the project code.

+Please refer to ["Environment Preparation"](./environment_en.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone_en.md) to clone the project code.

## 3. Model Training / Evaluation / Prediction

-Please refer to [Text Recognition Tutorial](./recognition.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

+Please refer to [Text Recognition Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

Training:

diff --git a/doc/doc_en/algorithm_rec_srn_en.md b/doc/doc_en/algorithm_rec_srn_en.md

index c022a81f9e5797c531c79de7e793d44d9a22552c..1d7fc07dc29e0de021165fc5656cbca704b45284 100644

--- a/doc/doc_en/algorithm_rec_srn_en.md

+++ b/doc/doc_en/algorithm_rec_srn_en.md

@@ -30,13 +30,13 @@ Using MJSynth and SynthText two text recognition datasets for training, and eval

## 2. Environment

-Please refer to ["Environment Preparation"](./environment.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone.md) to clone the project code.

+Please refer to ["Environment Preparation"](./environment_en.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone_en.md) to clone the project code.

## 3. Model Training / Evaluation / Prediction

-Please refer to [Text Recognition Tutorial](./recognition.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

+Please refer to [Text Recognition Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

Training:

diff --git a/doc/doc_en/algorithm_rec_starnet.md b/doc/doc_en/algorithm_rec_starnet.md

new file mode 100644

index 0000000000000000000000000000000000000000..dbb53a9c737c16fa249483fa97b0b49cf25b2137

--- /dev/null

+++ b/doc/doc_en/algorithm_rec_starnet.md

@@ -0,0 +1,139 @@

+# STAR-Net

+

+- [1. Introduction](#1)

+- [2. Environment](#2)

+- [3. Model Training / Evaluation / Prediction](#3)

+ - [3.1 Training](#3-1)

+ - [3.2 Evaluation](#3-2)

+ - [3.3 Prediction](#3-3)

+- [4. Inference and Deployment](#4)

+ - [4.1 Python Inference](#4-1)

+ - [4.2 C++ Inference](#4-2)

+ - [4.3 Serving](#4-3)

+ - [4.4 More](#4-4)

+- [5. FAQ](#5)

+

+

+## 1. Introduction

+

+Paper information:

+> [STAR-Net: a spatial attention residue network for scene text recognition.](http://www.bmva.org/bmvc/2016/papers/paper043/paper043.pdf)

+> Wei Liu, Chaofeng Chen, Kwan-Yee K. Wong, Zhizhong Su and Junyu Han.

+> BMVC, pages 43.1-43.13, 2016

+

+Following the [DTRB](https://arxiv.org/abs/1904.01906) text recognition training and evaluation process, the models are trained on the MJSynth and SynthText text recognition datasets and evaluated on the IIIT, SVT, IC03, IC13, IC15, SVTP, and CUTE datasets. The algorithm reproduction results are as follows:

+

+|Models|Backbone Networks|Avg Accuracy|Configuration Files|Download Links|

+| --- | --- | --- | --- | --- |

+|StarNet|Resnet34_vd|84.44%|[configs/rec/rec_r34_vd_tps_bilstm_ctc.yml](../../configs/rec/rec_r34_vd_tps_bilstm_ctc.yml)|[trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/rec_r34_vd_tps_bilstm_ctc_v2.0_train.tar)|

+|StarNet|MobileNetV3|81.42%|[configs/rec/rec_mv3_tps_bilstm_ctc.yml](../../configs/rec/rec_mv3_tps_bilstm_ctc.yml)|[trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/rec_mv3_tps_bilstm_ctc_v2.0_train.tar)|

+

+

+

+## 2. Environment

+Please refer to [Operating Environment Preparation](./environment_en.md) to configure the PaddleOCR operating environment, and refer to [Project Clone](./clone_en.md) to clone the project code.

+

+

+## 3. Model Training / Evaluation / Prediction

+

+Please refer to [Text Recognition Training Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**. Take the backbone network based on Resnet34_vd as an example:

+

+

+### 3.1 Training

+After the data preparation is complete, the training can be started. The training command is as follows:

+

+````

+#Single card training (long training period, not recommended)

+python3 tools/train.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml

+

+#Multi-card training, specify the card number through the --gpus parameter

+python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml

+ ````

+

+

+### 3.2 Evaluation

+

+````

+# GPU evaluation, Global.pretrained_model is the model to be evaluated

+python3 -m paddle.distributed.launch --gpus '0' tools/eval.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml -o Global.pretrained_model={path/to/weights}/best_accuracy

+ ````

+

+

+### 3.3 Prediction

+

+````

+# The configuration file used for prediction must match the training

+python3 tools/infer_rec.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml -o Global.pretrained_model={path/to/weights}/best_accuracy Global.infer_img=doc/imgs_words/en/word_1.png

+ ````

+

+

+## 4. Inference and Deployment

+

+

+### 4.1 Python Inference

+First, convert the model saved during STAR-Net text recognition training into an inference model. Taking the model trained on the MJSynth and SynthText text recognition datasets with the Resnet34_vd backbone as an example ([model download address](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/rec_r34_vd_tps_bilstm_ctc_v2.0_train.tar)), it can be converted using the following command:

+

+```shell

+python3 tools/export_model.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml -o Global.pretrained_model=./rec_r34_vd_tps_bilstm_ctc_v2.0_train/best_accuracy Global.save_inference_dir=./inference/rec_starnet

+ ````

+

+For STAR-Net text recognition model inference, you can execute the following command:

+

+```shell

+python3 tools/infer/predict_rec.py --image_dir="./doc/imgs_words_en/word_336.png" --rec_model_dir="./inference/rec_starnet/" --rec_image_shape="3, 32, 100" --rec_char_dict_path="./ppocr/utils/ic15_dict.txt"

+ ````

+

+

+

+The inference results are as follows:

+

+

+```bash

+Predicts of ./doc/imgs_words_en/word_336.png:('super', 0.9999073)

+```

+

+**Attention** Since the above model refers to the [DTRB](https://arxiv.org/abs/1904.01906) text recognition training and evaluation process, it is different from the ultra-lightweight Chinese recognition model training in two aspects:

+

+- The image resolutions used during training are different. The image resolutions used for training the above models are [3, 32, 100], while for Chinese model training, in order to ensure the recognition effect of long texts, the image resolutions used during training are [ 3, 32, 320]. The default shape parameter of the predictive inference program is the image resolution used for training Chinese, i.e. [3, 32, 320]. Therefore, when inferring the above English model here, it is necessary to set the shape of the recognized image through the parameter rec_image_shape.

+

+- The character lists are different. The experiments in the DTRB paper cover only the 26 lowercase English letters and 10 digits, 36 characters in total. All uppercase characters are converted to lowercase, and characters outside this set are ignored and treated as spaces. As a result, no character dictionary file is passed in during training; instead, the dictionary is generated by the code below. During inference, the parameter `rec_char_dict_path` therefore needs to be set to the English dictionary "./ppocr/utils/ic15_dict.txt".

+

+```

+self.character_str = "0123456789abcdefghijklmnopqrstuvwxyz"

+dict_character = list(self.character_str)

+```
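The character handling described in the second bullet above can be sketched in Python. This is a minimal illustration rather than PaddleOCR's own implementation: `CHARSET`, `normalize_label`, and `encode` are hypothetical names, and silently dropping out-of-set characters is an assumption about how "ignored" is applied.

```python
from typing import List

# Hypothetical sketch of the 36-character label handling (10 digits + 26 letters).
CHARSET = "0123456789abcdefghijklmnopqrstuvwxyz"
CHAR_TO_INDEX = {ch: i for i, ch in enumerate(CHARSET)}


def normalize_label(text: str) -> str:
    """Lowercase the text and drop characters outside the 36-character set."""
    return "".join(ch for ch in text.lower() if ch in CHAR_TO_INDEX)


def encode(text: str) -> List[int]:
    """Map a label to dictionary indices after normalization."""
    return [CHAR_TO_INDEX[ch] for ch in normalize_label(text)]


if __name__ == "__main__":
    print(normalize_label("Super-8!"))  # super8
    print(encode("a0Z"))  # [10, 0, 35]
```

Because uppercase letters collapse to lowercase and punctuation is discarded, a model trained this way can only ever emit the 36 characters above, which is why the matching dictionary has to be supplied at inference time.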

+

+

+### 4.2 C++ Inference

+

+After preparing the inference model, refer to the [cpp infer](../../deploy/cpp_infer/) tutorial to operate.

+

+

+### 4.3 Serving

+

+After preparing the inference model, refer to the [pdserving](../../deploy/pdserving/) tutorial for Serving deployment, including two modes: Python Serving and C++ Serving.

+

+

+### 4.4 More

+

+The STAR-Net model also supports the following inference deployment methods:

+

+- Paddle2ONNX Inference: After preparing the inference model, refer to the [paddle2onnx](../../deploy/paddle2onnx/) tutorial.

+

+

+## 5. FAQ

+

+## Citation

+

+```bibtex

+@inproceedings{liu2016star,

+ title={STAR-Net: a spatial attention residue network for scene text recognition},

+ author={Liu, Wei and Chen, Chaofeng and Wong, Kwan-Yee K and Su, Zhizhong and Han, Junyu},

+ booktitle={BMVC},

+ volume={2},

+ pages={7},

+ year={2016}

+}

+```

+

+

diff --git a/doc/doc_en/algorithm_rec_svtr_en.md b/doc/doc_en/algorithm_rec_svtr_en.md

index 2e7deb4c077ce508773c4789e2e76bdda7dfe8c8..37cd35f35a2025cbb55ff85fe27b50e5d6e556aa 100644

--- a/doc/doc_en/algorithm_rec_svtr_en.md

+++ b/doc/doc_en/algorithm_rec_svtr_en.md

@@ -34,7 +34,7 @@ The accuracy (%) and model files of SVTR on the public dataset of scene text rec

## 2. Environment

-Please refer to ["Environment Preparation"](./environment.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone.md) to clone the project code.

+Please refer to ["Environment Preparation"](./environment_en.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone_en.md) to clone the project code.

#### Dataset Preparation

@@ -44,7 +44,7 @@ Please refer to ["Environment Preparation"](./environment.md) to configure the P

## 3. Model Training / Evaluation / Prediction

-Please refer to [Text Recognition Tutorial](./recognition.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

+Please refer to [Text Recognition Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

Training:

@@ -88,7 +88,6 @@ python3 tools/export_model.py -c configs/rec/rec_svtrnet.yml -o Global.pretraine

**Note:**

- If you are training the model on your own dataset and have modified the dictionary file, please pay attention to modify the `character_dict_path` in the configuration file to the modified dictionary file.

-- If you modified the input size during training, please modify the `infer_shape` corresponding to SVTR in the `tools/export_model.py` file.

After the conversion is successful, there are three files in the directory:

```

diff --git a/doc/doc_en/algorithm_rec_vitstr_en.md b/doc/doc_en/algorithm_rec_vitstr_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..a6f9e2f15df69e7949a4b9713274d9b83ff98f60

--- /dev/null

+++ b/doc/doc_en/algorithm_rec_vitstr_en.md

@@ -0,0 +1,134 @@

+# ViTSTR

+

+- [1. Introduction](#1)

+- [2. Environment](#2)

+- [3. Model Training / Evaluation / Prediction](#3)

+ - [3.1 Training](#3-1)

+ - [3.2 Evaluation](#3-2)

+ - [3.3 Prediction](#3-3)

+- [4. Inference and Deployment](#4)

+ - [4.1 Python Inference](#4-1)

+ - [4.2 C++ Inference](#4-2)

+ - [4.3 Serving](#4-3)

+ - [4.4 More](#4-4)

+- [5. FAQ](#5)

+

+

+## 1. Introduction

+

+Paper:

+> [Vision Transformer for Fast and Efficient Scene Text Recognition](https://arxiv.org/abs/2105.08582)

+> Rowel Atienza

+> ICDAR, 2021

+

+The model is trained on the MJSynth and SynthText text recognition datasets and evaluated on the IIIT, SVT, IC03, IC13, IC15, SVTP, and CUTE datasets. The reproduced results are as follows:

+

+|Model|Backbone|config|Acc|Download link|

+| --- | --- | --- | --- | --- |

+|ViTSTR|ViTSTR|[rec_vitstr_none_ce.yml](../../configs/rec/rec_vitstr_none_ce.yml)|79.82%|[trained model](https://paddleocr.bj.bcebos.com/rec_vitstr_none_none_train.tar)|

+

+

+## 2. Environment

+Please refer to ["Environment Preparation"](./environment_en.md) to configure the PaddleOCR environment, and refer to ["Project Clone"](./clone_en.md) to clone the project code.

+

+

+

+## 3. Model Training / Evaluation / Prediction

+

+Please refer to [Text Recognition Tutorial](./recognition_en.md). PaddleOCR modularizes the code, and training different recognition models only requires **changing the configuration file**.

+

+Training:

+

+Specifically, after the data preparation is completed, the training can be started. The training command is as follows:

+

+```

+# Single-GPU training (long training period, not recommended)

+python3 tools/train.py -c configs/rec/rec_vitstr_none_ce.yml

+

+# Multi-GPU training; specify the GPU IDs through the --gpus parameter

+python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/rec/rec_vitstr_none_ce.yml

+```

+

+Evaluation:

+

+```

+# GPU evaluation

+python3 -m paddle.distributed.launch --gpus '0' tools/eval.py -c configs/rec/rec_vitstr_none_ce.yml -o Global.pretrained_model={path/to/weights}/best_accuracy

+```

+

+Prediction:

+

+```

+# The configuration file used for prediction must match the training

+python3 tools/infer_rec.py -c configs/rec/rec_vitstr_none_ce.yml -o Global.infer_img='./doc/imgs_words_en/word_10.png' Global.pretrained_model=./rec_vitstr_none_ce_train/best_accuracy

+```

+

+

+## 4. Inference and Deployment

+

+

+### 4.1 Python Inference

+First, convert the model saved during ViTSTR text recognition training into an inference model ([model download link](https://paddleocr.bj.bcebos.com/rec_vitstr_none_none_train.tar)). The following command performs the conversion:

+

+```

+python3 tools/export_model.py -c configs/rec/rec_vitstr_none_ce.yml -o Global.pretrained_model=./rec_vitstr_none_ce_train/best_accuracy Global.save_inference_dir=./inference/rec_vitstr

+```

+

+**Note:**

+- If you trained the model on your own dataset and modified the dictionary file, make sure `character_dict_path` in the configuration file points to the modified dictionary file.

+- If you modified the input size during training, update the `infer_shape` corresponding to ViTSTR in the `tools/export_model.py` file.

+

+After the conversion is successful, there are three files in the directory:

+```

+/inference/rec_vitstr/

+ ├── inference.pdiparams

+ ├── inference.pdiparams.info

+ └── inference.pdmodel

+```
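A quick way to confirm that the export produced the three files listed above is a small check like the following. This is only a sketch: `missing_inference_files` is a hypothetical helper, not part of PaddleOCR, and the directory is assumed to be the one passed as `Global.save_inference_dir` in the export command.

```python
from pathlib import Path
from typing import List

# File names taken from the directory listing above.
EXPECTED_FILES = (
    "inference.pdiparams",
    "inference.pdiparams.info",
    "inference.pdmodel",
)


def missing_inference_files(model_dir: str) -> List[str]:
    """Return the expected inference files that are absent from model_dir."""
    root = Path(model_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_inference_files("./inference/rec_vitstr")
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All expected inference files are present.")
```

An empty list means the directory is complete and can be passed to `--rec_model_dir`.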

+

+

+For ViTSTR text recognition model inference, the following commands can be executed:

+

+```

+python3 tools/infer/predict_rec.py --image_dir='./doc/imgs_words_en/word_10.png' --rec_model_dir='./inference/rec_vitstr/' --rec_algorithm='ViTSTR' --rec_image_shape='1,224,224' --rec_char_dict_path='./ppocr/utils/EN_symbol_dict.txt'

+```

+

+

+

+After the command runs, the prediction result for the image above (recognized text and score) is printed to the screen. An example is as follows:

+```shell

+Predicts of ./doc/imgs_words_en/word_10.png:('pain', 0.9998350143432617)

+```
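If the printed result needs to be consumed by another script, a line in the format shown above can be parsed as follows. This is a hedged sketch: `parse_prediction` is a hypothetical helper, and it assumes the output keeps the `Predicts of <path>:(<text>, <score>)` shape from the example; real logs may carry a prefix, which the pattern tolerates.

```python
import ast
import re
from typing import Tuple

# Matches "Predicts of <path>:(<text>, <score>)", optionally preceded by a log prefix.
_PATTERN = re.compile(r"Predicts of (?P<path>.+?):(?P<result>\(.*\))\s*$")


def parse_prediction(line: str) -> Tuple[str, str, float]:
    """Split one output line into (image path, recognized text, score)."""
    match = _PATTERN.search(line.strip())
    if match is None:
        raise ValueError("unrecognized prediction line: %r" % line)
    # The result part is a Python tuple literal, so literal_eval parses it safely.
    text, score = ast.literal_eval(match.group("result"))
    return match.group("path"), text, float(score)


if __name__ == "__main__":
    sample = "Predicts of ./doc/imgs_words_en/word_10.png:('pain', 0.9998350143432617)"
    print(parse_prediction(sample))
```

Using `ast.literal_eval` instead of `eval` keeps the parser from executing arbitrary code if the log line is malformed.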

+

+

+### 4.2 C++ Inference

+

+Not supported

+

+

+### 4.3 Serving

+

+Not supported

+

+

+### 4.4 More

+

+Not supported

+

+

+## 5. FAQ

+

+1. The `ViTSTR` paper initializes training with weights pre-trained on ImageNet1k. We did not use pre-trained weights in training, and the final accuracy was unchanged or even improved.

+

+## Citation

+

+```bibtex

+@inproceedings{Atienza2021ViTSTR,

+ title = {Vision Transformer for Fast and Efficient Scene Text Recognition},

+ author = {Rowel Atienza},

+ booktitle = {ICDAR},

+ year = {2021},

+ url = {https://arxiv.org/abs/2105.08582}

+}

+```

diff --git a/doc/doc_en/detection_en.md b/doc/doc_en/detection_en.md

index 76e0f8509b92dfaae62dce7ba2b4b73d39da1600..f85bf585cb66332d90de8d66ed315cb04ece7636 100644

--- a/doc/doc_en/detection_en.md

+++ b/doc/doc_en/detection_en.md

@@ -159,7 +159,7 @@ python3 -m paddle.distributed.launch --ips="xx.xx.xx.xx,xx.xx.xx.xx" --gpus '0,1

-o Global.pretrained_model=./pretrain_models/MobileNetV3_large_x0_5_pretrained

```

-**Note:** When using multi-machine and multi-gpu training, you need to replace the ips value in the above command with the address of your machine, and the machines need to be able to ping each other. In addition, training needs to be launched separately on multiple machines. The command to view the ip address of the machine is `ifconfig`.

+**Note:** (1) When using multi-machine, multi-GPU training, replace the `ips` value in the above command with the IP addresses of your machines; the machines must be able to ping each other. (2) Training must be launched separately on each machine. The command to view a machine's IP address is `ifconfig`. (3) For more details about the distributed training speedup ratio, please refer to [Distributed Training Tutorial](./distributed_training_en.md).

### 2.6 Training with knowledge distillation

diff --git a/doc/doc_en/distributed_training.md b/doc/doc_en/distributed_training_en.md

similarity index 70%

rename from doc/doc_en/distributed_training.md

rename to doc/doc_en/distributed_training_en.md

index 2822ee5e4ea52720a458e4060d8a09be7b98846b..5a219ed2b494d6239096ff634dfdc702c4be9419 100644

--- a/doc/doc_en/distributed_training.md

+++ b/doc/doc_en/distributed_training_en.md

@@ -40,11 +40,17 @@ python3 -m paddle.distributed.launch \

## Performance comparison

-* Based on 26W public recognition dataset (LSVT, rctw, mtwi), training on single 8-card P40 and dual 8-card P40, the final time consumption is as follows.

+* On two machines with 8 P40 GPUs each, the training time and speedup ratio for the public recognition dataset (LSVT, RCTW, MTWI), which contains 260k images, are as follows.

-| Model | Config file | Number of machines | Number of GPUs per machine | Training time | Recognition acc | Speedup ratio |

-| :-------: | :------------: | :----------------: | :----------------------------: | :------------------: | :--------------: | :-----------: |

-| CRNN | configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml | 1 | 8 | 60h | 66.7% | - |

-| CRNN | configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml | 2 | 8 | 40h | 67.0% | 150% |

-It can be seen that the training time is shortened from 60h to 40h, the speedup ratio can reach 150% (60h / 40h), and the efficiency is 75% (60h / (40h * 2)).

+| Model | Config file | Recognition acc | Training time (one 8-GPU machine) | Training time (two 8-GPU machines) | Speedup ratio |

+|------|-----|--------|--------|--------|-----|

+| CRNN | [rec_chinese_lite_train_v2.0.yml](../../configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml) | 67.0% | 2.50d | 1.67d | **1.5** |

+

+

+* On four machines with 8 V100 GPUs each, the training time and speedup ratio for the full dataset are as follows.

+

+

+| Model | Config file | Recognition acc | Training time (one 8-GPU machine) | Training time (four 8-GPU machines) | Speedup ratio |

+|------|-----|--------|--------|--------|-----|

+| SVTR | [ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml) | 74.0% | 10d | 2.84d | **3.5** |
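The speedup ratios in the two tables above follow from dividing the single-machine training time by the multi-machine training time. The sketch below reproduces them; the function names are hypothetical, and the efficiency figure (speedup divided by the number of machines) is included as a common companion metric rather than a number reported in the tables.

```python
def speedup_ratio(single_machine_days: float, multi_machine_days: float) -> float:
    """Speedup = training time on one machine / training time on N machines."""
    return single_machine_days / multi_machine_days


def scaling_efficiency(single_machine_days: float, multi_machine_days: float,
                       num_machines: int) -> float:
    """Efficiency = speedup / number of machines (1.0 would be perfect scaling)."""
    return speedup_ratio(single_machine_days, multi_machine_days) / num_machines


if __name__ == "__main__":
    # Training times in days, taken from the tables above.
    for name, single, multi, machines in [("CRNN", 2.50, 1.67, 2), ("SVTR", 10.0, 2.84, 4)]:
        ratio = speedup_ratio(single, multi)
        efficiency = scaling_efficiency(single, multi, machines)
        print(f"{name}: speedup {ratio:.1f}, efficiency {efficiency:.0%}")
```

With the table values this gives a speedup of about 1.5 for CRNN on two machines and about 3.5 for SVTR on four machines.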

diff --git a/doc/doc_en/knowledge_distillation_en.md b/doc/doc_en/knowledge_distillation_en.md

index bd36907c98c6d556fe1dea85712ece0e717fe426..52725e5c0586b7f7b3e8fdc86d0c24ea38030d53 100755

--- a/doc/doc_en/knowledge_distillation_en.md

+++ b/doc/doc_en/knowledge_distillation_en.md

@@ -438,10 +438,10 @@ Architecture:

```

If DML is used, that is, the method of two small models learning from each other, the Teacher network structure in the above configuration file needs to be set to the same configuration as the Student model.

-Refer to the configuration file for details. [ch_PP-OCRv3_det_dml.yml](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.4/configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_dml.yml)

+Refer to the configuration file for details. [ch_PP-OCRv3_det_dml.yml](../../configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_dml.yml)

-The following describes the configuration file parameters [ch_PP-OCRv3_det_cml.yml](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.4/configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml):

+The following describes the configuration file parameters [ch_PP-OCRv3_det_cml.yml](../../configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml):

```

Architecture:

diff --git a/doc/doc_en/models_list_en.md b/doc/doc_en/models_list_en.md

index 8e8c1f2fe11bcd0748d556d34fd184fed4b3a86f..c52f71dfe4124302b8cb308980a6228a89589bd6 100644

--- a/doc/doc_en/models_list_en.md

+++ b/doc/doc_en/models_list_en.md

@@ -20,7 +20,7 @@ The downloadable models provided by PaddleOCR include `inference model`, `traine

|model type|model format|description|

|--- | --- | --- |

-|inference model|inference.pdmodel、inference.pdiparams|Used for inference based on Paddle inference engine,[detail](./inference_en.md)|

+|inference model|inference.pdmodel、inference.pdiparams|Used for inference based on Paddle inference engine,[detail](./inference_ppocr_en.md)|

|trained model, pre-trained model|\*.pdparams、\*.pdopt、\*.states |The checkpoints model saved in the training process, which stores the parameters of the model, mostly used for model evaluation and continuous training.|

|nb model|\*.nb| Model optimized by Paddle-Lite, which is suitable for mobile-side deployment scenarios (Paddle-Lite is needed for nb model deployment). |

@@ -37,7 +37,7 @@ Relationship of the above models is as follows.

|model name|description|config|model size|download|

| --- | --- | --- | --- | --- |

-|ch_PP-OCRv3_det_slim| [New] slim quantization with distillation lightweight model, supporting Chinese, English, multilingual text detection |[ch_PP-OCRv3_det_cml.yml](../../configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml)| 1.1M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_slim_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/ch/ch_PP-OCRv3_det_slim_distill_train.tar) / [nb model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_slim_infer.nb)|

+|ch_PP-OCRv3_det_slim| [New] slim quantization with distillation lightweight model, supporting Chinese, English, multilingual text detection |[ch_PP-OCRv3_det_cml.yml](../../configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml)| 1.1M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_slim_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_slim_distill_train.tar) / [nb model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_slim_infer.nb)|

|ch_PP-OCRv3_det| [New] Original lightweight model, supporting Chinese, English, multilingual text detection |[ch_PP-OCRv3_det_cml.yml](../../configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml)| 3.8M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar)|

|ch_PP-OCRv2_det_slim| [New] slim quantization with distillation lightweight model, supporting Chinese, English, multilingual text detection|[ch_PP-OCRv2_det_cml.yml](../../configs/det/ch_PP-OCRv2/ch_PP-OCRv2_det_cml.yml)| 3M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_slim_quant_infer.tar)|

|ch_PP-OCRv2_det| [New] Original lightweight model, supporting Chinese, English, multilingual text detection|[ch_PP-OCRv2_det_cml.yml](../../configs/det/ch_PP-OCRv2/ch_PP-OCRv2_det_cml.yml)|3M|[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_distill_train.tar)|

@@ -75,7 +75,7 @@ Relationship of the above models is as follows.

|model name|description|config|model size|download|

| --- | --- | --- | --- | --- |

-|ch_PP-OCRv3_rec_slim | [New] Slim qunatization with distillation lightweight model, supporting Chinese, English text recognition |[ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml)| 4.9M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_slim_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/ch/ch_PP-OCRv3_rec_slim_train.tar) / [nb model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_slim_infer.nb) |

+|ch_PP-OCRv3_rec_slim | [New] Slim quantization with distillation lightweight model, supporting Chinese, English text recognition |[ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml)| 4.9M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_slim_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_slim_train.tar) / [nb model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_slim_infer.nb) |

|ch_PP-OCRv3_rec| [New] Original lightweight model, supporting Chinese, English, multilingual text recognition |[ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml)| 12.4M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar) |

|ch_PP-OCRv2_rec_slim| Slim qunatization with distillation lightweight model, supporting Chinese, English text recognition|[ch_PP-OCRv2_rec.yml](../../configs/rec/ch_PP-OCRv2/ch_PP-OCRv2_rec.yml)| 9M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_slim_quant_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_slim_quant_train.tar) |

|ch_PP-OCRv2_rec| Original lightweight model, supporting Chinese, English, multilingual text recognition |[ch_PP-OCRv2_rec_distillation.yml](../../configs/rec/ch_PP-OCRv2/ch_PP-OCRv2_rec_distillation.yml)|8.5M|[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_train.tar) |

@@ -91,7 +91,7 @@ Relationship of the above models is as follows.

|model name|description|config|model size|download|

| --- | --- | --- | --- | --- |

-|en_PP-OCRv3_rec_slim | [New] Slim qunatization with distillation lightweight model, supporting english, English text recognition |[en_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/en_PP-OCRv3_rec.yml)| 3.2M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/PP-OCRv3_rec_slim_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_slim_train.tar) / [nb model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_slim_infer.nb) |

+|en_PP-OCRv3_rec_slim | [New] Slim quantization with distillation lightweight model, supporting English text recognition |[en_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/en_PP-OCRv3_rec.yml)| 3.2M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_slim_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_slim_train.tar) / [nb model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_slim_infer.nb) |

|en_PP-OCRv3_rec| [New] Original lightweight model, supporting english, English, multilingual text recognition |[en_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/en_PP-OCRv3_rec.yml)| 9.6M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_train.tar) |

|en_number_mobile_slim_v2.0_rec|Slim pruned and quantized lightweight model, supporting English and number recognition|[rec_en_number_lite_train.yml](../../configs/rec/multi_language/rec_en_number_lite_train.yml)| 2.7M | [inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/en_number_mobile_v2.0_rec_slim_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/en_number_mobile_v2.0_rec_slim_train.tar) |

|en_number_mobile_v2.0_rec|Original lightweight model, supporting English and number recognition|[rec_en_number_lite_train.yml](../../configs/rec/multi_language/rec_en_number_lite_train.yml)|2.6M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/en_number_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/en_number_mobile_v2.0_rec_train.tar) |

@@ -108,7 +108,7 @@ Relationship of the above models is as follows.

| ka_PP-OCRv3_rec | ppocr/utils/dict/ka_dict.txt | Lightweight model for Kannada recognition |[ka_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/multi_language/ka_PP-OCRv3_rec.yml)|9.9M|[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/ka_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/ka_PP-OCRv3_rec_train.tar) |

| ta_PP-OCRv3_rec | ppocr/utils/dict/ta_dict.txt |Lightweight model for Tamil recognition|[ta_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/multi_language/ta_PP-OCRv3_rec.yml)|9.6M|[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/ta_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/ta_PP-OCRv3_rec_train.tar) |

| latin_PP-OCRv3_rec | ppocr/utils/dict/latin_dict.txt | Lightweight model for latin recognition | [latin_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/multi_language/latin_PP-OCRv3_rec.yml) |9.7M|[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/latin_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/latin_PP-OCRv3_rec_train.tar) |

-| arabic_PP-OCRv3_rec | ppocr/utils/dict/arabic_dict.txt | Lightweight model for arabic recognition | [arabic_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/multi_language/rec_arabic_lite_train.yml) |9.6M|[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/arabic_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/arabic_PP-OCRv3_rec_train.tar) |

+| arabic_PP-OCRv3_rec | ppocr/utils/dict/arabic_dict.txt | Lightweight model for arabic recognition | [arabic_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/multi_language/arabic_PP-OCRv3_rec.yml) |9.6M|[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/arabic_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/arabic_PP-OCRv3_rec_train.tar) |

| cyrillic_PP-OCRv3_rec | ppocr/utils/dict/cyrillic_dict.txt | Lightweight model for cyrillic recognition | [cyrillic_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/multi_language/cyrillic_PP-OCRv3_rec.yml) |9.6M|[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/cyrillic_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/cyrillic_PP-OCRv3_rec_train.tar) |

| devanagari_PP-OCRv3_rec | ppocr/utils/dict/devanagari_dict.txt | Lightweight model for devanagari recognition | [devanagari_PP-OCRv3_rec.yml](../../configs/rec/PP-OCRv3/multi_language/devanagari_PP-OCRv3_rec.yml) |9.9M|[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/devanagari_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/multilingual/devanagari_PP-OCRv3_rec_train.tar) |

diff --git a/doc/doc_en/multi_languages_en.md b/doc/doc_en/multi_languages_en.md

index 4696a3e842242517d19bcac7d7bdef3b4c233b12..d9cb180f706eebdba4727f7909499487794545b9 100644

--- a/doc/doc_en/multi_languages_en.md

+++ b/doc/doc_en/multi_languages_en.md

@@ -187,10 +187,10 @@ In addition to installing the whl package for quick forecasting,

PPOCR also provides a variety of forecasting deployment methods.

If necessary, you can read related documents:

-- [Python Inference](./inference_en.md)

-- [C++ Inference](../../deploy/cpp_infer/readme_en.md)

+- [Python Inference](./inference_ppocr_en.md)

+- [C++ Inference](../../deploy/cpp_infer/readme.md)

- [Serving](../../deploy/hubserving/readme_en.md)

-- [Mobile](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/deploy/lite/readme_en.md)

+- [Mobile](../../deploy/lite/readme.md)

- [Benchmark](./benchmark_en.md)

diff --git a/doc/doc_en/ppocr_introduction_en.md b/doc/doc_en/ppocr_introduction_en.md

index 8fe6bc683ac69bdff0e3b4297f2eaa95b934fa17..d28ccb3529a46bdf0d3fd1d1c81f14137d10f2ea 100644

--- a/doc/doc_en/ppocr_introduction_en.md

+++ b/doc/doc_en/ppocr_introduction_en.md

@@ -29,16 +29,16 @@ PP-OCR pipeline is as follows:

PP-OCR system is in continuous optimization. At present, PP-OCR and PP-OCRv2 have been released:

-PP-OCR adopts 19 effective strategies from 8 aspects including backbone network selection and adjustment, prediction head design, data augmentation, learning rate transformation strategy, regularization parameter selection, pre-training model use, and automatic model tailoring and quantization to optimize and slim down the models of each module (as shown in the green box above). The final results are an ultra-lightweight Chinese and English OCR model with an overall size of 3.5M and a 2.8M English digital OCR model. For more details, please refer to the PP-OCR technical article (https://arxiv.org/abs/2009.09941).

+PP-OCR adopts 19 effective strategies from 8 aspects including backbone network selection and adjustment, prediction head design, data augmentation, learning rate transformation strategy, regularization parameter selection, pre-training model use, and automatic model tailoring and quantization to optimize and slim down the models of each module (as shown in the green box above). The final results are an ultra-lightweight Chinese and English OCR model with an overall size of 3.5M and a 2.8M English digital OCR model. For more details, please refer to [PP-OCR technical report](https://arxiv.org/abs/2009.09941).

#### PP-OCRv2

-On the basis of PP-OCR, PP-OCRv2 is further optimized in five aspects. The detection model adopts CML(Collaborative Mutual Learning) knowledge distillation strategy and CopyPaste data expansion strategy. The recognition model adopts LCNet lightweight backbone network, U-DML knowledge distillation strategy and enhanced CTC loss function improvement (as shown in the red box above), which further improves the inference speed and prediction effect. For more details, please refer to the technical report of PP-OCRv2 (https://arxiv.org/abs/2109.03144).

+Building on PP-OCR, PP-OCRv2 is further optimized in five aspects. The detection model adopts the CML (Collaborative Mutual Learning) knowledge distillation strategy and the CopyPaste data augmentation strategy. The recognition model adopts the lightweight LCNet backbone network, the U-DML knowledge distillation strategy, and an enhanced CTC loss function (as shown in the red box above), which further improve inference speed and prediction quality. For more details, please refer to the [PP-OCRv2 technical report](https://arxiv.org/abs/2109.03144).

#### PP-OCRv3

PP-OCRv3 upgraded the detection model and recognition model in 9 aspects based on PP-OCRv2:

- PP-OCRv3 detector upgrades the CML(Collaborative Mutual Learning) text detection strategy proposed in PP-OCRv2, and further optimizes the effect of teacher model and student model respectively. In the optimization of teacher model, a pan module with large receptive field named LK-PAN is proposed and the DML distillation strategy is adopted; In the optimization of student model, a FPN module with residual attention mechanism named RSE-FPN is proposed.

-- PP-OCRv3 recognizer is optimized based on text recognition algorithm [SVTR](https://arxiv.org/abs/2205.00159). SVTR no longer adopts RNN by introducing transformers structure, which can mine the context information of text line image more effectively, so as to improve the ability of text recognition. PP-OCRv3 adopts lightweight text recognition network SVTR_LCNet, guided training of CTC loss by attention loss, data augmentation strategy TextConAug, better pre-trained model by self-supervised TextRotNet, UDML(Unified Deep Mutual Learning), and UIM (Unlabeled Images Mining) to accelerate the model and improve the effect.

+- The PP-OCRv3 recognizer is optimized based on the text recognition algorithm [SVTR](https://arxiv.org/abs/2205.00159). SVTR drops the RNN and introduces a Transformer structure, which mines the context information of text line images more effectively and thereby improves text recognition. PP-OCRv3 adopts the lightweight text recognition network SVTR_LCNet, attention-guided training of CTC, the data augmentation strategy TextConAug, a better pre-trained model from self-supervised TextRotNet, UDML (Unified Deep Mutual Learning), and UIM (Unlabeled Images Mining) to accelerate the model and improve its accuracy.

PP-OCRv3 pipeline is as follows:

@@ -46,7 +46,7 @@ PP-OCRv3 pipeline is as follows:

-For more details, please refer to [PP-OCRv3 technical report](./PP-OCRv3_introduction_en.md).

+For more details, please refer to [PP-OCRv3 technical report](https://arxiv.org/abs/2206.03001v2).

## 2. Features

diff --git a/doc/doc_en/quickstart_en.md b/doc/doc_en/quickstart_en.md

index d7aeb7773021aa6cf8f4d71298588915e5938fab..c678dc47625f4289a93621144bf5577b059d52b3 100644

--- a/doc/doc_en/quickstart_en.md

+++ b/doc/doc_en/quickstart_en.md

@@ -119,7 +119,18 @@ If you do not use the provided test image, you can replace the following `--imag

['PAIN', 0.9934559464454651]

```

-If you need to use the 2.0 model, please specify the parameter `--ocr_version PP-OCR`, paddleocr uses the PP-OCRv3 model by default(`--ocr_version PP-OCRv3`). More whl package usage can be found in [whl package](./whl_en.md)

+**Version**

+paddleocr uses the PP-OCRv3 model by default (`--ocr_version PP-OCRv3`). To use another version, set the `--ocr_version` parameter. The available versions are described below:

+| version name | description |

+| --- | --- |

+| PP-OCRv3 | supports Chinese and English detection and recognition, direction classifier, and multilingual recognition |

+| PP-OCRv2 | supports only Chinese and English detection and recognition and direction classifier; the multilingual model is not updated |

+| PP-OCR | supports Chinese and English detection and recognition, direction classifier, and multilingual recognition |

+

+If you want to add your own trained model, you can add model links and keys in [paddleocr](../../paddleocr.py) and recompile.

+

+For more on whl package usage, see [whl package](./whl_en.md).

+

#### 2.1.2 Multi-language Model

diff --git a/doc/doc_en/recognition_en.md b/doc/doc_en/recognition_en.md

index 60b4a1b26b373adc562ab9624e55ffe59a775a35..7d31b0ffe28c59ad3397d06fa178bcf8cbb822e9 100644

--- a/doc/doc_en/recognition_en.md

+++ b/doc/doc_en/recognition_en.md

@@ -306,7 +306,7 @@ python3 -m paddle.distributed.launch --ips="xx.xx.xx.xx,xx.xx.xx.xx" --gpus '0,1

-o Global.pretrained_model=./pretrain_models/rec_mv3_none_bilstm_ctc_v2.0_train

```

-**Note:** When using multi-machine and multi-gpu training, you need to replace the ips value in the above command with the address of your machine, and the machines need to be able to ping each other. In addition, training needs to be launched separately on multiple machines. The command to view the ip address of the machine is `ifconfig`.

+**Note:** (1) When using multi-machine, multi-GPU training, replace the `ips` value in the above command with the addresses of your machines; the machines must be able to ping each other. (2) Training must be launched separately on each machine. To view a machine's IP address, run `ifconfig`. (3) For more details about the distributed training speedup ratio, please refer to [Distributed Training Tutorial](./distributed_training_en.md).

### 2.6 Training with Knowledge Distillation

diff --git a/doc/doc_en/update_en.md b/doc/doc_en/update_en.md

index a900219b2462524425fc4303ea3bd571efcbab8f..a44dd0d70c611e1b5fb59d1e58b382704d0bbae8 100644

--- a/doc/doc_en/update_en.md

+++ b/doc/doc_en/update_en.md

@@ -1,8 +1,8 @@

# RECENT UPDATES

- 2022.5.9 release PaddleOCR v2.5, including:

- - [PP-OCRv3](./doc/doc_en/ppocr_introduction_en.md#pp-ocrv3): With comparable speed, the effect of Chinese scene is further improved by 5% compared with PP-OCRv2, the effect of English scene is improved by 11%, and the average recognition accuracy of 80 language multilingual models is improved by more than 5%.

- - [PPOCRLabelv2](./PPOCRLabel): Add the annotation function for table recognition task, key information extraction task and irregular text image.

- - Interactive e-book [*"Dive into OCR"*](./doc/doc_en/ocr_book_en.md), covers the cutting-edge theory and code practice of OCR full stack technology.

+ - [PP-OCRv3](./ppocr_introduction_en.md#pp-ocrv3): With comparable speed, the effect of Chinese scene is further improved by 5% compared with PP-OCRv2, the effect of English scene is improved by 11%, and the average recognition accuracy of 80 language multilingual models is improved by more than 5%.

+ - [PPOCRLabelv2](../../PPOCRLabel): Add the annotation function for table recognition task, key information extraction task and irregular text image.

+ - Interactive e-book [*"Dive into OCR"*](./ocr_book_en.md), covers the cutting-edge theory and code practice of OCR full stack technology.

- 2022.5.7 Add support for metric and model logging during training to [Weights & Biases](https://docs.wandb.ai/).

- 2021.12.21 OCR open source online course starts. The lesson starts at 8:30 every night and lasts for ten days. Free registration: https://aistudio.baidu.com/aistudio/course/introduce/25207

- 2021.12.21 release PaddleOCR v2.4, release 1 text detection algorithm (PSENet), 3 text recognition algorithms (NRTR、SEED、SAR), 1 key information extraction algorithm (SDMGR) and 3 DocVQA algorithms (LayoutLM、LayoutLMv2,LayoutXLM).

diff --git a/paddleocr.py b/paddleocr.py

index a1265f79def7018a5586be954127e5b7fdba011e..470dc60da3b15195bcd401aff5e50be5a2cfd13e 100644

--- a/paddleocr.py

+++ b/paddleocr.py

@@ -154,7 +154,13 @@ MODEL_URLS = {

'https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_infer.tar',

'dict_path': './ppocr/utils/ppocr_keys_v1.txt'

}

- }

+ },

+ 'cls': {

+ 'ch': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar',

+ }

+ },

},

'PP-OCR': {

'det': {

diff --git a/ppocr/data/imaug/__init__.py b/ppocr/data/imaug/__init__.py

index 548832fb0d116ba2de622bd97562b591d74501d8..63dfda91f8d0eb200d3c635fda43670039375784 100644

--- a/ppocr/data/imaug/__init__.py

+++ b/ppocr/data/imaug/__init__.py

@@ -22,8 +22,10 @@ from .make_shrink_map import MakeShrinkMap

from .random_crop_data import EastRandomCropData, RandomCropImgMask

from .make_pse_gt import MakePseGt

+

from .rec_img_aug import RecAug, RecConAug, RecResizeImg, ClsResizeImg, \

-    SRNRecResizeImg, NRTRRecResizeImg, SARRecResizeImg, PRENResizeImg
+    SRNRecResizeImg, GrayRecResizeImg, SARRecResizeImg, PRENResizeImg, \
+    ABINetRecResizeImg, SVTRRecResizeImg, ABINetRecAug

from .ssl_img_aug import SSLRotateResize

from .randaugment import RandAugment

from .copy_paste import CopyPaste

diff --git a/ppocr/data/imaug/abinet_aug.py b/ppocr/data/imaug/abinet_aug.py

new file mode 100644

index 0000000000000000000000000000000000000000..eefdc75d5a5c0ac3f7136bf22a2adb31129bd313

--- /dev/null

+++ b/ppocr/data/imaug/abinet_aug.py

@@ -0,0 +1,407 @@

+# copyright (c) 2020 PaddlePaddle Authors. All Rights Reserve.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""

+This code is adapted from:

+https://github.com/FangShancheng/ABINet/blob/main/transforms.py

+"""

+import math

+import numbers

+import random

+

+import cv2

+import numpy as np

+from paddle.vision.transforms import Compose, ColorJitter

+

+

+def sample_asym(magnitude, size=None):
+    return np.random.beta(1, 4, size) * magnitude
+
+
+def sample_sym(magnitude, size=None):
+    return (np.random.beta(4, 4, size=size) - 0.5) * 2 * magnitude
+
+
+def sample_uniform(low, high, size=None):
+    return np.random.uniform(low, high, size=size)
+
+
+def get_interpolation(type='random'):
+    if type == 'random':
+        choice = [
+            cv2.INTER_NEAREST, cv2.INTER_LINEAR, cv2.INTER_CUBIC, cv2.INTER_AREA
+        ]
+        interpolation = choice[random.randint(0, len(choice) - 1)]
+    elif type == 'nearest':
+        interpolation = cv2.INTER_NEAREST
+    elif type == 'linear':
+        interpolation = cv2.INTER_LINEAR
+    elif type == 'cubic':
+        interpolation = cv2.INTER_CUBIC
+    elif type == 'area':
+        interpolation = cv2.INTER_AREA
+    else:
+        raise TypeError(
+            'Interpolation types only nearest, linear, cubic, area are supported!'
+        )
+    return interpolation
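The three samplers above draw augmentation magnitudes from different distributions: `sample_asym` is skewed toward small non-negative values, `sample_sym` is centred on zero, and `sample_uniform` is flat. The sketch below re-creates the first two with the standard library's `random.betavariate` in place of `np.random.beta` (a substitution made only to keep the example dependency-free) to show the ranges they produce. The `_py`-suffixed names are hypothetical and do not appear in the commit.

```python
import random


def sample_asym_py(magnitude):
    # Beta(1, 4) lies in [0, 1] with mean 0.2, so most draws are small.
    return random.betavariate(1, 4) * magnitude


def sample_sym_py(magnitude):
    # Beta(4, 4) is symmetric around 0.5; shift and scale to [-magnitude, magnitude].
    return (random.betavariate(4, 4) - 0.5) * 2 * magnitude


if __name__ == "__main__":
    random.seed(0)
    asym = [sample_asym_py(15) for _ in range(10000)]
    sym = [sample_sym_py(15) for _ in range(10000)]
    print("asym range:", min(asym), max(asym))
    print("sym mean:", sum(sym) / len(sym))
```

With `magnitude=15`, as in the default of `CVRandomRotation`, the symmetric sampler yields angles between -15 and 15 degrees that cluster around zero.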

+

+

+class CVRandomRotation(object):
+    def __init__(self, degrees=15):
+        assert isinstance(degrees,
+                          numbers.Number), "degree should be a single number."
+        assert degrees >= 0, "degree must be non-negative."
+        self.degrees = degrees
+
+    @staticmethod
+    def get_params(degrees):
+        return sample_sym(degrees)
+
+    def __call__(self, img):
+        angle = self.get_params(self.degrees)
+        src_h, src_w = img.shape[:2]
+        M = cv2.getRotationMatrix2D(
+            center=(src_w / 2, src_h / 2), angle=angle, scale=1.0)
+        abs_cos, abs_sin = abs(M[0, 0]), abs(M[0, 1])
+        dst_w = int(src_h * abs_sin + src_w * abs_cos)
+        dst_h = int(src_h * abs_cos + src_w * abs_sin)
+        M[0, 2] += (dst_w - src_w) / 2
+        M[1, 2] += (dst_h - src_h) / 2
+
+        flags = get_interpolation()
+        return cv2.warpAffine(
+            img,
+            M, (dst_w, dst_h),
+            flags=flags,
+            borderMode=cv2.BORDER_REPLICATE)
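`CVRandomRotation.__call__` enlarges the output canvas so that the rotated image is not cropped: the new width and height are the extents of the rotated rectangle's bounding box. Below is a minimal sketch of that arithmetic in pure Python. The helper name `rotated_canvas_size` is hypothetical, and it computes the cosine and sine directly rather than reading them from the `cv2.getRotationMatrix2D` result as the committed code does.

```python
import math


def rotated_canvas_size(src_w, src_h, angle_deg):
    """Width and height of the axis-aligned box enclosing a rotated image."""
    theta = math.radians(angle_deg)
    abs_cos, abs_sin = abs(math.cos(theta)), abs(math.sin(theta))
    # Same formulas as in __call__: each side projects onto both axes.
    dst_w = int(src_h * abs_sin + src_w * abs_cos)
    dst_h = int(src_h * abs_cos + src_w * abs_sin)
    return dst_w, dst_h


if __name__ == "__main__":
    for angle in (0, 15, 90):
        print(angle, rotated_canvas_size(100, 32, angle))
```

Shifting `M[0, 2]` and `M[1, 2]` by half the size difference then keeps the image centred in the larger canvas.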

+

+

+class CVRandomAffine(object):
+    def __init__(self, degrees, translate=None, scale=None, shear=None):
+        assert isinstance(degrees,
+                          numbers.Number), "degree should be a single number."
+        assert degrees >= 0, "degree must be non-negative."
+        self.degrees = degrees
+
+        if translate is not None:
+            assert isinstance(translate, (tuple, list)) and len(translate) == 2, \
+                "translate should be a list or tuple and it must be of length 2."
+            for t in translate:
+                if not (0.0 <= t <= 1.0):
+                    raise ValueError(
+                        "translation values should be between 0 and 1")
+        self.translate = translate
+
+        if scale is not None:
+            assert isinstance(scale, (tuple, list)) and len(scale) == 2, \
+                "scale should be a list or tuple and it must be of length 2."
+            for s in scale:
+                if s <= 0:
+                    raise ValueError("scale values should be positive")
+        self.scale = scale
+
+        if shear is not None:
+            if isinstance(shear, numbers.Number):
+                if shear < 0:
+                    raise ValueError(
+                        "If shear is a single number, it must be non-negative.")
+                self.shear = [shear]
+            else:
+                assert isinstance(shear, (tuple, list)) and (len(shear) == 2), \
+                    "shear should be a list or tuple and it must be of length 2."
+                self.shear = shear
+        else:
+            self.shear = shear

+

+    def _get_inverse_affine_matrix(self, center, angle, translate, scale,
+                                   shear):
+        # https://github.com/pytorch/vision/blob/v0.4.0/torchvision/transforms/functional.py#L717
+        from numpy import sin, cos, tan
+
+        if isinstance(shear, numbers.Number):
+            shear = [shear, 0]
+
+        if not (isinstance(shear, (tuple, list)) and len(shear) == 2):
+            raise ValueError(
+                "Shear should be a single value or a tuple/list containing " +
+                "two values. Got {}".format(shear))
+
+        rot = math.radians(angle)
+        sx, sy = [math.radians(s) for s in shear]
+
+        cx, cy = center
+        tx, ty = translate
+
+        # RSS without scaling
+        a = cos(rot - sy) / cos(sy)
+        b = -cos(rot - sy) * tan(sx) / cos(sy) - sin(rot)
+        c = sin(rot - sy) / cos(sy)
+        d = -sin(rot - sy) * tan(sx) / cos(sy) + cos(rot)
+
+        # Inverted rotation matrix with scale and shear
+        # det([[a, b], [c, d]]) == 1, since det(rotation) = 1 and det(shear) = 1
+        M = [d, -b, 0, -c, a, 0]
+        M = [x / scale for x in M]
+
+        # Apply inverse of translation and of center translation: RSS^-1 * C^-1 * T^-1
+        M[2] += M[0] * (-cx - tx) + M[1] * (-cy - ty)
+        M[5] += M[3] * (-cx - tx) + M[4] * (-cy - ty)
+
+        # Apply center translation: C * RSS^-1 * C^-1 * T^-1
+        M[2] += cx
+        M[5] += cy
+        return M

+

+    @staticmethod
+    def get_params(degrees, translate, scale_ranges, shears, height):
+        angle = sample_sym(degrees)
+        if translate is not None:
+            max_dx = translate[0] * height
+            max_dy = translate[1] * height