diff --git a/doc/doc_en/multi_languages_en.md b/doc/doc_en/multi_languages_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..d1c4583f66f611fbef7191d52d32e02187853c9b

--- /dev/null

+++ b/doc/doc_en/multi_languages_en.md

@@ -0,0 +1,285 @@

+# Multi-language model

+

+**Recent Update**

+

+-2021.4.9 supports the detection and recognition of 80 languages

+-2021.4.9 supports **lightweight high-precision** English model detection and recognition

+

+-[1 Installation](#Install)

+ -[1.1 paddle installation](#paddleinstallation)

+ -[1.2 paddleocr package installation](#paddleocr_package_install)

+

+-[2 Quick Use](#Quick_Use)

+ -[2.1 Command line operation](#Command_line_operation)

+ -[2.1.1 Prediction of the whole image](#bash_detection+recognition)

+ -[2.1.2 Recognition](#bash_Recognition)

+ -[2.1.3 Detection](#bash_detection)

+ -[2.2 python script running](#python_Script_running)

+ -[2.2.1 Whole image prediction](#python_detection+recognition)

+ -[2.2.2 Recognition](#python_Recognition)

+ -[2.2.3 Detection](#python_detection)

+-[3 Custom Training](#Custom_Training)

+-[4 Supported languages and abbreviations](#language_abbreviations)

+

+

+## 1 Installation

+

+

+### 1.1 paddle installation

+```

+# cpu

+pip install paddlepaddle

+

+# gpu

+pip instll paddlepaddle-gpu

+```

+

+

+### 1.2 paddleocr package installation

+

+

+pip install

+```

+pip install "paddleocr>=2.0.4" # 2.0.4 version is recommended

+```

+Build and install locally

+```

+python3 setup.py bdist_wheel

+pip3 install dist/paddleocr-x.x.x-py3-none-any.whl # x.x.x is the version number of paddleocr

+```

+

+

+## 2 Quick use

+

+

+### 2.1 Command line operation

+

+View help information

+

+```

+paddleocr -h

+```

+

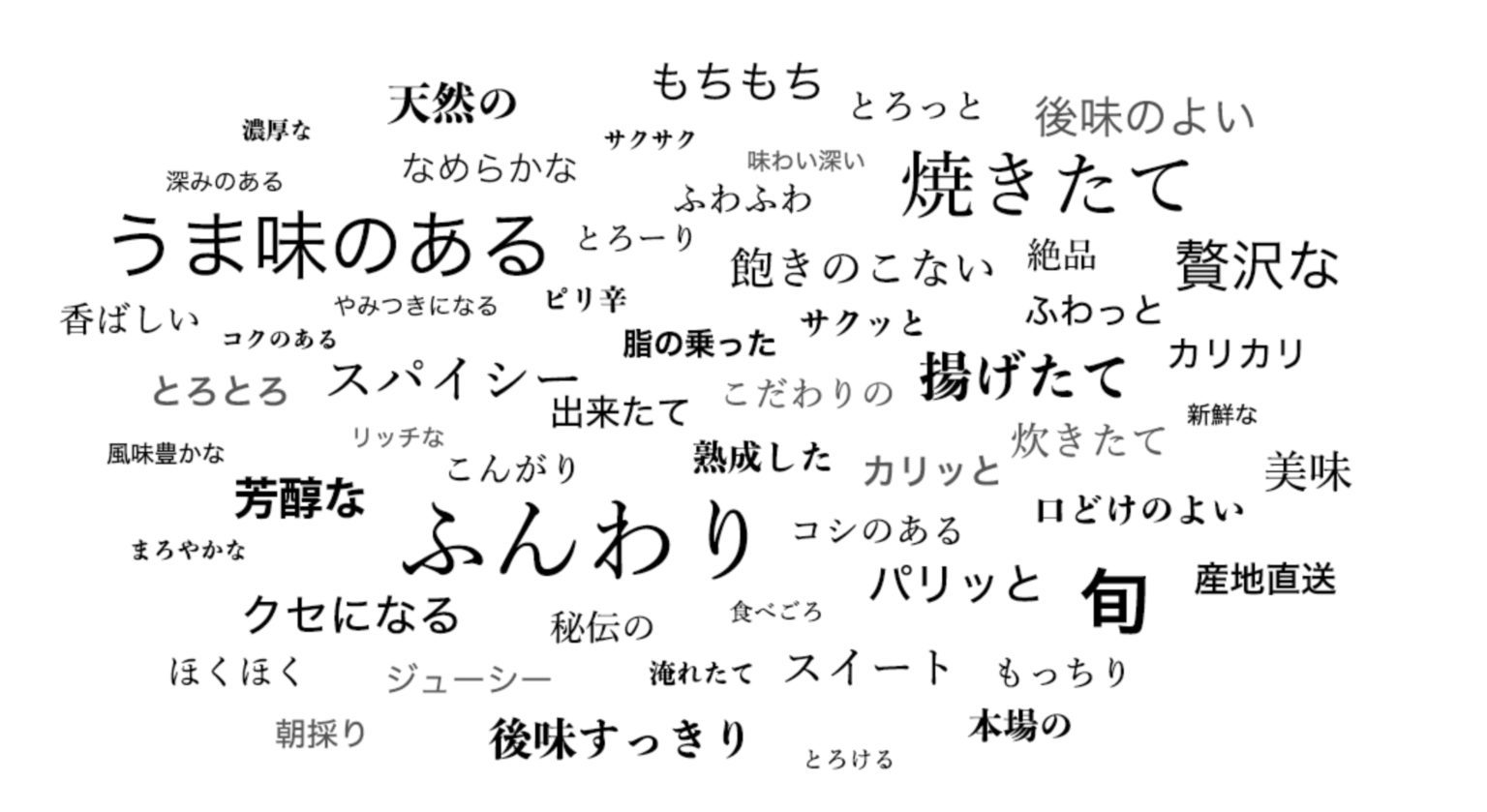

+* Whole image prediction (detection + recognition)

+

+Paddleocr currently supports 80 languages, which can be switched by modifying the --lang parameter.

+The specific supported [language] (#language_abbreviations) can be viewed in the table.

+

+``` bash

+

+paddleocr --image_dir doc/imgs/japan_2.jpg --lang=japan

+```

+

+

+The result is a list, each item contains a text box, text and recognition confidence

+```text

+[[[671.0, 60.0], [847.0, 63.0], [847.0, 104.0], [671.0, 102.0]], ('もちもち', 0.9993342)]

+[[[394.0, 82.0], [536.0, 77.0], [538.0, 127.0], [396.0, 132.0]], ('自然の', 0.9919842)]

+[[[880.0, 89.0], [1014.0, 93.0], [1013.0, 127.0], [879.0, 124.0]], ('とろっと', 0.9976762)]

+[[[1067.0, 101.0], [1294.0, 101.0], [1294.0, 138.0], [1067.0, 138.0]], ('后味のよい', 0.9988712)]

+......

+```

+

+* Recognition

+

+```bash

+paddleocr --image_dir doc/imgs_words/japan/1.jpg --det false --lang=japan

+```

+

+

+

+The result is a tuple, which returns the recognition result and recognition confidence

+

+```text

+('したがって', 0.99965394)

+```

+

+* Detection

+

+```

+paddleocr --image_dir PaddleOCR/doc/imgs/11.jpg --rec false

+```

+

+The result is a list, each item contains only text boxes

+

+```

+[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

+[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

+[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

+......

+```

+

+

+### 2.2 python script running

+

+ppocr also supports running in python scripts for easy embedding in your own code:

+

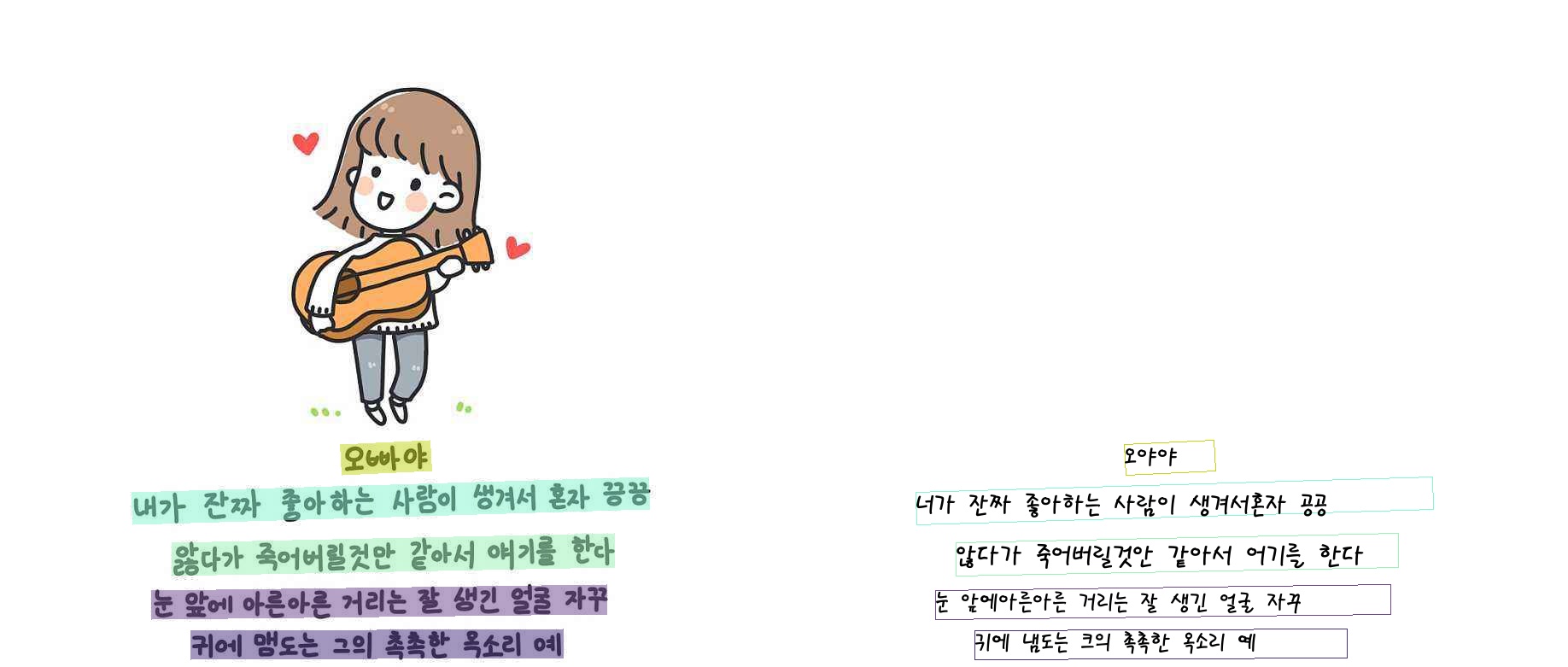

+* Whole image prediction (detection + recognition)

+

+```

+from paddleocr import PaddleOCR, draw_ocr

+

+# Also switch the language by modifying the lang parameter

+ocr = PaddleOCR(lang="korean") # The model file will be downloaded automatically when executed for the first time

+img_path ='doc/imgs/korean_1.jpg'

+result = ocr.ocr(img_path)

+# Print detection frame and recognition result

+for line in result:

+ print(line)

+

+# Visualization

+from PIL import Image

+image = Image.open(img_path).convert('RGB')

+boxes = [line[0] for line in result]

+txts = [line[1][0] for line in result]

+scores = [line[1][1] for line in result]

+im_show = draw_ocr(image, boxes, txts, scores, font_path='/path/to/PaddleOCR/doc/korean.ttf')

+im_show = Image.fromarray(im_show)

+im_show.save('result.jpg')

+```

+

+Visualization of results:

+

+

+

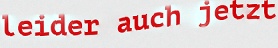

+* Recognition

+

+```

+from paddleocr import PaddleOCR

+ocr = PaddleOCR(lang="german")

+img_path ='PaddleOCR/doc/imgs_words/german/1.jpg'

+result = ocr.ocr(img_path, det=False, cls=True)

+for line in result:

+ print(line)

+```

+

+

+

+The result is a tuple, which only contains the recognition result and recognition confidence

+

+```

+('leider auch jetzt', 0.97538936)

+```

+

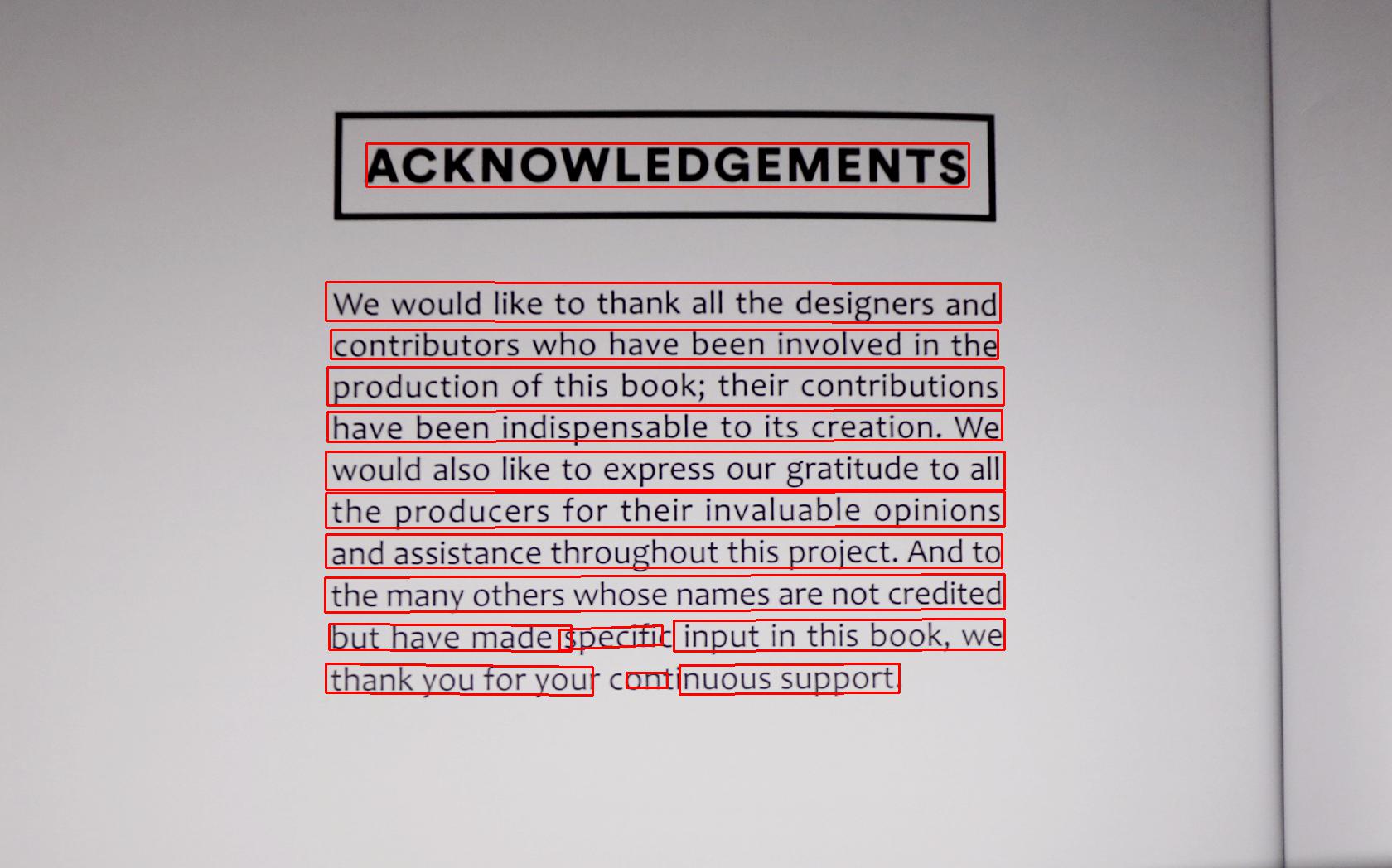

+* Detection

+

+```python

+from paddleocr import PaddleOCR, draw_ocr

+ocr = PaddleOCR() # need to run only once to download and load model into memory

+img_path ='PaddleOCR/doc/imgs_en/img_12.jpg'

+result = ocr.ocr(img_path, rec=False)

+for line in result:

+ print(line)

+

+# show result

+from PIL import Image

+

+image = Image.open(img_path).convert('RGB')

+im_show = draw_ocr(image, result, txts=None, scores=None, font_path='/path/to/PaddleOCR/doc/fonts/simfang.ttf')

+im_show = Image.fromarray(im_show)

+im_show.save('result.jpg')

+```

+The result is a list, each item contains only text boxes

+```bash

+[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

+[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

+[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

+......

+```

+

+Visualization of results:

+

+

+ppocr also supports direction classification. For more usage methods, please refer to: [whl package instructions](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.0/doc/doc_ch/whl.md).

+

+

+## 3 Custom training

+

+ppocr supports using your own data for custom training or finetune, where the recognition model can refer to [French configuration file](../../configs/rec/multi_language/rec_french_lite_train.yml)

+Modify the training data path, dictionary and other parameters.

+

+For specific data preparation and training process, please refer to: [Text Detection](../doc_en/detection_en.md), [Text Recognition](../doc_en/recognition_en.md), more functions such as predictive deployment,

+For functions such as data annotation, you can read the complete [Document Tutorial](../../README.md).

+

+

+## 4 Support languages and abbreviations

+

+| Language | Abbreviation |

+| --- | --- |

+|chinese and english|ch|

+|english|en|

+|french|fr|

+|german|german|

+|japan|japan|

+|korean|korean|

+|chinese traditional |ch_tra|

+| Italian |it|

+|Spanish |es|

+| Portuguese|pt|

+|Russia|ru|

+|Arabic|ar|

+|Hindi|hi|

+|Uyghur|ug|

+|Persian|fa|

+|Urdu|ur|

+| Serbian(latin) |rs_latin|

+|Occitan |oc|

+|Marathi|mr|

+|Nepali|ne|

+|Serbian(cyrillic)|rs_cyrillic|

+|Bulgarian |bg|

+|Ukranian|uk|

+|Belarusian|be|

+|Telugu |te|

+|Kannada |kn|

+|Tamil |ta|

+|Afrikaans |af|

+|Azerbaijani |az|

+|Bosnian|bs|

+|Czech|cs|

+|Welsh |cy|

+|Danish|da|

+|Estonian |et|

+|Irish |ga|

+|Croatian |hr|

+|Hungarian |hu|

+|Indonesian|id|

+|Icelandic|is|

+|Kurdish|ku|

+|Lithuanian |lt|

+ |Latvian |lv|

+|Maori|mi|

+|Malay|ms|

+|Maltese |mt|

+|Dutch |nl|

+|Norwegian |no|

+|Polish |pl|

+|Romanian |ro|

+|Slovak |sk|

+|Slovenian |sl|

+|Albanian |sq|

+|Swedish |sv|

+|Swahili |sw|

+|Tagalog |tl|

+|Turkish |tr|

+|Uzbek |uz|

+|Vietnamese |vi|

+|Mongolian |mn|

+|Abaza |abq|

+|Adyghe |ady|

+|Kabardian |kbd|

+|Avar |ava|

+|Dargwa |dar|

+|Ingush |inh|

+|Lak |lbe|

+|Lezghian |lez|

+|Tabassaran |tab|

+|Bihari |bh|

+|Maithili |mai|

+|Angika |ang|

+|Bhojpuri |bho|

+|Magahi |mah|

+|Nagpur |sck|

+|Newari |new|

+|Goan Konkani|gom|

+|Saudi Arabia|sa|

diff --git a/ppocr/utils/utility.py b/ppocr/utils/utility.py

index 29576d971486326aec3c93601656d7b982ef3336..7bb4c906d298af54ed56e2805f487a2c22d1894b 100755

--- a/ppocr/utils/utility.py

+++ b/ppocr/utils/utility.py

@@ -61,6 +61,7 @@ def get_image_file_list(img_file):

imgs_lists.append(file_path)

if len(imgs_lists) == 0:

raise Exception("not found any img file in {}".format(img_file))

+ imgs_lists = sorted(imgs_lists)

return imgs_lists

diff --git a/tools/infer/predict_rec.py b/tools/infer/predict_rec.py

index 1cb6e01b087ff98efb0a57be3cc58a79425fea57..24388026b8f395427c93e285ed550446e3aa9b9c 100755

--- a/tools/infer/predict_rec.py

+++ b/tools/infer/predict_rec.py

@@ -41,6 +41,7 @@ class TextRecognizer(object):

self.character_type = args.rec_char_type

self.rec_batch_num = args.rec_batch_num

self.rec_algorithm = args.rec_algorithm

+ self.max_text_length = args.max_text_length

postprocess_params = {

'name': 'CTCLabelDecode',

"character_type": args.rec_char_type,

@@ -186,8 +187,9 @@ class TextRecognizer(object):

norm_img = norm_img[np.newaxis, :]

norm_img_batch.append(norm_img)

else:

- norm_img = self.process_image_srn(

- img_list[indices[ino]], self.rec_image_shape, 8, 25)

+ norm_img = self.process_image_srn(img_list[indices[ino]],

+ self.rec_image_shape, 8,

+ self.max_text_length)

encoder_word_pos_list = []

gsrm_word_pos_list = []

gsrm_slf_attn_bias1_list = []

diff --git a/tools/infer/predict_system.py b/tools/infer/predict_system.py

index de7ee9d342063161f2e329c99d2428051c0ecf8c..ba81aff0a940fbee234e59e98f73c62fc7f69f09 100755

--- a/tools/infer/predict_system.py

+++ b/tools/infer/predict_system.py

@@ -13,6 +13,7 @@

# limitations under the License.

import os

import sys

+import subprocess

__dir__ = os.path.dirname(os.path.abspath(__file__))

sys.path.append(__dir__)

@@ -141,6 +142,7 @@ def sorted_boxes(dt_boxes):

def main(args):

image_file_list = get_image_file_list(args.image_dir)

+ image_file_list = image_file_list[args.process_id::args.total_process_num]

text_sys = TextSystem(args)

is_visualize = True

font_path = args.vis_font_path

@@ -184,4 +186,18 @@ def main(args):

if __name__ == "__main__":

- main(utility.parse_args())

+ args = utility.parse_args()

+ if args.use_mp:

+ p_list = []

+ total_process_num = args.total_process_num

+ for process_id in range(total_process_num):

+ cmd = [sys.executable, "-u"] + sys.argv + [

+ "--process_id={}".format(process_id),

+ "--use_mp={}".format(False)

+ ]

+ p = subprocess.Popen(cmd, stdout=sys.stdout, stderr=sys.stdout)

+ p_list.append(p)

+ for p in p_list:

+ p.wait()

+ else:

+ main(args)

diff --git a/tools/infer/utility.py b/tools/infer/utility.py

index 9019f003b44d9ecb69ed390fba8cc97d4d074cd5..b273eaf3258421d5c5c30c132f99e78f9f0999ba 100755

--- a/tools/infer/utility.py

+++ b/tools/infer/utility.py

@@ -98,6 +98,10 @@ def parse_args():

parser.add_argument("--enable_mkldnn", type=str2bool, default=False)

parser.add_argument("--use_pdserving", type=str2bool, default=False)

+ parser.add_argument("--use_mp", type=str2bool, default=False)

+ parser.add_argument("--total_process_num", type=int, default=1)

+ parser.add_argument("--process_id", type=int, default=0)

+

return parser.parse_args()