Merge branch 'dygraph' into dygraph

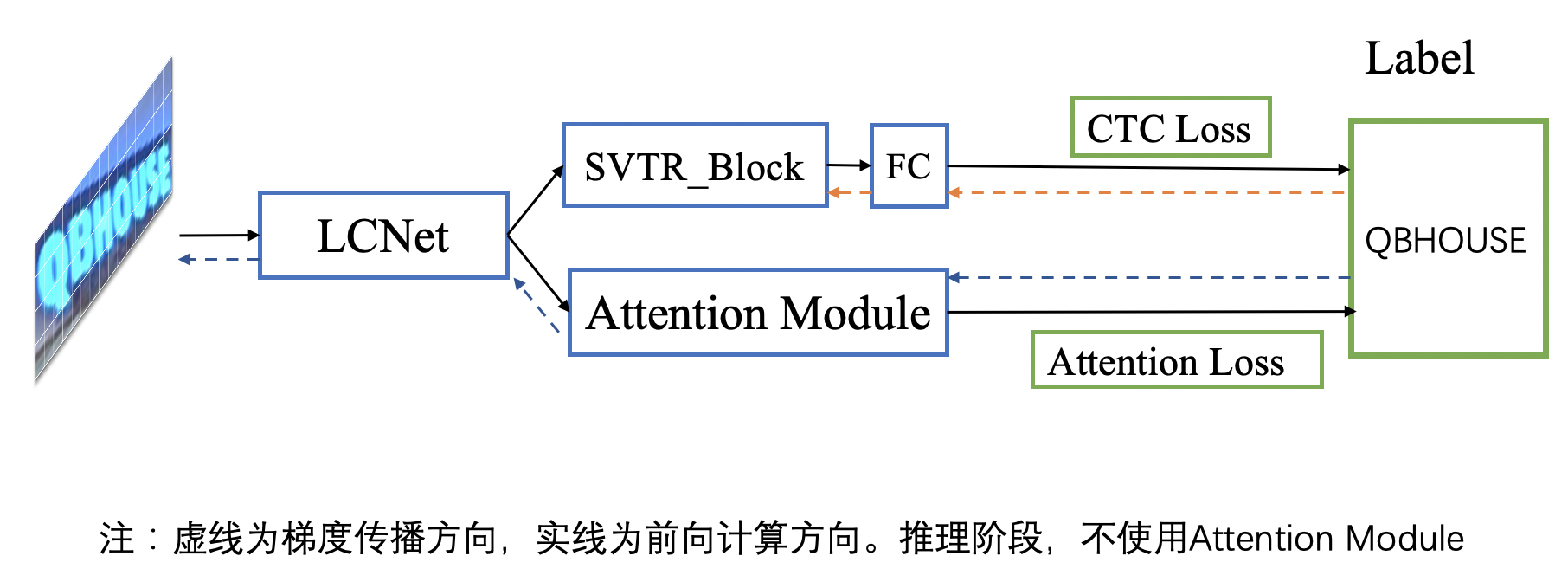

doc/ppocr_v3/GTC.png

0 → 100644

415.0 KB

doc/ppocr_v3/SSL.png

0 → 100644

111.5 KB

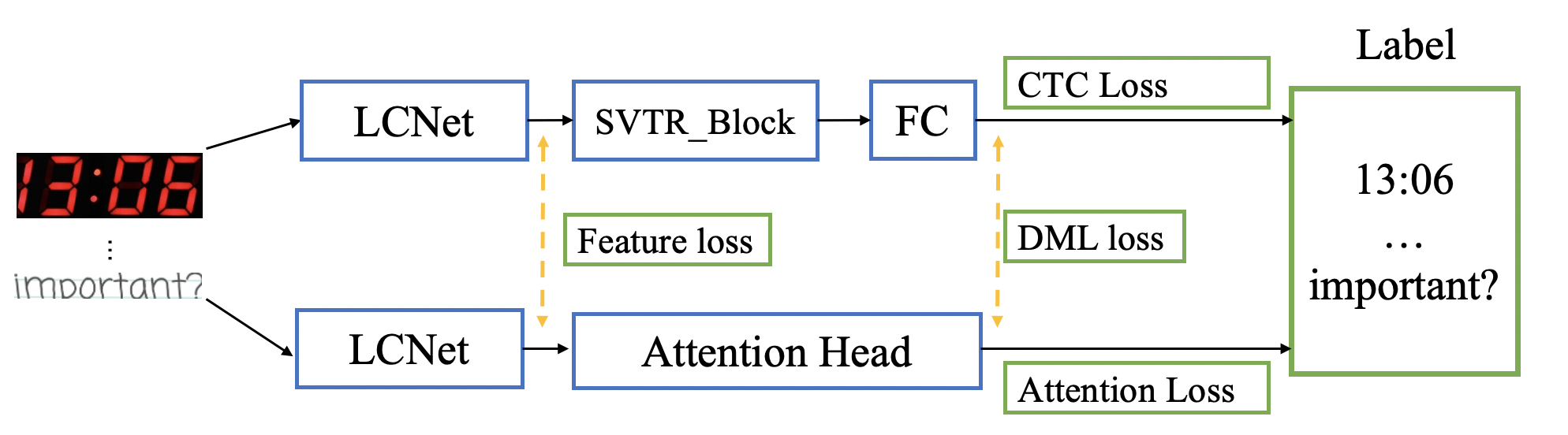

doc/ppocr_v3/UDML.png

0 → 100644

348.7 KB

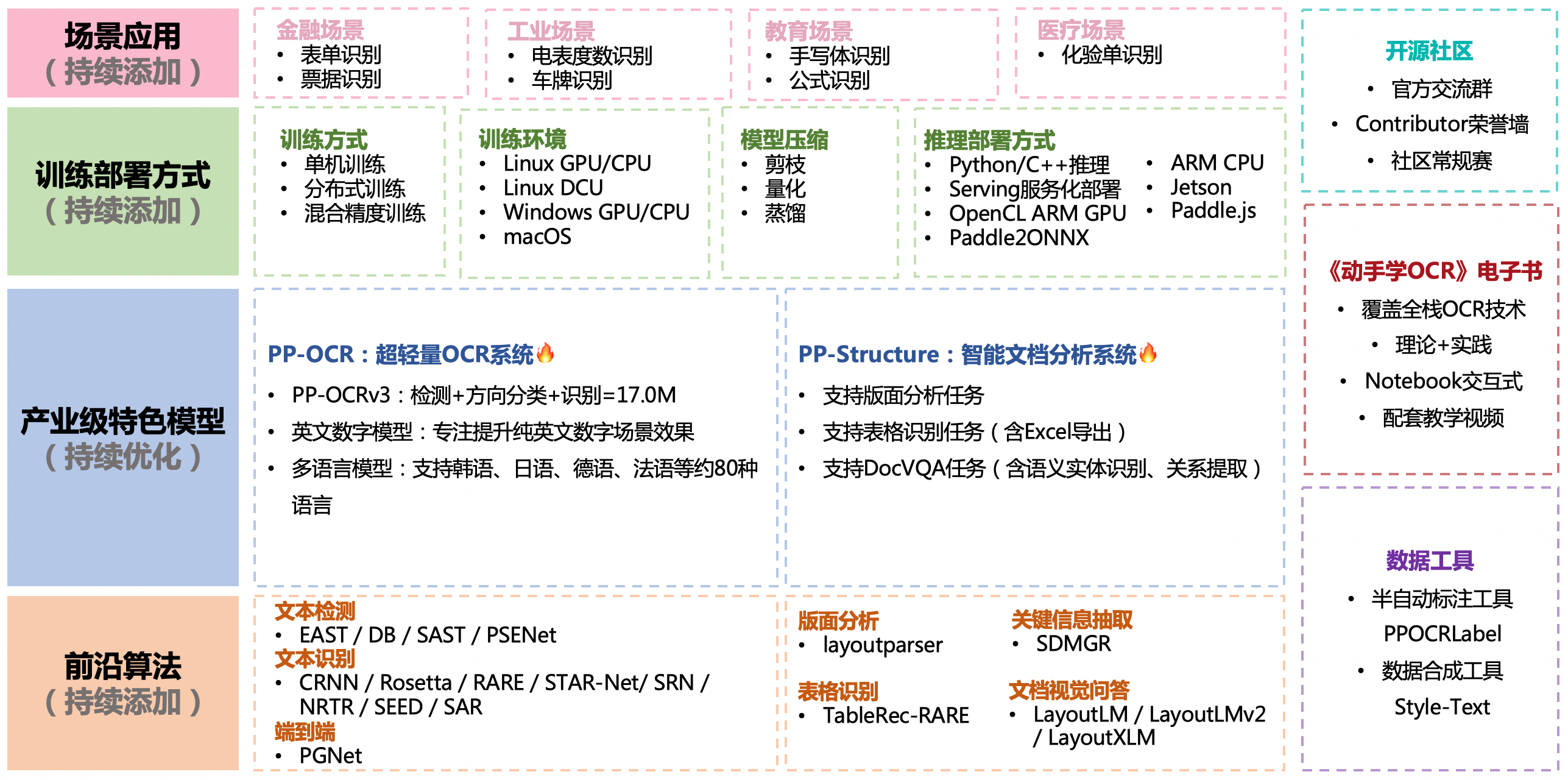

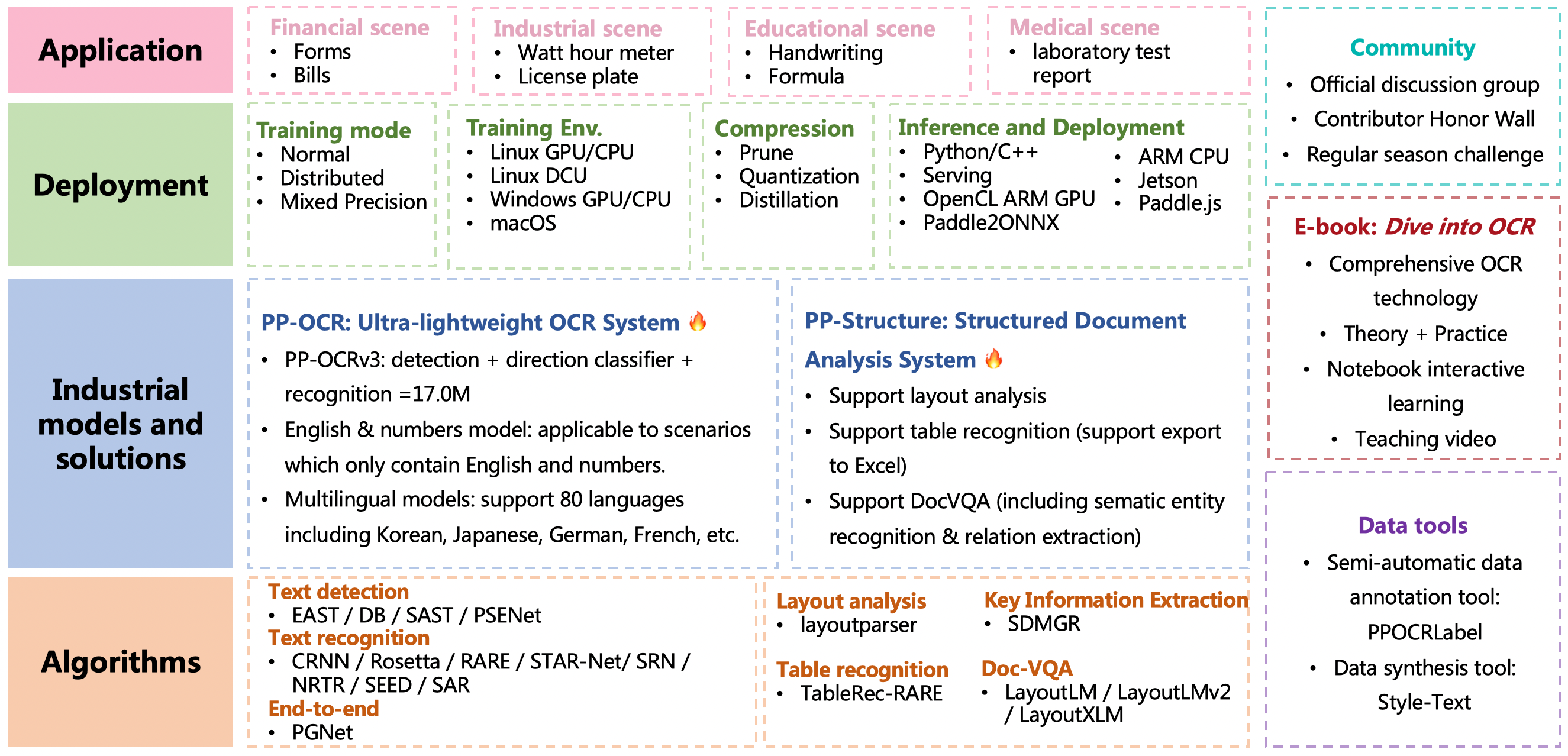

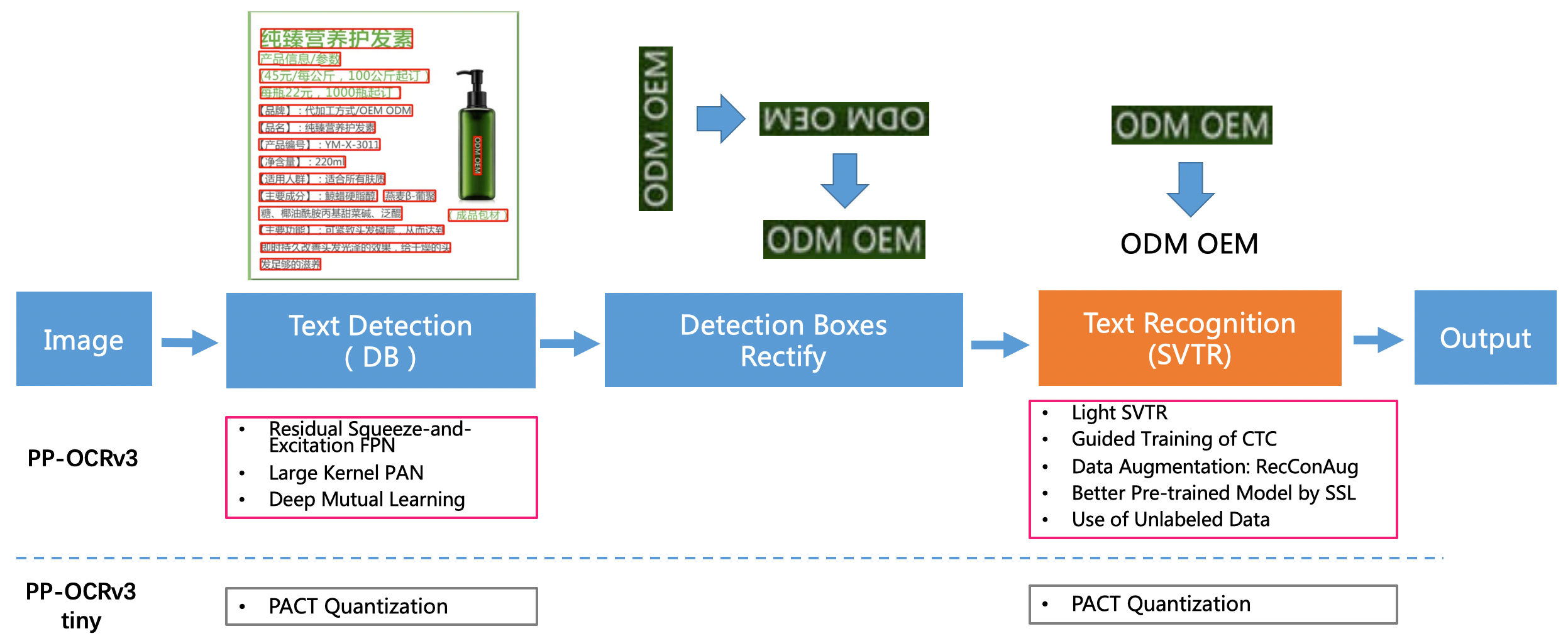

doc/ppocr_v3/ppocr_v3.png

0 → 100644

426.2 KB

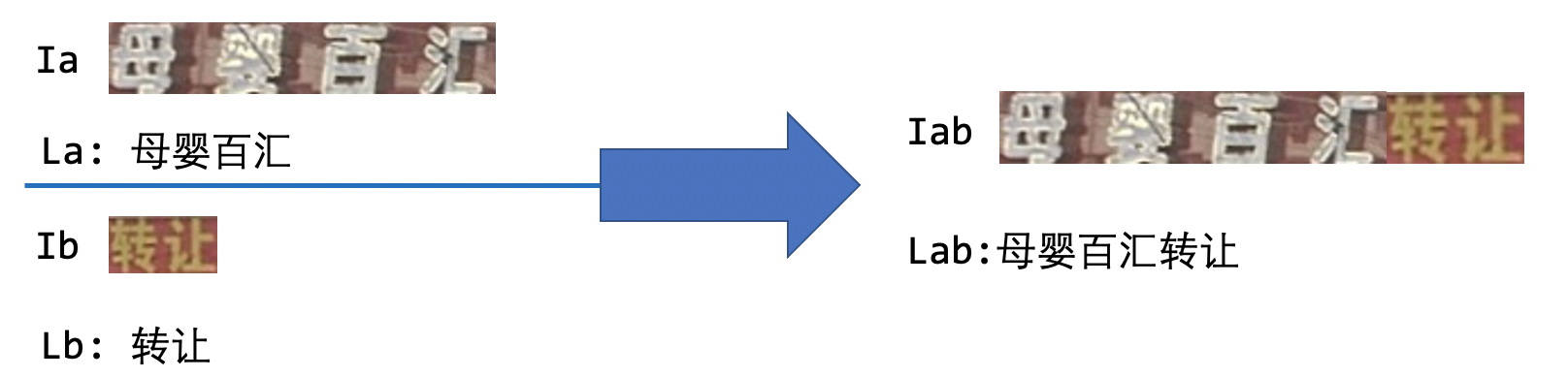

doc/ppocr_v3/recconaug.png

0 → 100644

296.6 KB

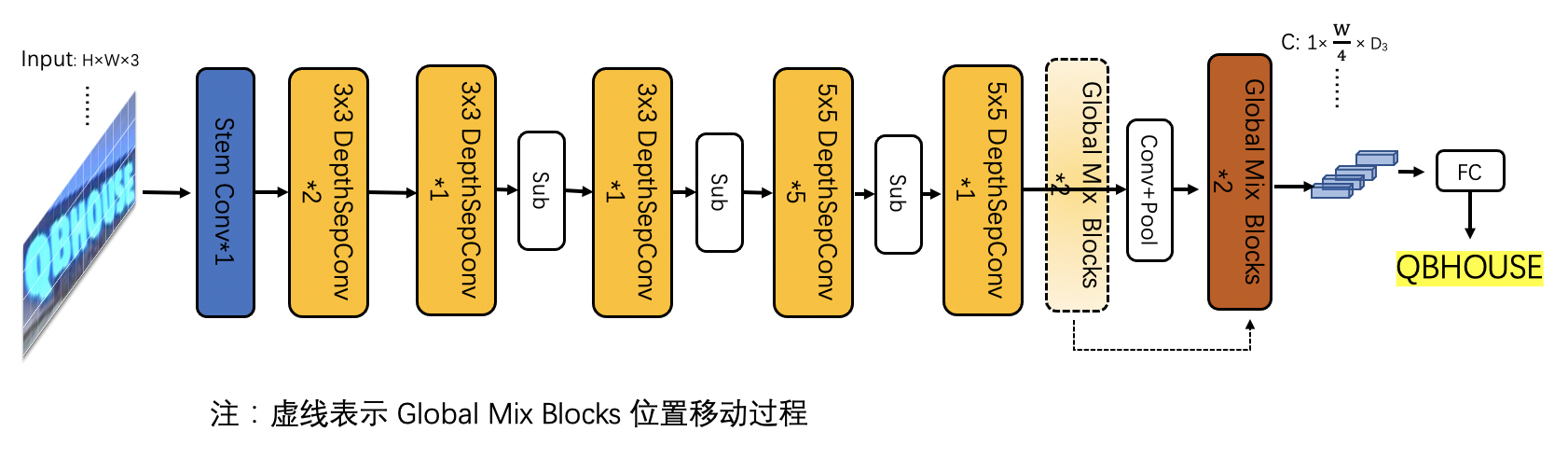

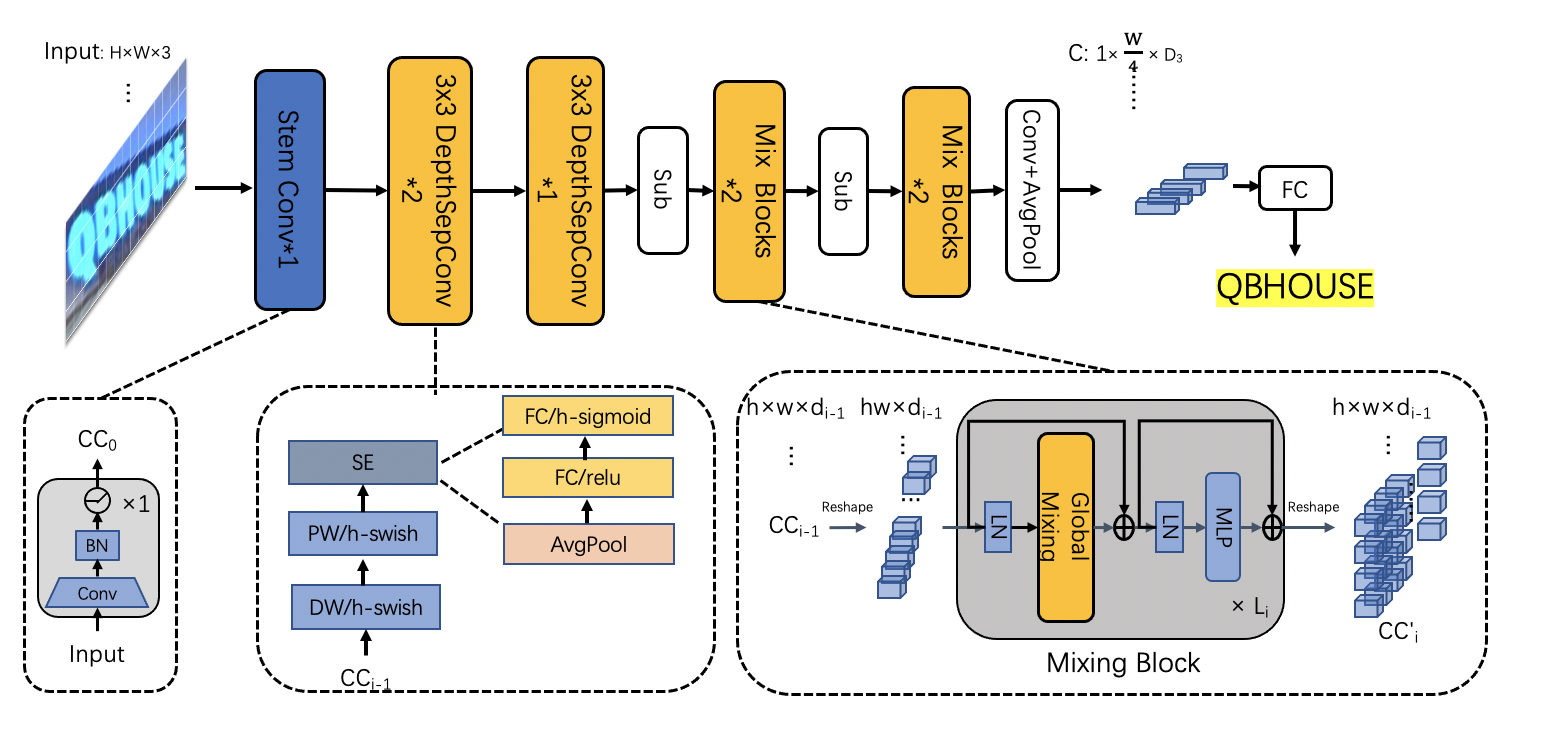

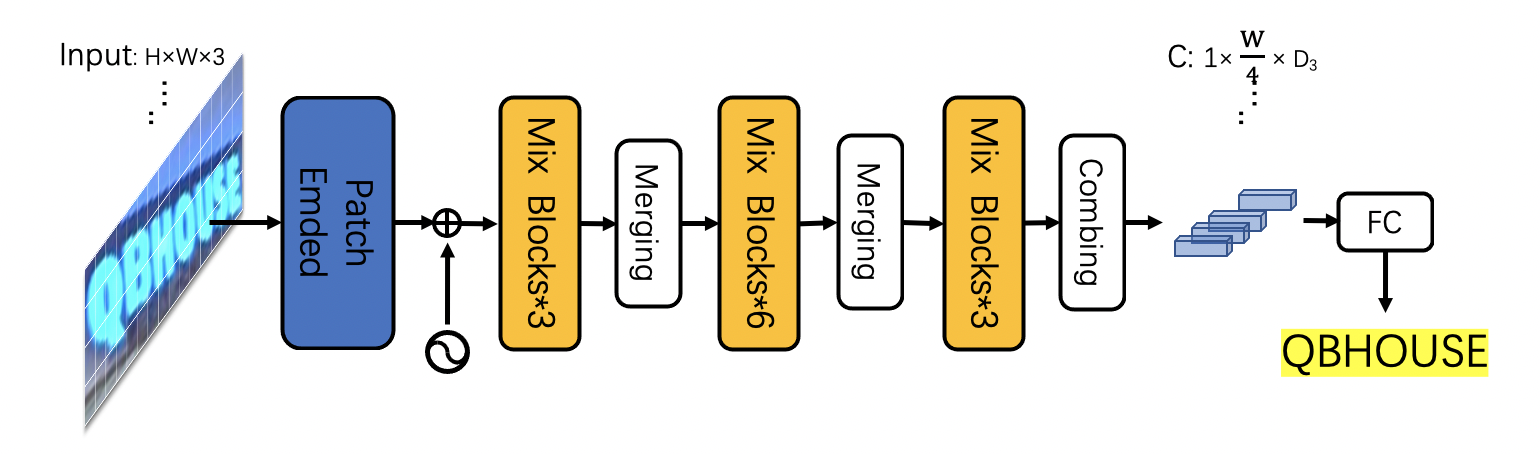

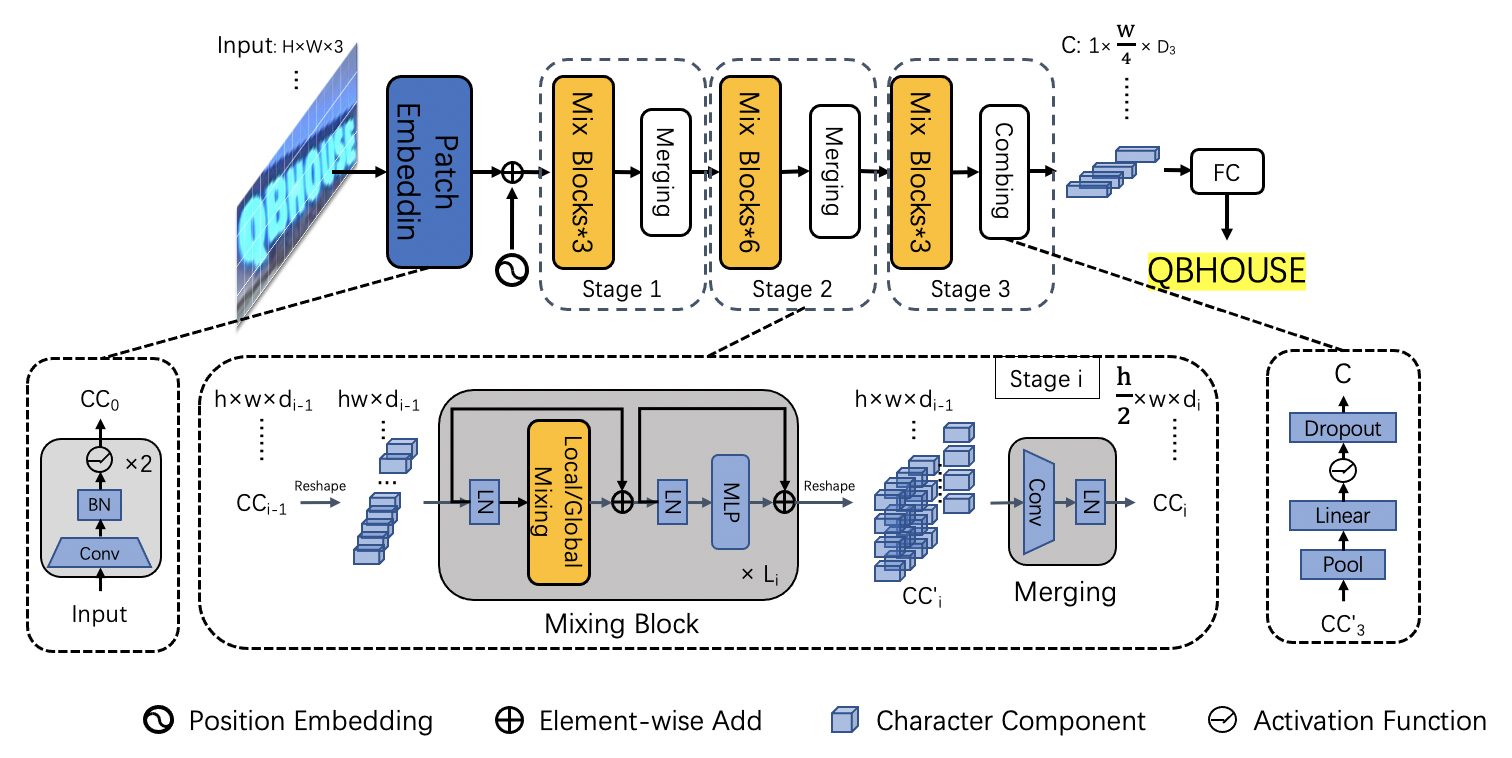

doc/ppocr_v3/svtr_g2.png

0 → 100644

323.3 KB

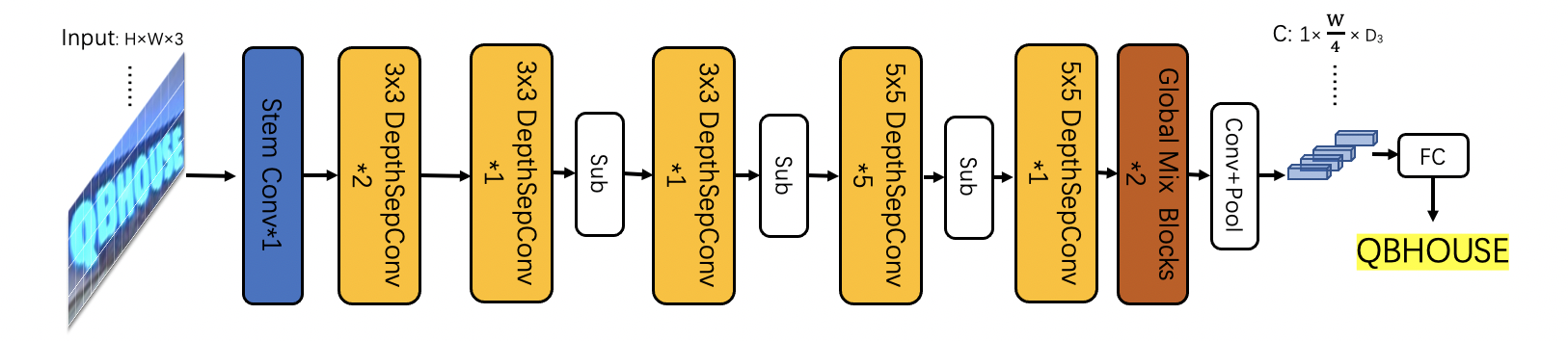

doc/ppocr_v3/svtr_g4.png

0 → 100644

549.7 KB

doc/ppocr_v3/svtr_tiny.jpg

0 → 100644

323.6 KB

doc/ppocr_v3/svtr_tiny.png

0 → 100644

585.6 KB

doc/ppocrv3_framework.png

0 → 100644

957.2 KB