', '', '',

@@ -169,36 +168,40 @@ class StructureSystem(object):

'type': region['label'].lower(),

'bbox': [x1, y1, x2, y2],

'img': roi_img,

- 'res': res

+ 'res': res,

+ 'img_idx': img_idx

})

end = time.time()

time_dict['all'] = end - start

return res_list, time_dict

- elif self.mode == 'vqa':

+ elif self.mode == 'kie':

raise NotImplementedError

return None, None

-def save_structure_res(res, save_folder, img_name):

+def save_structure_res(res, save_folder, img_name, img_idx=0):

excel_save_folder = os.path.join(save_folder, img_name)

os.makedirs(excel_save_folder, exist_ok=True)

res_cp = deepcopy(res)

# save res

with open(

- os.path.join(excel_save_folder, 'res.txt'), 'w',

+ os.path.join(excel_save_folder, 'res_{}.txt'.format(img_idx)),

+ 'w',

encoding='utf8') as f:

for region in res_cp:

roi_img = region.pop('img')

f.write('{}\n'.format(json.dumps(region)))

- if region['type'] == 'table' and len(region[

+ if region['type'].lower() == 'table' and len(region[

'res']) > 0 and 'html' in region['res']:

- excel_path = os.path.join(excel_save_folder,

- '{}.xlsx'.format(region['bbox']))

+ excel_path = os.path.join(

+ excel_save_folder,

+ '{}_{}.xlsx'.format(region['bbox'], img_idx))

to_excel(region['res']['html'], excel_path)

- elif region['type'] == 'figure':

- img_path = os.path.join(excel_save_folder,

- '{}.jpg'.format(region['bbox']))

+ elif region['type'].lower() == 'figure':

+ img_path = os.path.join(

+ excel_save_folder,

+ '{}_{}.jpg'.format(region['bbox'], img_idx))

cv2.imwrite(img_path, roi_img)

@@ -214,28 +217,75 @@ def main(args):

for i, image_file in enumerate(image_file_list):

logger.info("[{}/{}] {}".format(i, img_num, image_file))

- img, flag = check_and_read_gif(image_file)

+ img, flag_gif, flag_pdf = check_and_read(image_file)

img_name = os.path.basename(image_file).split('.')[0]

- if not flag:

+ if not flag_gif and not flag_pdf:

img = cv2.imread(image_file)

- if img is None:

- logger.error("error in loading image:{}".format(image_file))

- continue

- res, time_dict = structure_sys(img)

- if structure_sys.mode == 'structure':

- save_structure_res(res, save_folder, img_name)

- draw_img = draw_structure_result(img, res, args.vis_font_path)

- img_save_path = os.path.join(save_folder, img_name, 'show.jpg')

- elif structure_sys.mode == 'vqa':

- raise NotImplementedError

- # draw_img = draw_ser_results(img, res, args.vis_font_path)

- # img_save_path = os.path.join(save_folder, img_name + '.jpg')

- cv2.imwrite(img_save_path, draw_img)

- logger.info('result save to {}'.format(img_save_path))

- if args.recovery:

- convert_info_docx(img, res, save_folder, img_name)

+ if not flag_pdf:

+ if img is None:

+ logger.error("error in loading image:{}".format(image_file))

+ continue

+ res, time_dict = structure_sys(img)

+

+ if structure_sys.mode == 'structure':

+ save_structure_res(res, save_folder, img_name)

+ draw_img = draw_structure_result(img, res, args.vis_font_path)

+ img_save_path = os.path.join(save_folder, img_name, 'show.jpg')

+ elif structure_sys.mode == 'kie':

+ raise NotImplementedError

+ # draw_img = draw_ser_results(img, res, args.vis_font_path)

+ # img_save_path = os.path.join(save_folder, img_name + '.jpg')

+ cv2.imwrite(img_save_path, draw_img)

+ logger.info('result save to {}'.format(img_save_path))

+ if args.recovery:

+ try:

+ from ppstructure.recovery.recovery_to_doc import sorted_layout_boxes, convert_info_docx

+ h, w, _ = img.shape

+ res = sorted_layout_boxes(res, w)

+ convert_info_docx(img, res, save_folder, img_name,

+ args.save_pdf)

+ except Exception as ex:

+ logger.error(

+ "error in layout recovery image:{}, err msg: {}".format(

+ image_file, ex))

+ continue

+ else:

+ pdf_imgs = img

+ all_res = []

+ for index, img in enumerate(pdf_imgs):

+

+ res, time_dict = structure_sys(img, index)

+ if structure_sys.mode == 'structure' and res != []:

+ save_structure_res(res, save_folder, img_name, index)

+ draw_img = draw_structure_result(img, res,

+ args.vis_font_path)

+ img_save_path = os.path.join(save_folder, img_name,

+ 'show_{}.jpg'.format(index))

+ elif structure_sys.mode == 'kie':

+ raise NotImplementedError

+ # draw_img = draw_ser_results(img, res, args.vis_font_path)

+ # img_save_path = os.path.join(save_folder, img_name + '.jpg')

+ if res != []:

+ cv2.imwrite(img_save_path, draw_img)

+ logger.info('result save to {}'.format(img_save_path))

+ if args.recovery and res != []:

+ from ppstructure.recovery.recovery_to_doc import sorted_layout_boxes, convert_info_docx

+ h, w, _ = img.shape

+ res = sorted_layout_boxes(res, w)

+ all_res += res

+

+ if args.recovery and all_res != []:

+ try:

+ convert_info_docx(img, all_res, save_folder, img_name,

+ args.save_pdf)

+ except Exception as ex:

+ logger.error(

+ "error in layout recovery image:{}, err msg: {}".format(

+ image_file, ex))

+ continue

+

logger.info("Predict time : {:.3f}s".format(time_dict['all']))

diff --git a/ppstructure/recovery/README.md b/ppstructure/recovery/README.md

index 883dbef3e829dfa213644b610af1ca279dac8641..90a6a2c3c4189dc885d698e4cac2d1a24a49d1df 100644

--- a/ppstructure/recovery/README.md

+++ b/ppstructure/recovery/README.md

@@ -6,10 +6,12 @@ English | [简体中文](README_ch.md)

- [2.1 Installation dependencies](#2.1)

- [2.2 Install PaddleOCR](#2.2)

- [3. Quick Start](#3)

+ - [3.1 Download models](#3.1)

+ - [3.2 Layout recovery](#3.2)

-## 1. Introduction

+## 1. Introduction

Layout recovery means that after OCR recognition, the content is still arranged like the original document pictures, and the paragraphs are output to word document in the same order.

@@ -17,8 +19,9 @@ Layout recovery combines [layout analysis](../layout/README.md)、[table recogni

The following figure shows the result:

-

+

-

+

tags removed

+ # Cannot use find_all as it only finds element tags and does not find text which

+ # is not inside an element

+ return ' '.join([str(i) for i in soup.contents])

+

+ def add_styles_to_paragraph(self, style):

+ if 'text-align' in style:

+ align = style['text-align']

+ if align == 'center':

+ self.paragraph.paragraph_format.alignment = WD_ALIGN_PARAGRAPH.CENTER

+ elif align == 'right':

+ self.paragraph.paragraph_format.alignment = WD_ALIGN_PARAGRAPH.RIGHT

+ elif align == 'justify':

+ self.paragraph.paragraph_format.alignment = WD_ALIGN_PARAGRAPH.JUSTIFY

+ if 'margin-left' in style:

+ margin = style['margin-left']

+ units = re.sub(r'[0-9]+', '', margin)

+ margin = int(float(re.sub(r'[a-z]+', '', margin)))

+ if units == 'px':

+ self.paragraph.paragraph_format.left_indent = Inches(min(margin // 10 * INDENT, MAX_INDENT))

+ # TODO handle non px units

+

+ def add_styles_to_run(self, style):

+ if 'color' in style:

+ if 'rgb' in style['color']:

+ color = re.sub(r'[a-z()]+', '', style['color'])

+ colors = [int(x) for x in color.split(',')]

+ elif '#' in style['color']:

+ color = style['color'].lstrip('#')

+ colors = tuple(int(color[i:i+2], 16) for i in (0, 2, 4))

+ else:

+ colors = [0, 0, 0]

+ # TODO map colors to named colors (and extended colors...)

+ # For now set color to black to prevent crashing

+ self.run.font.color.rgb = RGBColor(*colors)

+

+ if 'background-color' in style:

+ if 'rgb' in style['background-color']:

+ color = color = re.sub(r'[a-z()]+', '', style['background-color'])

+ colors = [int(x) for x in color.split(',')]

+ elif '#' in style['background-color']:

+ color = style['background-color'].lstrip('#')

+ colors = tuple(int(color[i:i+2], 16) for i in (0, 2, 4))

+ else:

+ colors = [0, 0, 0]

+ # TODO map colors to named colors (and extended colors...)

+ # For now set color to black to prevent crashing

+ self.run.font.highlight_color = WD_COLOR.GRAY_25 #TODO: map colors

+

+ def apply_paragraph_style(self, style=None):

+ try:

+ if style:

+ self.paragraph.style = style

+ elif self.paragraph_style:

+ self.paragraph.style = self.paragraph_style

+ except KeyError as e:

+ raise ValueError(f"Unable to apply style {self.paragraph_style}.") from e

+

+ def parse_dict_string(self, string, separator=';'):

+ new_string = string.replace(" ", '').split(separator)

+ string_dict = dict([x.split(':') for x in new_string if ':' in x])

+ return string_dict

+

+ def handle_li(self):

+ # check list stack to determine style and depth

+ list_depth = len(self.tags['list'])

+ if list_depth:

+ list_type = self.tags['list'][-1]

+ else:

+ list_type = 'ul' # assign unordered if no tag

+

+ if list_type == 'ol':

+ list_style = styles['LIST_NUMBER']

+ else:

+ list_style = styles['LIST_BULLET']

+

+ self.paragraph = self.doc.add_paragraph(style=list_style)

+ self.paragraph.paragraph_format.left_indent = Inches(min(list_depth * LIST_INDENT, MAX_INDENT))

+ self.paragraph.paragraph_format.line_spacing = 1

+

+ def add_image_to_cell(self, cell, image):

+ # python-docx doesn't have method yet for adding images to table cells. For now we use this

+ paragraph = cell.add_paragraph()

+ run = paragraph.add_run()

+ run.add_picture(image)

+

+ def handle_img(self, current_attrs):

+ if not self.include_images:

+ self.skip = True

+ self.skip_tag = 'img'

+ return

+ src = current_attrs['src']

+ # fetch image

+ src_is_url = is_url(src)

+ if src_is_url:

+ try:

+ image = fetch_image(src)

+ except urllib.error.URLError:

+ image = None

+ else:

+ image = src

+ # add image to doc

+ if image:

+ try:

+ if isinstance(self.doc, docx.document.Document):

+ self.doc.add_picture(image)

+ else:

+ self.add_image_to_cell(self.doc, image)

+ except FileNotFoundError:

+ image = None

+ if not image:

+ if src_is_url:

+ self.doc.add_paragraph("" % src)

+ else:

+ # avoid exposing filepaths in document

+ self.doc.add_paragraph("" % get_filename_from_url(src))

+

+

+ def handle_table(self, html):

+ """

+ To handle nested tables, we will parse tables manually as follows:

+ Get table soup

+ Create docx table

+ Iterate over soup and fill docx table with new instances of this parser

+ Tell HTMLParser to ignore any tags until the corresponding closing table tag

+ """

+ doc = Document()

+ table_soup = BeautifulSoup(html, 'html.parser')

+ rows, cols_len = self.get_table_dimensions(table_soup)

+ table = doc.add_table(len(rows), cols_len)

+ table.style = doc.styles['Table Grid']

+ cell_row = 0

+ for index, row in enumerate(rows):

+ cols = self.get_table_columns(row)

+ cell_col = 0

+ for col in cols:

+ colspan = int(col.attrs.get('colspan', 1))

+ rowspan = int(col.attrs.get('rowspan', 1))

+

+ cell_html = self.get_cell_html(col)

+

+ if col.name == 'th':

+ cell_html = "%s" % cell_html

+ docx_cell = table.cell(cell_row, cell_col)

+ while docx_cell.text != '': # Skip the merged cell

+ cell_col += 1

+ docx_cell = table.cell(cell_row, cell_col)

+

+ cell_to_merge = table.cell(cell_row + rowspan - 1, cell_col + colspan - 1)

+ if docx_cell != cell_to_merge:

+ docx_cell.merge(cell_to_merge)

+

+ child_parser = HtmlToDocx()

+ child_parser.copy_settings_from(self)

+

+ child_parser.add_html_to_cell(cell_html or ' ', docx_cell) # occupy the position

+

+ cell_col += colspan

+ cell_row += 1

+

+ # skip all tags until corresponding closing tag

+ self.instances_to_skip = len(table_soup.find_all('table'))

+ self.skip_tag = 'table'

+ self.skip = True

+ self.table = None

+ return table

+

+ def handle_link(self, href, text):

+ # Link requires a relationship

+ is_external = href.startswith('http')

+ rel_id = self.paragraph.part.relate_to(

+ href,

+ docx.opc.constants.RELATIONSHIP_TYPE.HYPERLINK,

+ is_external=True # don't support anchor links for this library yet

+ )

+

+ # Create the w:hyperlink tag and add needed values

+ hyperlink = docx.oxml.shared.OxmlElement('w:hyperlink')

+ hyperlink.set(docx.oxml.shared.qn('r:id'), rel_id)

+

+

+ # Create sub-run

+ subrun = self.paragraph.add_run()

+ rPr = docx.oxml.shared.OxmlElement('w:rPr')

+

+ # add default color

+ c = docx.oxml.shared.OxmlElement('w:color')

+ c.set(docx.oxml.shared.qn('w:val'), "0000EE")

+ rPr.append(c)

+

+ # add underline

+ u = docx.oxml.shared.OxmlElement('w:u')

+ u.set(docx.oxml.shared.qn('w:val'), 'single')

+ rPr.append(u)

+

+ subrun._r.append(rPr)

+ subrun._r.text = text

+

+ # Add subrun to hyperlink

+ hyperlink.append(subrun._r)

+

+ # Add hyperlink to run

+ self.paragraph._p.append(hyperlink)

+

+ def handle_starttag(self, tag, attrs):

+ if self.skip:

+ return

+ if tag == 'head':

+ self.skip = True

+ self.skip_tag = tag

+ self.instances_to_skip = 0

+ return

+ elif tag == 'body':

+ return

+

+ current_attrs = dict(attrs)

+

+ if tag == 'span':

+ self.tags['span'].append(current_attrs)

+ return

+ elif tag == 'ol' or tag == 'ul':

+ self.tags['list'].append(tag)

+ return # don't apply styles for now

+ elif tag == 'br':

+ self.run.add_break()

+ return

+

+ self.tags[tag] = current_attrs

+ if tag in ['p', 'pre']:

+ self.paragraph = self.doc.add_paragraph()

+ self.apply_paragraph_style()

+

+ elif tag == 'li':

+ self.handle_li()

+

+ elif tag == "hr":

+

+ # This implementation was taken from:

+ # https://github.com/python-openxml/python-docx/issues/105#issuecomment-62806373

+

+ self.paragraph = self.doc.add_paragraph()

+ pPr = self.paragraph._p.get_or_add_pPr()

+ pBdr = OxmlElement('w:pBdr')

+ pPr.insert_element_before(pBdr,

+ 'w:shd', 'w:tabs', 'w:suppressAutoHyphens', 'w:kinsoku', 'w:wordWrap',

+ 'w:overflowPunct', 'w:topLinePunct', 'w:autoSpaceDE', 'w:autoSpaceDN',

+ 'w:bidi', 'w:adjustRightInd', 'w:snapToGrid', 'w:spacing', 'w:ind',

+ 'w:contextualSpacing', 'w:mirrorIndents', 'w:suppressOverlap', 'w:jc',

+ 'w:textDirection', 'w:textAlignment', 'w:textboxTightWrap',

+ 'w:outlineLvl', 'w:divId', 'w:cnfStyle', 'w:rPr', 'w:sectPr',

+ 'w:pPrChange'

+ )

+ bottom = OxmlElement('w:bottom')

+ bottom.set(qn('w:val'), 'single')

+ bottom.set(qn('w:sz'), '6')

+ bottom.set(qn('w:space'), '1')

+ bottom.set(qn('w:color'), 'auto')

+ pBdr.append(bottom)

+

+ elif re.match('h[1-9]', tag):

+ if isinstance(self.doc, docx.document.Document):

+ h_size = int(tag[1])

+ self.paragraph = self.doc.add_heading(level=min(h_size, 9))

+ else:

+ self.paragraph = self.doc.add_paragraph()

+

+ elif tag == 'img':

+ self.handle_img(current_attrs)

+ return

+

+ elif tag == 'table':

+ self.handle_table()

+ return

+

+ # set new run reference point in case of leading line breaks

+ if tag in ['p', 'li', 'pre']:

+ self.run = self.paragraph.add_run()

+

+ # add style

+ if not self.include_styles:

+ return

+ if 'style' in current_attrs and self.paragraph:

+ style = self.parse_dict_string(current_attrs['style'])

+ self.add_styles_to_paragraph(style)

+

+ def handle_endtag(self, tag):

+ if self.skip:

+ if not tag == self.skip_tag:

+ return

+

+ if self.instances_to_skip > 0:

+ self.instances_to_skip -= 1

+ return

+

+ self.skip = False

+ self.skip_tag = None

+ self.paragraph = None

+

+ if tag == 'span':

+ if self.tags['span']:

+ self.tags['span'].pop()

+ return

+ elif tag == 'ol' or tag == 'ul':

+ remove_last_occurence(self.tags['list'], tag)

+ return

+ elif tag == 'table':

+ self.table_no += 1

+ self.table = None

+ self.doc = self.document

+ self.paragraph = None

+

+ if tag in self.tags:

+ self.tags.pop(tag)

+ # maybe set relevant reference to None?

+

+ def handle_data(self, data):

+ if self.skip:

+ return

+

+ # Only remove white space if we're not in a pre block.

+ if 'pre' not in self.tags:

+ # remove leading and trailing whitespace in all instances

+ data = remove_whitespace(data, True, True)

+

+ if not self.paragraph:

+ self.paragraph = self.doc.add_paragraph()

+ self.apply_paragraph_style()

+

+ # There can only be one nested link in a valid html document

+ # You cannot have interactive content in an A tag, this includes links

+ # https://html.spec.whatwg.org/#interactive-content

+ link = self.tags.get('a')

+ if link:

+ self.handle_link(link['href'], data)

+ else:

+ # If there's a link, dont put the data directly in the run

+ self.run = self.paragraph.add_run(data)

+ spans = self.tags['span']

+ for span in spans:

+ if 'style' in span:

+ style = self.parse_dict_string(span['style'])

+ self.add_styles_to_run(style)

+

+ # add font style and name

+ for tag in self.tags:

+ if tag in font_styles:

+ font_style = font_styles[tag]

+ setattr(self.run.font, font_style, True)

+

+ if tag in font_names:

+ font_name = font_names[tag]

+ self.run.font.name = font_name

+

+ def ignore_nested_tables(self, tables_soup):

+ """

+ Returns array containing only the highest level tables

+ Operates on the assumption that bs4 returns child elements immediately after

+ the parent element in `find_all`. If this changes in the future, this method will need to be updated

+ :return:

+ """

+ new_tables = []

+ nest = 0

+ for table in tables_soup:

+ if nest:

+ nest -= 1

+ continue

+ new_tables.append(table)

+ nest = len(table.find_all('table'))

+ return new_tables

+

+ def get_table_rows(self, table_soup):

+ # If there's a header, body, footer or direct child tr tags, add row dimensions from there

+ return table_soup.select(', '.join(self.table_row_selectors), recursive=False)

+

+ def get_table_columns(self, row):

+ # Get all columns for the specified row tag.

+ return row.find_all(['th', 'td'], recursive=False) if row else []

+

+ def get_table_dimensions(self, table_soup):

+ # Get rows for the table

+ rows = self.get_table_rows(table_soup)

+ # Table is either empty or has non-direct children between table and tr tags

+ # Thus the row dimensions and column dimensions are assumed to be 0

+

+ cols = self.get_table_columns(rows[0]) if rows else []

+ # Add colspan calculation column number

+ col_count = 0

+ for col in cols:

+ colspan = col.attrs.get('colspan', 1)

+ col_count += int(colspan)

+

+ # return len(rows), col_count

+ return rows, col_count

+

+ def get_tables(self):

+ if not hasattr(self, 'soup'):

+ self.include_tables = False

+ return

+ # find other way to do it, or require this dependency?

+ self.tables = self.ignore_nested_tables(self.soup.find_all('table'))

+ self.table_no = 0

+

+ def run_process(self, html):

+ if self.bs and BeautifulSoup:

+ self.soup = BeautifulSoup(html, 'html.parser')

+ html = str(self.soup)

+ if self.include_tables:

+ self.get_tables()

+ self.feed(html)

+

+ def add_html_to_document(self, html, document):

+ if not isinstance(html, str):

+ raise ValueError('First argument needs to be a %s' % str)

+ elif not isinstance(document, docx.document.Document) and not isinstance(document, docx.table._Cell):

+ raise ValueError('Second argument needs to be a %s' % docx.document.Document)

+ self.set_initial_attrs(document)

+ self.run_process(html)

+

+ def add_html_to_cell(self, html, cell):

+ self.set_initial_attrs(cell)

+ self.run_process(html)

+

+ def parse_html_file(self, filename_html, filename_docx=None):

+ with open(filename_html, 'r') as infile:

+ html = infile.read()

+ self.set_initial_attrs()

+ self.run_process(html)

+ if not filename_docx:

+ path, filename = os.path.split(filename_html)

+ filename_docx = '%s/new_docx_file_%s' % (path, filename)

+ self.doc.save('%s.docx' % filename_docx)

+

+ def parse_html_string(self, html):

+ self.set_initial_attrs()

+ self.run_process(html)

+ return self.doc

\ No newline at end of file

diff --git a/ppstructure/table/README.md b/ppstructure/table/README.md

index 3732a89c54b3686a6d8cf390d3b9043826c4f459..e5c85eb9619ea92cd8b31041907d518eeceaf6a5 100644

--- a/ppstructure/table/README.md

+++ b/ppstructure/table/README.md

@@ -33,8 +33,8 @@ We evaluated the algorithm on the PubTabNet[1] eval dataset, and the

|Method|Acc|[TEDS(Tree-Edit-Distance-based Similarity)](https://github.com/ibm-aur-nlp/PubTabNet/tree/master/src)|Speed|

| --- | --- | --- | ---|

| EDD[2] |x| 88.3 |x|

-| TableRec-RARE(ours) |73.8%| 95.3% |1550ms|

-| SLANet(ours) | 76.2%| 95.85% |766ms|

+| TableRec-RARE(ours) | 71.73%| 93.88% |779ms|

+| SLANet(ours) | 76.31%| 95.89%|766ms|

The performance indicators are explained as follows:

- Acc: The accuracy of the table structure in each image, a wrong token is considered an error.

@@ -59,16 +59,16 @@ cd PaddleOCR/ppstructure

# download model

mkdir inference && cd inference

# Download the PP-OCRv3 text detection model and unzip it

-wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_slim_infer.tar && tar xf ch_PP-OCRv3_det_slim_infer.tar

+wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_infer.tar && tar xf ch_PP-OCRv3_det_infer.tar

# Download the PP-OCRv3 text recognition model and unzip it

-wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_slim_infer.tar && tar xf ch_PP-OCRv3_rec_slim_infer.tar

+wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_infer.tar && tar xf ch_PP-OCRv3_rec_infer.tar

# Download the PP-Structurev2 form recognition model and unzip it

wget https://paddleocr.bj.bcebos.com/ppstructure/models/slanet/ch_ppstructure_mobile_v2.0_SLANet_infer.tar && tar xf ch_ppstructure_mobile_v2.0_SLANet_infer.tar

cd ..

# run

python3.7 table/predict_table.py \

- --det_model_dir=inference/ch_PP-OCRv3_det_slim_infer \

- --rec_model_dir=inference/ch_PP-OCRv3_rec_slim_infer \

+ --det_model_dir=inference/ch_PP-OCRv3_det_infer \

+ --rec_model_dir=inference/ch_PP-OCRv3_rec_infer \

--table_model_dir=inference/ch_ppstructure_mobile_v2.0_SLANet_infer \

--rec_char_dict_path=../ppocr/utils/ppocr_keys_v1.txt \

--table_char_dict_path=../ppocr/utils/dict/table_structure_dict_ch.txt \

diff --git a/ppstructure/table/README_ch.md b/ppstructure/table/README_ch.md

index cc73f8bcec727f6eff1bf412fb877373d405e489..086e39348e96abe4320debef1cc11487694ccd49 100644

--- a/ppstructure/table/README_ch.md

+++ b/ppstructure/table/README_ch.md

@@ -39,8 +39,8 @@

|算法|Acc|[TEDS(Tree-Edit-Distance-based Similarity)](https://github.com/ibm-aur-nlp/PubTabNet/tree/master/src)|Speed|

| --- | --- | --- | ---|

| EDD[2] |x| 88.3% |x|

-| TableRec-RARE(ours) |73.8%| 95.3% |1550ms|

-| SLANet(ours) | 76.2%| 95.85% |766ms|

+| TableRec-RARE(ours) | 71.73%| 93.88% |779ms|

+| SLANet(ours) |76.31%| 95.89%|766ms|

性能指标解释如下:

- Acc: 模型对每张图像里表格结构的识别准确率,错一个token就算错误。

@@ -64,16 +64,16 @@ cd PaddleOCR/ppstructure

# 下载模型

mkdir inference && cd inference

# 下载PP-OCRv3文本检测模型并解压

-wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_slim_infer.tar && tar xf ch_PP-OCRv3_det_slim_infer.tar

+wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_infer.tar && tar xf ch_PP-OCRv3_det_infer.tar

# 下载PP-OCRv3文本识别模型并解压

-wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_slim_infer.tar && tar xf ch_PP-OCRv3_rec_slim_infer.tar

+wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_infer.tar && tar xf ch_PP-OCRv3_rec_infer.tar

# 下载PP-Structurev2表格识别模型并解压

wget https://paddleocr.bj.bcebos.com/ppstructure/models/slanet/ch_ppstructure_mobile_v2.0_SLANet_infer.tar && tar xf ch_ppstructure_mobile_v2.0_SLANet_infer.tar

cd ..

# 执行表格识别

python table/predict_table.py \

- --det_model_dir=inference/ch_PP-OCRv3_det_slim_infer \

- --rec_model_dir=inference/ch_PP-OCRv3_rec_slim_infer \

+ --det_model_dir=inference/ch_PP-OCRv3_det_infer \

+ --rec_model_dir=inference/ch_PP-OCRv3_rec_infer \

--table_model_dir=inference/ch_ppstructure_mobile_v2.0_SLANet_infer \

--rec_char_dict_path=../ppocr/utils/ppocr_keys_v1.txt \

--table_char_dict_path=../ppocr/utils/dict/table_structure_dict_ch.txt \

diff --git a/ppstructure/table/predict_structure.py b/ppstructure/table/predict_structure.py

index a580947aad428a0744e3da4b8302f047c6b11bee..45cbba3e298004d3711b05e6fb7cffecae637601 100755

--- a/ppstructure/table/predict_structure.py

+++ b/ppstructure/table/predict_structure.py

@@ -29,7 +29,7 @@ import tools.infer.utility as utility

from ppocr.data import create_operators, transform

from ppocr.postprocess import build_post_process

from ppocr.utils.logging import get_logger

-from ppocr.utils.utility import get_image_file_list, check_and_read_gif

+from ppocr.utils.utility import get_image_file_list, check_and_read

from ppocr.utils.visual import draw_rectangle

from ppstructure.utility import parse_args

@@ -133,7 +133,7 @@ def main(args):

os.path.join(args.output, 'infer.txt'), mode='w',

encoding='utf-8') as f_w:

for image_file in image_file_list:

- img, flag = check_and_read_gif(image_file)

+ img, flag, _ = check_and_read(image_file)

if not flag:

img = cv2.imread(image_file)

if img is None:

diff --git a/ppstructure/table/predict_table.py b/ppstructure/table/predict_table.py

index e94347d86144cd66474546e99a2c9dffee4978d9..aeec66deca62f648df249a5833dbfa678d2da612 100644

--- a/ppstructure/table/predict_table.py

+++ b/ppstructure/table/predict_table.py

@@ -31,7 +31,7 @@ import tools.infer.predict_rec as predict_rec

import tools.infer.predict_det as predict_det

import tools.infer.utility as utility

from tools.infer.predict_system import sorted_boxes

-from ppocr.utils.utility import get_image_file_list, check_and_read_gif

+from ppocr.utils.utility import get_image_file_list, check_and_read

from ppocr.utils.logging import get_logger

from ppstructure.table.matcher import TableMatch

from ppstructure.table.table_master_match import TableMasterMatcher

@@ -194,7 +194,7 @@ def main(args):

for i, image_file in enumerate(image_file_list):

logger.info("[{}/{}] {}".format(i, img_num, image_file))

- img, flag = check_and_read_gif(image_file)

+ img, flag, _ = check_and_read(image_file)

excel_path = os.path.join(

args.output, os.path.basename(image_file).split('.')[0] + '.xlsx')

if not flag:

diff --git a/ppstructure/table/table_metric/table_metric.py b/ppstructure/table/table_metric/table_metric.py

index 9aca98ad785d4614a803fa5a277a6e4a27b3b078..923a9c0071d083de72a2a896d6f62037373d4e73 100755

--- a/ppstructure/table/table_metric/table_metric.py

+++ b/ppstructure/table/table_metric/table_metric.py

@@ -9,7 +9,7 @@

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

# Apache 2.0 License for more details.

-import distance

+from rapidfuzz.distance import Levenshtein

from apted import APTED, Config

from apted.helpers import Tree

from lxml import etree, html

@@ -39,17 +39,6 @@ class TableTree(Tree):

class CustomConfig(Config):

- @staticmethod

- def maximum(*sequences):

- """Get maximum possible value

- """

- return max(map(len, sequences))

-

- def normalized_distance(self, *sequences):

- """Get distance from 0 to 1

- """

- return float(distance.levenshtein(*sequences)) / self.maximum(*sequences)

-

def rename(self, node1, node2):

"""Compares attributes of trees"""

#print(node1.tag)

@@ -58,23 +47,12 @@ class CustomConfig(Config):

if node1.tag == 'td':

if node1.content or node2.content:

#print(node1.content, )

- return self.normalized_distance(node1.content, node2.content)

+ return Levenshtein.normalized_distance(node1.content, node2.content)

return 0.

class CustomConfig_del_short(Config):

- @staticmethod

- def maximum(*sequences):

- """Get maximum possible value

- """

- return max(map(len, sequences))

-

- def normalized_distance(self, *sequences):

- """Get distance from 0 to 1

- """

- return float(distance.levenshtein(*sequences)) / self.maximum(*sequences)

-

def rename(self, node1, node2):

"""Compares attributes of trees"""

if (node1.tag != node2.tag) or (node1.colspan != node2.colspan) or (node1.rowspan != node2.rowspan):

@@ -90,21 +68,10 @@ class CustomConfig_del_short(Config):

node1_content = ['####']

if len(node2_content) < 3:

node2_content = ['####']

- return self.normalized_distance(node1_content, node2_content)

+ return Levenshtein.normalized_distance(node1_content, node2_content)

return 0.

class CustomConfig_del_block(Config):

- @staticmethod

- def maximum(*sequences):

- """Get maximum possible value

- """

- return max(map(len, sequences))

-

- def normalized_distance(self, *sequences):

- """Get distance from 0 to 1

- """

- return float(distance.levenshtein(*sequences)) / self.maximum(*sequences)

-

def rename(self, node1, node2):

"""Compares attributes of trees"""

if (node1.tag != node2.tag) or (node1.colspan != node2.colspan) or (node1.rowspan != node2.rowspan):

@@ -120,7 +87,7 @@ class CustomConfig_del_block(Config):

while ' ' in node2_content:

print(node2_content.index(' '))

node2_content.pop(node2_content.index(' '))

- return self.normalized_distance(node1_content, node2_content)

+ return Levenshtein.normalized_distance(node1_content, node2_content)

return 0.

class TEDS(object):

diff --git a/ppstructure/utility.py b/ppstructure/utility.py

index 625185e6f5b090641befc35b3b4980c331687cff..bdea0af69e37e15d1f191b2a86c036ae1c2b1e45 100644

--- a/ppstructure/utility.py

+++ b/ppstructure/utility.py

@@ -38,7 +38,7 @@ def init_args():

parser.add_argument(

"--layout_dict_path",

type=str,

- default="../ppocr/utils/dict/layout_dict/layout_pubalynet_dict.txt")

+ default="../ppocr/utils/dict/layout_dict/layout_publaynet_dict.txt")

parser.add_argument(

"--layout_score_threshold",

type=float,

@@ -49,8 +49,8 @@ def init_args():

type=float,

default=0.5,

help="Threshold of nms.")

- # params for vqa

- parser.add_argument("--vqa_algorithm", type=str, default='LayoutXLM')

+ # params for kie

+ parser.add_argument("--kie_algorithm", type=str, default='LayoutXLM')

parser.add_argument("--ser_model_dir", type=str)

parser.add_argument(

"--ser_dict_path",

@@ -63,7 +63,7 @@ def init_args():

"--mode",

type=str,

default='structure',

- help='structure and vqa is supported')

+ help='structure and kie is supported')

parser.add_argument(

"--image_orientation",

type=bool,

@@ -84,11 +84,18 @@ def init_args():

type=str2bool,

default=True,

help='In the forward, whether the non-table area is recognition by ocr')

+ # param for recovery

parser.add_argument(

"--recovery",

- type=bool,

+ type=str2bool,

default=False,

help='Whether to enable layout of recovery')

+ parser.add_argument(

+ "--save_pdf",

+ type=str2bool,

+ default=False,

+ help='Whether to save pdf file')

+

return parser

diff --git a/ppstructure/vqa/README.md b/ppstructure/vqa/README.md

deleted file mode 100644

index 28b794383bceccf655bdf00df5ee0c98841e2e95..0000000000000000000000000000000000000000

--- a/ppstructure/vqa/README.md

+++ /dev/null

@@ -1,285 +0,0 @@

-English | [简体中文](README_ch.md)

-

-- [1 Introduction](#1-introduction)

-- [2. Performance](#2-performance)

-- [3. Effect demo](#3-effect-demo)

- - [3.1 SER](#31-ser)

- - [3.2 RE](#32-re)

-- [4. Install](#4-install)

- - [4.1 Install dependencies](#41-install-dependencies)

- - [5.3 RE](#53-re)

-- [6. Reference Links](#6-reference-links)

-- [License](#license)

-

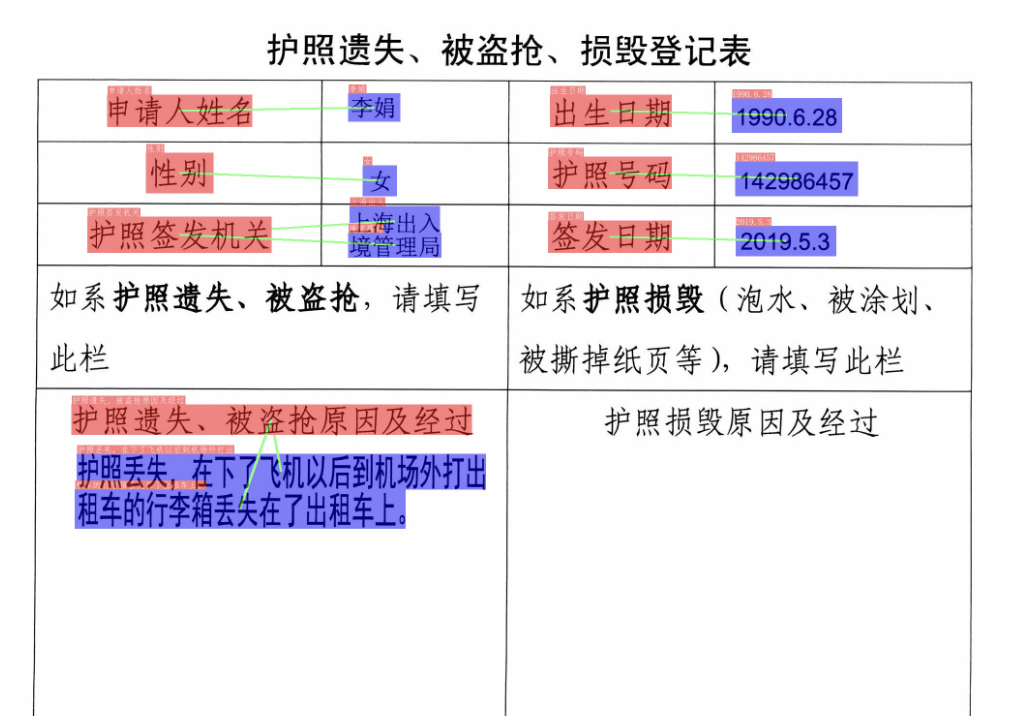

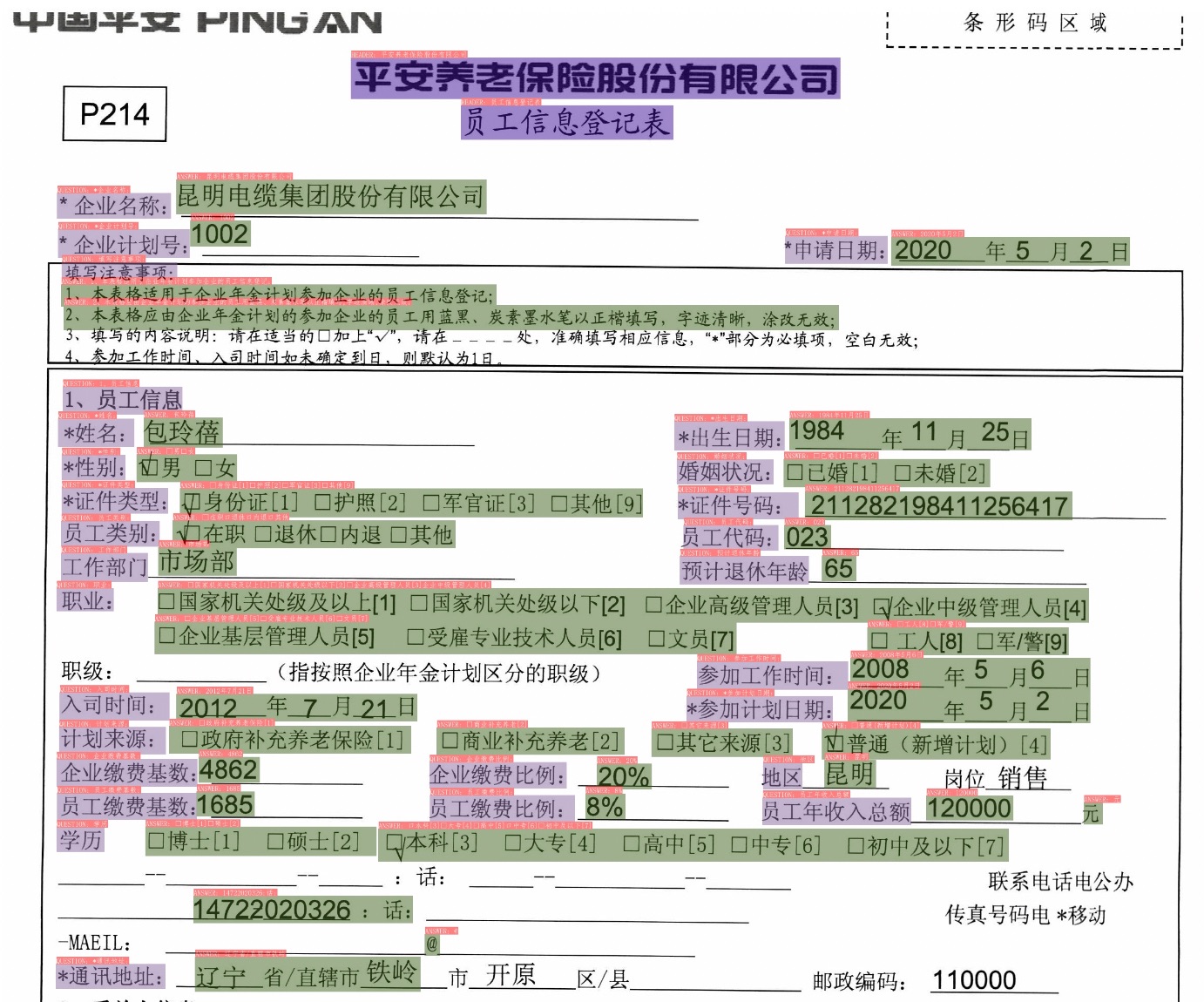

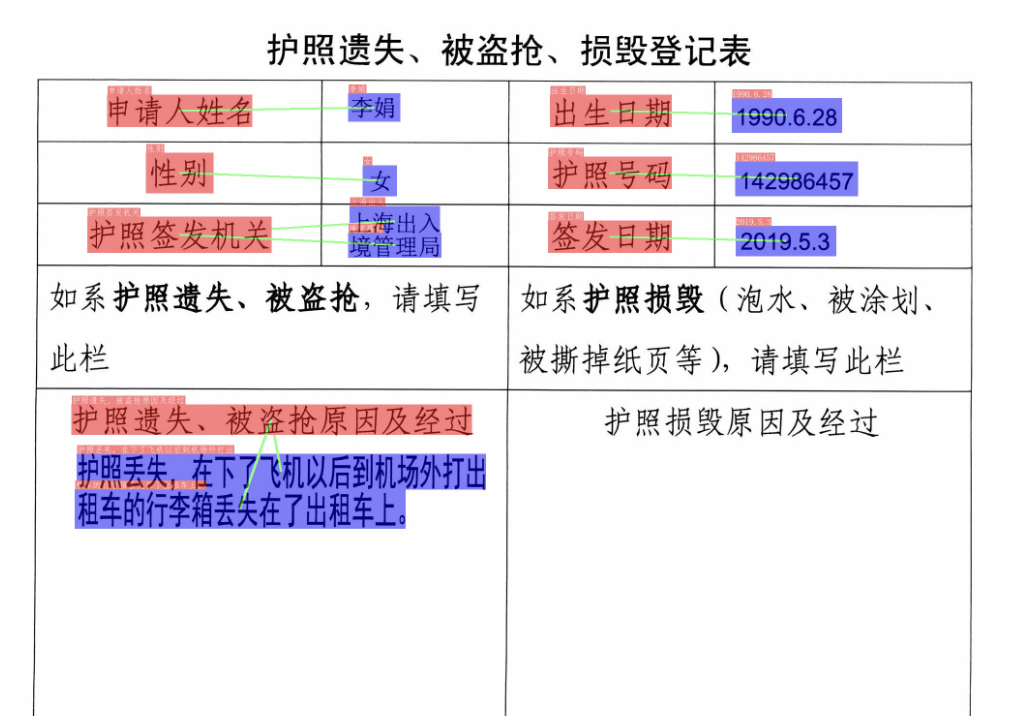

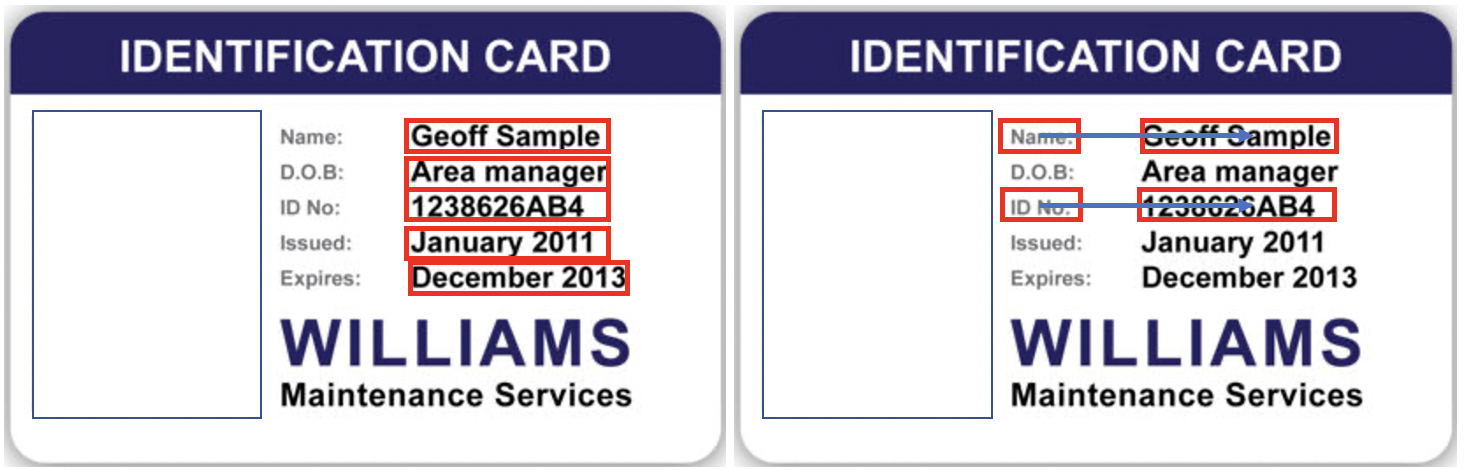

-# Document Visual Question Answering

-

-## 1 Introduction

-

-VQA refers to visual question answering, which mainly asks and answers image content. DOC-VQA is one of the VQA tasks. DOC-VQA mainly asks questions about the text content of text images.

-

-The DOC-VQA algorithm in PP-Structure is developed based on the PaddleNLP natural language processing algorithm library.

-

-The main features are as follows:

-

-- Integrate [LayoutXLM](https://arxiv.org/pdf/2104.08836.pdf) model and PP-OCR prediction engine.

-- Supports Semantic Entity Recognition (SER) and Relation Extraction (RE) tasks based on multimodal methods. Based on the SER task, the text recognition and classification in the image can be completed; based on the RE task, the relationship extraction of the text content in the image can be completed, such as judging the problem pair (pair).

-- Supports custom training for SER tasks and RE tasks.

-- Supports end-to-end system prediction and evaluation of OCR+SER.

-- Supports end-to-end system prediction of OCR+SER+RE.

-

-

-This project is an open source implementation of [LayoutXLM: Multimodal Pre-training for Multilingual Visually-rich Document Understanding](https://arxiv.org/pdf/2104.08836.pdf) on Paddle 2.2,

-Included fine-tuning code on [XFUND dataset](https://github.com/doc-analysis/XFUND).

-

-## 2. Performance

-

-We evaluate the algorithm on the Chinese dataset of [XFUND](https://github.com/doc-analysis/XFUND), and the performance is as follows

-

-| Model | Task | hmean | Model download address |

-|:---:|:---:|:---:| :---:|

-| LayoutXLM | SER | 0.9038 | [link](https://paddleocr.bj.bcebos.com/pplayout/ser_LayoutXLM_xfun_zh.tar) |

-| LayoutXLM | RE | 0.7483 | [link](https://paddleocr.bj.bcebos.com/pplayout/re_LayoutXLM_xfun_zh.tar) |

-| LayoutLMv2 | SER | 0.8544 | [link](https://paddleocr.bj.bcebos.com/pplayout/ser_LayoutLMv2_xfun_zh.tar)

-| LayoutLMv2 | RE | 0.6777 | [link](https://paddleocr.bj.bcebos.com/pplayout/re_LayoutLMv2_xfun_zh.tar) |

-| LayoutLM | SER | 0.7731 | [link](https://paddleocr.bj.bcebos.com/pplayout/ser_LayoutLM_xfun_zh.tar) |

-

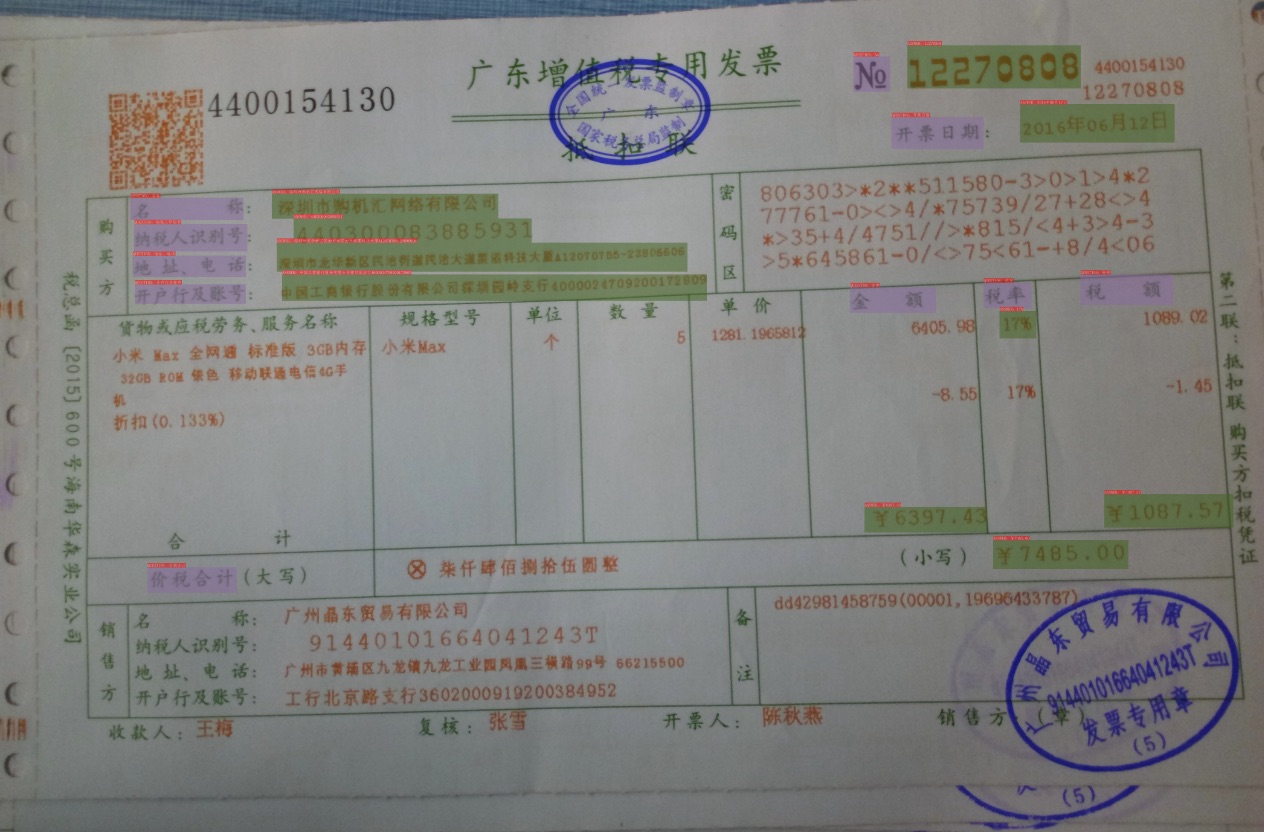

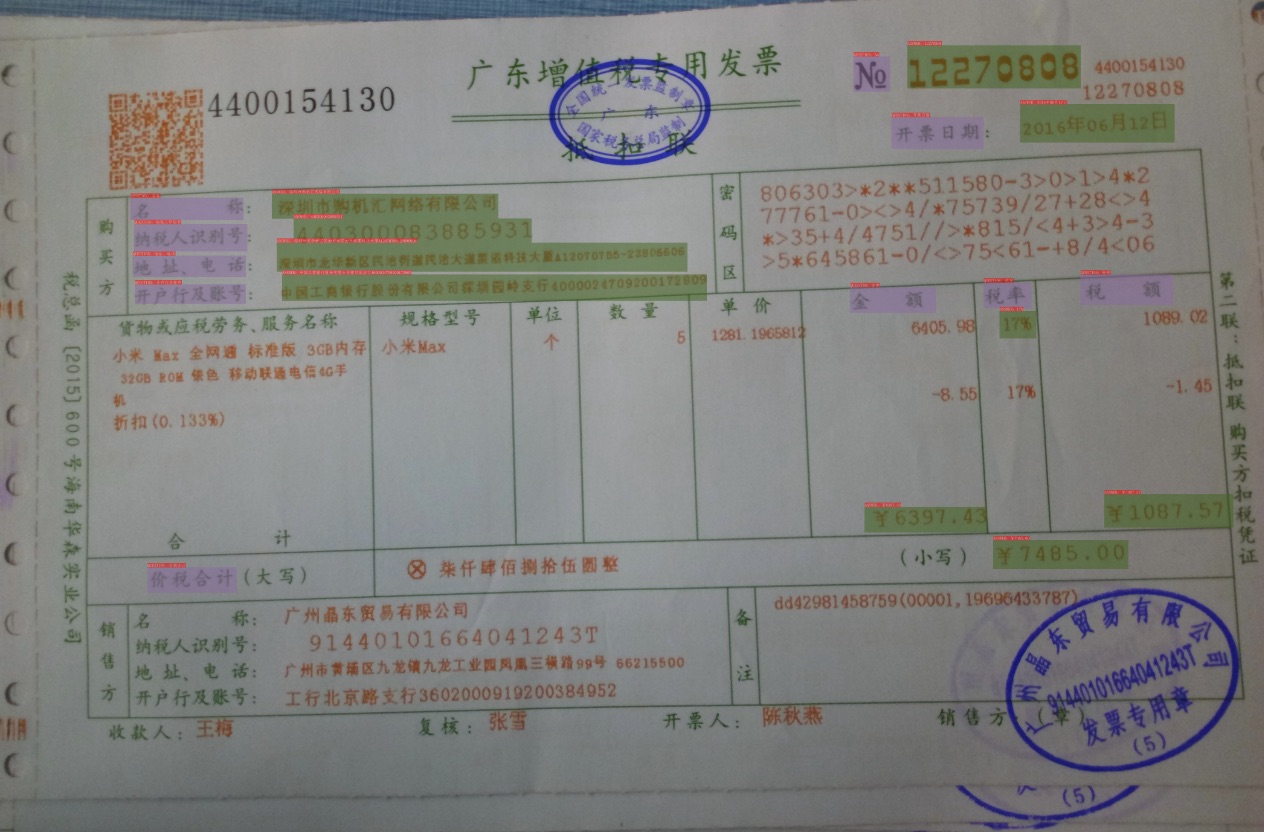

-## 3. Effect demo

-

-**Note:** The test images are from the XFUND dataset.

-

-

-### 3.1 SER

-

- |

----|---

-

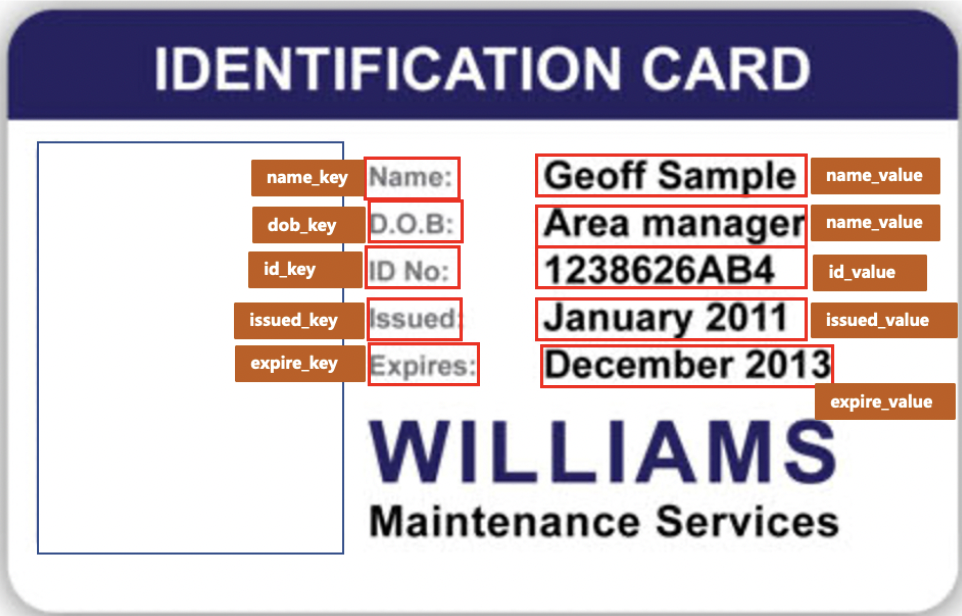

-Boxes with different colors in the figure represent different categories. For the XFUND dataset, there are 3 categories: `QUESTION`, `ANSWER`, `HEADER`

-

-* Dark purple: HEADER

-* Light purple: QUESTION

-* Army Green: ANSWER

-

-The corresponding categories and OCR recognition results are also marked on the upper left of the OCR detection frame.

-

-

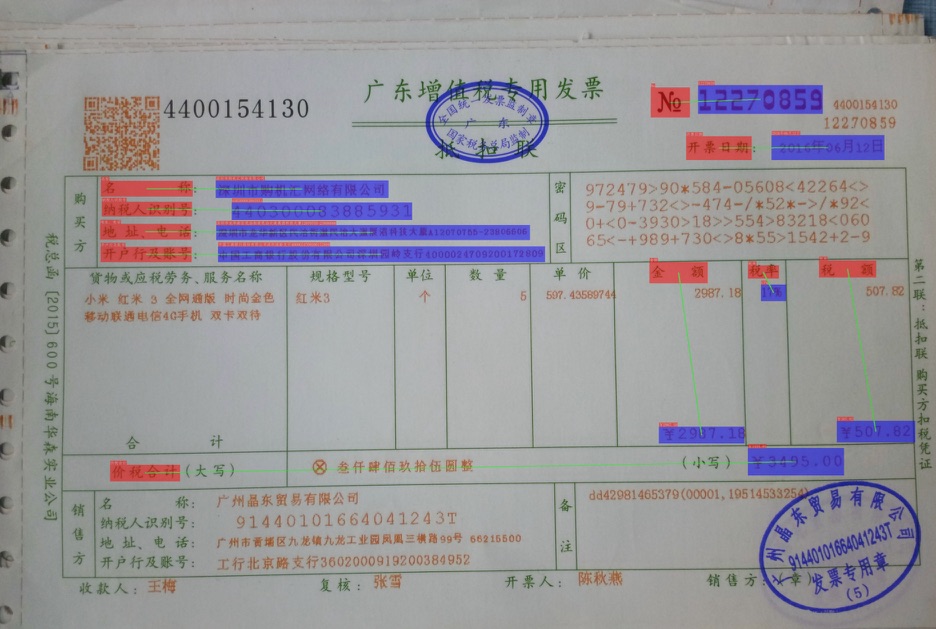

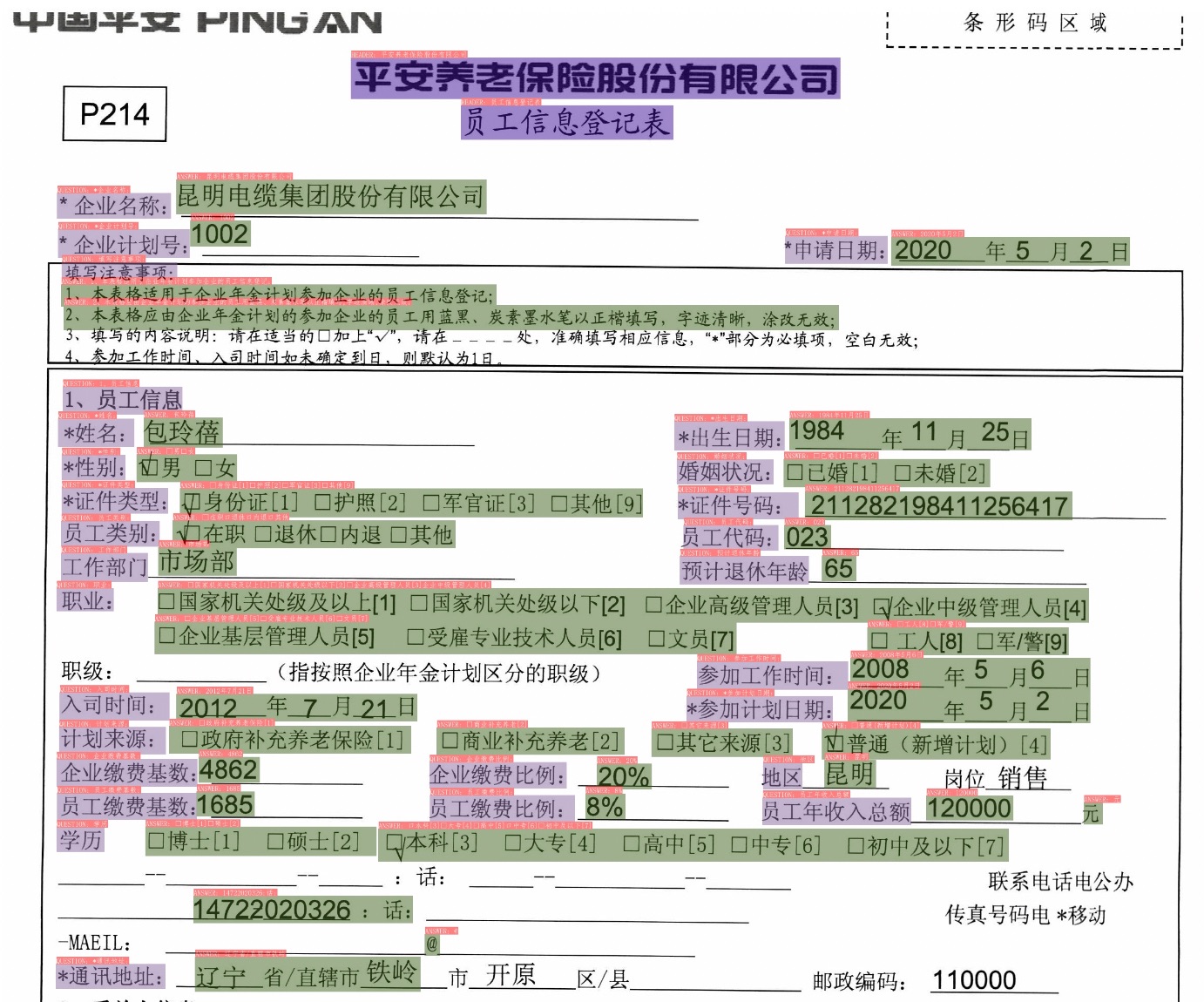

-### 3.2 RE

-

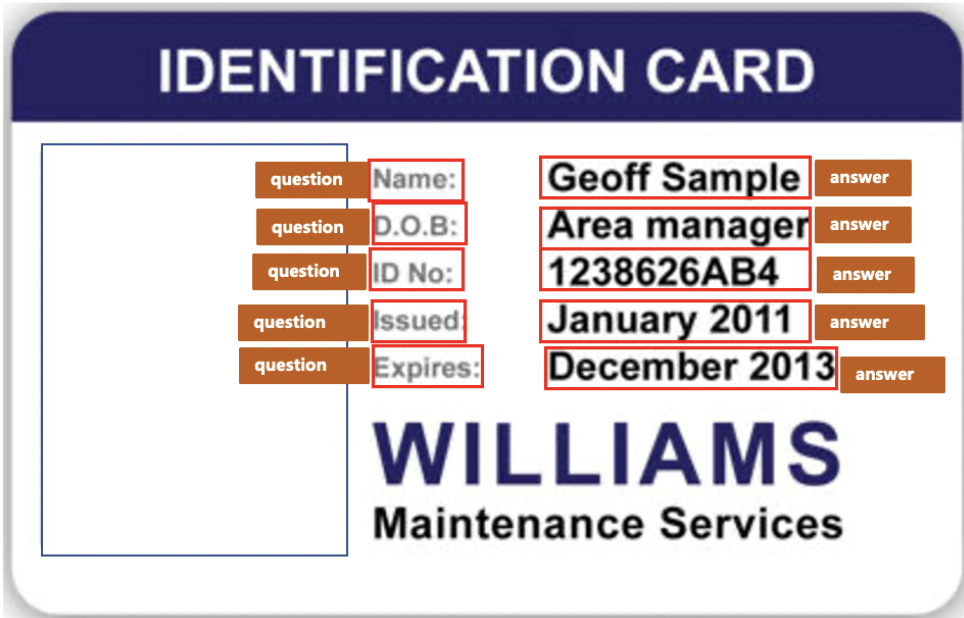

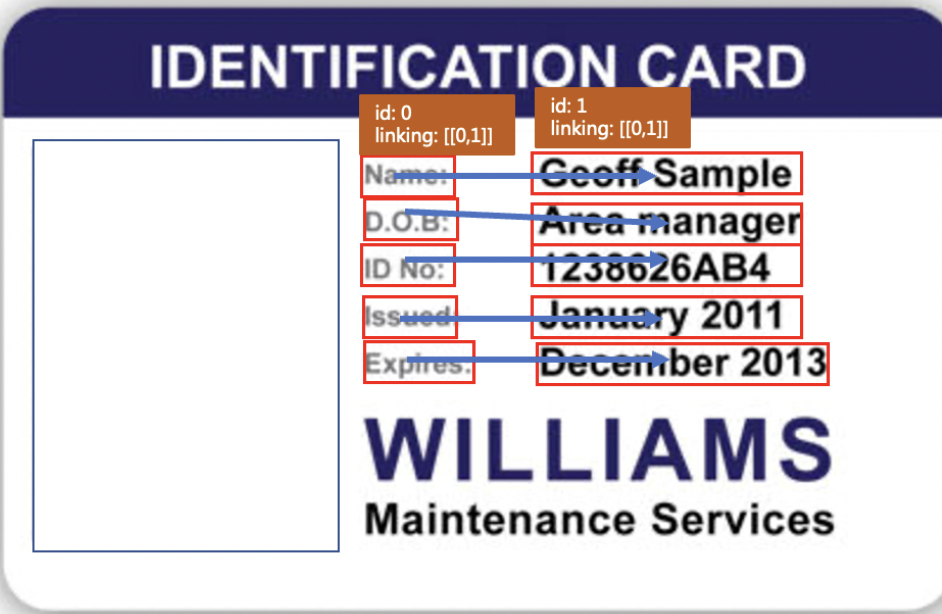

- |

----|---

-

-

-The red box in the figure represents the question, the blue box represents the answer, and the question and the answer are connected by a green line. The corresponding categories and OCR recognition results are also marked on the upper left of the OCR detection frame.

-

-## 4. Install

-

-### 4.1 Install dependencies

-

-- **(1) Install PaddlePaddle**

-

-```bash

-python3 -m pip install --upgrade pip

-

-# GPU installation

-python3 -m pip install "paddlepaddle-gpu>=2.2" -i https://mirror.baidu.com/pypi/simple

-

-# CPU installation

-python3 -m pip install "paddlepaddle>=2.2" -i https://mirror.baidu.com/pypi/simple

-

-````

-For more requirements, please refer to the instructions in [Installation Documentation](https://www.paddlepaddle.org.cn/install/quick).

-

-### 4.2 Install PaddleOCR

-

-- **(1) pip install PaddleOCR whl package quickly (prediction only)**

-

-```bash

-python3 -m pip install paddleocr

-````

-

-- **(2) Download VQA source code (prediction + training)**

-

-```bash

-[Recommended] git clone https://github.com/PaddlePaddle/PaddleOCR

-

-# If the pull cannot be successful due to network problems, you can also choose to use the hosting on the code cloud:

-git clone https://gitee.com/paddlepaddle/PaddleOCR

-

-# Note: Code cloud hosting code may not be able to synchronize the update of this github project in real time, there is a delay of 3 to 5 days, please use the recommended method first.

-````

-

-- **(3) Install VQA's `requirements`**

-

-```bash

-python3 -m pip install -r ppstructure/vqa/requirements.txt

-````

-

-## 5. Usage

-

-### 5.1 Data and Model Preparation

-

-If you want to experience the prediction process directly, you can download the pre-training model provided by us, skip the training process, and just predict directly.

-

-* Download the processed dataset

-

-The download address of the processed XFUND Chinese dataset: [link](https://paddleocr.bj.bcebos.com/ppstructure/dataset/XFUND.tar).

-

-

-Download and unzip the dataset, and place the dataset in the current directory after unzipping.

-

-```shell

-wget https://paddleocr.bj.bcebos.com/ppstructure/dataset/XFUND.tar

-````

-

-* Convert the dataset

-

-If you need to train other XFUND datasets, you can use the following commands to convert the datasets

-

-```bash

-python3 ppstructure/vqa/tools/trans_xfun_data.py --ori_gt_path=path/to/json_path --output_path=path/to/save_path

-````

-

-* Download the pretrained models

-```bash

-mkdir pretrain && cd pretrain

-#download the SER model

-wget https://paddleocr.bj.bcebos.com/pplayout/ser_LayoutXLM_xfun_zh.tar && tar -xvf ser_LayoutXLM_xfun_zh.tar

-#download the RE model

-wget https://paddleocr.bj.bcebos.com/pplayout/re_LayoutXLM_xfun_zh.tar && tar -xvf re_LayoutXLM_xfun_zh.tar

-cd ../

-````

-

-

-### 5.2 SER

-

-Before starting training, you need to modify the following four fields

-

-1. `Train.dataset.data_dir`: point to the directory where the training set images are stored

-2. `Train.dataset.label_file_list`: point to the training set label file

-3. `Eval.dataset.data_dir`: refers to the directory where the validation set images are stored

-4. `Eval.dataset.label_file_list`: point to the validation set label file

-

-* start training

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/train.py -c configs/vqa/ser/layoutxlm.yml

-````

-

-Finally, `precision`, `recall`, `hmean` and other indicators will be printed.

-In the `./output/ser_layoutxlm/` folder will save the training log, the optimal model and the model for the latest epoch.

-

-* resume training

-

-To resume training, assign the folder path of the previously trained model to the `Architecture.Backbone.checkpoints` field.

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/train.py -c configs/vqa/ser/layoutxlm.yml -o Architecture.Backbone.checkpoints=path/to/model_dir

-````

-

-* evaluate

-

-Evaluation requires assigning the folder path of the model to be evaluated to the `Architecture.Backbone.checkpoints` field.

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/eval.py -c configs/vqa/ser/layoutxlm.yml -o Architecture.Backbone.checkpoints=path/to/model_dir

-````

-Finally, `precision`, `recall`, `hmean` and other indicators will be printed

-

-* `OCR + SER` tandem prediction based on training engine

-

-Use the following command to complete the series prediction of `OCR engine + SER`, taking the SER model based on LayoutXLM as an example::

-

-```shell

-python3.7 tools/export_model.py -c configs/vqa/ser/layoutxlm.yml -o Architecture.Backbone.checkpoints=pretrain/ser_LayoutXLM_xfun_zh/ Global.save_inference_dir=output/ser/infer

-````

-

-Finally, the prediction result visualization image and the prediction result text file will be saved in the directory configured by the `config.Global.save_res_path` field. The prediction result text file is named `infer_results.txt`.

-

-* End-to-end evaluation of `OCR + SER` prediction system

-

-First use the `tools/infer_vqa_token_ser.py` script to complete the prediction of the dataset, then use the following command to evaluate.

-

-```shell

-export CUDA_VISIBLE_DEVICES=0

-python3 tools/eval_with_label_end2end.py --gt_json_path XFUND/zh_val/xfun_normalize_val.json --pred_json_path output_res/infer_results.txt

-````

-* export model

-

-Use the following command to complete the model export of the SER model, taking the SER model based on LayoutXLM as an example:

-

-```shell

-python3.7 tools/export_model.py -c configs/vqa/ser/layoutxlm.yml -o Architecture.Backbone.checkpoints=pretrain/ser_LayoutXLM_xfun_zh/ Global.save_inference_dir=output/ser/infer

-```

-The converted model will be stored in the directory specified by the `Global.save_inference_dir` field.

-

-* `OCR + SER` tandem prediction based on prediction engine

-

-Use the following command to complete the tandem prediction of `OCR + SER` based on the prediction engine, taking the SER model based on LayoutXLM as an example:

-

-```shell

-cd ppstructure

-CUDA_VISIBLE_DEVICES=0 python3.7 vqa/predict_vqa_token_ser.py --vqa_algorithm=LayoutXLM --ser_model_dir=../output/ser/infer --ser_dict_path=../train_data/XFUND/class_list_xfun.txt --vis_font_path=../doc/fonts/simfang.ttf --image_dir=docs/vqa/input/zh_val_42.jpg --output=output

-```

-After the prediction is successful, the visualization images and results will be saved in the directory specified by the `output` field

-

-

-### 5.3 RE

-

-* start training

-

-Before starting training, you need to modify the following four fields

-

-1. `Train.dataset.data_dir`: point to the directory where the training set images are stored

-2. `Train.dataset.label_file_list`: point to the training set label file

-3. `Eval.dataset.data_dir`: refers to the directory where the validation set images are stored

-4. `Eval.dataset.label_file_list`: point to the validation set label file

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/train.py -c configs/vqa/re/layoutxlm.yml

-````

-

-Finally, `precision`, `recall`, `hmean` and other indicators will be printed.

-In the `./output/re_layoutxlm/` folder will save the training log, the optimal model and the model for the latest epoch.

-

-* resume training

-

-To resume training, assign the folder path of the previously trained model to the `Architecture.Backbone.checkpoints` field.

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/train.py -c configs/vqa/re/layoutxlm.yml -o Architecture.Backbone.checkpoints=path/to/model_dir

-````

-

-* evaluate

-

-Evaluation requires assigning the folder path of the model to be evaluated to the `Architecture.Backbone.checkpoints` field.

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/eval.py -c configs/vqa/re/layoutxlm.yml -o Architecture.Backbone.checkpoints=path/to/model_dir

-````

-Finally, `precision`, `recall`, `hmean` and other indicators will be printed

-

-* Use `OCR engine + SER + RE` tandem prediction

-

-Use the following command to complete the series prediction of `OCR engine + SER + RE`, taking the pretrained SER and RE models as an example:

-```shell

-export CUDA_VISIBLE_DEVICES=0

-python3 tools/infer_vqa_token_ser_re.py -c configs/vqa/re/layoutxlm.yml -o Architecture.Backbone.checkpoints=pretrain/re_LayoutXLM_xfun_zh/Global.infer_img=ppstructure/docs/vqa/input/zh_val_21.jpg -c_ser configs/vqa/ser/layoutxlm. yml -o_ser Architecture.Backbone.checkpoints=pretrain/ser_LayoutXLM_xfun_zh/

-````

-

-Finally, the prediction result visualization image and the prediction result text file will be saved in the directory configured by the `config.Global.save_res_path` field. The prediction result text file is named `infer_results.txt`.

-

-* export model

-

-cooming soon

-

-* `OCR + SER + RE` tandem prediction based on prediction engine

-

-cooming soon

-

-## 6. Reference Links

-

-- LayoutXLM: Multimodal Pre-training for Multilingual Visually-rich Document Understanding, https://arxiv.org/pdf/2104.08836.pdf

-- microsoft/unilm/layoutxlm, https://github.com/microsoft/unilm/tree/master/layoutxlm

-- XFUND dataset, https://github.com/doc-analysis/XFUND

-

-## License

-

-The content of this project itself is licensed under the [Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0)](https://creativecommons.org/licenses/by-nc-sa/4.0/)

diff --git a/ppstructure/vqa/README_ch.md b/ppstructure/vqa/README_ch.md

deleted file mode 100644

index f168110ed9b2e750b3b2ee6f5ab0116daebc3e77..0000000000000000000000000000000000000000

--- a/ppstructure/vqa/README_ch.md

+++ /dev/null

@@ -1,283 +0,0 @@

-[English](README.md) | 简体中文

-

-- [1. 简介](#1-简介)

-- [2. 性能](#2-性能)

-- [3. 效果演示](#3-效果演示)

- - [3.1 SER](#31-ser)

- - [3.2 RE](#32-re)

-- [4. 安装](#4-安装)

- - [4.1 安装依赖](#41-安装依赖)

- - [4.2 安装PaddleOCR(包含 PP-OCR 和 VQA)](#42-安装paddleocr包含-pp-ocr-和-vqa)

-- [5. 使用](#5-使用)

- - [5.1 数据和预训练模型准备](#51-数据和预训练模型准备)

- - [5.2 SER](#52-ser)

- - [5.3 RE](#53-re)

-- [6. 参考链接](#6-参考链接)

-- [License](#license)

-

-# 文档视觉问答(DOC-VQA)

-

-## 1. 简介

-

-VQA指视觉问答,主要针对图像内容进行提问和回答,DOC-VQA是VQA任务中的一种,DOC-VQA主要针对文本图像的文字内容提出问题。

-

-PP-Structure 里的 DOC-VQA算法基于PaddleNLP自然语言处理算法库进行开发。

-

-主要特性如下:

-

-- 集成[LayoutXLM](https://arxiv.org/pdf/2104.08836.pdf)模型以及PP-OCR预测引擎。

-- 支持基于多模态方法的语义实体识别 (Semantic Entity Recognition, SER) 以及关系抽取 (Relation Extraction, RE) 任务。基于 SER 任务,可以完成对图像中的文本识别与分类;基于 RE 任务,可以完成对图象中的文本内容的关系提取,如判断问题对(pair)。

-- 支持SER任务和RE任务的自定义训练。

-- 支持OCR+SER的端到端系统预测与评估。

-- 支持OCR+SER+RE的端到端系统预测。

-

-本项目是 [LayoutXLM: Multimodal Pre-training for Multilingual Visually-rich Document Understanding](https://arxiv.org/pdf/2104.08836.pdf) 在 Paddle 2.2上的开源实现,

-包含了在 [XFUND数据集](https://github.com/doc-analysis/XFUND) 上的微调代码。

-

-## 2. 性能

-

-我们在 [XFUND](https://github.com/doc-analysis/XFUND) 的中文数据集上对算法进行了评估,性能如下

-

-| 模型 | 任务 | hmean | 模型下载地址 |

-|:---:|:---:|:---:| :---:|

-| LayoutXLM | SER | 0.9038 | [链接](https://paddleocr.bj.bcebos.com/pplayout/ser_LayoutXLM_xfun_zh.tar) |

-| LayoutXLM | RE | 0.7483 | [链接](https://paddleocr.bj.bcebos.com/pplayout/re_LayoutXLM_xfun_zh.tar) |

-| LayoutLMv2 | SER | 0.8544 | [链接](https://paddleocr.bj.bcebos.com/pplayout/ser_LayoutLMv2_xfun_zh.tar)

-| LayoutLMv2 | RE | 0.6777 | [链接](https://paddleocr.bj.bcebos.com/pplayout/re_LayoutLMv2_xfun_zh.tar) |

-| LayoutLM | SER | 0.7731 | [链接](https://paddleocr.bj.bcebos.com/pplayout/ser_LayoutLM_xfun_zh.tar) |

-

-## 3. 效果演示

-

-**注意:** 测试图片来源于XFUND数据集。

-

-### 3.1 SER

-

- |

----|---

-

-图中不同颜色的框表示不同的类别,对于XFUND数据集,有`QUESTION`, `ANSWER`, `HEADER` 3种类别

-

-* 深紫色:HEADER

-* 浅紫色:QUESTION

-* 军绿色:ANSWER

-

-在OCR检测框的左上方也标出了对应的类别和OCR识别结果。

-

-### 3.2 RE

-

- |

----|---

-

-

-图中红色框表示问题,蓝色框表示答案,问题和答案之间使用绿色线连接。在OCR检测框的左上方也标出了对应的类别和OCR识别结果。

-

-## 4. 安装

-

-### 4.1 安装依赖

-

-- **(1) 安装PaddlePaddle**

-

-```bash

-python3 -m pip install --upgrade pip

-

-# GPU安装

-python3 -m pip install "paddlepaddle-gpu>=2.2" -i https://mirror.baidu.com/pypi/simple

-

-# CPU安装

-python3 -m pip install "paddlepaddle>=2.2" -i https://mirror.baidu.com/pypi/simple

-

-```

-更多需求,请参照[安装文档](https://www.paddlepaddle.org.cn/install/quick)中的说明进行操作。

-

-### 4.2 安装PaddleOCR(包含 PP-OCR 和 VQA)

-

-- **(1)pip快速安装PaddleOCR whl包(仅预测)**

-

-```bash

-python3 -m pip install paddleocr

-```

-

-- **(2)下载VQA源码(预测+训练)**

-

-```bash

-【推荐】git clone https://github.com/PaddlePaddle/PaddleOCR

-

-# 如果因为网络问题无法pull成功,也可选择使用码云上的托管:

-git clone https://gitee.com/paddlepaddle/PaddleOCR

-

-# 注:码云托管代码可能无法实时同步本github项目更新,存在3~5天延时,请优先使用推荐方式。

-```

-

-- **(3)安装VQA的`requirements`**

-

-```bash

-python3 -m pip install -r ppstructure/vqa/requirements.txt

-```

-

-## 5. 使用

-

-### 5.1 数据和预训练模型准备

-

-如果希望直接体验预测过程,可以下载我们提供的预训练模型,跳过训练过程,直接预测即可。

-

-* 下载处理好的数据集

-

-处理好的XFUND中文数据集下载地址:[链接](https://paddleocr.bj.bcebos.com/ppstructure/dataset/XFUND.tar)。

-

-

-下载并解压该数据集,解压后将数据集放置在当前目录下。

-

-```shell

-wget https://paddleocr.bj.bcebos.com/ppstructure/dataset/XFUND.tar

-```

-

-* 转换数据集

-

-若需进行其他XFUND数据集的训练,可使用下面的命令进行数据集的转换

-

-```bash

-python3 ppstructure/vqa/tools/trans_xfun_data.py --ori_gt_path=path/to/json_path --output_path=path/to/save_path

-```

-

-* 下载预训练模型

-```bash

-mkdir pretrain && cd pretrain

-#下载SER模型

-wget https://paddleocr.bj.bcebos.com/pplayout/ser_LayoutXLM_xfun_zh.tar && tar -xvf ser_LayoutXLM_xfun_zh.tar

-#下载RE模型

-wget https://paddleocr.bj.bcebos.com/pplayout/re_LayoutXLM_xfun_zh.tar && tar -xvf re_LayoutXLM_xfun_zh.tar

-cd ../

-```

-

-### 5.2 SER

-

-启动训练之前,需要修改下面的四个字段

-

-1. `Train.dataset.data_dir`:指向训练集图片存放目录

-2. `Train.dataset.label_file_list`:指向训练集标注文件

-3. `Eval.dataset.data_dir`:指指向验证集图片存放目录

-4. `Eval.dataset.label_file_list`:指向验证集标注文件

-

-* 启动训练

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/train.py -c configs/vqa/ser/layoutxlm.yml

-```

-

-最终会打印出`precision`, `recall`, `hmean`等指标。

-在`./output/ser_layoutxlm/`文件夹中会保存训练日志,最优的模型和最新epoch的模型。

-

-* 恢复训练

-

-恢复训练需要将之前训练好的模型所在文件夹路径赋值给 `Architecture.Backbone.checkpoints` 字段。

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/train.py -c configs/vqa/ser/layoutxlm.yml -o Architecture.Backbone.checkpoints=path/to/model_dir

-```

-

-* 评估

-

-评估需要将待评估的模型所在文件夹路径赋值给 `Architecture.Backbone.checkpoints` 字段。

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/eval.py -c configs/vqa/ser/layoutxlm.yml -o Architecture.Backbone.checkpoints=path/to/model_dir

-```

-最终会打印出`precision`, `recall`, `hmean`等指标

-

-* 基于训练引擎的`OCR + SER`串联预测

-

-使用如下命令即可完成基于训练引擎的`OCR + SER`的串联预测, 以基于LayoutXLM的SER模型为例:

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/infer_vqa_token_ser.py -c configs/vqa/ser/layoutxlm.yml -o Architecture.Backbone.checkpoints=pretrain/ser_LayoutXLM_xfun_zh/ Global.infer_img=doc/vqa/input/zh_val_42.jpg

-```

-

-最终会在`config.Global.save_res_path`字段所配置的目录下保存预测结果可视化图像以及预测结果文本文件,预测结果文本文件名为`infer_results.txt`。

-

-* 对`OCR + SER`预测系统进行端到端评估

-

-首先使用 `tools/infer_vqa_token_ser.py` 脚本完成数据集的预测,然后使用下面的命令进行评估。

-

-```shell

-export CUDA_VISIBLE_DEVICES=0

-python3 tools/eval_with_label_end2end.py --gt_json_path XFUND/zh_val/xfun_normalize_val.json --pred_json_path output_res/infer_results.txt

-```

-* 模型导出

-

-使用如下命令即可完成SER模型的模型导出, 以基于LayoutXLM的SER模型为例:

-

-```shell

-python3.7 tools/export_model.py -c configs/vqa/ser/layoutxlm.yml -o Architecture.Backbone.checkpoints=pretrain/ser_LayoutXLM_xfun_zh/ Global.save_inference_dir=output/ser/infer

-```

-转换后的模型会存放在`Global.save_inference_dir`字段指定的目录下。

-

-* 基于预测引擎的`OCR + SER`串联预测

-

-使用如下命令即可完成基于预测引擎的`OCR + SER`的串联预测, 以基于LayoutXLM的SER模型为例:

-

-```shell

-cd ppstructure

-CUDA_VISIBLE_DEVICES=0 python3.7 vqa/predict_vqa_token_ser.py --vqa_algorithm=LayoutXLM --ser_model_dir=../output/ser/infer --ser_dict_path=../train_data/XFUND/class_list_xfun.txt --vis_font_path=../doc/fonts/simfang.ttf --image_dir=docs/vqa/input/zh_val_42.jpg --output=output

-```

-预测成功后,可视化图片和结果会保存在`output`字段指定的目录下

-

-### 5.3 RE

-

-* 启动训练

-

-启动训练之前,需要修改下面的四个字段

-

-1. `Train.dataset.data_dir`:指向训练集图片存放目录

-2. `Train.dataset.label_file_list`:指向训练集标注文件

-3. `Eval.dataset.data_dir`:指指向验证集图片存放目录

-4. `Eval.dataset.label_file_list`:指向验证集标注文件

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/train.py -c configs/vqa/re/layoutxlm.yml

-```

-

-最终会打印出`precision`, `recall`, `hmean`等指标。

-在`./output/re_layoutxlm/`文件夹中会保存训练日志,最优的模型和最新epoch的模型。

-

-* 恢复训练

-

-恢复训练需要将之前训练好的模型所在文件夹路径赋值给 `Architecture.Backbone.checkpoints` 字段。

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/train.py -c configs/vqa/re/layoutxlm.yml -o Architecture.Backbone.checkpoints=path/to/model_dir

-```

-

-* 评估

-

-评估需要将待评估的模型所在文件夹路径赋值给 `Architecture.Backbone.checkpoints` 字段。

-

-```shell

-CUDA_VISIBLE_DEVICES=0 python3 tools/eval.py -c configs/vqa/re/layoutxlm.yml -o Architecture.Backbone.checkpoints=path/to/model_dir

-```

-最终会打印出`precision`, `recall`, `hmean`等指标

-

-* 基于训练引擎的`OCR + SER + RE`串联预测

-

-使用如下命令即可完成基于训练引擎的`OCR + SER + RE`串联预测, 以基于LayoutXLMSER和RE模型为例:

-```shell

-export CUDA_VISIBLE_DEVICES=0

-python3 tools/infer_vqa_token_ser_re.py -c configs/vqa/re/layoutxlm.yml -o Architecture.Backbone.checkpoints=pretrain/re_LayoutXLM_xfun_zh/ Global.infer_img=ppstructure/docs/vqa/input/zh_val_21.jpg -c_ser configs/vqa/ser/layoutxlm.yml -o_ser Architecture.Backbone.checkpoints=pretrain/ser_LayoutXLM_xfun_zh/

-```

-

-最终会在`config.Global.save_res_path`字段所配置的目录下保存预测结果可视化图像以及预测结果文本文件,预测结果文本文件名为`infer_results.txt`。

-

-* 模型导出

-

-cooming soon

-

-* 基于预测引擎的`OCR + SER + RE`串联预测

-

-cooming soon

-

-## 6. 参考链接

-

-- LayoutXLM: Multimodal Pre-training for Multilingual Visually-rich Document Understanding, https://arxiv.org/pdf/2104.08836.pdf

-- microsoft/unilm/layoutxlm, https://github.com/microsoft/unilm/tree/master/layoutxlm

-- XFUND dataset, https://github.com/doc-analysis/XFUND

-

-## License

-

-The content of this project itself is licensed under the [Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0)](https://creativecommons.org/licenses/by-nc-sa/4.0/)

diff --git a/ppstructure/vqa/requirements.txt b/ppstructure/vqa/requirements.txt

deleted file mode 100644

index fcd882274c4402ba2a1d34f20ee6e2befa157121..0000000000000000000000000000000000000000

--- a/ppstructure/vqa/requirements.txt

+++ /dev/null

@@ -1,7 +0,0 @@

-sentencepiece

-yacs

-seqeval

-paddlenlp>=2.2.1

-pypandoc

-attrdict

-python_docx

\ No newline at end of file

diff --git a/requirements.txt b/requirements.txt

index b15176db3eb42c381c1612f404fd15c6b020b3dc..976d29192abbbf89b8ee6064c0b4ec48d43ad268 100644

--- a/requirements.txt

+++ b/requirements.txt

@@ -6,7 +6,7 @@ lmdb

tqdm

numpy

visualdl

-python-Levenshtein

+rapidfuzz

opencv-contrib-python==4.4.0.46

cython

lxml

diff --git a/test_tipc/configs/layoutxlm_ser/train_infer_python.txt b/test_tipc/configs/layoutxlm_ser/train_infer_python.txt

index 5284ffabe2de4eb8bb000e7fb745ef2846ed6b64..549a31e69e367237ec0396778162a5f91c8b7412 100644

--- a/test_tipc/configs/layoutxlm_ser/train_infer_python.txt

+++ b/test_tipc/configs/layoutxlm_ser/train_infer_python.txt

@@ -9,7 +9,7 @@ Global.save_model_dir:./output/

Train.loader.batch_size_per_card:lite_train_lite_infer=4|whole_train_whole_infer=8

Architecture.Backbone.checkpoints:null

train_model_name:latest

-train_infer_img_dir:ppstructure/docs/vqa/input/zh_val_42.jpg

+train_infer_img_dir:ppstructure/docs/kie/input/zh_val_42.jpg

null:null

##

trainer:norm_train

@@ -37,7 +37,7 @@ export2:null

infer_model:null

infer_export:null

infer_quant:False

-inference:ppstructure/vqa/predict_vqa_token_ser.py --vqa_algorithm=LayoutXLM --ser_dict_path=train_data/XFUND/class_list_xfun.txt --output=output

+inference:ppstructure/kie/predict_kie_token_ser.py --kie_algorithm=LayoutXLM --ser_dict_path=train_data/XFUND/class_list_xfun.txt --output=output

--use_gpu:True|False

--enable_mkldnn:False

--cpu_threads:6

@@ -45,7 +45,7 @@ inference:ppstructure/vqa/predict_vqa_token_ser.py --vqa_algorithm=LayoutXLM -

--use_tensorrt:False

--precision:fp32

--ser_model_dir:

---image_dir:./ppstructure/docs/vqa/input/zh_val_42.jpg

+--image_dir:./ppstructure/docs/kie/input/zh_val_42.jpg

null:null

--benchmark:False

null:null

diff --git a/test_tipc/configs/vi_layoutxlm_ser/train_infer_python.txt b/test_tipc/configs/vi_layoutxlm_ser/train_infer_python.txt

index 59d347461171487c186c052e290f6b13236aa5c9..adad78bb76e34635a632ef7c1b55e212bc4b636a 100644

--- a/test_tipc/configs/vi_layoutxlm_ser/train_infer_python.txt

+++ b/test_tipc/configs/vi_layoutxlm_ser/train_infer_python.txt

@@ -9,7 +9,7 @@ Global.save_model_dir:./output/

Train.loader.batch_size_per_card:lite_train_lite_infer=4|whole_train_whole_infer=8

Architecture.Backbone.checkpoints:null

train_model_name:latest

-train_infer_img_dir:ppstructure/docs/vqa/input/zh_val_42.jpg

+train_infer_img_dir:ppstructure/docs/kie/input/zh_val_42.jpg

null:null

##

trainer:norm_train

@@ -37,7 +37,7 @@ export2:null

infer_model:null

infer_export:null

infer_quant:False

-inference:ppstructure/vqa/predict_vqa_token_ser.py --vqa_algorithm=LayoutXLM --ser_dict_path=train_data/XFUND/class_list_xfun.txt --output=output --ocr_order_method=tb-yx

+inference:ppstructure/kie/predict_kie_token_ser.py --kie_algorithm=LayoutXLM --ser_dict_path=train_data/XFUND/class_list_xfun.txt --output=output --ocr_order_method=tb-yx

--use_gpu:True|False

--enable_mkldnn:False

--cpu_threads:6

@@ -45,7 +45,7 @@ inference:ppstructure/vqa/predict_vqa_token_ser.py --vqa_algorithm=LayoutXLM -

--use_tensorrt:False

--precision:fp32

--ser_model_dir:

---image_dir:./ppstructure/docs/vqa/input/zh_val_42.jpg

+--image_dir:./ppstructure/docs/kie/input/zh_val_42.jpg

null:null

--benchmark:False

null:null

diff --git a/test_tipc/prepare.sh b/test_tipc/prepare.sh

index 259a1159cb326760384645b2aff313b75da6084a..31bbbe30befe727e9e2a132e6ab4f8515035af79 100644

--- a/test_tipc/prepare.sh

+++ b/test_tipc/prepare.sh

@@ -107,7 +107,7 @@ if [ ${MODE} = "benchmark_train" ];then

cd ../

fi

if [ ${model_name} == "layoutxlm_ser" ] || [ ${model_name} == "vi_layoutxlm_ser" ]; then

- pip install -r ppstructure/vqa/requirements.txt

+ pip install -r ppstructure/kie/requirements.txt

pip install paddlenlp\>=2.3.5 --force-reinstall -i https://mirrors.aliyun.com/pypi/simple/

wget -nc -P ./train_data/ https://paddleocr.bj.bcebos.com/ppstructure/dataset/XFUND.tar --no-check-certificate

cd ./train_data/ && tar xf XFUND.tar

@@ -221,7 +221,7 @@ if [ ${MODE} = "lite_train_lite_infer" ];then

cd ./pretrain_models/ && tar xf rec_r32_gaspin_bilstm_att_train.tar && cd ../

fi

if [ ${model_name} == "layoutxlm_ser" ] || [ ${model_name} == "vi_layoutxlm_ser" ]; then

- pip install -r ppstructure/vqa/requirements.txt

+ pip install -r ppstructure/kie/requirements.txt

pip install paddlenlp\>=2.3.5 --force-reinstall -i https://mirrors.aliyun.com/pypi/simple/

wget -nc -P ./train_data/ https://paddleocr.bj.bcebos.com/ppstructure/dataset/XFUND.tar --no-check-certificate

cd ./train_data/ && tar xf XFUND.tar

diff --git a/tools/infer/predict_cls.py b/tools/infer/predict_cls.py

index ed2f47c04de6f4ab6a874db052e953a1ce4e0b76..d2b7108ca35666acfa53e785686fd7b9dfc21ed5 100755

--- a/tools/infer/predict_cls.py

+++ b/tools/infer/predict_cls.py

@@ -30,7 +30,7 @@ import traceback

import tools.infer.utility as utility

from ppocr.postprocess import build_post_process

from ppocr.utils.logging import get_logger

-from ppocr.utils.utility import get_image_file_list, check_and_read_gif

+from ppocr.utils.utility import get_image_file_list, check_and_read

logger = get_logger()

@@ -128,7 +128,7 @@ def main(args):

valid_image_file_list = []

img_list = []

for image_file in image_file_list:

- img, flag = check_and_read_gif(image_file)

+ img, flag, _ = check_and_read(image_file)

if not flag:

img = cv2.imread(image_file)

if img is None:

diff --git a/tools/infer/predict_det.py b/tools/infer/predict_det.py

index 394a48948b1f284bd405532769b76eeb298668bd..9f5c480d3c55367a02eacb48bed6ae3d38282f05 100755

--- a/tools/infer/predict_det.py

+++ b/tools/infer/predict_det.py

@@ -27,7 +27,7 @@ import sys

import tools.infer.utility as utility

from ppocr.utils.logging import get_logger

-from ppocr.utils.utility import get_image_file_list, check_and_read_gif

+from ppocr.utils.utility import get_image_file_list, check_and_read

from ppocr.data import create_operators, transform

from ppocr.postprocess import build_post_process

import json

@@ -289,7 +289,7 @@ if __name__ == "__main__":

os.makedirs(draw_img_save)

save_results = []

for image_file in image_file_list:

- img, flag = check_and_read_gif(image_file)

+ img, flag, _ = check_and_read(image_file)

if not flag:

img = cv2.imread(image_file)

if img is None:

diff --git a/tools/infer/predict_e2e.py b/tools/infer/predict_e2e.py

index fb2859f0c7e0d3aa0b87dbe11123dfc88f4b4e8e..de315d701c7172ded4d30e48e79abee367f42239 100755

--- a/tools/infer/predict_e2e.py

+++ b/tools/infer/predict_e2e.py

@@ -27,7 +27,7 @@ import sys

import tools.infer.utility as utility

from ppocr.utils.logging import get_logger

-from ppocr.utils.utility import get_image_file_list, check_and_read_gif

+from ppocr.utils.utility import get_image_file_list, check_and_read

from ppocr.data import create_operators, transform

from ppocr.postprocess import build_post_process

@@ -148,7 +148,7 @@ if __name__ == "__main__":

if not os.path.exists(draw_img_save):

os.makedirs(draw_img_save)

for image_file in image_file_list:

- img, flag = check_and_read_gif(image_file)

+ img, flag, _ = check_and_read(image_file)

if not flag:

img = cv2.imread(image_file)

if img is None:

diff --git a/tools/infer/predict_rec.py b/tools/infer/predict_rec.py

index 53dab6f26d8b84a224360f2fa6fe5f411eea751f..7c46e17bacdf1fff464322d284e4549bd8edacf2 100755

--- a/tools/infer/predict_rec.py

+++ b/tools/infer/predict_rec.py

@@ -30,7 +30,7 @@ import paddle

import tools.infer.utility as utility

from ppocr.postprocess import build_post_process

from ppocr.utils.logging import get_logger

-from ppocr.utils.utility import get_image_file_list, check_and_read_gif

+from ppocr.utils.utility import get_image_file_list, check_and_read

logger = get_logger()

@@ -68,7 +68,7 @@ class TextRecognizer(object):

'name': 'SARLabelDecode',

"character_dict_path": args.rec_char_dict_path,

"use_space_char": args.use_space_char

- }

+ }

elif self.rec_algorithm == "VisionLAN":

postprocess_params = {

'name': 'VLLabelDecode',

@@ -399,7 +399,9 @@ class TextRecognizer(object):

norm_img_batch.append(norm_img)

elif self.rec_algorithm == "RobustScanner":

norm_img, _, _, valid_ratio = self.resize_norm_img_sar(

- img_list[indices[ino]], self.rec_image_shape, width_downsample_ratio=0.25)

+ img_list[indices[ino]],

+ self.rec_image_shape,

+ width_downsample_ratio=0.25)

norm_img = norm_img[np.newaxis, :]

valid_ratio = np.expand_dims(valid_ratio, axis=0)

valid_ratios = []

@@ -484,12 +486,8 @@ class TextRecognizer(object):

elif self.rec_algorithm == "RobustScanner":

valid_ratios = np.concatenate(valid_ratios)

word_positions_list = np.concatenate(word_positions_list)

- inputs = [

- norm_img_batch,

- valid_ratios,

- word_positions_list

- ]

-

+ inputs = [norm_img_batch, valid_ratios, word_positions_list]

+

if self.use_onnx:

input_dict = {}

input_dict[self.input_tensor.name] = norm_img_batch

@@ -555,7 +553,7 @@ def main(args):

res = text_recognizer([img] * int(args.rec_batch_num))

for image_file in image_file_list:

- img, flag = check_and_read_gif(image_file)

+ img, flag, _ = check_and_read(image_file)

if not flag:

img = cv2.imread(image_file)

if img is None:

diff --git a/tools/infer/predict_sr.py b/tools/infer/predict_sr.py

index b10d90bf1d6ce3de6d2947e9cc1f73443736518d..ca99f6819f4b207ecc0f0d1383fe1d26d07fbf50 100755

--- a/tools/infer/predict_sr.py

+++ b/tools/infer/predict_sr.py

@@ -30,7 +30,7 @@ import paddle

import tools.infer.utility as utility

from ppocr.postprocess import build_post_process

from ppocr.utils.logging import get_logger

-from ppocr.utils.utility import get_image_file_list, check_and_read_gif

+from ppocr.utils.utility import get_image_file_list, check_and_read

logger = get_logger()

@@ -120,7 +120,7 @@ def main(args):

res = text_recognizer([img] * int(args.sr_batch_num))

for image_file in image_file_list:

- img, flag = check_and_read_gif(image_file)

+ img, flag, _ = check_and_read(image_file)

if not flag:

img = Image.open(image_file).convert("RGB")

if img is None:

diff --git a/tools/infer/predict_system.py b/tools/infer/predict_system.py

index 73b7155baa9f869da928b5be03692c08115489ee..e0f2c41fa2aba23491efee920afbd76db1ec84e0 100755

--- a/tools/infer/predict_system.py

+++ b/tools/infer/predict_system.py

@@ -32,7 +32,7 @@ import tools.infer.utility as utility

import tools.infer.predict_rec as predict_rec

import tools.infer.predict_det as predict_det

import tools.infer.predict_cls as predict_cls

-from ppocr.utils.utility import get_image_file_list, check_and_read_gif

+from ppocr.utils.utility import get_image_file_list, check_and_read

from ppocr.utils.logging import get_logger

from tools.infer.utility import draw_ocr_box_txt, get_rotate_crop_image

logger = get_logger()

@@ -120,11 +120,14 @@ def sorted_boxes(dt_boxes):

_boxes = list(sorted_boxes)

for i in range(num_boxes - 1):

- if abs(_boxes[i + 1][0][1] - _boxes[i][0][1]) < 10 and \

- (_boxes[i + 1][0][0] < _boxes[i][0][0]):

- tmp = _boxes[i]

- _boxes[i] = _boxes[i + 1]

- _boxes[i + 1] = tmp

+ for j in range(i, 0, -1):

+ if abs(_boxes[j + 1][0][1] - _boxes[j][0][1]) < 10 and \

+ (_boxes[j + 1][0][0] < _boxes[j][0][0]):

+ tmp = _boxes[j]

+ _boxes[j] = _boxes[j + 1]

+ _boxes[j + 1] = tmp

+ else:

+ break

return _boxes

@@ -156,7 +159,7 @@ def main(args):

count = 0

for idx, image_file in enumerate(image_file_list):

- img, flag = check_and_read_gif(image_file)

+ img, flag, _ = check_and_read(image_file)

if not flag:

img = cv2.imread(image_file)

if img is None:

diff --git a/tools/infer/utility.py b/tools/infer/utility.py

index 1eebc73f31e6b48a473c20d907ca401ad919fe0b..8d3e93992d9d8cbd19fdd2c071565c940d011883 100644

--- a/tools/infer/utility.py

+++ b/tools/infer/utility.py

@@ -181,14 +181,21 @@ def create_predictor(args, mode, logger):

return sess, sess.get_inputs()[0], None, None

else:

- model_file_path = model_dir + "/inference.pdmodel"

- params_file_path = model_dir + "/inference.pdiparams"

+ file_names = ['model', 'inference']

+ for file_name in file_names:

+ model_file_path = '{}/{}.pdmodel'.format(model_dir, file_name)

+ params_file_path = '{}/{}.pdiparams'.format(model_dir, file_name)

+ if os.path.exists(model_file_path) and os.path.exists(

+ params_file_path):

+ break

if not os.path.exists(model_file_path):

- raise ValueError("not find model file path {}".format(

- model_file_path))

+ raise ValueError(

+ "not find model.pdmodel or inference.pdmodel in {}".format(

+ model_dir))

if not os.path.exists(params_file_path):

- raise ValueError("not find params file path {}".format(

- params_file_path))

+ raise ValueError(

+ "not find model.pdiparams or inference.pdiparams in {}".format(

+ model_dir))

config = inference.Config(model_file_path, params_file_path)

@@ -218,23 +225,24 @@ def create_predictor(args, mode, logger):

min_subgraph_size, # skip the minmum trt subgraph

use_calib_mode=False)

- # collect shape

- if args.shape_info_filename is not None:

- if not os.path.exists(args.shape_info_filename):

- config.collect_shape_range_info(args.shape_info_filename)

- logger.info(

- f"collect dynamic shape info into : {args.shape_info_filename}"

- )

+ # collect shape

+ if args.shape_info_filename is not None:

+ if not os.path.exists(args.shape_info_filename):

+ config.collect_shape_range_info(

+ args.shape_info_filename)

+ logger.info(

+ f"collect dynamic shape info into : {args.shape_info_filename}"

+ )

+ else:

+ logger.info(

+ f"dynamic shape info file( {args.shape_info_filename} ) already exists, not need to generate again."

+ )

+ config.enable_tuned_tensorrt_dynamic_shape(

+ args.shape_info_filename, True)

else:

logger.info(

- f"dynamic shape info file( {args.shape_info_filename} ) already exists, not need to generate again."

+ f"when using tensorrt, dynamic shape is a suggested option, you can use '--shape_info_filename=shape.txt' for offline dygnamic shape tuning"

)

- config.enable_tuned_tensorrt_dynamic_shape(

- args.shape_info_filename, True)

- else:

- logger.info(

- f"when using tensorrt, dynamic shape is a suggested option, you can use '--shape_info_filename=shape.txt' for offline dygnamic shape tuning"

- )

elif args.use_xpu:

config.enable_xpu(10 * 1024 * 1024)

@@ -542,7 +550,7 @@ def text_visual(texts,

def base64_to_cv2(b64str):

import base64

data = base64.b64decode(b64str.encode('utf8'))

- data = np.fromstring(data, np.uint8)

+ data = np.frombuffer(data, np.uint8)

data = cv2.imdecode(data, cv2.IMREAD_COLOR)

return data

diff --git a/tools/infer_kie.py b/tools/infer_kie.py

index 346e2e0aeeee695ab49577b6b13dcc058150df1a..9375434cc887b08dfa746420a6c73c58c6e04797 100755

--- a/tools/infer_kie.py

+++ b/tools/infer_kie.py

@@ -88,6 +88,29 @@ def draw_kie_result(batch, node, idx_to_cls, count):

cv2.imwrite(save_path, vis_img)

logger.info("The Kie Image saved in {}".format(save_path))

+def write_kie_result(fout, node, data):

+ """

+ Write infer result to output file, sorted by the predict label of each line.

+ The format keeps the same as the input with additional score attribute.

+ """

+ import json

+ label = data['label']

+ annotations = json.loads(label)

+ max_value, max_idx = paddle.max(node, -1), paddle.argmax(node, -1)

+ node_pred_label = max_idx.numpy().tolist()

+ node_pred_score = max_value.numpy().tolist()

+ res = []

+ for i, label in enumerate(node_pred_label):

+ pred_score = '{:.2f}'.format(node_pred_score[i])

+ pred_res = {

+ 'label': label,

+ 'transcription': annotations[i]['transcription'],

+ 'score': pred_score,

+ 'points': annotations[i]['points'],

+ }

+ res.append(pred_res)

+ res.sort(key=lambda x: x['label'])

+ fout.writelines([json.dumps(res, ensure_ascii=False) + '\n'])

def main():

global_config = config['Global']

@@ -114,7 +137,7 @@ def main():

warmup_times = 0

count_t = []

- with open(save_res_path, "wb") as fout:

+ with open(save_res_path, "w") as fout:

with open(config['Global']['infer_img'], "rb") as f:

lines = f.readlines()

for index, data_line in enumerate(lines):

@@ -139,6 +162,8 @@ def main():

node = F.softmax(node, -1)

count_t.append(time.time() - st)

draw_kie_result(batch, node, idx_to_cls, index)

+ write_kie_result(fout, node, data)

+ fout.close()

logger.info("success!")

logger.info("It took {} s for predict {} images.".format(

np.sum(count_t), len(count_t)))

diff --git a/tools/infer_vqa_token_ser.py b/tools/infer_kie_token_ser.py

similarity index 97%

rename from tools/infer_vqa_token_ser.py

rename to tools/infer_kie_token_ser.py

index a15d83b17cc738a5c3349d461c3bce119c2355e7..2fc5749b9c10b9c89bc16e561fbe9c5ce58eb13c 100755

--- a/tools/infer_vqa_token_ser.py

+++ b/tools/infer_kie_token_ser.py

@@ -75,6 +75,8 @@ class SerPredictor(object):

self.ocr_engine = PaddleOCR(

use_angle_cls=False,

show_log=False,

+ rec_model_dir=global_config.get("kie_rec_model_dir", None),

+ det_model_dir=global_config.get("kie_det_model_dir", None),

use_gpu=global_config['use_gpu'])

# create data ops

diff --git a/tools/infer_vqa_token_ser_re.py b/tools/infer_kie_token_ser_re.py

similarity index 97%

rename from tools/infer_vqa_token_ser_re.py

rename to tools/infer_kie_token_ser_re.py

index 51378bdaeb03d4ec6d7684de80625c5029963745..3ee696f28470a16205be628b3aeb586ef7a9c6a6 100755

--- a/tools/infer_vqa_token_ser_re.py

+++ b/tools/infer_kie_token_ser_re.py

@@ -39,7 +39,7 @@ from ppocr.utils.visual import draw_re_results

from ppocr.utils.logging import get_logger

from ppocr.utils.utility import get_image_file_list, load_vqa_bio_label_maps, print_dict

from tools.program import ArgsParser, load_config, merge_config

-from tools.infer_vqa_token_ser import SerPredictor

+from tools.infer_kie_token_ser import SerPredictor

class ReArgsParser(ArgsParser):

@@ -205,9 +205,7 @@ if __name__ == '__main__':

result = ser_re_engine(data)

result = result[0]

fout.write(img_path + "\t" + json.dumps(

- {

- "ser_result": result,