diff --git a/README_ch.md b/README_ch.md

index a6d456a5316cec20c3f34588453d212173f6b2c8..0430fe759f62155ad97d73db06445bbfe551c181 100755

--- a/README_ch.md

+++ b/README_ch.md

@@ -8,9 +8,10 @@ PaddleOCR同时支持动态图与静态图两种编程范式

- 静态图版本:develop分支

**近期更新**

+- 【预告】 PaddleOCR研发团队对最新发版内容技术深入解读,4月13日晚上19:00,[直播地址](https://live.bilibili.com/21689802)

+- 2021.4.8 release 2.1版本,新增AAAI 2021论文[端到端识别算法PGNet](./doc/doc_ch/pgnet.md)开源,[多语言模型](./doc/doc_ch/multi_languages.md)支持种类增加到80+。

- 2021.2.1 [FAQ](./doc/doc_ch/FAQ.md)新增5个高频问题,总数162个,每周一都会更新,欢迎大家持续关注。

-- 2021.1.26,28,29 PaddleOCR官方研发团队带来技术深入解读三日直播课,1月26日、28日、29日晚上19:30,[直播地址](https://live.bilibili.com/21689802)

-- 2021.1.21 更新多语言识别模型,目前支持语种超过27种,[多语言模型下载](./doc/doc_ch/models_list.md),包括中文简体、中文繁体、英文、法文、德文、韩文、日文、意大利文、西班牙文、葡萄牙文、俄罗斯文、阿拉伯文等,后续计划可以参考[多语言研发计划](https://github.com/PaddlePaddle/PaddleOCR/issues/1048)

+- 2021.1.21 更新多语言识别模型,目前支持语种超过27种,包括中文简体、中文繁体、英文、法文、德文、韩文、日文、意大利文、西班牙文、葡萄牙文、俄罗斯文、阿拉伯文等,后续计划可以参考[多语言研发计划](https://github.com/PaddlePaddle/PaddleOCR/issues/1048)

- 2020.12.15 更新数据合成工具[Style-Text](./StyleText/README_ch.md),可以批量合成大量与目标场景类似的图像,在多个场景验证,效果明显提升。

- 2020.11.25 更新半自动标注工具[PPOCRLabel](./PPOCRLabel/README_ch.md),辅助开发者高效完成标注任务,输出格式与PP-OCR训练任务完美衔接。

- 2020.9.22 更新PP-OCR技术文章,https://arxiv.org/abs/2009.09941

@@ -74,11 +75,13 @@ PaddleOCR同时支持动态图与静态图两种编程范式

## 文档教程

- [快速安装](./doc/doc_ch/installation.md)

- [中文OCR模型快速使用](./doc/doc_ch/quickstart.md)

+- [多语言OCR模型快速使用](./doc/doc_ch/multi_languages.md)

- [代码组织结构](./doc/doc_ch/tree.md)

- 算法介绍

- [文本检测](./doc/doc_ch/algorithm_overview.md)

- [文本识别](./doc/doc_ch/algorithm_overview.md)

- [PP-OCR Pipline](#PP-OCR)

+ - [端到端PGNet算法](./doc/doc_ch/pgnet.md)

- 模型训练/评估

- [文本检测](./doc/doc_ch/detection.md)

- [文本识别](./doc/doc_ch/recognition.md)

diff --git a/deploy/android_demo/README.md b/deploy/android_demo/README.md

index 285f7a84387e5f50ac3286cee10b0c4bea0deb31..f7ea1287638168804c80628429599c251e0b4ad4 100644

--- a/deploy/android_demo/README.md

+++ b/deploy/android_demo/README.md

@@ -20,7 +20,7 @@ Demo测试的时候使用的是NDK 20b版本,20版本以上均可以支持编

# FAQ:

-Q1: 更新1.1版本的模型后,demo报错?

+Q1: 更新V2.0版本的模型后,demo报错?

-A1. 如果要更换V1.1 版本的模型,请更新模型的同时,更新预测库文件,建议使用[PaddleLite 2.6.3](https://github.com/PaddlePaddle/Paddle-Lite/releases/tag/v2.6.3)版本的预测库文件,OCR移动端部署参考[教程](../lite/readme.md)。

+A1. 如果要更换V2.0版本的模型,请更新模型的同时,更新预测库文件,建议使用[PaddleLite 2.8.0](https://github.com/PaddlePaddle/Paddle-Lite/releases/tag/v2.8)版本的预测库文件,OCR移动端部署参考[教程](../lite/readme.md)。

diff --git a/doc/doc_ch/multi_languages.md b/doc/doc_ch/multi_languages.md

new file mode 100644

index 0000000000000000000000000000000000000000..a8f7c2b77f64285e0edfbd22c248e84f0bb84d42

--- /dev/null

+++ b/doc/doc_ch/multi_languages.md

@@ -0,0 +1,284 @@

+# 多语言模型

+

+**近期更新**

+

+- 2021.4.9 支持**80种**语言的检测和识别

+- 2021.4.9 支持**轻量高精度**英文模型检测识别

+

+- [1 安装](#安装)

+ - [1.1 paddle 安装](#paddle安装)

+ - [1.2 paddleocr package 安装](#paddleocr_package_安装)

+

+- [2 快速使用](#快速使用)

+ - [2.1 命令行运行](#命令行运行)

+ - [2.1.1 整图预测](#bash_检测+识别)

+ - [2.1.2 识别预测](#bash_识别)

+ - [2.1.3 检测预测](#bash_检测)

+ - [2.2 python 脚本运行](#python_脚本运行)

+ - [2.2.1 整图预测](#python_检测+识别)

+ - [2.2.2 识别预测](#python_识别)

+ - [2.2.3 检测预测](#python_检测)

+- [3 自定义训练](#自定义训练)

+- [4 支持语种及缩写](#语种缩写)

+

+

+## 1 安装

+

+

+### 1.1 paddle 安装

+```

+# cpu

+pip install paddlepaddle

+

+# gpu

+pip instll paddlepaddle-gpu

+```

+

+

+### 1.2 paddleocr package 安装

+

+

+pip 安装

+```

+pip install "paddleocr>=2.0.4" # 推荐使用2.0.4版本

+```

+本地构建并安装

+```

+python3 setup.py bdist_wheel

+pip3 install dist/paddleocr-x.x.x-py3-none-any.whl # x.x.x是paddleocr的版本号

+```

+

+

+## 2 快速使用

+

+

+### 2.1 命令行运行

+

+查看帮助信息

+

+```

+paddleocr -h

+```

+

+* 整图预测(检测+识别)

+

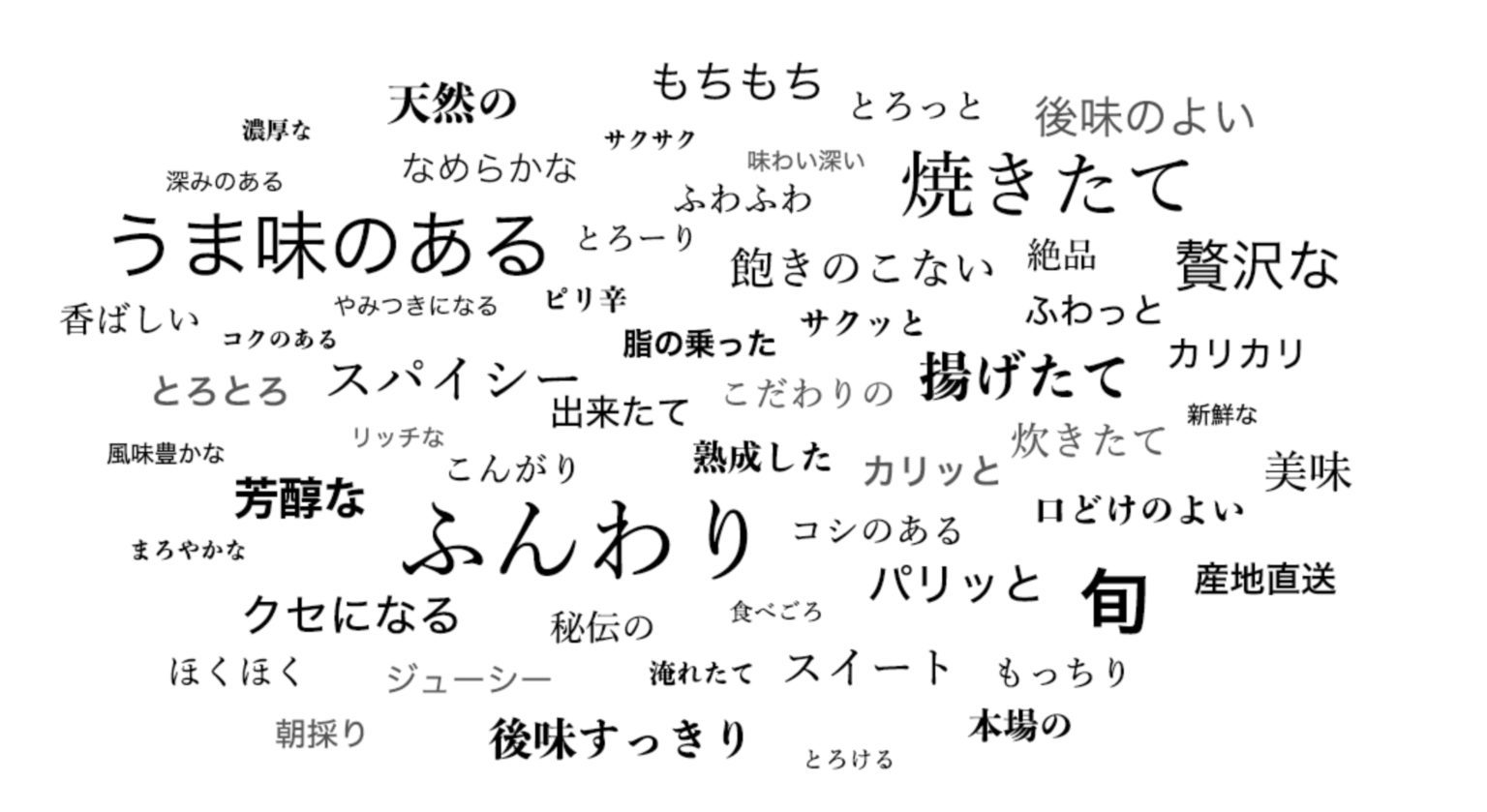

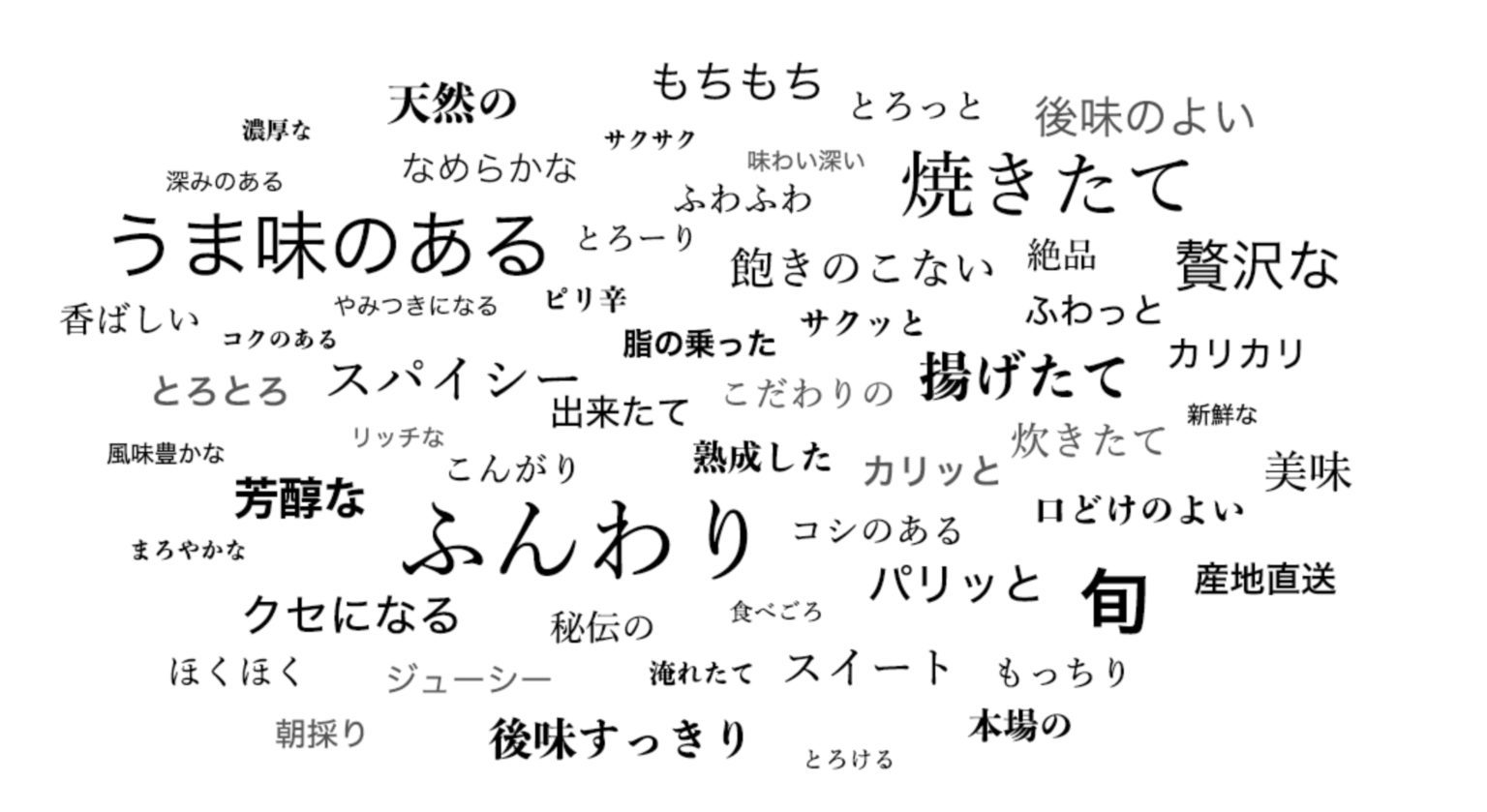

+Paddleocr目前支持80个语种,可以通过修改--lang参数进行切换,具体支持的[语种](#语种缩写)可查看表格。

+

+``` bash

+

+paddleocr --image_dir doc/imgs/japan_2.jpg --lang=japan

+```

+

+

+结果是一个list,每个item包含了文本框,文字和识别置信度

+```text

+[[[671.0, 60.0], [847.0, 63.0], [847.0, 104.0], [671.0, 102.0]], ('もちもち', 0.9993342)]

+[[[394.0, 82.0], [536.0, 77.0], [538.0, 127.0], [396.0, 132.0]], ('天然の', 0.9919842)]

+[[[880.0, 89.0], [1014.0, 93.0], [1013.0, 127.0], [879.0, 124.0]], ('とろっと', 0.9976762)]

+[[[1067.0, 101.0], [1294.0, 101.0], [1294.0, 138.0], [1067.0, 138.0]], ('後味のよい', 0.9988712)]

+......

+```

+

+* 识别预测

+

+```bash

+paddleocr --image_dir doc/imgs_words/japan/1.jpg --det false --lang=japan

+```

+

+

+

+结果是一个tuple,返回识别结果和识别置信度

+

+```text

+('したがって', 0.99965394)

+```

+

+* 检测预测

+

+```

+paddleocr --image_dir PaddleOCR/doc/imgs/11.jpg --rec false

+```

+

+结果是一个list,每个item只包含文本框

+

+```

+[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

+[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

+[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

+......

+```

+

+

+### 2.2 python 脚本运行

+

+ppocr 也支持在python脚本中运行,便于嵌入到您自己的代码中:

+

+* 整图预测(检测+识别)

+

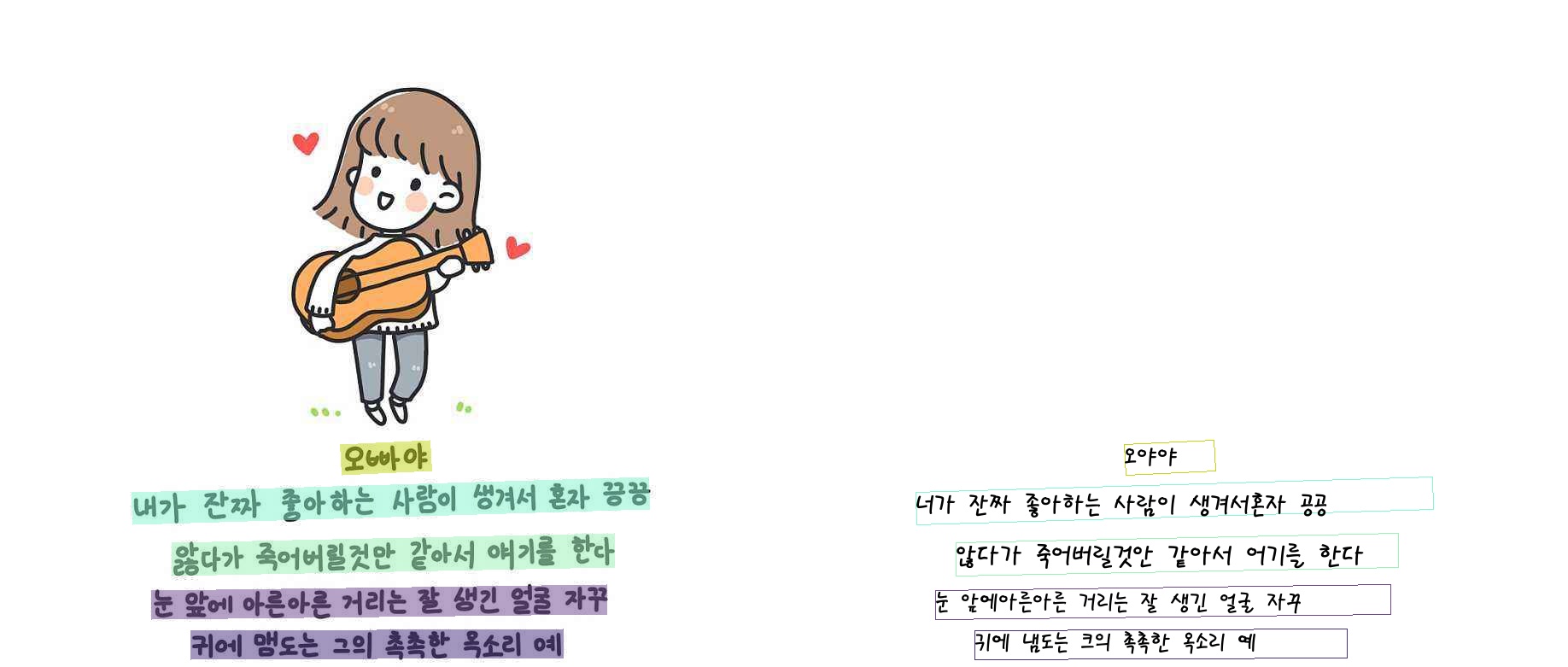

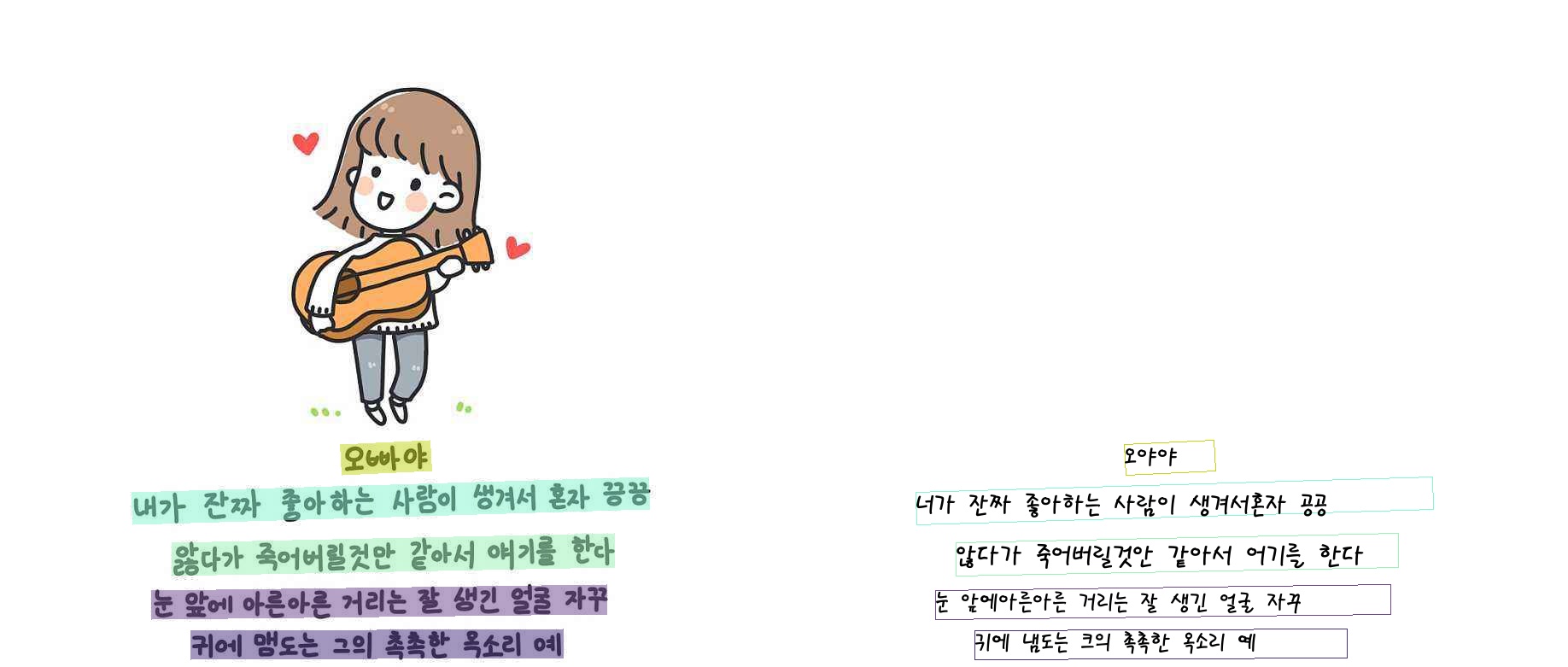

+```

+from paddleocr import PaddleOCR, draw_ocr

+

+# 同样也是通过修改 lang 参数切换语种

+ocr = PaddleOCR(lang="korean") # 首次执行会自动下载模型文件

+img_path = 'doc/imgs/korean_1.jpg '

+result = ocr.ocr(img_path)

+# 打印检测框和识别结果

+for line in result:

+ print(line)

+

+# 可视化

+from PIL import Image

+image = Image.open(img_path).convert('RGB')

+boxes = [line[0] for line in result]

+txts = [line[1][0] for line in result]

+scores = [line[1][1] for line in result]

+im_show = draw_ocr(image, boxes, txts, scores, font_path='/path/to/PaddleOCR/doc/korean.ttf')

+im_show = Image.fromarray(im_show)

+im_show.save('result.jpg')

+```

+

+结果可视化:

+

+

+

+* 识别预测

+

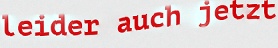

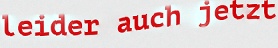

+```

+from paddleocr import PaddleOCR

+ocr = PaddleOCR(lang="german")

+img_path = 'PaddleOCR/doc/imgs_words/german/1.jpg'

+result = ocr.ocr(img_path, det=False, cls=True)

+for line in result:

+ print(line)

+```

+

+

+

+结果是一个tuple,只包含识别结果和识别置信度

+

+```

+('leider auch jetzt', 0.97538936)

+```

+

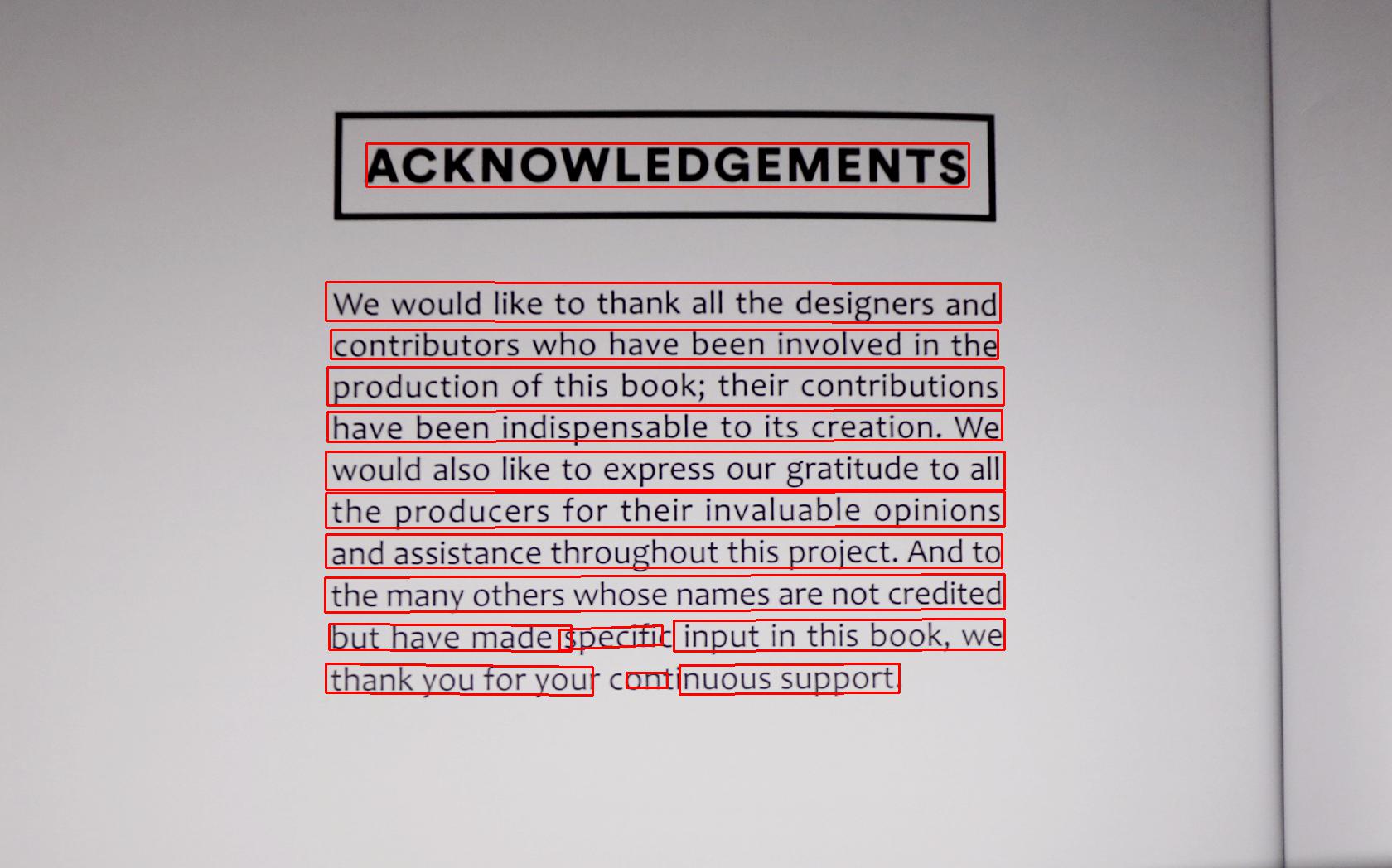

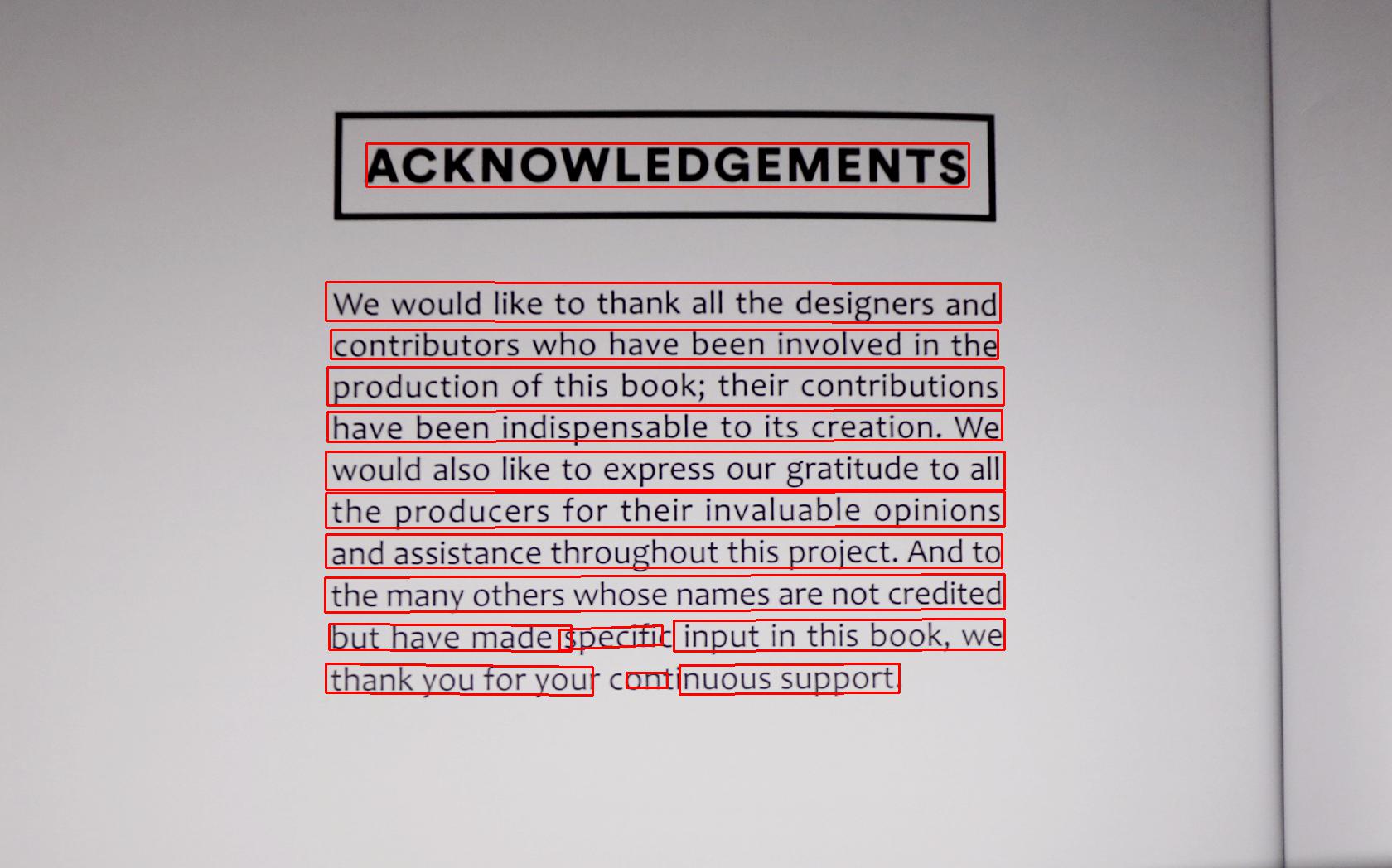

+* 检测预测

+

+```python

+from paddleocr import PaddleOCR, draw_ocr

+ocr = PaddleOCR() # need to run only once to download and load model into memory

+img_path = 'PaddleOCR/doc/imgs_en/img_12.jpg'

+result = ocr.ocr(img_path, rec=False)

+for line in result:

+ print(line)

+

+# 显示结果

+from PIL import Image

+

+image = Image.open(img_path).convert('RGB')

+im_show = draw_ocr(image, result, txts=None, scores=None, font_path='/path/to/PaddleOCR/doc/fonts/simfang.ttf')

+im_show = Image.fromarray(im_show)

+im_show.save('result.jpg')

+```

+结果是一个list,每个item只包含文本框

+```bash

+[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

+[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

+[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

+......

+```

+

+结果可视化 :

+

+

+ppocr 还支持方向分类, 更多使用方式请参考:[whl包使用说明](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.0/doc/doc_ch/whl.md)。

+

+

+## 3 自定义训练

+

+ppocr 支持使用自己的数据进行自定义训练或finetune, 其中识别模型可以参考 [法语配置文件](../../configs/rec/multi_language/rec_french_lite_train.yml)

+修改训练数据路径、字典等参数。

+

+具体数据准备、训练过程可参考:[文本检测](../doc_ch/detection.md)、[文本识别](../doc_ch/recognition.md),更多功能如预测部署、

+数据标注等功能可以阅读完整的[文档教程](../../README_ch.md)。

+

+

+## 4 支持语种及缩写

+

+| 语种 | 描述 | 缩写 |

+| --- | --- | --- |

+|中文|chinese and english|ch|

+|英文|english|en|

+|法文|french|fr|

+|德文|german|german|

+|日文|japan|japan|

+|韩文|korean|korean|

+|中文繁体|chinese traditional |ch_tra|

+|意大利文| Italian |it|

+|西班牙文|Spanish |es|

+|葡萄牙文| Portuguese|pt|

+|俄罗斯文|Russia|ru|

+|阿拉伯文|Arabic|ar|

+|印地文|Hindi|hi|

+|维吾尔|Uyghur|ug|

+|波斯文|Persian|fa|

+|乌尔都文|Urdu|ur|

+|塞尔维亚文(latin)| Serbian(latin) |rs_latin|

+|欧西坦文|Occitan |oc|

+|马拉地文|Marathi|mr|

+|尼泊尔文|Nepali|ne|

+|塞尔维亚文(cyrillic)|Serbian(cyrillic)|rs_cyrillic|

+|保加利亚文|Bulgarian |bg|

+|乌克兰文|Ukranian|uk|

+|白俄罗斯文|Belarusian|be|

+|泰卢固文|Telugu |te|

+|卡纳达文|Kannada |kn|

+|泰米尔文|Tamil |ta|

+|南非荷兰文 |Afrikaans |af|

+|阿塞拜疆文 |Azerbaijani |az|

+|波斯尼亚文|Bosnian|bs|

+|捷克文|Czech|cs|

+|威尔士文 |Welsh |cy|

+|丹麦文 |Danish|da|

+|爱沙尼亚文 |Estonian |et|

+|爱尔兰文 |Irish |ga|

+|克罗地亚文|Croatian |hr|

+|匈牙利文|Hungarian |hu|

+|印尼文|Indonesian|id|

+|冰岛文 |Icelandic|is|

+|库尔德文 |Kurdish|ku|

+|立陶宛文|Lithuanian |lt|

+|拉脱维亚文 |Latvian |lv|

+|毛利文|Maori|mi|

+|马来文 |Malay|ms|

+|马耳他文 |Maltese |mt|

+|荷兰文 |Dutch |nl|

+|挪威文 |Norwegian |no|

+|波兰文|Polish |pl|

+| 罗马尼亚文|Romanian |ro|

+| 斯洛伐克文|Slovak |sk|

+| 斯洛文尼亚文|Slovenian |sl|

+| 阿尔巴尼亚文|Albanian |sq|

+| 瑞典文|Swedish |sv|

+| 西瓦希里文|Swahili |sw|

+| 塔加洛文|Tagalog |tl|

+| 土耳其文|Turkish |tr|

+| 乌兹别克文|Uzbek |uz|

+| 越南文|Vietnamese |vi|

+| 蒙古文|Mongolian |mn|

+| 阿巴扎文|Abaza |abq|

+| 阿迪赫文|Adyghe |ady|

+| 卡巴丹文|Kabardian |kbd|

+| 阿瓦尔文|Avar |ava|

+| 达尔瓦文|Dargwa |dar|

+| 因古什文|Ingush |inh|

+| 拉克文|Lak |lbe|

+| 莱兹甘文|Lezghian |lez|

+|塔巴萨兰文 |Tabassaran |tab|

+| 比尔哈文|Bihari |bh|

+| 迈蒂利文|Maithili |mai|

+| 昂加文|Angika |ang|

+| 孟加拉文|Bhojpuri |bho|

+| 摩揭陀文 |Magahi |mah|

+| 那格浦尔文|Nagpur |sck|

+| 尼瓦尔文|Newari |new|

+| 保加利亚文 |Goan Konkani|gom|

+| 沙特阿拉伯文|Saudi Arabia|sa|

diff --git a/doc/doc_ch/pgnet.md b/doc/doc_ch/pgnet.md

index ff9983274829759feaa0e69f61cbccc8b88c5696..4d3b8208777873dc7c0cdb87346eb950d3e3e2f4 100644

--- a/doc/doc_ch/pgnet.md

+++ b/doc/doc_ch/pgnet.md

@@ -2,12 +2,10 @@

- [一、简介](#简介)

- [二、环境配置](#环境配置)

- [三、快速使用](#快速使用)

-- [四、快速训练](#开始训练)

-- [五、预测推理](#预测推理)

-

+- [四、模型训练、评估、推理](#快速训练)

-##简介

+## 一、简介

OCR算法可以分为两阶段算法和端对端的算法。二阶段OCR算法一般分为两个部分,文本检测和文本识别算法,文件检测算法从图像中得到文本行的检测框,然后识别算法去识别文本框中的内容。而端对端OCR算法可以在一个算法中完成文字检测和文字识别,其基本思想是设计一个同时具有检测单元和识别模块的模型,共享其中两者的CNN特征,并联合训练。由于一个算法即可完成文字识别,端对端模型更小,速度更快。

### PGNet算法介绍

@@ -27,13 +25,11 @@ PGNet算法细节详见[论文](https://www.aaai.org/AAAI21Papers/AAAI-2885.Wang

-##环境配置

+## 二、环境配置

请先参考[快速安装](./installation.md)配置PaddleOCR运行环境。

-*注意:也可以通过 whl 包安装使用PaddleOCR,具体参考[Paddleocr Package使用说明](./whl.md)。*

-

-##快速使用

+## 三、快速使用

### inference模型下载

本节以训练好的端到端模型为例,快速使用模型预测,首先下载训练好的端到端inference模型[下载地址](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/e2e_server_pgnetA_infer.tar)

```

@@ -61,20 +57,25 @@ python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/im

# 如果想使用CPU进行预测,需设置use_gpu参数为False

python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True --use_gpu=False

```

-

-##开始训练

+### 可视化结果

+可视化文本检测结果默认保存到./inference_results文件夹里面,结果文件的名称前缀为'e2e_res'。结果示例如下:

+

+

+

+## 四、模型训练、评估、推理

本节以totaltext数据集为例,介绍PaddleOCR中端到端模型的训练、评估与测试。

-###数据形式为icdar, 十六点标注数据

-解压数据集和下载标注文件后,PaddleOCR/train_data/total_text/train/ 有两个文件夹,分别是:

+

+### 准备数据

+下载解压[totaltext](https://github.com/cs-chan/Total-Text-Dataset/blob/master/Dataset/README.md)数据集到PaddleOCR/train_data/目录,数据集组织结构:

```

/PaddleOCR/train_data/total_text/train/

- |- rgb/ total_text数据集的训练数据

+ |- rgb/ # total_text数据集的训练数据

|- gt_0.png

| ...

- |- total_text.txt total_text数据集的训练标注

+ |- total_text.txt # total_text数据集的训练标注

```

-提供的标注文件格式如下,中间用"\t"分隔:

+total_text.txt标注文件格式如下,文件名和标注信息中间用"\t"分隔:

```

" 图像文件名 json.dumps编码的图像标注信息"

rgb/gt_0.png [{"transcription": "EST", "points": [[1004.0,689.0],[1019.0,698.0],[1034.0,708.0],[1049.0,718.0],[1064.0,728.0],[1079.0,738.0],[1095.0,748.0],[1094.0,774.0],[1079.0,765.0],[1065.0,756.0],[1050.0,747.0],[1036.0,738.0],[1021.0,729.0],[1007.0,721.0]]}, {...}]

@@ -83,22 +84,19 @@ json.dumps编码前的图像标注信息是包含多个字典的list,字典中

`transcription` 表示当前文本框的文字,**当其内容为“###”时,表示该文本框无效,在训练时会跳过。**

如果您想在其他数据集上训练,可以按照上述形式构建标注文件。

-### 快速启动训练

+### 启动训练

-模型训练一般分两步骤进行,第一步可以选择用合成数据训练,第二步加载第一步训练好的模型训练,这边我们提供了第一步训练好的模型,可以直接加载,从第二步开始训练

-[下载地址](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/train_step1.tar)

+PGNet训练分为两个步骤:step1: 在合成数据上训练,得到预训练模型,此时模型精度依然较低;step2: 加载预训练模型,在totaltext数据集上训练;为快速训练,我们直接提供了step1的预训练模型。

```shell

cd PaddleOCR/

-下载ResNet50_vd的动态图预训练模型

+下载step1 预训练模型

wget -P ./pretrain_models/ https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/train_step1.tar

可以得到以下的文件格式

./pretrain_models/train_step1/

└─ best_accuracy.pdopt

└─ best_accuracy.states

└─ best_accuracy.pdparams

-

```

-

*如果您安装的是cpu版本,请将配置文件中的 `use_gpu` 字段修改为false*

```shell

@@ -117,7 +115,6 @@ python3 tools/train.py -c configs/e2e/e2e_r50_vd_pg.yml -o Optimizer.base_lr=0.0

```

#### 断点训练

-

如果训练程序中断,如果希望加载训练中断的模型从而恢复训练,可以通过指定Global.checkpoints指定要加载的模型路径:

```shell

python3 tools/train.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.checkpoints=./your/trained/model

@@ -125,9 +122,6 @@ python3 tools/train.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.checkpoints=./

**注意**:`Global.checkpoints`的优先级高于`Global.pretrain_weights`的优先级,即同时指定两个参数时,优先加载`Global.checkpoints`指定的模型,如果`Global.checkpoints`指定的模型路径有误,会加载`Global.pretrain_weights`指定的模型。

-

-## 预测推理

-

PaddleOCR计算三个OCR端到端相关的指标,分别是:Precision、Recall、Hmean。

运行如下代码,根据配置文件`e2e_r50_vd_pg.yml`中`save_res_path`指定的测试集检测结果文件,计算评估指标。

@@ -138,7 +132,7 @@ PaddleOCR计算三个OCR端到端相关的指标,分别是:Precision、Recal

python3 tools/eval.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.checkpoints="{path/to/weights}/best_accuracy"

```

-### 测试端到端效果

+### 模型预测

测试单张图像的端到端识别效果

```shell

python3 tools/infer_e2e.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.infer_img="./doc/imgs_en/img_10.jpg" Global.pretrained_model="./output/det_db/best_accuracy" Global.load_static_weights=false

@@ -149,8 +143,8 @@ python3 tools/infer_e2e.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.infer_img=

python3 tools/infer_e2e.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.infer_img="./doc/imgs_en/" Global.pretrained_model="./output/det_db/best_accuracy" Global.load_static_weights=false

```

-###转为推理模型

-### (1). 四边形文本检测模型(ICDAR2015)

+### 预测推理

+#### (1).四边形文本检测模型(ICDAR2015)

首先将PGNet端到端训练过程中保存的模型,转换成inference model。以基于Resnet50_vd骨干网络,以英文数据集训练的模型为例[模型下载地址](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/en_server_pgnetA.tar) ,可以使用如下命令进行转换:

```

wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/en_server_pgnetA.tar && tar xf en_server_pgnetA.tar

@@ -164,7 +158,7 @@ python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/im

-### (2). 弯曲文本检测模型(Total-Text)

+#### (2).弯曲文本检测模型(Total-Text)

对于弯曲文本样例

**PGNet端到端模型推理,需要设置参数`--e2e_algorithm="PGNet"`,同时,还需要增加参数`--e2e_pgnet_polygon=True`,**可以执行如下命令:

diff --git a/doc/doc_en/multi_languages_en.md b/doc/doc_en/multi_languages_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..d1c4583f66f611fbef7191d52d32e02187853c9b

--- /dev/null

+++ b/doc/doc_en/multi_languages_en.md

@@ -0,0 +1,285 @@

+# Multi-language model

+

+**Recent Update**

+

+-2021.4.9 supports the detection and recognition of 80 languages

+-2021.4.9 supports **lightweight high-precision** English model detection and recognition

+

+-[1 Installation](#Install)

+ -[1.1 paddle installation](#paddleinstallation)

+ -[1.2 paddleocr package installation](#paddleocr_package_install)

+

+-[2 Quick Use](#Quick_Use)

+ -[2.1 Command line operation](#Command_line_operation)

+ -[2.1.1 Prediction of the whole image](#bash_detection+recognition)

+ -[2.1.2 Recognition](#bash_Recognition)

+ -[2.1.3 Detection](#bash_detection)

+ -[2.2 python script running](#python_Script_running)

+ -[2.2.1 Whole image prediction](#python_detection+recognition)

+ -[2.2.2 Recognition](#python_Recognition)

+ -[2.2.3 Detection](#python_detection)

+-[3 Custom Training](#Custom_Training)

+-[4 Supported languages and abbreviations](#language_abbreviations)

+

+

+## 1 Installation

+

+

+### 1.1 paddle installation

+```

+# cpu

+pip install paddlepaddle

+

+# gpu

+pip instll paddlepaddle-gpu

+```

+

+

+### 1.2 paddleocr package installation

+

+

+pip install

+```

+pip install "paddleocr>=2.0.4" # 2.0.4 version is recommended

+```

+Build and install locally

+```

+python3 setup.py bdist_wheel

+pip3 install dist/paddleocr-x.x.x-py3-none-any.whl # x.x.x is the version number of paddleocr

+```

+

+

+## 2 Quick use

+

+

+### 2.1 Command line operation

+

+View help information

+

+```

+paddleocr -h

+```

+

+* Whole image prediction (detection + recognition)

+

+Paddleocr currently supports 80 languages, which can be switched by modifying the --lang parameter.

+The specific supported [language] (#language_abbreviations) can be viewed in the table.

+

+``` bash

+

+paddleocr --image_dir doc/imgs/japan_2.jpg --lang=japan

+```

+

+

+The result is a list, each item contains a text box, text and recognition confidence

+```text

+[[[671.0, 60.0], [847.0, 63.0], [847.0, 104.0], [671.0, 102.0]], ('もちもち', 0.9993342)]

+[[[394.0, 82.0], [536.0, 77.0], [538.0, 127.0], [396.0, 132.0]], ('自然の', 0.9919842)]

+[[[880.0, 89.0], [1014.0, 93.0], [1013.0, 127.0], [879.0, 124.0]], ('とろっと', 0.9976762)]

+[[[1067.0, 101.0], [1294.0, 101.0], [1294.0, 138.0], [1067.0, 138.0]], ('后味のよい', 0.9988712)]

+......

+```

+

+* Recognition

+

+```bash

+paddleocr --image_dir doc/imgs_words/japan/1.jpg --det false --lang=japan

+```

+

+

+

+The result is a tuple, which returns the recognition result and recognition confidence

+

+```text

+('したがって', 0.99965394)

+```

+

+* Detection

+

+```

+paddleocr --image_dir PaddleOCR/doc/imgs/11.jpg --rec false

+```

+

+The result is a list, each item contains only text boxes

+

+```

+[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

+[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

+[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

+......

+```

+

+

+### 2.2 python script running

+

+ppocr also supports running in python scripts for easy embedding in your own code:

+

+* Whole image prediction (detection + recognition)

+

+```

+from paddleocr import PaddleOCR, draw_ocr

+

+# Also switch the language by modifying the lang parameter

+ocr = PaddleOCR(lang="korean") # The model file will be downloaded automatically when executed for the first time

+img_path ='doc/imgs/korean_1.jpg'

+result = ocr.ocr(img_path)

+# Print detection frame and recognition result

+for line in result:

+ print(line)

+

+# Visualization

+from PIL import Image

+image = Image.open(img_path).convert('RGB')

+boxes = [line[0] for line in result]

+txts = [line[1][0] for line in result]

+scores = [line[1][1] for line in result]

+im_show = draw_ocr(image, boxes, txts, scores, font_path='/path/to/PaddleOCR/doc/korean.ttf')

+im_show = Image.fromarray(im_show)

+im_show.save('result.jpg')

+```

+

+Visualization of results:

+

+

+

+* Recognition

+

+```

+from paddleocr import PaddleOCR

+ocr = PaddleOCR(lang="german")

+img_path ='PaddleOCR/doc/imgs_words/german/1.jpg'

+result = ocr.ocr(img_path, det=False, cls=True)

+for line in result:

+ print(line)

+```

+

+

+

+The result is a tuple, which only contains the recognition result and recognition confidence

+

+```

+('leider auch jetzt', 0.97538936)

+```

+

+* Detection

+

+```python

+from paddleocr import PaddleOCR, draw_ocr

+ocr = PaddleOCR() # need to run only once to download and load model into memory

+img_path ='PaddleOCR/doc/imgs_en/img_12.jpg'

+result = ocr.ocr(img_path, rec=False)

+for line in result:

+ print(line)

+

+# show result

+from PIL import Image

+

+image = Image.open(img_path).convert('RGB')

+im_show = draw_ocr(image, result, txts=None, scores=None, font_path='/path/to/PaddleOCR/doc/fonts/simfang.ttf')

+im_show = Image.fromarray(im_show)

+im_show.save('result.jpg')

+```

+The result is a list, each item contains only text boxes

+```bash

+[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

+[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

+[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

+......

+```

+

+Visualization of results:

+

+

+ppocr also supports direction classification. For more usage methods, please refer to: [whl package instructions](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.0/doc/doc_ch/whl.md).

+

+

+## 3 Custom training

+

+ppocr supports using your own data for custom training or finetune, where the recognition model can refer to [French configuration file](../../configs/rec/multi_language/rec_french_lite_train.yml)

+Modify the training data path, dictionary and other parameters.

+

+For specific data preparation and training process, please refer to: [Text Detection](../doc_en/detection_en.md), [Text Recognition](../doc_en/recognition_en.md), more functions such as predictive deployment,

+For functions such as data annotation, you can read the complete [Document Tutorial](../../README.md).

+

+

+## 4 Support languages and abbreviations

+

+| Language | Abbreviation |

+| --- | --- |

+|chinese and english|ch|

+|english|en|

+|french|fr|

+|german|german|

+|japan|japan|

+|korean|korean|

+|chinese traditional |ch_tra|

+| Italian |it|

+|Spanish |es|

+| Portuguese|pt|

+|Russia|ru|

+|Arabic|ar|

+|Hindi|hi|

+|Uyghur|ug|

+|Persian|fa|

+|Urdu|ur|

+| Serbian(latin) |rs_latin|

+|Occitan |oc|

+|Marathi|mr|

+|Nepali|ne|

+|Serbian(cyrillic)|rs_cyrillic|

+|Bulgarian |bg|

+|Ukranian|uk|

+|Belarusian|be|

+|Telugu |te|

+|Kannada |kn|

+|Tamil |ta|

+|Afrikaans |af|

+|Azerbaijani |az|

+|Bosnian|bs|

+|Czech|cs|

+|Welsh |cy|

+|Danish|da|

+|Estonian |et|

+|Irish |ga|

+|Croatian |hr|

+|Hungarian |hu|

+|Indonesian|id|

+|Icelandic|is|

+|Kurdish|ku|

+|Lithuanian |lt|

+ |Latvian |lv|

+|Maori|mi|

+|Malay|ms|

+|Maltese |mt|

+|Dutch |nl|

+|Norwegian |no|

+|Polish |pl|

+|Romanian |ro|

+|Slovak |sk|

+|Slovenian |sl|

+|Albanian |sq|

+|Swedish |sv|

+|Swahili |sw|

+|Tagalog |tl|

+|Turkish |tr|

+|Uzbek |uz|

+|Vietnamese |vi|

+|Mongolian |mn|

+|Abaza |abq|

+|Adyghe |ady|

+|Kabardian |kbd|

+|Avar |ava|

+|Dargwa |dar|

+|Ingush |inh|

+|Lak |lbe|

+|Lezghian |lez|

+|Tabassaran |tab|

+|Bihari |bh|

+|Maithili |mai|

+|Angika |ang|

+|Bhojpuri |bho|

+|Magahi |mah|

+|Nagpur |sck|

+|Newari |new|

+|Goan Konkani|gom|

+|Saudi Arabia|sa|

diff --git a/doc/doc_en/pgnet_en.md b/doc/doc_en/pgnet_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..0f47f0e656f922e944710a746a6cd29ab6d46d8e

--- /dev/null

+++ b/doc/doc_en/pgnet_en.md

@@ -0,0 +1,175 @@

+# End-to-end OCR Algorithm-PGNet

+- [1. Brief Introduction](#Brief_Introduction)

+- [2. Environment Configuration](#Environment_Configuration)

+- [3. Quick Use](#Quick_Use)

+- [4. Model Training,Evaluation And Inference](#Model_Training_Evaluation_And_Inference)

+

+

+## 1. Brief Introduction

+OCR algorithm can be divided into two-stage algorithm and end-to-end algorithm. The two-stage OCR algorithm is generally divided into two parts, text detection and text recognition algorithm. The text detection algorithm gets the detection box of the text line from the image, and then the recognition algorithm identifies the content of the text box. The end-to-end OCR algorithm can complete text detection and recognition in one algorithm. Its basic idea is to design a model with both detection unit and recognition module, share the CNN features of both and train them together. Because one algorithm can complete character recognition, the end-to-end model is smaller and faster.

+### Introduction Of PGNet Algorithm

+In recent years, the end-to-end OCR algorithm has been well developed, including MaskTextSpotter series, TextSnake, TextDragon, PGNet series and so on. Among these algorithms, PGNet algorithm has the advantages that other algorithms do not

+- Pgnet loss is designed to guide training, and no character-level annotations is needed

+- NMS and ROI related operations are not needed, It can accelerate the prediction

+- The reading order prediction module is proposed

+- A graph based modification module (GRM) is proposed to further improve the performance of model recognition

+- Higher accuracy and faster prediction speed

+

+For details of PGNet algorithm, please refer to [paper](https://www.aaai.org/AAAI21Papers/AAAI-2885.WangP.pdf), The schematic diagram of the algorithm is as follows:

+

+After feature extraction, the input image is sent to four branches: TBO module for text edge offset prediction, TCL module for text centerline prediction, TDO module for text direction offset prediction, and TCC module for text character classification graph prediction.

+The output of TBO and TCL can get text detection results after post-processing, and TCL, TDO and TCC are responsible for text recognition.

+

+The results of detection and recognition are as follows:

+

+

+

+

+## 2. Environment Configuration

+Please refer to [Quick Installation](./installation_en.md) Configure the PaddleOCR running environment.

+

+

+## 3. Quick Use

+### inference model download

+This section takes the trained end-to-end model as an example to quickly use the model prediction. First, download the trained end-to-end inference model [download address](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/e2e_server_pgnetA_infer.tar)

+```

+mkdir inference && cd inference

+# Download the English end-to-end model and unzip it

+wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/e2e_server_pgnetA_infer.tar && tar xf e2e_server_pgnetA_infer.tar

+```

+* In Windows environment, if 'wget' is not installed, the link can be copied to the browser when downloading the model, and decompressed and placed in the corresponding directory

+

+After decompression, there should be the following file structure:

+```

+├── e2e_server_pgnetA_infer

+│ ├── inference.pdiparams

+│ ├── inference.pdiparams.info

+│ └── inference.pdmodel

+```

+### Single image or image set prediction

+```bash

+# Prediction single image specified by image_dir

+python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True

+

+# Prediction the collection of images specified by image_dir

+python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True

+

+# If you want to use CPU for prediction, you need to set use_gpu parameter is false

+python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True --use_gpu=False

+```

+### Visualization results

+The visualized end-to-end results are saved to the `./inference_results` folder by default, and the name of the result file is prefixed with 'e2e_res'. Examples of results are as follows:

+

+

+

+## 4. Model Training,Evaluation And Inference

+This section takes the totaltext dataset as an example to introduce the training, evaluation and testing of the end-to-end model in PaddleOCR.

+

+### Data Preparation

+Download and unzip [totaltext](https://github.com/cs-chan/Total-Text-Dataset/blob/master/Dataset/README.md) dataset to PaddleOCR/train_data/, dataset organization structure is as follow:

+```

+/PaddleOCR/train_data/total_text/train/

+ |- rgb/ # total_text training data of dataset

+ |- gt_0.png

+ | ...

+ |- total_text.txt # total_text training annotation of dataset

+```

+

+total_text.txt: the format of dimension file is as follows,the file name and annotation information are separated by "\t":

+```

+" Image file name Image annotation information encoded by json.dumps"

+rgb/gt_0.png [{"transcription": "EST", "points": [[1004.0,689.0],[1019.0,698.0],[1034.0,708.0],[1049.0,718.0],[1064.0,728.0],[1079.0,738.0],[1095.0,748.0],[1094.0,774.0],[1079.0,765.0],[1065.0,756.0],[1050.0,747.0],[1036.0,738.0],[1021.0,729.0],[1007.0,721.0]]}, {...}]

+```

+The image annotation after **json.dumps()** encoding is a list containing multiple dictionaries.

+

+The `points` in the dictionary represent the coordinates (x, y) of the four points of the text box, arranged clockwise from the point at the upper left corner.

+

+`transcription` represents the text of the current text box. **When its content is "###" it means that the text box is invalid and will be skipped during training.**

+

+If you want to train PaddleOCR on other datasets, please build the annotation file according to the above format.

+

+

+### Start Training

+

+PGNet training is divided into two steps: Step 1: training on the synthetic data to get the pretrain_model, and the accuracy of the model is still low; step 2: loading the pretrain_model and training on the totaltext data set; for fast training, we directly provide the pre training model of step 1[download link](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/train_step1.tar).

+```shell

+cd PaddleOCR/

+download step1 pretrain_models

+wget -P ./pretrain_models/ https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/train_step1.tar

+You can get the following file format

+./pretrain_models/train_step1/

+ └─ best_accuracy.pdopt

+ └─ best_accuracy.states

+ └─ best_accuracy.pdparams

+```

+*If CPU version installed, please set the parameter `use_gpu` to `false` in the configuration.*

+

+```shell

+# single GPU training

+python3 tools/train.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.pretrained_model=./pretrain_models/train_step1/best_accuracy Global.load_static_weights=False

+# multi-GPU training

+# Set the GPU ID used by the '--gpus' parameter.

+python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.pretrained_model=./pretrain_models/train_step1/best_accuracy Global.load_static_weights=False

+```

+

+In the above instruction, use `-c` to select the training to use the `configs/e2e/e2e_r50_vd_pg.yml` configuration file.

+For a detailed explanation of the configuration file, please refer to [config](./config_en.md).

+

+You can also use `-o` to change the training parameters without modifying the yml file. For example, adjust the training learning rate to 0.0001

+```shell

+python3 tools/train.py -c configs/e2e/e2e_r50_vd_pg.yml -o Optimizer.base_lr=0.0001

+```

+

+#### Load trained model and continue training

+If you expect to load trained model and continue the training again, you can specify the parameter `Global.checkpoints` as the model path to be loaded.

+```shell

+python3 tools/train.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.checkpoints=./your/trained/model

+```

+

+**Note**: The priority of `Global.checkpoints` is higher than that of `Global.pretrain_weights`, that is, when two parameters are specified at the same time, the model specified by `Global.checkpoints` will be loaded first. If the model path specified by `Global.checkpoints` is wrong, the one specified by `Global.pretrain_weights` will be loaded.

+

+PaddleOCR calculates three indicators for evaluating performance of OCR end-to-end task: Precision, Recall, and Hmean.

+

+

+Run the following code to calculate the evaluation indicators. The result will be saved in the test result file specified by `save_res_path` in the configuration file `e2e_r50_vd_pg.yml`

+When evaluating, set post-processing parameters `max_side_len=768`. If you use different datasets, different models for training.

+The model parameters during training are saved in the `Global.save_model_dir` directory by default. When evaluating indicators, you need to set `Global.checkpoints` to point to the saved parameter file.

+```shell

+python3 tools/eval.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.checkpoints="{path/to/weights}/best_accuracy"

+```

+

+### Model Test

+Test the end-to-end result on a single image:

+```shell

+python3 tools/infer_e2e.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.infer_img="./doc/imgs_en/img_10.jpg" Global.pretrained_model="./output/det_db/best_accuracy" Global.load_static_weights=false

+```

+

+Test the end-to-end result on all images in the folder:

+```shell

+python3 tools/infer_e2e.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.infer_img="./doc/imgs_en/" Global.pretrained_model="./output/det_db/best_accuracy" Global.load_static_weights=false

+```

+

+### Model inference

+#### (1).Quadrangle text detection model (ICDAR2015)

+First, convert the model saved in the PGNet end-to-end training process into an inference model. In the first stage of training based on composite dataset, the model of English data set training is taken as an example[model download link](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/en_server_pgnetA.tar), you can use the following command to convert:

+```

+wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/en_server_pgnetA.tar && tar xf en_server_pgnetA.tar

+python3 tools/export_model.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.pretrained_model=./en_server_pgnetA/iter_epoch_450 Global.load_static_weights=False Global.save_inference_dir=./inference/e2e

+```

+**For PGNet quadrangle end-to-end model inference, you need to set the parameter `--e2e_algorithm="PGNet"`**, run the following command:

+```

+python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img_10.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=False

+```

+The visualized text detection results are saved to the `./inference_results` folder by default, and the name of the result file is prefixed with 'e2e_res'. Examples of results are as follows:

+

+

+

+#### (2). Curved text detection model (Total-Text)

+For the curved text example, we use the same model as the quadrilateral

+**For PGNet end-to-end curved text detection model inference, you need to set the parameter `--e2e_algorithm="PGNet"` and `--e2e_pgnet_polygon=True`**, run the following command:

+```

+python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True

+```

+The visualized text detection results are saved to the `./inference_results` folder by default, and the name of the result file is prefixed with 'e2e_res'. Examples of results are as follows:

+

+

diff --git a/doc/imgs_results/whl/12_det.jpg b/doc/imgs_results/whl/12_det.jpg

index 1d5ccf2a6b5d3fa9516560e0cb2646ad6b917da6..71627f0b8db8fdc6e1bf0c4601f0311160d3164d 100644

Binary files a/doc/imgs_results/whl/12_det.jpg and b/doc/imgs_results/whl/12_det.jpg differ

diff --git a/paddleocr.py b/paddleocr.py

index c3741b264503534ef3e64531c2576273d8ccfd11..47e1267ac40effbe8b4ab80723c66eb5378be179 100644

--- a/paddleocr.py

+++ b/paddleocr.py

@@ -66,6 +66,46 @@ model_urls = {

'url':

'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/japan_mobile_v2.0_rec_infer.tar',

'dict_path': './ppocr/utils/dict/japan_dict.txt'

+ },

+ 'chinese_cht': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/chinese_cht_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/chinese_cht_dict.txt'

+ },

+ 'ta': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ta_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/ta_dict.txt'

+ },

+ 'te': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/te_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/te_dict.txt'

+ },

+ 'ka': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ka_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/ka_dict.txt'

+ },

+ 'latin': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/latin_ppocr_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/latin_dict.txt'

+ },

+ 'arabic': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/arabic_ppocr_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/arabic_dict.txt'

+ },

+ 'cyrillic': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/cyrillic_ppocr_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/cyrillic_dict.txt'

+ },

+ 'devanagari': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/devanagari_ppocr_mobile_v2.0_rec_infer.tar',

+ 'dict_path': './ppocr/utils/dict/devanagari_dict.txt'

}

},

'cls':

@@ -233,6 +273,29 @@ class PaddleOCR(predict_system.TextSystem):

postprocess_params.__dict__.update(**kwargs)

self.use_angle_cls = postprocess_params.use_angle_cls

lang = postprocess_params.lang

+ latin_lang = [

+ 'af', 'az', 'bs', 'cs', 'cy', 'da', 'de', 'en', 'es', 'et', 'fr',

+ 'ga', 'hr', 'hu', 'id', 'is', 'it', 'ku', 'la', 'lt', 'lv', 'mi',

+ 'ms', 'mt', 'nl', 'no', 'oc', 'pi', 'pl', 'pt', 'ro', 'rs_latin',

+ 'sk', 'sl', 'sq', 'sv', 'sw', 'tl', 'tr', 'uz', 'vi'

+ ]

+ arabic_lang = ['ar', 'fa', 'ug', 'ur']

+ cyrillic_lang = [

+ 'ru', 'rs_cyrillic', 'be', 'bg', 'uk', 'mn', 'abq', 'ady', 'kbd',

+ 'ava', 'dar', 'inh', 'che', 'lbe', 'lez', 'tab'

+ ]

+ devanagari_lang = [

+ 'hi', 'mr', 'ne', 'bh', 'mai', 'ang', 'bho', 'mah', 'sck', 'new',

+ 'gom', 'sa', 'bgc'

+ ]

+ if lang in latin_lang:

+ lang = "latin"

+ elif lang in arabic_lang:

+ lang = "arabic"

+ elif lang in cyrillic_lang:

+ lang = "cyrillic"

+ elif lang in devanagari_lang:

+ lang = "devanagari"

assert lang in model_urls[

'rec'], 'param lang must in {}, but got {}'.format(

model_urls['rec'].keys(), lang)

diff --git a/ppocr/utils/dict/arabic_dict.txt b/ppocr/utils/dict/arabic_dict.txt

new file mode 100644

index 0000000000000000000000000000000000000000..e97abf39274df77fbad066ee4635aebc6743140c

--- /dev/null

+++ b/ppocr/utils/dict/arabic_dict.txt

@@ -0,0 +1,162 @@

+

+!

+#

+$

+%

+&

+'

+(

++

+,

+-

+.

+/

+0

+1

+2

+3

+4

+5

+6

+7

+8

+9

+:

+?

+@

+A

+B

+C

+D

+E

+F

+G

+H

+I

+J

+K

+L

+M

+N

+O

+P

+Q

+R

+S

+T

+U

+V

+W

+X

+Y

+Z

+_

+a

+b

+c

+d

+e

+f

+g

+h

+i

+j

+k

+l

+m

+n

+o

+p

+q

+r

+s

+t

+u

+v

+w

+x

+y

+z

+É

+é

+ء

+آ

+أ

+ؤ

+إ

+ئ

+ا

+ب

+ة

+ت

+ث

+ج

+ح

+خ

+د

+ذ

+ر

+ز

+س

+ش

+ص

+ض

+ط

+ظ

+ع

+غ

+ف

+ق

+ك

+ل

+م

+ن

+ه

+و

+ى

+ي

+ً

+ٌ

+ٍ

+َ

+ُ

+ِ

+ّ

+ْ

+ٓ

+ٔ

+ٰ

+ٱ

+ٹ

+پ

+چ

+ڈ

+ڑ

+ژ

+ک

+ڭ

+گ

+ں

+ھ

+ۀ

+ہ

+ۂ

+ۃ

+ۆ

+ۇ

+ۈ

+ۋ

+ی

+ې

+ے

+ۓ

+ە

+١

+٢

+٣

+٤

+٥

+٦

+٧

+٨

+٩

diff --git a/ppocr/utils/dict/cyrillic_dict.txt b/ppocr/utils/dict/cyrillic_dict.txt

new file mode 100644

index 0000000000000000000000000000000000000000..2b6f66494d5417e18bbd225719aa72690e09e126

--- /dev/null

+++ b/ppocr/utils/dict/cyrillic_dict.txt

@@ -0,0 +1,163 @@

+

+!

+#

+$

+%

+&

+'

+(

++

+,

+-

+.

+/

+0

+1

+2

+3

+4

+5

+6

+7

+8

+9

+:

+?

+@

+A

+B

+C

+D

+E

+F

+G

+H

+I

+J

+K

+L

+M

+N

+O

+P

+Q

+R

+S

+T

+U

+V

+W

+X

+Y

+Z

+_

+a

+b

+c

+d

+e

+f

+g

+h

+i

+j

+k

+l

+m

+n

+o

+p

+q

+r

+s

+t

+u

+v

+w

+x

+y

+z

+É

+é

+Ё

+Є

+І

+Ј

+Љ

+Ў

+А

+Б

+В

+Г

+Д

+Е

+Ж

+З

+И

+Й

+К

+Л

+М

+Н

+О

+П

+Р

+С

+Т

+У

+Ф

+Х

+Ц

+Ч

+Ш

+Щ

+Ъ

+Ы

+Ь

+Э

+Ю

+Я

+а

+б

+в

+г

+д

+е

+ж

+з

+и

+й

+к

+л

+м

+н

+о

+п

+р

+с

+т

+у

+ф

+х

+ц

+ч

+ш

+щ

+ъ

+ы

+ь

+э

+ю

+я

+ё

+ђ

+є

+і

+ј

+љ

+њ

+ћ

+ў

+џ

+Ґ

+ґ

diff --git a/ppocr/utils/dict/devanagari_dict.txt b/ppocr/utils/dict/devanagari_dict.txt

new file mode 100644

index 0000000000000000000000000000000000000000..f55923061bfd480b875bb3679d7a75a9157387a9

--- /dev/null

+++ b/ppocr/utils/dict/devanagari_dict.txt

@@ -0,0 +1,167 @@

+

+!

+#

+$

+%

+&

+'

+(

++

+,

+-

+.

+/

+0

+1

+2

+3

+4

+5

+6

+7

+8

+9

+:

+?

+@

+A

+B

+C

+D

+E

+F

+G

+H

+I

+J

+K

+L

+M

+N

+O

+P

+Q

+R

+S

+T

+U

+V

+W

+X

+Y

+Z

+_

+a

+b

+c

+d

+e

+f

+g

+h

+i

+j

+k

+l

+m

+n

+o

+p

+q

+r

+s

+t

+u

+v

+w

+x

+y

+z

+É

+é

+ँ

+ं

+ः

+अ

+आ

+इ

+ई

+उ

+ऊ

+ऋ

+ए

+ऐ

+ऑ

+ओ

+औ

+क

+ख

+ग

+घ

+ङ

+च

+छ

+ज

+झ

+ञ

+ट

+ठ

+ड

+ढ

+ण

+त

+थ

+द

+ध

+न

+ऩ

+प

+फ

+ब

+भ

+म

+य

+र

+ऱ

+ल

+ळ

+व

+श

+ष

+स

+ह

+़

+ा

+ि

+ी

+ु

+ू

+ृ

+ॅ

+े

+ै

+ॉ

+ो

+ौ

+्

+॒

+क़

+ख़

+ग़

+ज़

+ड़

+ढ़

+फ़

+ॠ

+।

+०

+१

+२

+३

+४

+५

+६

+७

+८

+९

+॰

diff --git a/ppocr/utils/dict/latin_dict.txt b/ppocr/utils/dict/latin_dict.txt

new file mode 100644

index 0000000000000000000000000000000000000000..e166bf33ecfbdc90ddb3d9743fded23306acabd5

--- /dev/null

+++ b/ppocr/utils/dict/latin_dict.txt

@@ -0,0 +1,185 @@

+

+!

+"

+#

+$

+%

+&

+'

+(

+)

+*

++

+,

+-

+.

+/

+0

+1

+2

+3

+4

+5

+6

+7

+8

+9

+:

+;

+<

+=

+>

+?

+@

+A

+B

+C

+D

+E

+F

+G

+H

+I

+J

+K

+L

+M

+N

+O

+P

+Q

+R

+S

+T

+U

+V

+W

+X

+Y

+Z

+[

+]

+_

+`

+a

+b

+c

+d

+e

+f

+g

+h

+i

+j

+k

+l

+m

+n

+o

+p

+q

+r

+s

+t

+u

+v

+w

+x

+y

+z

+{

+}

+¡

+£

+§

+ª

+«

+

+°

+²

+³

+´

+µ

+·

+º

+»

+¿

+À

+Á

+Â

+Ä

+Å

+Ç

+È

+É

+Ê

+Ë

+Ì

+Í

+Î

+Ï

+Ò

+Ó

+Ô

+Õ

+Ö

+Ú

+Ü

+Ý

+ß

+à

+á

+â

+ã

+ä

+å

+æ

+ç

+è

+é

+ê

+ë

+ì

+í

+î

+ï

+ñ

+ò

+ó

+ô

+õ

+ö

+ø

+ù

+ú

+û

+ü

+ý

+ą

+Ć

+ć

+Č

+č

+Đ

+đ

+ę

+ı

+Ł

+ł

+ō

+Œ

+œ

+Š

+š

+Ÿ

+Ž

+ž

+ʒ

+β

+δ

+ε

+з

+Ṡ

+‘

+€

+™

diff --git a/ppocr/utils/utility.py b/ppocr/utils/utility.py

index 29576d971486326aec3c93601656d7b982ef3336..7bb4c906d298af54ed56e2805f487a2c22d1894b 100755

--- a/ppocr/utils/utility.py

+++ b/ppocr/utils/utility.py

@@ -61,6 +61,7 @@ def get_image_file_list(img_file):

imgs_lists.append(file_path)

if len(imgs_lists) == 0:

raise Exception("not found any img file in {}".format(img_file))

+ imgs_lists = sorted(imgs_lists)

return imgs_lists

diff --git a/setup.py b/setup.py

index 70400df484128ba751da5f97503cc7f84e260d86..d491adb17e6251355c0190d0ddecb9a82b09bc2e 100644

--- a/setup.py

+++ b/setup.py

@@ -32,7 +32,7 @@ setup(

package_dir={'paddleocr': ''},

include_package_data=True,

entry_points={"console_scripts": ["paddleocr= paddleocr.paddleocr:main"]},

- version='2.0.3',

+ version='2.0.4',

install_requires=requirements,

license='Apache License 2.0',

description='Awesome OCR toolkits based on PaddlePaddle (8.6M ultra-lightweight pre-trained model, support training and deployment among server, mobile, embeded and IoT devices',

diff --git a/tools/eval.py b/tools/eval.py

index 4afed469c875ef8d2200cdbfd89e5a8af4c6b7c3..9817fa75093dd5127e3d11501ebc0473c9b53365 100755

--- a/tools/eval.py

+++ b/tools/eval.py

@@ -59,10 +59,10 @@ def main():

eval_class = build_metric(config['Metric'])

# start eval

- metirc = program.eval(model, valid_dataloader, post_process_class,

+ metric = program.eval(model, valid_dataloader, post_process_class,

eval_class, use_srn)

logger.info('metric eval ***************')

- for k, v in metirc.items():

+ for k, v in metric.items():

logger.info('{}:{}'.format(k, v))

diff --git a/tools/export_model.py b/tools/export_model.py

index 1e9526e03d6b9001249d5891c37bee071c1f36a3..f587b2bb363e01ab4c0b2429fc95f243085649d1 100755

--- a/tools/export_model.py

+++ b/tools/export_model.py

@@ -31,14 +31,6 @@ from ppocr.utils.logging import get_logger

from tools.program import load_config, merge_config, ArgsParser

-def parse_args():

- parser = argparse.ArgumentParser()

- parser.add_argument("-c", "--config", help="configuration file to use")

- parser.add_argument(

- "-o", "--output_path", type=str, default='./output/infer/')

- return parser.parse_args()

-

-

def main():

FLAGS = ArgsParser().parse_args()

config = load_config(FLAGS.config)

diff --git a/tools/infer/predict_rec.py b/tools/infer/predict_rec.py

index 1cb6e01b087ff98efb0a57be3cc58a79425fea57..24388026b8f395427c93e285ed550446e3aa9b9c 100755

--- a/tools/infer/predict_rec.py

+++ b/tools/infer/predict_rec.py

@@ -41,6 +41,7 @@ class TextRecognizer(object):

self.character_type = args.rec_char_type

self.rec_batch_num = args.rec_batch_num

self.rec_algorithm = args.rec_algorithm

+ self.max_text_length = args.max_text_length

postprocess_params = {

'name': 'CTCLabelDecode',

"character_type": args.rec_char_type,

@@ -186,8 +187,9 @@ class TextRecognizer(object):

norm_img = norm_img[np.newaxis, :]

norm_img_batch.append(norm_img)

else:

- norm_img = self.process_image_srn(

- img_list[indices[ino]], self.rec_image_shape, 8, 25)

+ norm_img = self.process_image_srn(img_list[indices[ino]],

+ self.rec_image_shape, 8,

+ self.max_text_length)

encoder_word_pos_list = []

gsrm_word_pos_list = []

gsrm_slf_attn_bias1_list = []

diff --git a/tools/infer/predict_system.py b/tools/infer/predict_system.py

index de7ee9d342063161f2e329c99d2428051c0ecf8c..ba81aff0a940fbee234e59e98f73c62fc7f69f09 100755

--- a/tools/infer/predict_system.py

+++ b/tools/infer/predict_system.py

@@ -13,6 +13,7 @@

# limitations under the License.

import os

import sys

+import subprocess

__dir__ = os.path.dirname(os.path.abspath(__file__))

sys.path.append(__dir__)

@@ -141,6 +142,7 @@ def sorted_boxes(dt_boxes):

def main(args):

image_file_list = get_image_file_list(args.image_dir)

+ image_file_list = image_file_list[args.process_id::args.total_process_num]

text_sys = TextSystem(args)

is_visualize = True

font_path = args.vis_font_path

@@ -184,4 +186,18 @@ def main(args):

if __name__ == "__main__":

- main(utility.parse_args())

+ args = utility.parse_args()

+ if args.use_mp:

+ p_list = []

+ total_process_num = args.total_process_num

+ for process_id in range(total_process_num):

+ cmd = [sys.executable, "-u"] + sys.argv + [

+ "--process_id={}".format(process_id),

+ "--use_mp={}".format(False)

+ ]

+ p = subprocess.Popen(cmd, stdout=sys.stdout, stderr=sys.stdout)

+ p_list.append(p)

+ for p in p_list:

+ p.wait()

+ else:

+ main(args)

diff --git a/tools/infer/utility.py b/tools/infer/utility.py

index 9019f003b44d9ecb69ed390fba8cc97d4d074cd5..b273eaf3258421d5c5c30c132f99e78f9f0999ba 100755

--- a/tools/infer/utility.py

+++ b/tools/infer/utility.py

@@ -98,6 +98,10 @@ def parse_args():

parser.add_argument("--enable_mkldnn", type=str2bool, default=False)

parser.add_argument("--use_pdserving", type=str2bool, default=False)

+ parser.add_argument("--use_mp", type=str2bool, default=False)

+ parser.add_argument("--total_process_num", type=int, default=1)

+ parser.add_argument("--process_id", type=int, default=0)

+

return parser.parse_args()