Merge branch 'dygraph' of https://github.com/PaddlePaddle/PaddleOCR into dygraph

configs/det/det_r50_drrg_ctw.yml

0 → 100755

configs/rec/rec_d28_can.yml

0 → 100644

configs/sr/sr_telescope.yml

0 → 100644

11.5 KB

14.9 KB

4.8 KB

doc/doc_ch/algorithm_det_drrg.md

0 → 100644

doc/doc_ch/algorithm_rec_can.md

0 → 100644

doc/doc_ch/algorithm_rec_rfl.md

0 → 100644

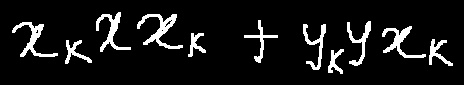

doc/imgs_results/sr_word_52.png

0 → 100644

8.2 KB

ppocr/data/imaug/drrg_targets.py

0 → 100644

This diff is collapsed.

ppocr/ext_op/__init__.py

0 → 100644

ppocr/losses/__init__.py

100755 → 100644

ppocr/losses/det_drrg_loss.py

0 → 100644

This diff is collapsed.

ppocr/losses/rec_can_loss.py

0 → 100644

ppocr/losses/rec_rfl_loss.py

0 → 100644

This diff is collapsed.

ppocr/losses/text_focus_loss.py

0 → 100644

This diff is collapsed.

ppocr/modeling/heads/gcn.py

0 → 100644

This diff is collapsed.

ppocr/modeling/necks/fpn_unet.py

0 → 100644

This diff is collapsed.

ppocr/utils/dict/confuse.pkl

0 → 100644

File added.
