update autodl finetuner (#196)

* update autodl * fix reader that drops dataset in predicting phase * update the directory of tmp.txt

Showing

demo/autofinetune/README.md

0 → 100644

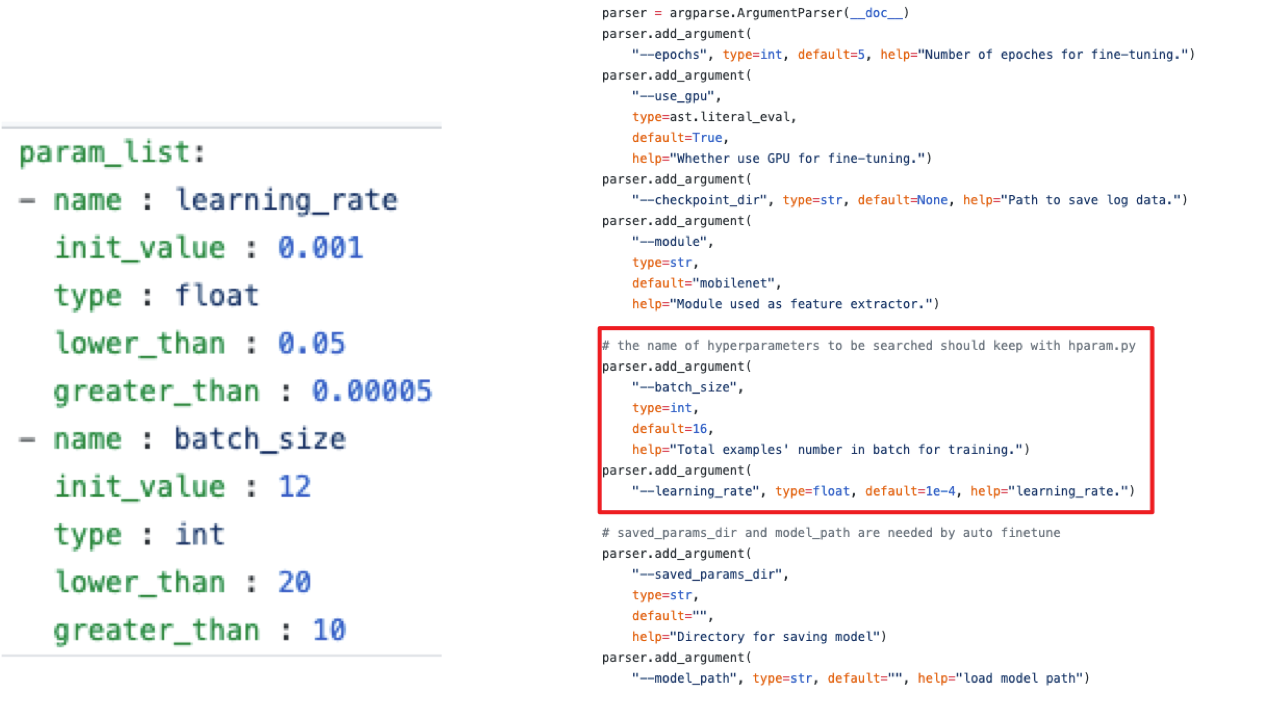

demo/autofinetune/hparam.yaml

0 → 100644

demo/autofinetune/img_cls.py

0 → 100644

docs/imgs/demo.png

0 → 100644

280.4 KB

paddlehub/dataset/tnews.py

0 → 100644

| #for python2, you should use requirements_py2.txt | #for python2, you should use requirements_py2.txt | ||

| pre-commit | pre-commit | ||

| protobuf >= 3.1.0 | protobuf >= 3.6.0 | ||

| yapf == 0.26.0 | yapf == 0.26.0 | ||

| pyyaml | pyyaml | ||

| numpy | numpy | ||

| ... | ... |