diff --git a/.travis.yml b/.travis.yml

index b9308b0258039a3d6b6c69183b0e37d3cfec48ff..fdb117ee50798740ff56d566ec8c66420759a8c4 100644

--- a/.travis.yml

+++ b/.travis.yml

@@ -21,8 +21,13 @@ env:

- PYTHONPATH=${PWD}

install:

- - pip install --upgrade paddlepaddle

- - pip install -r requirements.txt

+ - if [[ $TRAVIS_OS_NAME == osx ]]; then

+ pip3 install --upgrade paddlepaddle;

+ pip3 install -r requirements.txt;

+ else

+ pip install --upgrade paddlepaddle;

+ pip install -r requirements.txt;

+ fi

notifications:

email:
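
The install hunk above selects `pip3` on macOS builds and plain `pip` elsewhere. A minimal Python sketch of that selection follows; the helper names `pip_command` and `install_commands` are hypothetical and are not part of the repository or of Travis CI.

```python
# Illustrative sketch only: mirrors the shell conditional in the install step.
# `pip_command` and `install_commands` are hypothetical helper names.

def pip_command(os_name: str) -> str:
    """Return the pip executable the install step would use for a given OS name."""
    # The diff compares $TRAVIS_OS_NAME against "osx"; every other value uses plain pip.
    return "pip3" if os_name == "osx" else "pip"


def install_commands(os_name: str) -> list:
    """Build the two install commands from the hunk, in the same order."""
    pip = pip_command(os_name)
    return [
        f"{pip} install --upgrade paddlepaddle",
        f"{pip} install -r requirements.txt",
    ]


if __name__ == "__main__":
    for name in ("osx", "linux"):
        print(name, install_commands(name))
```

Both branches run the same two commands; only the executable name differs.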

diff --git a/README.md b/README.md

index a5f6636c3a05912e91cdb21b207288ca7b57a6e6..9a8c178c7d4a6520392b700158e7b11da900741d 100644

--- a/README.md

+++ b/README.md

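@@ -50,7 +50,7 @@ PaddleHub is centered on applying pre-trained models and has the following features:

### Installation command

-For installing the PaddlePaddle framework, see [PaddlePaddle Quick Install](https://www.paddlepaddle.org.cn/install/quick)

+Before installing PaddleHub, install the PaddlePaddle deep learning framework first. For more installation instructions, see [PaddlePaddle Quick Install](https://www.paddlepaddle.org.cn/install/quick)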

```shell

pip install paddlehub

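@@ -66,6 +66,18 @@ PaddleHub adopts the model-as-software design philosophy; all pre-trained models and Pytho

After installing PaddleHub, run the [hub run](./docs/tutorial/cmdintro.md) command to quickly try code-free, one-command prediction: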

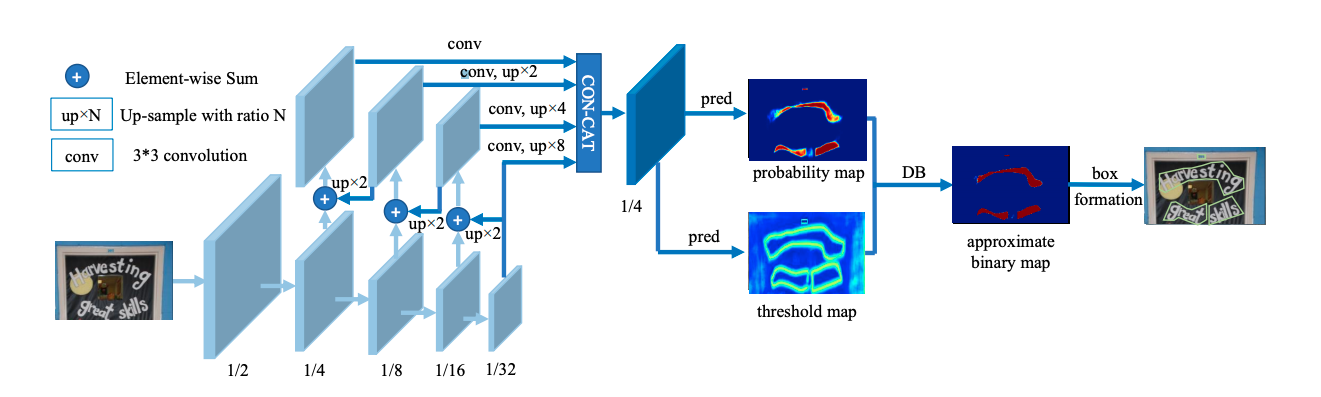

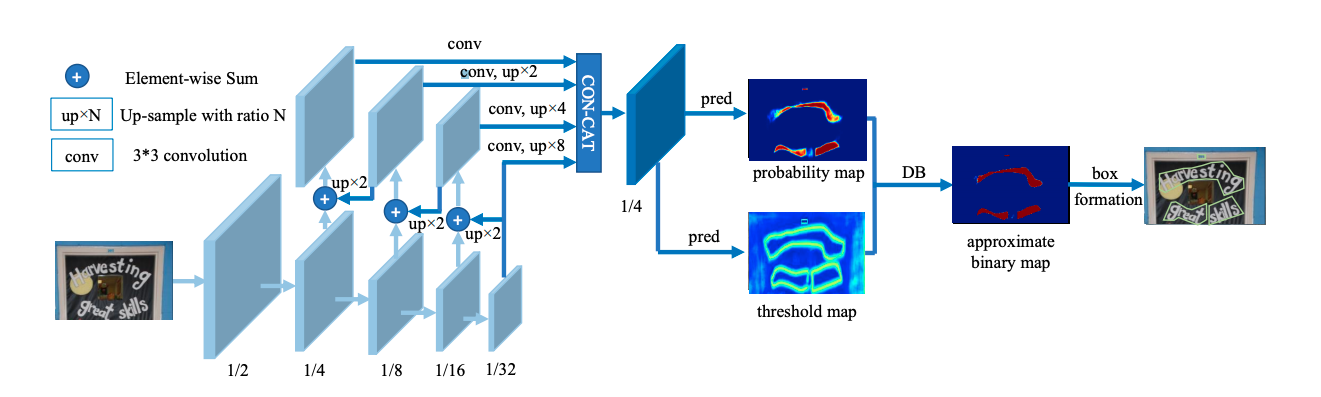

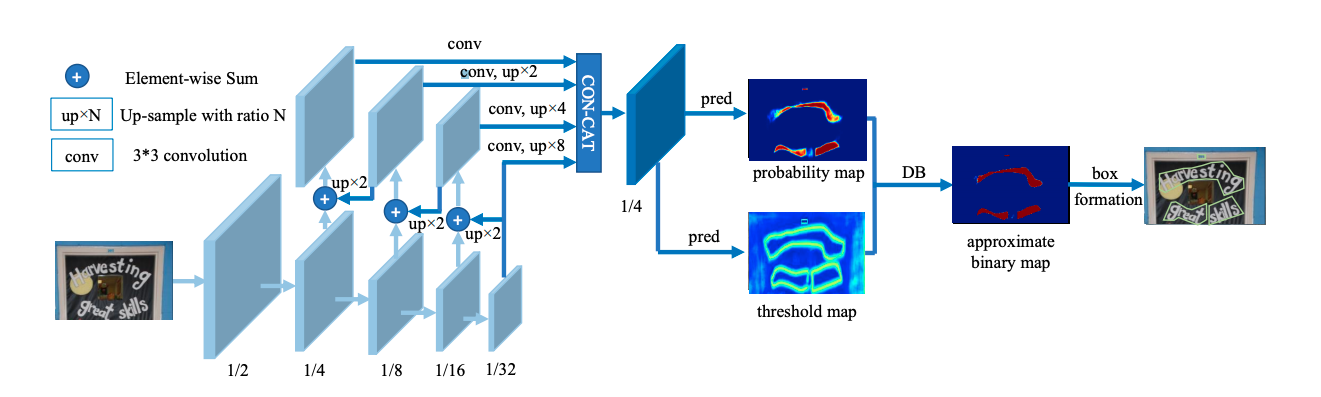

+* Use the lightweight Chinese OCR model chinese_ocr_db_crnn_mobile from the [text recognition](https://www.paddlepaddle.org.cn/hublist?filter=en_category&value=TextRecognition) category to recognize text in an image with a single command.

+```shell

+$ wget https://paddlehub.bj.bcebos.com/model/image/ocr/test_ocr.jpg

+$ hub run chinese_ocr_db_crnn_mobile --input_path test_ocr.jpg --visualization=True

+```

+

+The prediction result image is saved in the ocr_result folder under the current working directory, as shown in the figure below.

+

+

+  +

* Use the [object detection](https://www.paddlepaddle.org.cn/hublist?filter=en_category&value=ObjectDetection) model pyramidbox_lite_mobile_mask to detect face masks in an image

```shell

$ wget https://paddlehub.bj.bcebos.com/resources/test_mask_detection.jpg

@@ -192,5 +204,5 @@ $ hub uninstall ernie

## Release history

-PaddleHub v1.6 has been released!

+PaddleHub v1.7 has been released!

For more upgrade details, see the [release history](./RELEASE.md)

diff --git a/RELEASE.md b/RELEASE.md

index b2e177dfa2de3d09d5e85be59dcd2d1914c8368c..8c8a7a08e085d756f02f5cf9490128a550f27a51 100644

--- a/RELEASE.md

+++ b/RELEASE.md

@@ -1,3 +1,23 @@

+## `v1.7.0`

+

+* Richer pre-trained models and improved applicability

+ * Added the VENUS series of vision pre-trained models [yolov3_darknet53_venus](https://www.paddlepaddle.org.cn/hubdetail?name=yolov3_darknet53_venus&en_category=ObjectDetection) and [faster_rcnn_resnet50_fpn_venus](https://www.paddlepaddle.org.cn/hubdetail?name=faster_rcnn_resnet50_fpn_venus&en_category=ObjectDetection), which can greatly improve Fine-tune results on image classification and object detection tasks

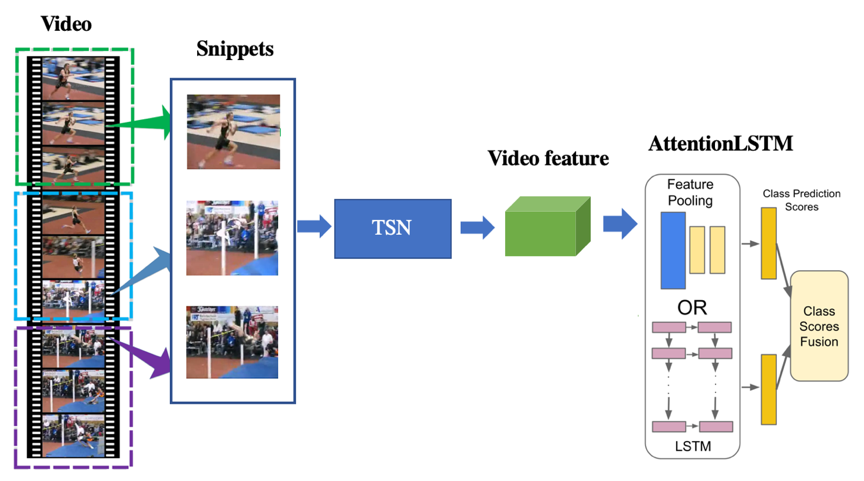

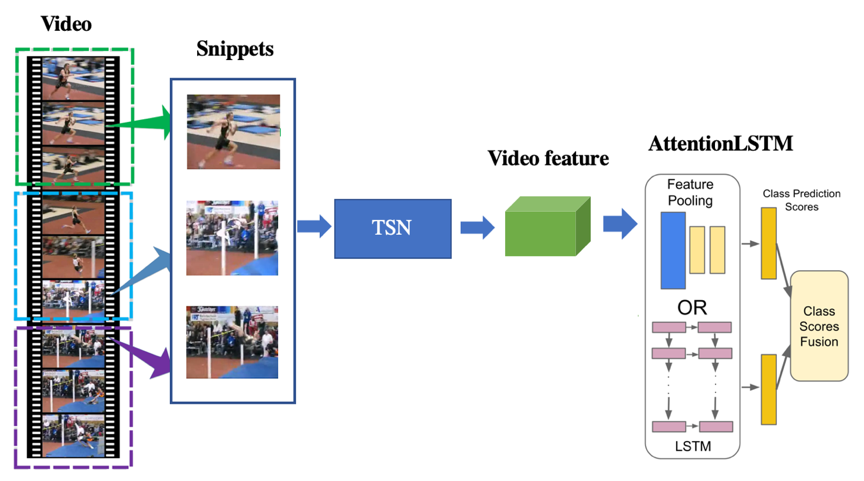

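+ * Added the industrial-grade short-video classification model [videotag_tsn_lstm](https://paddlepaddle.org.cn/hubdetail?name=videotag_tsn_lstm&en_category=VideoClassification), which supports recognition of 3000 classes of Chinese labels

+ * Added the lightweight Chinese OCR models [chinese_ocr_db_rcnn](https://www.paddlepaddle.org.cn/hubdetail?name=chinese_ocr_db_rcnn&en_category=TextRecognition) and [chinese_text_detection_db](https://www.paddlepaddle.org.cn/hubdetail?name=chinese_text_detection_db&en_category=TextRecognition), which support fast one-command OCR recognition

+ * Added industrial-grade models for pedestrian detection, vehicle detection, animal recognition, Object, and more

+

+* Fine-tune API upgrade

+ * Added 6 preset networks for text classification tasks, including CNN, BOW, LSTM, BiLSTM, DPCNN, and others

+ * Use VisualDL to visualize training and evaluation performance data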

+

+## `v1.6.2`

+

+* Fixed an image classification runtime error on Windows

+

+## `v1.6.1`

+

+* Fixed the missing config.json file when installing PaddleHub on Windows

+

# `v1.6.0`

* NLP Module全面升级,提升应用性和灵活性

diff --git a/paddlehub/autodl/DELTA/README.md b/autodl/DELTA/README.md

similarity index 84%

rename from paddlehub/autodl/DELTA/README.md

rename to autodl/DELTA/README.md

index 7b235d46f085ba05aa41ab0dabd9317b3efd09da..8620bb0dec9c0fad617abe6537f5ac93c7c503b8 100644

--- a/paddlehub/autodl/DELTA/README.md

+++ b/autodl/DELTA/README.md

@@ -1,10 +1,12 @@

+# DELTA: DEep Learning Transfer using Feature Map with Attention for Convolutional Networks

-# Introduction

-This page implements the [DELTA](https://arxiv.org/abs/1901.09229) algorithm in [PaddlePaddle](https://www.paddlepaddle.org.cn/install/quick).

+## Introduction

+

+This page implements the [DELTA](https://arxiv.org/abs/1901.09229) algorithm in [PaddlePaddle](https://www.paddlepaddle.org.cn).

> Li, Xingjian, et al. "DELTA: Deep learning transfer using feature map with attention for convolutional networks." ICLR 2019.

-# Preparation of Data and Pre-trained Model

+## Preparation of Data and Pre-trained Model

- Download transfer learning target datasets, like [Caltech-256](http://www.vision.caltech.edu/Image_Datasets/Caltech256/), [CUB_200_2011](http://www.vision.caltech.edu/visipedia/CUB-200-2011.html) or others. Arrange the dataset in this way:

```

@@ -23,7 +25,7 @@ This page implements the [DELTA](https://arxiv.org/abs/1901.09229) algorithm in

- Download [the pretrained models](https://github.com/PaddlePaddle/models/tree/release/1.7/PaddleCV/image_classification#resnet-series). We give the results of ResNet-101 below.

-# Running Scripts

+## Running Scripts

Modify `global_data_path` in `datasets/data_path` to the path root where the dataset is.

diff --git a/paddlehub/autodl/DELTA/args.py b/autodl/DELTA/args.py

similarity index 100%

rename from paddlehub/autodl/DELTA/args.py

rename to autodl/DELTA/args.py

diff --git a/paddlehub/autodl/DELTA/datasets/data_path.py b/autodl/DELTA/datasets/data_path.py

similarity index 100%

rename from paddlehub/autodl/DELTA/datasets/data_path.py

rename to autodl/DELTA/datasets/data_path.py

diff --git a/paddlehub/autodl/DELTA/datasets/readers.py b/autodl/DELTA/datasets/readers.py

similarity index 100%

rename from paddlehub/autodl/DELTA/datasets/readers.py

rename to autodl/DELTA/datasets/readers.py

diff --git a/paddlehub/autodl/DELTA/main.py b/autodl/DELTA/main.py

similarity index 100%

rename from paddlehub/autodl/DELTA/main.py

rename to autodl/DELTA/main.py

diff --git a/paddlehub/autodl/DELTA/models/__init__.py b/autodl/DELTA/models/__init__.py

similarity index 100%

rename from paddlehub/autodl/DELTA/models/__init__.py

rename to autodl/DELTA/models/__init__.py

diff --git a/paddlehub/autodl/DELTA/models/resnet.py b/autodl/DELTA/models/resnet.py

similarity index 100%

rename from paddlehub/autodl/DELTA/models/resnet.py

rename to autodl/DELTA/models/resnet.py

diff --git a/paddlehub/autodl/DELTA/models/resnet_vc.py b/autodl/DELTA/models/resnet_vc.py

similarity index 100%

rename from paddlehub/autodl/DELTA/models/resnet_vc.py

rename to autodl/DELTA/models/resnet_vc.py

diff --git a/demo/autofinetune_image_classification/img_cls.py b/demo/autofinetune_image_classification/img_cls.py

index c1194de2f52877b23924a91610e50284c1e3734a..ba61db1a9d584cde8952ac1f839137c2d604625c 100644

--- a/demo/autofinetune_image_classification/img_cls.py

+++ b/demo/autofinetune_image_classification/img_cls.py

@@ -18,7 +18,7 @@ parser.add_argument(

default="mobilenet",

help="Module used as feature extractor.")

-# the name of hyperparameters to be searched should keep with hparam.py

+# the names of the hyper-parameters to be searched should be consistent with hparam.py

parser.add_argument(

"--batch_size",

type=int,

@@ -27,7 +27,7 @@ parser.add_argument(

parser.add_argument(

"--learning_rate", type=float, default=1e-4, help="learning_rate.")

-# saved_params_dir and model_path are needed by auto finetune

+# saved_params_dir and model_path are needed by auto fine-tune

parser.add_argument(

"--saved_params_dir",

type=str,

@@ -76,7 +76,7 @@ def finetune(args):

img = input_dict["image"]

feed_list = [img.name]

- # Select finetune strategy, setup config and finetune

+ # Select fine-tune strategy, setup config and fine-tune

strategy = hub.DefaultFinetuneStrategy(learning_rate=args.learning_rate)

config = hub.RunConfig(

use_cuda=True,

@@ -100,7 +100,7 @@ def finetune(args):

task.load_parameters(args.model_path)

logger.info("PaddleHub has loaded model from %s" % args.model_path)

- # Finetune by PaddleHub's API

+ # Fine-tune by PaddleHub's API

task.finetune()

# Evaluate by PaddleHub's API

run_states = task.eval()

@@ -114,7 +114,7 @@ def finetune(args):

shutil.copytree(best_model_dir, args.saved_params_dir)

shutil.rmtree(config.checkpoint_dir)

- # acc on dev will be used by auto finetune

+ # acc on dev will be used by auto fine-tune

hub.report_final_result(eval_avg_score["acc"])

diff --git a/demo/autofinetune_text_classification/text_cls.py b/demo/autofinetune_text_classification/text_cls.py

index a08ef35b9468dc7ca76e8b4b9f570c62cc96c58c..198523430b0a07b1afebbc1ef9078b8c41472965 100644

--- a/demo/autofinetune_text_classification/text_cls.py

+++ b/demo/autofinetune_text_classification/text_cls.py

@@ -13,7 +13,7 @@ from paddlehub.common.logger import logger

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--epochs", type=int, default=3, help="epochs.")

-# the name of hyperparameters to be searched should keep with hparam.py

+# the names of the hyper-parameters to be searched should be consistent with hparam.py

parser.add_argument("--batch_size", type=int, default=32, help="batch_size.")

parser.add_argument(

"--learning_rate", type=float, default=5e-5, help="learning_rate.")

@@ -33,7 +33,7 @@ parser.add_argument(

default=None,

help="Directory to model checkpoint")

-# saved_params_dir and model_path are needed by auto finetune

+# saved_params_dir and model_path are needed by auto fine-tune

parser.add_argument(

"--saved_params_dir",

type=str,

@@ -82,14 +82,14 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Select finetune strategy, setup config and finetune

+ # Select fine-tune strategy, setup config and fine-tune

strategy = hub.AdamWeightDecayStrategy(

warmup_proportion=args.warmup_prop,

learning_rate=args.learning_rate,

weight_decay=args.weight_decay,

lr_scheduler="linear_decay")

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

checkpoint_dir=args.checkpoint_dir,

use_cuda=True,

@@ -98,7 +98,7 @@ if __name__ == '__main__':

enable_memory_optim=True,

strategy=strategy)

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

cls_task = hub.TextClassifierTask(

data_reader=reader,

feature=pooled_output,

@@ -125,5 +125,5 @@ if __name__ == '__main__':

shutil.copytree(best_model_dir, args.saved_params_dir)

shutil.rmtree(config.checkpoint_dir)

- # acc on dev will be used by auto finetune

+ # acc on dev will be used by auto fine-tune

hub.report_final_result(eval_avg_score["acc"])

diff --git a/demo/image_classification/img_classifier.py b/demo/image_classification/img_classifier.py

index 40e170a564ddcc9c54a6d6aff08e898466da5320..f79323be30f79509dcf4a0588383a724e2cbbcc5 100644

--- a/demo/image_classification/img_classifier.py

+++ b/demo/image_classification/img_classifier.py

@@ -14,7 +14,7 @@ parser.add_argument("--use_gpu", type=ast.literal_eval, default=True

parser.add_argument("--checkpoint_dir", type=str, default="paddlehub_finetune_ckpt", help="Path to save log data.")

parser.add_argument("--batch_size", type=int, default=16, help="Total examples' number in batch for training.")

parser.add_argument("--module", type=str, default="resnet50", help="Module used as feature extractor.")

-parser.add_argument("--dataset", type=str, default="flowers", help="Dataset to finetune.")

+parser.add_argument("--dataset", type=str, default="flowers", help="Dataset to fine-tune.")

parser.add_argument("--use_data_parallel", type=ast.literal_eval, default=True, help="Whether use data parallel.")

# yapf: enable.

@@ -60,7 +60,7 @@ def finetune(args):

# Setup feed list for data feeder

feed_list = [input_dict["image"].name]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=args.use_data_parallel,

use_cuda=args.use_gpu,

@@ -69,7 +69,7 @@ def finetune(args):

checkpoint_dir=args.checkpoint_dir,

strategy=hub.finetune.strategy.DefaultFinetuneStrategy())

- # Define a reading comprehension finetune task by PaddleHub's API

+ # Define an image classification task by PaddleHub Fine-tune API

task = hub.ImageClassifierTask(

data_reader=data_reader,

feed_list=feed_list,

@@ -77,7 +77,7 @@ def finetune(args):

num_classes=dataset.num_labels,

config=config)

- # Finetune by PaddleHub's API

+ # Fine-tune by PaddleHub's API

task.finetune_and_eval()

diff --git a/demo/image_classification/predict.py b/demo/image_classification/predict.py

index bc2192686b049f95fbfdd9bef6da92598404848c..ac6bc802e2dc3d2b2c54bfcb59a0e58c3161354f 100644

--- a/demo/image_classification/predict.py

+++ b/demo/image_classification/predict.py

@@ -13,7 +13,7 @@ parser.add_argument("--use_gpu", type=ast.literal_eval, default=True

parser.add_argument("--checkpoint_dir", type=str, default="paddlehub_finetune_ckpt", help="Path to save log data.")

parser.add_argument("--batch_size", type=int, default=16, help="Total examples' number in batch for training.")

parser.add_argument("--module", type=str, default="resnet50", help="Module used as a feature extractor.")

-parser.add_argument("--dataset", type=str, default="flowers", help="Dataset to finetune.")

+parser.add_argument("--dataset", type=str, default="flowers", help="Dataset to fine-tune.")

# yapf: enable.

module_map = {

@@ -58,7 +58,7 @@ def predict(args):

# Setup feed list for data feeder

feed_list = [input_dict["image"].name]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=False,

use_cuda=args.use_gpu,

@@ -66,7 +66,7 @@ def predict(args):

checkpoint_dir=args.checkpoint_dir,

strategy=hub.finetune.strategy.DefaultFinetuneStrategy())

- # Define a reading comprehension finetune task by PaddleHub's API

+ # Define an image classification task by PaddleHub Fine-tune API

task = hub.ImageClassifierTask(

data_reader=data_reader,

feed_list=feed_list,

diff --git a/demo/multi_label_classification/multi_label_classifier.py b/demo/multi_label_classification/multi_label_classifier.py

index f958902fe4cade75e5a624e7c84225e4344aae78..76645d2f88fb390e3b36ea3e2c86809d17451284 100644

--- a/demo/multi_label_classification/multi_label_classifier.py

+++ b/demo/multi_label_classification/multi_label_classifier.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

import argparse

import ast

@@ -23,7 +23,7 @@ import paddlehub as hub

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--num_epoch", type=int, default=3, help="Number of epoches for fine-tuning.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether to use GPU for fine-tuning, input should be True or False")

parser.add_argument("--learning_rate", type=float, default=5e-5, help="Learning rate used to train with warmup.")

parser.add_argument("--weight_decay", type=float, default=0.01, help="Weight decay rate for L2 regularizer.")

parser.add_argument("--warmup_proportion", type=float, default=0.1, help="Warmup proportion params for warmup strategy")

@@ -56,13 +56,13 @@ if __name__ == '__main__':

# Use "pooled_output" for classification tasks on an entire sentence.

pooled_output = outputs["pooled_output"]

- # Select finetune strategy, setup config and finetune

+ # Select fine-tune strategy, setup config and fine-tune

strategy = hub.AdamWeightDecayStrategy(

warmup_proportion=args.warmup_proportion,

weight_decay=args.weight_decay,

learning_rate=args.learning_rate)

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_cuda=args.use_gpu,

num_epoch=args.num_epoch,

@@ -70,7 +70,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=strategy)

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

multi_label_cls_task = hub.MultiLabelClassifierTask(

data_reader=reader,

feature=pooled_output,

@@ -78,6 +78,6 @@ if __name__ == '__main__':

num_classes=dataset.num_labels,

config=config)

- # Finetune and evaluate by PaddleHub's API

+ # Fine-tune and evaluate by PaddleHub's API

# will finish training, evaluation, testing, save model automatically

multi_label_cls_task.finetune_and_eval()
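
The demo scripts in this diff declare `--use_gpu` with `type=ast.literal_eval`. A minimal, self-contained sketch of why that matters for boolean flags is shown below; the `build_parser` wrapper and the description string are assumptions for illustration, while the `--use_gpu` argument itself mirrors the one in the diff.

```python
import argparse
import ast


def build_parser():
    """Build a parser with a boolean-like flag parsed via ast.literal_eval."""
    # `build_parser` is a hypothetical wrapper; the demos create the parser at module level.
    parser = argparse.ArgumentParser(description="Sketch of the --use_gpu flag")
    parser.add_argument(
        "--use_gpu",
        type=ast.literal_eval,  # turns the strings "True"/"False" into real booleans
        default=True,
        help="Whether to use GPU for fine-tuning, input should be True or False")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["--use_gpu", "False"])
    print(args.use_gpu, type(args.use_gpu))  # prints: False <class 'bool'>

    # For contrast: type=bool would treat any non-empty string as True.
    print(bool("False"))  # prints: True
```

With `type=bool`, passing `--use_gpu False` would still enable the GPU path, which is presumably why the demos use `ast.literal_eval`.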

diff --git a/demo/multi_label_classification/predict.py b/demo/multi_label_classification/predict.py

index bcc11592232d1f946c945d0d6ca6eff87cde7090..bcd849061e5a663933a83c9a39b2d0d5cf2f8705 100644

--- a/demo/multi_label_classification/predict.py

+++ b/demo/multi_label_classification/predict.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

from __future__ import absolute_import

from __future__ import division

@@ -35,7 +35,7 @@ parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--checkpoint_dir", type=str, default=None, help="Directory to model checkpoint")

parser.add_argument("--batch_size", type=int, default=1, help="Total examples' number in batch for training.")

parser.add_argument("--max_seq_len", type=int, default=128, help="Number of words of the longest seqence.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether to use GPU for fine-tuning, input should be True or False")

args = parser.parse_args()

# yapf: enable.

@@ -65,7 +65,7 @@ if __name__ == '__main__':

# Use "sequence_output" for token-level output.

pooled_output = outputs["pooled_output"]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=False,

use_cuda=args.use_gpu,

@@ -73,7 +73,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=hub.finetune.strategy.DefaultFinetuneStrategy())

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

multi_label_cls_task = hub.MultiLabelClassifierTask(

data_reader=reader,

feature=pooled_output,

diff --git a/demo/qa_classification/classifier.py b/demo/qa_classification/classifier.py

index 4c1fad8030e567b7dcb4c1576209d1ac06ee65e6..70f22a70938017ca270f0d3577a1574053c0fa9f 100644

--- a/demo/qa_classification/classifier.py

+++ b/demo/qa_classification/classifier.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

import argparse

import ast

@@ -23,7 +23,7 @@ import paddlehub as hub

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--num_epoch", type=int, default=3, help="Number of epoches for fine-tuning.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether to use GPU for fine-tuning, input should be True or False")

parser.add_argument("--learning_rate", type=float, default=5e-5, help="Learning rate used to train with warmup.")

parser.add_argument("--weight_decay", type=float, default=0.01, help="Weight decay rate for L2 regularizer.")

parser.add_argument("--warmup_proportion", type=float, default=0.0, help="Warmup proportion params for warmup strategy")

@@ -61,13 +61,13 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Select finetune strategy, setup config and finetune

+ # Select fine-tune strategy, setup config and fine-tune

strategy = hub.AdamWeightDecayStrategy(

warmup_proportion=args.warmup_proportion,

weight_decay=args.weight_decay,

learning_rate=args.learning_rate)

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=args.use_data_parallel,

use_cuda=args.use_gpu,

@@ -76,7 +76,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=strategy)

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

cls_task = hub.TextClassifierTask(

data_reader=reader,

feature=pooled_output,

@@ -84,6 +84,6 @@ if __name__ == '__main__':

num_classes=dataset.num_labels,

config=config)

- # Finetune and evaluate by PaddleHub's API

+ # Fine-tune and evaluate by PaddleHub's API

# will finish training, evaluation, testing, save model automatically

cls_task.finetune_and_eval()

diff --git a/demo/qa_classification/predict.py b/demo/qa_classification/predict.py

index fd8ab5a48047eebdc45776e6aeb9be8839a7c3ee..170319d2ee55f0c8060d42fb3f18ec920152ccc7 100644

--- a/demo/qa_classification/predict.py

+++ b/demo/qa_classification/predict.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

from __future__ import absolute_import

from __future__ import division

@@ -33,7 +33,7 @@ parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--checkpoint_dir", type=str, default=None, help="Directory to model checkpoint")

parser.add_argument("--batch_size", type=int, default=1, help="Total examples' number in batch for training.")

parser.add_argument("--max_seq_len", type=int, default=128, help="Number of words of the longest seqence.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether to use GPU for fine-tuning, input should be True or False")

args = parser.parse_args()

# yapf: enable.

@@ -63,7 +63,7 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=False,

use_cuda=args.use_gpu,

@@ -71,7 +71,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=hub.finetune.strategy.DefaultFinetuneStrategy())

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

cls_task = hub.TextClassifierTask(

data_reader=reader,

feature=pooled_output,

diff --git a/demo/reading_comprehension/predict.py b/demo/reading_comprehension/predict.py

index a9f8c2f998fb0a29ea76473f412142806ea36b3b..2cc96f62acea550e3ffa9d9e0bb12bfbb9d3ce7b 100644

--- a/demo/reading_comprehension/predict.py

+++ b/demo/reading_comprehension/predict.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

from __future__ import absolute_import

from __future__ import division

@@ -28,7 +28,7 @@ hub.common.logger.logger.setLevel("INFO")

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--num_epoch", type=int, default=1, help="Number of epoches for fine-tuning.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether to use GPU for fine-tuning, input should be True or False")

parser.add_argument("--checkpoint_dir", type=str, default=None, help="Directory to model checkpoint.")

parser.add_argument("--max_seq_len", type=int, default=384, help="Number of words of the longest seqence.")

parser.add_argument("--batch_size", type=int, default=8, help="Total examples' number in batch for training.")

@@ -64,7 +64,7 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=False,

use_cuda=args.use_gpu,

@@ -72,7 +72,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=hub.AdamWeightDecayStrategy())

- # Define a reading comprehension finetune task by PaddleHub's API

+ # Define a reading comprehension fine-tune task by PaddleHub's API

reading_comprehension_task = hub.ReadingComprehensionTask(

data_reader=reader,

feature=seq_output,

diff --git a/demo/reading_comprehension/reading_comprehension.py b/demo/reading_comprehension/reading_comprehension.py

index 11fe241d8aff97591979e2dcde16f74a7ef67367..d4793823d2147ecb6f8badb776d4cb827b541a8d 100644

--- a/demo/reading_comprehension/reading_comprehension.py

+++ b/demo/reading_comprehension/reading_comprehension.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

import argparse

import ast

@@ -25,7 +25,7 @@ hub.common.logger.logger.setLevel("INFO")

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--num_epoch", type=int, default=1, help="Number of epoches for fine-tuning.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether to use GPU for fine-tuning, input should be True or False")

parser.add_argument("--learning_rate", type=float, default=3e-5, help="Learning rate used to train with warmup.")

parser.add_argument("--weight_decay", type=float, default=0.01, help="Weight decay rate for L2 regularizer.")

parser.add_argument("--warmup_proportion", type=float, default=0.0, help="Warmup proportion params for warmup strategy")

@@ -64,13 +64,13 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Select finetune strategy, setup config and finetune

+ # Select fine-tune strategy, setup config and fine-tune

strategy = hub.AdamWeightDecayStrategy(

weight_decay=args.weight_decay,

learning_rate=args.learning_rate,

warmup_proportion=args.warmup_proportion)

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

eval_interval=300,

use_data_parallel=args.use_data_parallel,

@@ -80,7 +80,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=strategy)

- # Define a reading comprehension finetune task by PaddleHub's API

+ # Define a reading comprehension fine-tune task by PaddleHub's API

reading_comprehension_task = hub.ReadingComprehensionTask(

data_reader=reader,

feature=seq_output,

@@ -89,5 +89,5 @@ if __name__ == '__main__':

sub_task="squad",

)

- # Finetune by PaddleHub's API

+ # Fine-tune by PaddleHub's API

reading_comprehension_task.finetune_and_eval()

diff --git a/demo/regression/predict.py b/demo/regression/predict.py

index 0adfc3886a54f60b7282fbc0584793f7b1c06a5d..b9e73d995f9c63fd847bda46561bd35c66a31f2a 100644

--- a/demo/regression/predict.py

+++ b/demo/regression/predict.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

from __future__ import absolute_import

from __future__ import division

@@ -33,7 +33,7 @@ parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--checkpoint_dir", type=str, default=None, help="Directory to model checkpoint")

parser.add_argument("--batch_size", type=int, default=1, help="Total examples' number in batch for training.")

parser.add_argument("--max_seq_len", type=int, default=512, help="Number of words of the longest seqence.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether to use GPU for fine-tuning, input should be True or False")

args = parser.parse_args()

# yapf: enable.

@@ -64,7 +64,7 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=False,

use_cuda=args.use_gpu,

@@ -72,7 +72,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=hub.AdamWeightDecayStrategy())

- # Define a regression finetune task by PaddleHub's API

+ # Define a regression fine-tune task by PaddleHub's API

reg_task = hub.RegressionTask(

data_reader=reader,

feature=pooled_output,

diff --git a/demo/regression/regression.py b/demo/regression/regression.py

index e2c1c0bf5da280b9c7a701a6c393a6ddd8bea145..0979e1c639ca728c46151ad151aaaa9bd389ecc1 100644

--- a/demo/regression/regression.py

+++ b/demo/regression/regression.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

import argparse

import ast

@@ -23,7 +23,7 @@ import paddlehub as hub

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--num_epoch", type=int, default=3, help="Number of epoches for fine-tuning.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether to use GPU for fine-tuning, input should be True or False")

parser.add_argument("--learning_rate", type=float, default=5e-5, help="Learning rate used to train with warmup.")

parser.add_argument("--weight_decay", type=float, default=0.01, help="Weight decay rate for L2 regularizer.")

parser.add_argument("--warmup_proportion", type=float, default=0.1, help="Warmup proportion params for warmup strategy")

@@ -62,13 +62,13 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Select finetune strategy, setup config and finetune

+ # Select fine-tune strategy, setup config and fine-tune

strategy = hub.AdamWeightDecayStrategy(

warmup_proportion=args.warmup_proportion,

weight_decay=args.weight_decay,

learning_rate=args.learning_rate)

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

eval_interval=300,

use_data_parallel=args.use_data_parallel,

@@ -78,13 +78,13 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=strategy)

- # Define a regression finetune task by PaddleHub's API

+ # Define a regression fine-tune task by PaddleHub's API

reg_task = hub.RegressionTask(

data_reader=reader,

feature=pooled_output,

feed_list=feed_list,

config=config)

- # Finetune and evaluate by PaddleHub's API

+ # Fine-tune and evaluate by PaddleHub's API

# will finish training, evaluation, testing, save model automatically

reg_task.finetune_and_eval()
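
The `--use_gpu` option in these demo scripts relies on `type=ast.literal_eval` to turn the command-line strings `True` and `False` into real booleans. A minimal, self-contained sketch of that pattern follows; the helper name `build_parser` is hypothetical and exists only for this illustration, and no PaddleHub import is needed.

```python
import argparse
import ast


def build_parser():
    """Build a parser mirroring the --use_gpu option of the demo scripts.

    build_parser is a hypothetical helper used only for this illustration.
    """
    parser = argparse.ArgumentParser(description="ast.literal_eval flag demo")
    parser.add_argument(
        "--use_gpu",
        type=ast.literal_eval,  # evaluates the argument string as a Python literal
        default=False,
        help="Whether to use GPU for fine-tuning; input should be True or False")
    return parser


# Parse an explicit argument list instead of sys.argv so the demo is reproducible.
args = build_parser().parse_args(["--use_gpu", "True"])
print(type(args.use_gpu).__name__, args.use_gpu)
```

Because `ast.literal_eval` accepts any Python literal, a value such as `1` parses as an integer rather than a boolean, so "True or False" in the help text is a convention rather than an enforced constraint. `type=bool` would not be a substitute, since `bool("False")` is `True`.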

diff --git a/demo/senta/predict.py b/demo/senta/predict.py

index a1d800889fe72876a733629e2f822efd53fecfd4..f287c576d95588aedf4baf5e8563a2d09f6f61b6 100644

--- a/demo/senta/predict.py

+++ b/demo/senta/predict.py

@@ -16,7 +16,7 @@ import paddlehub as hub

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--checkpoint_dir", type=str, default=None, help="Directory to model checkpoint")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether to use GPU for fine-tuning; input should be True or False")

parser.add_argument("--batch_size", type=int, default=1, help="Total examples' number in batch when the program predicts.")

args = parser.parse_args()

# yapf: enable.

@@ -37,7 +37,7 @@ if __name__ == '__main__':

# Must feed all the tensor of senta's module need

feed_list = [inputs["words"].name]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=False,

use_cuda=args.use_gpu,

@@ -45,7 +45,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=hub.AdamWeightDecayStrategy())

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

cls_task = hub.TextClassifierTask(

data_reader=reader,

feature=sent_feature,

diff --git a/demo/senta/senta_finetune.py b/demo/senta/senta_finetune.py

index 18b0a092dc25a2bbcd3313a9e6a66cd3976d303f..cba8326e5aa04ca71a05862a5de8524350b26ac8 100644

--- a/demo/senta/senta_finetune.py

+++ b/demo/senta/senta_finetune.py

@@ -8,7 +8,7 @@ import paddlehub as hub

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--num_epoch", type=int, default=3, help="Number of epoches for fine-tuning.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether to use GPU for fine-tuning; input should be True or False")

parser.add_argument("--checkpoint_dir", type=str, default=None, help="Directory to model checkpoint")

parser.add_argument("--batch_size", type=int, default=32, help="Total examples' number in batch for training.")

args = parser.parse_args()

@@ -30,7 +30,7 @@ if __name__ == '__main__':

# Must feed all the tensor of senta's module need

feed_list = [inputs["words"].name]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_cuda=args.use_gpu,

use_pyreader=False,

@@ -40,7 +40,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=hub.AdamWeightDecayStrategy())

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

cls_task = hub.TextClassifierTask(

data_reader=reader,

feature=sent_feature,

@@ -48,6 +48,6 @@ if __name__ == '__main__':

num_classes=dataset.num_labels,

config=config)

- # Finetune and evaluate by PaddleHub's API

+ # Fine-tune and evaluate by PaddleHub's API

# will finish training, evaluation, testing, save model automatically

cls_task.finetune_and_eval()

diff --git a/demo/sequence_labeling/predict.py b/demo/sequence_labeling/predict.py

index fb189b42b83319bcee2823d71ca25bb94e52ec18..54deb81d41f848719b7d1263b56b0cdadefa7de4 100644

--- a/demo/sequence_labeling/predict.py

+++ b/demo/sequence_labeling/predict.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on sequence labeling task """

+"""Fine-tuning on sequence labeling task """

from __future__ import absolute_import

from __future__ import division

@@ -27,14 +27,13 @@ import time

import paddle

import paddle.fluid as fluid

import paddlehub as hub

-from paddlehub.finetune.evaluate import chunk_eval, calculate_f1

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--checkpoint_dir", type=str, default=None, help="Directory to model checkpoint")

parser.add_argument("--max_seq_len", type=int, default=512, help="Number of words of the longest seqence.")

parser.add_argument("--batch_size", type=int, default=1, help="Total examples' number in batch for training.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether to use GPU for fine-tuning; input should be True or False")

args = parser.parse_args()

# yapf: enable.

@@ -67,7 +66,7 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=False,

use_cuda=args.use_gpu,

@@ -75,7 +74,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=hub.finetune.strategy.DefaultFinetuneStrategy())

- # Define a sequence labeling finetune task by PaddleHub's API

+ # Define a sequence labeling fine-tune task by PaddleHub's API

# if add crf, the network use crf as decoder

seq_label_task = hub.SequenceLabelTask(

data_reader=reader,

@@ -84,7 +83,7 @@ if __name__ == '__main__':

max_seq_len=args.max_seq_len,

num_classes=dataset.num_labels,

config=config,

- add_crf=True)

+ add_crf=False)

# Data to be predicted

# If using python 2, prefix "u" is necessary

diff --git a/demo/sequence_labeling/sequence_label.py b/demo/sequence_labeling/sequence_label.py

index a2b283e857c39ff60912a5df5560ddc08f5f4a1c..958f9839b9fa1ea4655dec20e56165eaf7883da1 100644

--- a/demo/sequence_labeling/sequence_label.py

+++ b/demo/sequence_labeling/sequence_label.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on sequence labeling task."""

+"""Fine-tuning on sequence labeling task."""

import argparse

import ast

@@ -23,7 +23,7 @@ import paddlehub as hub

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--num_epoch", type=int, default=3, help="Number of epoches for fine-tuning.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether to use GPU for fine-tuning; input should be True or False")

parser.add_argument("--learning_rate", type=float, default=5e-5, help="Learning rate used to train with warmup.")

parser.add_argument("--weight_decay", type=float, default=0.01, help="Weight decay rate for L2 regularizer.")

parser.add_argument("--warmup_proportion", type=float, default=0.1, help="Warmup proportion params for warmup strategy")

@@ -60,13 +60,13 @@ if __name__ == '__main__':

inputs["segment_ids"].name, inputs["input_mask"].name

]

- # Select a finetune strategy

+ # Select a fine-tune strategy

strategy = hub.AdamWeightDecayStrategy(

warmup_proportion=args.warmup_proportion,

weight_decay=args.weight_decay,

learning_rate=args.learning_rate)

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=args.use_data_parallel,

use_cuda=args.use_gpu,

@@ -75,7 +75,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=strategy)

- # Define a sequence labeling finetune task by PaddleHub's API

+ # Define a sequence labeling fine-tune task by PaddleHub's API

# If add crf, the network use crf as decoder

seq_label_task = hub.SequenceLabelTask(

data_reader=reader,

@@ -84,8 +84,8 @@ if __name__ == '__main__':

max_seq_len=args.max_seq_len,

num_classes=dataset.num_labels,

config=config,

- add_crf=True)

+ add_crf=False)

- # Finetune and evaluate model by PaddleHub's API

+ # Fine-tune and evaluate model by PaddleHub's API

# will finish training, evaluation, testing, save model automatically

seq_label_task.finetune_and_eval()

diff --git a/demo/ssd/ssd_demo.py b/demo/ssd/ssd_demo.py

index 3d4b376984f877dbd1fe25585d9b71b826e2ef20..cedeb1cfc5edd9b2d790413024f40d8433b4f413 100644

--- a/demo/ssd/ssd_demo.py

+++ b/demo/ssd/ssd_demo.py

@@ -1,20 +1,14 @@

#coding:utf-8

import os

import paddlehub as hub

+import cv2

if __name__ == "__main__":

ssd = hub.Module(name="ssd_mobilenet_v1_pascal")

test_img_path = os.path.join("test", "test_img_bird.jpg")

- # get the input keys for signature 'object_detection'

- data_format = ssd.processor.data_format(sign_name='object_detection')

- key = list(data_format.keys())[0]

-

- # set input dict

- input_dict = {key: [test_img_path]}

-

# execute predict and print the result

- results = ssd.object_detection(data=input_dict)

+ results = ssd.object_detection(images=[cv2.imread(test_img_path)])

for result in results:

- hub.logger.info(result)

+ print(result)

diff --git a/demo/text_classification/README.md b/demo/text_classification/README.md

index 65c064eb2fcaa075de5e5102ba2dea2c42150ebe..7e3c7c643fdf2adb18576b2a10564eab87e7a8ff 100644

--- a/demo/text_classification/README.md

+++ b/demo/text_classification/README.md

@@ -2,9 +2,31 @@

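This example shows how to use the PaddleHub Fine-tune API with Transformer-based pre-trained models (ERNIE/BERT/RoBERTa) to complete a classification task.

+**PaddleHub 1.7.0 and later support appending a pre-defined network (bow, bilstm, cnn, dpcnn, gru, lstm) after a Transformer-based pre-trained model to complete text classification tasks.**

+

+## Directory structure

+```

+text_classification

+├── finetuned_model_to_module # How to convert a model fine-tuned with PaddleHub into a module for deployment with PaddleHub Serving

+│ ├── __init__.py

+│ └── module.py

+├── predict_predefine_net.py # Prediction script with a pre-defined network

+├── predict.py # Prediction script without a pre-defined network (uses an fc network)

+├── README.md # Documentation for text classification transfer learning

+├── run_cls_predefine_net.sh # Training launch script for text classification with a pre-defined network

+├── run_cls.sh # Training launch script without a pre-defined network (uses an fc network)

+├── run_predict_predefine_net.sh # Prediction launch script with a pre-defined network

+├── run_predict.sh # Prediction launch script without a pre-defined network (uses an fc network)

+├── text_classifier_dygraph.py # Dynamic graph training script

+├── text_cls_predefine_net.py # Training script with a pre-defined network

+└── text_cls.py # Training script without a pre-defined network (uses an fc network)

+```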

+

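## How to start fine-tuning

-After installing PaddlePaddle and PaddleHub, run the script `sh run_classifier.sh` to start fine-tuning ERNIE on the ChnSentiCorp dataset.

+The following example completes a text classification task without a pre-defined network to show how PaddleHub performs transfer learning. The steps for using a pre-defined network are similar.

+

+After installing PaddlePaddle and PaddleHub, run the script `sh run_cls.sh` to start fine-tuning ERNIE on the ChnSentiCorp dataset.

The script parameters are described below: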

@@ -164,9 +186,27 @@ cls_task = hub.TextClassifierTask(

cls_task.finetune_and_eval()

```

**NOTE:**

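-1. `outputs["pooled_output"]` returns the [CLS] vector of the ERNIE/BERT model, which can be used as the feature representation of a sentence or sentence pair.

-2. The inputs entries in `feed_list` specify the order of the input tensors of ERNIE/BERT, consistent with the results returned by ClassifyReader.

+1. `outputs["pooled_output"]` returns the [CLS] vector of the Transformer-based pre-trained model, which can be used as the feature representation of a sentence or sentence pair.

+2. The inputs entries in `feed_list` specify the order of the input tensors of the Transformer-based pre-trained model, consistent with the results returned by ClassifyReader.

3. Given the input feature, the label, and the number of target classes, `hub.TextClassifierTask` creates a transfer task `TextClassifierTask` suited to text classification.

+4. The feature passed to `hub.TextClassifierTask` differs depending on whether a pre-defined network is used. `hub.TextClassifierTask` distinguishes the two cases through the parameters `feature` and `token_feature`.

+ `feature` should be a sentence-level feature with shape [-1, emb_size]; `token_feature` is a token-level feature with shape [-1, max_seq_len, emb_size].

+ With a pre-defined network, take the sequence_output feature of the Transformer-based pre-trained model (`outputs["sequence_output"]`) and pass it as `hub.TextClassifierTask(token_feature=outputs["sequence_output"])`.

+ Without a pre-defined network, classifying directly through an fc network, take the pooled_output feature of the Transformer-based pre-trained model (`outputs["pooled_output"]`) and pass it as `hub.TextClassifierTask(feature=outputs["pooled_output"])`.

+5. With a pre-defined network, the `network` parameter of `hub.TextClassifierTask` selects the network structure. The code below appends a bilstm network after the Transformer-based pre-trained model.

+ The pre-defined networks for PaddleHub text classification tasks are BOW, Bi-LSTM, CNN, DPCNN, GRU, and LSTM; `network` must be one of them.

+ The DPCNN network implements [ACL2017-Deep Pyramid Convolutional Neural Networks for Text Categorization](https://www.aclweb.org/anthology/P17-1052.pdf).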

+```python

+cls_task = hub.TextClassifierTask(

+ data_reader=reader,

+ token_feature=outputs["sequence_output"],

+ feed_list=feed_list,

+ network='bilstm',

+ num_classes=dataset.num_labels,

+ config=config,

+ metrics_choices=metrics_choices)

+```

+

#### Custom transfer task

@@ -190,29 +230,9 @@ python predict.py --checkpoint_dir $CKPT_DIR --max_seq_len 128

```

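CKPT_DIR is the path where the Fine-tune API saves the best model, and max_seq_len is the maximum sequence length of the ERNIE model; *keep them consistent with the parameters configured for training*

-Once the parameters are configured correctly, run the script `sh run_predict.sh` to see the following text classification predictions and the final accuracy.

-For more details on the prediction steps, see `predict.py`.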

-

-```

-这个宾馆比较陈旧了,特价的房间也很一般。总体来说一般 predict=0

-交通方便;环境很好;服务态度很好 房间较小 predict=1

-19天硬盘就罢工了~~~算上运来的一周都没用上15天~~~可就是不能换了~~~唉~~~~你说这算什么事呀~~~ predict=0

-```

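+Once the parameters are configured correctly, run the script `sh run_predict.sh` to see the text classification predictions.

-We provide demos as IPython notebooks on AI Studio that you can try online directly on the platform. The links are as follows:

-

-|Pre-trained model|Task type|Dataset|AI Studio link|Notes|

-|-|-|-|-|-|

-|ResNet|Image classification|DogCat cat-and-dog dataset|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/147010)||

-|ERNIE|Text classification|ChnSentiCorp Chinese sentiment classification dataset|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/147006)||

-|ERNIE|Text classification|THUNEWS Chinese news classification dataset|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/221999)|This tutorial explains how to load a custom dataset and use the Fine-tune API for text classification transfer learning.|

-|ERNIE|Sequence labeling|MSRA_NER Chinese sequence labeling dataset|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/147009)||

-|ERNIE|Sequence labeling|Express Chinese waybill dataset|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/184200)|This tutorial explains how to load a custom dataset and use the Fine-tune API for sequence labeling transfer learning.|

-|ERNIE Tiny|Text classification|ChnSentiCorp Chinese sentiment classification dataset|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/186443)||

-|Senta|Text classification|ChnSentiCorp Chinese sentiment classification dataset|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/216846)|This tutorial explains how to use Senta and the Fine-tune API for sentiment classification transfer learning.|

-|Senta|Sentiment analysis prediction|N/A|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/215814)||

-|LAC|Lexical analysis|N/A|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/215711)||

-|Ultra-Light-Fast-Generic-Face-Detector-1MB|Face detection|N/A|[Try it](https://aistudio.baidu.com/aistudio/projectdetail/215962)||

+We provide demos as IPython notebooks on AI Studio. Open the [PaddleHub tutorial collection](https://aistudio.baidu.com/aistudio/projectdetail/231146) to try them quickly with the GPU compute provided by the AI Studio platform.

## Hyperparameter optimization with AutoDL Finetuner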

diff --git a/demo/text_classification/predict.py b/demo/text_classification/predict.py

index 81dcd41ecf193a7329a003827351f3b843118de8..3a63e63b1078d537e502aad0613cccd712186b72 100644

--- a/demo/text_classification/predict.py

+++ b/demo/text_classification/predict.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

from __future__ import absolute_import

from __future__ import division

@@ -32,7 +32,7 @@ parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--checkpoint_dir", type=str, default=None, help="Directory to model checkpoint")

parser.add_argument("--batch_size", type=int, default=1, help="Total examples' number in batch for training.")

parser.add_argument("--max_seq_len", type=int, default=512, help="Number of words of the longest seqence.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether to use GPU for fine-tuning; input should be True or False")

parser.add_argument("--use_data_parallel", type=ast.literal_eval, default=False, help="Whether use data parallel.")

args = parser.parse_args()

# yapf: enable.

@@ -70,7 +70,7 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=args.use_data_parallel,

use_cuda=args.use_gpu,

@@ -78,7 +78,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=hub.AdamWeightDecayStrategy())

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

cls_task = hub.TextClassifierTask(

data_reader=reader,

feature=pooled_output,

diff --git a/demo/text_classification/predict_predefine_net.py b/demo/text_classification/predict_predefine_net.py

index e53cf2b8712f1160abb99e985ca85fb5a4174127..3255270310527b81c3eb272d8331ff7ce3dfd3b3 100644

--- a/demo/text_classification/predict_predefine_net.py

+++ b/demo/text_classification/predict_predefine_net.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

from __future__ import absolute_import

from __future__ import division

@@ -32,7 +32,7 @@ parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--checkpoint_dir", type=str, default=None, help="Directory to model checkpoint")

parser.add_argument("--batch_size", type=int, default=1, help="Total examples' number in batch for training.")

parser.add_argument("--max_seq_len", type=int, default=512, help="Number of words of the longest seqence.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=False, help="Whether to use GPU for fine-tuning; input should be True or False")

parser.add_argument("--use_data_parallel", type=ast.literal_eval, default=False, help="Whether use data parallel.")

parser.add_argument("--network", type=str, default='bilstm', help="Pre-defined network which was connected after Transformer model, such as ERNIE, BERT ,RoBERTa and ELECTRA.")

args = parser.parse_args()

@@ -71,7 +71,7 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=args.use_data_parallel,

use_cuda=args.use_gpu,

@@ -79,7 +79,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=hub.AdamWeightDecayStrategy())

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

# network choice: bilstm, bow, cnn, dpcnn, gru, lstm (PaddleHub pre-defined network)

# If you wanna add network after ERNIE/BERT/RoBERTa/ELECTRA module,

# you must use the outputs["sequence_output"] as the token_feature of TextClassifierTask,

diff --git a/demo/text_classification/text_cls.py b/demo/text_classification/text_cls.py

index e221cdc7e9fbc0c63162c9a43e9751ddc6ac223a..b68925ba282775b0c57ceb6b249bc53ac258c55e 100644

--- a/demo/text_classification/text_cls.py

+++ b/demo/text_classification/text_cls.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

import argparse

import ast

@@ -21,7 +21,7 @@ import paddlehub as hub

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--num_epoch", type=int, default=3, help="Number of epoches for fine-tuning.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether to use GPU for fine-tuning; input should be True or False")

parser.add_argument("--learning_rate", type=float, default=5e-5, help="Learning rate used to train with warmup.")

parser.add_argument("--weight_decay", type=float, default=0.01, help="Weight decay rate for L2 regularizer.")

parser.add_argument("--warmup_proportion", type=float, default=0.1, help="Warmup proportion params for warmup strategy")

@@ -68,13 +68,13 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Select finetune strategy, setup config and finetune

+ # Select fine-tune strategy, setup config and fine-tune

strategy = hub.AdamWeightDecayStrategy(

warmup_proportion=args.warmup_proportion,

weight_decay=args.weight_decay,

learning_rate=args.learning_rate)

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=args.use_data_parallel,

use_cuda=args.use_gpu,

@@ -83,7 +83,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=strategy)

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

cls_task = hub.TextClassifierTask(

data_reader=reader,

feature=pooled_output,

@@ -92,6 +92,6 @@ if __name__ == '__main__':

config=config,

metrics_choices=metrics_choices)

- # Finetune and evaluate by PaddleHub's API

+ # Fine-tune and evaluate by PaddleHub's API

# will finish training, evaluation, testing, save model automatically

cls_task.finetune_and_eval()

diff --git a/demo/text_classification/text_cls_predefine_net.py b/demo/text_classification/text_cls_predefine_net.py

index 23746c03e2563ca2696ff0351cb93d73ae17de1f..4194bb4264bf86631fc9f550cc9b59f421be021d 100644

--- a/demo/text_classification/text_cls_predefine_net.py

+++ b/demo/text_classification/text_cls_predefine_net.py

@@ -12,7 +12,7 @@

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

-"""Finetuning on classification task """

+"""Fine-tuning on classification task """

import argparse

import ast

@@ -21,7 +21,7 @@ import paddlehub as hub

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

parser.add_argument("--num_epoch", type=int, default=3, help="Number of epoches for fine-tuning.")

-parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether use GPU for finetuning, input should be True or False")

+parser.add_argument("--use_gpu", type=ast.literal_eval, default=True, help="Whether to use GPU for fine-tuning; input should be True or False")

parser.add_argument("--learning_rate", type=float, default=5e-5, help="Learning rate used to train with warmup.")

parser.add_argument("--weight_decay", type=float, default=0.01, help="Weight decay rate for L2 regularizer.")

parser.add_argument("--warmup_proportion", type=float, default=0.1, help="Warmup proportion params for warmup strategy")

@@ -69,13 +69,13 @@ if __name__ == '__main__':

inputs["input_mask"].name,

]

- # Select finetune strategy, setup config and finetune

+ # Select fine-tune strategy, setup config and fine-tune

strategy = hub.AdamWeightDecayStrategy(

warmup_proportion=args.warmup_proportion,

weight_decay=args.weight_decay,

learning_rate=args.learning_rate)

- # Setup runing config for PaddleHub Finetune API

+ # Setup RunConfig for PaddleHub Fine-tune API

config = hub.RunConfig(

use_data_parallel=args.use_data_parallel,

use_cuda=args.use_gpu,

@@ -84,7 +84,7 @@ if __name__ == '__main__':

checkpoint_dir=args.checkpoint_dir,

strategy=strategy)

- # Define a classfication finetune task by PaddleHub's API

+ # Define a classification fine-tune task by PaddleHub's API

# network choice: bilstm, bow, cnn, dpcnn, gru, lstm (PaddleHub pre-defined network)

# If you wanna add network after ERNIE/BERT/RoBERTa/ELECTRA module,

# you must use the outputs["sequence_output"] as the token_feature of TextClassifierTask,

@@ -98,6 +98,6 @@ if __name__ == '__main__':

config=config,

metrics_choices=metrics_choices)

- # Finetune and evaluate by PaddleHub's API

+ # Fine-tune and evaluate by PaddleHub's API

# will finish training, evaluation, testing, save model automatically

cls_task.finetune_and_eval()

diff --git a/docs/imgs/ocr_res.jpg b/docs/imgs/ocr_res.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..387298de8d3d62ceb8c32c7e091a5e701f92ad43

Binary files /dev/null and b/docs/imgs/ocr_res.jpg differ

diff --git a/docs/reference/task/task.md b/docs/reference/task/task.md

index a1286b25a19fff6d3f881c95ad2ac76690f95cc7..216f035667ba57613399cdd495faca14f0cf3f96 100644

--- a/docs/reference/task/task.md

+++ b/docs/reference/task/task.md

@@ -13,22 +13,22 @@ Task的基本方法和属性参见[BaseTask](base_task.md)。

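PaddleHub provides built-in Tasks for common tasks. Each Task has its own application scenarios and provides corresponding metrics to meet different user needs. The built-in task types are:

* Image classification task

-[ImageClassifierTask]()

+[ImageClassifierTask](image_classify_task.md)

* Text classification task

-[TextClassifierTask]()

+[TextClassifierTask](text_classify_task.md)

* Sequence labeling task

-[SequenceLabelTask]()

+[SequenceLabelTask](sequence_label_task.md)

* Multi-label classification task

-[MultiLabelClassifierTask]()

+[MultiLabelClassifierTask](multi_lable_classify_task.md)

* Regression task

-[RegressionTask]()

+[RegressionTask](regression_task.md)

* Reading comprehension task

-[ReadingComprehensionTask]()

+[ReadingComprehensionTask](reading_comprehension_task.md)

## Custom Task

-If these Tasks do not meet your specific needs, you can implement your own task by inheriting from BasicTask; for implementation details, see [Custom Task]()

+If these Tasks do not meet your specific needs, you can implement your own task by inheriting from BaseTask; for implementation details, see [Custom Task](../../tutorial/how_to_define_task.md) and [Modifying the model network in a Task](../../tutorial/define_task_example.md)

## Modifying built-in Task methods

-If the built-in Task methods do not meet your needs, you can change their implementation through the Hook mechanism that Task supports; for details, see [Modifying built-in Task methods]()

+If the built-in Task methods do not meet your needs, you can change their implementation through the Hook mechanism that Task supports; for details, see [Modifying built-in Task methods](../../tutorial/hook.md)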

diff --git a/docs/reference/task/text_classify_task.md b/docs/reference/task/text_classify_task.md

index 560977fd5134bbc74ee3d74b9f7288a02e5131a2..9501cfcd60769ee71cc01019d95ccf4a202a9204 100644

--- a/docs/reference/task/text_classify_task.md

+++ b/docs/reference/task/text_classify_task.md

@@ -2,23 +2,28 @@

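Task for text classification, inherited from [BaseTask](base_task.md). Based on the input feature, this Task adds a Dropout layer and one or more fully connected layers to create a text classification task for fine-tuning. The metric is accuracy and the loss function is cross-entropy loss.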

```python

hub.TextClassifierTask(

- feature,

num_classes,

feed_list,

data_reader,

+ feature=None,

+ token_feature=None,

startup_program=None,

config=None,

hidden_units=None,

+ network=None,

metrics_choices="default"):

```

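**Parameters**

-* feature (fluid.Variable): the input feature matrix.

+

* num_classes (int): number of classes for the classification task

* feed_list (list): list of names of the variables to feed

-* data_reader: the Reader that provides the data

+* data_reader: the Reader that provides the data; either ClassifyReader or LACClassifyReader.

+* feature(fluid.Variable): the input sentence-level feature matrix, with shape [-1, emb_size]. Defaults to None.

+* token_feature(fluid.Variable): the input token-level feature matrix, with shape [-1, seq_len, emb_size]. Defaults to None. One of feature and token_feature must be specified.

+* network(str): pre-defined PaddleHub network for text classification; BOW, Bi-LSTM, CNN, DPCNN, GRU, and LSTM are supported. If network is specified, token_feature should be used as the input feature. The DPCNN network implements [ACL2017-Deep Pyramid Convolutional Neural Networks for Text Categorization](https://www.aclweb.org/anthology/P17-1052.pdf).

* startup_program (fluid.Program): the Program that stores the ops initializing the model parameters; if not provided, fluid.default_startup_program() is used

-* config ([RunConfig](../config.md)): run configuration

+* config ([RunConfig](../config.md)): run configuration, such as batch_size, epoch, and learning_rate.

* hidden_units (list): the final fully connected layer of TextClassifierTask has output dimension label_size and gives the probability of each label. Additional fully connected layers can be placed before it by specifying their output dimensions. For example, hidden_units=[4,2] means the input first passes through a fully connected layer with output dimension 4, then one with output dimension 2, and finally the fully connected layer with output dimension label_size.

* metrics_choices("default" or list ⊂ ["acc", "f1", "matthews"]): the evaluation metrics computed during training. Defaults to "default", which is equivalent to ["acc"]. metrics_choices supports evaluating several metrics at once during training; the first metric listed is the main metric used to decide whether the current score is the best. For example, with ["matthews", "acc"], "matthews" is the main metric and takes part in selecting the best model, while "acc" is only computed and reported.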

@@ -28,4 +33,4 @@ hub.TextClassifierTask(

**Example**

-[Text classification](https://github.com/PaddlePaddle/PaddleHub/blob/release/v1.4/demo/text_classification/text_classifier.py)

+[Text classification](../../../demo/text_classification/text_cls.py)

diff --git a/docs/release.md b/docs/release.md

index 1849191883628e92de3cebf4f3fb51a9830f753f..9a59ba48fd4f44654ba6c6d2166e3c087f31be00 100644

--- a/docs/release.md

+++ b/docs/release.md

@@ -1,5 +1,40 @@

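# Release history

+## `v1.7.0`

+

+* More pre-trained models for better applicability

+ * Added the VENUS series of vision pre-trained models [yolov3_darknet53_venus](https://www.paddlepaddle.org.cn/hubdetail?name=yolov3_darknet53_venus&en_category=ObjectDetection) and [faster_rcnn_resnet50_fpn_venus](https://www.paddlepaddle.org.cn/hubdetail?name=faster_rcnn_resnet50_fpn_venus&en_category=ObjectDetection), which can substantially improve fine-tuning results on image classification and object detection tasks

+ * Added the industrial-grade short-video classification model [videotag_tsn_lstm](https://paddlepaddle.org.cn/hubdetail?name=videotag_tsn_lstm&en_category=VideoClassification), which recognizes 3000 classes of Chinese labels

+ * Added the lightweight Chinese OCR models [chinese_ocr_db_rcnn](https://www.paddlepaddle.org.cn/hubdetail?name=chinese_ocr_db_rcnn&en_category=TextRecognition) and [chinese_text_detection_db](https://www.paddlepaddle.org.cn/hubdetail?name=chinese_text_detection_db&en_category=TextRecognition), with one-command OCR recognition

+ * Added industrial-grade models for pedestrian detection, vehicle detection, animal recognition, Object, and more

+

+* Fine-tune API upgrades

+ * Added 6 pre-defined networks for text classification tasks: CNN, BOW, LSTM, BiLSTM, DPCNN, and GRU

+ * Training and evaluation performance data can be visualized with VisualDL

+

+## `v1.6.2`

+

+* Fixed an error when running image classification on Windows

+

+## `v1.6.1`

+

+* Fixed the missing config.json file when installing PaddleHub on Windows

+

+## `v1.6.0`

+

+* Comprehensive upgrade of NLP Modules for better applicability and flexibility

+ * lac, the senta series (bow, cnn, bilstm, gru, lstm), simnet_bow, and the porn_detection series (cnn, gru, lstm) upgraded to high-performance prediction, with performance gains of up to 50%

+ * Transformer-based semantic models such as ERNIE, BERT, and RoBERTa add the get_embedding interface for obtaining pre-trained embeddings, making them easier to plug into downstream tasks

+ * Added [rbt3](https://www.paddlepaddle.org.cn/hubdetail?name=rbt3&en_category=SemanticModel) and [rbtl3](https://www.paddlepaddle.org.cn/hubdetail?name=rbtl3&en_category=SemanticModel), 3-layer Transformer models obtained from RoBERTa through model structure compression

+

+* The Task predict interface adds a high-performance prediction mode, accelerate_mode, with performance gains of up to 90%

+

+* The PaddleHub Module creation process is now open and supports converting fine-tuned models, improving applicability and flexibility

+ * [Tutorial: converting a pre-trained model into a PaddleHub Module](https://github.com/PaddlePaddle/PaddleHub/blob/release/v1.6/docs/contribution/contri_pretrained_model.md)

+ * [Tutorial: converting a fine-tuned model into a PaddleHub Module](https://github.com/PaddlePaddle/PaddleHub/blob/release/v1.6/docs/tutorial/finetuned_model_to_module.md)

+

+* [PaddleHub Serving](https://github.com/PaddlePaddle/PaddleHub/blob/release/v1.6/docs/tutorial/serving.md) has an optimized startup method and supports more flexible parameter configuration

+

## `v1.5.2`

* Optimized the serving deployment performance of the pyramidbox_lite_server_mask and pyramidbox_lite_mobile_mask models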

diff --git a/docs/tutorial/how_to_load_data.md b/docs/tutorial/how_to_load_data.md

index b56b0e8eb0b624fe458b9ad6ab81868778a98d30..ac3694e005e0730d7abc8ce2d2fe21215ba7ec6a 100644

--- a/docs/tutorial/how_to_load_data.md

+++ b/docs/tutorial/how_to_load_data.md

@@ -95,7 +95,7 @@ label_list.txt的格式如下

```

Example:

-Taking the [DogCat dataset](https://github.com/PaddlePaddle/PaddleHub/wiki/PaddleHub-API:-Dataset#class-hubdatasetdogcatdataset) as an example, the contents of train_list.txt/test_list.txt/validate_list.txt look like this

+Taking the [DogCat dataset](../reference/dataset.md#class-hubdatasetdogcatdataset) as an example, the contents of train_list.txt/test_list.txt/validate_list.txt look like this

```

cat/3270.jpg 0

cat/646.jpg 0

diff --git a/hub_module/modules/image/classification/efficientnetb0_small_imagenet/module.py b/hub_module/modules/image/classification/efficientnetb0_small_imagenet/module.py

index efd069f36a3cd996dfd98c7116877ac2b60f56a9..393092cb385703f4b2c7fc93c99cb3804baeee2c 100644

--- a/hub_module/modules/image/classification/efficientnetb0_small_imagenet/module.py

+++ b/hub_module/modules/image/classification/efficientnetb0_small_imagenet/module.py

@@ -175,7 +175,7 @@ class EfficientNetB0ImageNet(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment variable CUDA_VISIBLE_DEVICES is not set correctly. To use GPU, set CUDA_VISIBLE_DEVICES to the CUDA device id."

)

all_data = list()
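
The check touched by this hunk guards GPU prediction: the module tries `int(_places[0])` and raises `RuntimeError` when that fails. The sketch below reproduces the idea in isolation. It assumes `_places` is derived from the `CUDA_VISIBLE_DEVICES` environment variable, which the hunk does not show, and `first_visible_gpu_id` is a hypothetical helper, not a PaddleHub function.

```python
import os


def first_visible_gpu_id(env=None):
    """Return the first device id listed in CUDA_VISIBLE_DEVICES as an int.

    Hypothetical helper: assumes the variable holds comma-separated integer ids.
    """
    env = os.environ if env is None else env
    places = env.get("CUDA_VISIBLE_DEVICES", "")
    try:
        # An unset or empty variable yields "", which int() rejects.
        return int(places.split(",")[0])
    except ValueError:
        raise RuntimeError(
            "Environment variable CUDA_VISIBLE_DEVICES is not set correctly. "
            "To use GPU, set CUDA_VISIBLE_DEVICES to the CUDA device id.")


# Pass a plain dict so the example does not depend on the real environment.
print(first_visible_gpu_id({"CUDA_VISIBLE_DEVICES": "0,1"}))
```

Unlike the bare `except:` in the module code, this sketch catches only `ValueError`, so unrelated failures are not masked by the environment-variable message.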

diff --git a/hub_module/modules/image/classification/fix_resnext101_32x48d_wsl_imagenet/module.py b/hub_module/modules/image/classification/fix_resnext101_32x48d_wsl_imagenet/module.py

index 40a12edfeaecad12264425230d3e8b00ee9c8698..ffd4d06462e5ccb5703b6d0a21a538fdfe3af6f7 100644

--- a/hub_module/modules/image/classification/fix_resnext101_32x48d_wsl_imagenet/module.py

+++ b/hub_module/modules/image/classification/fix_resnext101_32x48d_wsl_imagenet/module.py

@@ -161,7 +161,7 @@ class FixResnext10132x48dwslImagenet(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

if not self.predictor_set:

diff --git a/hub_module/modules/image/classification/mobilenet_v2_animals/module.py b/hub_module/modules/image/classification/mobilenet_v2_animals/module.py

index 87f6f53a7f1a9adaebe951466814cbc1a167ad59..b8afcae07c3fbdc082c821faa288c29bb34b1982 100644

--- a/hub_module/modules/image/classification/mobilenet_v2_animals/module.py

+++ b/hub_module/modules/image/classification/mobilenet_v2_animals/module.py

@@ -161,7 +161,7 @@ class MobileNetV2Animals(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

all_data = list()

diff --git a/hub_module/modules/image/classification/mobilenet_v2_dishes/module.py b/hub_module/modules/image/classification/mobilenet_v2_dishes/module.py

index 3b9abdd5f7bab314cc7108f6190f00b9a8bdc848..f1be00a305e164b363bb9c8266833f5a986a52a5 100644

--- a/hub_module/modules/image/classification/mobilenet_v2_dishes/module.py

+++ b/hub_module/modules/image/classification/mobilenet_v2_dishes/module.py

@@ -161,7 +161,7 @@ class MobileNetV2Dishes(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

all_data = list()

diff --git a/hub_module/modules/image/classification/mobilenet_v2_imagenet_ssld/module.py b/hub_module/modules/image/classification/mobilenet_v2_imagenet_ssld/module.py

index 598d7112d8b24b71f2771ce5ed6945a6656a020c..a2bacc749572129cbb5c8e1a4c3257b812df416b 100644

--- a/hub_module/modules/image/classification/mobilenet_v2_imagenet_ssld/module.py

+++ b/hub_module/modules/image/classification/mobilenet_v2_imagenet_ssld/module.py

@@ -184,7 +184,7 @@ class MobileNetV2ImageNetSSLD(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

all_data = list()

diff --git a/hub_module/modules/image/classification/mobilenet_v3_large_imagenet_ssld/module.py b/hub_module/modules/image/classification/mobilenet_v3_large_imagenet_ssld/module.py

index fcbe73744ce86c98b27ddf9c8c5e5bf442741cbb..07dd93a770a13036f4a1fa74d7cdc11de7a8b2d4 100644

--- a/hub_module/modules/image/classification/mobilenet_v3_large_imagenet_ssld/module.py

+++ b/hub_module/modules/image/classification/mobilenet_v3_large_imagenet_ssld/module.py

@@ -161,7 +161,7 @@ class MobileNetV3Large(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

all_data = list()

diff --git a/hub_module/modules/image/classification/mobilenet_v3_small_imagenet_ssld/module.py b/hub_module/modules/image/classification/mobilenet_v3_small_imagenet_ssld/module.py

index 4c447dbf3dd64f79061297aca0bad365dbc44c53..5e24ce93d810e40d2f27b658394d3d59586b5754 100644

--- a/hub_module/modules/image/classification/mobilenet_v3_small_imagenet_ssld/module.py

+++ b/hub_module/modules/image/classification/mobilenet_v3_small_imagenet_ssld/module.py

@@ -161,7 +161,7 @@ class MobileNetV3Small(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

all_data = list()

diff --git a/hub_module/modules/image/classification/resnet18_vd_imagenet/module.py b/hub_module/modules/image/classification/resnet18_vd_imagenet/module.py

index 9870a5db5a5d7f9d150d57a0ac601add77023c38..8171f3f03ab28ec68f5cf4337890899382557e7b 100644

--- a/hub_module/modules/image/classification/resnet18_vd_imagenet/module.py

+++ b/hub_module/modules/image/classification/resnet18_vd_imagenet/module.py

@@ -161,7 +161,7 @@ class ResNet18vdImageNet(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

if not self.predictor_set:

diff --git a/hub_module/modules/image/classification/resnet50_vd_animals/module.py b/hub_module/modules/image/classification/resnet50_vd_animals/module.py

index 5c555ebaca934b6b4f86a3d8a587efce58bcbce3..ed6abe6a873ad1df687792e854a9b5a7c405fe45 100644

--- a/hub_module/modules/image/classification/resnet50_vd_animals/module.py

+++ b/hub_module/modules/image/classification/resnet50_vd_animals/module.py

@@ -161,7 +161,7 @@ class ResNet50vdAnimals(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

all_data = list()

diff --git a/hub_module/modules/image/classification/resnet50_vd_dishes/module.py b/hub_module/modules/image/classification/resnet50_vd_dishes/module.py

index fb2f3de8f228302af77acf5918d52aaaaba56963..b554a8fc63d98f7e79edc2d634f6dd91a18e915d 100644

--- a/hub_module/modules/image/classification/resnet50_vd_dishes/module.py

+++ b/hub_module/modules/image/classification/resnet50_vd_dishes/module.py

@@ -161,7 +161,7 @@ class ResNet50vdDishes(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

all_data = list()

diff --git a/hub_module/modules/image/classification/resnet50_vd_imagenet_ssld/module.py b/hub_module/modules/image/classification/resnet50_vd_imagenet_ssld/module.py

index 9464a722d26f28058ef0cafe55f7d7a0a2603ffd..380eb839f7f8df17f6588ad0bbfd04c3c155972f 100644

--- a/hub_module/modules/image/classification/resnet50_vd_imagenet_ssld/module.py

+++ b/hub_module/modules/image/classification/resnet50_vd_imagenet_ssld/module.py

@@ -161,7 +161,7 @@ class ResNet50vdDishes(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

all_data = list()

diff --git a/hub_module/modules/image/classification/resnet50_vd_wildanimals/module.py b/hub_module/modules/image/classification/resnet50_vd_wildanimals/module.py

index 14fd2f9cf7ac80f686b2fe7f5f1200652b1629f9..3a8d811adac5ebbd6a6f3c729e82accab4272736 100644

--- a/hub_module/modules/image/classification/resnet50_vd_wildanimals/module.py

+++ b/hub_module/modules/image/classification/resnet50_vd_wildanimals/module.py

@@ -161,7 +161,7 @@ class ResNet50vdWildAnimals(hub.Module):

int(_places[0])

except:

raise RuntimeError(

- "Attempt to use GPU for prediction, but environment variable CUDA_VISIBLE_DEVICES was not set correctly."

+ "Environment Variable CUDA_VISIBLE_DEVICES is not set correctly. If you wanna use gpu, please set CUDA_VISIBLE_DEVICES as cuda_device_id."

)

all_data = list()

diff --git a/hub_module/modules/image/classification/se_resnet18_vd_imagenet/module.py b/hub_module/modules/image/classification/se_resnet18_vd_imagenet/module.py

index ec219bd8a8aca688ff491b85ad10a5e8f0c65d43..4e6d6db7fd3140ca659e7ffcc29de3fe35af37bd 100644

--- a/hub_module/modules/image/classification/se_resnet18_vd_imagenet/module.py

+++ b/hub_module/modules/image/classification/se_resnet18_vd_imagenet/module.py

@@ -161,7 +161,7 @@ class SEResNet18vdImageNet(hub.Module):

int(_places[0])

except: