@@ -149,6 +149,8 @@ print(results)

!hub serving start -m lac

```

+ 更多迁移学习能力可以参考[教程文档](https://paddlehub.readthedocs.io/zh_CN/release-v2.1/transfer_learning_index.html)

+

@@ -208,3 +210,4 @@ print(results)

* 非常感谢[BurrowsWang](https://github.com/BurrowsWang)修复Markdown表格显示问题

* 非常感谢[huqi](https://github.com/hu-qi)修复了readme中的错别字

* 非常感谢[parano](https://github.com/parano)、[cqvu](https://github.com/cqvu)、[deehrlic](https://github.com/deehrlic)三位的贡献与支持

+* 非常感谢[paopjian](https://github.com/paopjian)修改了中文readme模型搜索指向的的网站地址错误[#1424](https://github.com/PaddlePaddle/PaddleHub/issues/1424)

diff --git a/demo/README.md b/demo/README.md

index e69de29bb2d1d6434b8b29ae775ad8c2e48c5391..1ae13ac94032a39d213e818b7b61b18a5584acc2 100644

--- a/demo/README.md

+++ b/demo/README.md

@@ -0,0 +1,2 @@

+### PaddleHub Office Website:https://www.paddlepaddle.org.cn/hub

+### PaddleHub Module Searching:https://www.paddlepaddle.org.cn/hublist

diff --git a/demo/text_classification/predict.py b/demo/text_classification/predict.py

index ad7721cafcc5292e02b3329eaa5510ec829b9db0..48a5688bfe48bc4d3728d06c9cdc78281013b9d0 100644

--- a/demo/text_classification/predict.py

+++ b/demo/text_classification/predict.py

@@ -28,6 +28,6 @@ if __name__ == '__main__':

task='seq-cls',

load_checkpoint='./test_ernie_text_cls/best_model/model.pdparams',

label_map=label_map)

- results = model.predict(data, max_seq_len=50, batch_size=1, use_gpu=False)

+ results, probs = model.predict(data, max_seq_len=50, batch_size=1, use_gpu=False, return_prob=True)

for idx, text in enumerate(data):

print('Data: {} \t Lable: {}'.format(text[0], results[idx]))

diff --git a/docs/docs_ch/get_start/installation.rst b/docs/docs_ch/get_start/installation.rst

index 5d92979a46897ffc9cc0ae80e374e25832f07675..6391e2ea2280571740a09a9ae00cb04f08ef753b 100755

--- a/docs/docs_ch/get_start/installation.rst

+++ b/docs/docs_ch/get_start/installation.rst

@@ -21,7 +21,7 @@

安装命令

========================

-在安装PaddleHub之前,请先安装PaddlePaddle深度学习框架,更多安装说明请查阅`飞桨快速安装

`_.

+在安装PaddleHub之前,请先安装PaddlePaddle深度学习框架,更多安装说明请查阅`飞桨快速安装 `

.. code-block:: shell

@@ -30,6 +30,7 @@

除上述依赖外,PaddleHub的预训练模型和预置数据集需要连接服务端进行下载,请确保机器可以正常访问网络。若本地已存在相关的数据集和预训练模型,则可以离线运行PaddleHub。

.. note::

+

使用PaddleHub下载数据集、预训练模型等,要求机器可以访问外网。可以使用`server_check()`可以检查本地与远端PaddleHub-Server的连接状态,使用方法如下:

.. code-block:: Python

@@ -37,4 +38,4 @@

import paddlehub

paddlehub.server_check()

# 如果可以连接远端PaddleHub-Server,则显示Request Hub-Server successfully。

- # 如果无法连接远端PaddleHub-Server,则显示Request Hub-Server unsuccessfully。

\ No newline at end of file

+ # 如果无法连接远端PaddleHub-Server,则显示Request Hub-Server unsuccessfully。

diff --git a/docs/docs_ch/get_start/python_use_hub.rst b/docs/docs_ch/get_start/python_use_hub.rst

index b78d3f26c0c7a0db8261da5344ebd6dda737e7e4..839c7a9bf6e9bd5b164b38030c775af63c9299f9 100755

--- a/docs/docs_ch/get_start/python_use_hub.rst

+++ b/docs/docs_ch/get_start/python_use_hub.rst

@@ -32,7 +32,7 @@ PaddleHub采用模型即软件的设计理念,所有的预训练模型与Pytho

# module = hub.Module(name="humanseg_lite", version="1.1.1")

module = hub.Module(name="humanseg_lite")

- res = module.segmentation(

+ res = module.segment(

paths = ["./test_image.jpg"],

visualization=True,

output_dir='humanseg_output')

@@ -131,4 +131,4 @@ PaddleHub采用模型即软件的设计理念,所有的预训练模型与Pytho

----------------

- [{'text': '味道不错,确实不算太辣,适合不能吃辣的人。就在长江边上,抬头就能看到长江的风景。鸭肠、黄鳝都比较新鲜。', 'sentiment_label': 1, 'sentiment_key': 'positive', 'positive_probs': 0.9771, 'negative_probs': 0.0229}]

\ No newline at end of file

+ [{'text': '味道不错,确实不算太辣,适合不能吃辣的人。就在长江边上,抬头就能看到长江的风景。鸭肠、黄鳝都比较新鲜。', 'sentiment_label': 1, 'sentiment_key': 'positive', 'positive_probs': 0.9771, 'negative_probs': 0.0229}]

diff --git a/docs/imgs/joinus.JPEG b/docs/imgs/joinus.JPEG

deleted file mode 100644

index 3adc610f52aa7c24ee7a4d57746fb2efdcf612a9..0000000000000000000000000000000000000000

Binary files a/docs/imgs/joinus.JPEG and /dev/null differ

diff --git a/docs/imgs/joinus.PNG b/docs/imgs/joinus.PNG

index 1347a9acb42059e9bf0cf0b9ea9d4425ffcb2b46..a401d123cc3d7a43f7b7b7658b2aef6dcd34b3bc 100644

Binary files a/docs/imgs/joinus.PNG and b/docs/imgs/joinus.PNG differ

diff --git a/modules/audio/audio_classification/PANNs/cnn10/module.py b/modules/audio/audio_classification/PANNs/cnn10/module.py

index 4a45bbe84d78dd967241880d35ee9ca69e3f3e5b..4f474d1f67cbc17ea8b397173019b74bcfda934d 100644

--- a/modules/audio/audio_classification/PANNs/cnn10/module.py

+++ b/modules/audio/audio_classification/PANNs/cnn10/module.py

@@ -31,7 +31,7 @@ from paddlehub.utils.log import logger

name="panns_cnn10",

version="1.0.0",

summary="",

- author="Baidu",

+ author="paddlepaddle",

author_email="",

type="audio/sound_classification",

meta=AudioClassifierModule)

diff --git a/modules/audio/audio_classification/PANNs/cnn14/module.py b/modules/audio/audio_classification/PANNs/cnn14/module.py

index eb0efc318192c39b03b810824e0a7fd37071cf01..0bd1826e20b394dfbbf007f3ac5079f3f8727fbc 100644

--- a/modules/audio/audio_classification/PANNs/cnn14/module.py

+++ b/modules/audio/audio_classification/PANNs/cnn14/module.py

@@ -31,7 +31,7 @@ from paddlehub.utils.log import logger

name="panns_cnn14",

version="1.0.0",

summary="",

- author="Baidu",

+ author="paddlepaddle",

author_email="",

type="audio/sound_classification",

meta=AudioClassifierModule)

diff --git a/modules/audio/audio_classification/PANNs/cnn6/module.py b/modules/audio/audio_classification/PANNs/cnn6/module.py

index 360cccf2fc0c8092cb6f642f8f56e7cf47049b11..ec70e75d97045743468b3ecaea5de83e2767b49a 100644

--- a/modules/audio/audio_classification/PANNs/cnn6/module.py

+++ b/modules/audio/audio_classification/PANNs/cnn6/module.py

@@ -31,7 +31,7 @@ from paddlehub.utils.log import logger

name="panns_cnn6",

version="1.0.0",

summary="",

- author="Baidu",

+ author="paddlepaddle",

author_email="",

type="audio/sound_classification",

meta=AudioClassifierModule)

diff --git a/modules/audio/voice_cloning/lstm_tacotron2/README.md b/modules/audio/voice_cloning/lstm_tacotron2/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..58d6e846a25ddded31a10d6632aaaf6d7563f723

--- /dev/null

+++ b/modules/audio/voice_cloning/lstm_tacotron2/README.md

@@ -0,0 +1,102 @@

+```shell

+$ hub install lstm_tacotron2==1.0.0

+```

+

+## 概述

+

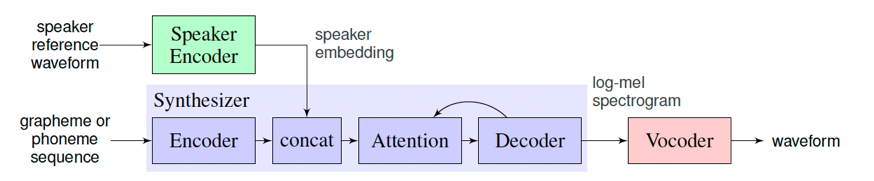

+声音克隆是指使用特定的音色,结合文字的读音合成音频,使得合成后的音频具有目标说话人的特征,从而达到克隆的目的。

+

+在训练语音克隆模型时,目标音色作为Speaker Encoder的输入,模型会提取这段语音的说话人特征(音色)作为Speaker Embedding。接着,在训练模型重新合成此类音色的语音时,除了输入的目标文本外,说话人的特征也将成为额外条件加入模型的训练。

+

+在预测时,选取一段新的目标音色作为Speaker Encoder的输入,并提取其说话人特征,最终实现输入为一段文本和一段目标音色,模型生成目标音色说出此段文本的语音片段。

+

+

+

+`lstm_tacotron2`是一个支持中文的语音克隆模型,分别使用了LSTMSpeakerEncoder、Tacotron2和WaveFlow模型分别用于语音特征提取、目标音频特征合成和语音波形转换。

+

+关于模型的详请可参考[Parakeet](https://github.com/PaddlePaddle/Parakeet/tree/release/v0.3/parakeet/models)。

+

+

+## API

+

+```python

+def __init__(speaker_audio: str = None,

+ output_dir: str = './')

+```

+初始化module,可配置模型的目标音色的音频文件和输出的路径。

+

+**参数**

+- `speaker_audio`(str): 目标说话人语音音频文件(*.wav)的路径,默认为None(使用默认的女声作为目标音色)。

+- `output_dir`(str): 合成音频的输出文件,默认为当前目录。

+

+

+```python

+def get_speaker_embedding()

+```

+获取模型的目标说话人特征。

+

+**返回**

+* `results`(numpy.ndarray): 长度为256的numpy数组,代表目标说话人的特征。

+

+```python

+def set_speaker_embedding(speaker_audio: str)

+```

+设置模型的目标说话人特征。

+

+**参数**

+- `speaker_audio`(str): 必填,目标说话人语音音频文件(*.wav)的路径。

+

+```python

+def generate(data: List[str], batch_size: int = 1, use_gpu: bool = False):

+```

+根据输入文字,合成目标说话人的语音音频文件。

+

+**参数**

+- `data`(List[str]): 必填,目标音频的内容文本列表,目前只支持中文,不支持添加标点符号。

+- `batch_size`(int): 可选,模型合成语音时的batch_size,默认为1。

+- `use_gpu`(bool): 是否使用gpu执行计算,默认为False。

+

+

+**代码示例**

+

+```python

+import paddlehub as hub

+

+model = hub.Module(name='lstm_tacotron2', output_dir='./', speaker_audio='/data/man.wav') # 指定目标音色音频文件

+texts = [

+ '语音的表现形式在未来将变得越来越重要$',

+ '今天的天气怎么样$', ]

+wavs = model.generate(texts, use_gpu=True)

+

+for text, wav in zip(texts, wavs):

+ print('='*30)

+ print(f'Text: {text}')

+ print(f'Wav: {wav}')

+```

+

+输出

+```

+==============================

+Text: 语音的表现形式在未来将变得越来越重要$

+Wav: /data/1.wav

+==============================

+Text: 今天的天气怎么样$

+Wav: /data/2.wav

+```

+

+

+## 查看代码

+

+https://github.com/PaddlePaddle/Parakeet

+

+## 依赖

+

+paddlepaddle >= 2.0.0

+

+paddlehub >= 2.1.0

+

+## 更新历史

+

+* 1.0.0

+

+ 初始发布

diff --git a/modules/audio/voice_cloning/lstm_tacotron2/__init__.py b/modules/audio/voice_cloning/lstm_tacotron2/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/modules/audio/voice_cloning/lstm_tacotron2/audio_processor.py b/modules/audio/voice_cloning/lstm_tacotron2/audio_processor.py

new file mode 100644

index 0000000000000000000000000000000000000000..a06d86ae3dfc15dca2e661b7ec180da2529c044b

--- /dev/null

+++ b/modules/audio/voice_cloning/lstm_tacotron2/audio_processor.py

@@ -0,0 +1,214 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from pathlib import Path

+from warnings import warn

+import struct

+

+from scipy.ndimage.morphology import binary_dilation

+import numpy as np

+import librosa

+

+try:

+ import webrtcvad

+except ModuleNotFoundError:

+ warn("Unable to import 'webrtcvad'." "This package enables noise removal and is recommended.")

+ webrtcvad = None

+

+INT16_MAX = (2**15) - 1

+

+

+def normalize_volume(wav, target_dBFS, increase_only=False, decrease_only=False):

+ # this function implements Loudness normalization, instead of peak

+ # normalization, See https://en.wikipedia.org/wiki/Audio_normalization

+ # dBFS: Decibels relative to full scale

+ # See https://en.wikipedia.org/wiki/DBFS for more details

+ # for 16Bit PCM audio, minimal level is -96dB

+ # compute the mean dBFS and adjust to target dBFS, with by increasing

+ # or decreasing

+ if increase_only and decrease_only:

+ raise ValueError("Both increase only and decrease only are set")

+ dBFS_change = target_dBFS - 10 * np.log10(np.mean(wav**2))

+ if ((dBFS_change < 0 and increase_only) or (dBFS_change > 0 and decrease_only)):

+ return wav

+ gain = 10**(dBFS_change / 20)

+ return wav * gain

+

+

+def trim_long_silences(wav, vad_window_length: int, vad_moving_average_width: int, vad_max_silence_length: int,

+ sampling_rate: int):

+ """

+ Ensures that segments without voice in the waveform remain no longer than a

+ threshold determined by the VAD parameters in params.py.

+

+ :param wav: the raw waveform as a numpy array of floats

+ :return: the same waveform with silences trimmed away (length <= original wav length)

+ """

+ # Compute the voice detection window size

+ samples_per_window = (vad_window_length * sampling_rate) // 1000

+

+ # Trim the end of the audio to have a multiple of the window size

+ wav = wav[:len(wav) - (len(wav) % samples_per_window)]

+

+ # Convert the float waveform to 16-bit mono PCM

+ pcm_wave = struct.pack("%dh" % len(wav), *(np.round(wav * INT16_MAX)).astype(np.int16))

+

+ # Perform voice activation detection

+ voice_flags = []

+ vad = webrtcvad.Vad(mode=3)

+ for window_start in range(0, len(wav), samples_per_window):

+ window_end = window_start + samples_per_window

+ voice_flags.append(vad.is_speech(pcm_wave[window_start * 2:window_end * 2], sample_rate=sampling_rate))

+ voice_flags = np.array(voice_flags)

+

+ # Smooth the voice detection with a moving average

+ def moving_average(array, width):

+ array_padded = np.concatenate((np.zeros((width - 1) // 2), array, np.zeros(width // 2)))

+ ret = np.cumsum(array_padded, dtype=float)

+ ret[width:] = ret[width:] - ret[:-width]

+ return ret[width - 1:] / width

+

+ audio_mask = moving_average(voice_flags, vad_moving_average_width)

+ audio_mask = np.round(audio_mask).astype(np.bool)

+

+ # Dilate the voiced regions

+ audio_mask = binary_dilation(audio_mask, np.ones(vad_max_silence_length + 1))

+ audio_mask = np.repeat(audio_mask, samples_per_window)

+

+ return wav[audio_mask]

+

+

+def compute_partial_slices(n_samples: int,

+ partial_utterance_n_frames: int,

+ hop_length: int,

+ min_pad_coverage: float = 0.75,

+ overlap: float = 0.5):

+ """

+ Computes where to split an utterance waveform and its corresponding mel spectrogram to obtain

+ partial utterances of each. Both the waveform and the mel

+ spectrogram slices are returned, so as to make each partial utterance waveform correspond to

+ its spectrogram. This function assumes that the mel spectrogram parameters used are those

+ defined in params_data.py.

+

+ The returned ranges may be indexing further than the length of the waveform. It is

+ recommended that you pad the waveform with zeros up to wave_slices[-1].stop.

+

+ :param n_samples: the number of samples in the waveform

+ :param partial_utterance_n_frames: the number of mel spectrogram frames in each partial

+ utterance

+ :param min_pad_coverage: when reaching the last partial utterance, it may or may not have

+ enough frames. If at least of are present,

+ then the last partial utterance will be considered, as if we padded the audio. Otherwise,

+ it will be discarded, as if we trimmed the audio. If there aren't enough frames for 1 partial

+ utterance, this parameter is ignored so that the function always returns at least 1 slice.

+ :param overlap: by how much the partial utterance should overlap. If set to 0, the partial

+ utterances are entirely disjoint.

+ :return: the waveform slices and mel spectrogram slices as lists of array slices. Index

+ respectively the waveform and the mel spectrogram with these slices to obtain the partial

+ utterances.

+ """

+ assert 0 <= overlap < 1

+ assert 0 < min_pad_coverage <= 1

+

+ # librosa's function to compute num_frames from num_samples

+ n_frames = int(np.ceil((n_samples + 1) / hop_length))

+ # frame shift between ajacent partials

+ frame_step = max(1, int(np.round(partial_utterance_n_frames * (1 - overlap))))

+

+ # Compute the slices

+ wav_slices, mel_slices = [], []

+ steps = max(1, n_frames - partial_utterance_n_frames + frame_step + 1)

+ for i in range(0, steps, frame_step):

+ mel_range = np.array([i, i + partial_utterance_n_frames])

+ wav_range = mel_range * hop_length

+ mel_slices.append(slice(*mel_range))

+ wav_slices.append(slice(*wav_range))

+

+ # Evaluate whether extra padding is warranted or not

+ last_wav_range = wav_slices[-1]

+ coverage = (n_samples - last_wav_range.start) / (last_wav_range.stop - last_wav_range.start)

+ if coverage < min_pad_coverage and len(mel_slices) > 1:

+ mel_slices = mel_slices[:-1]

+ wav_slices = wav_slices[:-1]

+

+ return wav_slices, mel_slices

+

+

+class SpeakerVerificationPreprocessor(object):

+ def __init__(self,

+ sampling_rate: int,

+ audio_norm_target_dBFS: float,

+ vad_window_length,

+ vad_moving_average_width,

+ vad_max_silence_length,

+ mel_window_length,

+ mel_window_step,

+ n_mels,

+ partial_n_frames: int,

+ min_pad_coverage: float = 0.75,

+ partial_overlap_ratio: float = 0.5):

+ self.sampling_rate = sampling_rate

+ self.audio_norm_target_dBFS = audio_norm_target_dBFS

+

+ self.vad_window_length = vad_window_length

+ self.vad_moving_average_width = vad_moving_average_width

+ self.vad_max_silence_length = vad_max_silence_length

+

+ self.n_fft = int(mel_window_length * sampling_rate / 1000)

+ self.hop_length = int(mel_window_step * sampling_rate / 1000)

+ self.n_mels = n_mels

+

+ self.partial_n_frames = partial_n_frames

+ self.min_pad_coverage = min_pad_coverage

+ self.partial_overlap_ratio = partial_overlap_ratio

+

+ def preprocess_wav(self, fpath_or_wav, source_sr=None):

+ # Load the wav from disk if needed

+ if isinstance(fpath_or_wav, (str, Path)):

+ wav, source_sr = librosa.load(str(fpath_or_wav), sr=None)

+ else:

+ wav = fpath_or_wav

+

+ # Resample if numpy.array is passed and sr does not match

+ if source_sr is not None and source_sr != self.sampling_rate:

+ wav = librosa.resample(wav, source_sr, self.sampling_rate)

+

+ # loudness normalization

+ wav = normalize_volume(wav, self.audio_norm_target_dBFS, increase_only=True)

+

+ # trim long silence

+ if webrtcvad:

+ wav = trim_long_silences(wav, self.vad_window_length, self.vad_moving_average_width,

+ self.vad_max_silence_length, self.sampling_rate)

+ return wav

+

+ def melspectrogram(self, wav):

+ mel = librosa.feature.melspectrogram(

+ wav, sr=self.sampling_rate, n_fft=self.n_fft, hop_length=self.hop_length, n_mels=self.n_mels)

+ mel = mel.astype(np.float32).T

+ return mel

+

+ def extract_mel_partials(self, wav):

+ wav_slices, mel_slices = compute_partial_slices(

+ len(wav), self.partial_n_frames, self.hop_length, self.min_pad_coverage, self.partial_overlap_ratio)

+

+ # pad audio if needed

+ max_wave_length = wav_slices[-1].stop

+ if max_wave_length >= len(wav):

+ wav = np.pad(wav, (0, max_wave_length - len(wav)), "constant")

+

+ # Split the utterance into partials

+ frames = self.melspectrogram(wav)

+ frames_batch = np.array([frames[s] for s in mel_slices])

+ return frames_batch # [B, T, C]

diff --git a/modules/audio/voice_cloning/lstm_tacotron2/chinese_g2p.py b/modules/audio/voice_cloning/lstm_tacotron2/chinese_g2p.py

new file mode 100644

index 0000000000000000000000000000000000000000..f8000cb540577695037858af458e48af5cf715e6

--- /dev/null

+++ b/modules/audio/voice_cloning/lstm_tacotron2/chinese_g2p.py

@@ -0,0 +1,39 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from typing import List, Tuple

+from pypinyin import lazy_pinyin, Style

+

+from .preprocess_transcription import split_syllable

+

+

+def convert_to_pinyin(text: str) -> List[str]:

+ """convert text into list of syllables, other characters that are not chinese, thus

+ cannot be converted to pinyin are splited.

+ """

+ syllables = lazy_pinyin(text, style=Style.TONE3, neutral_tone_with_five=True)

+ return syllables

+

+

+def convert_sentence(text: str) -> List[Tuple[str]]:

+ """convert a sentence into two list: phones and tones"""

+ syllables = convert_to_pinyin(text)

+ phones = []

+ tones = []

+ for syllable in syllables:

+ p, t = split_syllable(syllable)

+ phones.extend(p)

+ tones.extend(t)

+

+ return phones, tones

diff --git a/modules/audio/voice_cloning/lstm_tacotron2/module.py b/modules/audio/voice_cloning/lstm_tacotron2/module.py

new file mode 100644

index 0000000000000000000000000000000000000000..8e60afa2bb9a74e4922e99eef219e1816f9968af

--- /dev/null

+++ b/modules/audio/voice_cloning/lstm_tacotron2/module.py

@@ -0,0 +1,188 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import importlib

+import os

+from typing import List

+

+import numpy as np

+import paddle

+import paddle.nn as nn

+from paddlehub.env import MODULE_HOME

+from paddlehub.module.module import moduleinfo

+from paddlehub.utils.log import logger

+from paddlenlp.data import Pad

+from parakeet.models import ConditionalWaveFlow, Tacotron2

+from parakeet.models.lstm_speaker_encoder import LSTMSpeakerEncoder

+import soundfile as sf

+

+from .audio_processor import SpeakerVerificationPreprocessor

+from .chinese_g2p import convert_sentence

+from .preprocess_transcription import voc_phones, voc_tones, phone_pad_token, tone_pad_token

+

+

+@moduleinfo(

+ name="lstm_tacotron2",

+ version="1.0.0",

+ summary="",

+ author="paddlepaddle",

+ author_email="",

+ type="audio/voice_cloning",

+)

+class VoiceCloner(nn.Layer):

+ def __init__(self, speaker_audio: str = None, output_dir: str = './'):

+ super(VoiceCloner, self).__init__()

+

+ self.sample_rate = 22050 # Hyper params for the following model ckpts.

+ speaker_encoder_ckpt = os.path.join(MODULE_HOME, 'lstm_tacotron2', 'assets',

+ 'ge2e_ckpt_0.3/step-3000000.pdparams')

+ synthesizer_ckpt = os.path.join(MODULE_HOME, 'lstm_tacotron2', 'assets',

+ 'tacotron2_aishell3_ckpt_0.3/step-450000.pdparams')

+ vocoder_ckpt = os.path.join(MODULE_HOME, 'lstm_tacotron2', 'assets',

+ 'waveflow_ljspeech_ckpt_0.3/step-2000000.pdparams')

+

+ # Speaker encoder

+ self.speaker_processor = SpeakerVerificationPreprocessor(

+ sampling_rate=16000,

+ audio_norm_target_dBFS=-30,

+ vad_window_length=30,

+ vad_moving_average_width=8,

+ vad_max_silence_length=6,

+ mel_window_length=25,

+ mel_window_step=10,

+ n_mels=40,

+ partial_n_frames=160,

+ min_pad_coverage=0.75,

+ partial_overlap_ratio=0.5)

+ self.speaker_encoder = LSTMSpeakerEncoder(n_mels=40, num_layers=3, hidden_size=256, output_size=256)

+ self.speaker_encoder.set_state_dict(paddle.load(speaker_encoder_ckpt))

+ self.speaker_encoder.eval()

+

+ # Voice synthesizer

+ self.synthesizer = Tacotron2(

+ vocab_size=68,

+ n_tones=10,

+ d_mels=80,

+ d_encoder=512,

+ encoder_conv_layers=3,

+ encoder_kernel_size=5,

+ d_prenet=256,

+ d_attention_rnn=1024,

+ d_decoder_rnn=1024,

+ attention_filters=32,

+ attention_kernel_size=31,

+ d_attention=128,

+ d_postnet=512,

+ postnet_kernel_size=5,

+ postnet_conv_layers=5,

+ reduction_factor=1,

+ p_encoder_dropout=0.5,

+ p_prenet_dropout=0.5,

+ p_attention_dropout=0.1,

+ p_decoder_dropout=0.1,

+ p_postnet_dropout=0.5,

+ d_global_condition=256,

+ use_stop_token=False)

+ self.synthesizer.set_state_dict(paddle.load(synthesizer_ckpt))

+ self.synthesizer.eval()

+

+ # Vocoder

+ self.vocoder = ConditionalWaveFlow(

+ upsample_factors=[16, 16], n_flows=8, n_layers=8, n_group=16, channels=128, n_mels=80, kernel_size=[3, 3])

+ self.vocoder.set_state_dict(paddle.load(vocoder_ckpt))

+ self.vocoder.eval()

+

+ # Speaking embedding

+ self._speaker_embedding = None

+ if speaker_audio is None or not os.path.isfile(speaker_audio):

+ speaker_audio = os.path.join(MODULE_HOME, 'lstm_tacotron2', 'assets', 'voice_cloning.wav')

+ logger.warning(f'Due to no speaker audio is specified, speaker encoder will use defult '

+ f'waveform({speaker_audio}) to extract speaker embedding. You can use '

+ '"set_speaker_embedding()" method to reset a speaker audio for voice cloning.')

+ self.set_speaker_embedding(speaker_audio)

+

+ self.output_dir = os.path.abspath(output_dir)

+ if not os.path.exists(self.output_dir):

+ os.makedirs(self.output_dir)

+

+ def get_speaker_embedding(self):

+ return self._speaker_embedding.numpy()

+

+ def set_speaker_embedding(self, speaker_audio: str):

+ assert os.path.exists(speaker_audio), f'Speaker audio file: {speaker_audio} does not exists.'

+ mel_sequences = self.speaker_processor.extract_mel_partials(

+ self.speaker_processor.preprocess_wav(speaker_audio))

+ self._speaker_embedding = self.speaker_encoder.embed_utterance(paddle.to_tensor(mel_sequences))

+ logger.info(f'Speaker embedding has been set from file: {speaker_audio}')

+

+ def forward(self, phones: paddle.Tensor, tones: paddle.Tensor, speaker_embeddings: paddle.Tensor):

+ outputs = self.synthesizer.infer(phones, tones=tones, global_condition=speaker_embeddings)

+ mel_input = paddle.transpose(outputs["mel_outputs_postnet"], [0, 2, 1])

+ waveforms = self.vocoder.infer(mel_input)

+ return waveforms

+

+ def _convert_text_to_input(self, text: str):

+ """

+ Convert input string to phones and tones.

+ """

+ phones, tones = convert_sentence(text)

+ phones = np.array([voc_phones.lookup(item) for item in phones], dtype=np.int64)

+ tones = np.array([voc_tones.lookup(item) for item in tones], dtype=np.int64)

+ return phones, tones

+

+ def _batchify(self, data: List[str], batch_size: int):

+ """

+ Generate input batches.

+ """

+ phone_pad_func = Pad(voc_phones.lookup(phone_pad_token))

+ tone_pad_func = Pad(voc_tones.lookup(tone_pad_token))

+

+ def _parse_batch(batch_data):

+ phones, tones = zip(*batch_data)

+ speaker_embeddings = paddle.expand(self._speaker_embedding, shape=(len(batch_data), -1))

+ return phone_pad_func(phones), tone_pad_func(tones), speaker_embeddings

+

+ examples = [] # [(phones, tones), ...]

+ for text in data:

+ examples.append(self._convert_text_to_input(text))

+

+ # Seperates data into some batches.

+ one_batch = []

+ for example in examples:

+ one_batch.append(example)

+ if len(one_batch) == batch_size:

+ yield _parse_batch(one_batch)

+ one_batch = []

+ if one_batch:

+ yield _parse_batch(one_batch)

+

+ def generate(self, data: List[str], batch_size: int = 1, use_gpu: bool = False):

+ assert self._speaker_embedding is not None, f'Set speaker embedding before voice cloning.'

+

+ paddle.set_device('gpu') if use_gpu else paddle.set_device('cpu')

+ batches = self._batchify(data, batch_size)

+

+ results = []

+ for batch in batches:

+ phones, tones, speaker_embeddings = map(paddle.to_tensor, batch)

+ waveforms = self(phones, tones, speaker_embeddings).numpy()

+ results.extend(list(waveforms))

+

+ files = []

+ for idx, waveform in enumerate(results):

+ output_wav = os.path.join(self.output_dir, f'{idx+1}.wav')

+ sf.write(output_wav, waveform, samplerate=self.sample_rate)

+ files.append(output_wav)

+

+ return files

diff --git a/modules/audio/voice_cloning/lstm_tacotron2/preprocess_transcription.py b/modules/audio/voice_cloning/lstm_tacotron2/preprocess_transcription.py

new file mode 100644

index 0000000000000000000000000000000000000000..5c88cb4c71af42d8479eb78e6b0b667f4d64fbac

--- /dev/null

+++ b/modules/audio/voice_cloning/lstm_tacotron2/preprocess_transcription.py

@@ -0,0 +1,181 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import argparse

+from pathlib import Path

+import pickle

+import re

+

+from parakeet.frontend import Vocab

+import tqdm

+

+zh_pattern = re.compile("[\u4e00-\u9fa5]")

+

+_tones = {'', '', '', '0', '1', '2', '3', '4', '5'}

+

+_pauses = {'%', '$'}

+

+_initials = {

+ 'b',

+ 'p',

+ 'm',

+ 'f',

+ 'd',

+ 't',

+ 'n',

+ 'l',

+ 'g',

+ 'k',

+ 'h',

+ 'j',

+ 'q',

+ 'x',

+ 'zh',

+ 'ch',

+ 'sh',

+ 'r',

+ 'z',

+ 'c',

+ 's',

+}

+

+_finals = {

+ 'ii',

+ 'iii',

+ 'a',

+ 'o',

+ 'e',

+ 'ea',

+ 'ai',

+ 'ei',

+ 'ao',

+ 'ou',

+ 'an',

+ 'en',

+ 'ang',

+ 'eng',

+ 'er',

+ 'i',

+ 'ia',

+ 'io',

+ 'ie',

+ 'iai',

+ 'iao',

+ 'iou',

+ 'ian',

+ 'ien',

+ 'iang',

+ 'ieng',

+ 'u',

+ 'ua',

+ 'uo',

+ 'uai',

+ 'uei',

+ 'uan',

+ 'uen',

+ 'uang',

+ 'ueng',

+ 'v',

+ 've',

+ 'van',

+ 'ven',

+ 'veng',

+}

+

+_ernized_symbol = {'&r'}

+

+_specials = {'', '', '', ''}

+

+_phones = _initials | _finals | _ernized_symbol | _specials | _pauses

+

+phone_pad_token = ''

+tone_pad_token = ''

+voc_phones = Vocab(sorted(list(_phones)))

+voc_tones = Vocab(sorted(list(_tones)))

+

+

+def is_zh(word):

+ global zh_pattern

+ match = zh_pattern.search(word)

+ return match is not None

+

+

+def ernized(syllable):

+ return syllable[:2] != "er" and syllable[-2] == 'r'

+

+

+def convert(syllable):

+ # expansion of o -> uo

+ syllable = re.sub(r"([bpmf])o$", r"\1uo", syllable)

+ # syllable = syllable.replace("bo", "buo").replace("po", "puo").replace("mo", "muo").replace("fo", "fuo")

+ # expansion for iong, ong

+ syllable = syllable.replace("iong", "veng").replace("ong", "ueng")

+

+ # expansion for ing, in

+ syllable = syllable.replace("ing", "ieng").replace("in", "ien")

+

+ # expansion for un, ui, iu

+ syllable = syllable.replace("un", "uen").replace("ui", "uei").replace("iu", "iou")

+

+ # rule for variants of i

+ syllable = syllable.replace("zi", "zii").replace("ci", "cii").replace("si", "sii")\

+ .replace("zhi", "zhiii").replace("chi", "chiii").replace("shi", "shiii")\

+ .replace("ri", "riii")

+

+ # rule for y preceding i, u

+ syllable = syllable.replace("yi", "i").replace("yu", "v").replace("y", "i")

+

+ # rule for w

+ syllable = syllable.replace("wu", "u").replace("w", "u")

+

+ # rule for v following j, q, x

+ syllable = syllable.replace("ju", "jv").replace("qu", "qv").replace("xu", "xv")

+

+ return syllable

+

+

+def split_syllable(syllable: str):

+ """Split a syllable in pinyin into a list of phones and a list of tones.

+ Initials have no tone, represented by '0', while finals have tones from

+ '1,2,3,4,5'.

+

+ e.g.

+

+ zhang -> ['zh', 'ang'], ['0', '1']

+ """

+ if syllable in _pauses:

+ # syllable, tone

+ return [syllable], ['0']

+

+ tone = syllable[-1]

+ syllable = convert(syllable[:-1])

+

+ phones = []

+ tones = []

+

+ global _initials

+ if syllable[:2] in _initials:

+ phones.append(syllable[:2])

+ tones.append('0')

+ phones.append(syllable[2:])

+ tones.append(tone)

+ elif syllable[0] in _initials:

+ phones.append(syllable[0])

+ tones.append('0')

+ phones.append(syllable[1:])

+ tones.append(tone)

+ else:

+ phones.append(syllable)

+ tones.append(tone)

+ return phones, tones

diff --git a/modules/audio/voice_cloning/lstm_tacotron2/requirements.txt b/modules/audio/voice_cloning/lstm_tacotron2/requirements.txt

new file mode 100644

index 0000000000000000000000000000000000000000..013164d7c3fa849c686cdde69a260f95d83a8e64

--- /dev/null

+++ b/modules/audio/voice_cloning/lstm_tacotron2/requirements.txt

@@ -0,0 +1 @@

+paddle-parakeet

diff --git a/modules/image/classification/ghostnet_x0_5_imagenet/README.md b/modules/image/classification/ghostnet_x0_5_imagenet/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..40d83f30ccf0a11ddc149da0a92f32d0a78666ba

--- /dev/null

+++ b/modules/image/classification/ghostnet_x0_5_imagenet/README.md

@@ -0,0 +1,192 @@

+```shell

+$ hub install ghostnet_x0_5_imagenet==1.0.0

+```

+

+## 命令行预测

+

+```shell

+$ hub run ghostnet_x0_5_imagenet --input_path "/PATH/TO/IMAGE" --top_k 5

+```

+

+## 脚本预测

+

+```python

+import paddle

+import paddlehub as hub

+

+if __name__ == '__main__':

+

+ model = hub.Module(name='ghostnet_x0_5_imagenet',)

+ result = model.predict([PATH/TO/IMAGE])

+```

+

+## Fine-tune代码步骤

+

+使用PaddleHub Fine-tune API进行Fine-tune可以分为4个步骤。

+

+### Step1: 定义数据预处理方式

+```python

+import paddlehub.vision.transforms as T

+

+transforms = T.Compose([T.Resize((256, 256)),

+ T.CenterCrop(224),

+ T.Normalize(mean=[0.485, 0.456, 0.406], std = [0.229, 0.224, 0.225])],

+ to_rgb=True)

+```

+

+'transforms' 数据增强模块定义了丰富的数据预处理方式,用户可按照需求替换自己需要的数据预处理方式。

+

+### Step2: 下载数据集并使用

+```python

+from paddlehub.datasets import Flowers

+

+flowers = Flowers(transforms)

+flowers_validate = Flowers(transforms, mode='val')

+```

+* transforms(Callable): 数据预处理方式。

+* mode(str): 选择数据模式,可选项有 'train', 'test', 'val', 默认为'train'。

+

+'hub.datasets.Flowers()' 会自动从网络下载数据集并解压到用户目录下'$HOME/.paddlehub/dataset'目录。

+

+

+### Step3: 加载预训练模型

+

+```python

+import paddlehub as hub

+

+model = hub.Module(name='ghostnet_x0_5_imagenet',

+ label_list=["roses", "tulips", "daisy", "sunflowers", "dandelion"],

+ load_checkpoint=None)

+```

+* name(str): 选择预训练模型的名字。

+* label_list(list): 设置标签对应分类类别, 默认为Imagenet2012类别。

+* load _checkpoint(str): 模型参数地址。

+

+PaddleHub提供许多图像分类预训练模型,如xception、mobilenet、efficientnet等,详细信息参见[图像分类模型](https://www.paddlepaddle.org.cn/hub?filter=en_category&value=ImageClassification)。

+

+如果想尝试efficientnet模型,只需要更换Module中的'name'参数即可.

+```python

+import paddlehub as hub

+

+# 更换name参数即可无缝切换efficientnet模型, 代码示例如下

+module = hub.Module(name="efficientnetb7_imagenet")

+```

+**NOTE:**目前部分模型还没有完全升级到2.0版本,敬请期待。

+

+### Step4: 选择优化策略和运行配置

+

+```python

+import paddle

+from paddlehub.finetune.trainer import Trainer

+

+optimizer = paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters())

+trainer = Trainer(model, optimizer, checkpoint_dir='img_classification_ckpt')

+

+trainer.train(flowers, epochs=100, batch_size=32, eval_dataset=flowers_validate, save_interval=1)

+```

+

+#### 优化策略

+

+Paddle2.0rc提供了多种优化器选择,如'SGD', 'Adam', 'Adamax'等,详细参见[策略](https://www.paddlepaddle.org.cn/documentation/docs/zh/2.0-rc/api/paddle/optimizer/optimizer/Optimizer_cn.html)。

+

+其中'Adam':

+

+* learning_rate: 全局学习率。默认为1e-3;

+* parameters: 待优化模型参数。

+

+#### 运行配置

+'Trainer' 主要控制Fine-tune的训练,包含以下可控制的参数:

+

+* model: 被优化模型;

+* optimizer: 优化器选择;

+* use_vdl: 是否使用vdl可视化训练过程;

+* checkpoint_dir: 保存模型参数的地址;

+* compare_metrics: 保存最优模型的衡量指标;

+

+'trainer.train' 主要控制具体的训练过程,包含以下可控制的参数:

+

+* train_dataset: 训练时所用的数据集;

+* epochs: 训练轮数;

+* batch_size: 训练的批大小,如果使用GPU,请根据实际情况调整batch_size;

+* num_workers: works的数量,默认为0;

+* eval_dataset: 验证集;

+* log_interval: 打印日志的间隔, 单位为执行批训练的次数。

+* save_interval: 保存模型的间隔频次,单位为执行训练的轮数。

+

+## 模型预测

+

+当完成Fine-tune后,Fine-tune过程在验证集上表现最优的模型会被保存在'${CHECKPOINT_DIR}/best_model'目录下,其中'${CHECKPOINT_DIR}'目录为Fine-tune时所选择的保存checkpoint的目录。

+我们使用该模型来进行预测。predict.py脚本如下:

+

+```python

+import paddle

+import paddlehub as hub

+

+if __name__ == '__main__':

+

+ model = hub.Module(name='ghostnet_x0_5_imagenet', label_list=["roses", "tulips", "daisy", "sunflowers", "dandelion"], load_checkpoint='/PATH/TO/CHECKPOINT')

+ result = model.predict(['flower.jpg'])

+```

+

+参数配置正确后,请执行脚本'python predict.py', 加载模型具体可参见[加载](https://www.paddlepaddle.org.cn/documentation/docs/zh/2.0-rc/api/paddle/framework/io/load_cn.html#load)。

+

+**NOTE:** 进行预测时,所选择的module,checkpoint_dir,dataset必须和Fine-tune所用的一样。

+

+## 服务部署

+

+PaddleHub Serving可以部署一个在线分类任务服务

+

+## Step1: 启动PaddleHub Serving

+

+运行启动命令:

+

+```shell

+$ hub serving start -m ghostnet_x0_5_imagenet

+```

+

+这样就完成了一个分类任务服务化API的部署,默认端口号为8866。

+

+**NOTE:** 如使用GPU预测,则需要在启动服务之前,请设置CUDA_VISIBLE_DEVICES环境变量,否则不用设置。

+

+## Step2: 发送预测请求

+

+配置好服务端,以下数行代码即可实现发送预测请求,获取预测结果

+

+```python

+import requests

+import json

+import cv2

+import base64

+

+import numpy as np

+

+

+def cv2_to_base64(image):

+ data = cv2.imencode('.jpg', image)[1]

+ return base64.b64encode(data.tostring()).decode('utf8')

+

+def base64_to_cv2(b64str):

+ data = base64.b64decode(b64str.encode('utf8'))

+ data = np.fromstring(data, np.uint8)

+ data = cv2.imdecode(data, cv2.IMREAD_COLOR)

+ return data

+

+# 发送HTTP请求

+org_im = cv2.imread('/PATH/TO/IMAGE')

+

+data = {'images':[cv2_to_base64(org_im)], 'top_k':2}

+headers = {"Content-type": "application/json"}

+url = "http://127.0.0.1:8866/predict/ghostnet_x0_5_imagenet"

+r = requests.post(url=url, headers=headers, data=json.dumps(data))

+data =r.json()["results"]['data']

+```

+

+### 查看代码

+

+[PaddleClas](https://github.com/PaddlePaddle/PaddleClas)

+

+### 依赖

+

+paddlepaddle >= 2.0.0

+

+paddlehub >= 2.0.0

diff --git a/modules/image/classification/ghostnet_x0_5_imagenet/label_list.txt b/modules/image/classification/ghostnet_x0_5_imagenet/label_list.txt

new file mode 100644

index 0000000000000000000000000000000000000000..52baabc68e968dde482ca143728295355d83203a

--- /dev/null

+++ b/modules/image/classification/ghostnet_x0_5_imagenet/label_list.txt

@@ -0,0 +1,1000 @@

+tench

+goldfish

+great white shark

+tiger shark

+hammerhead

+electric ray

+stingray

+cock

+hen

+ostrich

+brambling

+goldfinch

+house finch

+junco

+indigo bunting

+robin

+bulbul

+jay

+magpie

+chickadee

+water ouzel

+kite

+bald eagle

+vulture

+great grey owl

+European fire salamander

+common newt

+eft

+spotted salamander

+axolotl

+bullfrog

+tree frog

+tailed frog

+loggerhead

+leatherback turtle

+mud turtle

+terrapin

+box turtle

+banded gecko

+common iguana

+American chameleon

+whiptail

+agama

+frilled lizard

+alligator lizard

+Gila monster

+green lizard

+African chameleon

+Komodo dragon

+African crocodile

+American alligator

+triceratops

+thunder snake

+ringneck snake

+hognose snake

+green snake

+king snake

+garter snake

+water snake

+vine snake

+night snake

+boa constrictor

+rock python

+Indian cobra

+green mamba

+sea snake

+horned viper

+diamondback

+sidewinder

+trilobite

+harvestman

+scorpion

+black and gold garden spider

+barn spider

+garden spider

+black widow

+tarantula

+wolf spider

+tick

+centipede

+black grouse

+ptarmigan

+ruffed grouse

+prairie chicken

+peacock

+quail

+partridge

+African grey

+macaw

+sulphur-crested cockatoo

+lorikeet

+coucal

+bee eater

+hornbill

+hummingbird

+jacamar

+toucan

+drake

+red-breasted merganser

+goose

+black swan

+tusker

+echidna

+platypus

+wallaby

+koala

+wombat

+jellyfish

+sea anemone

+brain coral

+flatworm

+nematode

+conch

+snail

+slug

+sea slug

+chiton

+chambered nautilus

+Dungeness crab

+rock crab

+fiddler crab

+king crab

+American lobster

+spiny lobster

+crayfish

+hermit crab

+isopod

+white stork

+black stork

+spoonbill

+flamingo

+little blue heron

+American egret

+bittern

+crane

+limpkin

+European gallinule

+American coot

+bustard

+ruddy turnstone

+red-backed sandpiper

+redshank

+dowitcher

+oystercatcher

+pelican

+king penguin

+albatross

+grey whale

+killer whale

+dugong

+sea lion

+Chihuahua

+Japanese spaniel

+Maltese dog

+Pekinese

+Shih-Tzu

+Blenheim spaniel

+papillon

+toy terrier

+Rhodesian ridgeback

+Afghan hound

+basset

+beagle

+bloodhound

+bluetick

+black-and-tan coonhound

+Walker hound

+English foxhound

+redbone

+borzoi

+Irish wolfhound

+Italian greyhound

+whippet

+Ibizan hound

+Norwegian elkhound

+otterhound

+Saluki

+Scottish deerhound

+Weimaraner

+Staffordshire bullterrier

+American Staffordshire terrier

+Bedlington terrier

+Border terrier

+Kerry blue terrier

+Irish terrier

+Norfolk terrier

+Norwich terrier

+Yorkshire terrier

+wire-haired fox terrier

+Lakeland terrier

+Sealyham terrier

+Airedale

+cairn

+Australian terrier

+Dandie Dinmont

+Boston bull

+miniature schnauzer

+giant schnauzer

+standard schnauzer

+Scotch terrier

+Tibetan terrier

+silky terrier

+soft-coated wheaten terrier

+West Highland white terrier

+Lhasa

+flat-coated retriever

+curly-coated retriever

+golden retriever

+Labrador retriever

+Chesapeake Bay retriever

+German short-haired pointer

+vizsla

+English setter

+Irish setter

+Gordon setter

+Brittany spaniel

+clumber

+English springer

+Welsh springer spaniel

+cocker spaniel

+Sussex spaniel

+Irish water spaniel

+kuvasz

+schipperke

+groenendael

+malinois

+briard

+kelpie

+komondor

+Old English sheepdog

+Shetland sheepdog

+collie

+Border collie

+Bouvier des Flandres

+Rottweiler

+German shepherd

+Doberman

+miniature pinscher

+Greater Swiss Mountain dog

+Bernese mountain dog

+Appenzeller

+EntleBucher

+boxer

+bull mastiff

+Tibetan mastiff

+French bulldog

+Great Dane

+Saint Bernard

+Eskimo dog

+malamute

+Siberian husky

+dalmatian

+affenpinscher

+basenji

+pug

+Leonberg

+Newfoundland

+Great Pyrenees

+Samoyed

+Pomeranian

+chow

+keeshond

+Brabancon griffon

+Pembroke

+Cardigan

+toy poodle

+miniature poodle

+standard poodle

+Mexican hairless

+timber wolf

+white wolf

+red wolf

+coyote

+dingo

+dhole

+African hunting dog

+hyena

+red fox

+kit fox

+Arctic fox

+grey fox

+tabby

+tiger cat

+Persian cat

+Siamese cat

+Egyptian cat

+cougar

+lynx

+leopard

+snow leopard

+jaguar

+lion

+tiger

+cheetah

+brown bear

+American black bear

+ice bear

+sloth bear

+mongoose

+meerkat

+tiger beetle

+ladybug

+ground beetle

+long-horned beetle

+leaf beetle

+dung beetle

+rhinoceros beetle

+weevil

+fly

+bee

+ant

+grasshopper

+cricket

+walking stick

+cockroach

+mantis

+cicada

+leafhopper

+lacewing

+dragonfly

+damselfly

+admiral

+ringlet

+monarch

+cabbage butterfly

+sulphur butterfly

+lycaenid

+starfish

+sea urchin

+sea cucumber

+wood rabbit

+hare

+Angora

+hamster

+porcupine

+fox squirrel

+marmot

+beaver

+guinea pig

+sorrel

+zebra

+hog

+wild boar

+warthog

+hippopotamus

+ox

+water buffalo

+bison

+ram

+bighorn

+ibex

+hartebeest

+impala

+gazelle

+Arabian camel

+llama

+weasel

+mink

+polecat

+black-footed ferret

+otter

+skunk

+badger

+armadillo

+three-toed sloth

+orangutan

+gorilla

+chimpanzee

+gibbon

+siamang

+guenon

+patas

+baboon

+macaque

+langur

+colobus

+proboscis monkey

+marmoset

+capuchin

+howler monkey

+titi

+spider monkey

+squirrel monkey

+Madagascar cat

+indri

+Indian elephant

+African elephant

+lesser panda

+giant panda

+barracouta

+eel

+coho

+rock beauty

+anemone fish

+sturgeon

+gar

+lionfish

+puffer

+abacus

+abaya

+academic gown

+accordion

+acoustic guitar

+aircraft carrier

+airliner

+airship

+altar

+ambulance

+amphibian

+analog clock

+apiary

+apron

+ashcan

+assault rifle

+backpack

+bakery

+balance beam

+balloon

+ballpoint

+Band Aid

+banjo

+bannister

+barbell

+barber chair

+barbershop

+barn

+barometer

+barrel

+barrow

+baseball

+basketball

+bassinet

+bassoon

+bathing cap

+bath towel

+bathtub

+beach wagon

+beacon

+beaker

+bearskin

+beer bottle

+beer glass

+bell cote

+bib

+bicycle-built-for-two

+bikini

+binder

+binoculars

+birdhouse

+boathouse

+bobsled

+bolo tie

+bonnet

+bookcase

+bookshop

+bottlecap

+bow

+bow tie

+brass

+brassiere

+breakwater

+breastplate

+broom

+bucket

+buckle

+bulletproof vest

+bullet train

+butcher shop

+cab

+caldron

+candle

+cannon

+canoe

+can opener

+cardigan

+car mirror

+carousel

+carpenters kit

+carton

+car wheel

+cash machine

+cassette

+cassette player

+castle

+catamaran

+CD player

+cello

+cellular telephone

+chain

+chainlink fence

+chain mail

+chain saw

+chest

+chiffonier

+chime

+china cabinet

+Christmas stocking

+church

+cinema

+cleaver

+cliff dwelling

+cloak

+clog

+cocktail shaker

+coffee mug

+coffeepot

+coil

+combination lock

+computer keyboard

+confectionery

+container ship

+convertible

+corkscrew

+cornet

+cowboy boot

+cowboy hat

+cradle

+crane

+crash helmet

+crate

+crib

+Crock Pot

+croquet ball

+crutch

+cuirass

+dam

+desk

+desktop computer

+dial telephone

+diaper

+digital clock

+digital watch

+dining table

+dishrag

+dishwasher

+disk brake

+dock

+dogsled

+dome

+doormat

+drilling platform

+drum

+drumstick

+dumbbell

+Dutch oven

+electric fan

+electric guitar

+electric locomotive

+entertainment center

+envelope

+espresso maker

+face powder

+feather boa

+file

+fireboat

+fire engine

+fire screen

+flagpole

+flute

+folding chair

+football helmet

+forklift

+fountain

+fountain pen

+four-poster

+freight car

+French horn

+frying pan

+fur coat

+garbage truck

+gasmask

+gas pump

+goblet

+go-kart

+golf ball

+golfcart

+gondola

+gong

+gown

+grand piano

+greenhouse

+grille

+grocery store

+guillotine

+hair slide

+hair spray

+half track

+hammer

+hamper

+hand blower

+hand-held computer

+handkerchief

+hard disc

+harmonica

+harp

+harvester

+hatchet

+holster

+home theater

+honeycomb

+hook

+hoopskirt

+horizontal bar

+horse cart

+hourglass

+iPod

+iron

+jack-o-lantern

+jean

+jeep

+jersey

+jigsaw puzzle

+jinrikisha

+joystick

+kimono

+knee pad

+knot

+lab coat

+ladle

+lampshade

+laptop

+lawn mower

+lens cap

+letter opener

+library

+lifeboat

+lighter

+limousine

+liner

+lipstick

+Loafer

+lotion

+loudspeaker

+loupe

+lumbermill

+magnetic compass

+mailbag

+mailbox

+maillot

+maillot

+manhole cover

+maraca

+marimba

+mask

+matchstick

+maypole

+maze

+measuring cup

+medicine chest

+megalith

+microphone

+microwave

+military uniform

+milk can

+minibus

+miniskirt

+minivan

+missile

+mitten

+mixing bowl

+mobile home

+Model T

+modem

+monastery

+monitor

+moped

+mortar

+mortarboard

+mosque

+mosquito net

+motor scooter

+mountain bike

+mountain tent

+mouse

+mousetrap

+moving van

+muzzle

+nail

+neck brace

+necklace

+nipple

+notebook

+obelisk

+oboe

+ocarina

+odometer

+oil filter

+organ

+oscilloscope

+overskirt

+oxcart

+oxygen mask

+packet

+paddle

+paddlewheel

+padlock

+paintbrush

+pajama

+palace

+panpipe

+paper towel

+parachute

+parallel bars

+park bench

+parking meter

+passenger car

+patio

+pay-phone

+pedestal

+pencil box

+pencil sharpener

+perfume

+Petri dish

+photocopier

+pick

+pickelhaube

+picket fence

+pickup

+pier

+piggy bank

+pill bottle

+pillow

+ping-pong ball

+pinwheel

+pirate

+pitcher

+plane

+planetarium

+plastic bag

+plate rack

+plow

+plunger

+Polaroid camera

+pole

+police van

+poncho

+pool table

+pop bottle

+pot

+potters wheel

+power drill

+prayer rug

+printer

+prison

+projectile

+projector

+puck

+punching bag

+purse

+quill

+quilt

+racer

+racket

+radiator

+radio

+radio telescope

+rain barrel

+recreational vehicle

+reel

+reflex camera

+refrigerator

+remote control

+restaurant

+revolver

+rifle

+rocking chair

+rotisserie

+rubber eraser

+rugby ball

+rule

+running shoe

+safe

+safety pin

+saltshaker

+sandal

+sarong

+sax

+scabbard

+scale

+school bus

+schooner

+scoreboard

+screen

+screw

+screwdriver

+seat belt

+sewing machine

+shield

+shoe shop

+shoji

+shopping basket

+shopping cart

+shovel

+shower cap

+shower curtain

+ski

+ski mask

+sleeping bag

+slide rule

+sliding door

+slot

+snorkel

+snowmobile

+snowplow

+soap dispenser

+soccer ball

+sock

+solar dish

+sombrero

+soup bowl

+space bar

+space heater

+space shuttle

+spatula

+speedboat

+spider web

+spindle

+sports car

+spotlight

+stage

+steam locomotive

+steel arch bridge

+steel drum

+stethoscope

+stole

+stone wall

+stopwatch

+stove

+strainer

+streetcar

+stretcher

+studio couch

+stupa

+submarine

+suit

+sundial

+sunglass

+sunglasses

+sunscreen

+suspension bridge

+swab

+sweatshirt

+swimming trunks

+swing

+switch

+syringe

+table lamp

+tank

+tape player

+teapot

+teddy

+television

+tennis ball

+thatch

+theater curtain

+thimble

+thresher

+throne

+tile roof

+toaster

+tobacco shop

+toilet seat

+torch

+totem pole

+tow truck

+toyshop

+tractor

+trailer truck

+tray

+trench coat

+tricycle

+trimaran

+tripod

+triumphal arch

+trolleybus

+trombone

+tub

+turnstile

+typewriter keyboard

+umbrella

+unicycle

+upright

+vacuum

+vase

+vault

+velvet

+vending machine

+vestment

+viaduct

+violin

+volleyball

+waffle iron

+wall clock

+wallet

+wardrobe

+warplane

+washbasin

+washer

+water bottle

+water jug

+water tower

+whiskey jug

+whistle

+wig

+window screen

+window shade

+Windsor tie

+wine bottle

+wing

+wok

+wooden spoon

+wool

+worm fence

+wreck

+yawl

+yurt

+web site

+comic book

+crossword puzzle

+street sign

+traffic light

+book jacket

+menu

+plate

+guacamole

+consomme

+hot pot

+trifle

+ice cream

+ice lolly

+French loaf

+bagel

+pretzel

+cheeseburger

+hotdog

+mashed potato

+head cabbage

+broccoli

+cauliflower

+zucchini

+spaghetti squash

+acorn squash

+butternut squash

+cucumber

+artichoke

+bell pepper

+cardoon

+mushroom

+Granny Smith

+strawberry

+orange

+lemon

+fig

+pineapple

+banana

+jackfruit

+custard apple

+pomegranate

+hay

+carbonara

+chocolate sauce

+dough

+meat loaf

+pizza

+potpie

+burrito

+red wine

+espresso

+cup

+eggnog

+alp

+bubble

+cliff

+coral reef

+geyser

+lakeside

+promontory

+sandbar

+seashore

+valley

+volcano

+ballplayer

+groom

+scuba diver

+rapeseed

+daisy

+yellow ladys slipper

+corn

+acorn

+hip

+buckeye

+coral fungus

+agaric

+gyromitra

+stinkhorn

+earthstar

+hen-of-the-woods

+bolete

+ear

+toilet tissue

diff --git a/modules/image/classification/ghostnet_x0_5_imagenet/module.py b/modules/image/classification/ghostnet_x0_5_imagenet/module.py

new file mode 100644

index 0000000000000000000000000000000000000000..8ab6d90613d8d1cd8a72fb2a6e81d77cbbcf6cda

--- /dev/null

+++ b/modules/image/classification/ghostnet_x0_5_imagenet/module.py

@@ -0,0 +1,324 @@

+# copyright (c) 2021 PaddlePaddle Authors. All Rights Reserve.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import os

+import math

+from typing import Union

+

+import paddle

+import paddle.nn as nn

+import paddle.nn.functional as F

+import paddlehub.vision.transforms as T

+import numpy as np

+from paddle import ParamAttr

+from paddle.nn.initializer import Uniform, KaimingNormal

+from paddlehub.module.module import moduleinfo

+from paddlehub.module.cv_module import ImageClassifierModule

+

+

+class ConvBNLayer(nn.Layer):

+ def __init__(self, in_channels, out_channels, kernel_size, stride=1, groups=1, act="relu", name=None):

+ super(ConvBNLayer, self).__init__()

+ self._conv = nn.Conv2D(

+ in_channels=in_channels,

+ out_channels=out_channels,

+ kernel_size=kernel_size,

+ stride=stride,

+ padding=(kernel_size - 1) // 2,

+ groups=groups,

+ weight_attr=ParamAttr(initializer=KaimingNormal(), name=name + "_weights"),

+ bias_attr=False)

+ bn_name = name + "_bn"

+

+ self._batch_norm = nn.BatchNorm(

+ num_channels=out_channels,

+ act=act,

+ param_attr=ParamAttr(name=bn_name + "_scale", regularizer=paddle.regularizer.L2Decay(0.0)),

+ bias_attr=ParamAttr(name=bn_name + "_offset", regularizer=paddle.regularizer.L2Decay(0.0)),

+ moving_mean_name=bn_name + "_mean",

+ moving_variance_name=bn_name + "_variance")

+

+ def forward(self, inputs):

+ y = self._conv(inputs)

+ y = self._batch_norm(y)

+ return y

+

+

+class SEBlock(nn.Layer):

+ def __init__(self, num_channels, reduction_ratio=4, name=None):

+ super(SEBlock, self).__init__()

+ self.pool2d_gap = nn.AdaptiveAvgPool2D(1)

+ self._num_channels = num_channels

+ stdv = 1.0 / math.sqrt(num_channels * 1.0)

+ med_ch = num_channels // reduction_ratio

+ self.squeeze = nn.Linear(

+ num_channels,

+ med_ch,

+ weight_attr=ParamAttr(initializer=Uniform(-stdv, stdv), name=name + "_1_weights"),

+ bias_attr=ParamAttr(name=name + "_1_offset"))

+ stdv = 1.0 / math.sqrt(med_ch * 1.0)

+ self.excitation = nn.Linear(

+ med_ch,

+ num_channels,

+ weight_attr=ParamAttr(initializer=Uniform(-stdv, stdv), name=name + "_2_weights"),

+ bias_attr=ParamAttr(name=name + "_2_offset"))

+

+ def forward(self, inputs):

+ pool = self.pool2d_gap(inputs)

+ pool = paddle.squeeze(pool, axis=[2, 3])

+ squeeze = self.squeeze(pool)

+ squeeze = F.relu(squeeze)

+ excitation = self.excitation(squeeze)

+ excitation = paddle.clip(x=excitation, min=0, max=1)

+ excitation = paddle.unsqueeze(excitation, axis=[2, 3])

+ out = paddle.multiply(inputs, excitation)

+ return out

+

+

+class GhostModule(nn.Layer):

+ def __init__(self, in_channels, output_channels, kernel_size=1, ratio=2, dw_size=3, stride=1, relu=True, name=None):

+ super(GhostModule, self).__init__()

+ init_channels = int(math.ceil(output_channels / ratio))

+ new_channels = int(init_channels * (ratio - 1))

+ self.primary_conv = ConvBNLayer(

+ in_channels=in_channels,

+ out_channels=init_channels,

+ kernel_size=kernel_size,

+ stride=stride,

+ groups=1,

+ act="relu" if relu else None,

+ name=name + "_primary_conv")

+ self.cheap_operation = ConvBNLayer(

+ in_channels=init_channels,

+ out_channels=new_channels,

+ kernel_size=dw_size,

+ stride=1,

+ groups=init_channels,

+ act="relu" if relu else None,

+ name=name + "_cheap_operation")

+

+ def forward(self, inputs):

+ x = self.primary_conv(inputs)

+ y = self.cheap_operation(x)

+ out = paddle.concat([x, y], axis=1)

+ return out

+

+

+class GhostBottleneck(nn.Layer):

+ def __init__(self, in_channels, hidden_dim, output_channels, kernel_size, stride, use_se, name=None):

+ super(GhostBottleneck, self).__init__()

+ self._stride = stride

+ self._use_se = use_se

+ self._num_channels = in_channels

+ self._output_channels = output_channels

+ self.ghost_module_1 = GhostModule(

+ in_channels=in_channels,

+ output_channels=hidden_dim,

+ kernel_size=1,

+ stride=1,

+ relu=True,

+ name=name + "_ghost_module_1")

+ if stride == 2:

+ self.depthwise_conv = ConvBNLayer(

+ in_channels=hidden_dim,

+ out_channels=hidden_dim,

+ kernel_size=kernel_size,

+ stride=stride,

+ groups=hidden_dim,

+ act=None,

+ name=name + "_depthwise_depthwise" # looks strange due to an old typo, will be fixed later.

+ )

+ if use_se:

+ self.se_block = SEBlock(num_channels=hidden_dim, name=name + "_se")

+ self.ghost_module_2 = GhostModule(

+ in_channels=hidden_dim,

+ output_channels=output_channels,

+ kernel_size=1,

+ relu=False,

+ name=name + "_ghost_module_2")

+ if stride != 1 or in_channels != output_channels:

+ self.shortcut_depthwise = ConvBNLayer(

+ in_channels=in_channels,

+ out_channels=in_channels,

+ kernel_size=kernel_size,

+ stride=stride,

+ groups=in_channels,

+ act=None,

+ name=name + "_shortcut_depthwise_depthwise" # looks strange due to an old typo, will be fixed later.

+ )

+ self.shortcut_conv = ConvBNLayer(

+ in_channels=in_channels,

+ out_channels=output_channels,

+ kernel_size=1,

+ stride=1,

+ groups=1,

+ act=None,

+ name=name + "_shortcut_conv")

+

+ def forward(self, inputs):

+ x = self.ghost_module_1(inputs)

+ if self._stride == 2:

+ x = self.depthwise_conv(x)

+ if self._use_se:

+ x = self.se_block(x)

+ x = self.ghost_module_2(x)

+ if self._stride == 1 and self._num_channels == self._output_channels:

+ shortcut = inputs

+ else:

+ shortcut = self.shortcut_depthwise(inputs)

+ shortcut = self.shortcut_conv(shortcut)

+ return paddle.add(x=x, y=shortcut)

+

+

+@moduleinfo(

+ name="ghostnet_x0_5_imagenet",

+ type="CV/classification",

+ author="paddlepaddle",

+ author_email="",

+ summary="ghostnet_x0_5_imagenet is a classification model, "

+ "this module is trained with Imagenet dataset.",

+ version="1.0.0",

+ meta=ImageClassifierModule)

+class GhostNet(nn.Layer):

+ def __init__(self, label_list: list = None, load_checkpoint: str = None):

+ super(GhostNet, self).__init__()

+

+ if label_list is not None:

+ self.labels = label_list

+ class_dim = len(self.labels)

+ else:

+ label_list = []

+ label_file = os.path.join(self.directory, 'label_list.txt')

+ files = open(label_file)

+ for line in files.readlines():

+ line = line.strip('\n')

+ label_list.append(line)

+ self.labels = label_list

+ class_dim = len(self.labels)

+

+ self.cfgs = [

+ # k, t, c, SE, s

+ [3, 16, 16, 0, 1],

+ [3, 48, 24, 0, 2],

+ [3, 72, 24, 0, 1],

+ [5, 72, 40, 1, 2],

+ [5, 120, 40, 1, 1],

+ [3, 240, 80, 0, 2],

+ [3, 200, 80, 0, 1],

+ [3, 184, 80, 0, 1],

+ [3, 184, 80, 0, 1],

+ [3, 480, 112, 1, 1],

+ [3, 672, 112, 1, 1],

+ [5, 672, 160, 1, 2],

+ [5, 960, 160, 0, 1],

+ [5, 960, 160, 1, 1],

+ [5, 960, 160, 0, 1],

+ [5, 960, 160, 1, 1]

+ ]

+ self.scale = 0.5

+ output_channels = int(self._make_divisible(16 * self.scale, 4))

+ self.conv1 = ConvBNLayer(

+ in_channels=3, out_channels=output_channels, kernel_size=3, stride=2, groups=1, act="relu", name="conv1")

+ # build inverted residual blocks

+ idx = 0

+ self.ghost_bottleneck_list = []

+ for k, exp_size, c, use_se, s in self.cfgs:

+ in_channels = output_channels

+ output_channels = int(self._make_divisible(c * self.scale, 4))

+ hidden_dim = int(self._make_divisible(exp_size * self.scale, 4))

+ ghost_bottleneck = self.add_sublayer(

+ name="_ghostbottleneck_" + str(idx),

+ sublayer=GhostBottleneck(

+ in_channels=in_channels,

+ hidden_dim=hidden_dim,

+ output_channels=output_channels,

+ kernel_size=k,

+ stride=s,

+ use_se=use_se,

+ name="_ghostbottleneck_" + str(idx)))

+ self.ghost_bottleneck_list.append(ghost_bottleneck)

+ idx += 1

+ # build last several layers

+ in_channels = output_channels

+ output_channels = int(self._make_divisible(exp_size * self.scale, 4))

+ self.conv_last = ConvBNLayer(

+ in_channels=in_channels,

+ out_channels=output_channels,

+ kernel_size=1,

+ stride=1,

+ groups=1,

+ act="relu",

+ name="conv_last")

+ self.pool2d_gap = nn.AdaptiveAvgPool2D(1)

+ in_channels = output_channels

+ self._fc0_output_channels = 1280

+ self.fc_0 = ConvBNLayer(

+ in_channels=in_channels,

+ out_channels=self._fc0_output_channels,

+ kernel_size=1,

+ stride=1,

+ act="relu",

+ name="fc_0")

+ self.dropout = nn.Dropout(p=0.2)

+ stdv = 1.0 / math.sqrt(self._fc0_output_channels * 1.0)

+ self.fc_1 = nn.Linear(

+ self._fc0_output_channels,

+ class_dim,

+ weight_attr=ParamAttr(name="fc_1_weights", initializer=Uniform(-stdv, stdv)),

+ bias_attr=ParamAttr(name="fc_1_offset"))

+

+ if load_checkpoint is not None:

+ self.model_dict = paddle.load(load_checkpoint)

+ self.set_dict(self.model_dict)

+ print("load custom checkpoint success")

+ else:

+ checkpoint = os.path.join(self.directory, 'model.pdparams')

+ self.model_dict = paddle.load(checkpoint)

+ self.set_dict(self.model_dict)

+ print("load pretrained checkpoint success")

+

+ def transforms(self, images: Union[str, np.ndarray]):

+ transforms = T.Compose([

+ T.Resize((256, 256)),

+ T.CenterCrop(224),

+ T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

+ ],

+ to_rgb=True)

+ return transforms(images).astype('float32')

+

+ def forward(self, inputs):

+ x = self.conv1(inputs)

+ for ghost_bottleneck in self.ghost_bottleneck_list:

+ x = ghost_bottleneck(x)

+ x = self.conv_last(x)

+ feature = self.pool2d_gap(x)

+ x = self.fc_0(feature)

+ x = self.dropout(x)

+ x = paddle.reshape(x, shape=[-1, self._fc0_output_channels])

+ x = self.fc_1(x)

+ return x, feature

+

+ def _make_divisible(self, v, divisor, min_value=None):

+ """

+ This function is taken from the original tf repo.

+ It ensures that all layers have a channel number that is divisible by 8

+ It can be seen here:

+ https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py

+ """

+ if min_value is None:

+ min_value = divisor

+ new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)

+ # Make sure that round down does not go down by more than 10%.

+ if new_v < 0.9 * v:

+ new_v += divisor

+ return new_v

diff --git a/modules/image/classification/ghostnet_x1_0_imagenet/README.md b/modules/image/classification/ghostnet_x1_0_imagenet/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..9e25c471b72cf96393766d3c46eeb161ea7489b2

--- /dev/null

+++ b/modules/image/classification/ghostnet_x1_0_imagenet/README.md

@@ -0,0 +1,192 @@

+```shell

+$ hub install ghostnet_x1_0_imagenet==1.0.0

+```

+

+## 命令行预测

+

+```shell

+$ hub run ghostnet_x1_0_imagenet --input_path "/PATH/TO/IMAGE" --top_k 5

+```

+

+## 脚本预测

+

+```python

+import paddle

+import paddlehub as hub

+

+if __name__ == '__main__':

+

+ model = hub.Module(name='ghostnet_x1_0_imagenet',)

+ result = model.predict([PATH/TO/IMAGE])

+```

+

+## Fine-tune代码步骤

+

+使用PaddleHub Fine-tune API进行Fine-tune可以分为4个步骤。

+

+### Step1: 定义数据预处理方式

+```python

+import paddlehub.vision.transforms as T

+

+transforms = T.Compose([T.Resize((256, 256)),

+ T.CenterCrop(224),

+ T.Normalize(mean=[0.485, 0.456, 0.406], std = [0.229, 0.224, 0.225])],

+ to_rgb=True)

+```

+

+'transforms' 数据增强模块定义了丰富的数据预处理方式,用户可按照需求替换自己需要的数据预处理方式。

+

+### Step2: 下载数据集并使用

+```python

+from paddlehub.datasets import Flowers

+

+flowers = Flowers(transforms)

+flowers_validate = Flowers(transforms, mode='val')

+```

+* transforms(Callable): 数据预处理方式。

+* mode(str): 选择数据模式,可选项有 'train', 'test', 'val', 默认为'train'。

+

+'hub.datasets.Flowers()' 会自动从网络下载数据集并解压到用户目录下'$HOME/.paddlehub/dataset'目录。

+

+

+### Step3: 加载预训练模型

+

+```python

+import paddlehub as hub

+

+model = hub.Module(name='ghostnet_x1_0_imagenet',

+ label_list=["roses", "tulips", "daisy", "sunflowers", "dandelion"],

+ load_checkpoint=None)

+```

+* name(str): 选择预训练模型的名字。

+* label_list(list): 设置标签对应分类类别, 默认为Imagenet2012类别。

+* load _checkpoint(str): 模型参数地址。

+

+PaddleHub提供许多图像分类预训练模型,如xception、mobilenet、efficientnet等,详细信息参见[图像分类模型](https://www.paddlepaddle.org.cn/hub?filter=en_category&value=ImageClassification)。

+

+如果想尝试efficientnet模型,只需要更换Module中的'name'参数即可.

+```python

+import paddlehub as hub

+

+# 更换name参数即可无缝切换efficientnet模型, 代码示例如下

+module = hub.Module(name="efficientnetb7_imagenet")

+```

+**NOTE:**目前部分模型还没有完全升级到2.0版本,敬请期待。

+

+### Step4: 选择优化策略和运行配置

+

+```python

+import paddle

+from paddlehub.finetune.trainer import Trainer

+

+optimizer = paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters())

+trainer = Trainer(model, optimizer, checkpoint_dir='img_classification_ckpt')

+

+trainer.train(flowers, epochs=100, batch_size=32, eval_dataset=flowers_validate, save_interval=1)

+```

+

+#### 优化策略

+

+Paddle2.0rc提供了多种优化器选择,如'SGD', 'Adam', 'Adamax'等,详细参见[策略](https://www.paddlepaddle.org.cn/documentation/docs/zh/2.0-rc/api/paddle/optimizer/optimizer/Optimizer_cn.html)。

+

+其中'Adam':

+

+* learning_rate: 全局学习率。默认为1e-3;

+* parameters: 待优化模型参数。

+

+#### 运行配置

+'Trainer' 主要控制Fine-tune的训练,包含以下可控制的参数:

+

+* model: 被优化模型;

+* optimizer: 优化器选择;

+* use_vdl: 是否使用vdl可视化训练过程;

+* checkpoint_dir: 保存模型参数的地址;

+* compare_metrics: 保存最优模型的衡量指标;

+

+'trainer.train' 主要控制具体的训练过程,包含以下可控制的参数:

+

+* train_dataset: 训练时所用的数据集;

+* epochs: 训练轮数;

+* batch_size: 训练的批大小,如果使用GPU,请根据实际情况调整batch_size;

+* num_workers: works的数量,默认为0;

+* eval_dataset: 验证集;

+* log_interval: 打印日志的间隔, 单位为执行批训练的次数。

+* save_interval: 保存模型的间隔频次,单位为执行训练的轮数。

+

+## 模型预测

+

+当完成Fine-tune后,Fine-tune过程在验证集上表现最优的模型会被保存在'${CHECKPOINT_DIR}/best_model'目录下,其中'${CHECKPOINT_DIR}'目录为Fine-tune时所选择的保存checkpoint的目录。

+我们使用该模型来进行预测。predict.py脚本如下:

+

+```python

+import paddle

+import paddlehub as hub

+

+if __name__ == '__main__':

+

+ model = hub.Module(name='ghostnet_x1_0_imagenet', label_list=["roses", "tulips", "daisy", "sunflowers", "dandelion"], load_checkpoint='/PATH/TO/CHECKPOINT')

+ result = model.predict(['flower.jpg'])

+```

+

+参数配置正确后,请执行脚本'python predict.py', 加载模型具体可参见[加载](https://www.paddlepaddle.org.cn/documentation/docs/zh/2.0-rc/api/paddle/framework/io/load_cn.html#load)。

+

+**NOTE:** 进行预测时,所选择的module,checkpoint_dir,dataset必须和Fine-tune所用的一样。

+

+## 服务部署

+

+PaddleHub Serving可以部署一个在线分类任务服务

+

+## Step1: 启动PaddleHub Serving

+

+运行启动命令:

+

+```shell

+$ hub serving start -m ghostnet_x1_0_imagenet

+```

+

+这样就完成了一个分类任务服务化API的部署,默认端口号为8866。

+

+**NOTE:** 如使用GPU预测,则需要在启动服务之前,请设置CUDA_VISIBLE_DEVICES环境变量,否则不用设置。

+

+## Step2: 发送预测请求

+

+配置好服务端,以下数行代码即可实现发送预测请求,获取预测结果

+

+```python

+import requests

+import json

+import cv2

+import base64

+

+import numpy as np

+

+

+def cv2_to_base64(image):

+ data = cv2.imencode('.jpg', image)[1]

+ return base64.b64encode(data.tostring()).decode('utf8')

+

+def base64_to_cv2(b64str):

+ data = base64.b64decode(b64str.encode('utf8'))

+ data = np.fromstring(data, np.uint8)

+ data = cv2.imdecode(data, cv2.IMREAD_COLOR)

+ return data

+

+# 发送HTTP请求

+org_im = cv2.imread('/PATH/TO/IMAGE')

+

+data = {'images':[cv2_to_base64(org_im)], 'top_k':2}

+headers = {"Content-type": "application/json"}

+url = "http://127.0.0.1:8866/predict/ghostnet_x1_0_imagenet"

+r = requests.post(url=url, headers=headers, data=json.dumps(data))

+data =r.json()["results"]['data']

+```

+

+### 查看代码

+