[dev] add s2anet (#2432)

* Add S2ANet model for Oriented Object Detection.

Changed files:

* `configs/datasets/dota.yml` (new file, mode 100644)
* `configs/dota/README.md` (new file, mode 100644)
* `configs/dota/_base_/s2anet.yml` (new file, mode 100644)
* `configs/dota/s2anet_1x_dota.yml` (new file, mode 100644)
* `demo/P0072__1.0__0___0.png` (new file, mode 100644, 1.6 MB)

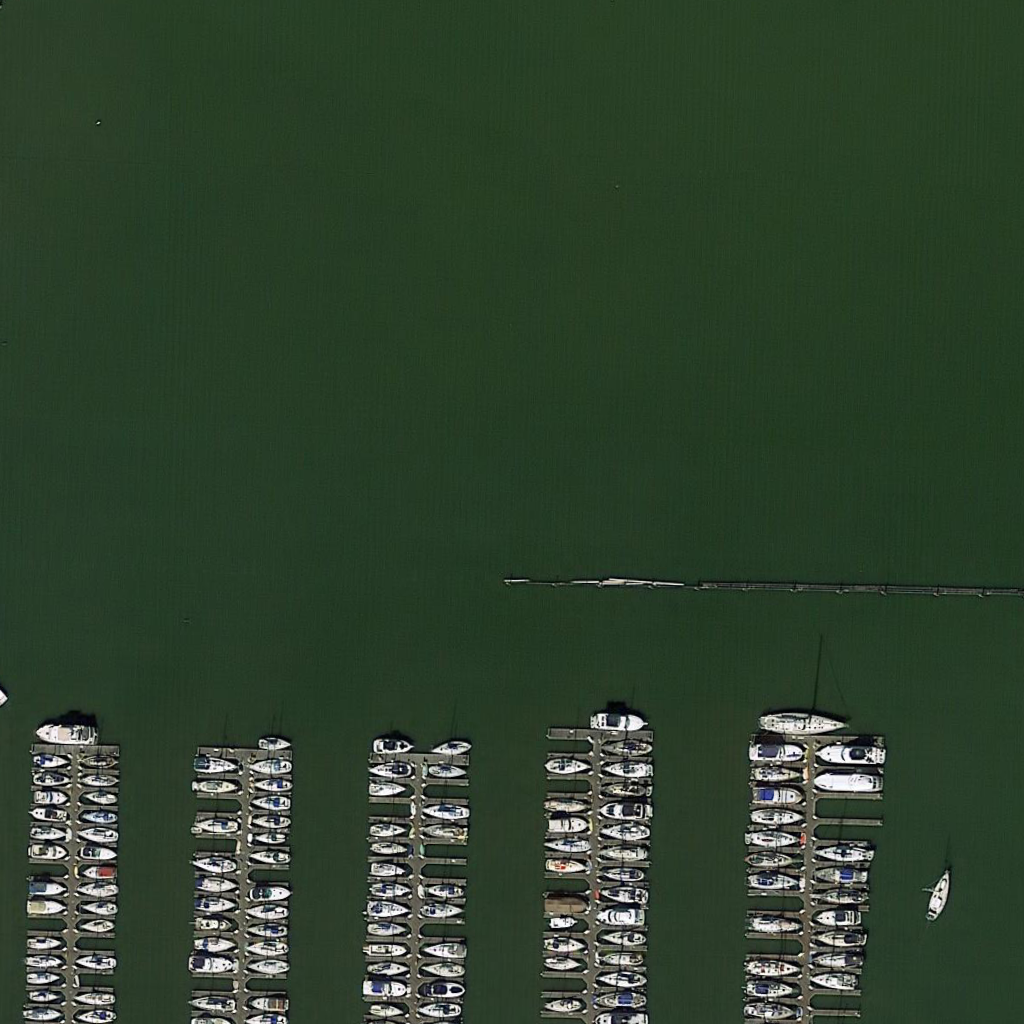

* `demo/P0861__1.0__1154___824.png` (new file, mode 100644, 1.2 MB)
* `ppdet/ext_op/README.md` (new file, mode 100644)
* `ppdet/ext_op/rbox_iou_op.cc` (new file, mode 100644)
* `ppdet/ext_op/rbox_iou_op.cu` (new file, mode 100644)
* `ppdet/ext_op/setup.py` (new file, mode 100644)
* `ppdet/ext_op/test.py` (new file, mode 100644)