Rename object_detection to PaddleDetection. (#2601)

* Rename object_detection to PaddleDetection
* Small fix for doc


.gitignore

0 → 100644

.style.yapf

0 → 100644

README.md

0 → 100644

configs/faster_rcnn_r101_1x.yml

0 → 100644

configs/faster_rcnn_r50_1x.yml

0 → 100644

configs/faster_rcnn_r50_2x.yml

0 → 100644

configs/faster_rcnn_r50_vd_1x.yml

0 → 100644

configs/mask_rcnn_r101_fpn_1x.yml

0 → 100644

configs/mask_rcnn_r101_fpn_2x.yml

0 → 100644

configs/mask_rcnn_r50_1x.yml

0 → 100644

configs/mask_rcnn_r50_2x.yml

0 → 100644

configs/mask_rcnn_r50_fpn_1x.yml

0 → 100644

configs/mask_rcnn_r50_fpn_2x.yml

0 → 100644

configs/retinanet_r101_fpn_1x.yml

0 → 100644

configs/retinanet_r50_fpn_1x.yml

0 → 100644

configs/ssd_mobilenet_v1_voc.yml

0 → 100644

configs/yolov3_darknet.yml

0 → 100644

configs/yolov3_mobilenet_v1.yml

0 → 100644

configs/yolov3_r34.yml

0 → 100644

dataset/coco/download.sh

0 → 100644

dataset/voc/download.sh

0 → 100755

demo/000000014439.jpg

0 → 100644

190.7 KB

demo/000000087038.jpg

0 → 100644

179.0 KB

demo/000000570688.jpg

0 → 100644

57.9 KB

demo/mask_rcnn_demo.ipynb

0 → 100644

The source diff is too large to display. You can view the blob instead.

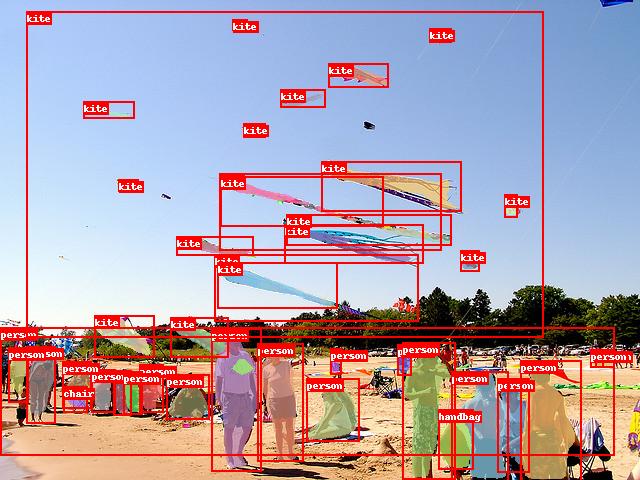

demo/output/000000570688.jpg

0 → 100644

68.4 KB

docs/CONFIG.md

0 → 100644

docs/DATA.md

0 → 100644

docs/DATA_cn.md

0 → 100644

docs/GETTING_STARTED.md

0 → 100644

docs/INSTALL.md

0 → 100644

ppdet/__init__.py

0 → 100644

ppdet/core/__init__.py

0 → 100644

ppdet/core/config/__init__.py

0 → 100644

ppdet/core/config/schema.py

0 → 100644

ppdet/core/config/yaml_helpers.py

0 → 100644

ppdet/core/workspace.py

0 → 100644

ppdet/data/README.md

0 → 120000

ppdet/data/README_cn.md

0 → 120000

ppdet/data/__init__.py

0 → 100644

ppdet/data/data_feed.py

0 → 100644

ppdet/data/dataset.py

0 → 100644

ppdet/data/reader.py

0 → 100644

ppdet/data/source/__init__.py

0 → 100644

ppdet/data/source/coco_loader.py

0 → 100644

ppdet/data/source/loader.py

0 → 100644

ppdet/data/source/roidb_source.py

0 → 100644

ppdet/data/source/voc_loader.py

0 → 100644

ppdet/data/tests/000012.jpg

0 → 100644

55.6 KB

ppdet/data/tests/coco.yml

0 → 100644

ppdet/data/tests/rcnn_dataset.yml

0 → 100644

ppdet/data/tests/run_all_tests.py

0 → 100644

ppdet/data/tests/set_env.py

0 → 100644

ppdet/data/tests/test_loader.py

0 → 100644

ppdet/data/tests/test_operator.py

0 → 100644

ppdet/data/tests/test_reader.py

0 → 100644

ppdet/data/tools/labelme2coco.py

0 → 100644

ppdet/data/transform/__init__.py

0 → 100644

ppdet/data/transform/op_helper.py

0 → 100644

ppdet/data/transform/operators.py

0 → 100644

ppdet/data/transform/post_map.py

0 → 100644

ppdet/modeling/__init__.py

0 → 100644

ppdet/modeling/backbones/fpn.py

0 → 100644

ppdet/modeling/backbones/senet.py

0 → 100644

ppdet/modeling/model_input.py

0 → 100644

ppdet/modeling/ops.py

0 → 100644

ppdet/modeling/tests/__init__.py

0 → 100644

ppdet/optimizer.py

0 → 100644

ppdet/utils/__init__.py

0 → 100644

ppdet/utils/checkpoint.py

0 → 100644

ppdet/utils/cli.py

0 → 100644

ppdet/utils/coco_eval.py

0 → 100644

ppdet/utils/colormap.py

0 → 100644

ppdet/utils/download.py

0 → 100644

ppdet/utils/eval_utils.py

0 → 100644

ppdet/utils/stats.py

0 → 100644

ppdet/utils/visualizer.py

0 → 100644

ppdet/utils/voc_eval.py

0 → 100644

ppdet/utils/voc_utils.py

0 → 100644

requirements.txt

0 → 100644

tqdm
docstring_parser @ http://github.com/willthefrog/docstring_parser/tarball/master
typeguard ; python_version >= '3.4'

tools/configure.py

0 → 100644

tools/eval.py

0 → 100644

tools/infer.py

0 → 100644

tools/train.py

0 → 100644
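Each entry above records a file-mode change in the form `old → new`. As a reading aid, here is a minimal Python sketch that decodes git tree-entry modes using the standard `stat` module. The function name `describe_git_mode` is hypothetical. Reading an old mode of `0` as "file did not exist before this commit" is an assumption, based on how this listing marks newly added files.

```python
import stat


def describe_git_mode(mode: str) -> str:
    """Describe a git tree-entry mode given as an octal string, e.g. '100644'."""
    value = int(mode, 8)
    if value == 0:
        # Assumption: the diff view uses 0 as the old mode of a newly added file.
        return "absent (file did not exist before this commit)"
    if stat.S_ISLNK(value):
        # 120000: symbolic link (e.g. ppdet/data/README.md in this commit).
        return "symbolic link"
    if stat.S_ISREG(value):
        # Git records regular files as either 644 or 755; any execute bit means 755.
        if value & 0o111:
            return "regular file (executable)"
        return "regular file"
    if stat.S_ISDIR(value):
        return "directory (tree)"
    # Anything else, such as 160000, is not a plain file, link, or directory.
    return "other (e.g. submodule gitlink)"


for old, new in [("0", "100644"), ("0", "100755"), ("0", "120000")]:
    print(f"{old} -> {new}: {describe_git_mode(old)} -> {describe_git_mode(new)}")
```

Under this reading, `dataset/voc/download.sh` (`0 → 100755`) is added as an executable script, and the two `ppdet/data/README*.md` entries (`0 → 120000`) are added as symbolic links.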