Support C++ TRT inference (#188)

* C++ inference: support TensorRT
* Update README.md
* Add a YOLOv3 demo YAML

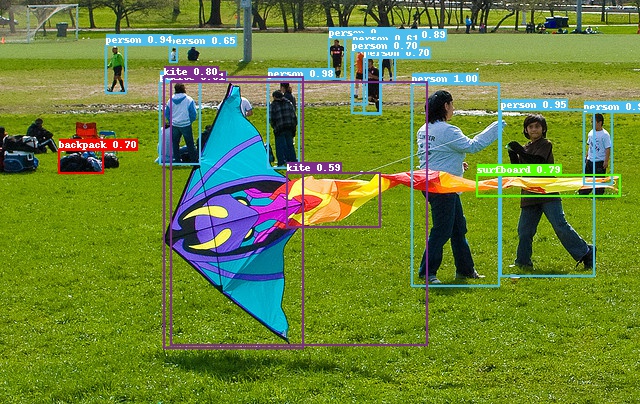

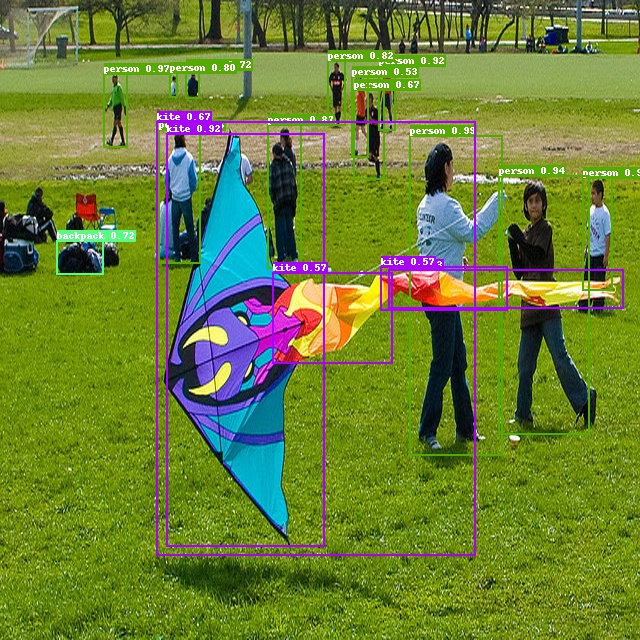

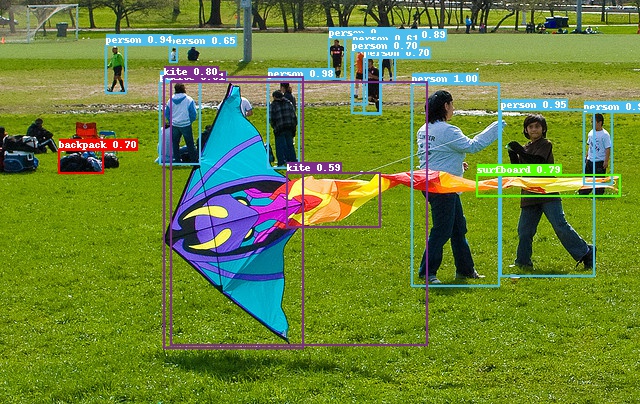

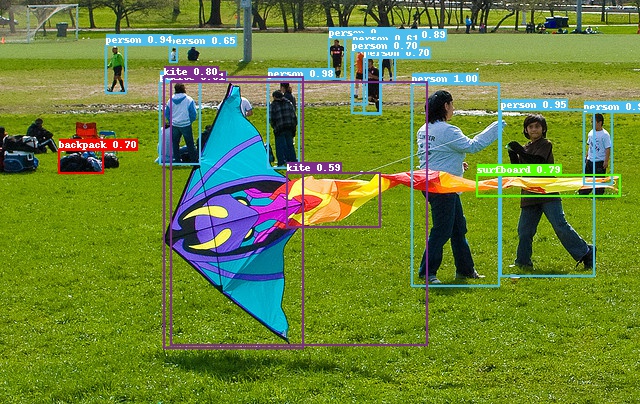
