Merge branch 'ckpt_m2' of github.com:seiriosPlus/Paddle into ckpt_m2

Showing

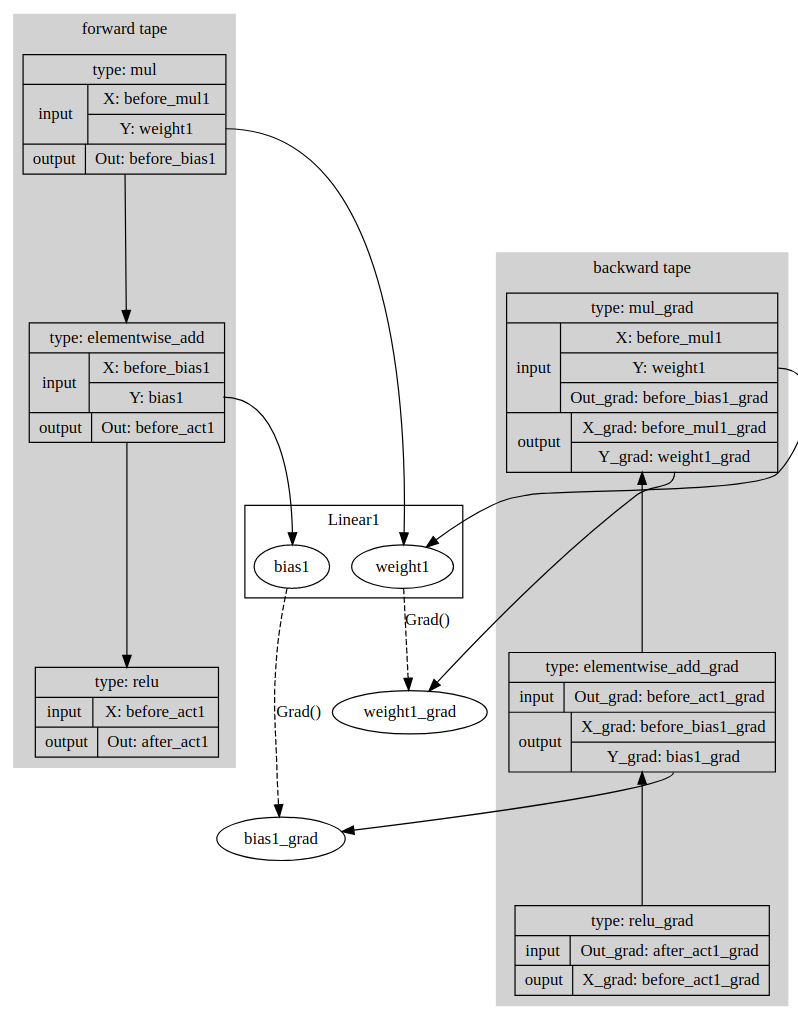

paddle/contrib/tape/README.md

Deleted

100644 → 0

94.4 KB

paddle/contrib/tape/tape.cc

Deleted

100644 → 0

paddle/contrib/tape/tape.h

Deleted

100644 → 0

tools/print_signatures.py

0 → 100644

Diff collapsed.