@@ -111,6 +135,11 @@ PP-ShiTu图像识别快速体验:[点击这里](./docs/zh_CN/quick_start/quick

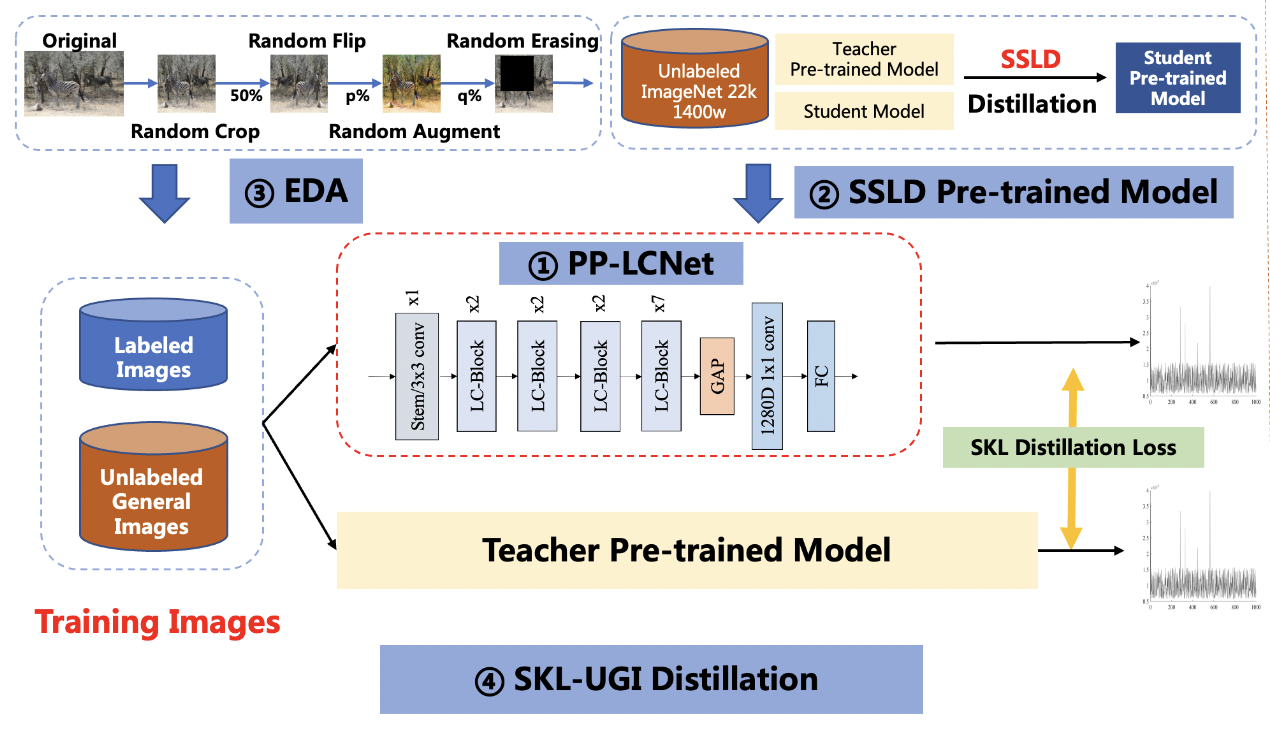

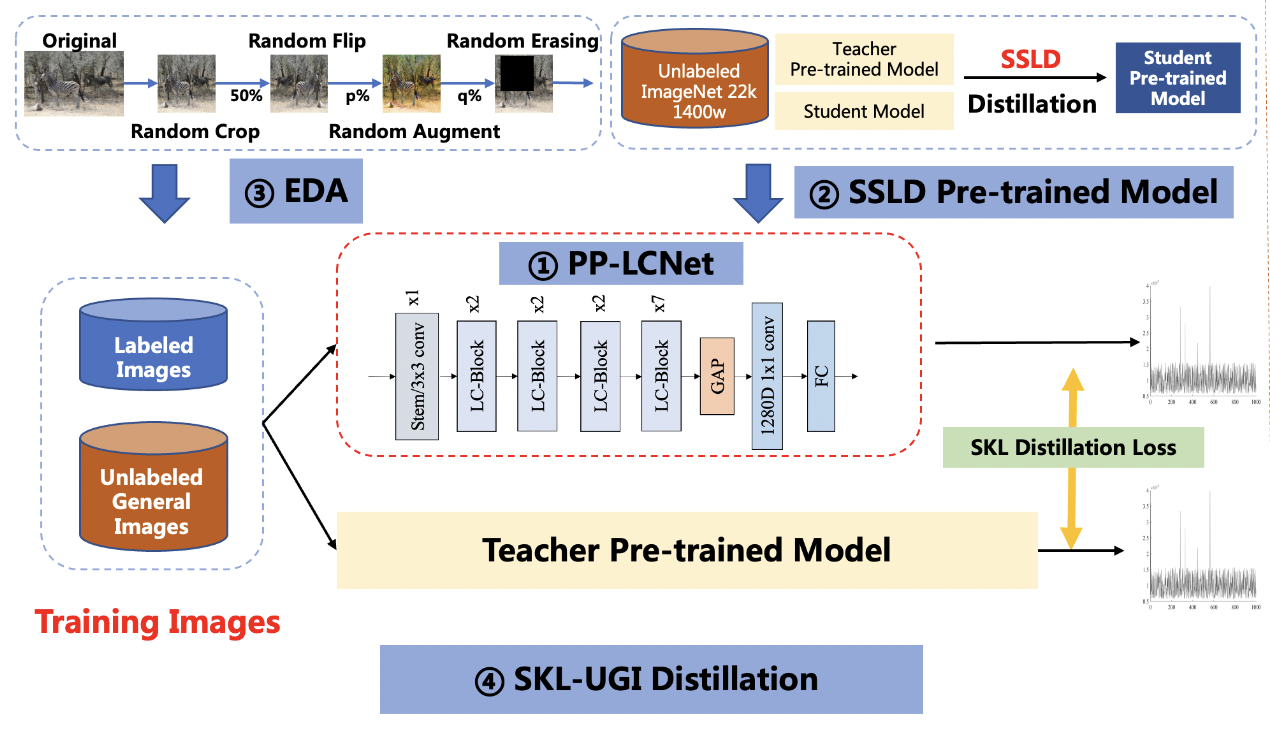

PP-ShiTu是一个实用的轻量级通用图像识别系统,主要由主体检测、特征学习和向量检索三个模块组成。该系统从骨干网络选择和调整、损失函数的选择、数据增强、学习率变换策略、正则化参数选择、预训练模型使用以及模型裁剪量化8个方面,采用多种策略,对各个模块的模型进行优化,最终得到在CPU上仅0.2s即可完成10w+库的图像识别的系统。更多细节请参考[PP-ShiTu技术方案](https://arxiv.org/pdf/2111.00775.pdf)。

+

+## PULC实用图像分类模型效果展示

+

+

+

## PP-ShiTu图像识别系统效果展示

diff --git a/README_en.md b/README_en.md

index 9b0d7c85d76cf06eac8fb265abb85c3bb98a275f..4bf960e57f2e56972f889c4bcf6a6d715b903477 100644

--- a/README_en.md

+++ b/README_en.md

@@ -4,39 +4,41 @@

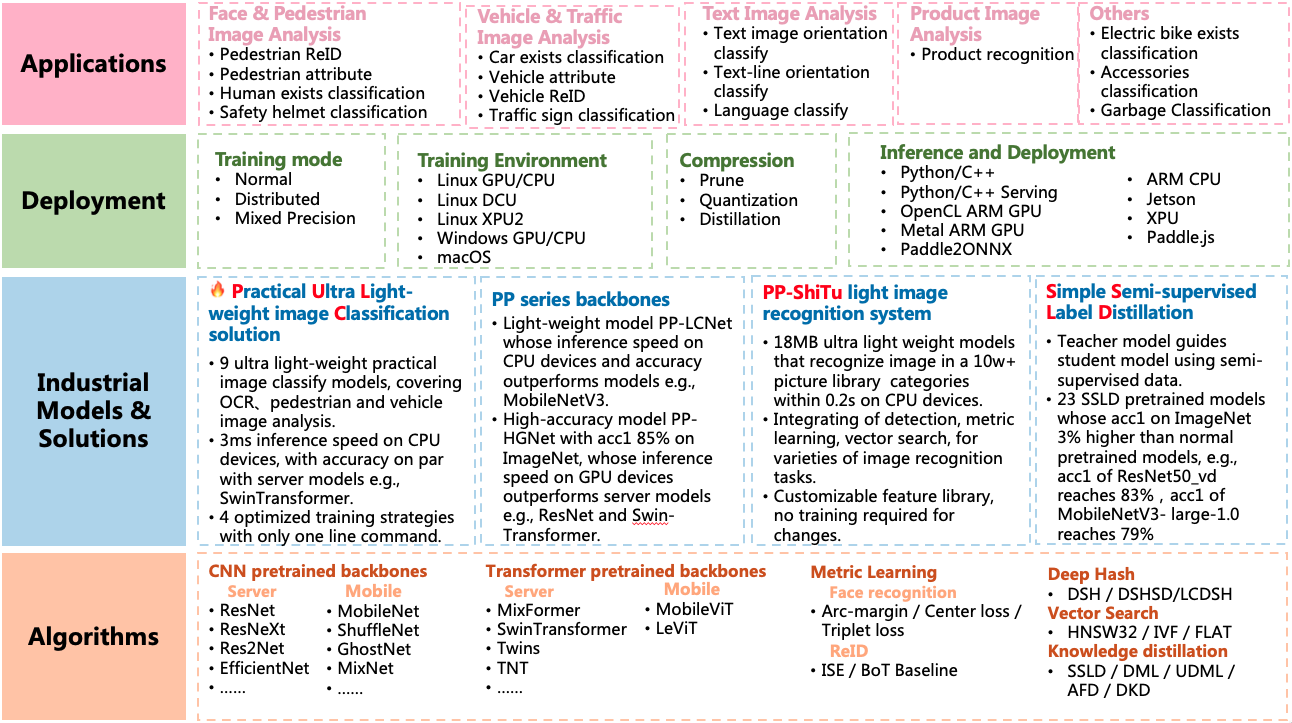

## Introduction

-PaddleClas is an image recognition toolset for industry and academia, helping users train better computer vision models and apply them in real scenarios.

+PaddleClas is an image classification and image recognition toolset for industry and academia, helping users train better computer vision models and apply them in real scenarios.

-**Recent updates**

-

-- 2022.4.21 Added the related [code](https://github.com/PaddlePaddle/PaddleClas/pull/1820/files) of the CVPR2022 oral paper [MixFormer](https://arxiv.org/pdf/2204.02557.pdf).

-

-- 2021.09.17 Add PP-LCNet series model developed by PaddleClas, these models show strong competitiveness on Intel CPUs.

-For the introduction of PP-LCNet, please refer to [paper](https://arxiv.org/pdf/2109.15099.pdf) or [PP-LCNet model introduction](docs/en/models/PP-LCNet_en.md). The metrics and pretrained model are available [here](docs/en/ImageNet_models_en.md).

-

-- 2021.06.29 Add Swin-transformer series model,Highest top1 acc on ImageNet1k dataset reaches 87.2%, training, evaluation and inference are all supported. Pretrained models can be downloaded [here](docs/en/models/models_intro_en.md).

-- 2021.06.16 PaddleClas release/2.2. Add metric learning and vector search modules. Add product recognition, animation character recognition, vehicle recognition and logo recognition. Added 30 pretrained models of LeViT, Twins, TNT, DLA, HarDNet, and RedNet, and the accuracy is roughly the same as that of the paper.

-- [more](./docs/en/update_history_en.md)

+

+

-## Features

+PULC demo images

+

+

-- A practical image recognition system consist of detection, feature learning and retrieval modules, widely applicable to all types of image recognition tasks.

-Four sample solutions are provided, including product recognition, vehicle recognition, logo recognition and animation character recognition.

-- Rich library of pre-trained models: Provide a total of 164 ImageNet pre-trained models in 35 series, among which 6 selected series of models support fast structural modification.

+

+

-- Comprehensive and easy-to-use feature learning components: 12 metric learning methods are integrated and can be combined and switched at will through configuration files.

+PP-ShiTu demo images

+

-- SSLD knowledge distillation: The 14 classification pre-training models generally improved their accuracy by more than 3%; among them, the ResNet50_vd model achieved a Top-1 accuracy of 84.0% on the Image-Net-1k dataset and the Res2Net200_vd pre-training model achieved a Top-1 accuracy of 85.1%.

+**Recent updates**

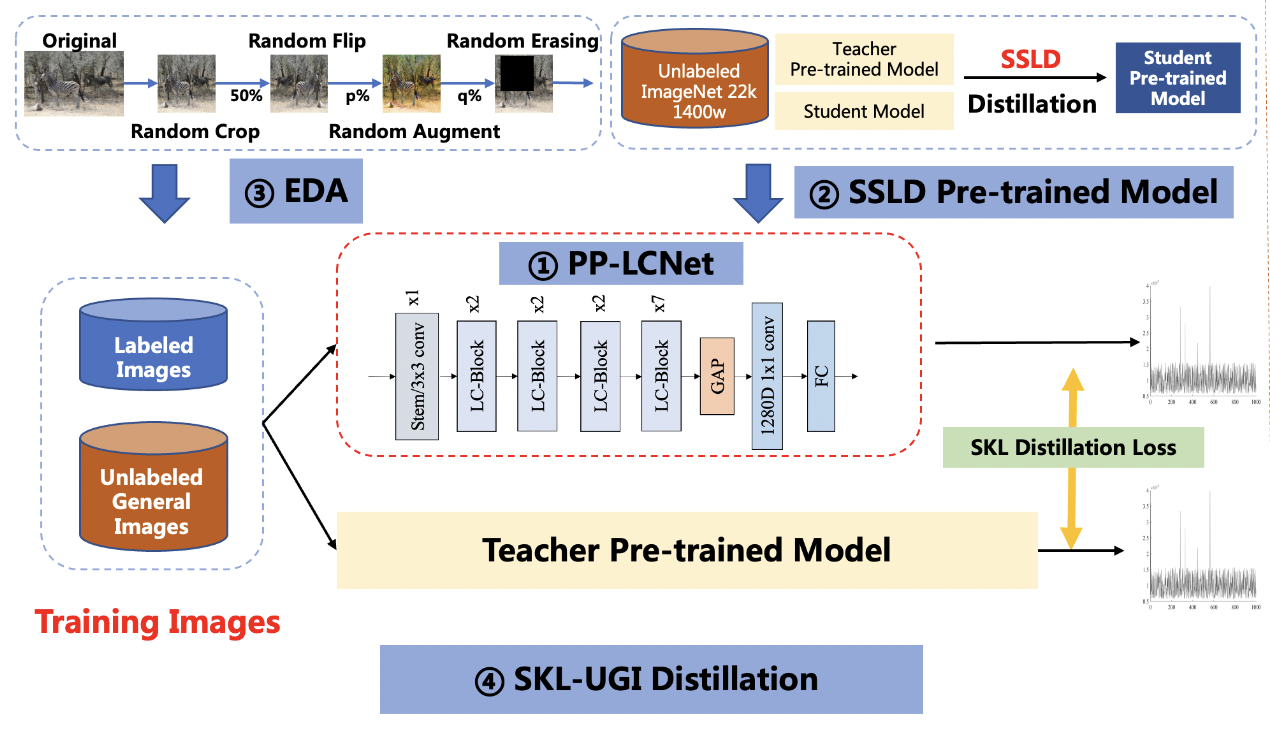

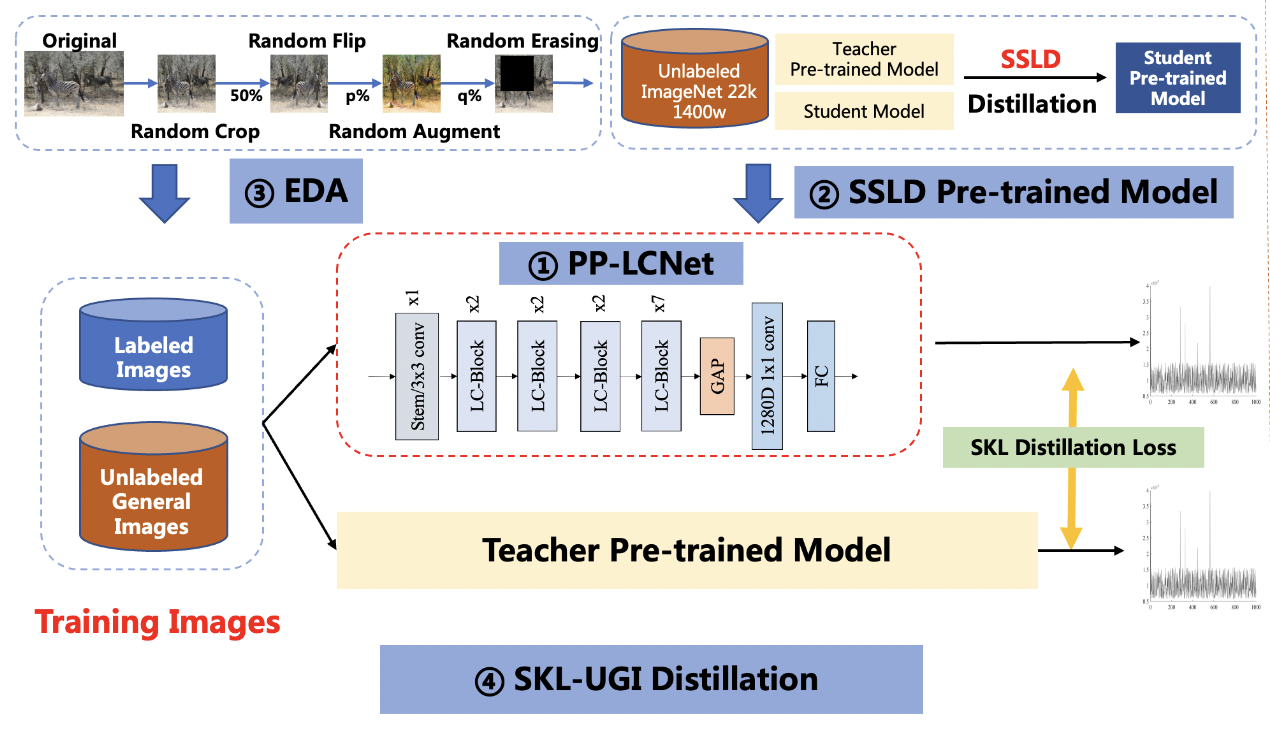

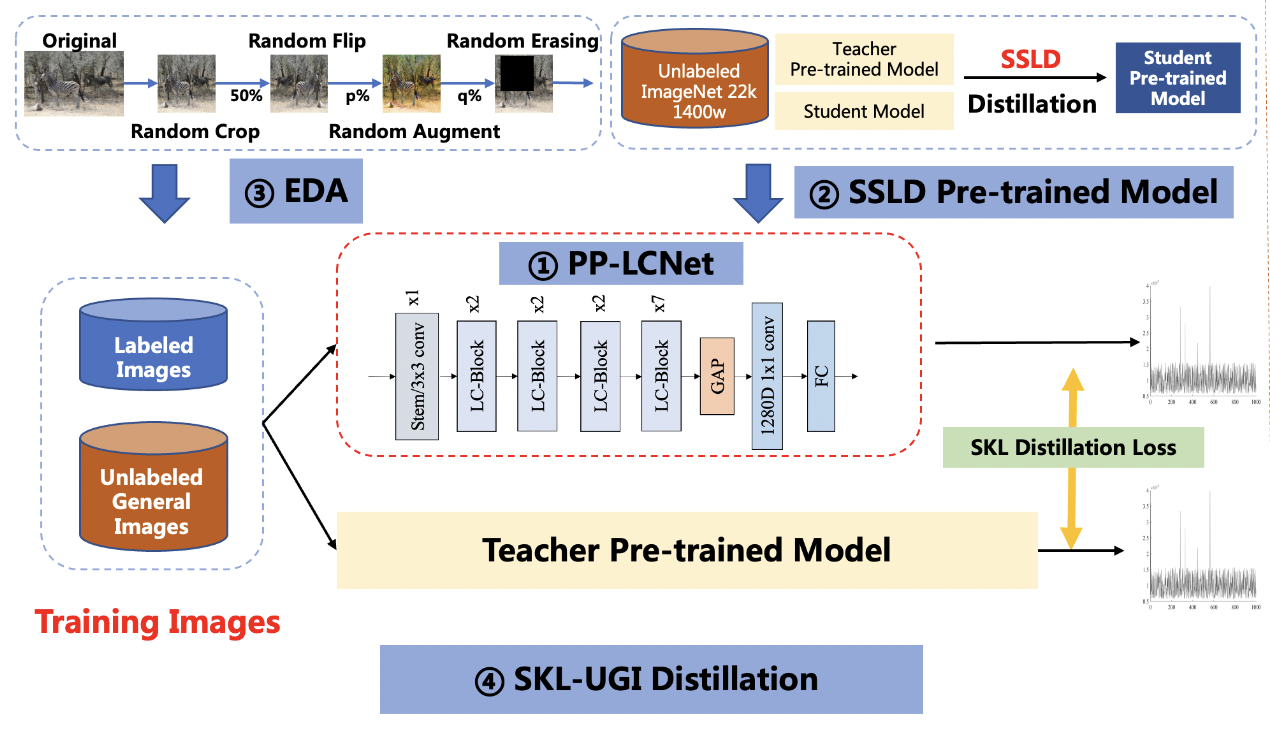

+- 2022.6.15 Release [**P**ractical **U**ltra **L**ight-weight image **C**lassification solutions](./docs/en/PULC/PULC_quickstart_en.md). PULC models inference within 3ms on CPU devices, with accuracy on par with SwinTransformer. We also release 9 practical classification models covering pedestrian, vehicle and OCR scenario.

+- 2022.4.21 Added the related [code](https://github.com/PaddlePaddle/PaddleClas/pull/1820/files) of the CVPR2022 oral paper [MixFormer](https://arxiv.org/pdf/2204.02557.pdf).

-- Data augmentation: Provide 8 data augmentation algorithms such as AutoAugment, Cutout, Cutmix, etc. with detailed introduction, code replication and evaluation of effectiveness in a unified experimental environment.

+- 2021.09.17 Add PP-LCNet series model developed by PaddleClas, these models show strong competitiveness on Intel CPUs.

+For the introduction of PP-LCNet, please refer to [paper](https://arxiv.org/pdf/2109.15099.pdf) or [PP-LCNet model introduction](docs/en/models/PP-LCNet_en.md). The metrics and pretrained model are available [here](docs/en/algorithm_introduction/ImageNet_models_en.md).

+- 2021.06.29 Add [Swin-transformer](docs/en/models/SwinTransformer_en.md)) series model,Highest top1 acc on ImageNet1k dataset reaches 87.2%, training, evaluation and inference are all supported. Pretrained models can be downloaded [here](docs/en/algorithm_introduction/ImageNet_models_en.md#16).

+- 2021.06.16 PaddleClas release/2.2. Add metric learning and vector search modules. Add product recognition, animation character recognition, vehicle recognition and logo recognition. Added 30 pretrained models of LeViT, Twins, TNT, DLA, HarDNet, and RedNet, and the accuracy is roughly the same as that of the paper.

+- [more](./docs/en/others/update_history_en.md)

+## Features

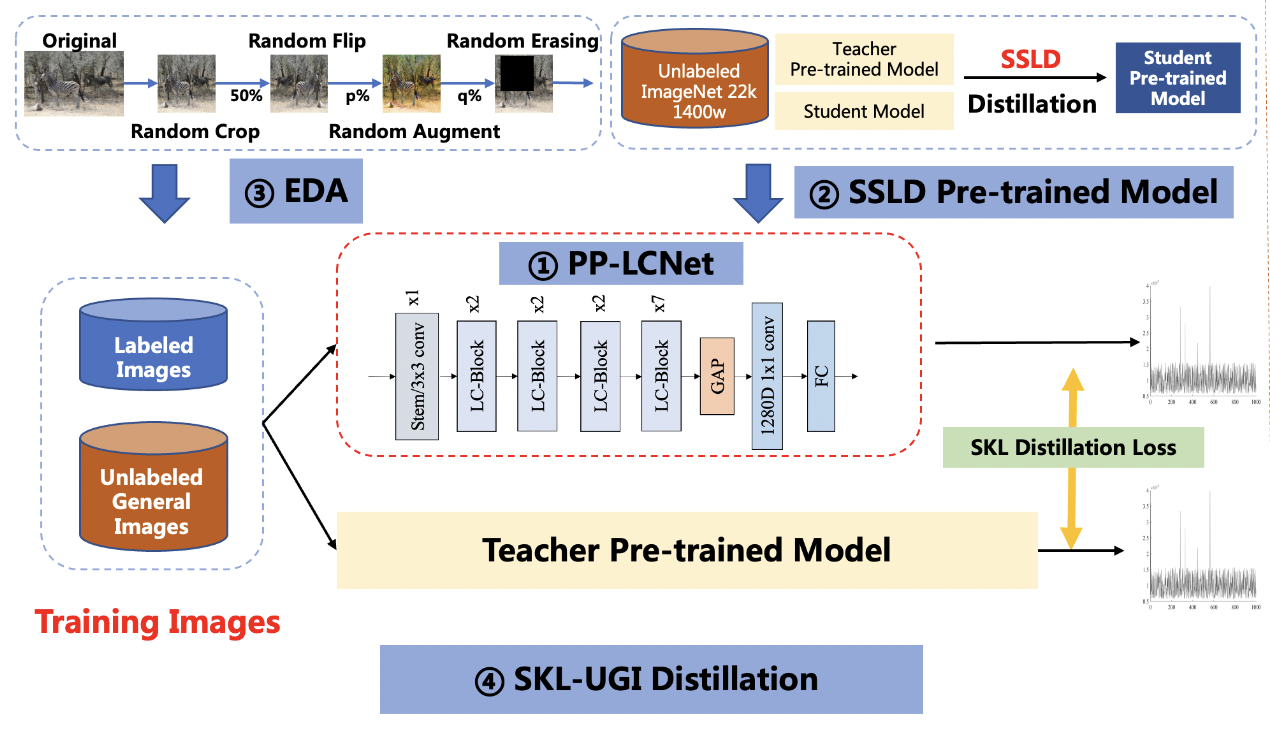

+PaddleClas release PP-HGNet、PP-LCNetv2、 PP-LCNet and **S**imple **S**emi-supervised **L**abel **D**istillation algorithms, and support plenty of

+image classification and image recognition algorithms.

+Based on th algorithms above, PaddleClas release PP-ShiTu image recognition system and [**P**ractical **U**ltra **L**ight-weight image **C**lassification solutions](docs/en/PULC/PULC_quickstart_en.md).

-

-

-

+

## Welcome to Join the Technical Exchange Group

@@ -48,41 +50,57 @@ Four sample solutions are provided, including product recognition, vehicle recog

## Quick Start

-Quick experience of image recognition:[Link](./docs/en/tutorials/quick_start_recognition_en.md)

+Quick experience of PP-ShiTu image recognition system:[Link](./docs/en/quick_start/quick_start_recognition_en.md)

+

+Quick experience of **P**ractical **U**ltra **L**ight-weight image **C**lassification models:[Link](docs/en/PULC/PULC_quickstart_en.md)

## Tutorials

-- [Quick Installation](./docs/en/tutorials/install_en.md)

-- [Quick Start of Recognition](./docs/en/tutorials/quick_start_recognition_en.md)

+- [Install Paddle](./docs/en/installation/install_paddle_en.md)

+- [Install PaddleClas Environment](./docs/en/installation/install_paddleclas_en.md)

+- [Practical Ultra Light-weight image Classification solutions](./docs/en/PULC/PULC_train_en.md)

+ - [PULC Quick Start](docs/en/PULC/PULC_quickstart_en.md)

+ - [PULC Model Zoo](docs/en/PULC/PULC_model_list_en.md)

+ - [PULC Classification Model of Someone or Nobody](docs/en/PULC/PULC_person_exists_en.md)

+ - [PULC Recognition Model of Person Attribute](docs/en/PULC/PULC_person_attribute_en.md)

+ - [PULC Classification Model of Wearing or Unwearing Safety Helmet](docs/en/PULC/PULC_safety_helmet_en.md)

+ - [PULC Classification Model of Traffic Sign](docs/en/PULC/PULC_traffic_sign_en.md)

+ - [PULC Recognition Model of Vehicle Attribute](docs/en/PULC/PULC_vehicle_attribute_en.md)

+ - [PULC Classification Model of Containing or Uncontaining Car](docs/en/PULC/PULC_car_exists_en.md)

+ - [PULC Classification Model of Text Image Orientation](docs/en/PULC/PULC_text_image_orientation_en.md)

+ - [PULC Classification Model of Textline Orientation](docs/en/PULC/PULC_textline_orientation_en.md)

+ - [PULC Classification Model of Language](docs/en/PULC/PULC_language_classification_en.md)

+- [Quick Start of Recognition](./docs/en/quick_start/quick_start_recognition_en.md)

- [Introduction to Image Recognition Systems](#Introduction_to_Image_Recognition_Systems)

-- [Demo images](#Demo_images)

+- [Image Recognition Demo images](#Rec_Demo_images)

+- [PULC demo images](#Clas_Demo_images)

- Algorithms Introduction

- - [Backbone Network and Pre-trained Model Library](./docs/en/ImageNet_models_en.md)

- - [Mainbody Detection](./docs/en/application/mainbody_detection_en.md)

- - [Image Classification](./docs/en/tutorials/image_classification_en.md)

- - [Feature Learning](./docs/en/application/feature_learning_en.md)

- - [Product Recognition](./docs/en/application/product_recognition_en.md)

- - [Vehicle Recognition](./docs/en/application/vehicle_recognition_en.md)

- - [Logo Recognition](./docs/en/application/logo_recognition_en.md)

- - [Animation Character Recognition](./docs/en/application/cartoon_character_recognition_en.md)

+ - [Backbone Network and Pre-trained Model Library](./docs/en/algorithm_introduction/ImageNet_models_en.md)

+ - [Mainbody Detection](./docs/en/image_recognition_pipeline/mainbody_detection_en.md)

+ - [Feature Learning](./docs/en/image_recognition_pipeline/feature_extraction_en.md)

- [Vector Search](./deploy/vector_search/README.md)

-- Models Training/Evaluation

- - [Image Classification](./docs/en/tutorials/getting_started_en.md)

- - [Feature Learning](./docs/en/tutorials/getting_started_retrieval_en.md)

- Inference Model Prediction

- - [Python Inference](./docs/en/inference.md)

+ - [Python Inference](./docs/en/inference_deployment/python_deploy_en.md)

- [C++ Classfication Inference](./deploy/cpp/readme_en.md), [C++ PP-ShiTu Inference](deploy/cpp_shitu/readme_en.md)

- Model Deploy (only support classification for now, recognition coming soon)

- [Hub Serving Deployment](./deploy/hubserving/readme_en.md)

- [Mobile Deployment](./deploy/lite/readme_en.md)

- - [Inference Using whl](./docs/en/whl_en.md)

+ - [Inference Using whl](./docs/en/inference_deployment/whl_deploy_en.md)

- Advanced Tutorial

- [Knowledge Distillation](./docs/en/advanced_tutorials/distillation/distillation_en.md)

- - [Model Quantization](./docs/en/extension/paddle_quantization_en.md)

- - [Data Augmentation](./docs/en/advanced_tutorials/image_augmentation/ImageAugment_en.md)

+ - [Model Quantization](./docs/en/algorithm_introduction/model_prune_quantization_en.md)

+ - [Data Augmentation](./docs/en/advanced_tutorials/DataAugmentation_en.md)

- [License](#License)

- [Contribution](#Contribution)

+

diff --git a/deploy/configs/PULC/car_exists/inference_car_exists.yaml b/deploy/configs/PULC/car_exists/inference_car_exists.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..b6733069d99b5622c83321bc628f3d70274ce8d4

--- /dev/null

+++ b/deploy/configs/PULC/car_exists/inference_car_exists.yaml

@@ -0,0 +1,36 @@

+Global:

+ infer_imgs: "./images/PULC/car_exists/objects365_00001507.jpeg"

+ inference_model_dir: "./models/car_exists_infer"

+ batch_size: 1

+ use_gpu: True

+ enable_mkldnn: False

+ cpu_num_threads: 10

+ enable_benchmark: True

+ use_fp16: False

+ ir_optim: True

+ use_tensorrt: False

+ gpu_mem: 8000

+ enable_profile: False

+

+PreProcess:

+ transform_ops:

+ - ResizeImage:

+ resize_short: 256

+ - CropImage:

+ size: 224

+ - NormalizeImage:

+ scale: 0.00392157

+ mean: [0.485, 0.456, 0.406]

+ std: [0.229, 0.224, 0.225]

+ order: ''

+ channel_num: 3

+ - ToCHWImage:

+

+PostProcess:

+ main_indicator: ThreshOutput

+ ThreshOutput:

+ threshold: 0.5

+ label_0: no_car

+ label_1: contains_car

+ SavePreLabel:

+ save_dir: ./pre_label/

diff --git a/deploy/configs/PULC/language_classification/inference_language_classification.yaml b/deploy/configs/PULC/language_classification/inference_language_classification.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..fb9fb6b6631e774e7486bcdb31c25621e2b7d790

--- /dev/null

+++ b/deploy/configs/PULC/language_classification/inference_language_classification.yaml

@@ -0,0 +1,33 @@

+Global:

+ infer_imgs: "./images/PULC/language_classification/word_35404.png"

+ inference_model_dir: "./models/language_classification_infer"

+ batch_size: 1

+ use_gpu: True

+ enable_mkldnn: False

+ cpu_num_threads: 10

+ enable_benchmark: True

+ use_fp16: False

+ ir_optim: True

+ use_tensorrt: False

+ gpu_mem: 8000

+ enable_profile: False

+

+PreProcess:

+ transform_ops:

+ - ResizeImage:

+ size: [160, 80]

+ - NormalizeImage:

+ scale: 0.00392157

+ mean: [0.485, 0.456, 0.406]

+ std: [0.229, 0.224, 0.225]

+ order: ''

+ channel_num: 3

+ - ToCHWImage:

+

+PostProcess:

+ main_indicator: Topk

+ Topk:

+ topk: 2

+ class_id_map_file: "../ppcls/utils/PULC_label_list/language_classification_label_list.txt"

+ SavePreLabel:

+ save_dir: ./pre_label/

diff --git a/deploy/configs/PULC/person_attribute/inference_person_attribute.yaml b/deploy/configs/PULC/person_attribute/inference_person_attribute.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..d5be2a3568291d0a31a7026974fc22ecf54a8f4c

--- /dev/null

+++ b/deploy/configs/PULC/person_attribute/inference_person_attribute.yaml

@@ -0,0 +1,32 @@

+Global:

+ infer_imgs: "./images/PULC/person_attribute/090004.jpg"

+ inference_model_dir: "./models/person_attribute_infer"

+ batch_size: 1

+ use_gpu: True

+ enable_mkldnn: True

+ cpu_num_threads: 10

+ benchmark: False

+ use_fp16: False

+ ir_optim: True

+ use_tensorrt: False

+ gpu_mem: 8000

+ enable_profile: False

+

+PreProcess:

+ transform_ops:

+ - ResizeImage:

+ size: [192, 256]

+ - NormalizeImage:

+ scale: 1.0/255.0

+ mean: [0.485, 0.456, 0.406]

+ std: [0.229, 0.224, 0.225]

+ order: ''

+ channel_num: 3

+ - ToCHWImage:

+

+PostProcess:

+ main_indicator: PersonAttribute

+ PersonAttribute:

+ threshold: 0.5 #default threshold

+ glasses_threshold: 0.3 #threshold only for glasses

+ hold_threshold: 0.6 #threshold only for hold

diff --git a/deploy/configs/PULC/person/inference_person_cls.yaml b/deploy/configs/PULC/person_exists/inference_person_exists.yaml

similarity index 81%

rename from deploy/configs/PULC/person/inference_person_cls.yaml

rename to deploy/configs/PULC/person_exists/inference_person_exists.yaml

index a70f663a792fcdcab3b7d45059f2afe0b1efbf07..3df94a80c7c75814e778e5320a31b20a8a7eb742 100644

--- a/deploy/configs/PULC/person/inference_person_cls.yaml

+++ b/deploy/configs/PULC/person_exists/inference_person_exists.yaml

@@ -1,6 +1,6 @@

Global:

- infer_imgs: "./images/PULC/person/objects365_02035329.jpg"

- inference_model_dir: "./models/person_cls_infer"

+ infer_imgs: "./images/PULC/person_exists/objects365_02035329.jpg"

+ inference_model_dir: "./models/person_exists_infer"

batch_size: 1

use_gpu: True

enable_mkldnn: False

@@ -29,7 +29,7 @@ PreProcess:

PostProcess:

main_indicator: ThreshOutput

ThreshOutput:

- threshold: 0.9

+ threshold: 0.5

label_0: nobody

label_1: someone

SavePreLabel:

diff --git a/deploy/configs/PULC/safety_helmet/inference_safety_helmet.yaml b/deploy/configs/PULC/safety_helmet/inference_safety_helmet.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..66a4cebb359a9b1f03a205ee6a031ca6464cffa8

--- /dev/null

+++ b/deploy/configs/PULC/safety_helmet/inference_safety_helmet.yaml

@@ -0,0 +1,36 @@

+Global:

+ infer_imgs: "./images/PULC/safety_helmet/safety_helmet_test_1.png"

+ inference_model_dir: "./models/safety_helmet_infer"

+ batch_size: 1

+ use_gpu: True

+ enable_mkldnn: False

+ cpu_num_threads: 10

+ enable_benchmark: True

+ use_fp16: False

+ ir_optim: True

+ use_tensorrt: False

+ gpu_mem: 8000

+ enable_profile: False

+

+PreProcess:

+ transform_ops:

+ - ResizeImage:

+ resize_short: 256

+ - CropImage:

+ size: 224

+ - NormalizeImage:

+ scale: 0.00392157

+ mean: [0.485, 0.456, 0.406]

+ std: [0.229, 0.224, 0.225]

+ order: ''

+ channel_num: 3

+ - ToCHWImage:

+

+PostProcess:

+ main_indicator: ThreshOutput

+ ThreshOutput:

+ threshold: 0.5

+ label_0: wearing_helmet

+ label_1: unwearing_helmet

+ SavePreLabel:

+ save_dir: ./pre_label/

diff --git a/deploy/configs/PULC/text_image_orientation/inference_text_image_orientation.yaml b/deploy/configs/PULC/text_image_orientation/inference_text_image_orientation.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..c6c3969ffa627288fe58fab28b3fe1cbffe9dd03

--- /dev/null

+++ b/deploy/configs/PULC/text_image_orientation/inference_text_image_orientation.yaml

@@ -0,0 +1,35 @@

+Global:

+ infer_imgs: "./images/PULC/text_image_orientation/img_rot0_demo.jpg"

+ inference_model_dir: "./models/text_image_orientation_infer"

+ batch_size: 1

+ use_gpu: True

+ enable_mkldnn: False

+ cpu_num_threads: 10

+ enable_benchmark: True

+ use_fp16: False

+ ir_optim: True

+ use_tensorrt: False

+ gpu_mem: 8000

+ enable_profile: False

+

+PreProcess:

+ transform_ops:

+ - ResizeImage:

+ resize_short: 256

+ - CropImage:

+ size: 224

+ - NormalizeImage:

+ scale: 0.00392157

+ mean: [0.485, 0.456, 0.406]

+ std: [0.229, 0.224, 0.225]

+ order: ''

+ channel_num: 3

+ - ToCHWImage:

+

+PostProcess:

+ main_indicator: Topk

+ Topk:

+ topk: 2

+ class_id_map_file: "../ppcls/utils/PULC_label_list/text_image_orientation_label_list.txt"

+ SavePreLabel:

+ save_dir: ./pre_label/

diff --git a/deploy/configs/PULC/textline_orientation/inference_textline_orientation.yaml b/deploy/configs/PULC/textline_orientation/inference_textline_orientation.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..108b3dd53a95c06345bdd7ccd34b2e5252d2df19

--- /dev/null

+++ b/deploy/configs/PULC/textline_orientation/inference_textline_orientation.yaml

@@ -0,0 +1,33 @@

+Global:

+ infer_imgs: "./images/PULC/textline_orientation/textline_orientation_test_0_0.png"

+ inference_model_dir: "./models/textline_orientation_infer"

+ batch_size: 1

+ use_gpu: True

+ enable_mkldnn: True

+ cpu_num_threads: 10

+ enable_benchmark: True

+ use_fp16: False

+ ir_optim: True

+ use_tensorrt: False

+ gpu_mem: 8000

+ enable_profile: False

+

+PreProcess:

+ transform_ops:

+ - ResizeImage:

+ size: [160, 80]

+ - NormalizeImage:

+ scale: 0.00392157

+ mean: [0.485, 0.456, 0.406]

+ std: [0.229, 0.224, 0.225]

+ order: ''

+ channel_num: 3

+ - ToCHWImage:

+

+PostProcess:

+ main_indicator: Topk

+ Topk:

+ topk: 1

+ class_id_map_file: "../ppcls/utils/PULC_label_list/textline_orientation_label_list.txt"

+ SavePreLabel:

+ save_dir: ./pre_label/

diff --git a/deploy/configs/PULC/traffic_sign/inference_traffic_sign.yaml b/deploy/configs/PULC/traffic_sign/inference_traffic_sign.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..53699718b4fdd38da86eaee4cccc584dcc87d2b7

--- /dev/null

+++ b/deploy/configs/PULC/traffic_sign/inference_traffic_sign.yaml

@@ -0,0 +1,35 @@

+Global:

+ infer_imgs: "./images/PULC/traffic_sign/99603_17806.jpg"

+ inference_model_dir: "./models/traffic_sign_infer"

+ batch_size: 1

+ use_gpu: True

+ enable_mkldnn: True

+ cpu_num_threads: 10

+ benchmark: False

+ use_fp16: False

+ ir_optim: True

+ use_tensorrt: False

+ gpu_mem: 8000

+ enable_profile: False

+

+PreProcess:

+ transform_ops:

+ - ResizeImage:

+ resize_short: 256

+ - CropImage:

+ size: 224

+ - NormalizeImage:

+ scale: 0.00392157

+ mean: [0.485, 0.456, 0.406]

+ std: [0.229, 0.224, 0.225]

+ order: ''

+ channel_num: 3

+ - ToCHWImage:

+

+PostProcess:

+ main_indicator: Topk

+ Topk:

+ topk: 5

+ class_id_map_file: "../ppcls/utils/PULC_label_list/traffic_sign_label_list.txt"

+ SavePreLabel:

+ save_dir: ./pre_label/

diff --git a/deploy/configs/PULC/vehicle_attribute/inference_vehicle_attribute.yaml b/deploy/configs/PULC/vehicle_attribute/inference_vehicle_attribute.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..14ae348d09faca113d5863fbb57f066675b3f447

--- /dev/null

+++ b/deploy/configs/PULC/vehicle_attribute/inference_vehicle_attribute.yaml

@@ -0,0 +1,32 @@

+Global:

+ infer_imgs: "./images/PULC/vehicle_attribute/0002_c002_00030670_0.jpg"

+ inference_model_dir: "./models/vehicle_attribute_infer"

+ batch_size: 1

+ use_gpu: True

+ enable_mkldnn: True

+ cpu_num_threads: 10

+ benchmark: False

+ use_fp16: False

+ ir_optim: True

+ use_tensorrt: False

+ gpu_mem: 8000

+ enable_profile: False

+

+PreProcess:

+ transform_ops:

+ - ResizeImage:

+ size: [256, 192]

+ - NormalizeImage:

+ scale: 1.0/255.0

+ mean: [0.485, 0.456, 0.406]

+ std: [0.229, 0.224, 0.225]

+ order: ''

+ channel_num: 3

+ - ToCHWImage:

+

+PostProcess:

+ main_indicator: VehicleAttribute

+ VehicleAttribute:

+ color_threshold: 0.5

+ type_threshold: 0.5

+

diff --git a/deploy/configs/inference_attr.yaml b/deploy/configs/inference_attr.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..88f73db5419414812450b768ac783982386f0a78

--- /dev/null

+++ b/deploy/configs/inference_attr.yaml

@@ -0,0 +1,33 @@

+Global:

+ infer_imgs: "./images/Pedestrain_Attr.jpg"

+ inference_model_dir: "../inference/"

+ batch_size: 1

+ use_gpu: True

+ enable_mkldnn: False

+ cpu_num_threads: 10

+ enable_benchmark: True

+ use_fp16: False

+ ir_optim: True

+ use_tensorrt: False

+ gpu_mem: 8000

+ enable_profile: False

+

+PreProcess:

+ transform_ops:

+ - ResizeImage:

+ size: [192, 256]

+ - NormalizeImage:

+ scale: 1.0/255.0

+ mean: [0.485, 0.456, 0.406]

+ std: [0.229, 0.224, 0.225]

+ order: ''

+ channel_num: 3

+ - ToCHWImage:

+

+PostProcess:

+ main_indicator: PersonAttribute

+ PersonAttribute:

+ threshold: 0.5 #default threshold

+ glasses_threshold: 0.3 #threshold only for glasses

+ hold_threshold: 0.6 #threshold only for hold

+

diff --git a/deploy/configs/inference_cls.yaml b/deploy/configs/inference_cls.yaml

index fc0f0fe67aa628e504bb6fcb743f29fd020548cc..d9181278cc617822f98e4966abf0d12ceca498a4 100644

--- a/deploy/configs/inference_cls.yaml

+++ b/deploy/configs/inference_cls.yaml

@@ -1,5 +1,5 @@

Global:

- infer_imgs: "./images/ILSVRC2012_val_00000010.jpeg"

+ infer_imgs: "./images/ImageNet/ILSVRC2012_val_00000010.jpeg"

inference_model_dir: "./models"

batch_size: 1

use_gpu: True

@@ -32,4 +32,4 @@ PostProcess:

topk: 5

class_id_map_file: "../ppcls/utils/imagenet1k_label_list.txt"

SavePreLabel:

- save_dir: ./pre_label/

\ No newline at end of file

+ save_dir: ./pre_label/

diff --git a/deploy/configs/inference_cls_ch4.yaml b/deploy/configs/inference_cls_ch4.yaml

index 9b740ed8293c3d66a325682cafc42e2b1415df4d..85f9acb29a88772da63abe302354f5e17a9c3e59 100644

--- a/deploy/configs/inference_cls_ch4.yaml

+++ b/deploy/configs/inference_cls_ch4.yaml

@@ -1,5 +1,5 @@

Global:

- infer_imgs: "./images/ILSVRC2012_val_00000010.jpeg"

+ infer_imgs: "./images/ImageNet/ILSVRC2012_val_00000010.jpeg"

inference_model_dir: "./models"

batch_size: 1

use_gpu: True

@@ -32,4 +32,4 @@ PostProcess:

topk: 5

class_id_map_file: "../ppcls/utils/imagenet1k_label_list.txt"

SavePreLabel:

- save_dir: ./pre_label/

\ No newline at end of file

+ save_dir: ./pre_label/

diff --git a/deploy/images/ILSVRC2012_val_00000010.jpeg b/deploy/images/ImageNet/ILSVRC2012_val_00000010.jpeg

similarity index 100%

rename from deploy/images/ILSVRC2012_val_00000010.jpeg

rename to deploy/images/ImageNet/ILSVRC2012_val_00000010.jpeg

diff --git a/deploy/images/ILSVRC2012_val_00010010.jpeg b/deploy/images/ImageNet/ILSVRC2012_val_00010010.jpeg

similarity index 100%

rename from deploy/images/ILSVRC2012_val_00010010.jpeg

rename to deploy/images/ImageNet/ILSVRC2012_val_00010010.jpeg

diff --git a/deploy/images/ILSVRC2012_val_00020010.jpeg b/deploy/images/ImageNet/ILSVRC2012_val_00020010.jpeg

similarity index 100%

rename from deploy/images/ILSVRC2012_val_00020010.jpeg

rename to deploy/images/ImageNet/ILSVRC2012_val_00020010.jpeg

diff --git a/deploy/images/ILSVRC2012_val_00030010.jpeg b/deploy/images/ImageNet/ILSVRC2012_val_00030010.jpeg

similarity index 100%

rename from deploy/images/ILSVRC2012_val_00030010.jpeg

rename to deploy/images/ImageNet/ILSVRC2012_val_00030010.jpeg

diff --git a/deploy/images/PULC/car_exists/objects365_00001507.jpeg b/deploy/images/PULC/car_exists/objects365_00001507.jpeg

new file mode 100644

index 0000000000000000000000000000000000000000..9959954b6b8bf27589e1d2081f86c6078d16e2c1

Binary files /dev/null and b/deploy/images/PULC/car_exists/objects365_00001507.jpeg differ

diff --git a/deploy/images/PULC/car_exists/objects365_00001521.jpeg b/deploy/images/PULC/car_exists/objects365_00001521.jpeg

new file mode 100644

index 0000000000000000000000000000000000000000..ea65b3108ec0476ce952b3221c31ac54fcef161d

Binary files /dev/null and b/deploy/images/PULC/car_exists/objects365_00001521.jpeg differ

diff --git a/deploy/images/PULC/language_classification/word_17.png b/deploy/images/PULC/language_classification/word_17.png

new file mode 100644

index 0000000000000000000000000000000000000000..c0cd74632460e01676fbc5a43b220c0a7f7d0474

Binary files /dev/null and b/deploy/images/PULC/language_classification/word_17.png differ

diff --git a/deploy/images/PULC/language_classification/word_20.png b/deploy/images/PULC/language_classification/word_20.png

new file mode 100644

index 0000000000000000000000000000000000000000..f9149670e8a2aa086c91451442f63a727661fd7d

Binary files /dev/null and b/deploy/images/PULC/language_classification/word_20.png differ

diff --git a/deploy/images/PULC/language_classification/word_35404.png b/deploy/images/PULC/language_classification/word_35404.png

new file mode 100644

index 0000000000000000000000000000000000000000..9e1789ab47aefecac8eaf1121decfc6a8cfb1e8b

Binary files /dev/null and b/deploy/images/PULC/language_classification/word_35404.png differ

diff --git a/deploy/images/PULC/person_attribute/090004.jpg b/deploy/images/PULC/person_attribute/090004.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..140694eeec3d2925303e8c0d544ef5979cd78219

Binary files /dev/null and b/deploy/images/PULC/person_attribute/090004.jpg differ

diff --git a/deploy/images/PULC/person_attribute/090007.jpg b/deploy/images/PULC/person_attribute/090007.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..9fea2e7c9e0047a8b59606877ad41fe24bf2e24c

Binary files /dev/null and b/deploy/images/PULC/person_attribute/090007.jpg differ

diff --git a/deploy/images/PULC/person/objects365_01780782.jpg b/deploy/images/PULC/person_exists/objects365_01780782.jpg

similarity index 100%

rename from deploy/images/PULC/person/objects365_01780782.jpg

rename to deploy/images/PULC/person_exists/objects365_01780782.jpg

diff --git a/deploy/images/PULC/person/objects365_02035329.jpg b/deploy/images/PULC/person_exists/objects365_02035329.jpg

similarity index 100%

rename from deploy/images/PULC/person/objects365_02035329.jpg

rename to deploy/images/PULC/person_exists/objects365_02035329.jpg

diff --git a/deploy/images/PULC/safety_helmet/safety_helmet_test_1.png b/deploy/images/PULC/safety_helmet/safety_helmet_test_1.png

new file mode 100644

index 0000000000000000000000000000000000000000..c28f54f77d54df6e68e471538846b01db4387e08

Binary files /dev/null and b/deploy/images/PULC/safety_helmet/safety_helmet_test_1.png differ

diff --git a/deploy/images/PULC/safety_helmet/safety_helmet_test_2.png b/deploy/images/PULC/safety_helmet/safety_helmet_test_2.png

new file mode 100644

index 0000000000000000000000000000000000000000..8e784af808afb58d67fdb3e277dfeebd134ee846

Binary files /dev/null and b/deploy/images/PULC/safety_helmet/safety_helmet_test_2.png differ

diff --git a/deploy/images/PULC/text_image_orientation/img_rot0_demo.jpg b/deploy/images/PULC/text_image_orientation/img_rot0_demo.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..412d41956ba48c8e3243bdeff746d389be7e762b

Binary files /dev/null and b/deploy/images/PULC/text_image_orientation/img_rot0_demo.jpg differ

diff --git a/deploy/images/PULC/text_image_orientation/img_rot180_demo.jpg b/deploy/images/PULC/text_image_orientation/img_rot180_demo.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..f4725b96698e2ac222ae9d4830d8f29a33322443

Binary files /dev/null and b/deploy/images/PULC/text_image_orientation/img_rot180_demo.jpg differ

diff --git a/deploy/images/PULC/textline_orientation/textline_orientation_test_0_0.png b/deploy/images/PULC/textline_orientation/textline_orientation_test_0_0.png

new file mode 100644

index 0000000000000000000000000000000000000000..4b8d24d29ff0f8b4befff6bf943d506c36061d4d

Binary files /dev/null and b/deploy/images/PULC/textline_orientation/textline_orientation_test_0_0.png differ

diff --git a/deploy/images/PULC/textline_orientation/textline_orientation_test_0_1.png b/deploy/images/PULC/textline_orientation/textline_orientation_test_0_1.png

new file mode 100644

index 0000000000000000000000000000000000000000..42ad5234973679e65be6054f90c1cc7c0f989bd2

Binary files /dev/null and b/deploy/images/PULC/textline_orientation/textline_orientation_test_0_1.png differ

diff --git a/deploy/images/PULC/textline_orientation/textline_orientation_test_1_0.png b/deploy/images/PULC/textline_orientation/textline_orientation_test_1_0.png

new file mode 100644

index 0000000000000000000000000000000000000000..ac2447842dd0fac260c0d3c6e0d156dda9890923

Binary files /dev/null and b/deploy/images/PULC/textline_orientation/textline_orientation_test_1_0.png differ

diff --git a/deploy/images/PULC/textline_orientation/textline_orientation_test_1_1.png b/deploy/images/PULC/textline_orientation/textline_orientation_test_1_1.png

new file mode 100644

index 0000000000000000000000000000000000000000..7d5b75f7e5bbeabded56eba1b4b566c4ca019590

Binary files /dev/null and b/deploy/images/PULC/textline_orientation/textline_orientation_test_1_1.png differ

diff --git a/deploy/images/PULC/traffic_sign/100999_83928.jpg b/deploy/images/PULC/traffic_sign/100999_83928.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..6f32ed5ae2d8483d29986e3a45db1789da2a4d43

Binary files /dev/null and b/deploy/images/PULC/traffic_sign/100999_83928.jpg differ

diff --git a/deploy/images/PULC/traffic_sign/99603_17806.jpg b/deploy/images/PULC/traffic_sign/99603_17806.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..c792fdf6eb64395fffaf8289a1ec14d47279860e

Binary files /dev/null and b/deploy/images/PULC/traffic_sign/99603_17806.jpg differ

diff --git a/deploy/images/PULC/vehicle_attribute/0002_c002_00030670_0.jpg b/deploy/images/PULC/vehicle_attribute/0002_c002_00030670_0.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..bb5de9fc6ff99550bf9bff8d4a9f0d0e0fe18c06

Binary files /dev/null and b/deploy/images/PULC/vehicle_attribute/0002_c002_00030670_0.jpg differ

diff --git a/deploy/images/PULC/vehicle_attribute/0014_c012_00040750_0.jpg b/deploy/images/PULC/vehicle_attribute/0014_c012_00040750_0.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..76207d43ce597a1079c523dca0c32923bf15db19

Binary files /dev/null and b/deploy/images/PULC/vehicle_attribute/0014_c012_00040750_0.jpg differ

diff --git a/deploy/images/Pedestrain_Attr.jpg b/deploy/images/Pedestrain_Attr.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..6a87e856af8c17a3b93617b93ea517b91c508619

Binary files /dev/null and b/deploy/images/Pedestrain_Attr.jpg differ

diff --git a/deploy/paddle2onnx/readme.md b/deploy/paddle2onnx/readme.md

index d1307ea84e3d7a1465c7c464d3b41dfa7613a046..bacc202806bf1a60e85790969edcb70f1489f7df 100644

--- a/deploy/paddle2onnx/readme.md

+++ b/deploy/paddle2onnx/readme.md

@@ -1,53 +1,59 @@

# paddle2onnx 模型转化与预测

-本章节介绍 ResNet50_vd 模型如何转化为 ONNX 模型,并基于 ONNX 引擎预测。

+## 目录

+

+- [paddle2onnx 模型转化与预测](#paddle2onnx-模型转化与预测)

+ - [1. 环境准备](#1-环境准备)

+ - [2. 模型转换](#2-模型转换)

+ - [3. onnx 预测](#3-onnx-预测)

## 1. 环境准备

需要准备 Paddle2ONNX 模型转化环境,和 ONNX 模型预测环境。

-Paddle2ONNX 支持将 PaddlePaddle 模型格式转化到 ONNX 模型格式,算子目前稳定支持导出 ONNX Opset 9~11,部分Paddle算子支持更低的ONNX Opset转换。

-更多细节可参考 [Paddle2ONNX](https://github.com/PaddlePaddle/Paddle2ONNX/blob/develop/README_zh.md)

+Paddle2ONNX 支持将 PaddlePaddle inference 模型格式转化到 ONNX 模型格式,算子目前稳定支持导出 ONNX Opset 9~11。

+更多细节可参考 [Paddle2ONNX](https://github.com/PaddlePaddle/Paddle2ONNX#paddle2onnx)

- 安装 Paddle2ONNX

-```

-python3.7 -m pip install paddle2onnx

-```

+ ```shell

+ python3.7 -m pip install paddle2onnx

+ ```

-- 安装 ONNX 运行时

-```

-python3.7 -m pip install onnxruntime

-```

+- 安装 ONNX 推理引擎

+ ```shell

+ python3.7 -m pip install onnxruntime

+ ```

+下面以 ResNet50_vd 为例,介绍如何将 PaddlePaddle inference 模型转换为 ONNX 模型,并基于 ONNX 引擎预测。

## 2. 模型转换

- ResNet50_vd inference模型下载

-```

-cd deploy

-mkdir models && cd models

-wget -nc https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/inference/ResNet50_vd_infer.tar && tar xf ResNet50_vd_infer.tar

-cd ..

-```

+ ```shell

+ cd deploy

+ mkdir models && cd models

+ wget -nc https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/inference/ResNet50_vd_infer.tar && tar xf ResNet50_vd_infer.tar

+ cd ..

+ ```

- 模型转换

-使用 Paddle2ONNX 将 Paddle 静态图模型转换为 ONNX 模型格式:

-```

-paddle2onnx --model_dir=./models/ResNet50_vd_infer/ \

---model_filename=inference.pdmodel \

---params_filename=inference.pdiparams \

---save_file=./models/ResNet50_vd_infer/inference.onnx \

---opset_version=10 \

---enable_onnx_checker=True

-```

+ 使用 Paddle2ONNX 将 Paddle 静态图模型转换为 ONNX 模型格式:

+ ```shell

+ paddle2onnx --model_dir=./models/ResNet50_vd_infer/ \

+ --model_filename=inference.pdmodel \

+ --params_filename=inference.pdiparams \

+ --save_file=./models/ResNet50_vd_infer/inference.onnx \

+ --opset_version=10 \

+ --enable_onnx_checker=True

+ ```

-执行完毕后,ONNX 模型 `inference.onnx` 会被保存在 `./models/ResNet50_vd_infer/` 路径下

+转换完毕后,生成的ONNX 模型 `inference.onnx` 会被保存在 `./models/ResNet50_vd_infer/` 路径下

## 3. onnx 预测

执行如下命令:

-```

+```shell

python3.7 python/predict_cls.py \

-c configs/inference_cls.yaml \

-o Global.use_onnx=True \

diff --git a/deploy/paddle2onnx/readme_en.md b/deploy/paddle2onnx/readme_en.md

new file mode 100644

index 0000000000000000000000000000000000000000..6df13e5fe31805d642432dea8526661e82b6e95b

--- /dev/null

+++ b/deploy/paddle2onnx/readme_en.md

@@ -0,0 +1,59 @@

+# Paddle2ONNX: Converting To ONNX and Deployment

+

+This section introduce that how to convert the Paddle Inference Model ResNet50_vd to ONNX model and deployment based on ONNX engine.

+

+## 1. Installation

+

+First, you need to install Paddle2ONNX and onnxruntime. Paddle2ONNX is a toolkit to convert Paddle Inference Model to ONNX model. Please refer to [Paddle2ONNX](https://github.com/PaddlePaddle/Paddle2ONNX/blob/develop/README_en.md) for more information.

+

+- Paddle2ONNX Installation

+```

+python3.7 -m pip install paddle2onnx

+```

+

+- ONNX Installation

+```

+python3.7 -m pip install onnxruntime

+```

+

+## 2. Converting to ONNX

+

+Download the Paddle Inference Model ResNet50_vd:

+

+```

+cd deploy

+mkdir models && cd models

+wget -nc https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/inference/ResNet50_vd_infer.tar && tar xf ResNet50_vd_infer.tar

+cd ..

+```

+

+Converting to ONNX model:

+

+```

+paddle2onnx --model_dir=./models/ResNet50_vd_infer/ \

+--model_filename=inference.pdmodel \

+--params_filename=inference.pdiparams \

+--save_file=./models/ResNet50_vd_infer/inference.onnx \

+--opset_version=10 \

+--enable_onnx_checker=True

+```

+

+After running the above command, the ONNX model file converted would be save in `./models/ResNet50_vd_infer/`.

+

+## 3. Deployment

+

+Deployment with ONNX model, command is as shown below.

+

+```

+python3.7 python/predict_cls.py \

+-c configs/inference_cls.yaml \

+-o Global.use_onnx=True \

+-o Global.use_gpu=False \

+-o Global.inference_model_dir=./models/ResNet50_vd_infer

+```

+

+The prediction results:

+

+```

+ILSVRC2012_val_00000010.jpeg: class id(s): [153, 204, 229, 332, 155], score(s): [0.69, 0.10, 0.02, 0.01, 0.01], label_name(s): ['Maltese dog, Maltese terrier, Maltese', 'Lhasa, Lhasa apso', 'Old English sheepdog, bobtail', 'Angora, Angora rabbit', 'Shih-Tzu']

+```

diff --git a/deploy/paddleserving/build_server.sh b/deploy/paddleserving/build_server.sh

new file mode 100644

index 0000000000000000000000000000000000000000..1329a3684ff72862858ee25c0a938bd61ff654ae

--- /dev/null

+++ b/deploy/paddleserving/build_server.sh

@@ -0,0 +1,88 @@

+# 使用镜像:

+# registry.baidubce.com/paddlepaddle/paddle:latest-dev-cuda10.1-cudnn7-gcc82

+

+# 编译Serving Server:

+

+# client和app可以直接使用release版本

+

+# server因为加入了自定义OP,需要重新编译

+

+# 默认编译时的${PWD}=PaddleClas/deploy/paddleserving/

+

+python_name=${1:-'python'}

+

+apt-get update

+apt install -y libcurl4-openssl-dev libbz2-dev

+wget -nc https://paddle-serving.bj.bcebos.com/others/centos_ssl.tar

+tar xf centos_ssl.tar

+rm -rf centos_ssl.tar

+mv libcrypto.so.1.0.2k /usr/lib/libcrypto.so.1.0.2k

+mv libssl.so.1.0.2k /usr/lib/libssl.so.1.0.2k

+ln -sf /usr/lib/libcrypto.so.1.0.2k /usr/lib/libcrypto.so.10

+ln -sf /usr/lib/libssl.so.1.0.2k /usr/lib/libssl.so.10

+ln -sf /usr/lib/libcrypto.so.10 /usr/lib/libcrypto.so

+ln -sf /usr/lib/libssl.so.10 /usr/lib/libssl.so

+

+# 安装go依赖

+rm -rf /usr/local/go

+wget -qO- https://paddle-ci.cdn.bcebos.com/go1.17.2.linux-amd64.tar.gz | tar -xz -C /usr/local

+export GOROOT=/usr/local/go

+export GOPATH=/root/gopath

+export PATH=$PATH:$GOPATH/bin:$GOROOT/bin

+go env -w GO111MODULE=on

+go env -w GOPROXY=https://goproxy.cn,direct

+go install github.com/grpc-ecosystem/grpc-gateway/protoc-gen-grpc-gateway@v1.15.2

+go install github.com/grpc-ecosystem/grpc-gateway/protoc-gen-swagger@v1.15.2

+go install github.com/golang/protobuf/protoc-gen-go@v1.4.3

+go install google.golang.org/grpc@v1.33.0

+go env -w GO111MODULE=auto

+

+# 下载opencv库

+wget https://paddle-qa.bj.bcebos.com/PaddleServing/opencv3.tar.gz

+tar -xvf opencv3.tar.gz

+rm -rf opencv3.tar.gz

+export OPENCV_DIR=$PWD/opencv3

+

+# clone Serving

+git clone https://github.com/PaddlePaddle/Serving.git -b develop --depth=1

+

+cd Serving # PaddleClas/deploy/paddleserving/Serving

+export Serving_repo_path=$PWD

+git submodule update --init --recursive

+${python_name} -m pip install -r python/requirements.txt

+

+# set env

+export PYTHON_INCLUDE_DIR=$(${python_name} -c "from distutils.sysconfig import get_python_inc; print(get_python_inc())")

+export PYTHON_LIBRARIES=$(${python_name} -c "import distutils.sysconfig as sysconfig; print(sysconfig.get_config_var('LIBDIR'))")

+export PYTHON_EXECUTABLE=`which ${python_name}`

+

+export CUDA_PATH='/usr/local/cuda'

+export CUDNN_LIBRARY='/usr/local/cuda/lib64/'

+export CUDA_CUDART_LIBRARY='/usr/local/cuda/lib64/'

+export TENSORRT_LIBRARY_PATH='/usr/local/TensorRT6-cuda10.1-cudnn7/targets/x86_64-linux-gnu/'

+

+# cp 自定义OP代码

+\cp ../preprocess/general_clas_op.* ${Serving_repo_path}/core/general-server/op

+\cp ../preprocess/preprocess_op.* ${Serving_repo_path}/core/predictor/tools/pp_shitu_tools

+

+# 编译Server

+mkdir server-build-gpu-opencv

+cd server-build-gpu-opencv

+cmake -DPYTHON_INCLUDE_DIR=$PYTHON_INCLUDE_DIR \

+-DPYTHON_LIBRARIES=$PYTHON_LIBRARIES \

+-DPYTHON_EXECUTABLE=$PYTHON_EXECUTABLE \

+-DCUDA_TOOLKIT_ROOT_DIR=${CUDA_PATH} \

+-DCUDNN_LIBRARY=${CUDNN_LIBRARY} \

+-DCUDA_CUDART_LIBRARY=${CUDA_CUDART_LIBRARY} \

+-DTENSORRT_ROOT=${TENSORRT_LIBRARY_PATH} \

+-DOPENCV_DIR=${OPENCV_DIR} \

+-DWITH_OPENCV=ON \

+-DSERVER=ON \

+-DWITH_GPU=ON ..

+make -j32

+

+${python_name} -m pip install python/dist/paddle*

+

+# export SERVING_BIN

+export SERVING_BIN=$PWD/core/general-server/serving

+cd ../../

\ No newline at end of file

diff --git a/deploy/paddleserving/config.yml b/deploy/paddleserving/config.yml

index d9f464dd093d5a3d0ac34a61f4af17e3792fcd86..92d8297f9f23a4082cb0a499ca4c172e71d79caf 100644

--- a/deploy/paddleserving/config.yml

+++ b/deploy/paddleserving/config.yml

@@ -30,4 +30,4 @@ op:

client_type: local_predictor

#Fetch结果列表,以client_config中fetch_var的alias_name为准

- fetch_list: ["prediction"]

+ fetch_list: ["prediction"]

diff --git a/deploy/paddleserving/preprocess/general_clas_op.cpp b/deploy/paddleserving/preprocess/general_clas_op.cpp

new file mode 100644

index 0000000000000000000000000000000000000000..e0ab48fa52da70a558b34e7ab1deda52675e99bc

--- /dev/null

+++ b/deploy/paddleserving/preprocess/general_clas_op.cpp

@@ -0,0 +1,206 @@

+// Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#include "core/general-server/op/general_clas_op.h"

+#include "core/predictor/framework/infer.h"

+#include "core/predictor/framework/memory.h"

+#include "core/predictor/framework/resource.h"

+#include "core/util/include/timer.h"

+#include

+#include

+#include

+#include

+

+namespace baidu {

+namespace paddle_serving {

+namespace serving {

+

+using baidu::paddle_serving::Timer;

+using baidu::paddle_serving::predictor::MempoolWrapper;

+using baidu::paddle_serving::predictor::general_model::Tensor;

+using baidu::paddle_serving::predictor::general_model::Response;

+using baidu::paddle_serving::predictor::general_model::Request;

+using baidu::paddle_serving::predictor::InferManager;

+using baidu::paddle_serving::predictor::PaddleGeneralModelConfig;

+

+int GeneralClasOp::inference() {

+ VLOG(2) << "Going to run inference";

+ const std::vector pre_node_names = pre_names();

+ if (pre_node_names.size() != 1) {

+ LOG(ERROR) << "This op(" << op_name()

+ << ") can only have one predecessor op, but received "

+ << pre_node_names.size();

+ return -1;

+ }

+ const std::string pre_name = pre_node_names[0];

+

+ const GeneralBlob *input_blob = get_depend_argument(pre_name);

+ if (!input_blob) {

+ LOG(ERROR) << "input_blob is nullptr,error";

+ return -1;

+ }

+ uint64_t log_id = input_blob->GetLogId();

+ VLOG(2) << "(logid=" << log_id << ") Get precedent op name: " << pre_name;

+

+ GeneralBlob *output_blob = mutable_data();

+ if (!output_blob) {

+ LOG(ERROR) << "output_blob is nullptr,error";

+ return -1;

+ }

+ output_blob->SetLogId(log_id);

+

+ if (!input_blob) {

+ LOG(ERROR) << "(logid=" << log_id

+ << ") Failed mutable depended argument, op:" << pre_name;

+ return -1;

+ }

+

+ const TensorVector *in = &input_blob->tensor_vector;

+ TensorVector *out = &output_blob->tensor_vector;

+

+ int batch_size = input_blob->_batch_size;

+ output_blob->_batch_size = batch_size;

+ VLOG(2) << "(logid=" << log_id << ") infer batch size: " << batch_size;

+

+ Timer timeline;

+ int64_t start = timeline.TimeStampUS();

+ timeline.Start();

+

+ // only support string type

+

+ char *total_input_ptr = static_cast(in->at(0).data.data());

+ std::string base64str = total_input_ptr;

+

+ cv::Mat img = Base2Mat(base64str);

+

+ // RGB2BGR

+ cv::cvtColor(img, img, cv::COLOR_BGR2RGB);

+

+ // Resize

+ cv::Mat resize_img;

+ resize_op_.Run(img, resize_img, resize_short_size_);

+

+ // CenterCrop

+ crop_op_.Run(resize_img, crop_size_);

+

+ // Normalize

+ normalize_op_.Run(&resize_img, mean_, scale_, is_scale_);

+

+ // Permute

+ std::vector input(1 * 3 * resize_img.rows * resize_img.cols, 0.0f);

+ permute_op_.Run(&resize_img, input.data());

+ float maxValue = *max_element(input.begin(), input.end());

+ float minValue = *min_element(input.begin(), input.end());

+

+ TensorVector *real_in = new TensorVector();

+ if (!real_in) {

+ LOG(ERROR) << "real_in is nullptr,error";

+ return -1;

+ }

+

+ std::vector input_shape;

+ int in_num = 0;

+ void *databuf_data = NULL;

+ char *databuf_char = NULL;

+ size_t databuf_size = 0;

+

+ input_shape = {1, 3, resize_img.rows, resize_img.cols};

+ in_num = std::accumulate(input_shape.begin(), input_shape.end(), 1,

+ std::multiplies());

+

+ databuf_size = in_num * sizeof(float);

+ databuf_data = MempoolWrapper::instance().malloc(databuf_size);

+ if (!databuf_data) {

+ LOG(ERROR) << "Malloc failed, size: " << databuf_size;

+ return -1;

+ }

+

+ memcpy(databuf_data, input.data(), databuf_size);

+ databuf_char = reinterpret_cast(databuf_data);

+ paddle::PaddleBuf paddleBuf(databuf_char, databuf_size);

+ paddle::PaddleTensor tensor_in;

+ tensor_in.name = in->at(0).name;

+ tensor_in.dtype = paddle::PaddleDType::FLOAT32;

+ tensor_in.shape = {1, 3, resize_img.rows, resize_img.cols};

+ tensor_in.lod = in->at(0).lod;

+ tensor_in.data = paddleBuf;

+ real_in->push_back(tensor_in);

+

+ if (InferManager::instance().infer(engine_name().c_str(), real_in, out,

+ batch_size)) {

+ LOG(ERROR) << "(logid=" << log_id

+ << ") Failed do infer in fluid model: " << engine_name().c_str();

+ return -1;

+ }

+

+ int64_t end = timeline.TimeStampUS();

+ CopyBlobInfo(input_blob, output_blob);

+ AddBlobInfo(output_blob, start);

+ AddBlobInfo(output_blob, end);

+ return 0;

+}

+

+cv::Mat GeneralClasOp::Base2Mat(std::string &base64_data) {

+ cv::Mat img;

+ std::string s_mat;

+ s_mat = base64Decode(base64_data.data(), base64_data.size());

+ std::vector base64_img(s_mat.begin(), s_mat.end());

+ img = cv::imdecode(base64_img, cv::IMREAD_COLOR); // CV_LOAD_IMAGE_COLOR

+ return img;

+}

+

+std::string GeneralClasOp::base64Decode(const char *Data, int DataByte) {

+ const char DecodeTable[] = {

+ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

+ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

+ 0, 0, 0, 0, 0, 0, 0, 0, 0,

+ 62, // '+'

+ 0, 0, 0,

+ 63, // '/'

+ 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, // '0'-'9'

+ 0, 0, 0, 0, 0, 0, 0, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9,

+ 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, // 'A'-'Z'

+ 0, 0, 0, 0, 0, 0, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36,

+ 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, // 'a'-'z'

+ };

+

+ std::string strDecode;

+ int nValue;

+ int i = 0;

+ while (i < DataByte) {

+ if (*Data != '\r' && *Data != '\n') {

+ nValue = DecodeTable[*Data++] << 18;

+ nValue += DecodeTable[*Data++] << 12;

+ strDecode += (nValue & 0x00FF0000) >> 16;

+ if (*Data != '=') {

+ nValue += DecodeTable[*Data++] << 6;

+ strDecode += (nValue & 0x0000FF00) >> 8;

+ if (*Data != '=') {

+ nValue += DecodeTable[*Data++];

+ strDecode += nValue & 0x000000FF;

+ }

+ }

+ i += 4;

+ } else // 回车换行,跳过

+ {

+ Data++;

+ i++;

+ }

+ }

+ return strDecode;

+}

+DEFINE_OP(GeneralClasOp);

+} // namespace serving

+} // namespace paddle_serving

+} // namespace baidu

diff --git a/deploy/paddleserving/preprocess/general_clas_op.h b/deploy/paddleserving/preprocess/general_clas_op.h

new file mode 100644

index 0000000000000000000000000000000000000000..69b7a8e005872d7b66b9a61265ca5798b4ac8bab

--- /dev/null

+++ b/deploy/paddleserving/preprocess/general_clas_op.h

@@ -0,0 +1,70 @@

+// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#pragma once

+#include "core/general-server/general_model_service.pb.h"

+#include "core/general-server/op/general_infer_helper.h"

+#include "core/predictor/tools/pp_shitu_tools/preprocess_op.h"

+#include "paddle_inference_api.h" // NOLINT

+#include

+#include

+

+#include "opencv2/core.hpp"

+#include "opencv2/imgcodecs.hpp"

+#include "opencv2/imgproc.hpp"

+#include

+#include

+#include

+#include

+#include

+

+#include

+#include

+#include

+

+namespace baidu {

+namespace paddle_serving {

+namespace serving {

+

+class GeneralClasOp

+ : public baidu::paddle_serving::predictor::OpWithChannel {

+public:

+ typedef std::vector TensorVector;

+

+ DECLARE_OP(GeneralClasOp);

+

+ int inference();

+

+private:

+ // clas preprocess

+ std::vector mean_ = {0.485f, 0.456f, 0.406f};

+ std::vector scale_ = {0.229f, 0.224f, 0.225f};

+ bool is_scale_ = true;

+

+ int resize_short_size_ = 256;

+ int crop_size_ = 224;

+

+ PaddleClas::ResizeImg resize_op_;

+ PaddleClas::Normalize normalize_op_;

+ PaddleClas::Permute permute_op_;

+ PaddleClas::CenterCropImg crop_op_;

+

+ // read pics

+ cv::Mat Base2Mat(std::string &base64_data);

+ std::string base64Decode(const char *Data, int DataByte);

+};

+

+} // namespace serving

+} // namespace paddle_serving

+} // namespace baidu

diff --git a/deploy/paddleserving/preprocess/preprocess_op.cpp b/deploy/paddleserving/preprocess/preprocess_op.cpp

new file mode 100644

index 0000000000000000000000000000000000000000..9c79342ceda115fe3c213bb6f5d32c6e56f2380a

--- /dev/null

+++ b/deploy/paddleserving/preprocess/preprocess_op.cpp

@@ -0,0 +1,149 @@

+// Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#include "opencv2/core.hpp"

+#include "opencv2/imgcodecs.hpp"

+#include "opencv2/imgproc.hpp"

+#include "paddle_api.h"

+#include "paddle_inference_api.h"

+#include

+#include

+#include

+#include

+#include

+

+#include

+#include

+#include

+#include

+

+#include "preprocess_op.h"

+

+namespace Feature {

+

+void Permute::Run(const cv::Mat *im, float *data) {

+ int rh = im->rows;

+ int rw = im->cols;

+ int rc = im->channels();

+ for (int i = 0; i < rc; ++i) {

+ cv::extractChannel(*im, cv::Mat(rh, rw, CV_32FC1, data + i * rh * rw), i);

+ }

+}

+

+void Normalize::Run(cv::Mat *im, const std::vector &mean,

+ const std::vector &std, float scale) {

+ (*im).convertTo(*im, CV_32FC3, scale);

+ for (int h = 0; h < im->rows; h++) {

+ for (int w = 0; w < im->cols; w++) {

+ im->at(h, w)[0] =

+ (im->at(h, w)[0] - mean[0]) / std[0];

+ im->at(h, w)[1] =

+ (im->at(h, w)[1] - mean[1]) / std[1];

+ im->at(h, w)[2] =

+ (im->at(h, w)[2] - mean[2]) / std[2];

+ }

+ }

+}

+

+void CenterCropImg::Run(cv::Mat &img, const int crop_size) {

+ int resize_w = img.cols;

+ int resize_h = img.rows;

+ int w_start = int((resize_w - crop_size) / 2);

+ int h_start = int((resize_h - crop_size) / 2);

+ cv::Rect rect(w_start, h_start, crop_size, crop_size);

+ img = img(rect);

+}

+

+void ResizeImg::Run(const cv::Mat &img, cv::Mat &resize_img,

+ int resize_short_size, int size) {

+ int resize_h = 0;

+ int resize_w = 0;

+ if (size > 0) {

+ resize_h = size;

+ resize_w = size;

+ } else {

+ int w = img.cols;

+ int h = img.rows;

+

+ float ratio = 1.f;

+ if (h < w) {

+ ratio = float(resize_short_size) / float(h);

+ } else {

+ ratio = float(resize_short_size) / float(w);

+ }

+ resize_h = round(float(h) * ratio);

+ resize_w = round(float(w) * ratio);

+ }

+ cv::resize(img, resize_img, cv::Size(resize_w, resize_h));

+}

+

+} // namespace Feature

+

+namespace PaddleClas {

+void Permute::Run(const cv::Mat *im, float *data) {

+ int rh = im->rows;

+ int rw = im->cols;

+ int rc = im->channels();

+ for (int i = 0; i < rc; ++i) {

+ cv::extractChannel(*im, cv::Mat(rh, rw, CV_32FC1, data + i * rh * rw), i);

+ }

+}

+

+void Normalize::Run(cv::Mat *im, const std::vector &mean,

+ const std::vector &scale, const bool is_scale) {

+ double e = 1.0;

+ if (is_scale) {

+ e /= 255.0;

+ }

+ (*im).convertTo(*im, CV_32FC3, e);

+ for (int h = 0; h < im->rows; h++) {

+ for (int w = 0; w < im->cols; w++) {

+ im->at(h, w)[0] =

+ (im->at(h, w)[0] - mean[0]) / scale[0];

+ im->at(h, w)[1] =

+ (im->at(h, w)[1] - mean[1]) / scale[1];

+ im->at(h, w)[2] =

+ (im->at(h, w)[2] - mean[2]) / scale[2];

+ }

+ }

+}

+

+void CenterCropImg::Run(cv::Mat &img, const int crop_size) {

+ int resize_w = img.cols;

+ int resize_h = img.rows;

+ int w_start = int((resize_w - crop_size) / 2);

+ int h_start = int((resize_h - crop_size) / 2);

+ cv::Rect rect(w_start, h_start, crop_size, crop_size);

+ img = img(rect);

+}

+

+void ResizeImg::Run(const cv::Mat &img, cv::Mat &resize_img,

+ int resize_short_size) {

+ int w = img.cols;

+ int h = img.rows;

+

+ float ratio = 1.f;

+ if (h < w) {

+ ratio = float(resize_short_size) / float(h);

+ } else {

+ ratio = float(resize_short_size) / float(w);

+ }

+

+ int resize_h = round(float(h) * ratio);

+ int resize_w = round(float(w) * ratio);

+

+ cv::resize(img, resize_img, cv::Size(resize_w, resize_h));

+}

+

+} // namespace PaddleClas

diff --git a/deploy/paddleserving/preprocess/preprocess_op.h b/deploy/paddleserving/preprocess/preprocess_op.h

new file mode 100644

index 0000000000000000000000000000000000000000..0ea9d2e14a525365bb049a13358660a2567dadc8

--- /dev/null

+++ b/deploy/paddleserving/preprocess/preprocess_op.h

@@ -0,0 +1,81 @@

+// Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#pragma once

+

+#include "opencv2/core.hpp"

+#include "opencv2/imgcodecs.hpp"

+#include "opencv2/imgproc.hpp"

+#include

+#include

+#include

+#include

+#include

+

+#include

+#include

+#include

+

+namespace Feature {

+

+class Normalize {

+public:

+ virtual void Run(cv::Mat *im, const std::vector &mean,

+ const std::vector &std, float scale);

+};

+

+// RGB -> CHW

+class Permute {

+public:

+ virtual void Run(const cv::Mat *im, float *data);

+};

+

+class CenterCropImg {

+public:

+ virtual void Run(cv::Mat &im, const int crop_size = 224);

+};

+

+class ResizeImg {

+public:

+ virtual void Run(const cv::Mat &img, cv::Mat &resize_img, int max_size_len,

+ int size = 0);

+};

+

+} // namespace Feature

+

+namespace PaddleClas {

+

+class Normalize {

+public:

+ virtual void Run(cv::Mat *im, const std::vector &mean,

+ const std::vector &scale, const bool is_scale = true);

+};

+

+// RGB -> CHW

+class Permute {

+public:

+ virtual void Run(const cv::Mat *im, float *data);

+};

+

+class CenterCropImg {

+public:

+ virtual void Run(cv::Mat &im, const int crop_size = 224);

+};

+

+class ResizeImg {

+public:

+ virtual void Run(const cv::Mat &img, cv::Mat &resize_img, int max_size_len);

+};

+

+} // namespace PaddleClas

diff --git a/deploy/paddleserving/recognition/config.yml b/deploy/paddleserving/recognition/config.yml

index 6ecc32e22435f07a549ffcdeb6a435b33c4901f1..e4108006e6f2ea1a3698e4fdf9c32f25dcbfbeb0 100644

--- a/deploy/paddleserving/recognition/config.yml

+++ b/deploy/paddleserving/recognition/config.yml

@@ -31,7 +31,7 @@ op:

#Fetch结果列表,以client_config中fetch_var的alias_name为准

fetch_list: ["features"]

-

+

det:

concurrency: 1

local_service_conf:

diff --git a/deploy/paddleserving/recognition/preprocess/general_PPLCNet_x2_5_lite_v1.0_client/serving_client_conf.prototxt b/deploy/paddleserving/recognition/preprocess/general_PPLCNet_x2_5_lite_v1.0_client/serving_client_conf.prototxt

new file mode 100644

index 0000000000000000000000000000000000000000..c781eb6f449fe06afbba7f96e01798c974bccf54

--- /dev/null

+++ b/deploy/paddleserving/recognition/preprocess/general_PPLCNet_x2_5_lite_v1.0_client/serving_client_conf.prototxt

@@ -0,0 +1,32 @@

+feed_var {

+ name: "x"

+ alias_name: "x"

+ is_lod_tensor: false

+ feed_type: 1

+ shape: 3

+ shape: 224

+ shape: 224

+}

+feed_var {

+ name: "boxes"

+ alias_name: "boxes"

+ is_lod_tensor: false

+ feed_type: 1

+ shape: 6

+}

+fetch_var {

+ name: "save_infer_model/scale_0.tmp_1"

+ alias_name: "features"

+ is_lod_tensor: false

+ fetch_type: 1

+ shape: 512

+}

+fetch_var {

+ name: "boxes"

+ alias_name: "boxes"

+ is_lod_tensor: false

+ fetch_type: 1

+ shape: 6

+}

+

+

diff --git a/deploy/paddleserving/recognition/preprocess/general_PPLCNet_x2_5_lite_v1.0_serving/serving_server_conf.prototxt b/deploy/paddleserving/recognition/preprocess/general_PPLCNet_x2_5_lite_v1.0_serving/serving_server_conf.prototxt

new file mode 100644

index 0000000000000000000000000000000000000000..04812f42ed90fbbd47c73b9ec706d57c04b4c571

--- /dev/null

+++ b/deploy/paddleserving/recognition/preprocess/general_PPLCNet_x2_5_lite_v1.0_serving/serving_server_conf.prototxt

@@ -0,0 +1,30 @@

+feed_var {

+ name: "x"

+ alias_name: "x"

+ is_lod_tensor: false

+ feed_type: 1

+ shape: 3

+ shape: 224

+ shape: 224

+}

+feed_var {

+ name: "boxes"

+ alias_name: "boxes"

+ is_lod_tensor: false

+ feed_type: 1

+ shape: 6

+}

+fetch_var {

+ name: "save_infer_model/scale_0.tmp_1"

+ alias_name: "features"

+ is_lod_tensor: false

+ fetch_type: 1

+ shape: 512

+}

+fetch_var {

+ name: "boxes"

+ alias_name: "boxes"

+ is_lod_tensor: false

+ fetch_type: 1

+ shape: 6

+}

diff --git a/deploy/paddleserving/recognition/preprocess/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_client/serving_client_conf.prototxt b/deploy/paddleserving/recognition/preprocess/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_client/serving_client_conf.prototxt

new file mode 100644

index 0000000000000000000000000000000000000000..d9ab81a8b3c275f638f314489a84deef46011d73

--- /dev/null

+++ b/deploy/paddleserving/recognition/preprocess/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_client/serving_client_conf.prototxt

@@ -0,0 +1,29 @@

+feed_var {

+ name: "im_shape"

+ alias_name: "im_shape"

+ is_lod_tensor: false

+ feed_type: 1

+ shape: 2

+}

+feed_var {

+ name: "image"

+ alias_name: "image"

+ is_lod_tensor: false

+ feed_type: 7

+ shape: -1

+ shape: -1

+ shape: 3

+}

+fetch_var {

+ name: "save_infer_model/scale_0.tmp_1"

+ alias_name: "save_infer_model/scale_0.tmp_1"

+ is_lod_tensor: true

+ fetch_type: 1

+ shape: -1

+}

+fetch_var {

+ name: "save_infer_model/scale_1.tmp_1"

+ alias_name: "save_infer_model/scale_1.tmp_1"

+ is_lod_tensor: false

+ fetch_type: 2

+}

diff --git a/deploy/paddleserving/recognition/preprocess/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_serving/serving_server_conf.prototxt b/deploy/paddleserving/recognition/preprocess/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_serving/serving_server_conf.prototxt

new file mode 100644

index 0000000000000000000000000000000000000000..d9ab81a8b3c275f638f314489a84deef46011d73

--- /dev/null

+++ b/deploy/paddleserving/recognition/preprocess/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_serving/serving_server_conf.prototxt

@@ -0,0 +1,29 @@

+feed_var {

+ name: "im_shape"

+ alias_name: "im_shape"

+ is_lod_tensor: false

+ feed_type: 1

+ shape: 2

+}

+feed_var {

+ name: "image"

+ alias_name: "image"

+ is_lod_tensor: false

+ feed_type: 7

+ shape: -1

+ shape: -1

+ shape: 3

+}

+fetch_var {

+ name: "save_infer_model/scale_0.tmp_1"

+ alias_name: "save_infer_model/scale_0.tmp_1"

+ is_lod_tensor: true

+ fetch_type: 1

+ shape: -1

+}

+fetch_var {

+ name: "save_infer_model/scale_1.tmp_1"

+ alias_name: "save_infer_model/scale_1.tmp_1"

+ is_lod_tensor: false

+ fetch_type: 2

+}

diff --git a/deploy/paddleserving/recognition/test_cpp_serving_client.py b/deploy/paddleserving/recognition/test_cpp_serving_client.py

index a2bf1ae3e9d0a69628319b9f845a1e6f7701b391..e2cd17e855ebfe8fb286ebaeff8ab63874e2e972 100644

--- a/deploy/paddleserving/recognition/test_cpp_serving_client.py

+++ b/deploy/paddleserving/recognition/test_cpp_serving_client.py

@@ -12,7 +12,6 @@

# See the License for the specific language governing permissions and

# limitations under the License.

-import sys

import numpy as np

from paddle_serving_client import Client

@@ -22,181 +21,101 @@ import faiss

import os

import pickle

-

-class MainbodyDetect():

- """

- pp-shitu mainbody detect.

- include preprocess, process, postprocess

- return detect results

- Attention: Postprocess include num limit and box filter; no nms

- """

-

- def __init__(self):

- self.preprocess = DetectionSequential([

- DetectionFile2Image(), DetectionNormalize(

- [0.485, 0.456, 0.406], [0.229, 0.224, 0.225], True),

- DetectionResize(

- (640, 640), False, interpolation=2), DetectionTranspose(

- (2, 0, 1))

- ])

-

- self.client = Client()

- self.client.load_client_config(

- "../../models/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_client/serving_client_conf.prototxt"

- )

- self.client.connect(['127.0.0.1:9293'])

-

- self.max_det_result = 5

- self.conf_threshold = 0.2

-

- def predict(self, imgpath):

- im, im_info = self.preprocess(imgpath)

- im_shape = np.array(im.shape[1:]).reshape(-1)

- scale_factor = np.array(list(im_info['scale_factor'])).reshape(-1)

-

- fetch_map = self.client.predict(

- feed={

- "image": im,

- "im_shape": im_shape,

- "scale_factor": scale_factor,

- },

- fetch=["save_infer_model/scale_0.tmp_1"],

- batch=False)

- return self.postprocess(fetch_map, imgpath)

-

- def postprocess(self, fetch_map, imgpath):

- #1. get top max_det_result

- det_results = fetch_map["save_infer_model/scale_0.tmp_1"]

- if len(det_results) > self.max_det_result:

- boxes_reserved = fetch_map[

- "save_infer_model/scale_0.tmp_1"][:self.max_det_result]

- else:

- boxes_reserved = det_results

-

- #2. do conf threshold

- boxes_list = []

- for i in range(boxes_reserved.shape[0]):

- if (boxes_reserved[i, 1]) > self.conf_threshold:

- boxes_list.append(boxes_reserved[i, :])

-

- #3. add origin image box

- origin_img = cv2.imread(imgpath)

- boxes_list.append(

- np.array([0, 1.0, 0, 0, origin_img.shape[1], origin_img.shape[0]]))

- return np.array(boxes_list)

-

-

-class ObjectRecognition():

- """

- pp-shitu object recognion for all objects detected by MainbodyDetect.

- include preprocess, process, postprocess

- preprocess include preprocess for each image and batching.

- Batch process

- postprocess include retrieval and nms

- """

-

- def __init__(self):

- self.client = Client()

- self.client.load_client_config(

- "../../models/general_PPLCNet_x2_5_lite_v1.0_client/serving_client_conf.prototxt"

- )

- self.client.connect(["127.0.0.1:9294"])

-

- self.seq = Sequential([

- BGR2RGB(), Resize((224, 224)), Div(255),

- Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225],

- False), Transpose((2, 0, 1))

- ])

-

- self.searcher, self.id_map = self.init_index()

-

- self.rec_nms_thresold = 0.05

- self.rec_score_thres = 0.5

- self.feature_normalize = True

- self.return_k = 1

-

- def init_index(self):

- index_dir = "../../drink_dataset_v1.0/index"

- assert os.path.exists(os.path.join(

- index_dir, "vector.index")), "vector.index not found ..."

- assert os.path.exists(os.path.join(

- index_dir, "id_map.pkl")), "id_map.pkl not found ... "

-

- searcher = faiss.read_index(os.path.join(index_dir, "vector.index"))

-

- with open(os.path.join(index_dir, "id_map.pkl"), "rb") as fd:

- id_map = pickle.load(fd)

- return searcher, id_map

-

- def predict(self, det_boxes, imgpath):

- #1. preprocess

- batch_imgs = []

- origin_img = cv2.imread(imgpath)

- for i in range(det_boxes.shape[0]):

- box = det_boxes[i]

- x1, y1, x2, y2 = [int(x) for x in box[2:]]

- cropped_img = origin_img[y1:y2, x1:x2, :].copy()

- tmp = self.seq(cropped_img)

- batch_imgs.append(tmp)

- batch_imgs = np.array(batch_imgs)

-

- #2. process

- fetch_map = self.client.predict(

- feed={"x": batch_imgs}, fetch=["features"], batch=True)

- batch_features = fetch_map["features"]

-

- #3. postprocess

- if self.feature_normalize:

- feas_norm = np.sqrt(

- np.sum(np.square(batch_features), axis=1, keepdims=True))

- batch_features = np.divide(batch_features, feas_norm)

- scores, docs = self.searcher.search(batch_features, self.return_k)

-

- results = []

- for i in range(scores.shape[0]):

- pred = {}

- if scores[i][0] >= self.rec_score_thres:

- pred["bbox"] = [int(x) for x in det_boxes[i, 2:]]

- pred["rec_docs"] = self.id_map[docs[i][0]].split()[1]

- pred["rec_scores"] = scores[i][0]

- results.append(pred)

- return self.nms_to_rec_results(results)

-

- def nms_to_rec_results(self, results):

- filtered_results = []

- x1 = np.array([r["bbox"][0] for r in results]).astype("float32")

- y1 = np.array([r["bbox"][1] for r in results]).astype("float32")

- x2 = np.array([r["bbox"][2] for r in results]).astype("float32")

- y2 = np.array([r["bbox"][3] for r in results]).astype("float32")

- scores = np.array([r["rec_scores"] for r in results])

-

- areas = (x2 - x1 + 1) * (y2 - y1 + 1)

- order = scores.argsort()[::-1]

- while order.size > 0:

- i = order[0]

- xx1 = np.maximum(x1[i], x1[order[1:]])

- yy1 = np.maximum(y1[i], y1[order[1:]])

- xx2 = np.minimum(x2[i], x2[order[1:]])

- yy2 = np.minimum(y2[i], y2[order[1:]])

-

- w = np.maximum(0.0, xx2 - xx1 + 1)

- h = np.maximum(0.0, yy2 - yy1 + 1)

- inter = w * h

- ovr = inter / (areas[i] + areas[order[1:]] - inter)

- inds = np.where(ovr <= self.rec_nms_thresold)[0]

- order = order[inds + 1]

- filtered_results.append(results[i])

- return filtered_results

-

-

+rec_nms_thresold = 0.05

+rec_score_thres = 0.5

+feature_normalize = True

+return_k = 1

+index_dir = "../../drink_dataset_v1.0/index"

+

+

+def init_index(index_dir):

+ assert os.path.exists(os.path.join(

+ index_dir, "vector.index")), "vector.index not found ..."

+ assert os.path.exists(os.path.join(

+ index_dir, "id_map.pkl")), "id_map.pkl not found ... "

+

+ searcher = faiss.read_index(os.path.join(index_dir, "vector.index"))

+

+ with open(os.path.join(index_dir, "id_map.pkl"), "rb") as fd:

+ id_map = pickle.load(fd)

+ return searcher, id_map

+

+

+#get box

+def nms_to_rec_results(results, thresh=0.1):

+ filtered_results = []

+

+ x1 = np.array([r["bbox"][0] for r in results]).astype("float32")

+ y1 = np.array([r["bbox"][1] for r in results]).astype("float32")

+ x2 = np.array([r["bbox"][2] for r in results]).astype("float32")

+ y2 = np.array([r["bbox"][3] for r in results]).astype("float32")

+ scores = np.array([r["rec_scores"] for r in results])

+

+ areas = (x2 - x1 + 1) * (y2 - y1 + 1)

+ order = scores.argsort()[::-1]

+ while order.size > 0:

+ i = order[0]

+ xx1 = np.maximum(x1[i], x1[order[1:]])

+ yy1 = np.maximum(y1[i], y1[order[1:]])

+ xx2 = np.minimum(x2[i], x2[order[1:]])

+ yy2 = np.minimum(y2[i], y2[order[1:]])

+

+ w = np.maximum(0.0, xx2 - xx1 + 1)

+ h = np.maximum(0.0, yy2 - yy1 + 1)

+ inter = w * h

+ ovr = inter / (areas[i] + areas[order[1:]] - inter)

+ inds = np.where(ovr <= thresh)[0]

+ order = order[inds + 1]

+ filtered_results.append(results[i])

+ return filtered_results

+

+

+def postprocess(fetch_dict, feature_normalize, det_boxes, searcher, id_map,

+ return_k, rec_score_thres, rec_nms_thresold):

+ batch_features = fetch_dict["features"]

+

+ #do feature norm

+ if feature_normalize:

+ feas_norm = np.sqrt(

+ np.sum(np.square(batch_features), axis=1, keepdims=True))

+ batch_features = np.divide(batch_features, feas_norm)

+

+ scores, docs = searcher.search(batch_features, return_k)

+

+ results = []

+ for i in range(scores.shape[0]):

+ pred = {}

+ if scores[i][0] >= rec_score_thres:

+ pred["bbox"] = [int(x) for x in det_boxes[i, 2:]]

+ pred["rec_docs"] = id_map[docs[i][0]].split()[1]

+ pred["rec_scores"] = scores[i][0]

+ results.append(pred)

+

+ #do nms

+ results = nms_to_rec_results(results, rec_nms_thresold)

+ return results

+

+

+#do client

if __name__ == "__main__":

- det = MainbodyDetect()

- rec = ObjectRecognition()

-

- #1. get det_results

- imgpath = "../../drink_dataset_v1.0/test_images/001.jpeg"

- det_results = det.predict(imgpath)

-

- #2. get rec_results

- rec_results = rec.predict(det_results, imgpath)

- print(rec_results)

+ client = Client()

+ client.load_client_config([

+ "../../models/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_client",

+ "../../models/general_PPLCNet_x2_5_lite_v1.0_client"

+ ])

+ client.connect(['127.0.0.1:9400'])

+

+ im = cv2.imread("../../drink_dataset_v1.0/test_images/001.jpeg")

+ im_shape = np.array(im.shape[:2]).reshape(-1)

+ fetch_map = client.predict(

+ feed={"image": im,

+ "im_shape": im_shape},

+ fetch=["features", "boxes"],

+ batch=False)

+

+ #add retrieval procedure

+ det_boxes = fetch_map["boxes"]

+ searcher, id_map = init_index(index_dir)

+ results = postprocess(fetch_map, feature_normalize, det_boxes, searcher,

+ id_map, return_k, rec_score_thres, rec_nms_thresold)

+ print(results)

diff --git a/deploy/paddleserving/test_cpp_serving_client.py b/deploy/paddleserving/test_cpp_serving_client.py

index 50794b363767c8236ccca1001a441b535a9f9db3..ba5399c90dcd5e0701df26e2d2f8337a4105ab51 100644

--- a/deploy/paddleserving/test_cpp_serving_client.py

+++ b/deploy/paddleserving/test_cpp_serving_client.py

@@ -12,16 +12,20 @@

# See the License for the specific language governing permissions and

# limitations under the License.

-import sys

+import base64

+import time

+

from paddle_serving_client import Client

-#app