-PULC practical image classification model demo

+PP-ShiTuV2 image recognition system demo

-PP-ShiTu image recognition system demo

+PULC practical image classification model demo

+PP-ShiTuV2 Android Demo

-PULC demo images

+PP-ShiTuV2 demo images

-PP-ShiTu demo images

+PULC demo images

+PP-ShiTuV2 Android Demo

-Image recognition can be divided into three steps:
-- (1) Identify region proposals for target objects with a detection model;
-- (2) Extract features for each region proposal;
-- (3) Search the features in the retrieval database and output the results.

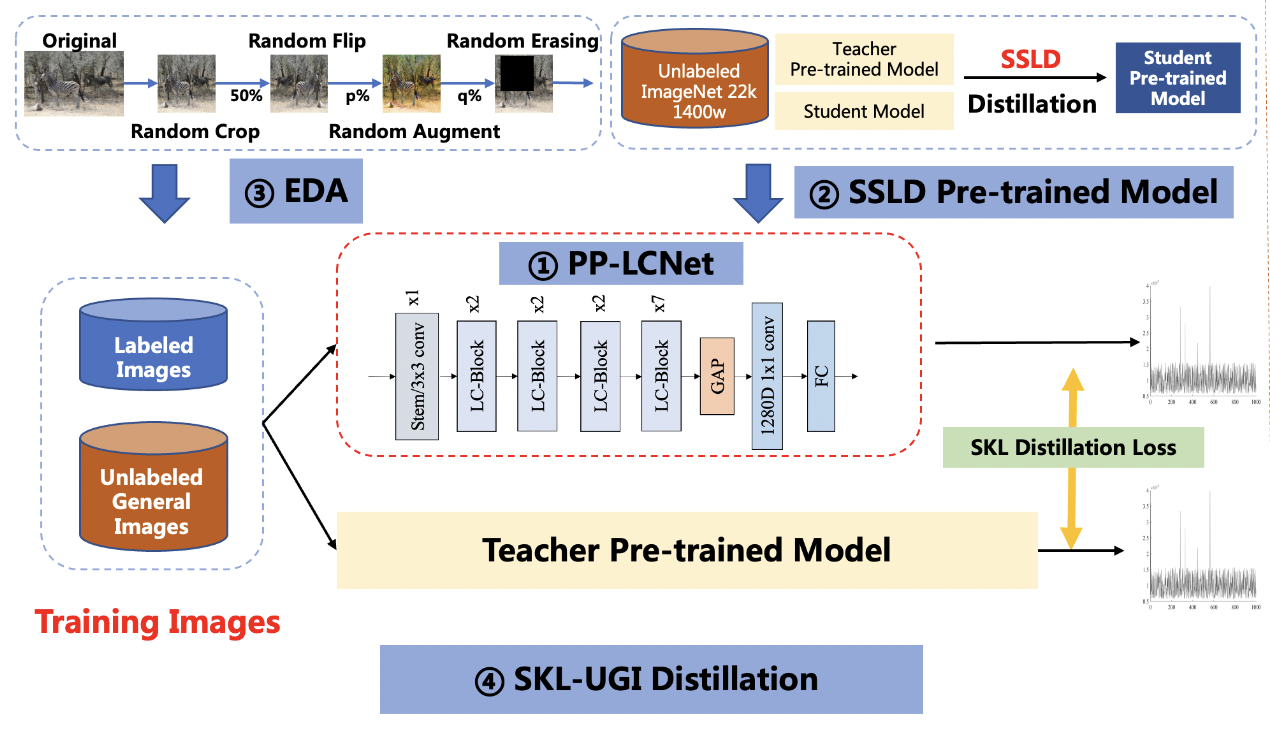

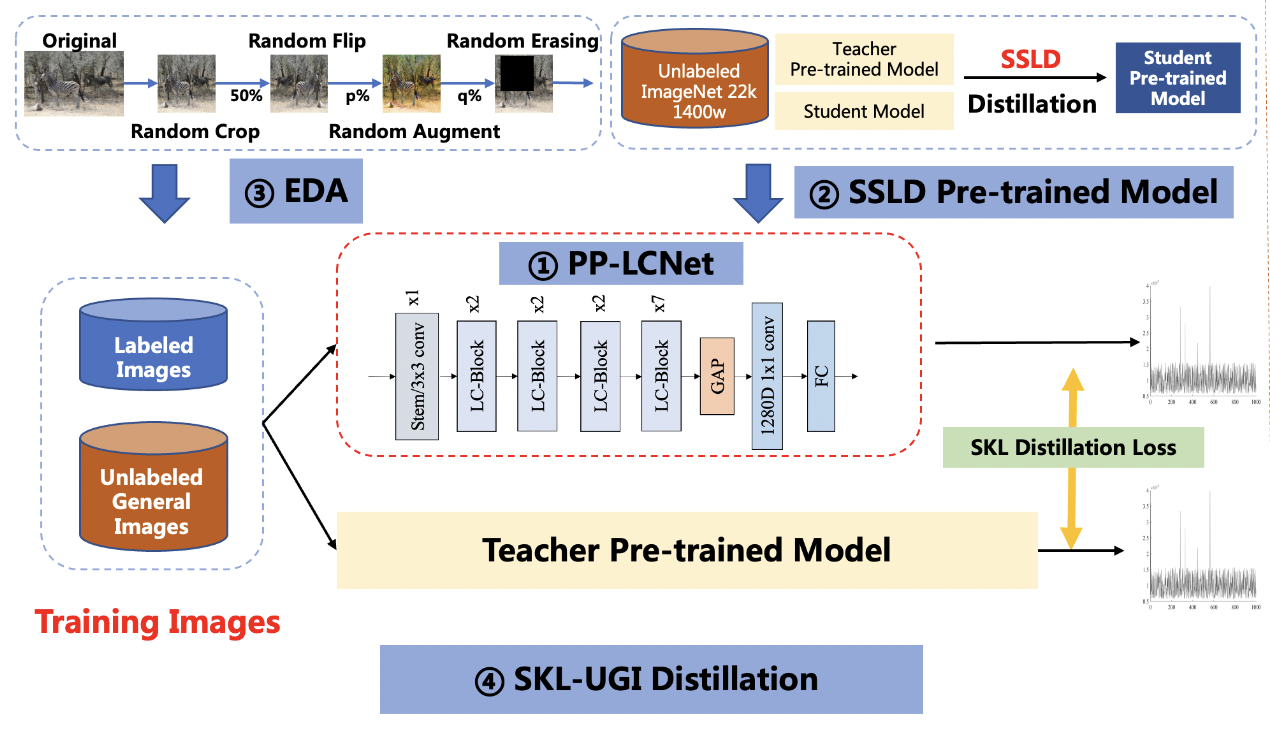

+PP-ShiTuV2 is a practical, lightweight general image recognition system composed of three main modules: a mainbody detection model, a feature extraction model, and a vector search tool. It combines optimization strategies covering the backbone network, loss function, data augmentation, hyperparameter tuning, pre-trained models, and model pruning and quantization. Compared with PP-ShiTuV1, PP-ShiTuV2 improves Recall@1 by nearly 8 points. For more details, please refer to [PP-ShiTuV2 introduction](./docs/en/PPShiTu/PPShiTuV2_introduction.md).

To recognize a new category, there is no need to retrain the model: prepare images of the new category, extract their features, and update the retrieval database, after which the category can be recognized.
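The three-module design is what makes this possible: recognition reduces to a nearest-neighbor search over stored feature vectors, so adding a category only adds vectors. A minimal sketch, assuming the features have already been extracted (the three-dimensional vectors below are made up) and using brute-force cosine similarity in place of the real vector search tool; `TinyIndex` is a hypothetical name, not a PaddleClas API.

```python
import math


def normalize(vec):
    """Scale a feature vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


class TinyIndex:
    """Hypothetical brute-force index holding (label, unit-length feature) pairs."""

    def __init__(self):
        self.entries = []

    def add(self, label, feature):
        # Registering a category only stores vectors; the feature extractor is untouched.
        self.entries.append((label, normalize(feature)))

    def search(self, feature, k=1):
        """Return the k (label, cosine similarity) pairs closest to the query feature."""
        query = normalize(feature)
        scored = [
            (sum(q * g for q, g in zip(query, vec)), label)
            for label, vec in self.entries
        ]
        scored.sort(reverse=True)
        return [(label, score) for score, label in scored[:k]]


index = TinyIndex()
index.add("cola", [0.9, 0.1, 0.0])
index.add("juice", [0.1, 0.9, 0.1])
print(index.search([0.8, 0.2, 0.0]))

# A new category becomes recognizable as soon as its features are added.
index.add("tea", [0.0, 0.1, 0.9])
print(index.search([0.05, 0.1, 0.95]))
```

A production system replaces the linear scan with an approximate nearest-neighbor library, but the "add vectors, no retraining" property is the same.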

-## PULC demo images

+## PP-ShiTuV2 demo images

+- Drinks recognition


+The retrieval results obtained are visualized as follows:

-**Attention**

-1. If `wget` is not installed on Windows, you can download the model by copying the link into your browser and extracting it into the appropriate folder; Linux and macOS users can right-click to copy the download link and download it with the `wget` command.

-2. To install `wget` on macOS, run the following commands.

-3. The lightweight general recognition model uses the same prediction config file and index-building config file as the server-side product recognition model; modify the model path in them to build the index and run prediction.


-```shell

-# install homebrew

-ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)";

-# install wget

-brew install wget

-```

+#### 1.2.2 Update Index

+Click the "photo upload" button above


+Interface buttons

+Main features

+Create a library

+Open a library

+Image operations

+ | Recognition of various types of balls | 0.9769 | [Balls](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Balls.tar) | [Original data](https://www.kaggle.com/datasets/gpiosenka/balls-image-classification) |
+| Dogs |  | Fine-grained dog breed recognition, with images of 69 dog breeds | 0.9606 | [DogBreeds](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/DogBreeds.tar) | [Original data](https://www.kaggle.com/datasets/gpiosenka/70-dog-breedsimage-data-set) |
+| Gemstones |  | Gemstone type recognition | 0.9653 | [Gemstones](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Gemstones.tar) | [Original data](https://www.kaggle.com/datasets/lsind18/gemstones-images) |
+| Animals |  | Recognition of various animals | 0.9078 | [AnimalImageDataset](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/AnimalImageDataset.tar) | [Original data](https://www.kaggle.com/datasets/iamsouravbanerjee/animal-image-dataset-90-different-animals) |
+| Birds |  | Fine-grained bird recognition, with images of 400 bird species in various poses | 0.9673 | [Bird400](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Bird400.tar) | [Original data](https://www.kaggle.com/datasets/gpiosenka/100-bird-species) |
+| Vehicles |  | Coarse-grained recognition of vehicles such as cars and boats | 0.9307 | [Vechicles](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Vechicles.tar) | [Original data](https://www.kaggle.com/datasets/rishabkoul1/vechicle-dataset) |
+| Flowers |  | Fine-grained recognition of 104 flower species | 0.9788 | [104flowers](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/104flowrs.tar) | [Original data](https://www.kaggle.com/datasets/msheriey/104-flowers-garden-of-eden) |
+| Sports |  | Recognition of 100 kinds of sports | 0.9413 | [100sports](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/100sports.tar) | [Original data](https://www.kaggle.com/datasets/gpiosenka/sports-classification) |
+| Musical instruments |  | Recognition of 30 types of musical instruments | 0.9467 | [MusicInstruments](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/MusicInstruments.tar) | [Original data](https://www.kaggle.com/datasets/gpiosenka/musical-instruments-image-classification) |
+| Pokémon |  | Pokémon recognition | 0.9236 | [Pokemon](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Pokemon.tar) | [Original data](https://www.kaggle.com/datasets/lantian773030/pokemonclassification) |
+| Boats |  | Boat type recognition | 0.9242 | [Boat](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Boat.tar) | [Original data](https://www.kaggle.com/datasets/imsparsh/dockship-boat-type-classification) |
+| Shoes |  | Shoe type recognition, including boots, slippers, etc. | 0.9000 | [Shoes](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Shoes.tar) | [Original data](https://www.kaggle.com/datasets/noobyogi0100/shoe-dataset) |
+| Paris buildings |  | Recognition of famous Paris landmarks such as the Eiffel Tower and Notre-Dame | 1.000 | [Paris](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Paris.tar) | [Original data](https://www.kaggle.com/datasets/skylord/oxbuildings) |
+| Butterflies |  | Fine-grained recognition of 75 butterfly species | 0.9360 | [Butterfly](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Butterfly.tar) | [Original data](https://www.kaggle.com/datasets/gpiosenka/butterfly-images40-species) |
+| Wild plants |  | Wild plant recognition | 0.9758 | [WildEdiblePlants](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/WildEdiblePlants.tar) | [Original data](https://www.kaggle.com/datasets/ryanpartridge01/wild-edible-plants) |
+| Weather |  | Recognition of weather scenes such as rain, thunderstorms and snow | 0.9924 | [WeatherImageRecognition](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/WeatherImageRecognition.tar) | [Original data](https://www.kaggle.com/datasets/jehanbhathena/weather-dataset) |
+| Nuts |  | Recognition of various types of nuts | 0.9412 | [TreeNuts](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/TreeNuts.tar) | [Original data](https://www.kaggle.com/datasets/gpiosenka/tree-nuts-image-classification) |
+| Fashion |  | Recognition of fashion products such as jewelry, handbags and cosmetics | 0.9555 | [FashionProductImageSmall](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/FashionProductImageSmall.tar) | [Original data](https://www.kaggle.com/datasets/paramaggarwal/fashion-product-images-small) |
+| Garbage |  | Classification of 12 types of garbage | 0.9845 | [Garbage12](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Garbage12.tar) | [Original data](https://www.kaggle.com/datasets/mostafaabla/garbage-classification) |
+| Aerial scenes |  | Recognition of aerial scenes such as airports and train stations | 0.9797 | [AID](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/AID.tar) | [Original data](https://www.kaggle.com/datasets/jiayuanchengala/aid-scene-classification-datasets) |
+| Vegetables |  | Recognition of various vegetables | 0.8929 | [Veg200](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Veg200.tar) | [Original data](https://www.kaggle.com/datasets/zhaoyj688/vegfru) |
+| Logos |  | Recognition of more than 2,000 logos | 0.9313 | [Logo3k](https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/PP-ShiTuV2_application_dataset/Logo3k.tar) | [Original data](https://github.com/Wangjing1551/LogoDet-3K-Dataset) |

+## 2. Usage

+### 2.1 Environment Setup

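+- Installation: first set up the PaddleClas runtime environment by following [Environment Preparation](../installation/install_paddleclas.md).

+- Enter the `deploy` directory. All content and commands in this section must be run from the `deploy` directory, which you can enter with the following command: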

+```shell

+cd deploy

+```

+### 2.2 Download and Extract the Scene Library Data

+First, create the directory `deploy/datasets` to store the scene libraries:

+

+```shell

+mkdir datasets

+```

+Download the scene library you need and extract it into `deploy/datasets`:

+```shell

+cd datasets

+

+# Download and extract the scene library data

+wget {scene library download URL} && tar -xf {archive name}

+```
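If you prefer to script the `wget ... && tar -xf ...` step in Python, the sketch below uses only the standard library. It assumes every archive URL shares the prefix used by the scene-library links in the table (for example `.../PP-ShiTuV2_application_dataset/AnimalImageDataset.tar`); note that some archive names differ from their link text, such as `104flowrs.tar`. `scene_library_url` and `extract_archive` are hypothetical helpers, not PaddleClas APIs.

```python
import tarfile
from pathlib import Path

# Assumed common prefix of the scene-library archives listed in the table.
BASE_URL = (
    "https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/data/"
    "PP-ShiTuV2_application_dataset/"
)


def scene_library_url(archive_name):
    """Build the download URL for an archive name such as 'AnimalImageDataset'."""
    return f"{BASE_URL}{archive_name}.tar"


def extract_archive(tar_path, dest_dir="."):
    """Python counterpart of `tar -xf <archive>`; returns the member names it extracted."""
    with tarfile.open(tar_path) as tar:
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions can reject unsafe members (absolute paths, "..").
            tar.extractall(dest_dir, filter="data")
        else:
            tar.extractall(dest_dir)
    return names
```

For example, from inside `deploy/datasets`, `urllib.request.urlretrieve(scene_library_url("AnimalImageDataset"), "AnimalImageDataset.tar")` followed by `extract_archive("AnimalImageDataset.tar")` mirrors the shell command.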

+Taking `dataset_name` as an example, after extraction the `datasets/dataset_name` folder should have the following structure:

+```
+├── dataset_name/
+│   ├── Gallery/
+│   ├── Index/
+│   ├── Query/
+│   ├── gallery_list.txt
+│   ├── query_list.txt
+│   ├── label_list.txt
+├── ...
+```

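+Here, the `Gallery` folder holds the original images used to build the index, `Index` holds the index built from those images, the `Query` folder holds the images used for retrieval, `gallery_list.txt` and `query_list.txt` are the label files of the gallery images and the query images respectively, and `label_list.txt` maps the labels between Chinese and English (note: the logo scene library does not include this mapping file).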
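After extraction, a small check script can confirm that a library matches this layout before you point the configuration at it. A sketch; `missing_entries` is a hypothetical helper, and `require_label_list=False` covers the logo library, which ships without `label_list.txt`.

```python
from pathlib import Path

# Entries listed in the dataset_name/ tree above.
EXPECTED_DIRS = ("Gallery", "Index", "Query")
EXPECTED_FILES = ("gallery_list.txt", "query_list.txt", "label_list.txt")


def missing_entries(dataset_dir, require_label_list=True):
    """Return the expected folders/files that are absent from an extracted scene library."""
    root = Path(dataset_dir)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    files = EXPECTED_FILES if require_label_list else EXPECTED_FILES[:2]
    missing += [f for f in files if not (root / f).is_file()]
    return missing
```

Run from `deploy/`, `missing_entries("datasets/AnimalImageDataset")` should return an empty list once the animal library has been extracted.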

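+### 2.3 Prepare the Models

+Create the folder `deploy/models` to hold the models, then download the lightweight mainbody detection and recognition models with the following commands: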

+```shell

+cd ..

+mkdir models

+cd models

+

+# Download and extract the recognition inference model
+# wget {recognition model download URL} && tar -xf {recognition model archive name}
+wget https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/models/inference/PP-ShiTuV2/general_PPLCNetV2_base_pretrained_v1.0_infer.tar && tar -xf general_PPLCNetV2_base_pretrained_v1.0_infer.tar
+
+# Download and extract the detection inference model
+# wget {detection model download URL} && tar -xf {detection model archive name}
+wget https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/rec/models/inference/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_infer.tar && tar -xf picodet_PPLCNet_x2_5_mainbody_lite_v1.0_infer.tar

+```

+

+After extraction, the `models` folder has the following structure:

+```

+├── inference_model_name

+│ ├── inference.pdiparams

+│ ├── inference.pdiparams.info

+│ └── inference.pdmodel

+└── det_model_name

+ ├── inference.pdiparams

+ ├── inference.pdiparams.info

+ └── inference.pdmodel

+```
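Because `Global.det_inference_model_dir` and `Global.rec_inference_model_dir` point at directories, it helps to confirm that extraction produced folders containing the three files shown above, rather than only the `.tar` archives. A sketch with a hypothetical helper `complete_model_dirs`:

```python
from pathlib import Path

# Files each extracted inference model folder contains, per the tree above.
MODEL_FILES = ("inference.pdmodel", "inference.pdiparams", "inference.pdiparams.info")


def complete_model_dirs(models_root):
    """Return the names of sub-folders of models_root that hold all MODEL_FILES."""
    root = Path(models_root)
    if not root.is_dir():
        return []
    return sorted(
        p.name
        for p in root.iterdir()
        if p.is_dir() and all((p / name).is_file() for name in MODEL_FILES)
    )
```

Run from `deploy/`, `complete_model_dirs("models")` should list both extracted model folders; any folder missing from the result was not extracted completely.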

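+### 2.4 Recognition and Retrieval on a Scene Library

+The following uses the animal recognition scene as an example to show the recognition and retrieval process. To try another scene library, download and extract its data and the models, then update the configuration file accordingly to run prediction.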

+

+Note: this section uses `faiss` as the retrieval library. Install it as follows:

+```shell

+pip install faiss-cpu==1.7.1post2

+```

+

+If `faiss` cannot be imported after installation, uninstall it and install it again; this happens especially on Windows.

+#### 2.4.1 Recognize a Single Image

+Suppose you want to test recognition and retrieval on the image `./datasets/AnimalImageDataset/Query/羚羊/0a37838e99.jpg`.

+

+First, in the configuration file `./configs/inference_general.yaml`, set `Global.det_inference_model_dir` and `Global.rec_inference_model_dir` to the detection and recognition model folders, and set the test image path `Global.infer_imgs`, for example:

+

+```yaml
+Global:
+  infer_imgs: './datasets/AnimalImageDataset/Query/羚羊/0a37838e99.jpg'
+  det_inference_model_dir: './models/picodet_PPLCNet_x2_5_mainbody_lite_v1.0_infer'
+  rec_inference_model_dir: './models/general_PPLCNetV2_base_pretrained_v1.0_infer'
+```

+

+Then set `IndexProcess.index_dir` in `./configs/inference_general.yaml` to the index directory of the scene library:

+

+```yaml
+IndexProcess:
+  index_dir: './datasets/AnimalImageDataset/Index/'
+```

+Run the following commands to perform recognition and retrieval on the image `./datasets/AnimalImageDataset/Query/羚羊/0a37838e99.jpg`:

+

+```shell

+# Predict on GPU
+python3.7 python/predict_system.py -c configs/inference_general.yaml
+
+# Predict on CPU
+python3.7 python/predict_system.py -c configs/inference_general.yaml -o Global.use_gpu=False

+```

+

+The output is as follows:

+```

+[{'bbox': [609, 70, 1079, 629], 'rec_docs': '羚羊', 'rec_scores': 0.6571544}]

+```

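+Here, `bbox` is the location of the detected main object, `rec_docs` is the category in the index that is most similar to the detected region, and `rec_scores` is the corresponding confidence.

+The visualized recognition result for this image is also saved in the `output` folder.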
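Each printed result line is a Python-literal list of dicts, so it can be post-processed safely with `ast.literal_eval` (no `eval`). A sketch; the 0.5 threshold and reading `bbox` as `[x_min, y_min, x_max, y_max]` are assumptions, and both helper names are hypothetical.

```python
import ast


def parse_result_line(line):
    """Parse one printed result line, a Python-literal list of dicts, without eval()."""
    return ast.literal_eval(line.strip())


def confident_hits(results, min_score=0.5):
    """Keep (label, bbox, score) for detections whose rec_scores reaches min_score."""
    return [
        (r["rec_docs"], r["bbox"], r["rec_scores"])
        for r in results
        if r["rec_scores"] >= min_score
    ]


line = "[{'bbox': [609, 70, 1079, 629], 'rec_docs': '羚羊', 'rec_scores': 0.6571544}]"
results = parse_result_line(line)
x1, y1, x2, y2 = results[0]["bbox"]  # assumed [x_min, y_min, x_max, y_max]
print(confident_hits(results), "box size:", (x2 - x1, y2 - y1))
```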

+#### 2.4.2 Batch Recognition on a Folder

+

+To predict all images in a folder, either edit `Global.infer_imgs` in the configuration file directly or override it with the `-o` argument as follows:

+

+```shell

+# Predict on GPU; to predict on CPU, append -o Global.use_gpu=False to the command

+python3.7 python/predict_system.py -c configs/inference_general.yaml -o Global.infer_imgs="./datasets/AnimalImageDataset/Query/羚羊"

+```

+The terminal prints the recognition results of all images in the folder, as follows:

+```

+...

+[{'bbox': [0, 0, 1200, 675], 'rec_docs': '羚羊', 'rec_scores': 0.6153812}]

+[{'bbox': [0, 0, 275, 183], 'rec_docs': '羚羊', 'rec_scores': 0.77218026}]

+[{'bbox': [264, 79, 1088, 850], 'rec_docs': '羚羊', 'rec_scores': 0.81452656}]

+[{'bbox': [0, 0, 188, 268], 'rec_docs': '羚羊', 'rec_scores': 0.637074}]

+[{'bbox': [118, 41, 235, 161], 'rec_docs': '羚羊', 'rec_scores': 0.67315465}]

+[{'bbox': [0, 0, 175, 287], 'rec_docs': '羚羊', 'rec_scores': 0.68271667}]

+[{'bbox': [0, 0, 310, 163], 'rec_docs': '羚羊', 'rec_scores': 0.6706451}]

+...

+```

+The visualized recognition results of all images are also saved in the `output` folder.
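Since every query image in this folder belongs to 羚羊 (antelope), the printed lines can be summarized to see how many predictions agree with the folder name. A sketch assuming you have saved the terminal output as text; `summarize` is a hypothetical helper, and a line with several detections counts each detection.

```python
import ast
from collections import Counter


def summarize(lines, expected_label):
    """Aggregate printed result lines: label counts, share equal to expected_label, mean score."""
    counts = Counter()
    scores = []
    for line in lines:
        line = line.strip()
        if not line.startswith("["):
            continue  # skip "..." and any other non-result log lines
        for det in ast.literal_eval(line):
            counts[det["rec_docs"]] += 1
            scores.append(det["rec_scores"])
    total = sum(counts.values())
    hit_rate = counts[expected_label] / total if total else 0.0
    mean_score = sum(scores) / len(scores) if scores else 0.0
    return counts, hit_rate, mean_score


sample = """...
[{'bbox': [0, 0, 1200, 675], 'rec_docs': '羚羊', 'rec_scores': 0.6153812}]
[{'bbox': [0, 0, 275, 183], 'rec_docs': '羚羊', 'rec_scores': 0.77218026}]
..."""
print(summarize(sample.splitlines(), expected_label="羚羊"))
```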

diff --git a/docs/zh_CN/models/LeViT.md b/docs/zh_CN/models/LeViT.md

index 5f0e480047adc612850fdd1be9e8de8e978e898e..d8aaa744b4c7312d8c6a5c186ae48a0e671171c2 100644

--- a/docs/zh_CN/models/LeViT.md

+++ b/docs/zh_CN/models/LeViT.md

@@ -18,7 +18,7 @@ LeViT is a fast-inference hybrid neural network for image classification tasks

| Models | Top1 | Top5 | Reference


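-To try the complete image recognition system, or to see how to build the feature index, refer to the [image recognition quick start](../quick_start/quick_start_recognition.md), which mainly explains how to use the overall pipeline. The following content mainly introduces the training part of the three steps above.

+To try the complete image recognition system on Android or PC, or to see how to build the feature index, refer to the [image recognition quick start](../quick_start/quick_start_recognition.md).

-First, configure the runtime environment by following the [installation guide](../installation/install_paddleclas.md).

+The following sections introduce the training part of the three steps above.

-## Contents

+Before training, configure the runtime environment by following the [installation guide](../installation/install_paddleclas.md).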

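-- [1. Mainbody Detection](#1)
-- [2. Feature Model Training](#2)
-  - [2.1. Feature Model Data Preparation and Processing](#2.1)
-  - [2. 2 Feature Model Training and Evaluation on a Single GPU](#2.2)
-    - [2.2.1 Feature Model Training](#2.2.2)
-    - [2.2.2 Resuming Feature Model Training](#2.2.2)
-    - [2.2.3 Feature Model Evaluation](#2.2.3)
-  - [2.3 Exporting the Feature Model as an Inference Model](#2.3)
-- [3. Feature Retrieval](#3)
-- [4. Basics](#4)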

+## Contents

-

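+- [1. Mainbody Detection](#1-mainbody-detection)
+- [2. Feature Extraction Model Training](#2-feature-extraction-model-training)
+  - [2.1 Feature Extraction Model Data Preparation and Processing](#21-feature-extraction-model-data-preparation-and-processing)
+  - [2.2 Training and Evaluating the Feature Extraction Model on GPU](#22-training-and-evaluating-the-feature-extraction-model-on-gpu)
+    - [2.2.1 Feature Extraction Model Training](#221-feature-extraction-model-training)
+    - [2.2.2 Resuming Feature Extraction Model Training](#222-resuming-feature-extraction-model-training)
+    - [2.2.3 Feature Extraction Model Evaluation](#223-特征提取模型评估)
+  - [2.3 Exporting the Feature Extraction Model as an Inference Model](#23-特征提取模型导出-inference-模型)
+- [3. Feature Retrieval](#3-特征检索)
+- [4. Basics](#4-基础知识)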

+

+

## 1. Mainbody Detection

@@ -38,142 +43,143 @@

[{u'id': 1, u'name': u'foreground', u'supercategory': u'foreground'}]

```
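The printed `categories` field shows a single `foreground` class with id 1. If you start from a multi-class COCO-format dataset, one way to get there is to remap every annotation to that class. A sketch assuming standard COCO keys (`annotations`, `category_id`, `categories`); `to_foreground` and `convert_file` are hypothetical helpers, not PaddleClas or PaddleDetection scripts.

```python
import json

FOREGROUND = {"id": 1, "name": "foreground", "supercategory": "foreground"}


def to_foreground(coco):
    """Return a copy of a COCO-style dict in which every box belongs to one 'foreground' class."""
    converted = dict(coco)
    converted["annotations"] = [
        dict(ann, category_id=FOREGROUND["id"]) for ann in coco.get("annotations", [])
    ]
    converted["categories"] = [dict(FOREGROUND)]
    return converted


def convert_file(src_json, dst_json):
    """Read a COCO annotation file, remap it to foreground, and write the result."""
    with open(src_json, encoding="utf-8") as f:
        coco = json.load(f)
    with open(dst_json, "w", encoding="utf-8") as f:
        json.dump(to_foreground(coco), f)
```

The input dict is not modified, so the original annotations remain available for comparison.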

-For how to train the mainbody detection model, refer to the [PaddleDetection training tutorial](https://github.com/PaddlePaddle/PaddleDetection/blob/develop/docs/tutorials/GETTING_STARTED_cn.md#4-%E8%AE%AD%E7%BB%83).

+For how to build the mainbody detection dataset and train the model, refer to [Get Started with PaddleDetection in 30 Minutes](https://github.com/PaddlePaddle/PaddleDetection/blob/develop/docs/tutorials/GETTING_STARTED_cn.md#30%E5%88%86%E9%92%9F%E5%BF%AB%E9%80%9F%E4%B8%8A%E6%89%8Bpaddledetection).

For more about the mainbody detection models provided in PaddleClas and how to download them, see the [mainbody detection tutorial](../image_recognition_pipeline/mainbody_detection.md).

-

+

-## 2. Feature Model Training

+## 2. Feature Extraction Model Training

-

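+To quickly try the PaddleClas image retrieval module, the following walks through training a feature extraction model on the classic 200-class fine-grained bird dataset [CUB_200_2011](http://vision.ucsd.edu/sites/default/files/WelinderEtal10_CUB-200.pdf). For how to download CUB_200_2011, see the [CUB_200_2011 official website](https://www.vision.caltech.edu/datasets/cub_200_2011/).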

-### 2.1 Feature Model Data Preparation and Processing

+

-* Enter the `PaddleClas` directory.

+### 2.1 Feature Extraction Model Data Preparation and Processing

-```bash

-## linux or mac; $path_to_PaddleClas is the PaddleClas root directory, replace it with your actual path

-cd $path_to_PaddleClas

-```

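+* Enter the `PaddleClas` directory

-* Enter the `dataset` directory. To quickly try the PaddleClas image retrieval module, the dataset used here is [CUB_200_2011](http://vision.ucsd.edu/sites/default/files/WelinderEtal10_CUB-200.pdf), a fine-grained dataset of 200 bird classes. First, download the CUB_200_2011 dataset; for how to download it, see the [official website](http://www.vision.caltech.edu/visipedia/CUB-200-2011.html).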

+ ```shell

+ cd PaddleClas

+ ```

-```shell

-# linux or mac

-cd dataset

+* Enter the `dataset` directory

-# Copy the downloaded data into this directory

-cp {数据存放的路径}/CUB_200_2011.tgz .

+ ```shell

+ # Enter the dataset directory

+ cd dataset

-# Extract

-tar -xzvf CUB_200_2011.tgz

+ # Copy the downloaded data into the dataset directory

+ cp {数据存放的路径}/CUB_200_2011.tgz ./

-# Enter the CUB_200_2011 directory

-cd CUB_200_2011

-```

+ # Extract the dataset

+ tar -xzvf CUB_200_2011.tgz

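-When this dataset is used for image retrieval, the first 100 classes are usually taken as the training set and the last 100 classes as the test set, so the downloaded dataset needs some post-processing to better fit PaddleClas image retrieval training.

+ # Enter the CUB_200_2011 directory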

+ cd CUB_200_2011

+ ```

-```shell

-# Create the train and test directories

-mkdir train && mkdir test

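+* When this dataset is used for image retrieval, the first 100 classes are usually taken as the training set and the last 100 classes as the test set, so the downloaded dataset needs some post-processing to better fit PaddleClas image retrieval training.

-# Split the data: the first 100 classes form the training set and the last 100 classes form the test set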

-ls images | awk -F "." '{if(int($1)<101)print "mv images/"$0" train/"int($1)}' | sh

-ls images | awk -F "." '{if(int($1)>100)print "mv images/"$0" test/"int($1)}' | sh

+ ```shell

+ # Create the train and test directories

+ mkdir train

+ mkdir test

-# Generate train_list and test_list

-tree -r -i -f train | grep jpg | awk -F "/" '{print $0" "int($2) " "NR}' > train_list.txt

-tree -r -i -f test | grep jpg | awk -F "/" '{print $0" "int($2) " "NR}' > test_list.txt

-```

-

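-You now have the `CUB_200_2011` training set (`train` directory), test set (`test` directory), `train_list.txt`, and `test_list.txt`.

+ # Split the data: the first 100 classes form the training set and the last 100 classes form the test set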

+ ls images | awk -F "." '{if(int($1)<101)print "mv images/"$0" train/"int($1)}' | sh

+ ls images | awk -F "." '{if(int($1)>100)print "mv images/"$0" test/"int($1)}' | sh

-After processing, the `train` directory in `CUB_200_2011` should have the following structure:

+ # Generate train_list and test_list

+ tree -r -i -f train | grep jpg | awk -F "/" '{print $0" "int($2) " "NR}' > train_list.txt

+ tree -r -i -f test | grep jpg | awk -F "/" '{print $0" "int($2) " "NR}' > test_list.txt

+ ```

-```

-├── 1

-│ ├── Black_Footed_Albatross_0001_796111.jpg

-│ ├── Black_Footed_Albatross_0002_55.jpg

- ...

-├── 10

-│ ├── Red_Winged_Blackbird_0001_3695.jpg

-│ ├── Red_Winged_Blackbird_0005_5636.jpg

-...

-```

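+ You now have the `CUB_200_2011` training set (`train` directory), test set (`test` directory), `train_list.txt`, and `test_list.txt`.

-`train_list.txt` should look like this:

+ After processing, the `train` directory in `CUB_200_2011` should have the following structure: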

-```

-train/99/Ovenbird_0137_92639.jpg 99 1

-train/99/Ovenbird_0136_92859.jpg 99 2

-train/99/Ovenbird_0135_93168.jpg 99 3

-train/99/Ovenbird_0131_92559.jpg 99 4

-train/99/Ovenbird_0130_92452.jpg 99 5

-...

-```

-The separator is a space " ", and the three columns are the path of the training image, its label, and its unique id.

+ ```

+ CUB_200_2011/train/

+ ├── 1

+ │ ├── Black_Footed_Albatross_0001_796111.jpg

+ │ ├── Black_Footed_Albatross_0002_55.jpg

+ ...

+ ├── 10

+ │ ├── Red_Winged_Blackbird_0001_3695.jpg

+ │ ├── Red_Winged_Blackbird_0005_5636.jpg

+ ...

+ ```

-The test set has the same format as the training set.

+ `train_list.txt` should look like this:

-**Note**:

+ ```

+ train/99/Ovenbird_0137_92639.jpg 99 1

+ train/99/Ovenbird_0136_92859.jpg 99 2

+ train/99/Ovenbird_0135_93168.jpg 99 3

+ train/99/Ovenbird_0131_92559.jpg 99 4

+ train/99/Ovenbird_0130_92452.jpg 99 5

+ ...

+ ```

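+ The separator is a space `" "`, and the three columns are `the relative path of the training image`, `the label of the training image`, and `the unique id of the training image`. The test set has the same format as the training set.

-* When the gallery dataset and the query dataset are the same, each image needs a unique id so that the first retrieved result (the query image itself, which does not need to be evaluated) can be removed; this id is used later to evaluate metrics such as mAP and recall@1. For the gallery and query datasets, see [Image Retrieval Datasets](#图像检索数据集介绍); for mAP, recall@1 and other metrics, see [Image Retrieval Metrics](#图像检索评价指标).

+* After the lists are built, return to the `PaddleClas` root directory

-Return to the `PaddleClas` root directory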

+ ```shell

+ # linux or mac

+ cd ../../

+ ```

-```shell

-# linux or mac

-cd ../../

-```
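On systems without `awk`, `tree`, or `sh` (for example, Windows), the same split and list files can be produced in Python. A sketch under these assumptions: class folders under `images` are named like `001.Black_footed_Albatross`, images end in `.jpg`, and files are listed in ascending order, whereas `tree -r` lists them in reverse, so the unique ids are numbered differently while the `path label id` format stays the same. `split_classes` and `write_list` are hypothetical helpers.

```python
import shutil
from pathlib import Path


def split_classes(images_dir, out_dir, first_test_class=101):
    """Move folders like '001.Black_footed_Albatross' to out_dir/train/1 or out_dir/test/101.

    Mirrors the awk commands: the number before the first '.' decides the subset.
    """
    for class_dir in sorted(Path(images_dir).iterdir()):  # list first, then move
        class_id = int(class_dir.name.split(".")[0])
        subset = "train" if class_id < first_test_class else "test"
        dest = Path(out_dir, subset, str(class_id))
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(class_dir), str(dest))


def write_list(root_dir, subset, list_path):
    """Write '<relative path> <label> <unique id>' lines for root_dir/subset; ids start at 1."""
    lines = []
    class_dirs = sorted(Path(root_dir, subset).iterdir(), key=lambda p: int(p.name))
    for class_dir in class_dirs:
        for img in sorted(class_dir.glob("*.jpg")):
            rel_path = f"{subset}/{class_dir.name}/{img.name}"
            lines.append(f"{rel_path} {int(class_dir.name)} {len(lines) + 1}")
    Path(list_path).write_text("\n".join(lines) + "\n")
    return lines
```

Run from `dataset/CUB_200_2011`: `split_classes("images", ".")`, then `write_list(".", "train", "train_list.txt")` and `write_list(".", "test", "test_list.txt")`.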

+**Note**:

-

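+* When the gallery dataset and the query dataset are the same, each image needs a unique id (usually natural numbers starting from 1, such as 1, 2, 3, ...) so that the first retrieved result, the query image itself, can be removed (a query image must not be matched against its own copy in the gallery). The id is used later to evaluate metrics such as `mAP` and `recall@1`. For the gallery and query datasets, see [Image Retrieval Datasets](#图像检索数据集介绍); for `mAP`, `recall@1` and other metrics, see [Image Retrieval Metrics](#图像检索评价指标).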
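To see why the unique id matters, the sketch below computes recall@1 by brute force when the query set and the gallery set are the same list. With the id check, an image cannot retrieve itself; without it, recall@1 is inflated. The features are made up and the dot-product similarity is an assumption; this illustrates the metric, not PaddleClas's evaluation code.

```python
def dot(a, b):
    """Similarity used here: plain dot product of two feature vectors."""
    return sum(x * y for x, y in zip(a, b))


def recall_at_1(query, gallery, similarity, exclude_self=True):
    """Share of queries whose most similar gallery item has the same label.

    query and gallery are lists of (unique_id, label, feature). With
    exclude_self=True, a gallery item carrying the query's own unique id is skipped.
    """
    hits = 0
    for q_id, q_label, q_feat in query:
        best = None
        for g_id, g_label, g_feat in gallery:
            if exclude_self and g_id == q_id:
                continue
            score = similarity(q_feat, g_feat)
            if best is None or score > best[0]:
                best = (score, g_label)
        if best is not None and best[1] == q_label:
            hits += 1
    return hits / len(query)


# Made-up features: ids 1 and 2 share label A, id 3 has label B.
data = [(1, "A", [1.0, 0.0]), (2, "A", [0.9, 0.1]), (3, "B", [0.0, 1.0])]
print(recall_at_1(data, data, dot))                      # self-matches removed
print(recall_at_1(data, data, dot, exclude_self=False))  # self-matches allowed: inflated
```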

-### 2.2 Feature Model Training and Evaluation on GPU

+

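-For training and evaluation on a single GPU, the `tools/train.py` and `tools/eval.py` scripts are recommended.

+### 2.2 Training and Evaluating the Feature Extraction Model on GPU

-PaddleClas supports visualizing the training process with VisualDL. VisualDL is PaddlePaddle's visual analysis tool; it presents trends of training parameters, model structure, data samples, high-dimensional data distributions and more in rich charts, helping users understand the training process and model structure more clearly and optimize models efficiently. For more details, see [VisualDL](../others/VisualDL.md).

+The following uses the MobileNetV1 model as an example to show how to train and evaluate the feature extraction model on GPU.

-#### 2.2.1 Feature Model Training

+#### 2.2.1 Feature Extraction Model Training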

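After preparing the configuration file, start training the image retrieval task as follows. PaddleClas trains image retrieval models with metric learning; for an explanation of metric learning, see [Metric Learning](#度量学习).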

```shell

# Single GPU

-python3 tools/train.py \

- -c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

- -o Arch.Backbone.pretrained=True \

- -o Global.device=gpu

+python3.7 tools/train.py \

+-c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

+-o Arch.Backbone.pretrained=True \

+-o Global.device=gpu

+

# Multiple GPUs

export CUDA_VISIBLE_DEVICES=0,1,2,3

-python3 -m paddle.distributed.launch tools/train.py \

- -c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

- -o Arch.Backbone.pretrained=True \

- -o Global.device=gpu

+python3.7 -m paddle.distributed.launch tools/train.py \

+-c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

+-o Arch.Backbone.pretrained=True \

+-o Global.device=gpu

```

-其中,`-c` 用于指定配置文件的路径,`-o` 用于指定需要修改或者添加的参数,其中 `-o Arch.Backbone.pretrained=True` 表示 Backbone 部分使用预训练模型,此外,`Arch.Backbone.pretrained` 也可以指定具体的模型权重文件的地址,使用时需要换成自己的预训练模型权重文件的路径。`-o Global.device=gpu` 表示使用 GPU 进行训练。如果希望使用 CPU 进行训练,则需要将 `Global.device` 设置为 `cpu`。

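+**Note**: `-c` specifies the path of the configuration file, and `-o` specifies parameters to modify or add. `-o Arch.Backbone.pretrained=True` means the Backbone loads pretrained weights before training starts. `-o Arch.Backbone.pretrained` can also be set to the path of a weight file; just replace it with the path of your own pretrained weights. `-o Global.device=gpu` means training on GPU. To train on CPU, set `-o Global.device=cpu`.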
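Conceptually, each `-o Key.SubKey=value` pair overrides, or adds, one entry of the nested YAML configuration. The sketch below illustrates that idea on a plain Python dict. `apply_override` is a hypothetical helper written for illustration only. PaddleClas's own override parser may behave differently, for example in how it converts value types.

```python
import ast


def apply_override(config, override):
    """Apply one 'A.B.C=value' override to a nested dict in place."""
    key_path, raw_value = override.split("=", 1)
    keys = key_path.split(".")
    try:
        # Interpret Python literals such as True, 0.1 or [1, 2].
        value = ast.literal_eval(raw_value)
    except (ValueError, SyntaxError):
        # Anything else (e.g. gpu, ./path/to/weights) stays a string.
        value = raw_value
    node = config
    for key in keys[:-1]:
        node = node.setdefault(key, {})  # create missing levels ("add")
    node[keys[-1]] = value              # replace or add the leaf ("modify")
    return config


config = {"Arch": {"Backbone": {"name": "MobileNetV1", "pretrained": False}},
          "Global": {"device": "gpu"}}
apply_override(config, "Arch.Backbone.pretrained=True")
apply_override(config, "Global.device=cpu")
print(config["Arch"]["Backbone"]["pretrained"], config["Global"]["device"])
```

The same mechanism explains why `Arch.Backbone.pretrained` can hold either a boolean or a weight-file path: the value is simply stored at that key.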

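For more detailed training settings, you can also edit the model's configuration file directly. See the [configuration documentation](config_description.md) for the available parameters.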

-After running the commands above, you will see output logs such as:

+After running the training commands above, you will see output logs such as:

- ```

- ...

- [Train][Epoch 1/50][Avg]CELoss: 6.59110, TripletLossV2: 0.54044, loss: 7.13154

- ...

- [Eval][Epoch 1][Avg]recall1: 0.46962, recall5: 0.75608, mAP: 0.21238

- ...

- ```

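-The Backbone in this configuration file is MobileNetV1. To use a different Backbone, override `Arch.Backbone.name`, e.g. by adding `-o Arch.Backbone.name={other Backbone}` to the command. The input dimension of the `Neck` differs between models, so after switching the Backbone you may also need to change the input size here, in the same way as the Backbone name is replaced.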

+ ```log

+ ...

+ [Train][Epoch 1/50][Avg]CELoss: 6.59110, TripletLossV2: 0.54044, loss: 7.13154

+ ...

+ [Eval][Epoch 1][Avg]recall1: 0.46962, recall5: 0.75608, mAP: 0.21238

+ ...

+ ```

+

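+The Backbone in this configuration file is MobileNetV1. To use a different Backbone, override `Arch.Backbone.name`, e.g. by adding `-o Arch.Backbone.name={name of the other Backbone}` to the command. The input dimension of the `Neck` differs between models, so after switching the Backbone you may also need to change the input size of the `Neck`, in the same way as the Backbone name is replaced.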

For the training loss, [CELoss](../../../ppcls/loss/celoss.py) and [TripletLossV2](../../../ppcls/loss/triplet.py) are used, configured as follows:

-```

+```yaml

Loss:

Train:

- CELoss:

@@ -183,110 +189,113 @@ Loss:

margin: 0.5

```

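-The final total loss is the weighted sum of all losses, where `weight` sets the weight of each loss in the total. To use other losses, change the `Loss` field in the configuration file; for the currently supported losses, see [Loss](../../../ppcls/loss).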

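+The final total loss is the weighted sum of all losses, where `weight` sets the weight of each loss in the total. To use other losses, change the `Loss` field in the configuration file; for the currently supported losses, see [Loss](../../../ppcls/loss/__init__.py).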
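As a worked example of the weighted sum, the sketch below assumes both losses use `weight: 1.0`. The weight lines are elided from the configuration excerpt above, so this is an assumption, but it is consistent with the numbers in the sample training log. Under that assumption, the logged total `loss` is just the sum of the two per-loss values.

```python
def total_loss(losses, weights):
    """Weighted sum of individual loss values."""
    return sum(weights[name] * value for name, value in losses.items())


# Per-loss values copied from the sample training log above.
losses = {"CELoss": 6.59110, "TripletLossV2": 0.54044}
weights = {"CELoss": 1.0, "TripletLossV2": 1.0}  # assumed weights
print(round(total_loss(losses, weights), 5))  # 7.13154, matching "loss: 7.13154"
```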

-#### 2.2.2 Resuming feature model training

+#### 2.2.2 Resuming feature extraction model training

-If the training job is interrupted for any reason, you can load the checkpoint weights and resume training:

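+If the training job is interrupted for any reason and weight files were saved during training, you can load the checkpoint weights and resume training: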

```shell

-# Single GPU

-python3 tools/train.py \

- -c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

- -o Global.checkpoints="./output/RecModel/epoch_5" \

- -o Global.device=gpu

-# Multiple GPUs

+# Resume training on a single GPU

+python3.7 tools/train.py \

+-c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

+-o Global.checkpoints="./output/RecModel/epoch_5" \

+-o Global.device=gpu

+

+# Resume training on multiple GPUs

export CUDA_VISIBLE_DEVICES=0,1,2,3

-python3 -m paddle.distributed.launch tools/train.py \

- -c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

- -o Global.checkpoints="./output/RecModel/epoch_5" \

- -o Global.device=gpu

+python3.7 -m paddle.distributed.launch tools/train.py \

+-c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

+-o Global.checkpoints="./output/RecModel/epoch_5" \

+-o Global.device=gpu

```

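The configuration file does not need any changes; just set `Global.checkpoints` when resuming. It specifies the path of the checkpoint to load, and it loads the saved weights together with the learning rate, optimizer state, and other saved information.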

**Note**:

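-* The `-o Global.checkpoints` value does not need to include the checkpoint file suffix. The training command above produces checkpoint files like those shown below. To resume from checkpoint `5`, set `Global.checkpoints` to `"./output/RecModel/epoch_5"`; PaddleClas adds the suffix automatically.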

-

- ```shell

- output/

- └── RecModel

- ├── best_model.pdopt

- ├── best_model.pdparams

- ├── best_model.pdstates

- ├── epoch_1.pdopt

- ├── epoch_1.pdparams

- ├── epoch_1.pdstates

- .

- .

- .

- ```

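+* The value after `-o Global.checkpoints` does not need to include the checkpoint file suffix. The training command above produces checkpoint files like those shown below. To resume from checkpoint `epoch_5`, set `Global.checkpoints` to `"./output/RecModel/epoch_5"`; PaddleClas adds the suffix automatically.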

+

+ The directory containing `epoch_5.pdparams` looks like this:

+

+ ```log

+ output/

+ └── RecModel

+ ├── best_model.pdopt

+ ├── best_model.pdparams

+ ├── best_model.pdstates

+ ├── epoch_5.pdopt

+ ├── epoch_5.pdparams

+ ├── epoch_5.pdstates

+ .

+ .

+ .

+ ```
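Because `Global.checkpoints` is a path prefix, you can check that a resume point is complete before launching a job. The sketch below uses a hypothetical helper, `checkpoint_files`, to list the three files shown in the directory listing above for a prefix such as `./output/RecModel/epoch_5` and report any that are missing. It only inspects the file system; it does not load anything with PaddlePaddle.

```python
import os

# Suffixes taken from the checkpoint listing above.
CHECKPOINT_SUFFIXES = (".pdparams", ".pdopt", ".pdstates")


def checkpoint_files(prefix):
    """Return {suffix: exists} for the files behind a checkpoint prefix."""
    return {suffix: os.path.isfile(prefix + suffix) for suffix in CHECKPOINT_SUFFIXES}


if __name__ == "__main__":
    status = checkpoint_files("./output/RecModel/epoch_5")
    missing = [suffix for suffix, ok in status.items() if not ok]
    print("missing:", missing or "none")
```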

-#### 2.2.3 Feature model evaluation

+#### 2.2.3 Feature extraction model evaluation

-Evaluate the model with the following commands.

+Evaluate a specified model with the following commands.

```bash

-# Single GPU

-python3 tools/eval.py \

- -c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

- -o Global.pretrained_model=./output/RecModel/best_model

-# Multiple GPUs

+# Evaluate on a single GPU

+python3.7 tools/eval.py \

+-c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

+-o Global.pretrained_model=./output/RecModel/best_model

+

+# Evaluate on multiple GPUs

export CUDA_VISIBLE_DEVICES=0,1,2,3

-python3 -m paddle.distributed.launch tools/eval.py \

- -c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

- -o Global.pretrained_model=./output/RecModel/best_model

+python3.7 -m paddle.distributed.launch tools/eval.py \

+-c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

+-o Global.pretrained_model=./output/RecModel/best_model

```

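-The commands above use `./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml` as the configuration file to evaluate the trained model `./output/RecModel/best_model`. You can configure evaluation by changing parameters in the configuration file, or override them with `-o` as shown above.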

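+The commands above use `./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml` as the configuration file to evaluate the trained model `./output/RecModel/best_model.pdparams`. You can configure evaluation by changing parameters in the configuration file, or override them with `-o` as shown above.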

Some of the configurable evaluation parameters are described below:

-* `Arch.name`: model name

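* `Global.pretrained_model`: path of the weights of the model to evaluate. Unlike `Arch.Backbone.pretrained`, these are the weights of the whole model, whereas `Arch.Backbone.pretrained` covers only the Backbone. Evaluation requires loading the weights of the whole model.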

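-* `Metric.Eval`: the metrics to evaluate; recall@1, recall@5, and mAP are evaluated by default. To skip a metric, delete it from the configuration file. To add a metric, refer to [Metric](../../../ppcls/metric/metrics.py) and add it under `Metric.Eval` in the configuration file.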

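+* `Metric.Eval`: the metrics to evaluate; `recall@1`, `recall@5`, and `mAP` are evaluated by default. To skip a metric, delete it from the configuration file. To add a metric, refer to [Metric](../../../ppcls/metric/metrics.py) and add it under `Metric.Eval` in the configuration file.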

**Note:**

-* When loading the model to evaluate, give the path of the model file without its suffix; PaddleClas appends `.pdparams` automatically, as in [2.2.2 Resuming feature model training](#2.2.2).

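+* When loading the model to evaluate, give the path of the model file without its suffix; PaddleClas appends `.pdparams` automatically, as in [2.2.2 Resuming feature extraction model training](#2.2.2).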

-* Metric learning tasks usually do not evaluate TopkAcc.

+* Metric learning tasks usually do not evaluate the `TopkAcc` metric.

-### 2.3 Exporting the feature model as an inference model

+### 2.3 Exporting the feature extraction model as an inference model

After exporting an inference model, you can run inference with the PaddlePaddle inference engine. Convert the trained model as follows:

```bash

-python3 tools/export_model.py \

- -c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

- -o Global.pretrained_model=output/RecModel/best_model \

- -o Global.save_inference_dir=./inference

+python3.7 tools/export_model.py \

+-c ./ppcls/configs/quick_start/MobileNetV1_retrieval.yaml \

+-o Global.pretrained_model=output/RecModel/best_model \

+-o Global.save_inference_dir=./inference

```

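-Here, `Global.pretrained_model` specifies the model file path, again without the file suffix (as in [2.2.2 Resuming feature model training](#2.2.2)). Running the command creates a `./inference` directory in the current directory containing `inference.pdiparams`, `inference.pdiparams.info`, and `inference.pdmodel`. `Global.save_inference_dir` sets the path of the exported inference model. The inference model saved here is truncated at the embedding feature layer, i.e. its final output is an n-dimensional embedding feature.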

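+Here, `Global.pretrained_model` specifies the model file path, again without the file suffix (as in [2.2.2 Resuming feature extraction model training](#2.2.2)). Running the command creates a `./inference` directory in the current directory containing `inference.pdiparams`, `inference.pdiparams.info`, and `inference.pdmodel`. `Global.save_inference_dir` sets the path of the directory for the exported inference model. The inference model saved here is truncated at the embedding feature layer, i.e. its inference output is an n-dimensional feature.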

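-The command above generates the model structure file (`inference.pdmodel`) and the model weight file (`inference.pdiparams`), which can then be used for inference with the inference engine. For the inference workflow, see [Inference with the Python inference engine](../inference_deployment/python_deploy.md).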

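+With the model structure file (`inference.pdmodel`) and the model weight file (`inference.pdiparams`) generated by the command above, you can run inference with the inference engine. For the inference workflow, see [Inference with the Python inference engine](../inference_deployment/python_deploy.md).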

-

+

## 3. Feature retrieval

The image retrieval module of PaddleClas currently supports the following environments:

-```shell

-└── CPU/single GPU

- ├── Linux

- ├── MacOS

- └── Windows

-```

+| Operating system | Inference hardware |

+| :------- | :------- |

+| Linux | CPU/GPU |

+| Windows | CPU/GPU |

+| MacOS | CPU/GPU |

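-This part uses [Faiss](https://github.com/facebookresearch/faiss) as the retrieval library. Faiss is an efficient library for feature retrieval and clustering. It integrates a variety of similarity search algorithms for different retrieval scenarios. PaddleClas supports three retrieval algorithms: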

+

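+This part uses the third-party open-source library [Faiss](https://github.com/facebookresearch/faiss) as the retrieval tool. Faiss is an efficient library for feature retrieval and clustering. It integrates a variety of similarity search algorithms for different retrieval scenarios. PaddleClas currently supports three retrieval algorithms: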

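- **HNSW32**: a graph-based index with high retrieval accuracy and fast search. However, the feature index only supports adding images, not deleting image features. (Default method)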

- **IVF**: an inverted-file index. It is fast but slightly less accurate. The feature index supports both adding and deleting image features.

@@ -296,22 +305,27 @@ PaddleClas 图像检索部分目前支持的环境如下:

Install it as follows:

-```python

-pip install faiss-cpu==1.7.1post2

+```shell

+python3.7 -m pip install faiss-cpu==1.7.1post2

```

-If it cannot be imported properly, `uninstall` it and then `install` it again, especially on `windows`.

+If faiss does not work properly, uninstall it and then reinstall it with the following commands (this problem is more common on Windows).

+

+```shell

+python3.7 -m pip uninstall faiss-cpu

+python3.7 -m pip install faiss-cpu==1.7.1post2

+```

## 4. Basics

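-Given a query image that contains a specific instance (such as a specific object, scene, or item), image retrieval aims to find the database images that contain the same instance. Unlike image classification, image retrieval solves an open-set problem: the training set may not contain the categories of the images being recognized. The overall pipeline has three steps. First, represent each image as a suitable feature vector. Second, run a nearest-neighbor search on these feature vectors using Euclidean or cosine distance to find similar images in the gallery. Finally, optionally apply post-processing to refine the results and determine information such as the category of the recognized image. The performance of an image retrieval algorithm therefore depends mainly on the quality of the image feature vectors.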

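+Given a query image that contains a specific instance (such as a specific object, scene, or item), image retrieval aims to find the database images that contain the same instance. Unlike image classification, image retrieval solves an open-set problem: the training set may not contain the categories of the images to be recognized. The overall pipeline has three steps. First, represent each image as a suitable feature vector. Second, run a nearest-neighbor search over these feature vectors with a suitable distance function to find similar images in the database. Finally, optionally apply post-processing to further refine the results and obtain information such as the category and similarity of the image to be recognized. The performance of an image retrieval algorithm therefore depends mainly on how representative the extracted feature vectors are.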
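To make the second step concrete, here is a brute-force sketch of nearest-neighbor search with cosine similarity over L2-normalized feature vectors. It is plain Python for illustration only. In PaddleClas the search is delegated to Faiss (see Section 3). The function names, the toy 2-D features, and the labels are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a feature vector to unit length so that dot product = cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def search(query, gallery, top_k=1):
    """Return the top_k (similarity, gallery_index) pairs, most similar first."""
    q = l2_normalize(query)
    scores = []
    for idx, feat in enumerate(gallery):
        g = l2_normalize(feat)
        scores.append((sum(a * b for a, b in zip(q, g)), idx))
    scores.sort(reverse=True)
    return scores[:top_k]


gallery = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
labels = ["cola", "juice", "tea"]
sim, idx = search([0.9, 0.1], gallery, top_k=1)[0]
print(labels[idx], round(sim, 3))
```

Looking up the label of the best match, as the last lines do, is the simplest form of the "post-processing" step that turns a retrieval result into a recognition result.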

- Metric Learning

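-Metric learning studies how to learn a distance function for a specific task so that nearest-neighbor-based algorithms (kNN, k-means, etc.) perform well. Deep Metric Learning is one approach to metric learning. It learns a mapping from raw features to a low-dimensional dense vector space (the embedding space). In that space, common distance functions (Euclidean distance, cosine distance, etc.) should put objects of the same class close together and objects of different classes far apart. Deep metric learning has many successful computer vision applications, such as face recognition, product recognition, image retrieval, and person re-identification. For a more detailed introduction, see [this document](../algorithm_introduction/metric_learning.md).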

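+ Metric learning studies how to learn a distance function for a specific task so that nearest-neighbor-based algorithms (kNN, k-means, etc.) perform well. Deep Metric Learning is one approach to metric learning. It learns a mapping from raw features to a low-dimensional dense vector space (the embedding space). In that space, common distance functions (Euclidean distance, cosine distance, etc.) should put objects of the same class close together and objects of different classes far apart. Deep metric learning has many successful computer vision applications, such as face recognition, product recognition, image retrieval, and person re-identification. For a more detailed introduction, see [this document](../algorithm_introduction/metric_learning.md).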
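One common way to learn such an embedding is a triplet loss; the training configuration in Section 2.2.1 uses `TripletLossV2` with `margin: 0.5`. The sketch below shows only the textbook hinge form for a single (anchor, positive, negative) triplet with Euclidean distance. It is not the PaddleClas `TripletLossV2` implementation.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.5):
    """Hinge triplet loss: zero once the negative is at least `margin`
    farther from the anchor than the positive is."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


anchor, positive = [0.0, 0.0], [0.5, 0.0]  # same class, distance 0.5
print(triplet_loss(anchor, positive, [1.0, 0.0]))   # 0.0: negative at distance 1.0
print(triplet_loss(anchor, positive, [0.75, 0.0]))  # 0.25: negative only 0.75 away
```

Minimizing this loss pulls same-class embeddings together and pushes different-class embeddings apart until the margin is satisfied, which is exactly the property the paragraph above describes.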

@@ -319,19 +333,17 @@ pip install faiss-cpu==1.7.1post2

- Training set (train dataset): used to train the model so that it learns the image features of this set.

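- Gallery set (gallery dataset): provides the gallery data for the image retrieval task. It can be the same as the training set or the test set, or different from both. When it is the same as the training set, the test set should use the same category system as the training set.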

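- - Query set (query dataset): used to test how good the model is. Typically, features are extracted for each test image in the query set and matched by distance against the gallery features to obtain recognition results. Metrics for the whole query set are then computed from those results.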

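+ - Query set (query dataset): used to test the retrieval performance of the model. Typically, features are extracted for each test image in the query set and matched by distance against the gallery features to obtain retrieval results. The model's performance metrics over the whole query set are then computed from those results.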

- Image retrieval evaluation metrics

- Recall: number of samples predicted positive that are labeled positive / number of samples labeled positive

-

- - recall@1: number of samples predicted positive and labeled positive within the top-1 retrieval results / number of samples labeled positive

- - recall@5: number of samples predicted positive and labeled positive within the top-5 retrieval results / number of samples labeled positive

+ - `recall@k`: number of samples predicted positive and labeled positive within the top-k retrieval results / number of samples labeled positive

- Mean average precision (mAP)

- - AP: the average of the precision values at different recall levels

- - mAP: the mean of the AP values of all images in the query set

+ - `AP`: the average of the precision values at different recall levels

+ - `mAP`: the mean of the AP values of all images in the query set
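The following pure-Python sketch computes these metrics on toy data. It makes two assumptions. First, recall@k follows the convention common in retrieval benchmarks: the fraction of queries with at least one correct match among their top-k results. Second, AP averages the precision at each rank where a correct match appears. Check [Metric](../../../ppcls/metric/metrics.py) for the exact definitions PaddleClas uses. All function names here are hypothetical.

```python
def recall_at_k(ranked_labels, query_labels, k):
    """Fraction of queries with at least one correct match in the top k."""
    hits = sum(1 for ranked, q in zip(ranked_labels, query_labels) if q in ranked[:k])
    return hits / len(query_labels)


def average_precision(ranked, query_label):
    """AP of one query: mean precision at the ranks where a correct match appears."""
    correct, precisions = 0, []
    for rank, label in enumerate(ranked, start=1):
        if label == query_label:
            correct += 1
            precisions.append(correct / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(ranked_labels, query_labels):
    """mAP: mean of the per-query AP values."""
    aps = [average_precision(r, q) for r, q in zip(ranked_labels, query_labels)]
    return sum(aps) / len(aps)


# Labels of the gallery images returned for two queries, best match first.
ranked_labels = [["a", "b", "a"], ["c", "b", "b"]]
query_labels = ["a", "b"]
print(recall_at_k(ranked_labels, query_labels, 1))  # 0.5
print(recall_at_k(ranked_labels, query_labels, 5))  # 1.0
print(mean_average_precision(ranked_labels, query_labels))
```

Here the first query has AP = (1/1 + 2/3) / 2 and the second has AP = (1/2 + 2/3) / 2; mAP is the mean of the two.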

diff --git a/docs/zh_CN/others/update_history.md b/docs/zh_CN/others/update_history.md

index 5ea649e52a9d53eb3aab5b8b9322d1a87920fefa..0e88a51362d7d04db960c966db72a5ec3a0ee787 100644

--- a/docs/zh_CN/others/update_history.md

+++ b/docs/zh_CN/others/update_history.md

@@ -1,5 +1,6 @@

# Changelog

+- 2022.4.21 Added the [code](https://github.com/PaddlePaddle/PaddleClas/pull/1820/files) for the CVPR2022 oral paper [MixFormer](https://arxiv.org/pdf/2204.02557.pdf).

- 2021.11.1 Released the [PP-ShiTu technical report](https://arxiv.org/pdf/2111.00775.pdf) and added a drink recognition demo.

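- 2021.10.23 Released PP-ShiTu, a lightweight image recognition system that recognizes an image against a gallery of more than 100,000 images in 0.2s on CPU. [Click here](../quick_start/quick_start_recognition.md) to try it now.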

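- 2021.09.17 Released the PP-LCNet series of ultra-lightweight backbone models. On Intel CPUs, single-image prediction takes about 5ms, and Top-1 accuracy on ImageNet-1K reaches 80.82%, surpassing ResNet152. For an introduction to PP-LCNet, see the [paper](https://arxiv.org/pdf/2109.15099.pdf) or the [PP-LCNet model introduction](../models/PP-LCNet.md). Metrics and pretrained weights can be downloaded from [here](../algorithm_introduction/ImageNet_models.md).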

diff --git a/docs/zh_CN/quick_start/quick_start_recognition.md b/docs/zh_CN/quick_start/quick_start_recognition.md

index 38803ec9be510d3a4a96117fce3a1ccf537d3af9..550e455228f65b6886b13d8627413d4dd1387990 100644

--- a/docs/zh_CN/quick_start/quick_start_recognition.md

+++ b/docs/zh_CN/quick_start/quick_start_recognition.md

@@ -1,87 +1,131 @@

-# Image recognition quick start

+## Image recognition quick experience

-This document has 3 parts: environment setup, image recognition experience, and image recognition of unknown categories.

+This document has 2 parts: a quick experience of the PP-ShiTu Android demo and a quick experience of the PP-ShiTu PC demo.

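If the image category already exists in the image index library, follow the [Image recognition experience](#图像识别体验) section to complete image recognition. To recognize images of unknown categories, i.e. categories not yet in the index library, follow the [Image recognition of unknown categories](#未知类别的图像识别体验) section to build the index and run recognition.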

## Contents

-* [1. Environment setup](#环境配置)

-* [2. Image recognition experience](#图像识别体验)

- * [2.1 Download and extract the inference model and demo data](#2.1)

- * [2.2 Bottled drink recognition and retrieval](#瓶装饮料识别与检索)

- * [2.2.1 Recognize a single image](#识别单张图像)

- * [2.2.2 Batch recognition of a folder](#基于文件夹的批量识别)

-* [3. Image recognition of unknown categories](#未知类别的图像识别体验)

- * [3.1 Prepare new data and labels](#准备新的数据与标签)

- * [3.2 Build a new index library](#建立新的索引库)

- * [3.3 Image recognition with the new index library](#基于新的索引库的图像识别)

-* [4. Server-side recognition model list](#4)

+- [1. PP-ShiTu android demo quick experience](#1-pp-shitu-android-demo-快速体验)

+ - [1.1 Install the PP-ShiTu android demo](#11-安装-pp-shitu-android-demo)

+ - [1.2 Instructions](#12-操作说明)

+- [2. PP-ShiTu PC demo quick experience](#2-pp-shitu-pc端-demo-快速体验)

+ - [2.1 Environment setup](#21-环境配置)

+ - [2.2 Image recognition experience](#22-图像识别体验)

+ - [2.2.1 Download and extract the inference model and demo data](#221-下载解压-inference-模型与-demo-数据)

+ - [2.2.2 Bottled drink recognition and retrieval](#222-瓶装饮料识别与检索)

+ - [2.2.2.1 Recognize a single image](#2221-识别单张图像)

+ - [2.2.2.2 Batch recognition of a folder](#2222-基于文件夹的批量识别)

+ - [2.3 Image recognition of unknown categories](#23-未知类别的图像识别体验)

+ - [2.3.1 Prepare new data and labels](#231-准备新的数据与标签)

+ - [2.3.2 Build a new index library](#232-建立新的索引库)

+ - [2.3.3 Image recognition with the new index library](#233-基于新的索引库的图像识别)

+ - [2.4 Server-side recognition model list](#24-服务端识别模型列表)

+

+

+

+## 1. PP-ShiTu android demo quick experience

+

+

+

+### 1.1 Install the PP-ShiTu android demo

+

+Scan the QR code or [click this link](https://paddle-imagenet-models-name.bj.bcebos.com/demos/PP-ShiTu.apk) to download and install the APP.

+

+

-

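-To try the complete image recognition system or see how to build the feature library, see the [image recognition quick start](../quick_start/quick_start_recognition.md), which mainly explains how to use the overall pipeline. The following content mainly covers the training part of the three steps above.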

+To try the complete image recognition system on Android or PC, or to see how to build the feature library, see the [image recognition quick start](../quick_start/quick_start_recognition.md).

-First, set up the runtime environment by following the [installation guide](../installation/install_paddleclas.md).

+The following content mainly covers the training part of the three steps above.

-## Contents

+Before training, set up the runtime environment by following the [installation guide](../installation/install_paddleclas.md).

-- [1. Mainbody detection](#1)

-- [2. Feature model training](#2)

- - [2.1 Feature model data preparation and processing](#2.1)

- - [2.2 Training and evaluation of the feature model on a single GPU](#2.2)

- - [2.2.1 Feature model training](#2.2.1)

- - [2.2.2 Resuming feature model training](#2.2.2)

- - [2.2.3 Feature model evaluation](#2.2.3)

- - [2.3 Exporting the feature model as an inference model](#2.3)

-- [3. Feature retrieval](#3)

-- [4. Basics](#4)

+## Contents

-

+- [1. Mainbody detection](#1-主体检测)

+- [2. Feature extraction model training](#2-特征提取模型训练)

+ - [2.1 Feature extraction model data preparation and processing](#21-特征提取模型数据的准备与处理)

+ - [2.2 Training and evaluation of the feature extraction model on GPU](#22-特征提取模型在-gpu-上的训练与评估)

+ - [2.2.1 Feature extraction model training](#221-特征提取模型训练)

+ - [2.2.2 Resuming feature extraction model training](#222-特征提取模型恢复训练)

+ - [2.2.3 Feature extraction model evaluation](#223-特征提取模型评估)

+ - [2.3 Exporting the feature extraction model as an inference model](#23-特征提取模型导出-inference-模型)

+- [3. Feature retrieval](#3-特征检索)

+- [4. Basics](#4-基础知识)

+

+

## 1. Mainbody detection

@@ -38,142 +43,143 @@

[{u'id': 1, u'name': u'foreground', u'supercategory': u'foreground'}]

```

-For how to train mainbody detection, see the [PaddleDetection training tutorial](https://github.com/PaddlePaddle/PaddleDetection/blob/develop/docs/tutorials/GETTING_STARTED_cn.md#4-%E8%AE%AD%E7%BB%83).

+For how to build the mainbody detection dataset and train the model, see [Get started with PaddleDetection in 30 minutes](https://github.com/PaddlePaddle/PaddleDetection/blob/develop/docs/tutorials/GETTING_STARTED_cn.md#30%E5%88%86%E9%92%9F%E5%BF%AB%E9%80%9F%E4%B8%8A%E6%89%8Bpaddledetection).

For more about the mainbody detection models provided in PaddleClas and where to download them, see the [mainbody detection tutorial](../image_recognition_pipeline/mainbody_detection.md).

-

+

-## 2. Feature model training

+## 2. Feature extraction model training

-

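+To quickly try the PaddleClas image retrieval module, the following walks through feature extraction model training on the classic 200-class fine-grained bird dataset [CUB_200_2011](http://vision.ucsd.edu/sites/default/files/WelinderEtal10_CUB-200.pdf). For how to download CUB_200_2011, see the [CUB_200_2011 official website](https://www.vision.caltech.edu/datasets/cub_200_2011/).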

-### 2.1 Feature model data preparation and processing

+

-* Enter the `PaddleClas` directory.

+### 2.1 Feature extraction model data preparation and processing

-```bash

-## linux or mac; $path_to_PaddleClas is the PaddleClas root directory, replace it with your actual path

-cd $path_to_PaddleClas

-```

+* Enter the `PaddleClas` directory

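-* Enter the `dataset` directory. To quickly try the PaddleClas image retrieval module, the dataset used here is [CUB_200_2011](http://vision.ucsd.edu/sites/default/files/WelinderEtal10_CUB-200.pdf), a fine-grained bird dataset with 200 classes. First, download the CUB_200_2011 dataset; see the [official website](http://www.vision.caltech.edu/visipedia/CUB-200-2011.html) for instructions.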

+ ```shell

+ cd PaddleClas

+ ```

-```shell

-# linux or mac

-cd dataset

+* Enter the `dataset` directory

-# Copy the downloaded data into this directory

-cp {数据存放的路径}/CUB_200_2011.tgz .

+ ```shell

+ # Enter the dataset directory

+ cd dataset

-# Extract

-tar -xzvf CUB_200_2011.tgz

+ # Copy the downloaded data into the dataset directory

+ cp {数据存放的路径}/CUB_200_2011.tgz ./

-# Enter the CUB_200_2011 directory

-cd CUB_200_2011

-```

+ # Extract the dataset

+ tar -xzvf CUB_200_2011.tgz

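-When this dataset is used for image retrieval, the first 100 classes usually serve as the training set and the last 100 classes as the test set. The downloaded dataset therefore needs some post-processing to fit PaddleClas image retrieval training.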

+ # Enter the CUB_200_2011 directory

+ cd CUB_200_2011

+ ```

-```shell

-# Create the train and test directories

-mkdir train && mkdir test

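+* When this dataset is used for image retrieval, the first 100 classes usually serve as the training set and the last 100 classes as the test set. The downloaded dataset therefore needs some post-processing to fit PaddleClas image retrieval training.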

-# Split the data: the first 100 classes go to the training set, the last 100 classes to the test set

-ls images | awk -F "." '{if(int($1)<101)print "mv images/"$0" train/"int($1)}' | sh

-ls images | awk -F "." '{if(int($1)>100)print "mv images/"$0" test/"int($1)}' | sh

+ ```shell

+ # Create the train and test directories

+ mkdir train

+ mkdir test

-# Generate train_list and test_list

-tree -r -i -f train | grep jpg | awk -F "/" '{print $0" "int($2) " "NR}' > train_list.txt

-tree -r -i -f test | grep jpg | awk -F "/" '{print $0" "int($2) " "NR}' > test_list.txt

-```

-

-At this point you have the `CUB_200_2011` training set (`train` directory), test set (`test` directory), `train_list.txt`, and `test_list.txt`.

+ # Split the data: the first 100 classes go to the training set, the last 100 classes to the test set

+ ls images | awk -F "." '{if(int($1)<101)print "mv images/"$0" train/"int($1)}' | sh

+ ls images | awk -F "." '{if(int($1)>100)print "mv images/"$0" test/"int($1)}' | sh

-After processing, the `train` directory in `CUB_200_2011` should have the following structure:

+ # Generate train_list and test_list

+ tree -r -i -f train | grep jpg | awk -F "/" '{print $0" "int($2) " "NR}' > train_list.txt

+ tree -r -i -f test | grep jpg | awk -F "/" '{print $0" "int($2) " "NR}' > test_list.txt

+ ```
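For readers less familiar with `awk`, the split rule above can be restated in Python. The class directories in `images` are named like `001.Black_footed_Albatross`, and the integer before the first `.` is the class id. Ids 1 to 100 go to `train/<id>` and the rest to `test/<id>`. Each list line is `path label unique_id`, with ids restarting from 1 in each list, like awk's `NR`. The sketch only plans destinations and list lines without moving files. `plan_split` and `list_lines` are hypothetical helpers. Unlike the `tree -r` pipeline, they number samples in ascending sorted order.

```python
def plan_split(class_dirs, num_train_classes=100):
    """Map each CUB class directory (e.g. '001.Black_footed_Albatross')
    to its destination, mirroring the awk rule above."""
    plan = {}
    for name in class_dirs:
        class_id = int(name.split(".", 1)[0])  # '001' -> 1
        subset = "train" if class_id <= num_train_classes else "test"
        plan[name] = f"{subset}/{class_id}"
    return plan


def list_lines(subset, files_by_label):
    """Build 'relative_path label unique_id' lines for one subset.

    files_by_label maps an integer label to the image file names in
    <subset>/<label>/. Unique ids are consecutive natural numbers from 1.
    """
    lines = []
    uid = 1
    for label in sorted(files_by_label):
        for fname in sorted(files_by_label[label]):
            lines.append(f"{subset}/{label}/{fname} {label} {uid}")
            uid += 1
    return lines


plan = plan_split(["001.Black_footed_Albatross", "101.White_Pelican"])
print(plan)
print(list_lines("train", {1: ["Black_Footed_Albatross_0001_796111.jpg",
                               "Black_Footed_Albatross_0002_55.jpg"]}))
```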

-```

-├── 1

-│ ├── Black_Footed_Albatross_0001_796111.jpg

-│ ├── Black_Footed_Albatross_0002_55.jpg

- ...

-├── 10

-│ ├── Red_Winged_Blackbird_0001_3695.jpg

-│ ├── Red_Winged_Blackbird_0005_5636.jpg

-...

-```

+ At this point you have the `CUB_200_2011` training set (`train` directory), test set (`test` directory), `train_list.txt`, and `test_list.txt`.

-`train_list.txt` should look like:

+ After processing, the `train` directory in `CUB_200_2011` should have the following structure:

-```

-train/99/Ovenbird_0137_92639.jpg 99 1

-train/99/Ovenbird_0136_92859.jpg 99 2

-train/99/Ovenbird_0135_93168.jpg 99 3

-train/99/Ovenbird_0131_92559.jpg 99 4

-train/99/Ovenbird_0130_92452.jpg 99 5

-...

-```

-The separator is a space " ", and the three columns are the path of the training image, its label, and its unique id.

+ ```

+ CUB_200_2011/train/

+ ├── 1

+ │ ├── Black_Footed_Albatross_0001_796111.jpg

+ │ ├── Black_Footed_Albatross_0002_55.jpg

+ ...

+ ├── 10

+ │ ├── Red_Winged_Blackbird_0001_3695.jpg

+ │ ├── Red_Winged_Blackbird_0005_5636.jpg

+ ...

+ ```

-The test set uses the same format as the training set.

+ `train_list.txt` should look like:

-**Note**:

+ ```

+ train/99/Ovenbird_0137_92639.jpg 99 1

+ train/99/Ovenbird_0136_92859.jpg 99 2

+ train/99/Ovenbird_0135_93168.jpg 99 3

+ train/99/Ovenbird_0131_92559.jpg 99 4

+ train/99/Ovenbird_0130_92452.jpg 99 5

+ ...

+ ```

+ The separator is a space `" "`, and the three columns are the relative path of the training image, its label, and its unique id. The test set uses the same format as the training set.
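As a sanity check on a generated list file, the sketch below parses lines in this three-column format. It also verifies that the unique ids really are unique, which the evaluation relies on when the gallery and query sets are the same. `read_list_file` is a hypothetical helper, not PaddleClas's data loader. It assumes paths contain no spaces, which holds for CUB_200_2011 file names.

```python
import io


def read_list_file(lines):
    """Parse 'relative_path label unique_id' lines into (path, label, uid) tuples."""
    samples = []
    for line_no, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # ignore blank lines, e.g. a trailing newline
        parts = line.split(" ")
        if len(parts) != 3:
            raise ValueError(f"line {line_no}: expected 3 space-separated fields, got {len(parts)}")
        path, label, uid = parts[0], int(parts[1]), int(parts[2])
        samples.append((path, label, uid))
    uids = [uid for _, _, uid in samples]
    if len(set(uids)) != len(uids):
        raise ValueError("unique ids are not unique")
    return samples


# In practice: with open("train_list.txt") as f: samples = read_list_file(f)
example = io.StringIO(
    "train/99/Ovenbird_0137_92639.jpg 99 1\n"
    "train/99/Ovenbird_0136_92859.jpg 99 2\n"
)
print(read_list_file(example))
```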


+

+The retrieval results are visualized as follows:

+

+

+


+#### (2) Add new categories or objects to the retrieval library

+Tap the “拍照上传” (take photo and upload) button at the top
