diff --git a/CMakeLists.txt b/CMakeLists.txt

index a28613647b32c44c472917b10cdcab7acab843d1..7a8f5e0a69aac3852cb2752c90d54d8f50b69483 100644

--- a/CMakeLists.txt

+++ b/CMakeLists.txt

@@ -16,12 +16,6 @@ cmake_minimum_required(VERSION 3.0)

set(CMAKE_MODULE_PATH ${CMAKE_MODULE_PATH} "${CMAKE_CURRENT_SOURCE_DIR}/cmake")

include(lite_utils)

-lite_option(WITH_PADDLE_MOBILE "Use the paddle-mobile legacy build" OFF)

-if (WITH_PADDLE_MOBILE)

- add_subdirectory(mobile)

- return()

-endif(WITH_PADDLE_MOBILE)

-

set(PADDLE_SOURCE_DIR ${CMAKE_CURRENT_SOURCE_DIR})

set(PADDLE_BINARY_DIR ${CMAKE_CURRENT_BINARY_DIR})

set(CMAKE_CXX_STANDARD 11)

diff --git a/README.md b/README.md

index 70c53a5775148c6608008d0a86a6966aca29c644..d995bcc327705228098c1b26753213928ad4a79d 100644

--- a/README.md

+++ b/README.md

@@ -43,7 +43,6 @@ Paddle Lite提供了C++、Java、Python三种API,并且提供了相应API的

- [iOS示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/ios_app_demo.html)

- [ARMLinux示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/linux_arm_demo.html)

- [X86示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/x86.html)

-- [CUDA示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/cuda.html)

- [OpenCL示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/opencl.html)

- [FPGA示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/fpga.html)

- [华为NPU示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/huawei_kirin_npu.html)

@@ -77,7 +76,6 @@ Paddle Lite提供了C++、Java、Python三种API,并且提供了相应API的

| CPU(32bit) |  |  |  |  |

| CPU(64bit) |  |  |  |  |

| OpenCL | - | - |  | - |

-| CUDA |  |  | - | - |

| FPGA | - |  | - | - |

| 华为NPU | - | - |  | - |

| 百度 XPU |  |  | - | - |

diff --git a/cmake/configure.cmake b/cmake/configure.cmake

index 69fba7968d75f0308acdc787313b48c2804d6caf..e980922d5b4869ede65e57e750b5b85676ed0dde 100644

--- a/cmake/configure.cmake

+++ b/cmake/configure.cmake

@@ -199,13 +199,10 @@ if (LITE_WITH_EXCEPTION)

add_definitions("-DLITE_WITH_EXCEPTION")

endif()

-if (LITE_ON_FLATBUFFERS_DESC_VIEW)

- add_definitions("-DLITE_ON_FLATBUFFERS_DESC_VIEW")

- message(STATUS "Flatbuffers will be used as cpp default program description.")

-endif()

-

if (LITE_ON_TINY_PUBLISH)

add_definitions("-DLITE_ON_TINY_PUBLISH")

+ add_definitions("-DLITE_ON_FLATBUFFERS_DESC_VIEW")

+ message(STATUS "Flatbuffers will be used as cpp default program description.")

else()

add_definitions("-DLITE_WITH_FLATBUFFERS_DESC")

endif()

diff --git a/cmake/device/huawei_ascend_npu.cmake b/cmake/device/huawei_ascend_npu.cmake

index 0bd9591eee702f4db914a8b547c4c99b21d0473b..a2b664abd13591214b9955993854ebccea9a4bf4 100644

--- a/cmake/device/huawei_ascend_npu.cmake

+++ b/cmake/device/huawei_ascend_npu.cmake

@@ -16,6 +16,11 @@ if(NOT LITE_WITH_HUAWEI_ASCEND_NPU)

return()

endif()

+# require -D_GLIBCXX_USE_CXX11_ABI=0 if GCC 7.3.0

+if(CMAKE_CXX_COMPILER_VERSION VERSION_GREATER 5.0)

+ set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -D_GLIBCXX_USE_CXX11_ABI=0")

+endif()

+

# 1. path to Huawei Ascend Install Path

if(NOT DEFINED HUAWEI_ASCEND_NPU_DDK_ROOT)

set(HUAWEI_ASCEND_NPU_DDK_ROOT $ENV{HUAWEI_ASCEND_NPU_DDK_ROOT})

diff --git a/cmake/external/flatbuffers.cmake b/cmake/external/flatbuffers.cmake

index 4c2413c620d3531399ceede234eed16e9f4f0b6b..47b3042234cfa482ca7187baf8e51275ea8d3ac8 100644

--- a/cmake/external/flatbuffers.cmake

+++ b/cmake/external/flatbuffers.cmake

@@ -27,7 +27,7 @@ SET(FLATBUFFERS_SOURCES_DIR ${CMAKE_SOURCE_DIR}/third-party/flatbuffers)

SET(FLATBUFFERS_INSTALL_DIR ${THIRD_PARTY_PATH}/install/flatbuffers)

SET(FLATBUFFERS_INCLUDE_DIR "${FLATBUFFERS_SOURCES_DIR}/include" CACHE PATH "flatbuffers include directory." FORCE)

IF(WIN32)

- set(FLATBUFFERS_LIBRARIES "${FLATBUFFERS_INSTALL_DIR}/${LIBDIR}/libflatbuffers.lib" CACHE FILEPATH "FLATBUFFERS_LIBRARIES" FORCE)

+ set(FLATBUFFERS_LIBRARIES "${FLATBUFFERS_INSTALL_DIR}/${LIBDIR}/flatbuffers.lib" CACHE FILEPATH "FLATBUFFERS_LIBRARIES" FORCE)

ELSE(WIN32)

set(FLATBUFFERS_LIBRARIES "${FLATBUFFERS_INSTALL_DIR}/${LIBDIR}/libflatbuffers.a" CACHE FILEPATH "FLATBUFFERS_LIBRARIES" FORCE)

ENDIF(WIN32)

@@ -64,13 +64,6 @@ ExternalProject_Add(

-DCMAKE_POSITION_INDEPENDENT_CODE:BOOL=ON

-DCMAKE_BUILD_TYPE:STRING=${THIRD_PARTY_BUILD_TYPE}

)

-IF(WIN32)

- IF(NOT EXISTS "${FLATBUFFERS_INSTALL_DIR}/${LIBDIR}/libflatbuffers.lib")

- add_custom_command(TARGET extern_flatbuffers POST_BUILD

- COMMAND cmake -E copy ${FLATBUFFERS_INSTALL_DIR}/${LIBDIR}/flatbuffers_static.lib ${FLATBUFFERS_INSTALL_DIR}/${LIBDIR}/libflatbuffers.lib

- )

- ENDIF()

-ENDIF(WIN32)

ADD_LIBRARY(flatbuffers STATIC IMPORTED GLOBAL)

SET_PROPERTY(TARGET flatbuffers PROPERTY IMPORTED_LOCATION ${FLATBUFFERS_LIBRARIES})

ADD_DEPENDENCIES(flatbuffers extern_flatbuffers)

diff --git a/cmake/external/protobuf.cmake b/cmake/external/protobuf.cmake

index 76cc7b21deab41a40869a68df3a4dce359177c21..eb6c26e38dcd86aa4e0a536ea0f4541651bed6fa 100644

--- a/cmake/external/protobuf.cmake

+++ b/cmake/external/protobuf.cmake

@@ -217,6 +217,10 @@ FUNCTION(build_protobuf TARGET_NAME BUILD_FOR_HOST)

SET(OPTIONAL_ARGS ${OPTIONAL_ARGS} "-DCMAKE_GENERATOR_PLATFORM=x64")

ENDIF()

+ IF(LITE_WITH_HUAWEI_ASCEND_NPU)

+ SET(OPTIONAL_ARGS ${OPTIONAL_ARGS} "-DCMAKE_CXX_FLAGS=${CMAKE_CXX_FLAGS}")

+ ENDIF()

+

if(LITE_WITH_LIGHT_WEIGHT_FRAMEWORK)

ExternalProject_Add(

${TARGET_NAME}

diff --git a/cmake/generic.cmake b/cmake/generic.cmake

index d859404d559282970d96a735c400f745481e8efa..af05db559123e6d7305c35f95e3dacd58eeb7e19 100644

--- a/cmake/generic.cmake

+++ b/cmake/generic.cmake

@@ -267,6 +267,10 @@ function(cc_library TARGET_NAME)

list(REMOVE_ITEM cc_library_DEPS warpctc)

add_dependencies(${TARGET_NAME} warpctc)

endif()

+ if("${cc_library_DEPS};" MATCHES "fbs_headers;")

+ list(REMOVE_ITEM cc_library_DEPS fbs_headers)

+ add_dependencies(${TARGET_NAME} fbs_headers)

+ endif()

# Only deps libmklml.so, not link

if("${cc_library_DEPS};" MATCHES "mklml;")

list(REMOVE_ITEM cc_library_DEPS mklml)

diff --git a/docs/api_reference/cv.md b/docs/api_reference/cv.md

index d660bd7e382d80ac7151acacef3fd30caeb902bc..2192f4c7bbd1c020e65f5485c9292716ae12df84 100644

--- a/docs/api_reference/cv.md

+++ b/docs/api_reference/cv.md

@@ -91,14 +91,24 @@ ImagePreprocess::ImagePreprocess(ImageFormat srcFormat, ImageFormat dstFormat, T

// 方法二

void ImagePreprocess::imageCovert(const uint8_t* src,

uint8_t* dst, ImageFormat srcFormat, ImageFormat dstFormat);

+ // 方法三

+ void ImagePreprocess::imageCovert(const uint8_t* src,

+ uint8_t* dst, ImageFormat srcFormat, ImageFormat dstFormat,

+ int srcw, int srch);

```

+ 第一个 `imageCovert` 接口,缺省参数来源于 `ImagePreprocess` 类的成员变量。故在初始化 `ImagePreprocess` 类的对象时,必须要给以下成员变量赋值:

- param srcFormat:`ImagePreprocess` 类的成员变量`srcFormat_`

- param dstFormat:`ImagePreprocess` 类的成员变量`dstFormat_`

+ - param srcw: `ImagePreprocess` 类的成员变量`transParam_`结构体中的`iw`变量

+ - param srch: `ImagePreprocess` 类的成员变量`transParam_`结构体中的`ih`变量

- - 第二个`imageCovert` 接口,可以直接使用

+ - 第二个`imageCovert` 接口,缺省参数来源于 `ImagePreprocess` 类的成员变量。故在初始化 `ImagePreprocess` 类的对象时,必须要给以下成员变量赋值:

+ - param srcw: `ImagePreprocess` 类的成员变量`transParam_`结构体中的`iw`变量

+ - param srch: `ImagePreprocess` 类的成员变量`transParam_`结构体中的`ih`变量

+ - 第二个`imageCovert` 接口, 可以直接使用

+

### 缩放 Resize

`Resize` 功能支持颜色空间:GRAY、NV12(NV21)、RGB(BGR)和RGBA(BGRA)

diff --git a/docs/demo_guides/baidu_xpu.md b/docs/demo_guides/baidu_xpu.md

index 242188e0fd1397494db545757e0679c0fd957da1..ae60f9038707218fd204369f4b3ebbbda82f7aca 100644

--- a/docs/demo_guides/baidu_xpu.md

+++ b/docs/demo_guides/baidu_xpu.md

@@ -16,69 +16,12 @@ Paddle Lite已支持百度XPU在x86和arm服务器(例如飞腾 FT-2000+/64)

### 已支持的Paddle模型

-- [ResNet50](https://paddlelite-demo.bj.bcebos.com/models/resnet50_fp32_224_fluid.tar.gz)

-- [BERT](https://paddlelite-demo.bj.bcebos.com/models/bert_fp32_fluid.tar.gz)

-- [ERNIE](https://paddlelite-demo.bj.bcebos.com/models/ernie_fp32_fluid.tar.gz)

-- YOLOv3

-- Mask R-CNN

-- Faster R-CNN

-- UNet

-- SENet

-- SSD

+- [开源模型支持列表](../introduction/support_model_list)

- 百度内部业务模型(由于涉密,不方便透露具体细节)

### 已支持(或部分支持)的Paddle算子(Kernel接入方式)

-- scale

-- relu

-- tanh

-- sigmoid

-- stack

-- matmul

-- pool2d

-- slice

-- lookup_table

-- elementwise_add

-- elementwise_sub

-- cast

-- batch_norm

-- mul

-- layer_norm

-- softmax

-- conv2d

-- io_copy

-- io_copy_once

-- __xpu__fc

-- __xpu__multi_encoder

-- __xpu__resnet50

-- __xpu__embedding_with_eltwise_add

-

-### 已支持(或部分支持)的Paddle算子(子图/XTCL接入方式)

-

-- relu

-- tanh

-- conv2d

-- depthwise_conv2d

-- elementwise_add

-- pool2d

-- softmax

-- mul

-- batch_norm

-- stack

-- gather

-- scale

-- lookup_table

-- slice

-- transpose

-- transpose2

-- reshape

-- reshape2

-- layer_norm

-- gelu

-- dropout

-- matmul

-- cast

-- yolo_box

+- [算子支持列表](../introduction/support_operation_list)

## 参考示例演示

@@ -233,7 +176,7 @@ $ ./lite/tools/build.sh --arm_os=armlinux --arm_abi=armv8 --arm_lang=gcc --build

```

- 将编译生成的build.lite.x86/inference_lite_lib/cxx/include替换PaddleLite-linux-demo/libs/PaddleLite/amd64/include目录;

-- 将编译生成的build.lite.x86/inference_lite_lib/cxx/include/lib/libpaddle_full_api_shared.so替换PaddleLite-linux-demo/libs/PaddleLite/amd64/lib/libpaddle_full_api_shared.so文件;

+- 将编译生成的build.lite.x86/inference_lite_lib/cxx/lib/libpaddle_full_api_shared.so替换PaddleLite-linux-demo/libs/PaddleLite/amd64/lib/libpaddle_full_api_shared.so文件;

- 将编译生成的build.lite.armlinux.armv8.gcc/inference_lite_lib.armlinux.armv8.xpu/cxx/include替换PaddleLite-linux-demo/libs/PaddleLite/arm64/include目录;

- 将编译生成的build.lite.armlinux.armv8.gcc/inference_lite_lib.armlinux.armv8.xpu/cxx/lib/libpaddle_full_api_shared.so替换PaddleLite-linux-demo/libs/PaddleLite/arm64/lib/libpaddle_full_api_shared.so文件。

diff --git a/docs/demo_guides/cuda.md b/docs/demo_guides/cuda.md

index f863fd86864194c6d022e4cf1fc75eb46725cc2c..6460d327a4f30753a2d6942d4a931f709641e3ab 100644

--- a/docs/demo_guides/cuda.md

+++ b/docs/demo_guides/cuda.md

@@ -1,5 +1,7 @@

# PaddleLite使用CUDA预测部署

+**注意**: Lite CUDA仅作为Nvidia GPU加速库,支持模型有限,如有需要请使用[PaddleInference](https://paddle-inference.readthedocs.io/en/latest)。

+

Lite支持在x86_64,arm64架构上(如:TX2)进行CUDA的编译运行。

## 编译

diff --git a/docs/images/architecture.png b/docs/images/architecture.png

index 1af783d77dbd52923aa5facc90e00633c908f575..9397ed49a8a0071cf25b4551438d24a86de96bbb 100644

Binary files a/docs/images/architecture.png and b/docs/images/architecture.png differ

diff --git a/docs/images/workflow.png b/docs/images/workflow.png

new file mode 100644

index 0000000000000000000000000000000000000000..98201e78e1a35c830231881d19fb2c0acbdbaeba

Binary files /dev/null and b/docs/images/workflow.png differ

diff --git a/docs/index.rst b/docs/index.rst

index 24dac7f3692649f99bbeabafab53896c2221c29c..88170c3f6ee177b55631b008c888cb88eda866d3 100644

--- a/docs/index.rst

+++ b/docs/index.rst

@@ -57,7 +57,6 @@ Welcome to Paddle-Lite's documentation!

demo_guides/ios_app_demo

demo_guides/linux_arm_demo

demo_guides/x86

- demo_guides/cuda

demo_guides/opencl

demo_guides/fpga

demo_guides/huawei_kirin_npu

diff --git a/docs/introduction/architecture.md b/docs/introduction/architecture.md

index 1a94494af0b44a03988266d341be5788c46f96c2..8af678a5bf2bb1355e21df91752b777c466faee9 100644

--- a/docs/introduction/architecture.md

+++ b/docs/introduction/architecture.md

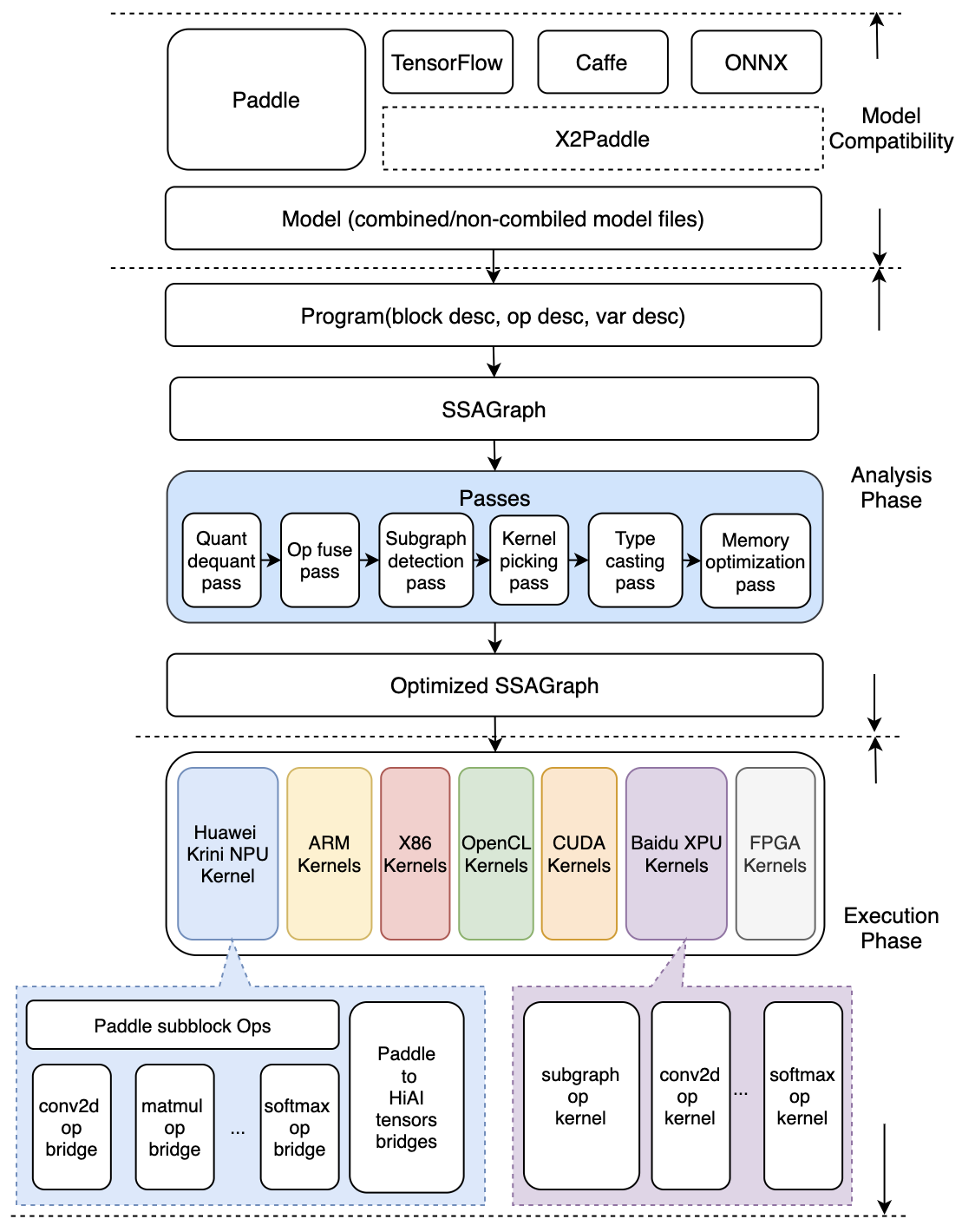

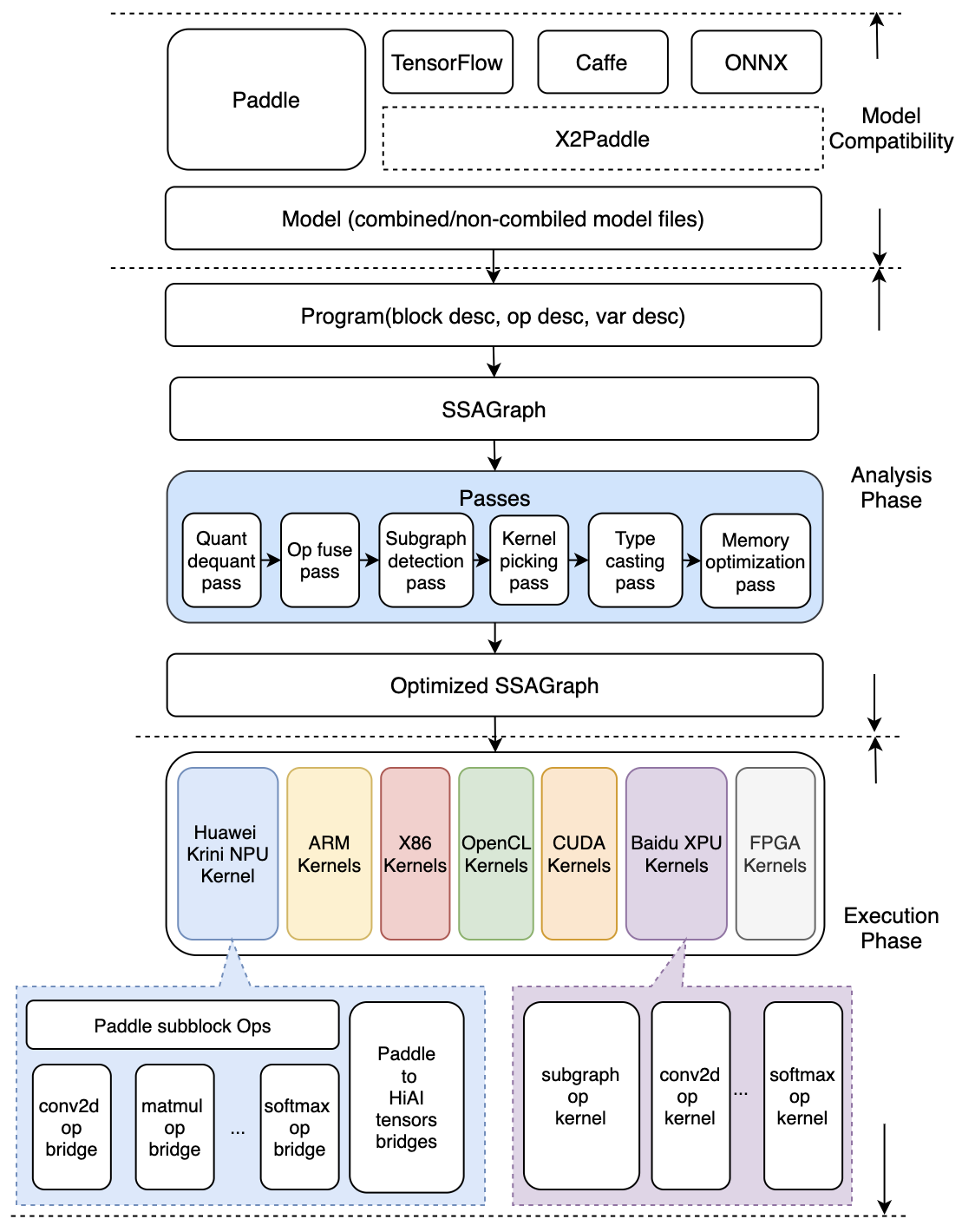

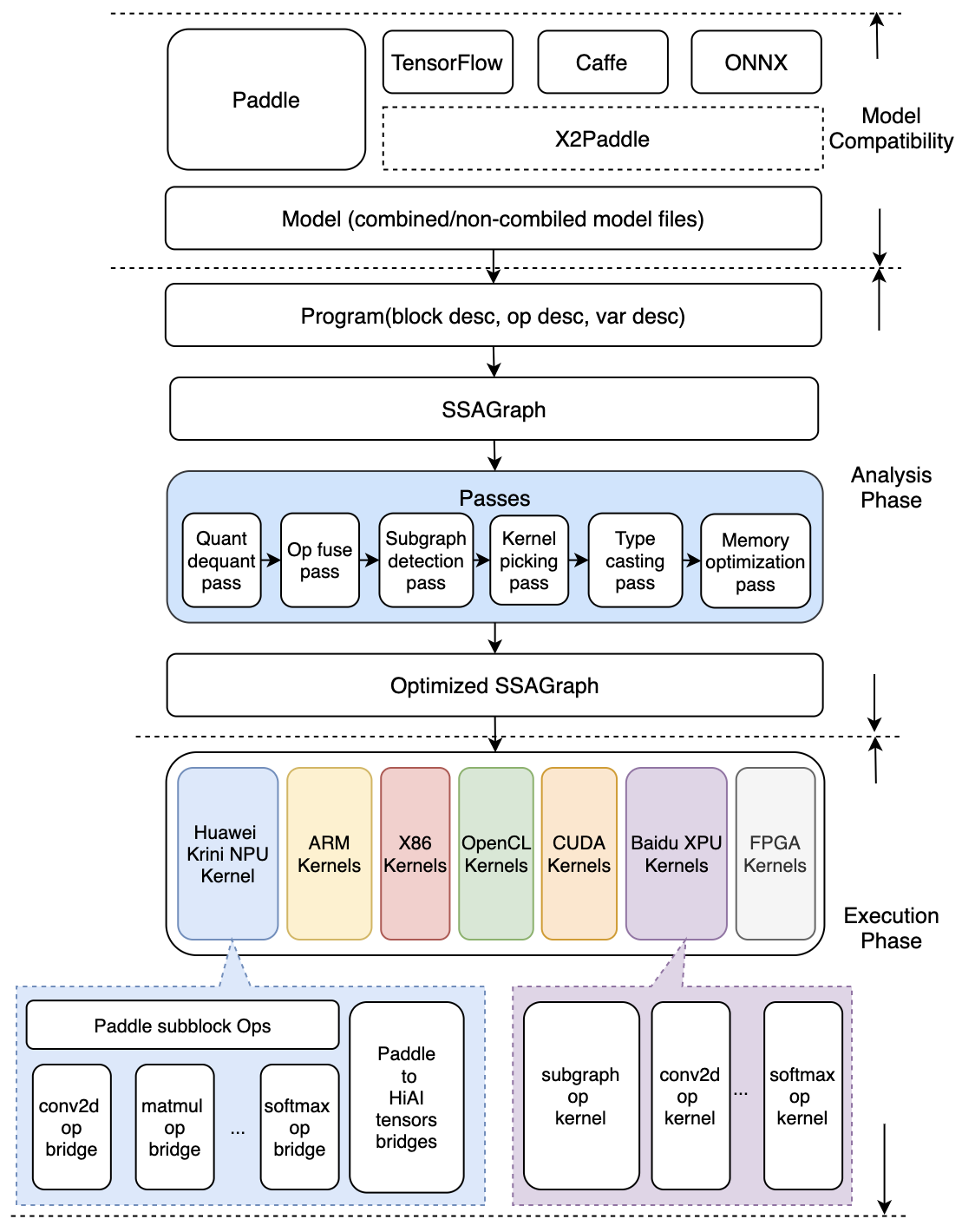

@@ -5,23 +5,25 @@ Mobile 在这次升级为 Lite 架构, 侧重多硬件、高性能的支持,

- 引入 Type system,强化多硬件、量化方法、data layout 的混合调度能力

- 硬件细节隔离,通过不同编译开关,对支持的任何硬件可以自由插拔

- 引入 MIR(Machine IR) 的概念,强化带执行环境下的优化支持

-- 优化期和执行期严格隔离,保证预测时轻量和高效率

+- 图优化模块和执行引擎实现了良好的解耦拆分,保证预测执行阶段的轻量和高效率

架构图如下

-

+

-## 编译期和执行期严格隔离设计

+## 模型优化阶段和预测执行阶段的隔离设计

-- compile time 优化完毕可以将优化信息存储到模型中;execution time 载入并执行

-- 两套 API 及对应的预测lib,满足不同场景

- - `CxxPredictor` 打包了 `Compile Time` 和 `Execution Time`,可以 runtime 在具体硬件上做分析和优化,得到最优效果

- - `MobilePredictor` 只打包 `Execution Time`,保持部署和执行的轻量

+- Analysis Phase为模型优化阶段,输入为Paddle的推理模型,通过Lite的模型加速和优化策略对计算图进行相关的优化分析,包含算子融合,计算裁剪,存储优化,量化精度转换、存储优化、Kernel优选等多类图优化手段。优化后的模型更轻量级,在相应的硬件上运行时耗费资源更少,并且执行速度也更快。

+- Execution Phase为预测执行阶段,输入为优化后的Lite模型,仅做模型加载和预测执行两步操作,支持极致的轻量级部署,无任何第三方依赖。

-## `Execution Time` 轻量级设计和实现

+Lite设计了两套 API 及对应的预测库,满足不同场景需求:

+ - `CxxPredictor` 同时包含 `Analysis Phase` 和 `Execution Phase`,支持一站式的预测任务,同时支持模型进行分析优化与预测执行任务,适用于对预测库大小不敏感的硬件场景。

+ - `MobilePredictor` 只包含 `Execution Phase`,保持预测部署和执行的轻量级和高性能,支持从内存或者文件中加载优化后的模型,并进行预测执行。

-- 每个 batch 实际执行只包含两个步骤执行

- - `Op.InferShape`

+## Execution Phase轻量级设计和实现

+

+- 在预测执行阶段,每个 batch 实际执行只包含两个步骤执行

+ - `OpLite.InferShape` 基于输入推断得到输出的维度

- `Kernel.Run`,Kernel 相关参数均使用指针提前确定,后续无查找或传参消耗

- 设计目标,执行时,只有 kernel 计算本身消耗

- 轻量级 `Op` 及 `Kernel` 设计,避免框架额外消耗

diff --git a/docs/introduction/support_hardware.md b/docs/introduction/support_hardware.md

index b1a6823d26d4fe8838afee00732707608b836599..3fa1b358aba0b2dd01328fad0e81efc95d75450d 100644

--- a/docs/introduction/support_hardware.md

+++ b/docs/introduction/support_hardware.md

@@ -29,7 +29,8 @@ Paddle Lite支持[ARM Cortex-A系列处理器](https://en.wikipedia.org/wiki/ARM

Paddle Lite支持移动端GPU和Nvidia端上GPU设备,支持列表如下:

- ARM Mali G 系列

- Qualcomm Adreno 系列

-- Nvida tegra系列: tx1, tx2, nano, xavier

+

+ Nvida tegra系列: tx1, tx2, nano, xavier

## NPU

Paddle Lite支持NPU,支持列表如下:

diff --git a/docs/introduction/support_model_list.md b/docs/introduction/support_model_list.md

index b30bcd729929de06848285bb27a4d38cec723e67..11f39134b5457703cc00b2dde93d5ab286e48636 100644

--- a/docs/introduction/support_model_list.md

+++ b/docs/introduction/support_model_list.md

@@ -1,32 +1,38 @@

# 支持模型

-目前已严格验证24个模型的精度和性能,对视觉类模型做到了较为充分的支持,覆盖分类、检测和定位,包含了特色的OCR模型的支持,并在不断丰富中。

+目前已严格验证28个模型的精度和性能,对视觉类模型做到了较为充分的支持,覆盖分类、检测和定位,包含了特色的OCR模型的支持,并在不断丰富中。

-| 类别 | 类别细分 | 模型 | 支持Int8 | 支持平台 |

-|-|-|:-:|:-:|-:|

-| CV | 分类 | mobilenetv1 | Y | ARM,X86,NPU,RKNPU,APU |

-| CV | 分类 | mobilenetv2 | Y | ARM,X86,NPU |

-| CV | 分类 | resnet18 | Y | ARM,NPU |

-| CV | 分类 | resnet50 | Y | ARM,X86,NPU,XPU |

-| CV | 分类 | mnasnet | | ARM,NPU |

-| CV | 分类 | efficientnet | | ARM |

-| CV | 分类 | squeezenetv1.1 | | ARM,NPU |

-| CV | 分类 | ShufflenetV2 | Y | ARM |

-| CV | 分类 | shufflenet | Y | ARM |

-| CV | 分类 | inceptionv4 | Y | ARM,X86,NPU |

-| CV | 分类 | vgg16 | Y | ARM |

-| CV | 分类 | googlenet | Y | ARM,X86 |

-| CV | 检测 | mobilenet_ssd | Y | ARM,NPU* |

-| CV | 检测 | mobilenet_yolov3 | Y | ARM,NPU* |

-| CV | 检测 | Faster RCNN | | ARM |

-| CV | 检测 | Mask RCNN | | ARM |

-| CV | 分割 | Deeplabv3 | Y | ARM |

-| CV | 分割 | unet | | ARM |

-| CV | 人脸 | facedetection | | ARM |

-| CV | 人脸 | facebox | | ARM |

-| CV | 人脸 | blazeface | Y | ARM |

-| CV | 人脸 | mtcnn | | ARM |

-| CV | OCR | ocr_attention | | ARM |

-| NLP | 机器翻译 | transformer | | ARM,NPU* |

+| 类别 | 类别细分 | 模型 | 支持平台 |

+|-|-|:-|:-|

+| CV | 分类 | [MobileNetV1](https://paddlelite-demo.bj.bcebos.com/models/mobilenet_v1_fp32_224_fluid.tar.gz) | ARM,X86,NPU,RKNPU,APU |

+| CV | 分类 | [MobileNetV2](https://paddlelite-demo.bj.bcebos.com/models/mobilenet_v2_fp32_224_fluid.tar.gz) | ARM,X86,NPU |

+| CV | 分类 | [ResNet18](https://paddlelite-demo.bj.bcebos.com/models/resnet18_fp32_224_fluid.tar.gz) | ARM,NPU |

+| CV | 分类 | [ResNet50](https://paddlelite-demo.bj.bcebos.com/models/resnet50_fp32_224_fluid.tar.gz) | ARM,X86,NPU,XPU |

+| CV | 分类 | [MnasNet](https://paddlelite-demo.bj.bcebos.com/models/mnasnet_fp32_224_fluid.tar.gz) | ARM,NPU |

+| CV | 分类 | [EfficientNet*](https://github.com/PaddlePaddle/PaddleClas) | ARM |

+| CV | 分类 | [SqueezeNet](https://paddlelite-demo.bj.bcebos.com/models/squeezenet_fp32_224_fluid.tar.gz) | ARM,NPU |

+| CV | 分类 | [ShufflenetV2*](https://github.com/PaddlePaddle/PaddleClas) | ARM |

+| CV | 分类 | [ShuffleNet](https://paddlepaddle-inference-banchmark.bj.bcebos.com/shufflenet_inference.tar.gz) | ARM |

+| CV | 分类 | [InceptionV4](https://paddle-inference-dist.bj.bcebos.com/inception_v4_simple.tar.gz) | ARM,X86,NPU |

+| CV | 分类 | [VGG16](https://paddlepaddle-inference-banchmark.bj.bcebos.com/VGG16_inference.tar) | ARM |

+| CV | 分类 | [VGG19](https://paddlepaddle-inference-banchmark.bj.bcebos.com/VGG19_inference.tar) | XPU|

+| CV | 分类 | [GoogleNet](https://paddlepaddle-inference-banchmark.bj.bcebos.com/GoogleNet_inference.tar) | ARM,X86,XPU |

+| CV | 检测 | [MobileNet-SSD](https://paddlelite-demo.bj.bcebos.com/models/ssd_mobilenet_v1_pascalvoc_fp32_300_fluid.tar.gz) | ARM,NPU* |

+| CV | 检测 | [YOLOv3-MobileNetV3](https://paddlelite-demo.bj.bcebos.com/models/yolov3_mobilenet_v3_prune86_FPGM_320_fp32_fluid.tar.gz) | ARM,NPU* |

+| CV | 检测 | [Faster RCNN](https://paddlepaddle-inference-banchmark.bj.bcebos.com/faster_rcnn.tar) | ARM |

+| CV | 检测 | [Mask RCNN*](https://github.com/PaddlePaddle/PaddleDetection/blob/release/0.4/docs/MODEL_ZOO_cn.md) | ARM |

+| CV | 分割 | [Deeplabv3](https://paddlelite-demo.bj.bcebos.com/models/deeplab_mobilenet_fp32_fluid.tar.gz) | ARM |

+| CV | 分割 | [UNet](https://paddlelite-demo.bj.bcebos.com/models/Unet.zip) | ARM |

+| CV | 人脸 | [FaceDetection](https://paddlelite-demo.bj.bcebos.com/models/facedetection_fp32_240_430_fluid.tar.gz) | ARM |

+| CV | 人脸 | [FaceBoxes*](https://github.com/PaddlePaddle/PaddleDetection/blob/release/0.4/docs/featured_model/FACE_DETECTION.md#FaceBoxes) | ARM |

+| CV | 人脸 | [BlazeFace*](https://github.com/PaddlePaddle/PaddleDetection/blob/release/0.4/docs/featured_model/FACE_DETECTION.md#BlazeFace) | ARM |

+| CV | 人脸 | [MTCNN](https://paddlelite-demo.bj.bcebos.com/models/mtcnn.zip) | ARM |

+| CV | OCR | [OCR-Attention](https://paddle-inference-dist.bj.bcebos.com/ocr_attention.tar.gz) | ARM |

+| CV | GAN | [CycleGAN*](https://github.com/PaddlePaddle/models/tree/release/1.7/PaddleCV/gan/cycle_gan) | NPU |

+| NLP | 机器翻译 | [Transformer*](https://github.com/PaddlePaddle/models/tree/release/1.8/PaddleNLP/machine_translation/transformer) | ARM,NPU* |

+| NLP | 机器翻译 | [BERT](https://paddle-inference-dist.bj.bcebos.com/PaddleLite/models_and_data_for_unittests/bert.tar.gz) | XPU |

+| NLP | 语义表示 | [ERNIE](https://paddle-inference-dist.bj.bcebos.com/PaddleLite/models_and_data_for_unittests/ernie.tar.gz) | XPU |

-> **注意:** NPU* 代表ARM+NPU异构计算

+**注意:**

+1. 模型列表中 * 代表该模型链接来自[PaddlePaddle/models](https://github.com/PaddlePaddle/models),否则为推理模型的下载链接

+2. 支持平台列表中 NPU* 代表ARM+NPU异构计算,否则为NPU计算

diff --git a/docs/quick_start/release_lib.md b/docs/quick_start/release_lib.md

index c2c441bbfa7dea0ae2ebd54f5545ae61590604ec..9c722df1537d49a2c7b8a009b5273b93ff68ffbe 100644

--- a/docs/quick_start/release_lib.md

+++ b/docs/quick_start/release_lib.md

@@ -76,7 +76,6 @@ pip install paddlelite

- [ArmLinux源码编译](../source_compile/compile_linux)

- [x86源码编译](../demo_guides/x86)

- [opencl源码编译](../demo_guides/opencl)

-- [CUDA源码编译](../demo_guides/cuda)

- [FPGA源码编译](../demo_guides/fpga)

- [华为NPU源码编译](../demo_guides/huawei_kirin_npu)

- [百度XPU源码编译](../demo_guides/baidu_xpu)

diff --git a/docs/quick_start/tutorial.md b/docs/quick_start/tutorial.md

index a7eb1327f812917e3f1609d097acaeec2a96997d..e5a63be350fe3111d480ba66e907b7f7613b1425 100644

--- a/docs/quick_start/tutorial.md

+++ b/docs/quick_start/tutorial.md

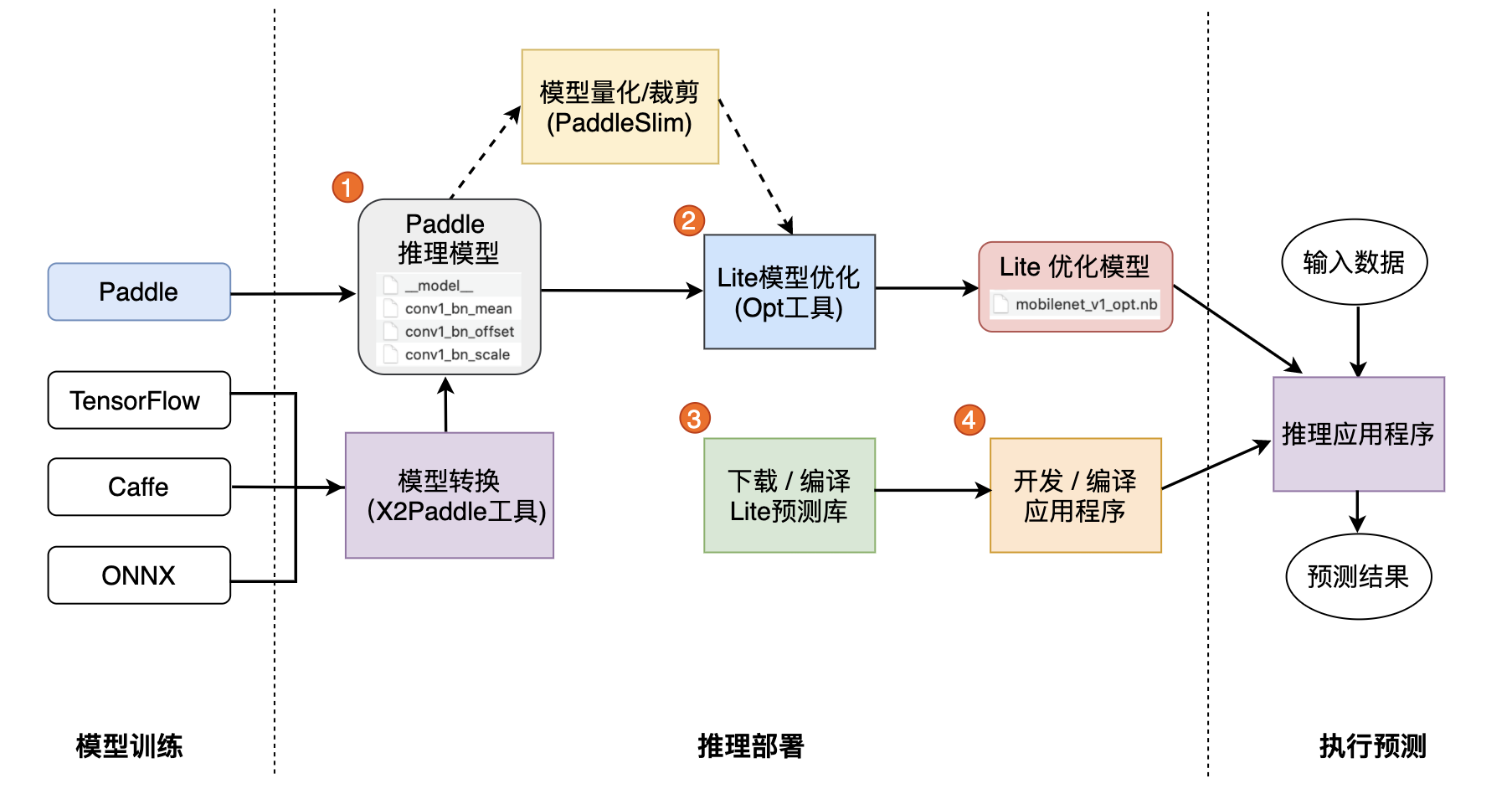

@@ -2,51 +2,63 @@

Lite是一种轻量级、灵活性强、易于扩展的高性能的深度学习预测框架,它可以支持诸如ARM、OpenCL、NPU等等多种终端,同时拥有强大的图优化及预测加速能力。如果您希望将Lite框架集成到自己的项目中,那么只需要如下几步简单操作即可。

-## 一. 准备模型

-Lite框架目前支持的模型结构为[PaddlePaddle](https://github.com/PaddlePaddle/Paddle)深度学习框架产出的模型格式。因此,在您开始使用 Lite 框架前您需要准备一个由PaddlePaddle框架保存的模型。

-如果您手中的模型是由诸如Caffe2、Tensorflow等框架产出的,那么我们推荐您使用 [X2Paddle](https://github.com/PaddlePaddle/X2Paddle) 工具进行模型格式转换。

+

-## 二. 模型优化

+**一. 准备模型**

-Lite框架拥有强大的加速、优化策略及实现,其中包含诸如量化、子图融合、Kernel优选等等优化手段,为了方便您使用这些优化策略,我们提供了[opt](../user_guides/model_optimize_tool)帮助您轻松进行模型优化。优化后的模型更轻量级,耗费资源更少,并且执行速度也更快。

+Paddle Lite框架直接支持模型结构为[PaddlePaddle](https://github.com/PaddlePaddle/Paddle)深度学习框架产出的模型格式。目前PaddlePaddle用于推理的模型是通过[save_inference_model](https://www.paddlepaddle.org.cn/documentation/docs/zh/api_cn/io_cn/save_inference_model_cn.html#save-inference-model)这个API保存下来的。

+如果您手中的模型是由诸如Caffe、Tensorflow、PyTorch等框架产出的,那么您可以使用 [X2Paddle](https://github.com/PaddlePaddle/X2Paddle) 工具将模型转换为PadddlePaddle格式。

-opt的详细介绍,请您参考 [模型优化方法](../user_guides/model_optimize_tool)。

+**二. 模型优化**

-下载opt工具后执行以下代码:

+Paddle Lite框架拥有优秀的加速、优化策略及实现,包含量化、子图融合、Kernel优选等优化手段。优化后的模型更轻量级,耗费资源更少,并且执行速度也更快。

+这些优化通过Paddle Lite提供的opt工具实现。opt工具还可以统计并打印出模型中的算子信息,并判断不同硬件平台下Paddle Lite的支持情况。您获取PaddlePaddle格式的模型之后,一般需要通该opt工具做模型优化。opt工具的下载和使用,请参考 [模型优化方法](https://paddle-lite.readthedocs.io/zh/latest/user_guides/model_optimize_tool.html)。

-``` shell

-$ ./opt \

- --model_dir= \

- --model_file= \

- --param_file= \

- --optimize_out_type=(protobuf|naive_buffer) \

- --optimize_out= \

- --valid_targets=(arm|opencl|x86)

-```

+**注意**: 为了减少第三方库的依赖、提高Lite预测框架的通用性,在移动端使用Lite API您需要准备Naive Buffer存储格式的模型。

-其中,optimize_out为您希望的优化模型的输出路径。optimize_out_type则可以指定输出模型的序列化方式,其目前支持Protobuf与Naive Buffer两种方式,其中Naive Buffer是一种更轻量级的序列化/反序列化实现。如果你需要使用Lite在mobile端进行预测,那么您需要设置optimize_out_type=naive_buffer。

+**三. 下载或编译**

-## 三. 使用Lite框架执行预测

+Paddle Lite提供了Android/iOS/X86平台的官方Release预测库下载,我们优先推荐您直接下载 [Paddle Lite预编译库](https://paddle-lite.readthedocs.io/zh/latest/quick_start/release_lib.html)。

+您也可以根据目标平台选择对应的[源码编译方法](https://paddle-lite.readthedocs.io/zh/latest/quick_start/release_lib.html#id2)。Paddle Lite 提供了源码编译脚本,位于 `lite/tools/`文件夹下,只需要 [准备环境](https://paddle-lite.readthedocs.io/zh/latest/source_compile/compile_env.html) 和 [调用编译脚本](https://paddle-lite.readthedocs.io/zh/latest/quick_start/release_lib.html#id2) 两个步骤即可一键编译得到目标平台的Paddle Lite预测库。

-在上一节中,我们已经通过`opt`获取到了优化后的模型,使用优化模型进行预测也十分的简单。为了方便您的使用,Lite进行了良好的API设计,隐藏了大量您不需要投入时间研究的细节。您只需要简单的五步即可使用Lite在移动端完成预测(以C++ API进行说明):

+**四. 开发应用程序**

+Paddle Lite提供了C++、Java、Python三种API,只需简单五步即可完成预测(以C++ API为例):

-1. 声明MobileConfig。在config中可以设置**从文件加载模型**也可以设置**从memory加载模型**。从文件加载模型需要声明模型文件路径,如 `config.set_model_from_file(FLAGS_model_file)` ;从memory加载模型方法现只支持加载优化后模型的naive buffer,实现方法为:

-`void set_model_from_buffer(model_buffer) `

+1. 声明`MobileConfig`,设置第二步优化后的模型文件路径,或选择从内存中加载模型

+2. 创建`Predictor`,调用`CreatePaddlePredictor`接口,一行代码即可完成引擎初始化

+3. 准备输入,通过`predictor->GetInput(i)`获取输入变量,并为其指定输入大小和输入值

+4. 执行预测,只需要运行`predictor->Run()`一行代码,即可使用Lite框架完成预测执行

+5. 获得输出,使用`predictor->GetOutput(i)`获取输出变量,并通过`data`取得输出值

-2. 创建Predictor。Predictor即为Lite框架的预测引擎,为了方便您的使用我们提供了 `CreatePaddlePredictor` 接口,你只需要简单的执行一行代码即可完成预测引擎的初始化,`std::shared_ptr predictor = CreatePaddlePredictor(config)` 。

-3. 准备输入。执行predictor->GetInput(0)您将会获得输入的第0个field,同样的,如果您的模型有多个输入,那您可以执行 `predictor->GetInput(i)` 来获取相应的输入变量。得到输入变量后您可以使用Resize方法指定其具体大小,并填入输入值。

-4. 执行预测。您只需要执行 `predictor->Run()` 即可使用Lite框架完成预测。

-5. 获取输出。与输入类似,您可以使用 `predictor->GetOutput(i)` 来获得输出的第i个变量。您可以通过其shape()方法获取输出变量的维度,通过 `data()` 模板方法获取其输出值。

+Paddle Lite提供了C++、Java、Python三种API的完整使用示例和开发说明文档,您可以参考示例中的说明快速了解使用方法,并集成到您自己的项目中去。

+- [C++完整示例](cpp_demo.html)

+- [Java完整示例](java_demo.html)

+- [Python完整示例](python_demo.html)

+针对不同的硬件平台,Paddle Lite提供了各个平台的完整示例:

+- [Android示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/android_app_demo.html)

+- [iOS示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/ios_app_demo.html)

+- [ARMLinux示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/linux_arm_demo.html)

+- [X86示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/x86.html)

+- [OpenCL示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/opencl.html)

+- [FPGA示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/fpga.html)

+- [华为NPU示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/huawei_kirin_npu.html)

+- [百度XPU示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/baidu_xpu.html)

+- [瑞芯微NPU示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/rockchip_npu.html)

+- [联发科APU示例](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/mediatek_apu.html)

-## 四. Lite API

+您也可以下载以下基于Paddle-Lite开发的预测APK程序,安装到Andriod平台上,先睹为快:

-为了方便您的使用,我们提供了C++、Java、Python三种API,并且提供了相应的api的完整使用示例:[C++完整示例](cpp_demo)、[Java完整示例](java_demo)、[Python完整示例](python_demo),您可以参考示例中的说明快速了解C++/Java/Python的API使用方法,并集成到您自己的项目中去。需要说明的是,为了减少第三方库的依赖、提高Lite预测框架的通用性,在移动端使用Lite API您需要准备Naive Buffer存储格式的模型,具体方法可参考第2节`模型优化`。

+- [图像分类](https://paddlelite-demo.bj.bcebos.com/apps/android/mobilenet_classification_demo.apk)

+- [目标检测](https://paddlelite-demo.bj.bcebos.com/apps/android/yolo_detection_demo.apk)

+- [口罩检测](https://paddlelite-demo.bj.bcebos.com/apps/android/mask_detection_demo.apk)

+- [人脸关键点](https://paddlelite-demo.bj.bcebos.com/apps/android/face_keypoints_detection_demo.apk)

+- [人像分割](https://paddlelite-demo.bj.bcebos.com/apps/android/human_segmentation_demo.apk)

-## 五. 测试工具

+## 更多测试工具

为了使您更好的了解并使用Lite框架,我们向有进一步使用需求的用户开放了 [Debug工具](../user_guides/debug) 和 [Profile工具](../user_guides/debug)。Lite Model Debug Tool可以用来查找Lite框架与PaddlePaddle框架在执行预测时模型中的对应变量值是否有差异,进一步快速定位问题Op,方便复现与排查问题。Profile Monitor Tool可以帮助您了解每个Op的执行时间消耗,其会自动统计Op执行的次数,最长、最短、平均执行时间等等信息,为性能调优做一个基础参考。您可以通过 [相关专题](../user_guides/debug) 了解更多内容。

diff --git a/docs/source_compile/compile_env.md b/docs/source_compile/compile_env.md

index 5322558afbf2c3ad09f04e0596ddc18f967ffabb..7c32311cda212091796a2cff7d60bbefbb751e7c 100644

--- a/docs/source_compile/compile_env.md

+++ b/docs/source_compile/compile_env.md

@@ -19,7 +19,6 @@ Paddle Lite提供了Android/iOS/X86平台的官方Release预测库下载,如

- [ArmLinux源码编译](../source_compile/compile_linux)

- [X86源码编译](../demo_guides/x86)

- [OpenCL源码编译](../demo_guides/opencl)

-- [CUDA源码编译](../demo_guides/cuda)

- [FPGA源码编译](../demo_guides/fpga)

- [华为NPU源码编译](../demo_guides/huawei_kirin_npu)

- [百度XPU源码编译](../demo_guides/baidu_xpu)

diff --git a/lite/CMakeLists.txt b/lite/CMakeLists.txt

index 228b09bcff8a30869d7828a2a5a71fa0cb802292..d69f6d6d9e77668c5789baff3f2f1051afe5df46 100755

--- a/lite/CMakeLists.txt

+++ b/lite/CMakeLists.txt

@@ -40,7 +40,8 @@ endif()

if (WITH_TESTING)

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "lite_naive_model.tar.gz")

if(LITE_WITH_LIGHT_WEIGHT_FRAMEWORK)

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v1.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v1.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v1_int16.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v2_relu.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "resnet50.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "inception_v4_simple.tar.gz")

@@ -51,11 +52,19 @@ if (WITH_TESTING)

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "GoogleNet_inference.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v1.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v2_relu.tar.gz")

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "resnet50.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "inception_v4_simple.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "step_rnn.tar.gz")

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "bert.tar.gz")

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "ernie.tar.gz")

+

+ set(LITE_URL_FOR_UNITTESTS "http://paddle-inference-dist.bj.bcebos.com/PaddleLite/models_and_data_for_unittests")

+ # models

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "resnet50.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "bert.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "ernie.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "GoogLeNet.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "VGG19.tar.gz")

+ # data

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "ILSVRC2012_small.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "bert_data.tar.gz")

endif()

endif()

diff --git a/lite/api/CMakeLists.txt b/lite/api/CMakeLists.txt

index 5be30b1ea5ec649e81d4e28dca2f412816cef361..3e8fd5fd637c02842e068801278fab94ac7d5d4f 100644

--- a/lite/api/CMakeLists.txt

+++ b/lite/api/CMakeLists.txt

@@ -15,7 +15,6 @@ if ((NOT LITE_ON_TINY_PUBLISH) AND (LITE_WITH_CUDA OR LITE_WITH_X86 OR LITE_WITH

#full api dynamic library

lite_cc_library(paddle_full_api_shared SHARED SRCS paddle_api.cc light_api.cc cxx_api.cc cxx_api_impl.cc light_api_impl.cc

DEPS paddle_api paddle_api_light paddle_api_full)

- target_sources(paddle_full_api_shared PUBLIC ${__lite_cc_files})

add_dependencies(paddle_full_api_shared op_list_h kernel_list_h framework_proto op_registry fbs_headers)

target_link_libraries(paddle_full_api_shared framework_proto op_registry)

if(LITE_WITH_X86)

@@ -70,6 +69,10 @@ else()

set(TARGET_COMIPILE_FLAGS "-fdata-sections")

if (NOT (ARM_TARGET_LANG STREQUAL "clang")) #gcc

set(TARGET_COMIPILE_FLAGS "${TARGET_COMIPILE_FLAGS} -flto")

+ # TODO (hong19860320): Disable lto temporarily since it causes fail to catch the exceptions in android when toolchain is gcc.

+ if (ARM_TARGET_OS STREQUAL "android" AND LITE_WITH_EXCEPTION)

+ set(TARGET_COMIPILE_FLAGS "")

+ endif()

endif()

set_target_properties(paddle_light_api_shared PROPERTIES COMPILE_FLAGS "${TARGET_COMIPILE_FLAGS}")

add_dependencies(paddle_light_api_shared op_list_h kernel_list_h fbs_headers)

@@ -288,6 +291,14 @@ if(LITE_WITH_LIGHT_WEIGHT_FRAMEWORK AND WITH_TESTING)

set(LINK_FLAGS "-Wl,--version-script ${PADDLE_SOURCE_DIR}/lite/core/lite.map")

set_target_properties(test_mobilenetv1 PROPERTIES LINK_FLAGS "${LINK_FLAGS}")

endif()

+

+ lite_cc_test(test_mobilenetv1_int16 SRCS mobilenetv1_int16_test.cc

+ DEPS ${lite_model_test_DEPS} ${light_lib_DEPS}

+ CL_DEPS ${opencl_kernels}

+ NPU_DEPS ${npu_kernels} ${npu_bridges}

+ ARGS --cl_path=${CMAKE_SOURCE_DIR}/lite/backends/opencl

+ --model_dir=${LITE_MODEL_DIR}/mobilenet_v1_int16 SERIAL)

+ add_dependencies(test_mobilenetv1 extern_lite_download_mobilenet_v1_int16_tar_gz)

lite_cc_test(test_mobilenetv2 SRCS mobilenetv2_test.cc

DEPS ${lite_model_test_DEPS}

diff --git a/lite/api/android/jni/native/CMakeLists.txt b/lite/api/android/jni/native/CMakeLists.txt

index 4638ed5fdfb360c1475ad6e2d1a8eb2051673eb1..1aa9aeeeff6f2737aa3a2a31beaedb0dbf4184f8 100644

--- a/lite/api/android/jni/native/CMakeLists.txt

+++ b/lite/api/android/jni/native/CMakeLists.txt

@@ -17,7 +17,6 @@ if (NOT LITE_ON_TINY_PUBLISH)

# Unlike static library, module library has to link target to be able to work

# as a single .so lib.

target_link_libraries(paddle_lite_jni ${lib_DEPS} ${arm_kernels} ${npu_kernels})

- add_dependencies(paddle_lite_jni fbs_headers)

if (LITE_WITH_NPU)

# Strips the symbols of our protobuf functions to fix the conflicts during

# loading HIAI builder libs (libhiai_ir.so and libhiai_ir_build.so)

diff --git a/lite/api/benchmark.cc b/lite/api/benchmark.cc

index 1dccbb49a4b15a397ae37b1373b5df3cf95e7e9f..b72a6e6bdb2dd170460d0cbb2f3257e337625671 100644

--- a/lite/api/benchmark.cc

+++ b/lite/api/benchmark.cc

@@ -30,8 +30,6 @@

#include

#include

#include "lite/api/paddle_api.h"

-#include "lite/api/paddle_use_kernels.h"

-#include "lite/api/paddle_use_ops.h"

#include "lite/core/device_info.h"

#include "lite/utils/cp_logging.h"

#include "lite/utils/string.h"

diff --git a/lite/api/cxx_api_impl.cc b/lite/api/cxx_api_impl.cc

index 3b3337139b3c5e3d475503ac682194a0ed348e4f..0b5b9ad94c47a3d97492cd5b91618b184c9ef122 100644

--- a/lite/api/cxx_api_impl.cc

+++ b/lite/api/cxx_api_impl.cc

@@ -58,6 +58,16 @@ void CxxPaddleApiImpl::Init(const lite_api::CxxConfig &config) {

config.mlu_input_layout(),

config.mlu_firstconv_param());

#endif // LITE_WITH_MLU

+

+#ifdef LITE_WITH_BM

+ Env::Init();

+ int device_id = 0;

+ if (const char *c_id = getenv("BM_VISIBLE_DEVICES")) {

+ device_id = static_cast(*c_id) - 48;

+ }

+ TargetWrapper::SetDevice(device_id);

+#endif // LITE_WITH_BM

+

auto use_layout_preprocess_pass =

config.model_dir().find("OPENCL_PRE_PRECESS");

VLOG(1) << "use_layout_preprocess_pass:" << use_layout_preprocess_pass;

@@ -86,7 +96,7 @@ void CxxPaddleApiImpl::Init(const lite_api::CxxConfig &config) {

config.subgraph_model_cache_dir());

#endif

#if (defined LITE_WITH_X86) && (defined PADDLE_WITH_MKLML) && \

- !(defined LITE_ON_MODEL_OPTIMIZE_TOOL)

+ !(defined LITE_ON_MODEL_OPTIMIZE_TOOL) && !defined(__APPLE__)

int num_threads = config.x86_math_library_num_threads();

int real_num_threads = num_threads > 1 ? num_threads : 1;

paddle::lite::x86::MKL_Set_Num_Threads(real_num_threads);

diff --git a/lite/api/cxx_api_test.cc b/lite/api/cxx_api_test.cc

index 768480b1475c3609137f255cbac9ae9d4785a96b..8a28722799c4a2bb7f3512402b2f364fa84831ad 100644

--- a/lite/api/cxx_api_test.cc

+++ b/lite/api/cxx_api_test.cc

@@ -131,7 +131,8 @@ TEST(CXXApi, save_model) {

predictor.Build(FLAGS_model_dir, "", "", valid_places);

LOG(INFO) << "Save optimized model to " << FLAGS_optimized_model;

- predictor.SaveModel(FLAGS_optimized_model);

+ predictor.SaveModel(FLAGS_optimized_model,

+ lite_api::LiteModelType::kProtobuf);

predictor.SaveModel(FLAGS_optimized_model + ".naive",

lite_api::LiteModelType::kNaiveBuffer);

}

diff --git a/lite/api/light_api.cc b/lite/api/light_api.cc

index fbcf171726d741ef0073f423bc4a600c9f9389d0..56461fded536f87ee59ecc8efbe2d3463c7c3822 100644

--- a/lite/api/light_api.cc

+++ b/lite/api/light_api.cc

@@ -46,7 +46,6 @@ void LightPredictor::Build(const std::string& model_dir,

case lite_api::LiteModelType::kProtobuf:

LoadModelPb(model_dir, "", "", scope_.get(), program_desc_.get());

break;

-#endif

case lite_api::LiteModelType::kNaiveBuffer: {

if (model_from_memory) {

LoadModelNaiveFromMemory(

@@ -56,6 +55,7 @@ void LightPredictor::Build(const std::string& model_dir,

}

break;

}

+#endif

default:

LOG(FATAL) << "Unknown model type";

}

diff --git a/lite/api/light_api_impl.cc b/lite/api/light_api_impl.cc

index c9c34377e2a82b72d26e3148a694fe0662e985ce..3c5be7b9cdd340fe0fe82c589706c77875de0030 100644

--- a/lite/api/light_api_impl.cc

+++ b/lite/api/light_api_impl.cc

@@ -17,6 +17,10 @@

#include "lite/api/paddle_api.h"

#include "lite/core/version.h"

#include "lite/model_parser/model_parser.h"

+#ifndef LITE_ON_TINY_PUBLISH

+#include "lite/api/paddle_use_kernels.h"

+#include "lite/api/paddle_use_ops.h"

+#endif

namespace paddle {

namespace lite {

diff --git a/lite/api/mobilenetv1_int16_test.cc b/lite/api/mobilenetv1_int16_test.cc

new file mode 100644

index 0000000000000000000000000000000000000000..266052044ef6543a0f00ad50bc9b89b70656bbe6

--- /dev/null

+++ b/lite/api/mobilenetv1_int16_test.cc

@@ -0,0 +1,83 @@

+// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#include

+#include

+#include

+#include "lite/api/cxx_api.h"

+#include "lite/api/light_api.h"

+#include "lite/api/paddle_use_kernels.h"

+#include "lite/api/paddle_use_ops.h"

+#include "lite/api/paddle_use_passes.h"

+#include "lite/api/test_helper.h"

+#include "lite/core/op_registry.h"

+

+DEFINE_string(optimized_model,

+ "/data/local/tmp/int16_model",

+ "optimized_model");

+DEFINE_int32(N, 1, "input_batch");

+DEFINE_int32(C, 3, "input_channel");

+DEFINE_int32(H, 224, "input_height");

+DEFINE_int32(W, 224, "input_width");

+

+namespace paddle {

+namespace lite {

+

+void TestModel(const std::vector& valid_places,

+ const std::string& model_dir) {

+ DeviceInfo::Init();

+ DeviceInfo::Global().SetRunMode(lite_api::LITE_POWER_NO_BIND, FLAGS_threads);

+

+ LOG(INFO) << "Optimize model.";

+ lite::Predictor cxx_predictor;

+ cxx_predictor.Build(model_dir, "", "", valid_places);

+ cxx_predictor.SaveModel(FLAGS_optimized_model,

+ paddle::lite_api::LiteModelType::kNaiveBuffer);

+

+ LOG(INFO) << "Load optimized model.";

+ lite::LightPredictor predictor(FLAGS_optimized_model + ".nb", false);

+

+ auto* input_tensor = predictor.GetInput(0);

+ input_tensor->Resize(DDim(

+ std::vector({FLAGS_N, FLAGS_C, FLAGS_H, FLAGS_W})));

+ auto* data = input_tensor->mutable_data();

+ auto item_size = FLAGS_N * FLAGS_C * FLAGS_H * FLAGS_W;

+ for (int i = 0; i < item_size; i++) {

+ data[i] = 1.;

+ }

+

+ LOG(INFO) << "Predictor run.";

+ predictor.Run();

+

+ auto* out = predictor.GetOutput(0);

+ const auto* pdata = out->data();

+

+ std::vector ref = {

+ 0.000191383, 0.000592063, 0.000112282, 6.27426e-05, 0.000127522};

+ double eps = 1e-5;

+ for (int i = 0; i < ref.size(); ++i) {

+ EXPECT_NEAR(pdata[i], ref[i], eps);

+ }

+}

+

+TEST(MobileNetV1_Int16, test_arm) {

+ std::vector valid_places({

+ Place{TARGET(kARM), PRECISION(kFloat)},

+ });

+ std::string model_dir = FLAGS_model_dir;

+ TestModel(valid_places, model_dir);

+}

+

+} // namespace lite

+} // namespace paddle

diff --git a/lite/api/model_test.cc b/lite/api/model_test.cc

index 90575280873c8cda9310cfc951645f4614c2ce30..3cce247750341b37bf9aff07fce8ec54ee1428fe 100644

--- a/lite/api/model_test.cc

+++ b/lite/api/model_test.cc

@@ -25,8 +25,6 @@

#include "lite/core/profile/basic_profiler.h"

#endif // LITE_WITH_PROFILE

#include

-#include "lite/api/paddle_use_kernels.h"

-#include "lite/api/paddle_use_ops.h"

using paddle::lite::profile::Timer;

diff --git a/lite/api/paddle_api.cc b/lite/api/paddle_api.cc

index a3d29dff93155b4a1eaefd91d35080831601eedf..d37657206d093f666ab486dff5aa1c151efce0eb 100644

--- a/lite/api/paddle_api.cc

+++ b/lite/api/paddle_api.cc

@@ -356,5 +356,13 @@ void MobileConfig::set_model_buffer(const char *model_buffer,

model_from_memory_ = true;

}

+// This is the method for allocating workspace_size according to L3Cache size

+void MobileConfig::SetArmL3CacheSize(L3CacheSetMethod method,

+ int absolute_val) {

+#ifdef LITE_WITH_ARM

+ lite::DeviceInfo::Global().SetArmL3CacheSize(method, absolute_val);

+#endif

+}

+

} // namespace lite_api

} // namespace paddle

diff --git a/lite/api/paddle_api.h b/lite/api/paddle_api.h

index 42a4b2228b5dc007bc0d6053f15e843bd6343c8f..7df7f7889af5b059a60aa191540a02e9f2ec755f 100644

--- a/lite/api/paddle_api.h

+++ b/lite/api/paddle_api.h

@@ -32,6 +32,14 @@ using shape_t = std::vector;

using lod_t = std::vector>;

enum class LiteModelType { kProtobuf = 0, kNaiveBuffer, UNK };

+// Methods for allocating L3Cache on Arm platform

+enum class L3CacheSetMethod {

+ kDeviceL3Cache = 0, // Use the system L3 Cache size, best performance.

+ kDeviceL2Cache = 1, // Use the system L2 Cache size, trade off performance

+ // with less memory consumption.

+ kAbsolute = 2, // Use the external setting.

+ // kAutoGrow = 3, // Not supported yet, least memory consumption.

+};

// return true if current device supports OpenCL model

LITE_API bool IsOpenCLBackendValid();

@@ -294,6 +302,11 @@ class LITE_API MobileConfig : public ConfigBase {

// NOTE: This is a deprecated API and will be removed in latter release.

const std::string& param_buffer() const { return param_buffer_; }

+

+ // This is the method for allocating workspace_size according to L3Cache size

+ void SetArmL3CacheSize(

+ L3CacheSetMethod method = L3CacheSetMethod::kDeviceL3Cache,

+ int absolute_val = -1);

};

template

diff --git a/lite/api/paddle_api_test.cc b/lite/api/paddle_api_test.cc

index c381546dfba9326d48b27e094a39dd4cd082c462..41799bdc2c6582e6d987d7d896db1f499eb4cdf4 100644

--- a/lite/api/paddle_api_test.cc

+++ b/lite/api/paddle_api_test.cc

@@ -15,8 +15,6 @@

#include "lite/api/paddle_api.h"

#include

#include

-#include "lite/api/paddle_use_kernels.h"

-#include "lite/api/paddle_use_ops.h"

#include "lite/utils/cp_logging.h"

#include "lite/utils/io.h"

@@ -109,7 +107,8 @@ TEST(CxxApi, share_external_data) {

TEST(LightApi, run) {

lite_api::MobileConfig config;

config.set_model_from_file(FLAGS_model_dir + ".opt2.naive.nb");

-

+ // disable L3 cache on workspace_ allocating

+ config.SetArmL3CacheSize(L3CacheSetMethod::kDeviceL2Cache);

auto predictor = lite_api::CreatePaddlePredictor(config);

auto inputs = predictor->GetInputNames();

@@ -150,6 +149,8 @@ TEST(MobileConfig, LoadfromMemory) {

// set model buffer and run model

lite_api::MobileConfig config;

config.set_model_from_buffer(model_buffer);

+ // allocate 1M initial space for workspace_

+ config.SetArmL3CacheSize(L3CacheSetMethod::kAbsolute, 1024 * 1024);

auto predictor = lite_api::CreatePaddlePredictor(config);

auto input_tensor = predictor->GetInput(0);

diff --git a/lite/api/paddle_use_passes.h b/lite/api/paddle_use_passes.h

index cea2a45c5db15891a4de679265a9c2cd2779d0fb..a4ea030cbf3ae7ead5836f02638ff440335f89fe 100644

--- a/lite/api/paddle_use_passes.h

+++ b/lite/api/paddle_use_passes.h

@@ -62,6 +62,7 @@ USE_MIR_PASS(quantized_op_attributes_inference_pass);

USE_MIR_PASS(control_flow_op_unused_inputs_and_outputs_eliminate_pass)

USE_MIR_PASS(lite_scale_activation_fuse_pass);

USE_MIR_PASS(__xpu__resnet_fuse_pass);

+USE_MIR_PASS(__xpu__resnet_d_fuse_pass);

USE_MIR_PASS(__xpu__resnet_cbam_fuse_pass);

USE_MIR_PASS(__xpu__multi_encoder_fuse_pass);

USE_MIR_PASS(__xpu__embedding_with_eltwise_add_fuse_pass);

diff --git a/lite/api/python/pybind/CMakeLists.txt b/lite/api/python/pybind/CMakeLists.txt

index 1f8ee66a0dbce37480672cc213a60d87d28c4142..b0b897b5d47089eb4331bf4909b4e778092a6a7b 100644

--- a/lite/api/python/pybind/CMakeLists.txt

+++ b/lite/api/python/pybind/CMakeLists.txt

@@ -9,7 +9,7 @@ if(WIN32)

target_link_libraries(lite_pybind ${os_dependency_modules})

else()

lite_cc_library(lite_pybind SHARED SRCS pybind.cc DEPS ${PYBIND_DEPS})

- target_sources(lite_pybind PUBLIC ${__lite_cc_files})

+ target_sources(lite_pybind PUBLIC ${__lite_cc_files} fbs_headers)

endif(WIN32)

if (LITE_ON_TINY_PUBLISH)

diff --git a/lite/backends/apu/neuron_adapter.cc b/lite/backends/apu/neuron_adapter.cc

index 953c92d1828848bd030a65cb2a8af0eac0674ca1..ff08507504b8bd7e5342c5705afb17550f37469e 100644

--- a/lite/backends/apu/neuron_adapter.cc

+++ b/lite/backends/apu/neuron_adapter.cc

@@ -82,16 +82,20 @@ void NeuronAdapter::InitFunctions() {

PADDLE_DLSYM(NeuronModel_setOperandValue);

PADDLE_DLSYM(NeuronModel_setOperandSymmPerChannelQuantParams);

PADDLE_DLSYM(NeuronModel_addOperation);

+ PADDLE_DLSYM(NeuronModel_addOperationExtension);

PADDLE_DLSYM(NeuronModel_identifyInputsAndOutputs);

PADDLE_DLSYM(NeuronCompilation_create);

PADDLE_DLSYM(NeuronCompilation_free);

PADDLE_DLSYM(NeuronCompilation_finish);

+ PADDLE_DLSYM(NeuronCompilation_createForDevices);

PADDLE_DLSYM(NeuronExecution_create);

PADDLE_DLSYM(NeuronExecution_free);

PADDLE_DLSYM(NeuronExecution_setInput);

PADDLE_DLSYM(NeuronExecution_setOutput);

PADDLE_DLSYM(NeuronExecution_compute);

-

+ PADDLE_DLSYM(Neuron_getDeviceCount);

+ PADDLE_DLSYM(Neuron_getDevice);

+ PADDLE_DLSYM(NeuronDevice_getName);

#undef PADDLE_DLSYM

}

@@ -146,6 +150,25 @@ int NeuronModel_addOperation(NeuronModel* model,

model, type, inputCount, inputs, outputCount, outputs);

}

+int NeuronModel_addOperationExtension(NeuronModel* model,

+ const char* name,

+ const char* vendor,

+ const NeuronDevice* device,

+ uint32_t inputCount,

+ const uint32_t* inputs,

+ uint32_t outputCount,

+ const uint32_t* outputs) {

+ return paddle::lite::NeuronAdapter::Global()

+ ->NeuronModel_addOperationExtension()(model,

+ name,

+ vendor,

+ device,

+ inputCount,

+ inputs,

+ outputCount,

+ outputs);

+}

+

int NeuronModel_identifyInputsAndOutputs(NeuronModel* model,

uint32_t inputCount,

const uint32_t* inputs,

@@ -172,6 +195,15 @@ int NeuronCompilation_finish(NeuronCompilation* compilation) {

compilation);

}

+int NeuronCompilation_createForDevices(NeuronModel* model,

+ const NeuronDevice* const* devices,

+ uint32_t numDevices,

+ NeuronCompilation** compilation) {

+ return paddle::lite::NeuronAdapter::Global()

+ ->NeuronCompilation_createForDevices()(

+ model, devices, numDevices, compilation);

+}

+

int NeuronExecution_create(NeuronCompilation* compilation,

NeuronExecution** execution) {

return paddle::lite::NeuronAdapter::Global()->NeuronExecution_create()(

@@ -205,3 +237,18 @@ int NeuronExecution_compute(NeuronExecution* execution) {

return paddle::lite::NeuronAdapter::Global()->NeuronExecution_compute()(

execution);

}

+

+int Neuron_getDeviceCount(uint32_t* numDevices) {

+ return paddle::lite::NeuronAdapter::Global()->Neuron_getDeviceCount()(

+ numDevices);

+}

+

+int Neuron_getDevice(uint32_t devIndex, NeuronDevice** device) {

+ return paddle::lite::NeuronAdapter::Global()->Neuron_getDevice()(devIndex,

+ device);

+}

+

+int NeuronDevice_getName(const NeuronDevice* device, const char** name) {

+ return paddle::lite::NeuronAdapter::Global()->NeuronDevice_getName()(device,

+ name);

+}

diff --git a/lite/backends/apu/neuron_adapter.h b/lite/backends/apu/neuron_adapter.h

index c08db73279ea3969300c8f298016a976e30a7ac4..c1b9669a98626699b126913dcc840906de4de8e0 100644

--- a/lite/backends/apu/neuron_adapter.h

+++ b/lite/backends/apu/neuron_adapter.h

@@ -42,12 +42,25 @@ class NeuronAdapter final {

const uint32_t *,

uint32_t,

const uint32_t *);

+ using NeuronModel_addOperationExtension_Type = int (*)(NeuronModel *,

+ const char *,

+ const char *,

+ const NeuronDevice *,

+ uint32_t,

+ const uint32_t *,

+ uint32_t,

+ const uint32_t *);

using NeuronModel_identifyInputsAndOutputs_Type = int (*)(

NeuronModel *, uint32_t, const uint32_t *, uint32_t, const uint32_t *);

using NeuronCompilation_create_Type = int (*)(NeuronModel *,

NeuronCompilation **);

using NeuronCompilation_free_Type = void (*)(NeuronCompilation *);

using NeuronCompilation_finish_Type = int (*)(NeuronCompilation *);

+ using NeuronCompilation_createForDevices_Type =

+ int (*)(NeuronModel *,

+ const NeuronDevice *const *,

+ uint32_t,

+ NeuronCompilation **);

using NeuronExecution_create_Type = int (*)(NeuronCompilation *,

NeuronExecution **);

using NeuronExecution_free_Type = void (*)(NeuronExecution *);

@@ -59,6 +72,10 @@ class NeuronAdapter final {

using NeuronExecution_setOutput_Type = int (*)(

NeuronExecution *, int32_t, const NeuronOperandType *, void *, size_t);

using NeuronExecution_compute_Type = int (*)(NeuronExecution *);

+ using Neuron_getDeviceCount_Type = int (*)(uint32_t *);

+ using Neuron_getDevice_Type = int (*)(uint32_t, NeuronDevice **);

+ using NeuronDevice_getName_Type = int (*)(const NeuronDevice *,

+ const char **);

Neuron_getVersion_Type Neuron_getVersion() {

CHECK(Neuron_getVersion_ != nullptr) << "Cannot load Neuron_getVersion!";

@@ -105,6 +122,12 @@ class NeuronAdapter final {

return NeuronModel_addOperation_;

}

+ NeuronModel_addOperationExtension_Type NeuronModel_addOperationExtension() {

+ CHECK(NeuronModel_addOperationExtension_ != nullptr)

+ << "Cannot load NeuronModel_addOperationExtension!";

+ return NeuronModel_addOperationExtension_;

+ }

+

NeuronModel_identifyInputsAndOutputs_Type

NeuronModel_identifyInputsAndOutputs() {

CHECK(NeuronModel_identifyInputsAndOutputs_ != nullptr)

@@ -130,6 +153,12 @@ class NeuronAdapter final {

return NeuronCompilation_finish_;

}

+ NeuronCompilation_createForDevices_Type NeuronCompilation_createForDevices() {

+ CHECK(NeuronCompilation_createForDevices_ != nullptr)

+ << "Cannot load NeuronCompilation_createForDevices!";

+ return NeuronCompilation_createForDevices_;

+ }

+

NeuronExecution_create_Type NeuronExecution_create() {

CHECK(NeuronExecution_create_ != nullptr)

<< "Cannot load NeuronExecution_create!";

@@ -160,6 +189,23 @@ class NeuronAdapter final {

return NeuronExecution_compute_;

}

+ Neuron_getDeviceCount_Type Neuron_getDeviceCount() {

+ CHECK(Neuron_getDeviceCount_ != nullptr)

+ << "Cannot load Neuron_getDeviceCount!";

+ return Neuron_getDeviceCount_;

+ }

+

+ Neuron_getDevice_Type Neuron_getDevice() {

+ CHECK(Neuron_getDevice_ != nullptr) << "Cannot load Neuron_getDevice!";

+ return Neuron_getDevice_;

+ }

+

+ NeuronDevice_getName_Type NeuronDevice_getName() {

+ CHECK(NeuronDevice_getName_ != nullptr)

+ << "Cannot load NeuronDevice_getName!";

+ return NeuronDevice_getName_;

+ }

+

private:

NeuronAdapter();

NeuronAdapter(const NeuronAdapter &) = delete;

@@ -176,16 +222,23 @@ class NeuronAdapter final {

NeuronModel_setOperandSymmPerChannelQuantParams_Type

NeuronModel_setOperandSymmPerChannelQuantParams_{nullptr};

NeuronModel_addOperation_Type NeuronModel_addOperation_{nullptr};

+ NeuronModel_addOperationExtension_Type NeuronModel_addOperationExtension_{

+ nullptr};

NeuronModel_identifyInputsAndOutputs_Type

NeuronModel_identifyInputsAndOutputs_{nullptr};

NeuronCompilation_create_Type NeuronCompilation_create_{nullptr};

NeuronCompilation_free_Type NeuronCompilation_free_{nullptr};

NeuronCompilation_finish_Type NeuronCompilation_finish_{nullptr};

+ NeuronCompilation_createForDevices_Type NeuronCompilation_createForDevices_{

+ nullptr};

NeuronExecution_create_Type NeuronExecution_create_{nullptr};

NeuronExecution_free_Type NeuronExecution_free_{nullptr};

NeuronExecution_setInput_Type NeuronExecution_setInput_{nullptr};

NeuronExecution_setOutput_Type NeuronExecution_setOutput_{nullptr};

NeuronExecution_compute_Type NeuronExecution_compute_{nullptr};

+ Neuron_getDeviceCount_Type Neuron_getDeviceCount_{nullptr};

+ Neuron_getDevice_Type Neuron_getDevice_{nullptr};

+ NeuronDevice_getName_Type NeuronDevice_getName_{nullptr};

};

} // namespace lite

} // namespace paddle

diff --git a/lite/backends/arm/math/CMakeLists.txt b/lite/backends/arm/math/CMakeLists.txt

index 244467d62492bc3017ebdb6144b49ccb9fcd30c1..88c449e6a9d8b8078802e90dded5db1162459d3f 100644

--- a/lite/backends/arm/math/CMakeLists.txt

+++ b/lite/backends/arm/math/CMakeLists.txt

@@ -127,8 +127,10 @@ if (NOT HAS_ARM_MATH_LIB_DIR)

anchor_generator.cc

split_merge_lod_tenosr.cc

reduce_prod.cc

+ reduce_sum.cc

lstm.cc

clip.cc

pixel_shuffle.cc

+ scatter.cc

DEPS ${lite_kernel_deps} context tensor)

endif()

diff --git a/lite/backends/arm/math/conv3x3s1px_depthwise_fp32.cc b/lite/backends/arm/math/conv3x3s1px_depthwise_fp32.cc

index c998ddc3a34c2f6194a5156b7d04b7a9db3fbcef..b4539db98c3ffb1a143c38dd3c4dd9e9924bd63e 100644

--- a/lite/backends/arm/math/conv3x3s1px_depthwise_fp32.cc

+++ b/lite/backends/arm/math/conv3x3s1px_depthwise_fp32.cc

@@ -25,6 +25,73 @@ namespace paddle {

namespace lite {

namespace arm {

namespace math {

+void conv_3x3s1_depthwise_fp32_bias(const float* i_data,

+ float* o_data,

+ int bs,

+ int oc,

+ int oh,

+ int ow,

+ int ic,

+ int ih,

+ int win,

+ const float* weights,

+ const float* bias,

+ float* relu_ptr,

+ float* six_ptr,

+ float* scale_ptr,

+ const operators::ConvParam& param,

+ ARMContext* ctx);

+

+void conv_3x3s1_depthwise_fp32_relu(const float* i_data,

+ float* o_data,

+ int bs,

+ int oc,

+ int oh,

+ int ow,

+ int ic,

+ int ih,

+ int win,

+ const float* weights,

+ const float* bias,

+ float* relu_ptr,

+ float* six_ptr,

+ float* scale_ptr,

+ const operators::ConvParam& param,

+ ARMContext* ctx);

+

+void conv_3x3s1_depthwise_fp32_relu6(const float* i_data,

+ float* o_data,

+ int bs,

+ int oc,

+ int oh,

+ int ow,

+ int ic,

+ int ih,

+ int win,

+ const float* weights,

+ const float* bias,

+ float* relu_ptr,

+ float* six_ptr,

+ float* scale_ptr,

+ const operators::ConvParam& param,

+ ARMContext* ctx);

+

+void conv_3x3s1_depthwise_fp32_leakyRelu(const float* i_data,

+ float* o_data,

+ int bs,

+ int oc,

+ int oh,

+ int ow,

+ int ic,

+ int ih,

+ int win,

+ const float* weights,

+ const float* bias,

+ float* relu_ptr,

+ float* six_ptr,

+ float* scale_ptr,

+ const operators::ConvParam& param,

+ ARMContext* ctx);

// clang-format off

#ifdef __aarch64__

#define COMPUTE \

@@ -335,7 +402,6 @@ namespace math {

"ldr r0, [%[outl]] @ load outc00 to r0\n" \

"vmla.f32 q12, q5, q0 @ w8 * inr32\n" \

"vmla.f32 q13, q5, q1 @ w8 * inr33\n" \

- "ldr r5, [%[outl], #36] @ load flag_relu to r5\n" \

"vmla.f32 q14, q5, q2 @ w8 * inr34\n" \

"vmla.f32 q15, q5, q3 @ w8 * inr35\n" \

"ldr r1, [%[outl], #4] @ load outc10 to r1\n" \

@@ -406,7 +472,6 @@ namespace math {

"vtrn.32 q10, q11 @ r0: q10: a2a3c2c3, q11: b2b3d2d3\n" \

"vtrn.32 q12, q13 @ r1: q12: a0a1c0c1, q13: b0b1d0d1\n" \

"vtrn.32 q14, q15 @ r1: q14: a2a3c2c3, q15: b2b3d2d3\n" \

- "ldr r5, [%[outl], #20] @ load outc11 to r5\n" \

"vswp d17, d20 @ r0: q8 : a0a1a2a3, q10: c0c1c2c3 \n" \

"vswp d19, d22 @ r0: q9 : b0b1b2b3, q11: d0d1d2d3 \n" \

"vswp d25, d28 @ r1: q12: a0a1a2a3, q14: c0c1c2c3 \n" \

@@ -417,12 +482,13 @@ namespace math {

"vst1.32 {d18-d19}, [r1] @ save outc10\n" \

"vst1.32 {d20-d21}, [r2] @ save outc20\n" \

"vst1.32 {d22-d23}, [r3] @ save outc30\n" \

+ "ldr r0, [%[outl], #20] @ load outc11 to r5\n" \

+ "ldr r1, [%[outl], #24] @ load outc21 to r0\n" \

+ "ldr r2, [%[outl], #28] @ load outc31 to r1\n" \

"vst1.32 {d24-d25}, [r4] @ save outc01\n" \

- "vst1.32 {d26-d27}, [r5] @ save outc11\n" \

- "ldr r0, [%[outl], #24] @ load outc21 to r0\n" \

- "ldr r1, [%[outl], #28] @ load outc31 to r1\n" \

- "vst1.32 {d28-d29}, [r0] @ save outc21\n" \

- "vst1.32 {d30-d31}, [r1] @ save outc31\n" \

+ "vst1.32 {d26-d27}, [r0] @ save outc11\n" \

+ "vst1.32 {d28-d29}, [r1] @ save outc21\n" \

+ "vst1.32 {d30-d31}, [r2] @ save outc31\n" \

"b 3f @ branch end\n" \

"2: \n" \

"vst1.32 {d16-d17}, [%[out0]]! @ save remain to pre_out\n" \

@@ -436,291 +502,86 @@ namespace math {

"3: \n"

#endif

// clang-format on

-void act_switch_3x3s1(const float* inr0,

- const float* inr1,

- const float* inr2,

- const float* inr3,

- float* out0,

- const float* weight_c,

- float flag_mask,

- void* outl_ptr,

- float32x4_t w0,

- float32x4_t w1,

- float32x4_t w2,

- float32x4_t w3,

- float32x4_t w4,

- float32x4_t w5,

- float32x4_t w6,

- float32x4_t w7,

- float32x4_t w8,

- float32x4_t vbias,

- const operators::ActivationParam act_param) {

- bool has_active = act_param.has_active;

- if (has_active) {

+void conv_3x3s1_depthwise_fp32(const float* i_data,

+ float* o_data,

+ int bs,

+ int oc,

+ int oh,

+ int ow,

+ int ic,

+ int ih,

+ int win,

+ const float* weights,

+ const float* bias,

+ const operators::ConvParam& param,

+ const operators::ActivationParam act_param,

+ ARMContext* ctx) {

+ float six_ptr[4] = {0.f, 0.f, 0.f, 0.f};

+ float scale_ptr[4] = {1.f, 1.f, 1.f, 1.f};

+ float relu_ptr[4] = {0.f, 0.f, 0.f, 0.f};

+ if (act_param.has_active) {

switch (act_param.active_type) {

case lite_api::ActivationType::kRelu:

-#ifdef __aarch64__

- asm volatile(COMPUTE RELU STORE

- : [inr0] "+r"(inr0),

- [inr1] "+r"(inr1),

- [inr2] "+r"(inr2),

- [inr3] "+r"(inr3),

- [out] "+r"(out0)

- : [w0] "w"(w0),

- [w1] "w"(w1),

- [w2] "w"(w2),

- [w3] "w"(w3),

- [w4] "w"(w4),

- [w5] "w"(w5),

- [w6] "w"(w6),

- [w7] "w"(w7),

- [w8] "w"(w8),

- [vbias] "w"(vbias),

- [outl] "r"(outl_ptr),

- [flag_mask] "r"(flag_mask)

- : "cc",

- "memory",

- "v0",

- "v1",

- "v2",

- "v3",

- "v4",

- "v5",

- "v6",

- "v7",

- "v8",

- "v9",

- "v10",

- "v11",

- "v15",

- "v16",

- "v17",

- "v18",

- "v19",

- "v20",

- "v21",

- "v22",

- "x0",

- "x1",

- "x2",

- "x3",

- "x4",

- "x5",

- "x6",

- "x7");

-#else

-#if 1 // def LITE_WITH_ARM_CLANG

-#else

- asm volatile(COMPUTE RELU STORE

- : [r0] "+r"(inr0),

- [r1] "+r"(inr1),

- [r2] "+r"(inr2),

- [r3] "+r"(inr3),

- [out0] "+r"(out0),

- [wc0] "+r"(weight_c)

- : [flag_mask] "r"(flag_mask), [outl] "r"(outl_ptr)

- : "cc",

- "memory",

- "q0",

- "q1",

- "q2",

- "q3",

- "q4",

- "q5",

- "q6",

- "q7",

- "q8",

- "q9",

- "q10",

- "q11",

- "q12",

- "q13",

- "q14",

- "q15",

- "r0",

- "r1",

- "r2",

- "r3",

- "r4",

- "r5");

-#endif

-#endif

+ conv_3x3s1_depthwise_fp32_relu(i_data,

+ o_data,

+ bs,

+ oc,

+ oh,

+ ow,

+ ic,

+ ih,

+ win,

+ weights,

+ bias,

+ relu_ptr,

+ six_ptr,

+ scale_ptr,

+ param,

+ ctx);

break;

case lite_api::ActivationType::kRelu6:

-#ifdef __aarch64__

- asm volatile(COMPUTE RELU RELU6 STORE

- : [inr0] "+r"(inr0),

- [inr1] "+r"(inr1),

- [inr2] "+r"(inr2),

- [inr3] "+r"(inr3),

- [out] "+r"(out0)

- : [w0] "w"(w0),

- [w1] "w"(w1),

- [w2] "w"(w2),

- [w3] "w"(w3),

- [w4] "w"(w4),

- [w5] "w"(w5),

- [w6] "w"(w6),

- [w7] "w"(w7),

- [w8] "w"(w8),

- [vbias] "w"(vbias),

- [outl] "r"(outl_ptr),

- [flag_mask] "r"(flag_mask)

- : "cc",

- "memory",

- "v0",

- "v1",

- "v2",

- "v3",

- "v4",

- "v5",

- "v6",

- "v7",

- "v8",

- "v9",

- "v10",

- "v11",

- "v15",

- "v16",

- "v17",

- "v18",

- "v19",

- "v20",

- "v21",

- "v22",

- "x0",

- "x1",

- "x2",

- "x3",

- "x4",

- "x5",

- "x6",

- "x7");

-#else

-#if 1 // def LITE_WITH_ARM_CLANG

-#else

- asm volatile(COMPUTE RELU RELU6 STORE

- : [r0] "+r"(inr0),

- [r1] "+r"(inr1),

- [r2] "+r"(inr2),

- [r3] "+r"(inr3),

- [out0] "+r"(out0),

- [wc0] "+r"(weight_c)

- : [flag_mask] "r"(flag_mask), [outl] "r"(outl_ptr)

- : "cc",

- "memory",

- "q0",

- "q1",

- "q2",

- "q3",

- "q4",

- "q5",

- "q6",

- "q7",

- "q8",

- "q9",

- "q10",

- "q11",

- "q12",

- "q13",

- "q14",

- "q15",

- "r0",

- "r1",

- "r2",

- "r3",

- "r4",

- "r5");

-#endif

-#endif

+ six_ptr[0] = act_param.Relu_clipped_coef;

+ six_ptr[1] = act_param.Relu_clipped_coef;

+ six_ptr[2] = act_param.Relu_clipped_coef;

+ six_ptr[3] = act_param.Relu_clipped_coef;

+ conv_3x3s1_depthwise_fp32_relu6(i_data,

+ o_data,

+ bs,

+ oc,

+ oh,

+ ow,

+ ic,

+ ih,

+ win,

+ weights,

+ bias,

+ relu_ptr,

+ six_ptr,

+ scale_ptr,

+ param,

+ ctx);

break;

case lite_api::ActivationType::kLeakyRelu:

-#ifdef __aarch64__

- asm volatile(COMPUTE LEAKY_RELU STORE

- : [inr0] "+r"(inr0),

- [inr1] "+r"(inr1),

- [inr2] "+r"(inr2),

- [inr3] "+r"(inr3),

- [out] "+r"(out0)

- : [w0] "w"(w0),

- [w1] "w"(w1),

- [w2] "w"(w2),

- [w3] "w"(w3),

- [w4] "w"(w4),

- [w5] "w"(w5),

- [w6] "w"(w6),

- [w7] "w"(w7),

- [w8] "w"(w8),

- [vbias] "w"(vbias),

- [outl] "r"(outl_ptr),

- [flag_mask] "r"(flag_mask)

- : "cc",

- "memory",

- "v0",

- "v1",

- "v2",

- "v3",

- "v4",

- "v5",

- "v6",

- "v7",

- "v8",

- "v9",

- "v10",

- "v11",

- "v15",

- "v16",

- "v17",

- "v18",

- "v19",

- "v20",

- "v21",

- "v22",

- "x0",

- "x1",

- "x2",

- "x3",

- "x4",

- "x5",

- "x6",

- "x7");

-#else

-#if 1 // def LITE_WITH_ARM_CLANG

-#else

- asm volatile(COMPUTE LEAKY_RELU STORE

- : [r0] "+r"(inr0),

- [r1] "+r"(inr1),

- [r2] "+r"(inr2),

- [r3] "+r"(inr3),

- [out0] "+r"(out0),

- [wc0] "+r"(weight_c)

- : [flag_mask] "r"(flag_mask), [outl] "r"(outl_ptr)

- : "cc",

- "memory",

- "q0",

- "q1",

- "q2",

- "q3",

- "q4",

- "q5",

- "q6",

- "q7",

- "q8",

- "q9",

- "q10",

- "q11",

- "q12",

- "q13",

- "q14",

- "q15",

- "r0",

- "r1",

- "r2",

- "r3",

- "r4",

- "r5");

-#endif

-#endif

+ scale_ptr[0] = act_param.Leaky_relu_alpha;

+ scale_ptr[1] = act_param.Leaky_relu_alpha;

+ scale_ptr[2] = act_param.Leaky_relu_alpha;

+ scale_ptr[3] = act_param.Leaky_relu_alpha;

+ conv_3x3s1_depthwise_fp32_leakyRelu(i_data,

+ o_data,

+ bs,

+ oc,

+ oh,

+ ow,

+ ic,

+ ih,

+ win,

+ weights,

+ bias,

+ relu_ptr,

+ six_ptr,

+ scale_ptr,

+ param,

+ ctx);

break;

default:

LOG(FATAL) << "this act_type: "

@@ -728,108 +589,289 @@ void act_switch_3x3s1(const float* inr0,

<< " fuse not support";

}

} else {

-#ifdef __aarch64__

- asm volatile(COMPUTE STORE

- : [inr0] "+r"(inr0),

- [inr1] "+r"(inr1),

- [inr2] "+r"(inr2),

- [inr3] "+r"(inr3),

- [out] "+r"(out0)

- : [w0] "w"(w0),

- [w1] "w"(w1),

- [w2] "w"(w2),

- [w3] "w"(w3),

- [w4] "w"(w4),

- [w5] "w"(w5),

- [w6] "w"(w6),

- [w7] "w"(w7),

- [w8] "w"(w8),

- [vbias] "w"(vbias),

- [outl] "r"(outl_ptr),

- [flag_mask] "r"(flag_mask)

- : "cc",

- "memory",

- "v0",

- "v1",

- "v2",

- "v3",

- "v4",

- "v5",

- "v6",

- "v7",

- "v8",

- "v9",

- "v10",

- "v11",

- "v15",

- "v16",

- "v17",

- "v18",

- "v19",

- "v20",

- "v21",

- "v22",

- "x0",

- "x1",

- "x2",

- "x3",

- "x4",

- "x5",

- "x6",

- "x7");

-#else

-#if 1 // def LITE_WITH_ARM_CLANG

+ conv_3x3s1_depthwise_fp32_bias(i_data,

+ o_data,

+ bs,

+ oc,

+ oh,

+ ow,

+ ic,

+ ih,

+ win,

+ weights,

+ bias,

+ relu_ptr,

+ six_ptr,

+ scale_ptr,

+ param,

+ ctx);

+ }

+}

+

+void conv_3x3s1_depthwise_fp32_bias(const float* i_data,

+ float* o_data,

+ int bs,

+ int oc,

+ int oh,

+ int ow,

+ int ic,

+ int ih,

+ int win,

+ const float* weights,

+ const float* bias,

+ float* relu_ptr,

+ float* six_ptr,

+ float* scale_ptr,

+ const operators::ConvParam& param,

+ ARMContext* ctx) {

+ int threads = ctx->threads();

+

+ auto paddings = *param.paddings;

+ const int pad_h = paddings[0];

+ const int pad_w = paddings[2];

+

+ const int out_c_block = 4;

+ const int out_h_kernel = 2;

+ const int out_w_kernel = 4;

+ const int win_ext = ow + 2;

+ const int ow_round = ROUNDUP(ow, 4);

+ const int win_round = ROUNDUP(win_ext, 4);

+ const int hin_round = oh + 2;

+ const int prein_size = win_round * hin_round * out_c_block;

+ auto workspace_size =

+ threads * prein_size + win_round /*tmp zero*/ + ow_round /*tmp writer*/;

+ ctx->ExtendWorkspace(sizeof(float) * workspace_size);

+

+ bool flag_bias = param.bias != nullptr;

+

+ /// get workspace

+ LOG(INFO) << "conv_3x3s1_depthwise_fp32_bias: ";

+ float* ptr_zero = ctx->workspace_data();

+ memset(ptr_zero, 0, sizeof(float) * win_round);

+ float* ptr_write = ptr_zero + win_round;

+

+ int size_in_channel = win * ih;

+ int size_out_channel = ow * oh;

+

+ int ws = -pad_w;

+ int we = ws + win_round;

+ int hs = -pad_h;

+ int he = hs + hin_round;

+ int w_loop = ow_round / 4;

+ auto remain = w_loop * 4 - ow;

+ bool flag_remain = remain > 0;

+ remain = 4 - remain;

+ remain = remain > 0 ? remain : 0;

+ int row_len = win_round * out_c_block;

+

+ for (int n = 0; n < bs; ++n) {

+ const float* din_batch = i_data + n * ic * size_in_channel;

+ float* dout_batch = o_data + n * oc * size_out_channel;

+#pragma omp parallel for num_threads(threads)

+ for (int c = 0; c < oc; c += out_c_block) {

+#ifdef ARM_WITH_OMP

+ float* pre_din = ptr_write + ow_round + omp_get_thread_num() * prein_size;

#else

- asm volatile(COMPUTE STORE

- : [r0] "+r"(inr0),

- [r1] "+r"(inr1),

- [r2] "+r"(inr2),

- [r3] "+r"(inr3),

- [out0] "+r"(out0),

- [wc0] "+r"(weight_c)

- : [flag_mask] "r"(flag_mask), [outl] "r"(outl_ptr)

- : "cc",

- "memory",

- "q0",

- "q1",

- "q2",

- "q3",

- "q4",

- "q5",

- "q6",

- "q7",

- "q8",

- "q9",

- "q10",

- "q11",

- "q12",

- "q13",

- "q14",

- "q15",

- "r0",

- "r1",

- "r2",

- "r3",

- "r4",

- "r5");

+ float* pre_din = ptr_write + ow_round;

#endif

+ /// const array size

+ float pre_out[out_c_block * out_w_kernel * out_h_kernel]; // NOLINT

+ prepack_input_nxwc4_dw(

+ din_batch, pre_din, c, hs, he, ws, we, ic, win, ih, ptr_zero);

+ const float* weight_c = weights + c * 9; // kernel_w * kernel_h

+ float* dout_c00 = dout_batch + c * size_out_channel;

+ float bias_local[4] = {0, 0, 0, 0};

+ if (flag_bias) {

+ bias_local[0] = bias[c];

+ bias_local[1] = bias[c + 1];

+ bias_local[2] = bias[c + 2];

+ bias_local[3] = bias[c + 3];

+ }

+ float32x4_t vbias = vld1q_f32(bias_local);

+#ifdef __aarch64__

+ float32x4_t w0 = vld1q_f32(weight_c); // w0, v23

+ float32x4_t w1 = vld1q_f32(weight_c + 4); // w1, v24

+ float32x4_t w2 = vld1q_f32(weight_c + 8); // w2, v25

+ float32x4_t w3 = vld1q_f32(weight_c + 12); // w3, v26

+ float32x4_t w4 = vld1q_f32(weight_c + 16); // w4, v27

+ float32x4_t w5 = vld1q_f32(weight_c + 20); // w5, v28

+ float32x4_t w6 = vld1q_f32(weight_c + 24); // w6, v29

+ float32x4_t w7 = vld1q_f32(weight_c + 28); // w7, v30

+ float32x4_t w8 = vld1q_f32(weight_c + 32); // w8, v31

+#endif

+ for (int h = 0; h < oh; h += out_h_kernel) {

+ float* outc00 = dout_c00 + h * ow;

+ float* outc01 = outc00 + ow;

+ float* outc10 = outc00 + size_out_channel;

+ float* outc11 = outc10 + ow;

+ float* outc20 = outc10 + size_out_channel;

+ float* outc21 = outc20 + ow;

+ float* outc30 = outc20 + size_out_channel;

+ float* outc31 = outc30 + ow;

+ const float* inr0 = pre_din + h * row_len;

+ const float* inr1 = inr0 + row_len;

+ const float* inr2 = inr1 + row_len;

+ const float* inr3 = inr2 + row_len;

+ if (c + out_c_block > oc) {

+ switch (c + out_c_block - oc) {

+ case 3: // outc10-outc30 is ptr_write and extra

+ outc10 = ptr_write;

+ outc11 = ptr_write;

+ case 2: // outc20-outc30 is ptr_write and extra

+ outc20 = ptr_write;

+ outc21 = ptr_write;

+ case 1: // outc30 is ptr_write and extra

+ outc30 = ptr_write;

+ outc31 = ptr_write;

+ default:

+ break;

+ }

+ }

+ if (h + out_h_kernel > oh) {

+ outc01 = ptr_write;

+ outc11 = ptr_write;

+ outc21 = ptr_write;

+ outc31 = ptr_write;

+ }

+

+ float* outl[] = {outc00,

+ outc10,

+ outc20,

+ outc30,

+ outc01,

+ outc11,

+ outc21,

+ outc31,

+ reinterpret_cast(bias_local),

+ reinterpret_cast(relu_ptr),

+ reinterpret_cast(six_ptr),

+ reinterpret_cast(scale_ptr)};

+ void* outl_ptr = reinterpret_cast(outl);

+ for (int w = 0; w < w_loop; ++w) {

+ bool flag_mask = (w == w_loop - 1) && flag_remain;

+ float* out0 = pre_out;

+#ifdef __aarch64__

+ asm volatile(COMPUTE STORE

+ : [inr0] "+r"(inr0),

+ [inr1] "+r"(inr1),

+ [inr2] "+r"(inr2),

+ [inr3] "+r"(inr3),

+ [out] "+r"(out0)

+ : [w0] "w"(w0),

+ [w1] "w"(w1),

+ [w2] "w"(w2),

+ [w3] "w"(w3),

+ [w4] "w"(w4),

+ [w5] "w"(w5),

+ [w6] "w"(w6),

+ [w7] "w"(w7),

+ [w8] "w"(w8),

+ [vbias] "w"(vbias),

+ [outl] "r"(outl_ptr),

+ [flag_mask] "r"(flag_mask)

+ : "cc",

+ "memory",

+ "v0",

+ "v1",

+ "v2",

+ "v3",

+ "v4",

+ "v5",

+ "v6",

+ "v7",

+ "v8",

+ "v9",

+ "v10",

+ "v11",

+ "v15",

+ "v16",

+ "v17",

+ "v18",

+ "v19",

+ "v20",

+ "v21",

+ "v22",

+ "x0",

+ "x1",

+ "x2",

+ "x3",

+ "x4",

+ "x5",

+ "x6",

+ "x7");

+#else

+ asm volatile(COMPUTE STORE

+ : [r0] "+r"(inr0),

+ [r1] "+r"(inr1),

+ [r2] "+r"(inr2),

+ [r3] "+r"(inr3),

+ [out0] "+r"(out0),

+ [wc0] "+r"(weight_c)

+ : [flag_mask] "r"(flag_mask), [outl] "r"(outl_ptr)

+ : "cc",

+ "memory",

+ "q0",

+ "q1",

+ "q2",

+ "q3",

+ "q4",

+ "q5",

+ "q6",

+ "q7",

+ "q8",

+ "q9",

+ "q10",

+ "q11",

+ "q12",

+ "q13",

+ "q14",

+ "q15",

+ "r0",

+ "r1",

+ "r2",

+ "r3",

+ "r4");

#endif

+ outl[0] += 4;

+ outl[1] += 4;

+ outl[2] += 4;

+ outl[3] += 4;

+ outl[4] += 4;

+ outl[5] += 4;

+ outl[6] += 4;

+ outl[7] += 4;

+ if (flag_mask) {

+ memcpy(outl[0] - 4, pre_out, remain * sizeof(float));

+ memcpy(outl[1] - 4, pre_out + 4, remain * sizeof(float));

+ memcpy(outl[2] - 4, pre_out + 8, remain * sizeof(float));

+ memcpy(outl[3] - 4, pre_out + 12, remain * sizeof(float));

+ memcpy(outl[4] - 4, pre_out + 16, remain * sizeof(float));

+ memcpy(outl[5] - 4, pre_out + 20, remain * sizeof(float));

+ memcpy(outl[6] - 4, pre_out + 24, remain * sizeof(float));

+ memcpy(outl[7] - 4, pre_out + 28, remain * sizeof(float));

+ }

+ }

+ }

+ }

}

}

-void conv_3x3s1_depthwise_fp32(const float* i_data,

- float* o_data,

- int bs,

- int oc,

- int oh,

- int ow,

- int ic,

- int ih,

- int win,

- const float* weights,

- const float* bias,

- const operators::ConvParam& param,

- const operators::ActivationParam act_param,

- ARMContext* ctx) {

+

+void conv_3x3s1_depthwise_fp32_relu(const float* i_data,

+ float* o_data,

+ int bs,

+ int oc,

+ int oh,

+ int ow,

+ int ic,

+ int ih,

+ int win,

+ const float* weights,

+ const float* bias,

+ float* relu_ptr,

+ float* six_ptr,

+ float* scale_ptr,

+ const operators::ConvParam& param,

+ ARMContext* ctx) {

int threads = ctx->threads();

auto paddings = *param.paddings;

@@ -869,31 +911,6 @@ void conv_3x3s1_depthwise_fp32(const float* i_data,

remain = remain > 0 ? remain : 0;

int row_len = win_round * out_c_block;

- float six_ptr[4] = {0.f, 0.f, 0.f, 0.f};

- float scale_ptr[4] = {1.f, 1.f, 1.f, 1.f};

- float relu_ptr[4] = {0.f, 0.f, 0.f, 0.f};

- if (act_param.has_active) {

- switch (act_param.active_type) {

- case lite_api::ActivationType::kRelu:

- break;

- case lite_api::ActivationType::kRelu6:

- six_ptr[0] = act_param.Relu_clipped_coef;

- six_ptr[1] = act_param.Relu_clipped_coef;

- six_ptr[2] = act_param.Relu_clipped_coef;

- six_ptr[3] = act_param.Relu_clipped_coef;

- break;

- case lite_api::ActivationType::kLeakyRelu:

- scale_ptr[0] = act_param.Leaky_relu_alpha;

- scale_ptr[1] = act_param.Leaky_relu_alpha;

- scale_ptr[2] = act_param.Leaky_relu_alpha;

- scale_ptr[3] = act_param.Leaky_relu_alpha;

- break;

- default:

- LOG(FATAL) << "this act_type: "

- << static_cast(act_param.active_type)

- << " fuse not support";

- }

- }

for (int n = 0; n < bs; ++n) {

const float* din_batch = i_data + n * ic * size_in_channel;

float* dout_batch = o_data + n * oc * size_out_channel;

@@ -944,13 +961,13 @@ void conv_3x3s1_depthwise_fp32(const float* i_data,

const float* inr3 = inr2 + row_len;