Merge branch 'develop' of https://github.com/PaddlePaddle/paddle-mobile into dev-latest

Showing

benchmark/arm_benchmark.md

0 → 100644

benchmark/metal_benchmark.md

0 → 100644

doc/development_arm_linux.md

0 → 100644

doc/development_ios.md

0 → 100644

doc/images/devices.png

已删除

100644 → 0

116.0 KB

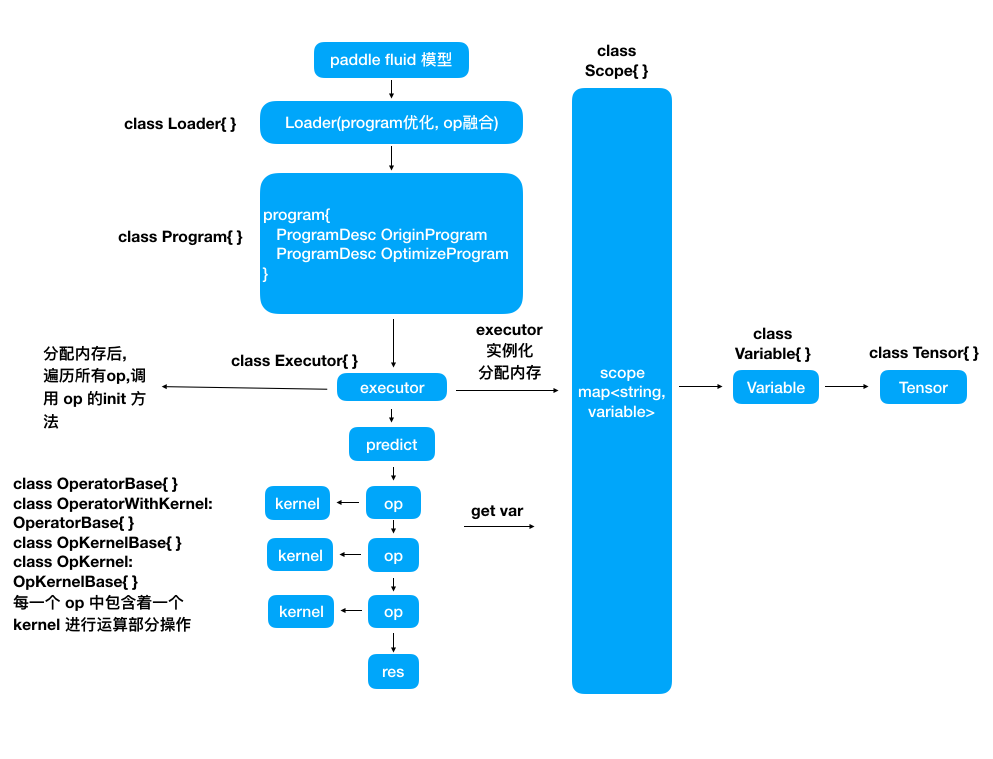

doc/images/flow_chart.png

已删除

100644 → 0

110.3 KB

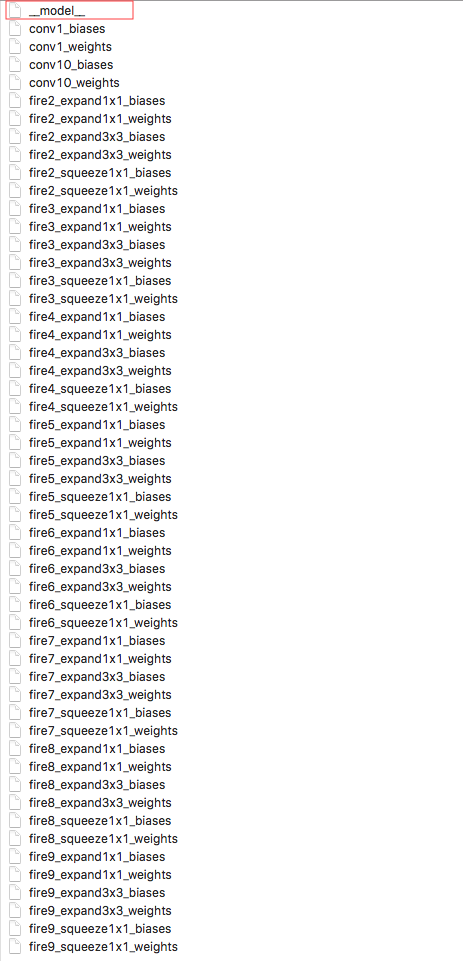

doc/images/model_desc.png

已删除

100644 → 0

162.1 KB

10.1 KB

src/framework/mixed_vector.h

0 → 100644

src/framework/selected_rows.cpp

0 → 100644

src/framework/selected_rows.h

0 → 100644

src/operators/kernel/sum_kernel.h

0 → 100644

src/operators/math/gpc.cpp

0 → 100644

此差异已折叠。

src/operators/math/gpc.h

0 → 100644

此差异已折叠。

src/operators/math/poly_util.cpp

0 → 100644

src/operators/math/poly_util.h

0 → 100644

此差异已折叠。

此差异已折叠。

src/operators/sum_op.cpp

0 → 100644

此差异已折叠。

src/operators/sum_op.h

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

test/operators/test_sum_op.cpp

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

文件已移动

文件已移动

此差异已折叠。

此差异已折叠。

此差异已折叠。

文件已移动

文件已移动

此差异已折叠。

此差异已折叠。