Merge pull request #1 from PaddlePaddle/develop

Merge newest version

Files added

doc/build.md

0 → 100644

doc/design_doc.md

0 → 100644

doc/development_doc.md

0 → 100644

doc/images/devices.png

0 → 100644

116.0 KB

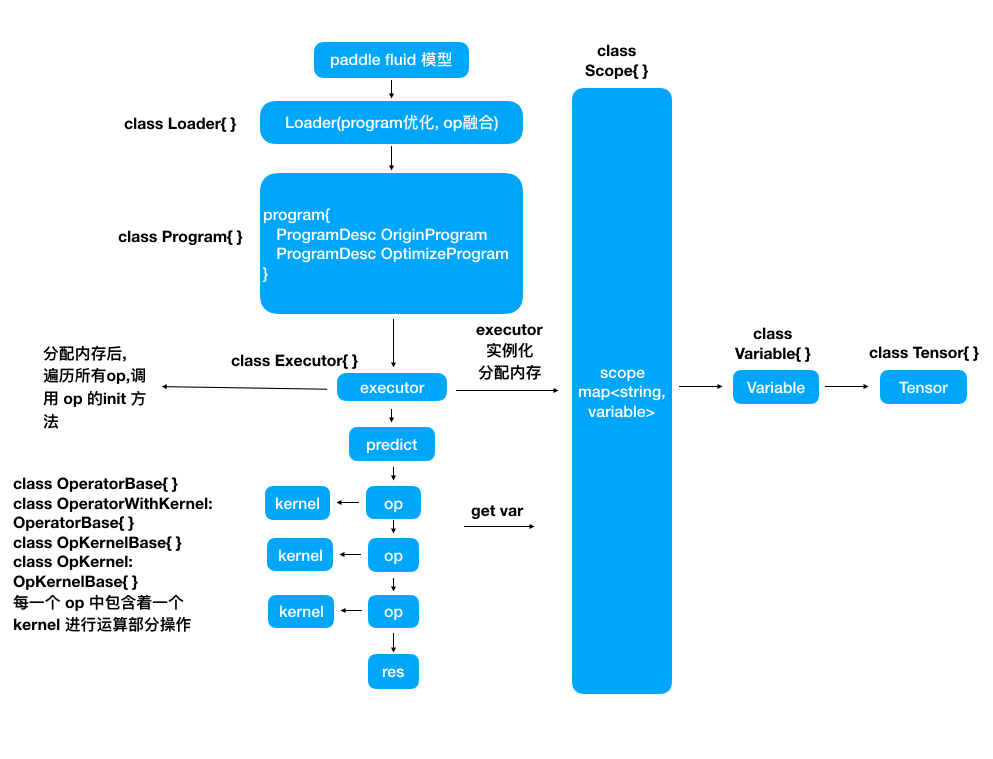

doc/images/flow_chart.png

0 → 100644

110.3 KB

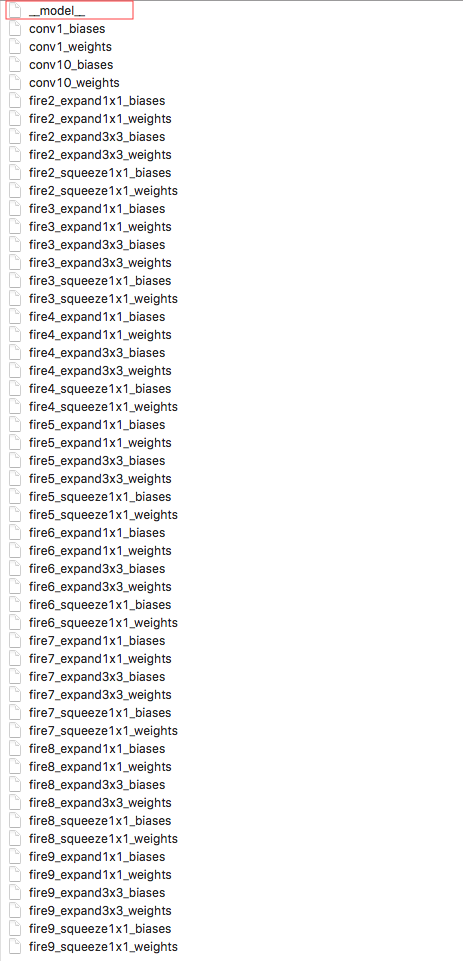

doc/images/model_desc.png

0 → 100644

162.1 KB

10.1 KB

src/common/common.h

0 → 100644

src/io/loader.cpp

0 → 100644

src/io/loader.h

0 → 100644

src/io/paddle_mobile.cpp

0 → 100644

src/io/paddle_mobile.h

0 → 100644

src/ios_io/PaddleMobile.h

0 → 100644

src/ios_io/PaddleMobile.mm

0 → 100644

src/ios_io/op_symbols.h

0 → 100644

src/operators/dropout_op.cpp

0 → 100644

src/operators/dropout_op.h

0 → 100644

src/operators/im2sequence_op.cpp

0 → 100644

src/operators/im2sequence_op.h

0 → 100644

tools/arm-platform.cmake

0 → 100644

tools/op.cmake

0 → 100644