diff --git a/README.md b/README.md

index 83d0a986da1d73151b8915ec60e5aa2f711837b5..22b84888294b5ef60c3d91d7a7909aef8f601d81 100644

--- a/README.md

+++ b/README.md

@@ -1 +1,74 @@

-编译方法: ./lite/tools/build_bm.sh --target_name=bm --bm_sdk_root=/Paddle-Lite/third-party/bmnnsdk2-bm1684_v2.0.1 bm

+[中文版](./README_cn.md)

+

+# Paddle Lite

+

+

+[](https://paddlepaddle.github.io/Paddle-Lite/)

+[](LICENSE)

+

+

+

+Paddle Lite is an updated version of Paddle-Mobile, an open-open source deep learning framework designed to make it easy to perform inference on mobile, embeded, and IoT devices. It is compatible with PaddlePaddle and pre-trained models from other sources.

+

+For tutorials, please see [PaddleLite Document](https://paddlepaddle.github.io/Paddle-Lite/).

+

+## Key Features

+

+### Light Weight

+

+On mobile devices, execution module can be deployed without third-party libraries, because our excecution module and analysis module are decoupled.

+

+On ARM V7, only 800KB are taken up, while on ARM V8, 1.3MB are taken up with the 80 operators and 85 kernels in the dynamic libraries provided by Paddle Lite.

+

+Paddle Lite enables immediate inference without extra optimization.

+

+### High Performance

+

+Paddle Lite enables device-optimized kernels, maximizing ARM CPU performance.

+

+It also supports INT8 quantizations with [PaddleSlim model compression tools](https://github.com/PaddlePaddle/models/tree/v1.5/PaddleSlim), reducing the size of models and increasing the performance of models.

+

+On Huawei NPU and FPGA, the performance is also boosted.

+

+The latest benchmark is located at [benchmark](https://paddlepaddle.github.io/Paddle-Lite/develop/benchmark/)

+

+### High Compatibility

+

+Hardware compatibility: Paddle Lite supports a diversity of hardwares — ARM CPU, Mali GPU, Adreno GPU, Huawei NPU and FPGA. In the near future, we will also support AI microchips from Cambricon and Bitmain.

+

+Model compatibility: The Op of Paddle Lite is fully compatible to that of PaddlePaddle. The accuracy and performance of 18 models (mostly CV models and OCR models) and 85 operators have been validated. In the future, we will also support other models.

+

+Framework compatibility: In addition to models trained on PaddlePaddle, those trained on Caffe and TensorFlow can also be converted to be used on Paddle Lite, via [X2Paddle](https://github.com/PaddlePaddle/X2Paddle). In the future to come, we will also support models of ONNX format.

+

+## Architecture

+

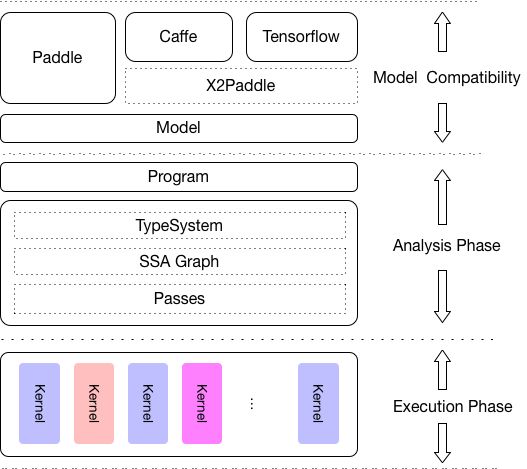

+Paddle Lite is designed to support a wide range of hardwares and devices, and it enables mixed execution of a single model on multiple devices, optimization on various phases, and leight-weighted applications on devices.

+

+

+

+As is shown in the figure above, analysis phase includes Machine IR module, and it enables optimizations like Op fusion and redundant computation pruning. Besides, excecution phase only involves Kernal exevution, so it can be deployed on its own to ensure maximized light-weighted deployment.

+

+## Key Info about the Update

+

+The earlier Paddle-Mobile was designed to be compatible with PaddlePaddle and multiple hardwares, including ARM CPU, Mali GPU, Adreno GPU, FPGA, ARM-Linux and Apple's GPU Metal. Within Baidu, inc, many product lines have been using Paddle-Mobile. For more details, please see: [mobile/README](https://github.com/PaddlePaddle/Paddle-Lite/blob/develop/mobile/README.md).

+

+As an update of Paddle-Mobile, Paddle Lite has incorporated many older capabilities into the [new architecture](https://github.com/PaddlePaddle/Paddle-Lite/tree/develop/lite). For the time being, the code of Paddle-mobile will be kept under the directory `mobile/`, before complete transfer to Paddle Lite.

+

+For demands of Apple's GPU Metal and web front end inference, please see `./metal` and `./web` . These two modules will be further developed and maintained.

+

+## Special Thanks

+

+Paddle Lite has referenced the following open-source projects:

+

+- [ARM compute library](http://agroup.baidu.com/paddle-infer/md/article/%28https://github.com/ARM-software/ComputeLibrary%29)

+- [Anakin](https://github.com/PaddlePaddle/Anakin). The optimizations under Anakin has been incorporated into Paddle Lite, and so there will not be any future updates of Anakin. As another high-performance inference project under PaddlePaddle, Anakin has been forward-looking and helpful to the making of Paddle Lite.

+

+

+## Feedback and Community Support

+

+- Questions, reports, and suggestions are welcome through Github Issues!

+- Forum: Opinions and questions are welcome at our [PaddlePaddle Forum](https://ai.baidu.com/forum/topic/list/168)!

+- WeChat Official Account: PaddlePaddle

+- QQ Group Chat: 696965088

+

+ WeChat Official Account QQ Group Chat

diff --git a/cmake/cross_compiling/ios.cmake b/cmake/cross_compiling/ios.cmake

index 76f62765aff791594123d689341b0876b3d0184d..0597ef0cc4ba4c0bcec172c767d66d0f362e1459 100644

--- a/cmake/cross_compiling/ios.cmake

+++ b/cmake/cross_compiling/ios.cmake

@@ -120,6 +120,7 @@

#

## Lite settings

+set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -flto")

if (ARM_TARGET_OS STREQUAL "ios")

set(PLATFORM "OS")

elseif(ARM_TARGET_OS STREQUAL "ios64")

diff --git a/cmake/cross_compiling/npu.cmake b/cmake/cross_compiling/npu.cmake

index 25aa4d2bc8c1c145e7a103c9164e1c9e231a8f9e..c22bb1db4fbf8a7370ff3e7c9aca40cc94d550a2 100644

--- a/cmake/cross_compiling/npu.cmake

+++ b/cmake/cross_compiling/npu.cmake

@@ -30,7 +30,7 @@ if(NOT NPU_DDK_INC)

message(FATAL_ERROR "Can not find HiAiModelManagerService.h in ${NPU_DDK_ROOT}/include")

endif()

-include_directories("${NPU_DDK_ROOT}")

+include_directories("${NPU_DDK_ROOT}/include")

set(NPU_SUB_LIB_PATH "lib64")

if(ARM_TARGET_ARCH_ABI STREQUAL "armv8")

diff --git a/lite/CMakeLists.txt b/lite/CMakeLists.txt

index c053d4ec2bd72258438694143fd08957cd0d35c0..cb6a872e061a51f142bd2301171f0559a1ccb129 100644

--- a/lite/CMakeLists.txt

+++ b/lite/CMakeLists.txt

@@ -224,10 +224,14 @@ if (LITE_WITH_LIGHT_WEIGHT_FRAMEWORK AND LITE_WITH_ARM)

COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/mobile_full/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/mobile_full/Makefile"

COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/mobile_light" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/mobile_light/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/mobile_light/Makefile"

- COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/mobile_detection" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

- COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/mobile_detection/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/mobile_detection/Makefile"

+ COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/ssd_detection" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

+ COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/ssd_detection/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/ssd_detection/Makefile"

+ COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/yolov3_detection" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

+ COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/yolov3_detection/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/yolov3_detection/Makefile"

COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/mobile_classify" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/mobile_classify/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/mobile_classify/Makefile"

+ COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/test_cv" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

+ COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/test_cv/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/test_cv/Makefile"

)

add_dependencies(publish_inference_android_cxx_demos logging gflags)

add_dependencies(publish_inference_cxx_lib publish_inference_android_cxx_demos)

@@ -239,10 +243,14 @@ if (LITE_WITH_LIGHT_WEIGHT_FRAMEWORK AND LITE_WITH_ARM)

COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/README.md" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/mobile_light" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/mobile_light/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/mobile_light/Makefile"

- COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/mobile_detection" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

- COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/mobile_detection/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/mobile_detection/Makefile"

+ COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/ssd_detection" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

+ COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/ssd_detection/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/ssd_detection/Makefile"

+ COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/yolov3_detection" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

+ COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/yolov3_detection/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/yolov3_detection/Makefile"

COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/mobile_classify" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/mobile_classify/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/mobile_classify/Makefile"

+ COMMAND cp -r "${CMAKE_SOURCE_DIR}/lite/demo/cxx/test_cv" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx"

+ COMMAND cp "${CMAKE_SOURCE_DIR}/lite/demo/cxx/makefiles/test_cv/Makefile.${ARM_TARGET_OS}.${ARM_TARGET_ARCH_ABI}" "${INFER_LITE_PUBLISH_ROOT}/demo/cxx/test_cv/Makefile"

)

add_dependencies(tiny_publish_cxx_lib publish_inference_android_cxx_demos)

endif()

diff --git a/lite/api/CMakeLists.txt b/lite/api/CMakeLists.txt

index d57496487a2bb2756f6755916b2761c04aa626d5..a1fde4c152c003e3b1adcea77aa78446ba7a1df5 100644

--- a/lite/api/CMakeLists.txt

+++ b/lite/api/CMakeLists.txt

@@ -35,6 +35,7 @@ if ((NOT LITE_ON_TINY_PUBLISH) AND (LITE_WITH_CUDA OR LITE_WITH_X86 OR ARM_TARGE

NPU_DEPS ${npu_kernels})

target_link_libraries(paddle_light_api_shared ${light_lib_DEPS} ${arm_kernels} ${npu_kernels})

+

if (LITE_WITH_NPU)

# Strips the symbols of our protobuf functions to fix the conflicts during

# loading HIAI builder libs (libhiai_ir.so and libhiai_ir_build.so)

@@ -45,8 +46,8 @@ else()

if ((ARM_TARGET_OS STREQUAL "android") OR (ARM_TARGET_OS STREQUAL "armlinux"))

add_library(paddle_light_api_shared SHARED "")

target_sources(paddle_light_api_shared PUBLIC ${__lite_cc_files} paddle_api.cc light_api.cc light_api_impl.cc)

- set_target_properties(paddle_light_api_shared PROPERTIES COMPILE_FLAGS "-flto -fdata-sections")

- add_dependencies(paddle_light_api_shared op_list_h kernel_list_h)

+ set_target_properties(paddle_light_api_shared PROPERTIES COMPILE_FLAGS "-flto -fdata-sections")

+ add_dependencies(paddle_light_api_shared op_list_h kernel_list_h)

if (LITE_WITH_NPU)

# Need to add HIAI runtime libs (libhiai.so) dependency

target_link_libraries(paddle_light_api_shared ${npu_builder_libs} ${npu_runtime_libs})

@@ -91,6 +92,7 @@ if (NOT LITE_ON_TINY_PUBLISH)

SRCS cxx_api.cc

DEPS ${cxx_api_deps} ${ops} ${host_kernels} program

X86_DEPS ${x86_kernels}

+ CUDA_DEPS ${cuda_kernels}

ARM_DEPS ${arm_kernels}

CV_DEPS paddle_cv_arm

NPU_DEPS ${npu_kernels}

@@ -129,7 +131,9 @@ if(WITH_TESTING)

DEPS cxx_api mir_passes lite_api_test_helper

${ops} ${host_kernels}

X86_DEPS ${x86_kernels}

+ CUDA_DEPS ${cuda_kernels}

ARM_DEPS ${arm_kernels}

+ CV_DEPS paddle_cv_arm

NPU_DEPS ${npu_kernels}

XPU_DEPS ${xpu_kernels}

CL_DEPS ${opencl_kernels}

@@ -293,12 +297,13 @@ if (LITE_ON_MODEL_OPTIMIZE_TOOL)

message(STATUS "Compiling model_optimize_tool")

lite_cc_binary(model_optimize_tool SRCS model_optimize_tool.cc cxx_api_impl.cc paddle_api.cc cxx_api.cc

DEPS gflags kernel op optimizer mir_passes utils)

- add_dependencies(model_optimize_tool op_list_h kernel_list_h all_kernel_faked_cc)

+ add_dependencies(model_optimize_tool op_list_h kernel_list_h all_kernel_faked_cc supported_kernel_op_info_h)

endif(LITE_ON_MODEL_OPTIMIZE_TOOL)

lite_cc_test(test_paddle_api SRCS paddle_api_test.cc DEPS paddle_api_full paddle_api_light

${ops}

ARM_DEPS ${arm_kernels}

+ CV_DEPS paddle_cv_arm

NPU_DEPS ${npu_kernels}

XPU_DEPS ${xpu_kernels}

CL_DEPS ${opencl_kernels}

@@ -327,13 +332,14 @@ if(NOT IOS)

lite_cc_binary(benchmark_bin SRCS benchmark.cc DEPS paddle_api_full paddle_api_light gflags utils

${ops} ${host_kernels}

ARM_DEPS ${arm_kernels}

+ CV_DEPS paddle_cv_arm

NPU_DEPS ${npu_kernels}

XPU_DEPS ${xpu_kernels}

CL_DEPS ${opencl_kernels}

FPGA_DEPS ${fpga_kernels}

X86_DEPS ${x86_kernels}

CUDA_DEPS ${cuda_kernels})

- lite_cc_binary(multithread_test SRCS lite_multithread_test.cc DEPS paddle_api_full paddle_api_light gflags utils

+ lite_cc_binary(multithread_test SRCS lite_multithread_test.cc DEPS paddle_api_full paddle_api_light gflags utils

${ops} ${host_kernels}

ARM_DEPS ${arm_kernels}

CV_DEPS paddle_cv_arm

diff --git a/lite/api/cxx_api.cc b/lite/api/cxx_api.cc

index 990d08f18f541088d797510e9dbd4881d42b164f..c1e9fc422450adf96d62c68d622907bd7e15b405 100644

--- a/lite/api/cxx_api.cc

+++ b/lite/api/cxx_api.cc

@@ -201,7 +201,11 @@ void Predictor::Build(const lite_api::CxxConfig &config,

const std::string &model_file = config.model_file();

const std::string ¶m_file = config.param_file();

const bool model_from_memory = config.model_from_memory();

- LOG(INFO) << "load from memory " << model_from_memory;

+ if (model_from_memory) {

+ LOG(INFO) << "Load model from memory.";

+ } else {

+ LOG(INFO) << "Load model from file.";

+ }

Build(model_path,

model_file,

diff --git a/lite/api/cxx_api_impl.cc b/lite/api/cxx_api_impl.cc

index 3e6e10103e9f3af51923459a5921f9781431f352..81ea60eac66849f8ce42fb8cb210226d18bbfa9b 100644

--- a/lite/api/cxx_api_impl.cc

+++ b/lite/api/cxx_api_impl.cc

@@ -42,11 +42,11 @@ void CxxPaddleApiImpl::Init(const lite_api::CxxConfig &config) {

#if (defined LITE_WITH_X86) && (defined PADDLE_WITH_MKLML) && \

!(defined LITE_ON_MODEL_OPTIMIZE_TOOL)

- int num_threads = config.cpu_math_library_num_threads();

+ int num_threads = config.x86_math_library_num_threads();

int real_num_threads = num_threads > 1 ? num_threads : 1;

paddle::lite::x86::MKL_Set_Num_Threads(real_num_threads);

omp_set_num_threads(real_num_threads);

- VLOG(3) << "set_cpu_math_library_math_threads() is set successfully and the "

+ VLOG(3) << "set_x86_math_library_math_threads() is set successfully and the "

"number of threads is:"

<< num_threads;

#endif

diff --git a/lite/api/lite_multithread_test.cc b/lite/api/lite_multithread_test.cc

old mode 100755

new mode 100644

diff --git a/lite/api/model_optimize_tool.cc b/lite/api/model_optimize_tool.cc

index b678c7ecd24c5ffbf3e9e3531264ac195c6a7325..fc23e0b54be41bff5b7b65b4e58908546b186bb4 100644

--- a/lite/api/model_optimize_tool.cc

+++ b/lite/api/model_optimize_tool.cc

@@ -16,8 +16,9 @@

#ifdef PADDLE_WITH_TESTING

#include

#endif

-// "all_kernel_faked.cc" and "kernel_src_map.h" are created automatically during

-// model_optimize_tool's compiling period

+// "supported_kernel_op_info.h", "all_kernel_faked.cc" and "kernel_src_map.h"

+// are created automatically during model_optimize_tool's compiling period

+#include

#include "all_kernel_faked.cc" // NOLINT

#include "kernel_src_map.h" // NOLINT

#include "lite/api/cxx_api.h"

@@ -25,8 +26,11 @@

#include "lite/api/paddle_use_ops.h"

#include "lite/api/paddle_use_passes.h"

#include "lite/core/op_registry.h"

+#include "lite/model_parser/compatible_pb.h"

+#include "lite/model_parser/pb/program_desc.h"

#include "lite/utils/cp_logging.h"

#include "lite/utils/string.h"

+#include "supported_kernel_op_info.h" // NOLINT

DEFINE_string(model_dir,

"",

@@ -62,10 +66,16 @@ DEFINE_string(valid_targets,

"The targets this model optimized for, should be one of (arm, "

"opencl, x86), splitted by space");

DEFINE_bool(prefer_int8_kernel, false, "Prefer to run model with int8 kernels");

+DEFINE_bool(print_supported_ops,

+ false,

+ "Print supported operators on the inputed target");

+DEFINE_bool(print_all_ops,

+ false,

+ "Print all the valid operators of Paddle-Lite");

+DEFINE_bool(print_model_ops, false, "Print operators in the input model");

namespace paddle {

namespace lite_api {

-

//! Display the kernel information.

void DisplayKernels() {

LOG(INFO) << ::paddle::lite::KernelRegistry::Global().DebugString();

@@ -130,9 +140,7 @@ void RunOptimize(const std::string& model_dir,

config.set_model_dir(model_dir);

config.set_model_file(model_file);

config.set_param_file(param_file);

-

config.set_valid_places(valid_places);

-

auto predictor = lite_api::CreatePaddlePredictor(config);

LiteModelType model_type;

@@ -168,6 +176,202 @@ void CollectModelMetaInfo(const std::string& output_dir,

lite::WriteLines(std::vector(total.begin(), total.end()),

output_path);

}

+void PrintOpsInfo(std::set valid_ops = {}) {

+ std::vector targets = {"kHost",

+ "kX86",

+ "kCUDA",

+ "kARM",

+ "kOpenCL",

+ "kFPGA",

+ "kNPU",

+ "kXPU",

+ "kAny",

+ "kUnk"};

+ int maximum_optype_length = 0;

+ for (auto it = supported_ops.begin(); it != supported_ops.end(); it++) {

+ maximum_optype_length = it->first.size() > maximum_optype_length

+ ? it->first.size()

+ : maximum_optype_length;

+ }

+ std::cout << std::setiosflags(std::ios::internal);

+ std::cout << std::setw(maximum_optype_length) << "OP_name";

+ for (int i = 0; i < targets.size(); i++) {

+ std::cout << std::setw(10) << targets[i].substr(1);

+ }

+ std::cout << std::endl;

+ if (valid_ops.empty()) {

+ for (auto it = supported_ops.begin(); it != supported_ops.end(); it++) {

+ std::cout << std::setw(maximum_optype_length) << it->first;

+ auto ops_valid_places = it->second;

+ for (int i = 0; i < targets.size(); i++) {

+ if (std::find(ops_valid_places.begin(),

+ ops_valid_places.end(),

+ targets[i]) != ops_valid_places.end()) {

+ std::cout << std::setw(10) << "Y";

+ } else {

+ std::cout << std::setw(10) << " ";

+ }

+ }

+ std::cout << std::endl;

+ }

+ } else {

+ for (auto op = valid_ops.begin(); op != valid_ops.end(); op++) {

+ std::cout << std::setw(maximum_optype_length) << *op;

+ // Check: If this kernel doesn't match any operator, we will skip it.

+ if (supported_ops.find(*op) == supported_ops.end()) {

+ continue;

+ }

+ // Print OP info.

+ auto ops_valid_places = supported_ops.at(*op);

+ for (int i = 0; i < targets.size(); i++) {

+ if (std::find(ops_valid_places.begin(),

+ ops_valid_places.end(),

+ targets[i]) != ops_valid_places.end()) {

+ std::cout << std::setw(10) << "Y";

+ } else {

+ std::cout << std::setw(10) << " ";

+ }

+ }

+ std::cout << std::endl;

+ }

+ }

+}

+/// Print help information

+void PrintHelpInfo() {

+ // at least one argument should be inputed

+ const char help_info[] =

+ "At least one argument should be inputed. Valid arguments are listed "

+ "below:\n"

+ " Arguments of model optimization:\n"

+ " `--model_dir=`\n"

+ " `--model_file=`\n"

+ " `--param_file=`\n"

+ " `--optimize_out_type=(protobuf|naive_buffer)`\n"

+ " `--optimize_out=`\n"

+ " `--valid_targets=(arm|opencl|x86|npu|xpu)`\n"

+ " `--prefer_int8_kernel=(true|false)`\n"

+ " `--record_tailoring_info=(true|false)`\n"

+ " Arguments of model checking and ops information:\n"

+ " `--print_all_ops=true` Display all the valid operators of "

+ "Paddle-Lite\n"

+ " `--print_supported_ops=true "

+ "--valid_targets=(arm|opencl|x86|npu|xpu)`"

+ " Display valid operators of input targets\n"

+ " `--print_model_ops=true --model_dir= "

+ "--valid_targets=(arm|opencl|x86|npu|xpu)`"

+ " Display operators in the input model\n";

+ std::cout << help_info << std::endl;

+ exit(1);

+}

+

+// Parse Input command

+void ParseInputCommand() {

+ if (FLAGS_print_all_ops) {

+ std::cout << "All OPs supported by Paddle-Lite: " << supported_ops.size()

+ << " ops in total." << std::endl;

+ PrintOpsInfo();

+ exit(1);

+ } else if (FLAGS_print_supported_ops) {

+ auto valid_places = paddle::lite_api::ParserValidPlaces();

+ // get valid_targets string

+ std::vector target_types = {};

+ for (int i = 0; i < valid_places.size(); i++) {

+ target_types.push_back(valid_places[i].target);

+ }

+ std::string targets_str = TargetToStr(target_types[0]);

+ for (int i = 1; i < target_types.size(); i++) {

+ targets_str = targets_str + TargetToStr(target_types[i]);

+ }

+

+ std::cout << "Supported OPs on '" << targets_str << "': " << std::endl;

+ target_types.push_back(TARGET(kHost));

+ target_types.push_back(TARGET(kUnk));

+

+ std::set valid_ops;

+ for (int i = 0; i < target_types.size(); i++) {

+ auto ops = supported_ops_target[static_cast(target_types[i])];

+ valid_ops.insert(ops.begin(), ops.end());

+ }

+ PrintOpsInfo(valid_ops);

+ exit(1);

+ }

+}

+// test whether this model is supported

+void CheckIfModelSupported() {

+ // 1. parse valid places and valid targets

+ auto valid_places = paddle::lite_api::ParserValidPlaces();

+ // set valid_ops

+ auto valid_ops = supported_ops_target[static_cast(TARGET(kHost))];

+ auto valid_unktype_ops = supported_ops_target[static_cast(TARGET(kUnk))];

+ valid_ops.insert(

+ valid_ops.end(), valid_unktype_ops.begin(), valid_unktype_ops.end());

+ for (int i = 0; i < valid_places.size(); i++) {

+ auto target = valid_places[i].target;

+ auto ops = supported_ops_target[static_cast(target)];

+ valid_ops.insert(valid_ops.end(), ops.begin(), ops.end());

+ }

+ // get valid ops

+ std::set valid_ops_set(valid_ops.begin(), valid_ops.end());

+

+ // 2.Load model into program to get ops in model

+ std::string prog_path = FLAGS_model_dir + "/__model__";

+ if (!FLAGS_model_file.empty() && !FLAGS_param_file.empty()) {

+ prog_path = FLAGS_model_file;

+ }

+ lite::cpp::ProgramDesc cpp_prog;

+ framework::proto::ProgramDesc pb_proto_prog =

+ *lite::LoadProgram(prog_path, false);

+ lite::pb::ProgramDesc pb_prog(&pb_proto_prog);

+ // Transform to cpp::ProgramDesc

+ lite::TransformProgramDescAnyToCpp(pb_prog, &cpp_prog);

+

+ std::set unsupported_ops;

+ std::set input_model_ops;

+ for (int index = 0; index < cpp_prog.BlocksSize(); index++) {

+ auto current_block = cpp_prog.GetBlock(index);

+ for (size_t i = 0; i < current_block->OpsSize(); ++i) {

+ auto& op_desc = *current_block->GetOp(i);

+ auto op_type = op_desc.Type();

+ input_model_ops.insert(op_type);

+ if (valid_ops_set.count(op_type) == 0) {

+ unsupported_ops.insert(op_type);

+ }

+ }

+ }

+ // 3. Print ops_info of input model and check if this model is supported

+ if (FLAGS_print_model_ops) {

+ std::cout << "OPs in the input model include:\n";

+ PrintOpsInfo(input_model_ops);

+ }

+ if (!unsupported_ops.empty()) {

+ std::string unsupported_ops_str = *unsupported_ops.begin();

+ for (auto op_str = ++unsupported_ops.begin();

+ op_str != unsupported_ops.end();

+ op_str++) {

+ unsupported_ops_str = unsupported_ops_str + ", " + *op_str;

+ }

+ std::vector targets = {};

+ for (int i = 0; i < valid_places.size(); i++) {

+ targets.push_back(valid_places[i].target);

+ }

+ std::sort(targets.begin(), targets.end());

+ targets.erase(unique(targets.begin(), targets.end()), targets.end());

+ std::string targets_str = TargetToStr(targets[0]);

+ for (int i = 1; i < targets.size(); i++) {

+ targets_str = targets_str + "," + TargetToStr(targets[i]);

+ }

+

+ LOG(ERROR) << "Error: This model is not supported, because "

+ << unsupported_ops.size() << " ops are not supported on '"

+ << targets_str << "'. These unsupported ops are: '"

+ << unsupported_ops_str << "'.";

+ exit(1);

+ }

+ if (FLAGS_print_model_ops) {

+ std::cout << "Paddle-Lite supports this model!" << std::endl;

+ exit(1);

+ }

+}

void Main() {

if (FLAGS_display_kernels) {

@@ -241,7 +445,13 @@ void Main() {

} // namespace paddle

int main(int argc, char** argv) {

+ // If there is none input argument, print help info.

+ if (argc < 2) {

+ paddle::lite_api::PrintHelpInfo();

+ }

google::ParseCommandLineFlags(&argc, &argv, false);

+ paddle::lite_api::ParseInputCommand();

+ paddle::lite_api::CheckIfModelSupported();

paddle::lite_api::Main();

return 0;

}

diff --git a/lite/api/model_test.cc b/lite/api/model_test.cc

index dc9fac96ee848d73ca14c8dc4555c0f44951400a..5b063a8ef19c85d3818d2ca57659170d7d86357d 100644

--- a/lite/api/model_test.cc

+++ b/lite/api/model_test.cc

@@ -86,6 +86,7 @@ void Run(const std::vector>& input_shapes,

for (int i = 0; i < input_shapes[j].size(); ++i) {

input_num *= input_shapes[j][i];

}

+

for (int i = 0; i < input_num; ++i) {

input_data[i] = 1.f;

}

diff --git a/lite/api/paddle_api.h b/lite/api/paddle_api.h

index a014719c5783bcec3988bba65c53cc8cf52f0b4c..6308699ac91900d161a55ee121e4d9777947fede 100644

--- a/lite/api/paddle_api.h

+++ b/lite/api/paddle_api.h

@@ -133,7 +133,9 @@ class LITE_API CxxConfig : public ConfigBase {

std::string model_file_;

std::string param_file_;

bool model_from_memory_{false};

- int cpu_math_library_math_threads_ = 1;

+#ifdef LITE_WITH_X86

+ int x86_math_library_math_threads_ = 1;

+#endif

public:

void set_valid_places(const std::vector& x) { valid_places_ = x; }

@@ -153,12 +155,14 @@ class LITE_API CxxConfig : public ConfigBase {

std::string param_file() const { return param_file_; }

bool model_from_memory() const { return model_from_memory_; }

- void set_cpu_math_library_num_threads(int threads) {

- cpu_math_library_math_threads_ = threads;

+#ifdef LITE_WITH_X86

+ void set_x86_math_library_num_threads(int threads) {

+ x86_math_library_math_threads_ = threads;

}

- int cpu_math_library_num_threads() const {

- return cpu_math_library_math_threads_;

+ int x86_math_library_num_threads() const {

+ return x86_math_library_math_threads_;

}

+#endif

};

/// MobileConfig is the config for the light weight predictor, it will skip

diff --git a/lite/api/test_step_rnn_lite_x86.cc b/lite/api/test_step_rnn_lite_x86.cc

index 075d314df6f46ab9dc8531b26c23d05d24e63bb4..013fd82b19bc22ace22184389249a7b2d9bf237e 100644

--- a/lite/api/test_step_rnn_lite_x86.cc

+++ b/lite/api/test_step_rnn_lite_x86.cc

@@ -30,7 +30,9 @@ TEST(Step_rnn, test_step_rnn_lite_x86) {

std::string model_dir = FLAGS_model_dir;

lite_api::CxxConfig config;

config.set_model_dir(model_dir);

- config.set_cpu_math_library_num_threads(1);

+#ifdef LITE_WITH_X86

+ config.set_x86_math_library_num_threads(1);

+#endif

config.set_valid_places({lite_api::Place{TARGET(kX86), PRECISION(kInt64)},

lite_api::Place{TARGET(kX86), PRECISION(kFloat)},

lite_api::Place{TARGET(kHost), PRECISION(kFloat)}});

diff --git a/lite/backends/arm/math/conv3x3s1_depthwise_fp32.cc b/lite/backends/arm/math/conv3x3s1_depthwise_fp32.cc

deleted file mode 100644

index 99aeea8bdea2a50795dcdca18464a196ee877291..0000000000000000000000000000000000000000

--- a/lite/backends/arm/math/conv3x3s1_depthwise_fp32.cc

+++ /dev/null

@@ -1,538 +0,0 @@

-// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#include

-#include "lite/backends/arm/math/conv_block_utils.h"

-#include "lite/backends/arm/math/conv_impl.h"

-#include "lite/core/context.h"

-#include "lite/operators/op_params.h"

-#ifdef ARM_WITH_OMP

-#include

-#endif

-

-namespace paddle {

-namespace lite {

-namespace arm {

-namespace math {

-void conv_3x3s1_depthwise_fp32(const float* i_data,

- float* o_data,

- int bs,

- int oc,

- int oh,

- int ow,

- int ic,

- int ih,

- int win,

- const float* weights,

- const float* bias,

- const operators::ConvParam& param,

- ARMContext* ctx) {

- int threads = ctx->threads();

- const int pad_h = param.paddings[0];

- const int pad_w = param.paddings[1];

- const int out_c_block = 4;

- const int out_h_kernel = 2;

- const int out_w_kernel = 4;

- const int win_ext = ow + 2;

- const int ow_round = ROUNDUP(ow, 4);

- const int win_round = ROUNDUP(win_ext, 4);

- const int hin_round = oh + 2;

- const int prein_size = win_round * hin_round * out_c_block;

- auto workspace_size =

- threads * prein_size + win_round /*tmp zero*/ + ow_round /*tmp writer*/;

- ctx->ExtendWorkspace(sizeof(float) * workspace_size);

-

- bool flag_relu = param.fuse_relu;

- bool flag_bias = param.bias != nullptr;

-

- /// get workspace

- float* ptr_zero = ctx->workspace_data();

- memset(ptr_zero, 0, sizeof(float) * win_round);

- float* ptr_write = ptr_zero + win_round;

-

- int size_in_channel = win * ih;

- int size_out_channel = ow * oh;

-

- int ws = -pad_w;

- int we = ws + win_round;

- int hs = -pad_h;

- int he = hs + hin_round;

- int w_loop = ow_round / 4;

- auto remain = w_loop * 4 - ow;

- bool flag_remain = remain > 0;

- remain = 4 - remain;

- remain = remain > 0 ? remain : 0;

- int row_len = win_round * out_c_block;

-

- for (int n = 0; n < bs; ++n) {

- const float* din_batch = i_data + n * ic * size_in_channel;

- float* dout_batch = o_data + n * oc * size_out_channel;

-#pragma omp parallel for num_threads(threads)

- for (int c = 0; c < oc; c += out_c_block) {

-#ifdef ARM_WITH_OMP

- float* pre_din = ptr_write + ow_round + omp_get_thread_num() * prein_size;

-#else

- float* pre_din = ptr_write + ow_round;

-#endif

- /// const array size

- float pre_out[out_c_block * out_w_kernel * out_h_kernel]; // NOLINT

- prepack_input_nxwc4_dw(

- din_batch, pre_din, c, hs, he, ws, we, ic, win, ih, ptr_zero);

- const float* weight_c = weights + c * 9; // kernel_w * kernel_h

- float* dout_c00 = dout_batch + c * size_out_channel;

- float bias_local[4] = {0, 0, 0, 0};

- if (flag_bias) {

- bias_local[0] = bias[c];

- bias_local[1] = bias[c + 1];

- bias_local[2] = bias[c + 2];

- bias_local[3] = bias[c + 3];

- }

- float32x4_t vbias = vld1q_f32(bias_local);

-#ifdef __aarch64__

- float32x4_t w0 = vld1q_f32(weight_c); // w0, v23

- float32x4_t w1 = vld1q_f32(weight_c + 4); // w1, v24

- float32x4_t w2 = vld1q_f32(weight_c + 8); // w2, v25

- float32x4_t w3 = vld1q_f32(weight_c + 12); // w3, v26

- float32x4_t w4 = vld1q_f32(weight_c + 16); // w4, v27

- float32x4_t w5 = vld1q_f32(weight_c + 20); // w5, v28

- float32x4_t w6 = vld1q_f32(weight_c + 24); // w6, v29

- float32x4_t w7 = vld1q_f32(weight_c + 28); // w7, v30

- float32x4_t w8 = vld1q_f32(weight_c + 32); // w8, v31

-#endif

- for (int h = 0; h < oh; h += out_h_kernel) {

- float* outc00 = dout_c00 + h * ow;

- float* outc01 = outc00 + ow;

- float* outc10 = outc00 + size_out_channel;

- float* outc11 = outc10 + ow;

- float* outc20 = outc10 + size_out_channel;

- float* outc21 = outc20 + ow;

- float* outc30 = outc20 + size_out_channel;

- float* outc31 = outc30 + ow;

- const float* inr0 = pre_din + h * row_len;

- const float* inr1 = inr0 + row_len;

- const float* inr2 = inr1 + row_len;

- const float* inr3 = inr2 + row_len;

- if (c + out_c_block > oc) {

- switch (c + out_c_block - oc) {

- case 3:

- outc10 = ptr_write;

- outc11 = ptr_write;

- case 2:

- outc20 = ptr_write;

- outc21 = ptr_write;

- case 1:

- outc30 = ptr_write;

- outc31 = ptr_write;

- default:

- break;

- }

- }

- if (h + out_h_kernel > oh) {

- outc01 = ptr_write;

- outc11 = ptr_write;

- outc21 = ptr_write;

- outc31 = ptr_write;

- }

- float* outl[] = {outc00,

- outc10,

- outc20,

- outc30,

- outc01,

- outc11,

- outc21,

- outc31,

- reinterpret_cast(bias_local),

- reinterpret_cast(flag_relu)};

- void* outl_ptr = reinterpret_cast(outl);

- for (int w = 0; w < w_loop; ++w) {

- bool flag_mask = (w == w_loop - 1) && flag_remain;

- float* out0 = pre_out;

-// clang-format off

-#ifdef __aarch64__

- asm volatile(

- "ldp q0, q1, [%[inr0]], #32\n" /* load input r0*/

- "ldp q6, q7, [%[inr1]], #32\n" /* load input r1*/

- "ldp q2, q3, [%[inr0]], #32\n" /* load input r0*/

- "ldp q8, q9, [%[inr1]], #32\n" /* load input r1*/

- "ldp q4, q5, [%[inr0]]\n" /* load input r0*/

- "ldp q10, q11, [%[inr1]]\n" /* load input r1*/

- /* r0, r1, mul w0, get out r0, r1 */

- "fmul v15.4s , %[w0].4s, v0.4s\n" /* outr00 = w0 * r0, 0*/

- "fmul v16.4s , %[w0].4s, v1.4s\n" /* outr01 = w0 * r0, 1*/

- "fmul v17.4s , %[w0].4s, v2.4s\n" /* outr02 = w0 * r0, 2*/

- "fmul v18.4s , %[w0].4s, v3.4s\n" /* outr03 = w0 * r0, 3*/

- "fmul v19.4s , %[w0].4s, v6.4s\n" /* outr10 = w0 * r1, 0*/

- "fmul v20.4s , %[w0].4s, v7.4s\n" /* outr11 = w0 * r1, 1*/

- "fmul v21.4s , %[w0].4s, v8.4s\n" /* outr12 = w0 * r1, 2*/

- "fmul v22.4s , %[w0].4s, v9.4s\n" /* outr13 = w0 * r1, 3*/

- /* r0, r1, mul w1, get out r0, r1 */

- "fmla v15.4s , %[w1].4s, v1.4s\n" /* outr00 = w1 * r0[1]*/

- "ldp q0, q1, [%[inr2]], #32\n" /* load input r2*/

- "fmla v16.4s , %[w1].4s, v2.4s\n" /* outr01 = w1 * r0[2]*/

- "fmla v17.4s , %[w1].4s, v3.4s\n" /* outr02 = w1 * r0[3]*/

- "fmla v18.4s , %[w1].4s, v4.4s\n" /* outr03 = w1 * r0[4]*/

- "fmla v19.4s , %[w1].4s, v7.4s\n" /* outr10 = w1 * r1[1]*/

- "fmla v20.4s , %[w1].4s, v8.4s\n" /* outr11 = w1 * r1[2]*/

- "fmla v21.4s , %[w1].4s, v9.4s\n" /* outr12 = w1 * r1[3]*/

- "fmla v22.4s , %[w1].4s, v10.4s\n"/* outr13 = w1 * r1[4]*/

- /* r0, r1, mul w2, get out r0, r1 */

- "fmla v15.4s , %[w2].4s, v2.4s\n" /* outr00 = w2 * r0[2]*/

- "fmla v16.4s , %[w2].4s, v3.4s\n" /* outr01 = w2 * r0[3]*/

- "ldp q2, q3, [%[inr2]], #32\n" /* load input r2*/

- "fmla v17.4s , %[w2].4s, v4.4s\n" /* outr02 = w2 * r0[4]*/

- "fmla v18.4s , %[w2].4s, v5.4s\n" /* outr03 = w2 * r0[5]*/

- "ldp q4, q5, [%[inr2]]\n" /* load input r2*/

- "fmla v19.4s , %[w2].4s, v8.4s\n" /* outr10 = w2 * r1[2]*/

- "fmla v20.4s , %[w2].4s, v9.4s\n" /* outr11 = w2 * r1[3]*/

- "fmla v21.4s , %[w2].4s, v10.4s\n"/* outr12 = w2 * r1[4]*/

- "fmla v22.4s , %[w2].4s, v11.4s\n"/* outr13 = w2 * r1[5]*/

- /* r1, r2, mul w3, get out r0, r1 */

- "fmla v15.4s , %[w3].4s, v6.4s\n" /* outr00 = w3 * r1[0]*/

- "fmla v16.4s , %[w3].4s, v7.4s\n" /* outr01 = w3 * r1[1]*/

- "fmla v17.4s , %[w3].4s, v8.4s\n" /* outr02 = w3 * r1[2]*/

- "fmla v18.4s , %[w3].4s, v9.4s\n" /* outr03 = w3 * r1[3]*/

- "fmla v19.4s , %[w3].4s, v0.4s\n" /* outr10 = w3 * r2[0]*/

- "fmla v20.4s , %[w3].4s, v1.4s\n" /* outr11 = w3 * r2[1]*/

- "fmla v21.4s , %[w3].4s, v2.4s\n" /* outr12 = w3 * r2[2]*/

- "fmla v22.4s , %[w3].4s, v3.4s\n" /* outr13 = w3 * r2[3]*/

- /* r1, r2, mul w4, get out r0, r1 */

- "fmla v15.4s , %[w4].4s, v7.4s\n" /* outr00 = w4 * r1[1]*/

- "ldp q6, q7, [%[inr3]], #32\n" /* load input r3*/

- "fmla v16.4s , %[w4].4s, v8.4s\n" /* outr01 = w4 * r1[2]*/

- "fmla v17.4s , %[w4].4s, v9.4s\n" /* outr02 = w4 * r1[3]*/

- "fmla v18.4s , %[w4].4s, v10.4s\n"/* outr03 = w4 * r1[4]*/

- "ldp x0, x1, [%[outl]] \n"

- "fmla v19.4s , %[w4].4s, v1.4s\n" /* outr10 = w4 * r2[1]*/

- "fmla v20.4s , %[w4].4s, v2.4s\n" /* outr11 = w4 * r2[2]*/

- "fmla v21.4s , %[w4].4s, v3.4s\n" /* outr12 = w4 * r2[3]*/

- "fmla v22.4s , %[w4].4s, v4.4s\n" /* outr13 = w4 * r2[4]*/

- /* r1, r2, mul w5, get out r0, r1 */

- "fmla v15.4s , %[w5].4s, v8.4s\n" /* outr00 = w5 * r1[2]*/

- "fmla v16.4s , %[w5].4s, v9.4s\n" /* outr01 = w5 * r1[3]*/

- "ldp q8, q9, [%[inr3]], #32\n" /* load input r3*/

- "fmla v17.4s , %[w5].4s, v10.4s\n"/* outr02 = w5 * r1[4]*/

- "fmla v18.4s , %[w5].4s, v11.4s\n"/* outr03 = w5 * r1[5]*/

- "ldp q10, q11, [%[inr3]]\n" /* load input r3*/

- "fmla v19.4s , %[w5].4s, v2.4s\n" /* outr10 = w5 * r2[2]*/

- "fmla v20.4s , %[w5].4s, v3.4s\n" /* outr11 = w5 * r2[3]*/

- "fmla v21.4s , %[w5].4s, v4.4s\n" /* outr12 = w5 * r2[4]*/

- "fmla v22.4s , %[w5].4s, v5.4s\n" /* outr13 = w5 * r2[5]*/

- /* r2, r3, mul w6, get out r0, r1 */

- "fmla v15.4s , %[w6].4s, v0.4s\n" /* outr00 = w6 * r2[0]*/

- "fmla v16.4s , %[w6].4s, v1.4s\n" /* outr01 = w6 * r2[1]*/

- "fmla v17.4s , %[w6].4s, v2.4s\n" /* outr02 = w6 * r2[2]*/

- "fmla v18.4s , %[w6].4s, v3.4s\n" /* outr03 = w6 * r2[3]*/

- "ldp x2, x3, [%[outl], #16] \n"

- "fmla v19.4s , %[w6].4s, v6.4s\n" /* outr10 = w6 * r3[0]*/

- "fmla v20.4s , %[w6].4s, v7.4s\n" /* outr11 = w6 * r3[1]*/

- "fmla v21.4s , %[w6].4s, v8.4s\n" /* outr12 = w6 * r3[2]*/

- "fmla v22.4s , %[w6].4s, v9.4s\n" /* outr13 = w6 * r3[3]*/

- /* r2, r3, mul w7, get out r0, r1 */

- "fmla v15.4s , %[w7].4s, v1.4s\n" /* outr00 = w7 * r2[1]*/

- "fmla v16.4s , %[w7].4s, v2.4s\n" /* outr01 = w7 * r2[2]*/

- "fmla v17.4s , %[w7].4s, v3.4s\n" /* outr02 = w7 * r2[3]*/

- "fmla v18.4s , %[w7].4s, v4.4s\n" /* outr03 = w7 * r2[4]*/

- "ldp x4, x5, [%[outl], #32] \n"

- "fmla v19.4s , %[w7].4s, v7.4s\n" /* outr10 = w7 * r3[1]*/

- "fmla v20.4s , %[w7].4s, v8.4s\n" /* outr11 = w7 * r3[2]*/

- "fmla v21.4s , %[w7].4s, v9.4s\n" /* outr12 = w7 * r3[3]*/

- "fmla v22.4s , %[w7].4s, v10.4s\n"/* outr13 = w7 * r3[4]*/

- /* r2, r3, mul w8, get out r0, r1 */

- "fmla v15.4s , %[w8].4s, v2.4s\n" /* outr00 = w8 * r2[2]*/

- "fmla v16.4s , %[w8].4s, v3.4s\n" /* outr01 = w8 * r2[3]*/

- "fmla v17.4s , %[w8].4s, v4.4s\n" /* outr02 = w8 * r2[0]*/

- "fmla v18.4s , %[w8].4s, v5.4s\n" /* outr03 = w8 * r2[1]*/

- "ldp x6, x7, [%[outl], #48] \n"

- "fmla v19.4s , %[w8].4s, v8.4s\n" /* outr10 = w8 * r3[2]*/

- "fmla v20.4s , %[w8].4s, v9.4s\n" /* outr11 = w8 * r3[3]*/

- "fmla v21.4s , %[w8].4s, v10.4s\n"/* outr12 = w8 * r3[0]*/

- "fmla v22.4s , %[w8].4s, v11.4s\n"/* outr13 = w8 * r3[1]*/

-

- "fadd v15.4s, v15.4s, %[vbias].4s\n"/* add bias */

- "fadd v16.4s, v16.4s, %[vbias].4s\n"/* add bias */

- "fadd v17.4s, v17.4s, %[vbias].4s\n"/* add bias */

- "fadd v18.4s, v18.4s, %[vbias].4s\n"/* add bias */

- "fadd v19.4s, v19.4s, %[vbias].4s\n"/* add bias */

- "fadd v20.4s, v20.4s, %[vbias].4s\n"/* add bias */

- "fadd v21.4s, v21.4s, %[vbias].4s\n"/* add bias */

- "fadd v22.4s, v22.4s, %[vbias].4s\n"/* add bias */

-

- /* transpose */

- "trn1 v0.4s, v15.4s, v16.4s\n" /* r0: a0a1c0c1*/

- "trn2 v1.4s, v15.4s, v16.4s\n" /* r0: b0b1d0d1*/

- "trn1 v2.4s, v17.4s, v18.4s\n" /* r0: a2a3c2c3*/

- "trn2 v3.4s, v17.4s, v18.4s\n" /* r0: b2b3d2d3*/

- "trn1 v4.4s, v19.4s, v20.4s\n" /* r1: a0a1c0c1*/

- "trn2 v5.4s, v19.4s, v20.4s\n" /* r1: b0b1d0d1*/

- "trn1 v6.4s, v21.4s, v22.4s\n" /* r1: a2a3c2c3*/

- "trn2 v7.4s, v21.4s, v22.4s\n" /* r1: b2b3d2d3*/

- "trn1 v15.2d, v0.2d, v2.2d\n" /* r0: a0a1a2a3*/

- "trn2 v19.2d, v0.2d, v2.2d\n" /* r0: c0c1c2c3*/

- "trn1 v17.2d, v1.2d, v3.2d\n" /* r0: b0b1b2b3*/

- "trn2 v21.2d, v1.2d, v3.2d\n" /* r0: d0d1d2d3*/

- "trn1 v16.2d, v4.2d, v6.2d\n" /* r1: a0a1a2a3*/

- "trn2 v20.2d, v4.2d, v6.2d\n" /* r1: c0c1c2c3*/

- "trn1 v18.2d, v5.2d, v7.2d\n" /* r1: b0b1b2b3*/

- "trn2 v22.2d, v5.2d, v7.2d\n" /* r1: d0d1d2d3*/

-

- "cbz %w[flag_relu], 0f\n" /* skip relu*/

- "movi v0.4s, #0\n" /* for relu */

- "fmax v15.4s, v15.4s, v0.4s\n"

- "fmax v16.4s, v16.4s, v0.4s\n"

- "fmax v17.4s, v17.4s, v0.4s\n"

- "fmax v18.4s, v18.4s, v0.4s\n"

- "fmax v19.4s, v19.4s, v0.4s\n"

- "fmax v20.4s, v20.4s, v0.4s\n"

- "fmax v21.4s, v21.4s, v0.4s\n"

- "fmax v22.4s, v22.4s, v0.4s\n"

- "0:\n"

- "cbnz %w[flag_mask], 1f\n"

- "str q15, [x0]\n" /* save outc00 */

- "str q16, [x4]\n" /* save outc01 */

- "str q17, [x1]\n" /* save outc10 */

- "str q18, [x5]\n" /* save outc11 */

- "str q19, [x2]\n" /* save outc20 */

- "str q20, [x6]\n" /* save outc21 */

- "str q21, [x3]\n" /* save outc30 */

- "str q22, [x7]\n" /* save outc31 */

- "b 2f\n"

- "1:\n"

- "str q15, [%[out]], #16 \n" /* save remain to pre_out */

- "str q17, [%[out]], #16 \n" /* save remain to pre_out */

- "str q19, [%[out]], #16 \n" /* save remain to pre_out */

- "str q21, [%[out]], #16 \n" /* save remain to pre_out */

- "str q16, [%[out]], #16 \n" /* save remain to pre_out */

- "str q18, [%[out]], #16 \n" /* save remain to pre_out */

- "str q20, [%[out]], #16 \n" /* save remain to pre_out */

- "str q22, [%[out]], #16 \n" /* save remain to pre_out */

- "2:\n"

- :[inr0] "+r"(inr0), [inr1] "+r"(inr1),

- [inr2] "+r"(inr2), [inr3] "+r"(inr3),

- [out]"+r"(out0)

- :[w0] "w"(w0), [w1] "w"(w1), [w2] "w"(w2),

- [w3] "w"(w3), [w4] "w"(w4), [w5] "w"(w5),

- [w6] "w"(w6), [w7] "w"(w7), [w8] "w"(w8),

- [vbias]"w" (vbias), [outl] "r" (outl_ptr),

- [flag_mask] "r" (flag_mask), [flag_relu] "r" (flag_relu)

- : "cc", "memory",

- "v0","v1","v2","v3","v4","v5","v6","v7",

- "v8", "v9", "v10", "v11", "v15",

- "v16","v17","v18","v19","v20","v21","v22",

- "x0", "x1", "x2", "x3", "x4", "x5", "x6", "x7"

- );

-#else

- asm volatile(

- /* load weights */

- "vld1.32 {d10-d13}, [%[wc0]]! @ load w0, w1, to q5, q6\n"

- "vld1.32 {d14-d15}, [%[wc0]]! @ load w2, to q7\n"

- /* load r0, r1 */

- "vld1.32 {d0-d3}, [%[r0]]! @ load r0, q0, q1\n"

- "vld1.32 {d4-d7}, [%[r0]]! @ load r0, q2, q3\n"

- /* main loop */

- "0: @ main loop\n"

- /* mul r0 with w0, w1, w2, get out r0 */

- "vmul.f32 q8, q5, q0 @ w0 * inr00\n"

- "vmul.f32 q9, q5, q1 @ w0 * inr01\n"

- "vmul.f32 q10, q5, q2 @ w0 * inr02\n"

- "vmul.f32 q11, q5, q3 @ w0 * inr03\n"

- "vmla.f32 q8, q6, q1 @ w1 * inr01\n"

- "vld1.32 {d0-d3}, [%[r0]] @ load r0, q0, q1\n"

- "vmla.f32 q9, q6, q2 @ w1 * inr02\n"

- "vmla.f32 q10, q6, q3 @ w1 * inr03\n"

- "vmla.f32 q11, q6, q0 @ w1 * inr04\n"

- "vmla.f32 q8, q7, q2 @ w2 * inr02\n"

- "vmla.f32 q9, q7, q3 @ w2 * inr03\n"

- "vld1.32 {d4-d7}, [%[r1]]! @ load r0, q2, q3\n"

- "vmla.f32 q10, q7, q0 @ w2 * inr04\n"

- "vmla.f32 q11, q7, q1 @ w2 * inr05\n"

- "vld1.32 {d0-d3}, [%[r1]]! @ load r0, q0, q1\n"

- "vld1.32 {d8-d9}, [%[wc0]]! @ load w3 to q4\n"

- /* mul r1 with w0-w5, get out r0, r1 */

- "vmul.f32 q12, q5, q2 @ w0 * inr10\n"

- "vmul.f32 q13, q5, q3 @ w0 * inr11\n"

- "vmul.f32 q14, q5, q0 @ w0 * inr12\n"

- "vmul.f32 q15, q5, q1 @ w0 * inr13\n"

- "vld1.32 {d10-d11}, [%[wc0]]! @ load w4 to q5\n"

- "vmla.f32 q8, q4, q2 @ w3 * inr10\n"

- "vmla.f32 q9, q4, q3 @ w3 * inr11\n"

- "vmla.f32 q10, q4, q0 @ w3 * inr12\n"

- "vmla.f32 q11, q4, q1 @ w3 * inr13\n"

- /* mul r1 with w1, w4, get out r1, r0 */

- "vmla.f32 q8, q5, q3 @ w4 * inr11\n"

- "vmla.f32 q12, q6, q3 @ w1 * inr11\n"

- "vld1.32 {d4-d7}, [%[r1]] @ load r1, q2, q3\n"

- "vmla.f32 q9, q5, q0 @ w4 * inr12\n"

- "vmla.f32 q13, q6, q0 @ w1 * inr12\n"

- "vmla.f32 q10, q5, q1 @ w4 * inr13\n"

- "vmla.f32 q14, q6, q1 @ w1 * inr13\n"

- "vmla.f32 q11, q5, q2 @ w4 * inr14\n"

- "vmla.f32 q15, q6, q2 @ w1 * inr14\n"

- "vld1.32 {d12-d13}, [%[wc0]]! @ load w5 to q6\n"

- /* mul r1 with w2, w5, get out r1, r0 */

- "vmla.f32 q12, q7, q0 @ w2 * inr12\n"

- "vmla.f32 q13, q7, q1 @ w2 * inr13\n"

- "vmla.f32 q8, q6, q0 @ w5 * inr12\n"

- "vmla.f32 q9, q6, q1 @ w5 * inr13\n"

- "vld1.32 {d0-d3}, [%[r2]]! @ load r2, q0, q1\n"

- "vmla.f32 q14, q7, q2 @ w2 * inr14\n"

- "vmla.f32 q15, q7, q3 @ w2 * inr15\n"

- "vmla.f32 q10, q6, q2 @ w5 * inr14\n"

- "vmla.f32 q11, q6, q3 @ w5 * inr15\n"

- "vld1.32 {d4-d7}, [%[r2]]! @ load r2, q0, q1\n"

- "vld1.32 {d14-d15}, [%[wc0]]! @ load w6, to q7\n"

- /* mul r2 with w3-w8, get out r0, r1 */

- "vmla.f32 q12, q4, q0 @ w3 * inr20\n"

- "vmla.f32 q13, q4, q1 @ w3 * inr21\n"

- "vmla.f32 q14, q4, q2 @ w3 * inr22\n"

- "vmla.f32 q15, q4, q3 @ w3 * inr23\n"

- "vld1.32 {d8-d9}, [%[wc0]]! @ load w7, to q4\n"

- "vmla.f32 q8, q7, q0 @ w6 * inr20\n"

- "vmla.f32 q9, q7, q1 @ w6 * inr21\n"

- "vmla.f32 q10, q7, q2 @ w6 * inr22\n"

- "vmla.f32 q11, q7, q3 @ w6 * inr23\n"

- /* mul r2 with w4, w7, get out r1, r0 */

- "vmla.f32 q8, q4, q1 @ w7 * inr21\n"

- "vmla.f32 q12, q5, q1 @ w4 * inr21\n"

- "vld1.32 {d0-d3}, [%[r2]] @ load r2, q0, q1\n"

- "vmla.f32 q9, q4, q2 @ w7 * inr22\n"

- "vmla.f32 q13, q5, q2 @ w4 * inr22\n"

- "vmla.f32 q10, q4, q3 @ w7 * inr23\n"

- "vmla.f32 q14, q5, q3 @ w4 * inr23\n"

- "vmla.f32 q11, q4, q0 @ w7 * inr24\n"

- "vmla.f32 q15, q5, q0 @ w4 * inr24\n"

- "vld1.32 {d10-d11}, [%[wc0]]! @ load w8 to q5\n"

- /* mul r1 with w5, w8, get out r1, r0 */

- "vmla.f32 q12, q6, q2 @ w5 * inr22\n"

- "vmla.f32 q13, q6, q3 @ w5 * inr23\n"

- "vmla.f32 q8, q5, q2 @ w8 * inr22\n"

- "vmla.f32 q9, q5, q3 @ w8 * inr23\n"

- "vld1.32 {d4-d7}, [%[r3]]! @ load r3, q2, q3\n"

- "ldr r4, [%[outl], #32] @ load bias addr to r4\n"

- "vmla.f32 q14, q6, q0 @ w5 * inr24\n"

- "vmla.f32 q15, q6, q1 @ w5 * inr25\n"

- "vmla.f32 q10, q5, q0 @ w8 * inr24\n"

- "vmla.f32 q11, q5, q1 @ w8 * inr25\n"

- "vld1.32 {d0-d3}, [%[r3]]! @ load r3, q0, q1\n"

- "sub %[wc0], %[wc0], #144 @ wc0 - 144 to start address\n"

- /* mul r3 with w6, w7, w8, get out r1 */

- "vmla.f32 q12, q7, q2 @ w6 * inr30\n"

- "vmla.f32 q13, q7, q3 @ w6 * inr31\n"

- "vmla.f32 q14, q7, q0 @ w6 * inr32\n"

- "vmla.f32 q15, q7, q1 @ w6 * inr33\n"

- "vmla.f32 q12, q4, q3 @ w7 * inr31\n"

- "vld1.32 {d4-d7}, [%[r3]] @ load r3, q2, q3\n"

- "vld1.32 {d12-d13}, [r4] @ load bias\n"

- "vmla.f32 q13, q4, q0 @ w7 * inr32\n"

- "vmla.f32 q14, q4, q1 @ w7 * inr33\n"

- "vmla.f32 q15, q4, q2 @ w7 * inr34\n"

- "ldr r0, [%[outl]] @ load outc00 to r0\n"

- "vmla.f32 q12, q5, q0 @ w8 * inr32\n"

- "vmla.f32 q13, q5, q1 @ w8 * inr33\n"

- "ldr r5, [%[outl], #36] @ load flag_relu to r5\n"

- "vmla.f32 q14, q5, q2 @ w8 * inr34\n"

- "vmla.f32 q15, q5, q3 @ w8 * inr35\n"

- "ldr r1, [%[outl], #4] @ load outc10 to r1\n"

- "vadd.f32 q8, q8, q6 @ r00 add bias\n"

- "vadd.f32 q9, q9, q6 @ r01 add bias\n"

- "vadd.f32 q10, q10, q6 @ r02 add bias\n"

- "vadd.f32 q11, q11, q6 @ r03 add bias\n"

- "ldr r2, [%[outl], #8] @ load outc20 to r2\n"

- "vadd.f32 q12, q12, q6 @ r10 add bias\n"

- "vadd.f32 q13, q13, q6 @ r11 add bias\n"

- "vadd.f32 q14, q14, q6 @ r12 add bias\n"

- "vadd.f32 q15, q15, q6 @ r13 add bias\n"

- "ldr r3, [%[outl], #12] @ load outc30 to r3\n"

- "vmov.u32 q7, #0 @ mov zero to q7\n"

- "cmp r5, #0 @ cmp flag relu\n"

- "beq 1f @ skip relu\n"

- "vmax.f32 q8, q8, q7 @ r00 relu\n"

- "vmax.f32 q9, q9, q7 @ r01 relu\n"

- "vmax.f32 q10, q10, q7 @ r02 relu\n"

- "vmax.f32 q11, q11, q7 @ r03 relu\n"

- "vmax.f32 q12, q12, q7 @ r10 relu\n"

- "vmax.f32 q13, q13, q7 @ r11 relu\n"

- "vmax.f32 q14, q14, q7 @ r12 relu\n"

- "vmax.f32 q15, q15, q7 @ r13 relu\n"

- "1:\n"

- "ldr r4, [%[outl], #16] @ load outc01 to r4\n"

- "vtrn.32 q8, q9 @ r0: q8 : a0a1c0c1, q9 : b0b1d0d1\n"

- "vtrn.32 q10, q11 @ r0: q10: a2a3c2c3, q11: b2b3d2d3\n"

- "vtrn.32 q12, q13 @ r1: q12: a0a1c0c1, q13: b0b1d0d1\n"

- "vtrn.32 q14, q15 @ r1: q14: a2a3c2c3, q15: b2b3d2d3\n"

- "ldr r5, [%[outl], #20] @ load outc11 to r5\n"

- "vswp d17, d20 @ r0: q8 : a0a1a2a3, q10: c0c1c2c3 \n"

- "vswp d19, d22 @ r0: q9 : b0b1b2b3, q11: d0d1d2d3 \n"

- "vswp d25, d28 @ r1: q12: a0a1a2a3, q14: c0c1c2c3 \n"

- "vswp d27, d30 @ r1: q13: b0b1b2b3, q15: d0d1d2d3 \n"

- "cmp %[flag_mask], #0 @ cmp flag mask\n"

- "bne 2f\n"

- "vst1.32 {d16-d17}, [r0] @ save outc00\n"

- "vst1.32 {d18-d19}, [r1] @ save outc10\n"

- "vst1.32 {d20-d21}, [r2] @ save outc20\n"

- "vst1.32 {d22-d23}, [r3] @ save outc30\n"

- "vst1.32 {d24-d25}, [r4] @ save outc01\n"

- "vst1.32 {d26-d27}, [r5] @ save outc11\n"

- "ldr r0, [%[outl], #24] @ load outc21 to r0\n"

- "ldr r1, [%[outl], #28] @ load outc31 to r1\n"

- "vst1.32 {d28-d29}, [r0] @ save outc21\n"

- "vst1.32 {d30-d31}, [r1] @ save outc31\n"

- "b 3f @ branch end\n"

- "2: \n"

- "vst1.32 {d16-d17}, [%[out0]]! @ save remain to pre_out\n"

- "vst1.32 {d18-d19}, [%[out0]]! @ save remain to pre_out\n"

- "vst1.32 {d20-d21}, [%[out0]]! @ save remain to pre_out\n"

- "vst1.32 {d22-d23}, [%[out0]]! @ save remain to pre_out\n"

- "vst1.32 {d24-d25}, [%[out0]]! @ save remain to pre_out\n"

- "vst1.32 {d26-d27}, [%[out0]]! @ save remain to pre_out\n"

- "vst1.32 {d28-d29}, [%[out0]]! @ save remain to pre_out\n"

- "vst1.32 {d30-d31}, [%[out0]]! @ save remain to pre_out\n"

- "3: \n"

- : [r0] "+r"(inr0), [r1] "+r"(inr1),

- [r2] "+r"(inr2), [r3] "+r"(inr3),

- [out0] "+r"(out0), [wc0] "+r"(weight_c)

- : [flag_mask] "r" (flag_mask), [outl] "r" (outl_ptr)

- : "cc", "memory",

- "q0", "q1", "q2", "q3", "q4", "q5", "q6", "q7", "q8", "q9",

- "q10", "q11", "q12", "q13","q14", "q15", "r0", "r1", "r2", "r3", "r4", "r5"

- );

-#endif // __arch64__

- // clang-format on

- outl[0] += 4;

- outl[1] += 4;

- outl[2] += 4;

- outl[3] += 4;

- outl[4] += 4;

- outl[5] += 4;

- outl[6] += 4;

- outl[7] += 4;

- if (flag_mask) {

- memcpy(outl[0] - 4, pre_out, remain * sizeof(float));

- memcpy(outl[1] - 4, pre_out + 4, remain * sizeof(float));

- memcpy(outl[2] - 4, pre_out + 8, remain * sizeof(float));

- memcpy(outl[3] - 4, pre_out + 12, remain * sizeof(float));

- memcpy(outl[4] - 4, pre_out + 16, remain * sizeof(float));

- memcpy(outl[5] - 4, pre_out + 20, remain * sizeof(float));

- memcpy(outl[6] - 4, pre_out + 24, remain * sizeof(float));

- memcpy(outl[7] - 4, pre_out + 28, remain * sizeof(float));

- }

- }

- }

- }

- }

-}

-

-} // namespace math

-} // namespace arm

-} // namespace lite

-} // namespace paddle

diff --git a/lite/backends/arm/math/conv3x3s2_depthwise_fp32.cc b/lite/backends/arm/math/conv3x3s2_depthwise_fp32.cc

deleted file mode 100644

index 2d75323a9677f1cfbed726a1a28920dd77131688..0000000000000000000000000000000000000000

--- a/lite/backends/arm/math/conv3x3s2_depthwise_fp32.cc

+++ /dev/null

@@ -1,361 +0,0 @@

-// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#include

-#include "lite/backends/arm/math/conv_block_utils.h"

-#include "lite/backends/arm/math/conv_impl.h"

-#include "lite/core/context.h"

-#include "lite/operators/op_params.h"

-#ifdef ARM_WITH_OMP

-#include

-#endif

-

-namespace paddle {

-namespace lite {

-namespace arm {

-namespace math {

-

-void conv_3x3s2_depthwise_fp32(const float* i_data,

- float* o_data,

- int bs,

- int oc,

- int oh,

- int ow,

- int ic,

- int ih,

- int win,

- const float* weights,

- const float* bias,

- const operators::ConvParam& param,

- ARMContext* ctx) {

- int threads = ctx->threads();

- const int pad_h = param.paddings[0];

- const int pad_w = param.paddings[1];

- const int out_c_block = 4;

- const int out_h_kernel = 1;

- const int out_w_kernel = 4;

- const int win_ext = ow * 2 + 1;

- const int ow_round = ROUNDUP(ow, 4);

- const int win_round = ROUNDUP(win_ext, 4);

- const int hin_round = oh * 2 + 1;

- const int prein_size = win_round * hin_round * out_c_block;

- auto workspace_size =

- threads * prein_size + win_round /*tmp zero*/ + ow_round /*tmp writer*/;

- ctx->ExtendWorkspace(sizeof(float) * workspace_size);

-

- bool flag_relu = param.fuse_relu;

- bool flag_bias = param.bias != nullptr;

-

- /// get workspace

- auto ptr_zero = ctx->workspace_data();

- memset(ptr_zero, 0, sizeof(float) * win_round);

- float* ptr_write = ptr_zero + win_round;

-

- int size_in_channel = win * ih;

- int size_out_channel = ow * oh;

-

- int ws = -pad_w;

- int we = ws + win_round;

- int hs = -pad_h;

- int he = hs + hin_round;

- int w_loop = ow_round / 4;

- auto remain = w_loop * 4 - ow;

- bool flag_remain = remain > 0;

- remain = 4 - remain;

- remain = remain > 0 ? remain : 0;

- int row_len = win_round * out_c_block;

-

- for (int n = 0; n < bs; ++n) {

- const float* din_batch = i_data + n * ic * size_in_channel;

- float* dout_batch = o_data + n * oc * size_out_channel;

-#pragma omp parallel for num_threads(threads)

- for (int c = 0; c < oc; c += out_c_block) {

-#ifdef ARM_WITH_OMP

- float* pre_din = ptr_write + ow_round + omp_get_thread_num() * prein_size;

-#else

- float* pre_din = ptr_write + ow_round;

-#endif

- /// const array size

- prepack_input_nxwc4_dw(

- din_batch, pre_din, c, hs, he, ws, we, ic, win, ih, ptr_zero);

- const float* weight_c = weights + c * 9; // kernel_w * kernel_h

- float* dout_c00 = dout_batch + c * size_out_channel;

- float bias_local[4] = {0, 0, 0, 0};

- if (flag_bias) {

- bias_local[0] = bias[c];

- bias_local[1] = bias[c + 1];

- bias_local[2] = bias[c + 2];

- bias_local[3] = bias[c + 3];

- }

-#ifdef __aarch64__

- float32x4_t w0 = vld1q_f32(weight_c); // w0, v23

- float32x4_t w1 = vld1q_f32(weight_c + 4); // w1, v24

- float32x4_t w2 = vld1q_f32(weight_c + 8); // w2, v25

- float32x4_t w3 = vld1q_f32(weight_c + 12); // w3, v26

- float32x4_t w4 = vld1q_f32(weight_c + 16); // w4, v27

- float32x4_t w5 = vld1q_f32(weight_c + 20); // w5, v28

- float32x4_t w6 = vld1q_f32(weight_c + 24); // w6, v29

- float32x4_t w7 = vld1q_f32(weight_c + 28); // w7, v30

- float32x4_t w8 = vld1q_f32(weight_c + 32); // w8, v31

-#endif

- for (int h = 0; h < oh; h += out_h_kernel) {

- float* outc0 = dout_c00 + h * ow;

- float* outc1 = outc0 + size_out_channel;

- float* outc2 = outc1 + size_out_channel;

- float* outc3 = outc2 + size_out_channel;

- const float* inr0 = pre_din + h * 2 * row_len;

- const float* inr1 = inr0 + row_len;

- const float* inr2 = inr1 + row_len;

- if (c + out_c_block > oc) {

- switch (c + out_c_block - oc) {

- case 3:

- outc1 = ptr_write;

- case 2:

- outc2 = ptr_write;

- case 1:

- outc3 = ptr_write;

- default:

- break;

- }

- }

- auto c0 = outc0;

- auto c1 = outc1;

- auto c2 = outc2;

- auto c3 = outc3;

- float pre_out[16];

- for (int w = 0; w < w_loop; ++w) {

- bool flag_mask = (w == w_loop - 1) && flag_remain;

- if (flag_mask) {

- c0 = outc0;

- c1 = outc1;

- c2 = outc2;

- c3 = outc3;

- outc0 = pre_out;

- outc1 = pre_out + 4;

- outc2 = pre_out + 8;

- outc3 = pre_out + 12;

- }

-// clang-format off

-#ifdef __aarch64__

- asm volatile(

- "ldr q8, [%[bias]]\n" /* load bias */

- "ldp q0, q1, [%[inr0]], #32\n" /* load input r0*/

- "and v19.16b, v8.16b, v8.16b\n"

- "ldp q2, q3, [%[inr0]], #32\n" /* load input r0*/

- "and v20.16b, v8.16b, v8.16b\n"

- "ldp q4, q5, [%[inr0]], #32\n" /* load input r0*/

- "and v21.16b, v8.16b, v8.16b\n"

- "ldp q6, q7, [%[inr0]], #32\n" /* load input r0*/

- "and v22.16b, v8.16b, v8.16b\n"

- "ldr q8, [%[inr0]]\n" /* load input r0*/

- /* r0 mul w0-w2, get out */

- "fmla v19.4s , %[w0].4s, v0.4s\n" /* outr0 = w0 * r0, 0*/

- "fmla v20.4s , %[w0].4s, v2.4s\n" /* outr1 = w0 * r0, 2*/

- "fmla v21.4s , %[w0].4s, v4.4s\n" /* outr2 = w0 * r0, 4*/

- "fmla v22.4s , %[w0].4s, v6.4s\n" /* outr3 = w0 * r0, 6*/

- "fmla v19.4s , %[w1].4s, v1.4s\n" /* outr0 = w1 * r0, 1*/

- "ldp q0, q1, [%[inr1]], #32\n" /* load input r1*/

- "fmla v20.4s , %[w1].4s, v3.4s\n" /* outr1 = w1 * r0, 3*/

- "fmla v21.4s , %[w1].4s, v5.4s\n" /* outr2 = w1 * r0, 5*/

- "fmla v22.4s , %[w1].4s, v7.4s\n" /* outr3 = w1 * r0, 7*/

- "fmla v19.4s , %[w2].4s, v2.4s\n" /* outr0 = w0 * r0, 2*/

- "ldp q2, q3, [%[inr1]], #32\n" /* load input r1*/

- "fmla v20.4s , %[w2].4s, v4.4s\n" /* outr1 = w0 * r0, 4*/

- "ldp q4, q5, [%[inr1]], #32\n" /* load input r1*/

- "fmla v21.4s , %[w2].4s, v6.4s\n" /* outr2 = w0 * r0, 6*/

- "ldp q6, q7, [%[inr1]], #32\n" /* load input r1*/

- "fmla v22.4s , %[w2].4s, v8.4s\n" /* outr3 = w0 * r0, 8*/

- "ldr q8, [%[inr1]]\n" /* load input r1*/

- /* r1, mul w3-w5, get out */

- "fmla v19.4s , %[w3].4s, v0.4s\n" /* outr0 = w3 * r1, 0*/

- "fmla v20.4s , %[w3].4s, v2.4s\n" /* outr1 = w3 * r1, 2*/

- "fmla v21.4s , %[w3].4s, v4.4s\n" /* outr2 = w3 * r1, 4*/

- "fmla v22.4s , %[w3].4s, v6.4s\n" /* outr3 = w3 * r1, 6*/

- "fmla v19.4s , %[w4].4s, v1.4s\n" /* outr0 = w4 * r1, 1*/

- "ldp q0, q1, [%[inr2]], #32\n" /* load input r2*/

- "fmla v20.4s , %[w4].4s, v3.4s\n" /* outr1 = w4 * r1, 3*/

- "fmla v21.4s , %[w4].4s, v5.4s\n" /* outr2 = w4 * r1, 5*/

- "fmla v22.4s , %[w4].4s, v7.4s\n" /* outr3 = w4 * r1, 7*/

- "fmla v19.4s , %[w5].4s, v2.4s\n" /* outr0 = w5 * r1, 2*/

- "ldp q2, q3, [%[inr2]], #32\n" /* load input r2*/

- "fmla v20.4s , %[w5].4s, v4.4s\n" /* outr1 = w5 * r1, 4*/

- "ldp q4, q5, [%[inr2]], #32\n" /* load input r2*/

- "fmla v21.4s , %[w5].4s, v6.4s\n" /* outr2 = w5 * r1, 6*/

- "ldp q6, q7, [%[inr2]], #32\n" /* load input r2*/

- "fmla v22.4s , %[w5].4s, v8.4s\n" /* outr3 = w5 * r1, 8*/

- "ldr q8, [%[inr2]]\n" /* load input r2*/

- /* r2, mul w6-w8, get out r0, r1 */

- "fmla v19.4s , %[w6].4s, v0.4s\n" /* outr0 = w6 * r2, 0*/

- "fmla v20.4s , %[w6].4s, v2.4s\n" /* outr1 = w6 * r2, 2*/

- "fmla v21.4s , %[w6].4s, v4.4s\n" /* outr2 = w6 * r2, 4*/

- "fmla v22.4s , %[w6].4s, v6.4s\n" /* outr3 = w6 * r2, 6*/

- "fmla v19.4s , %[w7].4s, v1.4s\n" /* outr0 = w7 * r2, 1*/

- "fmla v20.4s , %[w7].4s, v3.4s\n" /* outr1 = w7 * r2, 3*/

- "fmla v21.4s , %[w7].4s, v5.4s\n" /* outr2 = w7 * r2, 5*/

- "fmla v22.4s , %[w7].4s, v7.4s\n" /* outr3 = w7 * r2, 7*/

- "fmla v19.4s , %[w8].4s, v2.4s\n" /* outr0 = w8 * r2, 2*/

- "fmla v20.4s , %[w8].4s, v4.4s\n" /* outr1 = w8 * r2, 4*/

- "fmla v21.4s , %[w8].4s, v6.4s\n" /* outr2 = w8 * r2, 6*/

- "fmla v22.4s , %[w8].4s, v8.4s\n" /* outr3 = w8 * r2, 8*/

- /* transpose */

- "trn1 v0.4s, v19.4s, v20.4s\n" /* r0: a0a1c0c1*/

- "trn2 v1.4s, v19.4s, v20.4s\n" /* r0: b0b1d0d1*/

- "trn1 v2.4s, v21.4s, v22.4s\n" /* r0: a2a3c2c3*/

- "trn2 v3.4s, v21.4s, v22.4s\n" /* r0: b2b3d2d3*/

- "trn1 v19.2d, v0.2d, v2.2d\n" /* r0: a0a1a2a3*/

- "trn2 v21.2d, v0.2d, v2.2d\n" /* r0: c0c1c2c3*/

- "trn1 v20.2d, v1.2d, v3.2d\n" /* r0: b0b1b2b3*/

- "trn2 v22.2d, v1.2d, v3.2d\n" /* r0: d0d1d2d3*/

- /* relu */

- "cbz %w[flag_relu], 0f\n" /* skip relu*/

- "movi v0.4s, #0\n" /* for relu */

- "fmax v19.4s, v19.4s, v0.4s\n"

- "fmax v20.4s, v20.4s, v0.4s\n"

- "fmax v21.4s, v21.4s, v0.4s\n"

- "fmax v22.4s, v22.4s, v0.4s\n"

- /* save result */

- "0:\n"

- "str q19, [%[outc0]], #16\n"

- "str q20, [%[outc1]], #16\n"

- "str q21, [%[outc2]], #16\n"

- "str q22, [%[outc3]], #16\n"

- :[inr0] "+r"(inr0), [inr1] "+r"(inr1),

- [inr2] "+r"(inr2),

- [outc0]"+r"(outc0), [outc1]"+r"(outc1),

- [outc2]"+r"(outc2), [outc3]"+r"(outc3)

- :[w0] "w"(w0), [w1] "w"(w1), [w2] "w"(w2),

- [w3] "w"(w3), [w4] "w"(w4), [w5] "w"(w5),

- [w6] "w"(w6), [w7] "w"(w7), [w8] "w"(w8),

- [bias] "r" (bias_local), [flag_relu]"r"(flag_relu)

- : "cc", "memory",

- "v0","v1","v2","v3","v4","v5","v6","v7",

- "v8", "v19","v20","v21","v22"

- );

-#else

- asm volatile(

- /* fill with bias */

- "vld1.32 {d16-d17}, [%[bias]]\n" /* load bias */

- /* load weights */

- "vld1.32 {d18-d21}, [%[wc0]]!\n" /* load w0-2, to q9-11 */

- "vld1.32 {d0-d3}, [%[r0]]!\n" /* load input r0, 0,1*/

- "vand.i32 q12, q8, q8\n"

- "vld1.32 {d4-d7}, [%[r0]]!\n" /* load input r0, 2,3*/

- "vand.i32 q13, q8, q8\n"

- "vld1.32 {d8-d11}, [%[r0]]!\n" /* load input r0, 4,5*/

- "vand.i32 q14, q8, q8\n"

- "vld1.32 {d12-d15}, [%[r0]]!\n" /* load input r0, 6,7*/

- "vand.i32 q15, q8, q8\n"

- "vld1.32 {d16-d17}, [%[r0]]\n" /* load input r0, 8*/

- /* mul r0 with w0, w1, w2 */

- "vmla.f32 q12, q9, q0 @ w0 * inr0\n"

- "vmla.f32 q13, q9, q2 @ w0 * inr2\n"

- "vld1.32 {d22-d23}, [%[wc0]]!\n" /* load w2, to q11 */

- "vmla.f32 q14, q9, q4 @ w0 * inr4\n"

- "vmla.f32 q15, q9, q6 @ w0 * inr6\n"

- "vmla.f32 q12, q10, q1 @ w1 * inr1\n"

- "vld1.32 {d0-d3}, [%[r1]]! @ load r1, 0, 1\n"

- "vmla.f32 q13, q10, q3 @ w1 * inr3\n"

- "vmla.f32 q14, q10, q5 @ w1 * inr5\n"

- "vmla.f32 q15, q10, q7 @ w1 * inr7\n"

- "vld1.32 {d18-d21}, [%[wc0]]!\n" /* load w3-4, to q9-10 */

- "vmla.f32 q12, q11, q2 @ w2 * inr2\n"

- "vld1.32 {d4-d7}, [%[r1]]! @ load r1, 2, 3\n"

- "vmla.f32 q13, q11, q4 @ w2 * inr4\n"

- "vld1.32 {d8-d11}, [%[r1]]! @ load r1, 4, 5\n"

- "vmla.f32 q14, q11, q6 @ w2 * inr6\n"

- "vld1.32 {d12-d15}, [%[r1]]! @ load r1, 6, 7\n"

- "vmla.f32 q15, q11, q8 @ w2 * inr8\n"

- /* mul r1 with w3, w4, w5 */

- "vmla.f32 q12, q9, q0 @ w3 * inr0\n"

- "vmla.f32 q13, q9, q2 @ w3 * inr2\n"

- "vld1.32 {d22-d23}, [%[wc0]]!\n" /* load w5, to q11 */

- "vmla.f32 q14, q9, q4 @ w3 * inr4\n"

- "vmla.f32 q15, q9, q6 @ w3 * inr6\n"

- "vld1.32 {d16-d17}, [%[r1]]\n" /* load input r1, 8*/

- "vmla.f32 q12, q10, q1 @ w4 * inr1\n"

- "vld1.32 {d0-d3}, [%[r2]]! @ load r2, 0, 1\n"

- "vmla.f32 q13, q10, q3 @ w4 * inr3\n"

- "vmla.f32 q14, q10, q5 @ w4 * inr5\n"

- "vmla.f32 q15, q10, q7 @ w4 * inr7\n"

- "vld1.32 {d18-d21}, [%[wc0]]!\n" /* load w6-7, to q9-10 */

- "vmla.f32 q12, q11, q2 @ w5 * inr2\n"

- "vld1.32 {d4-d7}, [%[r2]]! @ load r2, 2, 3\n"

- "vmla.f32 q13, q11, q4 @ w5 * inr4\n"

- "vld1.32 {d8-d11}, [%[r2]]! @ load r2, 4, 5\n"

- "vmla.f32 q14, q11, q6 @ w5 * inr6\n"

- "vld1.32 {d12-d15}, [%[r2]]! @ load r2, 6, 7\n"

- "vmla.f32 q15, q11, q8 @ w5 * inr8\n"

- /* mul r2 with w6, w7, w8 */

- "vmla.f32 q12, q9, q0 @ w6 * inr0\n"

- "vmla.f32 q13, q9, q2 @ w6 * inr2\n"

- "vld1.32 {d22-d23}, [%[wc0]]!\n" /* load w8, to q11 */

- "vmla.f32 q14, q9, q4 @ w6 * inr4\n"

- "vmla.f32 q15, q9, q6 @ w6 * inr6\n"

- "vld1.32 {d16-d17}, [%[r2]]\n" /* load input r2, 8*/

- "vmla.f32 q12, q10, q1 @ w7 * inr1\n"

- "vmla.f32 q13, q10, q3 @ w7 * inr3\n"

- "vmla.f32 q14, q10, q5 @ w7 * inr5\n"

- "vmla.f32 q15, q10, q7 @ w7 * inr7\n"

- "sub %[wc0], %[wc0], #144 @ wc0 - 144 to start address\n"

- "vmla.f32 q12, q11, q2 @ w8 * inr2\n"

- "vmla.f32 q13, q11, q4 @ w8 * inr4\n"

- "vmla.f32 q14, q11, q6 @ w8 * inr6\n"

- "vmla.f32 q15, q11, q8 @ w8 * inr8\n"

- /* transpose */

- "vtrn.32 q12, q13\n" /* a0a1c0c1, b0b1d0d1*/

- "vtrn.32 q14, q15\n" /* a2a3c2c3, b2b3d2d3*/

- "vswp d25, d28\n" /* a0a1a2a3, c0c1c2c3*/

- "vswp d27, d30\n" /* b0b1b2b3, d0d1d2d3*/

- "cmp %[flag_relu], #0\n"

- "beq 0f\n" /* skip relu*/

- "vmov.u32 q0, #0\n"

- "vmax.f32 q12, q12, q0\n"

- "vmax.f32 q13, q13, q0\n"

- "vmax.f32 q14, q14, q0\n"

- "vmax.f32 q15, q15, q0\n"

- "0:\n"

- "vst1.32 {d24-d25}, [%[outc0]]!\n" /* save outc0*/

- "vst1.32 {d26-d27}, [%[outc1]]!\n" /* save outc1*/

- "vst1.32 {d28-d29}, [%[outc2]]!\n" /* save outc2*/

- "vst1.32 {d30-d31}, [%[outc3]]!\n" /* save outc3*/

- :[r0] "+r"(inr0), [r1] "+r"(inr1),

- [r2] "+r"(inr2), [wc0] "+r" (weight_c),

- [outc0]"+r"(outc0), [outc1]"+r"(outc1),

- [outc2]"+r"(outc2), [outc3]"+r"(outc3)

- :[bias] "r" (bias_local),

- [flag_relu]"r"(flag_relu)

- :"cc", "memory",

- "q0","q1","q2","q3","q4","q5","q6","q7",

- "q8", "q9","q10","q11","q12","q13","q14","q15"

- );

-#endif // __arch64__

- // clang-format off

- if (flag_mask) {

- for (int i = 0; i < remain; ++i) {

- c0[i] = pre_out[i];

- c1[i] = pre_out[i + 4];

- c2[i] = pre_out[i + 8];

- c3[i] = pre_out[i + 12];

- }

- }

- }

- }

- }

- }

-}

-

-} // namespace math

-} // namespace arm

-} // namespace lite

-} // namespace paddle

diff --git a/lite/backends/arm/math/conv_depthwise_3x3p0.cc b/lite/backends/arm/math/conv_depthwise_3x3p0.cc

deleted file mode 100644

index 0c050ffe6fb0f064f5c26ea0da6acee17f4403ae..0000000000000000000000000000000000000000

--- a/lite/backends/arm/math/conv_depthwise_3x3p0.cc

+++ /dev/null

@@ -1,4178 +0,0 @@

-// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#include "lite/backends/arm/math/conv_depthwise.h"

-#include

-

-namespace paddle {

-namespace lite {

-namespace arm {

-namespace math {

-

-void conv_depthwise_3x3s1p0_bias(float* dout,

- const float* din,

- const float* weights,

- const float* bias,

- bool flag_bias,

- const int num,

- const int ch_in,

- const int h_in,

- const int w_in,

- const int h_out,

- const int w_out,

- ARMContext* ctx);

-

-//! for input width <= 4

-void conv_depthwise_3x3s1p0_bias_s(float* dout,

- const float* din,

- const float* weights,

- const float* bias,

- bool flag_bias,

- const int num,

- const int ch_in,

- const int h_in,

- const int w_in,

- const int h_out,

- const int w_out,

- ARMContext* ctx);

-

-void conv_depthwise_3x3s2p0_bias(float* dout,

- const float* din,

- const float* weights,

- const float* bias,

- bool flag_bias,

- const int num,

- const int ch_in,

- const int h_in,

- const int w_in,

- const int h_out,

- const int w_out,

- ARMContext* ctx);

-

-//! for input width <= 4

-void conv_depthwise_3x3s2p0_bias_s(float* dout,

- const float* din,

- const float* weights,

- const float* bias,

- bool flag_bias,

- const int num,

- const int ch_in,

- const int h_in,

- const int w_in,

- const int h_out,

- const int w_out,

- ARMContext* ctx);

-

-void conv_depthwise_3x3s1p0_bias_relu(float* dout,

- const float* din,

- const float* weights,

- const float* bias,

- bool flag_bias,

- const int num,

- const int ch_in,

- const int h_in,

- const int w_in,

- const int h_out,

- const int w_out,

- ARMContext* ctx);

-

-//! for input width <= 4

-void conv_depthwise_3x3s1p0_bias_s_relu(float* dout,

- const float* din,

- const float* weights,

- const float* bias,

- bool flag_bias,

- const int num,

- const int ch_in,

- const int h_in,

- const int w_in,

- const int h_out,

- const int w_out,

- ARMContext* ctx);

-

-void conv_depthwise_3x3s2p0_bias_relu(float* dout,

- const float* din,

- const float* weights,

- const float* bias,

- bool flag_bias,

- const int num,

- const int ch_in,

- const int h_in,

- const int w_in,

- const int h_out,

- const int w_out,

- ARMContext* ctx);

-

-//! for input width <= 4

-void conv_depthwise_3x3s2p0_bias_s_relu(float* dout,

- const float* din,

- const float* weights,

- const float* bias,

- bool flag_bias,

- const int num,

- const int ch_in,

- const int h_in,

- const int w_in,

- const int h_out,

- const int w_out,

- ARMContext* ctx);

-

-void conv_depthwise_3x3p0_fp32(const float* din,

- float* dout,

- int num,

- int ch_out,

- int h_out,

- int w_out,

- int ch_in,

- int h_in,

- int w_in,

- const float* weights,

- const float* bias,

- int stride,

- bool flag_bias,

- bool flag_relu,

- ARMContext* ctx) {

- if (stride == 1) {

- if (flag_relu) {

- if (w_in > 5) {

- conv_depthwise_3x3s1p0_bias_relu(dout,

- din,

- weights,

- bias,

- flag_bias,

- num,

- ch_in,

- h_in,

- w_in,

- h_out,

- w_out,

- ctx);

- } else {

- conv_depthwise_3x3s1p0_bias_s_relu(dout,

- din,

- weights,

- bias,

- flag_bias,

- num,

- ch_in,

- h_in,

- w_in,

- h_out,

- w_out,

- ctx);

- }

- } else {

- if (w_in > 5) {

- conv_depthwise_3x3s1p0_bias(dout,

- din,

- weights,

- bias,

- flag_bias,

- num,

- ch_in,

- h_in,

- w_in,

- h_out,

- w_out,

- ctx);

- } else {

- conv_depthwise_3x3s1p0_bias_s(dout,

- din,

- weights,

- bias,

- flag_bias,

- num,

- ch_in,

- h_in,

- w_in,

- h_out,

- w_out,

- ctx);

- }

- }

- } else { //! stride = 2

- if (flag_relu) {

- if (w_in > 8) {

- conv_depthwise_3x3s2p0_bias_relu(dout,

- din,

- weights,

- bias,

- flag_bias,

- num,

- ch_in,

- h_in,

- w_in,

- h_out,

- w_out,

- ctx);

- } else {

- conv_depthwise_3x3s2p0_bias_s_relu(dout,

- din,

- weights,

- bias,

- flag_bias,

- num,

- ch_in,

- h_in,

- w_in,

- h_out,

- w_out,

- ctx);

- }

- } else {

- if (w_in > 8) {

- conv_depthwise_3x3s2p0_bias(dout,

- din,

- weights,

- bias,

- flag_bias,

- num,

- ch_in,

- h_in,

- w_in,

- h_out,

- w_out,

- ctx);

- } else {

- conv_depthwise_3x3s2p0_bias_s(dout,

- din,

- weights,

- bias,

- flag_bias,

- num,

- ch_in,

- h_in,

- w_in,

- h_out,

- w_out,

- ctx);

- }

- }

- }

-}

-/**

- * \brief depthwise convolution, kernel size 3x3, stride 1, pad 1, with bias,

- * width > 4

- */

-// 4line

-void conv_depthwise_3x3s1p0_bias(float* dout,

- const float* din,

- const float* weights,

- const float* bias,

- bool flag_bias,

- const int num,

- const int ch_in,

- const int h_in,

- const int w_in,

- const int h_out,

- const int w_out,

- ARMContext* ctx) {

- //! pad is done implicit

- const float zero[8] = {0.f, 0.f, 0.f, 0.f, 0.f, 0.f, 0.f, 0.f};

- //! for 4x6 convolution window

- const unsigned int right_pad_idx[8] = {5, 4, 3, 2, 1, 0, 0, 0};

-

- float* zero_ptr = ctx->workspace_data();

- memset(zero_ptr, 0, w_in * sizeof(float));

- float* write_ptr = zero_ptr + w_in;

-

- int size_in_channel = w_in * h_in;

- int size_out_channel = w_out * h_out;

- int w_stride = 9;

-

- int tile_w = w_out >> 2;

- int remain = w_out % 4;

-

- unsigned int size_pad_right = (unsigned int)(6 + (tile_w << 2) - w_in);

- const int remian_idx[4] = {0, 1, 2, 3};

-

- uint32x4_t vmask_rp1 =

- vcgeq_u32(vld1q_u32(right_pad_idx), vdupq_n_u32(size_pad_right));

- uint32x4_t vmask_rp2 =

- vcgeq_u32(vld1q_u32(right_pad_idx + 4), vdupq_n_u32(size_pad_right));

- uint32x4_t vmask_result =

- vcgtq_s32(vdupq_n_s32(remain), vld1q_s32(remian_idx));

-

- unsigned int vmask[8];

- vst1q_u32(vmask, vmask_rp1);

- vst1q_u32(vmask + 4, vmask_rp2);

-

- unsigned int rmask[4];

- vst1q_u32(rmask, vmask_result);

-

- float32x4_t vzero = vdupq_n_f32(0.f);

-