diff --git a/cmake/external/flatbuffers.cmake b/cmake/external/flatbuffers.cmake

index 47b3042234cfa482ca7187baf8e51275ea8d3ac8..d679eb19f7061720aa9b4b3340fca620bf75861f 100644

--- a/cmake/external/flatbuffers.cmake

+++ b/cmake/external/flatbuffers.cmake

@@ -45,7 +45,7 @@ SET(OPTIONAL_ARGS "-DCMAKE_CXX_COMPILER=${HOST_CXX_COMPILER}"

ExternalProject_Add(

extern_flatbuffers

${EXTERNAL_PROJECT_LOG_ARGS}

- GIT_REPOSITORY "https://github.com/google/flatbuffers.git"

+ GIT_REPOSITORY "https://github.com/Shixiaowei02/flatbuffers.git"

GIT_TAG "v1.12.0"

SOURCE_DIR ${FLATBUFFERS_SOURCES_DIR}

PREFIX ${FLATBUFFERS_PREFIX_DIR}

diff --git a/docs/index.rst b/docs/index.rst

index 88170c3f6ee177b55631b008c888cb88eda866d3..adc52db898ce48818db3352cdecc8bc1ae6ed6bb 100644

--- a/docs/index.rst

+++ b/docs/index.rst

@@ -46,6 +46,7 @@ Welcome to Paddle-Lite's documentation!

user_guides/post_quant_with_data

user_guides/post_quant_no_data

user_guides/model_quantization

+ user_guides/model_visualization

user_guides/debug

.. toctree::

diff --git a/docs/user_guides/model_visualization.md b/docs/user_guides/model_visualization.md

new file mode 100644

index 0000000000000000000000000000000000000000..0d7d9fe1c0f509b66f1e8856b59bec987e9898c8

--- /dev/null

+++ b/docs/user_guides/model_visualization.md

@@ -0,0 +1,214 @@

+# 模型可视化方法

+

+Paddle Lite框架中主要使用到的模型结构有2种:(1) 为[PaddlePaddle](https://github.com/PaddlePaddle/Paddle)深度学习框架产出的模型格式; (2) 使用[Lite模型优化工具opt](model_optimize_tool)优化后的模型格式。因此本章节包含内容如下:

+

+1. [Paddle推理模型可视化](model_visualization.html#paddle)

+2. [Lite优化模型可视化](model_visualization.html#lite)

+3. [Lite子图方式下模型可视化](model_visualization.html#id2)

+

+## Paddle推理模式可视化

+

+Paddle用于推理的模型是通过[save_inference_model](https://www.paddlepaddle.org.cn/documentation/docs/zh/api_cn/io_cn/save_inference_model_cn.html#save-inference-model)这个API保存下来的,存储格式有两种,由save_inference_model接口中的 `model_filename` 和 `params_filename` 变量控制:

+

+- **non-combined形式**:参数保存到独立的文件,如设置 `model_filename` 为 `None` , `params_filename` 为 `None`

+

+ ```bash

+ $ ls -l recognize_digits_model_non-combined/

+ total 192K

+ -rw-r--r-- 1 root root 28K Sep 24 09:39 __model__ # 模型文件

+ -rw-r--r-- 1 root root 104 Sep 24 09:39 conv2d_0.b_0 # 独立权重文件

+ -rw-r--r-- 1 root root 2.0K Sep 24 09:39 conv2d_0.w_0 # 独立权重文件

+ -rw-r--r-- 1 root root 224 Sep 24 09:39 conv2d_1.b_0 # ...

+ -rw-r--r-- 1 root root 98K Sep 24 09:39 conv2d_1.w_0

+ -rw-r--r-- 1 root root 64 Sep 24 09:39 fc_0.b_0

+ -rw-r--r-- 1 root root 32K Sep 24 09:39 fc_0.w_0

+ ```

+

+- **combined形式**:参数保存到同一个文件,如设置 `model_filename` 为 `model` , `params_filename` 为 `params`

+

+ ```bash

+ $ ls -l recognize_digits_model_combined/

+ total 160K

+ -rw-r--r-- 1 root root 28K Sep 24 09:42 model # 模型文件

+ -rw-r--r-- 1 root root 132K Sep 24 09:42 params # 权重文件

+ ```

+

+通过以上方式保存下来的模型文件都可以通过[Netron](https://lutzroeder.github.io/netron/)工具来打开查看模型的网络结构。

+

+**注意:**[Netron](https://github.com/lutzroeder/netron)当前要求PaddlePaddle的保存模型文件名必须为`__model__`,否则无法识别。如果是通过第二种方式保存下来的combined形式的模型文件,需要将文件重命名为`__model__`。

+

+

+

+## Lite优化模型可视化

+

+Paddle Lite在执行模型推理之前需要使用[模型优化工具opt](model_optimize_tool)来对模型进行优化,优化后的模型结构同样可以使用[Netron](https://lutzroeder.github.io/netron/)工具进行查看,但是必须保存为`protobuf`格式,而不是`naive_buffer`格式。

+

+**注意**: 为了减少第三方库的依赖、提高Lite预测框架的通用性,在移动端使用Lite API您需要准备Naive Buffer存储格式的模型(该模型格式是以`.nb`为后缀的单个文件)。但是Naive Buffer格式的模型为序列化模型,不支持可视化。

+

+这里以[paddle_lite_opt](opt/opt_python)工具为例:

+

+- 当模型输入为`non-combined`格式的Paddle模型时,需要通过`--model_dir`来指定模型文件夹

+

+ ```bash

+ $ paddle_lite_opt \

+ --model_dir=./recognize_digits_model_non-combined/ \

+ --valid_targets=arm \

+ --optimize_out_type=protobuf \ # 注意:这里必须输出为protobuf格式

+ --optimize_out=model_opt_dir_non-combined

+ ```

+

+ 优化后的模型文件会存储在由`--optimize_out`指定的输出文件夹下,格式如下

+

+ ```bash

+ $ ls -l model_opt_dir_non-combined/

+ total 152K

+ -rw-r--r-- 1 root root 17K Sep 24 09:51 model # 优化后的模型文件

+ -rw-r--r-- 1 root root 132K Sep 24 09:51 params # 优化后的权重文件

+ ```

+

+- 当模式输入为`combined`格式的Paddle模型时,需要同时输入`--model_file`和`--param_file`来分别指定Paddle模型的模型文件和权重文件

+

+ ```bash

+ $ paddle_lite_opt \

+ --model_file=./recognize_digits_model_combined/model \

+ --param_file=./recognize_digits_model_combined/params \

+ --valid_targets=arm \

+ --optimize_out_type=protobuf \ # 注意:这里必须输出为protobuf格式

+ --optimize_out=model_opt_dir_combined

+ ```

+ 优化后的模型文件同样存储在由`--optimize_out`指定的输出文件夹下,格式相同

+

+ ```bash

+ ls -l model_opt_dir_combined/

+ total 152K

+ -rw-r--r-- 1 root root 17K Sep 24 09:56 model # 优化后的模型文件

+ -rw-r--r-- 1 root root 132K Sep 24 09:56 params # 优化后的权重文件

+ ```

+

+

+将通过以上步骤输出的优化后的模型文件`model`重命名为`__model__`,然后用[Netron](https://lutzroeder.github.io/netron/)工具打开即可查看优化后的模型结构。将优化前后的模型进行对比,即可发现优化后的模型比优化前的模型更轻量级,在推理任务中耗费资源更少且执行速度也更快。

+

+

+

+

+## Lite子图方式下模型可视化

+

+当模型优化的目标硬件平台为 [华为NPU](../demo_guides/huawei_kirin_npu), [百度XPU](../demo_guides/baidu_xpu), [瑞芯微NPU](../demo_guides/rockchip_npu), [联发科APU](../demo_guides/mediatek_apu) 等通过子图方式接入的硬件平台时,得到的优化后的`protobuf`格式模型中运行在这些硬件平台上的算子都由`subgraph`算子包含,无法查看具体的网络结构。

+

+以[华为NPU](../demo_guides/huawei_kirin_npu)为例,运行以下命令进行模型优化,得到输出文件夹下的`model, params`两个文件。

+

+```bash

+$ paddle_lite_opt \

+ --model_dir=./recognize_digits_model_non-combined/ \

+ --valid_targets=npu,arm \ # 注意:这里的目标硬件平台为NPU,ARM

+ --optimize_out_type=protobuf \

+ --optimize_out=model_opt_dir_npu

+```

+

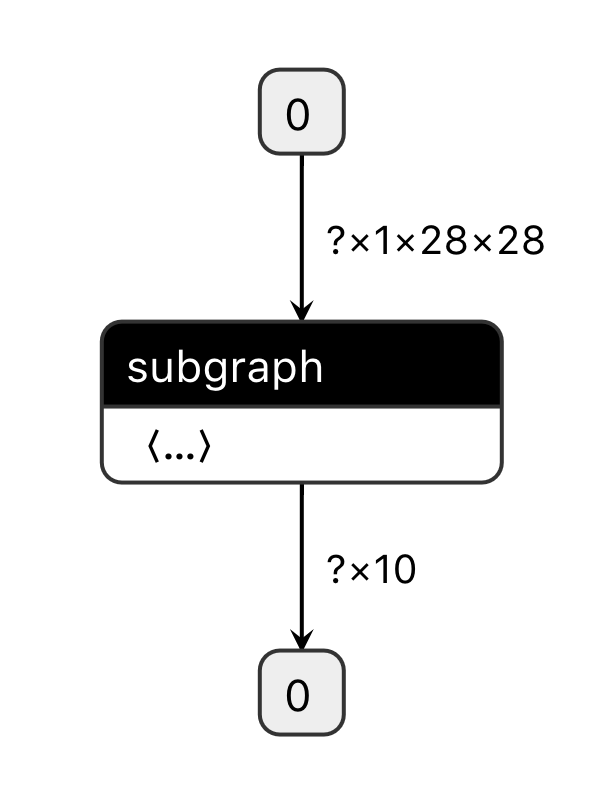

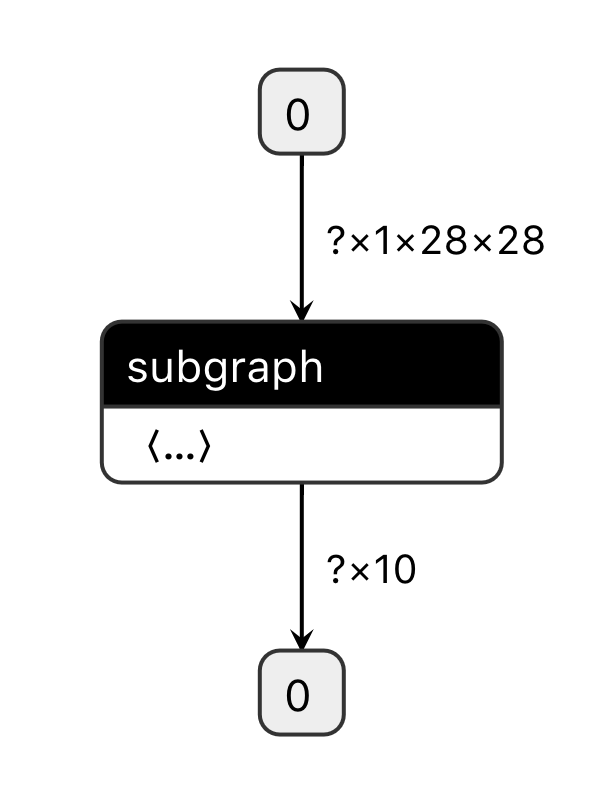

+将优化后的模型文件`model`重命名为`__model__`,然后用[Netron](https://lutzroeder.github.io/netron/)工具打开,只看到单个的subgraph算子,如下图所示:

+

+

+

+如果想要查看subgraph中的具体模型结构和算子信息需要打开Lite Debug Log,Lite在优化过程中会以.dot文本形式输出模型的拓扑结构,将.dot的文本内容复制到[webgraphviz](http://www.webgraphviz.com/)即可查看模型结构。

+

+```bash

+$ export GLOG_v=5 # 注意:这里打开Lite中Level为5及以下的的Debug Log信息

+$ paddle_lite_opt \

+ --model_dir=./recognize_digits_model_non-combined/ \

+ --valid_targets=npu,arm \

+ --optimize_out_type=protobuf \

+ --optimize_out=model_opt_dir_npu > debug_log.txt 2>&1

+# 以上命令会将所有的debug log存储在debug_log.txt文件中

+```

+

+打开debug_log.txt文件,将会看到多个由以下格式构成的拓扑图定义,由于recognize_digits模型在优化后仅存在一个subgraph,所以在文本搜索`subgraphs`的关键词,即可得到子图拓扑如下:

+

+```shell

+I0924 10:50:12.715279 122828 optimizer.h:202] == Running pass: npu_subgraph_pass

+I0924 10:50:12.715335 122828 ssa_graph.cc:27] node count 33

+I0924 10:50:12.715412 122828 ssa_graph.cc:27] node count 33

+I0924 10:50:12.715438 122828 ssa_graph.cc:27] node count 33

+subgraphs: 1 # 注意:搜索subgraphs:这个关键词,

+digraph G {

+ node_30[label="fetch"]

+ node_29[label="fetch0" shape="box" style="filled" color="black" fillcolor="white"]

+ node_28[label="save_infer_model/scale_0.tmp_0"]

+ node_26[label="fc_0.tmp_1"]

+ node_24[label="fc_0.w_0"]

+ node_23[label="fc0_subgraph_0" shape="box" style="filled" color="black" fillcolor="red"]

+ ...

+ node_15[label="batch_norm_0.tmp_1"]

+ node_17[label="conv2d1_subgraph_0" shape="box" style="filled" color="black" fillcolor="red"]

+ node_19[label="conv2d_1.b_0"]

+ node_1->node_0

+ node_0->node_2

+ node_2->node_3

+ ...

+ node_28->node_29

+ node_29->node_30

+} // end G

+I0924 10:50:12.715745 122828 op_lite.h:62] valid places 0

+I0924 10:50:12.715764 122828 op_registry.cc:32] creating subgraph kernel for host/float/NCHW

+I0924 10:50:12.715770 122828 op_lite.cc:89] pick kernel for subgraph host/float/NCHW get 0 kernels

+```

+

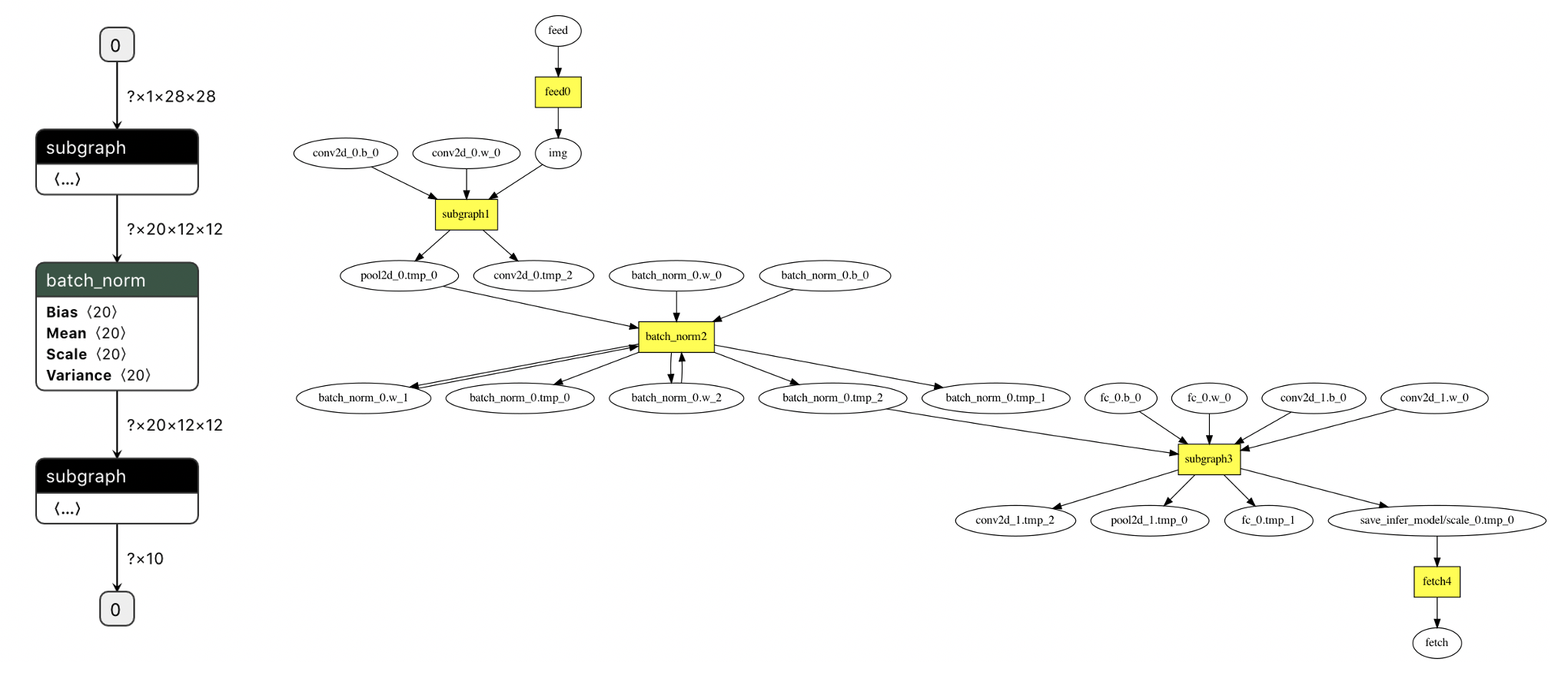

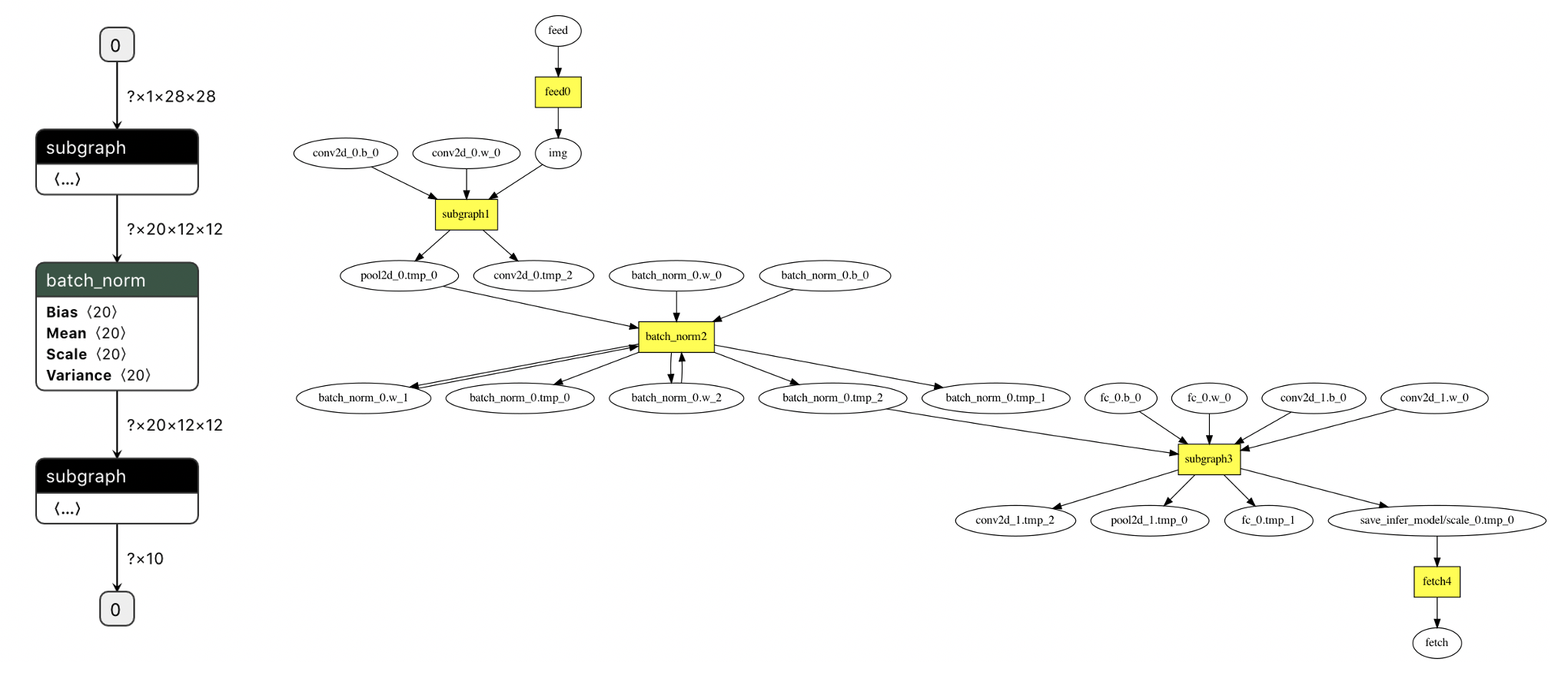

+将以上文本中以`digraph G {`开头和以`} // end G`结尾的这段文本复制粘贴到[webgraphviz](http://www.webgraphviz.com/),即可看到子图中的具体模型结构,如下图。其中高亮的方形节点为算子,椭圆形节点为变量或张量。

+

+

+

+

+

+若模型中存在多个子图,以上方法同样可以得到所有子图的具体模型结构。

+

+同样以[华为NPU](../demo_guides/huawei_kirin_npu)和ARM平台混合调度为例,子图的产生往往是由于模型中存在部分算子无法运行在NPU平台上(比如NPU不支持的算子),这会�导致整个模型被切分为多个子图,子图中包含的算子会运行在NPU平台上,而子图与子图之间的一个或多个算子则只能运行在ARM平台上。这里可以通过[华为NPU](../demo_guides/huawei_kirin_npu)的[自定义子图分割](../demo_guides/huawei_kirin_npu.html#npuarm-cpu)功能,将recognize_digits模型中的`batch_norm`设置为禁用NPU的算子,从而将模型分割为具有两个子图的模型:

+

+```bash

+# 此txt配置文件文件中的内容为 batch_norm

+$ export SUBGRAPH_CUSTOM_PARTITION_CONFIG_FILE=./subgraph_custom_partition_config_file.txt

+$ export GLOG_v=5 # 继续打开Lite的Debug Log信息

+$ paddle_lite_opt \

+ --model_dir=./recognize_digits_model_non-combined/ \

+ --valid_targets=npu,arm \

+ --optimize_out_type=protobuf \

+ --optimize_out=model_opt_dir_npu > debug_log.txt 2>&1 #

+```

+

+将执行以上命令之后,得到的优化后模型文件`model`重命名为`__model__`,然后用[Netron](https://lutzroeder.github.io/netron/)工具打开,就可以看到优化后的模型中存在2个subgraph算子,如左图所示,两个子图中间即为通过环境变量和配置文件指定的禁用NPU的`batch_norm`算子。

+

+打开新保存的debug_log.txt文件,搜索`final program`关键字,拷贝在这之后的以`digraph G {`开头和以`} // end G`结尾的文本用[webgraphviz](http://www.webgraphviz.com/)查看,也是同样的模型拓扑结构,存在`subgraph1`和`subgraph3`两个子图,两个子图中间同样是被禁用NPU的`batch_norm`算子,如右图所示。

+

+

+

+之后继续在debug_log.txt文件中,搜索`subgraphs`关键字,可以得到所有子图的.dot格式内容如下:

+

+```bash

+digraph G {

+ node_30[label="fetch"]

+ node_29[label="fetch0" shape="box" style="filled" color="black" fillcolor="white"]

+ node_28[label="save_infer_model/scale_0.tmp_0"]

+ node_26[label="fc_0.tmp_1"]

+ node_24[label="fc_0.w_0"]

+ ...

+ node_17[label="conv2d1_subgraph_0" shape="box" style="filled" color="black" fillcolor="red"]

+ node_19[label="conv2d_1.b_0"]

+ node_0[label="feed0" shape="box" style="filled" color="black" fillcolor="white"]

+ node_5[label="conv2d_0.b_0"]

+ node_1[label="feed"]

+ node_23[label="fc0_subgraph_0" shape="box" style="filled" color="black" fillcolor="red"]

+ node_7[label="pool2d0_subgraph_1" shape="box" style="filled" color="black" fillcolor="green"]

+ node_21[label="pool2d1_subgraph_0" shape="box" style="filled" color="black" fillcolor="red"]

+ ...

+ node_18[label="conv2d_1.w_0"]

+ node_1->node_0

+ node_0->node_2

+ ...

+ node_28->node_29

+ node_29->node_30

+} // end G

+```

+

+将以上文本复制到[webgraphviz](http://www.webgraphviz.com/)查看,即可显示两个子图分别在整个模型中的结构,如下图所示。可以看到图中绿色高亮的方形节点的为`subgraph1`中的算子,红色高亮的方形节点为`subgraph2`中的算子,两个子图中间白色不高亮的方形节点即为被禁用NPU的`batch_norm`算子。

+

+

+

+**注意:** 本章节用到的recognize_digits模型代码位于[PaddlePaddle/book](https://github.com/PaddlePaddle/book/tree/develop/02.recognize_digits)

diff --git a/lite/CMakeLists.txt b/lite/CMakeLists.txt

index d69f6d6d9e77668c5789baff3f2f1051afe5df46..abb769261f1e756d140d7dcf64fb5730fbe7b775 100755

--- a/lite/CMakeLists.txt

+++ b/lite/CMakeLists.txt

@@ -38,34 +38,31 @@ if (LITE_WITH_LIGHT_WEIGHT_FRAMEWORK AND NOT LITE_ON_TINY_PUBLISH)

endif()

if (WITH_TESTING)

+ set(LITE_URL_FOR_UNITTESTS "http://paddle-inference-dist.bj.bcebos.com/PaddleLite/models_and_data_for_unittests")

+ # models

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "lite_naive_model.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v1.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v2_relu.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "inception_v4_simple.tar.gz")

if(LITE_WITH_LIGHT_WEIGHT_FRAMEWORK)

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v1.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v1_int16.tar.gz")

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v2_relu.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "resnet50.tar.gz")

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "inception_v4_simple.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "MobileNetV1_quant.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "transformer_with_mask_fp32.tar.gz")

- endif()

- if(NOT LITE_WITH_LIGHT_WEIGHT_FRAMEWORK)

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "mobilenet_v1_int8_for_mediatek_apu.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "mobilenet_v1_int8_for_rockchip_npu.tar.gz")

+ else()

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "GoogleNet_inference.tar.gz")

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v1.tar.gz")

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "mobilenet_v2_relu.tar.gz")

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "inception_v4_simple.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL} "step_rnn.tar.gz")

-

- set(LITE_URL_FOR_UNITTESTS "http://paddle-inference-dist.bj.bcebos.com/PaddleLite/models_and_data_for_unittests")

- # models

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "resnet50.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "bert.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "ernie.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "GoogLeNet.tar.gz")

lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "VGG19.tar.gz")

- # data

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "ILSVRC2012_small.tar.gz")

- lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "bert_data.tar.gz")

endif()

+ # data

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "ILSVRC2012_small.tar.gz")

+ lite_download_and_uncompress(${LITE_MODEL_DIR} ${LITE_URL_FOR_UNITTESTS} "bert_data.tar.gz")

endif()

# ----------------------------- PUBLISH -----------------------------

diff --git a/lite/backends/arm/math/conv3x3s1p01_depthwise_fp32_relu.cc b/lite/backends/arm/math/conv3x3s1p01_depthwise_fp32_relu.cc

index 3e02eddfdb2de33b7f75e2448c3a5809ebcb88d7..bca36f5f0baa02fa780aada094700f0a7b5ae378 100644

--- a/lite/backends/arm/math/conv3x3s1p01_depthwise_fp32_relu.cc

+++ b/lite/backends/arm/math/conv3x3s1p01_depthwise_fp32_relu.cc

@@ -2307,12 +2307,10 @@ void conv_depthwise_3x3s1p0_bias_no_relu(float *dout,

//! process bottom pad

if (i + 3 >= h_in) {

switch (i + 3 - h_in) {

- case 3:

- din_ptr1 = zero_ptr;

case 2:

- din_ptr2 = zero_ptr;

+ din_ptr1 = zero_ptr;

case 1:

- din_ptr3 = zero_ptr;

+ din_ptr2 = zero_ptr;

case 0:

din_ptr3 = zero_ptr;

default:

@@ -2591,12 +2589,10 @@ void conv_depthwise_3x3s1p0_bias_relu(float *dout,

//! process bottom pad

if (i + 3 >= h_in) {

switch (i + 3 - h_in) {

- case 3:

- din_ptr1 = zero_ptr;

case 2:

- din_ptr2 = zero_ptr;

+ din_ptr1 = zero_ptr;

case 1:

- din_ptr3 = zero_ptr;

+ din_ptr2 = zero_ptr;

case 0:

din_ptr3 = zero_ptr;

default:

@@ -2730,12 +2726,10 @@ void conv_depthwise_3x3s1p0_bias_s_no_relu(float *dout,

if (j + 3 >= h_in) {

switch (j + 3 - h_in) {

- case 3:

- dr1 = zero_ptr;

case 2:

- dr2 = zero_ptr;

+ dr1 = zero_ptr;

case 1:

- dr3 = zero_ptr;

+ dr2 = zero_ptr;

doutr1 = trash_buf;

case 0:

dr3 = zero_ptr;

@@ -2889,12 +2883,10 @@ void conv_depthwise_3x3s1p0_bias_s_relu(float *dout,

if (j + 3 >= h_in) {

switch (j + 3 - h_in) {

- case 3:

- dr1 = zero_ptr;

case 2:

- dr2 = zero_ptr;

+ dr1 = zero_ptr;

case 1:

- dr3 = zero_ptr;

+ dr2 = zero_ptr;

doutr1 = trash_buf;

case 0:

dr3 = zero_ptr;

diff --git a/lite/backends/arm/math/conv3x3s1px_depthwise_fp32.cc b/lite/backends/arm/math/conv3x3s1px_depthwise_fp32.cc

index b4539db98c3ffb1a143c38dd3c4dd9e9924bd63e..25ee9f940481a0c92f354e819d6d2b8d45eff169 100644

--- a/lite/backends/arm/math/conv3x3s1px_depthwise_fp32.cc

+++ b/lite/backends/arm/math/conv3x3s1px_depthwise_fp32.cc

@@ -645,7 +645,6 @@ void conv_3x3s1_depthwise_fp32_bias(const float* i_data,

bool flag_bias = param.bias != nullptr;

/// get workspace

- LOG(INFO) << "conv_3x3s1_depthwise_fp32_bias: ";

float* ptr_zero = ctx->workspace_data();

memset(ptr_zero, 0, sizeof(float) * win_round);

float* ptr_write = ptr_zero + win_round;

diff --git a/lite/backends/arm/math/conv3x3s2p01_depthwise_fp32_relu.cc b/lite/backends/arm/math/conv3x3s2p01_depthwise_fp32_relu.cc

index 61f446137144b20b51df31c872fe708ddac68e33..7a3e6e9348da12a0f362cbbe6c652ed70ee94fea 100644

--- a/lite/backends/arm/math/conv3x3s2p01_depthwise_fp32_relu.cc

+++ b/lite/backends/arm/math/conv3x3s2p01_depthwise_fp32_relu.cc

@@ -713,7 +713,7 @@ void conv_depthwise_3x3s2p1_bias_relu(float* dout,

cnt_col++;

size_right_remain -= 8;

}

- int cnt_remain = (size_right_remain == 8) ? 4 : (w_out % 4); //

+ int cnt_remain = (size_right_remain == 8 && w_out % 4 == 0) ? 4 : (w_out % 4);

int size_right_pad = w_out * 2 - w_in;

@@ -966,7 +966,7 @@ void conv_depthwise_3x3s2p1_bias_no_relu(float* dout,

cnt_col++;

size_right_remain -= 8;

}

- int cnt_remain = (size_right_remain == 8) ? 4 : (w_out % 4); //

+ int cnt_remain = (size_right_remain == 8 && w_out % 4 == 0) ? 4 : (w_out % 4);

int size_right_pad = w_out * 2 - w_in;

diff --git a/lite/backends/arm/math/conv_impl.cc b/lite/backends/arm/math/conv_impl.cc

index fa2f85311b3ff4247d52505d750566ec80e47256..af722fd6413c22c2be7474ba38b54d3f30d0011c 100644

--- a/lite/backends/arm/math/conv_impl.cc

+++ b/lite/backends/arm/math/conv_impl.cc

@@ -620,10 +620,8 @@ void conv_depthwise_3x3_fp32(const void* din,

int pad = pad_w;

bool flag_bias = param.bias != nullptr;

bool pads_less = ((paddings[1] < 2) && (paddings[3] < 2));

- bool ch_four = ch_in <= 4 * w_in;

if (stride == 1) {

- if (ch_four && pads_less && (pad_h == pad_w) &&

- (pad < 2)) { // support pad = [0, 1]

+ if (pads_less && (pad_h == pad_w) && (pad < 2)) { // support pad = [0, 1]

conv_depthwise_3x3s1_fp32(reinterpret_cast(din),

reinterpret_cast(dout),

num,

@@ -656,8 +654,7 @@ void conv_depthwise_3x3_fp32(const void* din,

ctx);

}

} else if (stride == 2) {

- if (ch_four && pads_less && pad_h == pad_w &&

- (pad < 2)) { // support pad = [0, 1]

+ if (pads_less && pad_h == pad_w && (pad < 2)) { // support pad = [0, 1]

conv_depthwise_3x3s2_fp32(reinterpret_cast(din),

reinterpret_cast(dout),

num,

diff --git a/lite/backends/arm/math/interpolate.cc b/lite/backends/arm/math/interpolate.cc

index 4345c2e8137dbe0d0d1031cb4b41a2163d49ed57..1c53142fc53bc785efcbf28fa007d403ad99ab70 100644

--- a/lite/backends/arm/math/interpolate.cc

+++ b/lite/backends/arm/math/interpolate.cc

@@ -70,8 +70,7 @@ void bilinear_interp(const float* src,

int h_out,

float scale_x,

float scale_y,

- bool align_corners,

- bool align_mode) {

+ bool with_align) {

int* buf = new int[w_out + h_out + w_out * 2 + h_out * 2];

int* xofs = buf;

@@ -79,13 +78,14 @@ void bilinear_interp(const float* src,

float* alpha = reinterpret_cast(buf + w_out + h_out);

float* beta = reinterpret_cast(buf + w_out + h_out + w_out * 2);

- bool with_align = (align_mode == 0 && !align_corners);

float fx = 0.0f;

float fy = 0.0f;

int sx = 0;

int sy = 0;

- if (!with_align) {

+ if (with_align) {

+ scale_x = static_cast(w_in - 1) / (w_out - 1);

+ scale_y = static_cast(h_in - 1) / (h_out - 1);

// calculate x axis coordinate

for (int dx = 0; dx < w_out; dx++) {

fx = dx * scale_x;

@@ -105,6 +105,8 @@ void bilinear_interp(const float* src,

beta[dy * 2 + 1] = fy;

}

} else {

+ scale_x = static_cast(w_in) / w_out;

+ scale_y = static_cast(h_in) / h_out;

// calculate x axis coordinate

for (int dx = 0; dx < w_out; dx++) {

fx = scale_x * (dx + 0.5f) - 0.5f;

@@ -466,9 +468,15 @@ void nearest_interp(const float* src,

float* dst,

int w_out,

int h_out,

- float scale_w_new,

- float scale_h_new,

+ float scale_x,

+ float scale_y,

bool with_align) {

+ float scale_w_new = (with_align)

+ ? (static_cast(w_in - 1) / (w_out - 1))

+ : (static_cast(w_in) / (w_out));

+ float scale_h_new = (with_align)

+ ? (static_cast(h_in - 1) / (h_out - 1))

+ : (static_cast(h_in) / (h_out));

if (with_align) {

for (int h = 0; h < h_out; ++h) {

float* dst_p = dst + h * w_out;

@@ -498,8 +506,7 @@ void interpolate(lite::Tensor* X,

int out_height,

int out_width,

float scale,

- bool align_corners,

- bool align_mode,

+ bool with_align,

std::string interpolate_type) {

int in_h = X->dims()[2];

int in_w = X->dims()[3];

@@ -524,12 +531,12 @@ void interpolate(lite::Tensor* X,

out_width = out_size_data[1];

}

}

- // float height_scale = scale;

- // float width_scale = scale;

- // if (out_width > 0 && out_height > 0) {

- // height_scale = static_cast(out_height / X->dims()[2]);

- // width_scale = static_cast(out_width / X->dims()[3]);

- // }

+ float height_scale = scale;

+ float width_scale = scale;

+ if (out_width > 0 && out_height > 0) {

+ height_scale = static_cast(out_height / X->dims()[2]);

+ width_scale = static_cast(out_width / X->dims()[3]);

+ }

int num_cout = X->dims()[0];

int c_cout = X->dims()[1];

Out->Resize({num_cout, c_cout, out_height, out_width});

@@ -544,10 +551,6 @@ void interpolate(lite::Tensor* X,

int spatial_in = in_h * in_w;

int spatial_out = out_h * out_w;

- float scale_x = (align_corners) ? (static_cast(in_w - 1) / (out_w - 1))

- : (static_cast(in_w) / (out_w));

- float scale_y = (align_corners) ? (static_cast(in_h - 1) / (out_h - 1))

- : (static_cast(in_h) / (out_h));

if ("Bilinear" == interpolate_type) {

#pragma omp parallel for

for (int i = 0; i < count; ++i) {

@@ -557,10 +560,9 @@ void interpolate(lite::Tensor* X,

dout + spatial_out * i,

out_w,

out_h,

- scale_x,

- scale_y,

- align_corners,

- align_mode);

+ 1.f / width_scale,

+ 1.f / height_scale,

+ with_align);

}

} else if ("Nearest" == interpolate_type) {

#pragma omp parallel for

@@ -571,9 +573,9 @@ void interpolate(lite::Tensor* X,

dout + spatial_out * i,

out_w,

out_h,

- scale_x,

- scale_y,

- align_corners);

+ 1.f / width_scale,

+ 1.f / height_scale,

+ with_align);

}

}

}

diff --git a/lite/backends/arm/math/interpolate.h b/lite/backends/arm/math/interpolate.h

index 82c4c068b69567c01d37cfa901f9b58626574865..e9c41c5bc86c8f00d57e096e3cd2b5f37df3a474 100644

--- a/lite/backends/arm/math/interpolate.h

+++ b/lite/backends/arm/math/interpolate.h

@@ -30,8 +30,7 @@ void bilinear_interp(const float* src,

int h_out,

float scale_x,

float scale_y,

- bool align_corners,

- bool align_mode);

+ bool with_align);

void nearest_interp(const float* src,

int w_in,

@@ -41,7 +40,7 @@ void nearest_interp(const float* src,

int h_out,

float scale_x,

float scale_y,

- bool align_corners);

+ bool with_align);

void interpolate(lite::Tensor* X,

lite::Tensor* OutSize,

@@ -51,8 +50,7 @@ void interpolate(lite::Tensor* X,

int out_height,

int out_width,

float scale,

- bool align_corners,

- bool align_mode,

+ bool with_align,

std::string interpolate_type);

} /* namespace math */

diff --git a/lite/core/arena/CMakeLists.txt b/lite/core/arena/CMakeLists.txt

index 53988f063b89ae3e75f4c27cc1d937d12bb6dae5..d5adf8475364b99fec07af1959a6dd5569a6572b 100644

--- a/lite/core/arena/CMakeLists.txt

+++ b/lite/core/arena/CMakeLists.txt

@@ -6,5 +6,5 @@ endif()

lite_cc_library(arena_framework SRCS framework.cc DEPS program gtest)

if((NOT LITE_WITH_OPENCL) AND (LITE_WITH_X86 OR LITE_WITH_ARM))

- lite_cc_test(test_arena_framework SRCS framework_test.cc DEPS arena_framework ${rknpu_kernels} ${mlu_kernels} ${bm_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${xpu_kernels} ${x86_kernels} ${cuda_kernels} ${fpga_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

+ lite_cc_test(test_arena_framework SRCS framework_test.cc DEPS arena_framework ${rknpu_kernels} ${mlu_kernels} ${bm_kernels} ${npu_kernels} ${apu_kernels} ${huawei_ascend_npu_kernels} ${xpu_kernels} ${x86_kernels} ${cuda_kernels} ${fpga_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

endif()

diff --git a/lite/core/mir/fusion/conv_conv_fuse_pass.cc b/lite/core/mir/fusion/conv_conv_fuse_pass.cc

index b2c5d8d15ab95fbcc43adc01c4189ae83b1316ed..e7f816ae4c99b3d27e9473c0937936a2f25a232b 100644

--- a/lite/core/mir/fusion/conv_conv_fuse_pass.cc

+++ b/lite/core/mir/fusion/conv_conv_fuse_pass.cc

@@ -27,7 +27,7 @@ namespace mir {

void ConvConvFusePass::Apply(const std::unique_ptr& graph) {

// initialze fuser params

std::vector conv_has_bias_cases{true, false};

- std::vector conv_type_cases{"conv2d", "depthwise_conv2d"};

+ std::vector conv_type_cases{"conv2d"};

bool has_int8 = false;

bool has_weight_quant = false;

for (auto& place : graph->valid_places()) {

diff --git a/lite/core/mir/fusion/conv_conv_fuser.cc b/lite/core/mir/fusion/conv_conv_fuser.cc

index f2e24d06fa089ea4f575116d26f333060757e789..2393ff533007460f6f3d15dce11ef73ca09e802b 100644

--- a/lite/core/mir/fusion/conv_conv_fuser.cc

+++ b/lite/core/mir/fusion/conv_conv_fuser.cc

@@ -132,8 +132,8 @@ void ConvConvFuser::BuildPattern() {

VLOG(5) << "The kernel size of the second conv must be 1x1";

continue;

}

- if (groups1 != 1) {

- VLOG(5) << "The groups of weight1_dim must be 1";

+ if (groups0 != 1 || groups1 != 1) {

+ VLOG(5) << "The all groups of weight_dim must be 1";

continue;

}

if (ch_out_0 != ch_in_1) {

diff --git a/lite/kernels/arm/conv_depthwise.cc b/lite/kernels/arm/conv_depthwise.cc

index c5b43a31a0f495f3635d389939acf44e979a3dc7..e04e774cce3af5bd6f8b67c6adfeba06fa814768 100644

--- a/lite/kernels/arm/conv_depthwise.cc

+++ b/lite/kernels/arm/conv_depthwise.cc

@@ -32,11 +32,10 @@ void DepthwiseConv::PrepareForRun() {

auto hin = param.x->dims()[2];

auto win = param.x->dims()[3];

auto paddings = *param.paddings;

- bool ch_four = channel <= 4 * win;

// select dw conv kernel

if (kw == 3) {

bool pads_less = ((paddings[1] < 2) && (paddings[3] < 2));

- if (ch_four && pads_less && paddings[0] == paddings[2] &&

+ if (pads_less && paddings[0] == paddings[2] &&

(paddings[0] == 0 || paddings[0] == 1)) {

flag_trans_weights_ = false;

} else {

diff --git a/lite/kernels/arm/interpolate_compute.cc b/lite/kernels/arm/interpolate_compute.cc

index 8593758d5af6ea7d5badc6870ea51e13a443ed99..760b2fcf0630a632d1f1bbaeda7760d2de25a7a4 100644

--- a/lite/kernels/arm/interpolate_compute.cc

+++ b/lite/kernels/arm/interpolate_compute.cc

@@ -35,7 +35,6 @@ void BilinearInterpCompute::Run() {

int out_w = param.out_w;

int out_h = param.out_h;

bool align_corners = param.align_corners;

- bool align_mode = param.align_mode;

std::string interp_method = "Bilinear";

lite::arm::math::interpolate(X,

OutSize,

@@ -46,7 +45,6 @@ void BilinearInterpCompute::Run() {

out_w,

scale,

align_corners,

- align_mode,

interp_method);

}

@@ -61,7 +59,6 @@ void NearestInterpCompute::Run() {

int out_w = param.out_w;

int out_h = param.out_h;

bool align_corners = param.align_corners;

- bool align_mode = param.align_mode;

std::string interp_method = "Nearest";

lite::arm::math::interpolate(X,

OutSize,

@@ -72,7 +69,6 @@ void NearestInterpCompute::Run() {

out_w,

scale,

align_corners,

- align_mode,

interp_method);

}

diff --git a/lite/kernels/x86/activation_compute.cc b/lite/kernels/x86/activation_compute.cc

index 9b4c2fadd9ce427db272a9bb0cfd0e0a10716f11..aee6bd6bd3f41972e759fb2b87fb1b1c549975e2 100644

--- a/lite/kernels/x86/activation_compute.cc

+++ b/lite/kernels/x86/activation_compute.cc

@@ -88,3 +88,14 @@ REGISTER_LITE_KERNEL(sigmoid,

.BindInput("X", {LiteType::GetTensorTy(TARGET(kX86))})

.BindOutput("Out", {LiteType::GetTensorTy(TARGET(kX86))})

.Finalize();

+

+// float

+REGISTER_LITE_KERNEL(relu6,

+ kX86,

+ kFloat,

+ kNCHW,

+ paddle::lite::kernels::x86::Relu6Compute,

+ def)

+ .BindInput("X", {LiteType::GetTensorTy(TARGET(kX86))})

+ .BindOutput("Out", {LiteType::GetTensorTy(TARGET(kX86))})

+ .Finalize();

diff --git a/lite/kernels/x86/activation_compute.h b/lite/kernels/x86/activation_compute.h

index 520adaf44f808748c75960f88cd07799c9f2d4ed..b76e94398e6824759372bc5eb91ed3cea8acaf6e 100644

--- a/lite/kernels/x86/activation_compute.h

+++ b/lite/kernels/x86/activation_compute.h

@@ -248,6 +248,42 @@ class SoftsignCompute : public KernelLite {

virtual ~SoftsignCompute() = default;

};

+// relu6(x) = min(max(0, x), 6)

+template

+struct Relu6Functor {

+ float threshold;

+ explicit Relu6Functor(float threshold_) : threshold(threshold_) {}

+

+ template

+ void operator()(Device d, X x, Out out) const {

+ out.device(d) =

+ x.cwiseMax(static_cast(0)).cwiseMin(static_cast(threshold));

+ }

+};

+

+template

+class Relu6Compute : public KernelLite {

+ public:

+ using param_t = operators::ActivationParam;

+

+ void Run() override {

+ auto& param = *param_.get_mutable();

+

+ param.Out->template mutable_data();

+ auto X = param.X;

+ auto Out = param.Out;

+ auto place = lite::fluid::EigenDeviceType();

+ CHECK(X);

+ CHECK(Out);

+ auto x = lite::fluid::EigenVector::Flatten(*X);

+ auto out = lite::fluid::EigenVector::Flatten(*Out);

+ Relu6Functor functor(param.threshold);

+ functor(place, x, out);

+ }

+

+ virtual ~Relu6Compute() = default;

+};

+

} // namespace x86

} // namespace kernels

} // namespace lite

diff --git a/lite/kernels/x86/reduce_compute.cc b/lite/kernels/x86/reduce_compute.cc

index f95f4cfb881fef329ea940ca8b9fa6b4fd6ff7b6..edeac0a84eb60ca1e34ab6e7437e54ffe8922815 100644

--- a/lite/kernels/x86/reduce_compute.cc

+++ b/lite/kernels/x86/reduce_compute.cc

@@ -23,3 +23,13 @@ REGISTER_LITE_KERNEL(reduce_sum,

.BindInput("X", {LiteType::GetTensorTy(TARGET(kX86))})

.BindOutput("Out", {LiteType::GetTensorTy(TARGET(kX86))})

.Finalize();

+

+REGISTER_LITE_KERNEL(reduce_mean,

+ kX86,

+ kFloat,

+ kNCHW,

+ paddle::lite::kernels::x86::ReduceMeanCompute,

+ def)

+ .BindInput("X", {LiteType::GetTensorTy(TARGET(kX86))})

+ .BindOutput("Out", {LiteType::GetTensorTy(TARGET(kX86))})

+ .Finalize();

diff --git a/lite/kernels/x86/reduce_compute.h b/lite/kernels/x86/reduce_compute.h

index 1b7c99eeef9dd80525eb9ed249bdf6ed1e493443..fb02348759014578a1cf7a17c27903ce84dfe54b 100644

--- a/lite/kernels/x86/reduce_compute.h

+++ b/lite/kernels/x86/reduce_compute.h

@@ -31,11 +31,18 @@ struct SumFunctor {

}

};

-#define HANDLE_DIM(NDIM, RDIM) \

- if (ndim == NDIM && rdim == RDIM) { \

- paddle::lite::kernels::x86:: \

- ReduceFunctor( \

- *input, output, dims, keep_dim); \

+struct MeanFunctor {

+ template

+ void operator()(X* x, Y* y, const Dim& dim) {

+ y->device(lite::fluid::EigenDeviceType()) = x->mean(dim);

+ }

+};

+

+#define HANDLE_DIM(NDIM, RDIM, FUNCTOR) \

+ if (ndim == NDIM && rdim == RDIM) { \

+ paddle::lite::kernels::x86:: \

+ ReduceFunctor( \

+ *input, output, dims, keep_dim); \

}

template

@@ -64,19 +71,58 @@ class ReduceSumCompute : public KernelLite {

} else {

int ndim = input->dims().size();

int rdim = dims.size();

- HANDLE_DIM(4, 3);

- HANDLE_DIM(4, 2);

- HANDLE_DIM(4, 1);

- HANDLE_DIM(3, 2);

- HANDLE_DIM(3, 1);

- HANDLE_DIM(2, 1);

- HANDLE_DIM(1, 1);

+ HANDLE_DIM(4, 3, SumFunctor);

+ HANDLE_DIM(4, 2, SumFunctor);

+ HANDLE_DIM(4, 1, SumFunctor);

+ HANDLE_DIM(3, 2, SumFunctor);

+ HANDLE_DIM(3, 1, SumFunctor);

+ HANDLE_DIM(2, 1, SumFunctor);

+ HANDLE_DIM(1, 1, SumFunctor);

}

}

virtual ~ReduceSumCompute() = default;

};

+template

+class ReduceMeanCompute : public KernelLite {

+ public:

+ using param_t = operators::ReduceParam;

+

+ void Run() override {

+ auto& param = *param_.get_mutable();

+ // auto& context = ctx_->As();

+ auto* input = param.x;

+ auto* output = param.output;

+ param.output->template mutable_data();

+

+ const auto& dims = param.dim;

+ bool keep_dim = param.keep_dim;

+

+ if (dims.size() == 0) {

+ // Flatten and reduce 1-D tensor

+ auto x = lite::fluid::EigenVector::Flatten(*input);

+ auto out = lite::fluid::EigenScalar::From(output);

+ // auto& place = *platform::CPUDeviceContext().eigen_device();

+ auto reduce_dim = Eigen::array({{0}});

+ MeanFunctor functor;

+ functor(&x, &out, reduce_dim);

+ } else {

+ int ndim = input->dims().size();

+ int rdim = dims.size();

+ HANDLE_DIM(4, 3, MeanFunctor);

+ HANDLE_DIM(4, 2, MeanFunctor);

+ HANDLE_DIM(4, 1, MeanFunctor);

+ HANDLE_DIM(3, 2, MeanFunctor);

+ HANDLE_DIM(3, 1, MeanFunctor);

+ HANDLE_DIM(2, 1, MeanFunctor);

+ HANDLE_DIM(1, 1, MeanFunctor);

+ }

+ }

+

+ virtual ~ReduceMeanCompute() = default;

+};

+

} // namespace x86

} // namespace kernels

} // namespace lite

diff --git a/lite/operators/activation_ops.cc b/lite/operators/activation_ops.cc

index 9b20f4348b4090abfb2138547915e44f7c3418c0..a25297f01206dd157484c720d6dd134186d2a7bd 100644

--- a/lite/operators/activation_ops.cc

+++ b/lite/operators/activation_ops.cc

@@ -89,6 +89,9 @@ bool ActivationOp::AttachImpl(const cpp::OpDesc& opdesc, lite::Scope* scope) {

} else if (opdesc.Type() == "elu") {

param_.active_type = lite_api::ActivationType::kElu;

param_.Elu_alpha = opdesc.GetAttr("alpha");

+ } else if (opdesc.Type() == "relu6") {

+ param_.active_type = lite_api::ActivationType::kRelu6;

+ param_.threshold = opdesc.GetAttr("threshold");

}

VLOG(4) << "opdesc.Type():" << opdesc.Type();

diff --git a/lite/operators/op_params.h b/lite/operators/op_params.h

index 2fccbb9593f87ceb3c841790373609c1b47178de..d1533c4cf6638afa2ffa31ce2e780354153b0d6e 100644

--- a/lite/operators/op_params.h

+++ b/lite/operators/op_params.h

@@ -403,6 +403,8 @@ struct ActivationParam : ParamBase {

float relu_threshold{1.0f};

// elu

float Elu_alpha{1.0f};

+ // relu6

+ float threshold{6.0f};

///////////////////////////////////////////////////////////////////////////////////

// get a vector of input tensors

diff --git a/lite/tests/api/CMakeLists.txt b/lite/tests/api/CMakeLists.txt

index 795b195a03e6dac8366f8b05f52984983c10676d..636d9d557400152c871bded938f26f74e282dd1e 100644

--- a/lite/tests/api/CMakeLists.txt

+++ b/lite/tests/api/CMakeLists.txt

@@ -1,52 +1,71 @@

-if(LITE_WITH_ARM)

- lite_cc_test(test_transformer_with_mask_fp32_arm SRCS test_transformer_with_mask_fp32_arm.cc

+function(lite_cc_test_with_model_and_data TARGET)

+ if(NOT WITH_TESTING)

+ return()

+ endif()

+

+ set(options "")

+ set(oneValueArgs MODEL DATA CONFIG ARGS)

+ set(multiValueArgs "")

+ cmake_parse_arguments(args "${options}" "${oneValueArgs}" "${multiValueArgs}" ${ARGN})

+

+ set(ARGS "")

+ if(DEFINED args_MODEL)

+ set(ARGS "${ARGS} --model_dir=${LITE_MODEL_DIR}/${args_MODEL}")

+ endif()

+ if(DEFINED args_DATA)

+ set(ARGS "${ARGS} --data_dir=${LITE_MODEL_DIR}/${args_DATA}")

+ endif()

+ if(DEFINED args_CONFIG)

+ set(ARGS "${ARGS} --config_dir=${LITE_MODEL_DIR}/${args_CONFIG}")

+ endif()

+ if(DEFINED args_ARGS)

+ set(ARGS "${ARGS} ${args_ARGS}")

+ endif()

+ lite_cc_test(${TARGET} SRCS ${TARGET}.cc

DEPS ${lite_model_test_DEPS} paddle_api_full

ARM_DEPS ${arm_kernels}

- ARGS --model_dir=${LITE_MODEL_DIR}/transformer_with_mask_fp32 SERIAL)

- if(WITH_TESTING)

- add_dependencies(test_transformer_with_mask_fp32_arm extern_lite_download_transformer_with_mask_fp32_tar_gz)

+ X86_DEPS ${x86_kernels}

+ NPU_DEPS ${npu_kernels} ${npu_bridges}

+ HUAWEI_ASCEND_NPU_DEPS ${huawei_ascend_npu_kernels} ${huawei_ascend_npu_bridges}

+ XPU_DEPS ${xpu_kernels} ${xpu_bridges}

+ APU_DEPS ${apu_kernels} ${apu_bridges}

+ RKNPU_DEPS ${rknpu_kernels} ${rknpu_bridges}

+ BM_DEPS ${bm_kernels} ${bm_bridges}

+ MLU_DEPS ${mlu_kernels} ${mlu_bridges}

+ ARGS ${ARGS} SERIAL)

+ if(DEFINED args_MODEL)

+ add_dependencies(${TARGET} extern_lite_download_${args_MODEL}_tar_gz)

endif()

-endif()

-

-function(xpu_x86_without_xtcl_test TARGET MODEL DATA)

- if(${DATA} STREQUAL "")

- lite_cc_test(${TARGET} SRCS ${TARGET}.cc

- DEPS mir_passes lite_api_test_helper paddle_api_full paddle_api_light gflags utils

- ${ops} ${host_kernels} ${x86_kernels} ${xpu_kernels}

- ARGS --model_dir=${LITE_MODEL_DIR}/${MODEL})

- else()

- lite_cc_test(${TARGET} SRCS ${TARGET}.cc

- DEPS mir_passes lite_api_test_helper paddle_api_full paddle_api_light gflags utils

- ${ops} ${host_kernels} ${x86_kernels} ${xpu_kernels}

- ARGS --model_dir=${LITE_MODEL_DIR}/${MODEL} --data_dir=${LITE_MODEL_DIR}/${DATA})

+ if(DEFINED args_DATA)

+ add_dependencies(${TARGET} extern_lite_download_${args_DATA}_tar_gz)

endif()

-

- if(WITH_TESTING)

- add_dependencies(${TARGET} extern_lite_download_${MODEL}_tar_gz)

- if(NOT ${DATA} STREQUAL "")

- add_dependencies(${TARGET} extern_lite_download_${DATA}_tar_gz)

- endif()

+ if(DEFINED args_CONFIG)

+ add_dependencies(${TARGET} extern_lite_download_${args_CONFIG}_tar_gz)

endif()

endfunction()

+if(LITE_WITH_ARM)

+ lite_cc_test_with_model_and_data(test_transformer_with_mask_fp32_arm MODEL transformer_with_mask_fp32 ARGS)

+endif()

+

+if(LITE_WITH_NPU)

+ lite_cc_test_with_model_and_data(test_mobilenetv1_fp32_huawei_kirin_npu MODEL mobilenet_v1 DATA ILSVRC2012_small)

+ lite_cc_test_with_model_and_data(test_mobilenetv2_fp32_huawei_kirin_npu MODEL mobilenet_v2_relu DATA ILSVRC2012_small)

+ lite_cc_test_with_model_and_data(test_resnet50_fp32_huawei_kirin_npu MODEL resnet50 DATA ILSVRC2012_small)

+endif()

+

if(LITE_WITH_XPU AND NOT LITE_WITH_XTCL)

- xpu_x86_without_xtcl_test(test_resnet50_fp32_xpu resnet50 ILSVRC2012_small)

- xpu_x86_without_xtcl_test(test_googlenet_fp32_xpu GoogLeNet ILSVRC2012_small)

- xpu_x86_without_xtcl_test(test_vgg19_fp32_xpu VGG19 ILSVRC2012_small)

- xpu_x86_without_xtcl_test(test_ernie_fp32_xpu ernie bert_data)

- xpu_x86_without_xtcl_test(test_bert_fp32_xpu bert bert_data)

+ lite_cc_test_with_model_and_data(test_resnet50_fp32_xpu MODEL resnet50 DATA ILSVRC2012_small)

+ lite_cc_test_with_model_and_data(test_googlenet_fp32_xpu MODEL GoogLeNet DATA ILSVRC2012_small)

+ lite_cc_test_with_model_and_data(test_vgg19_fp32_xpu MODEL VGG19 DATA ILSVRC2012_small)

+ lite_cc_test_with_model_and_data(test_ernie_fp32_xpu MODEL ernie DATA bert_data)

+ lite_cc_test_with_model_and_data(test_bert_fp32_xpu MODEL bert DATA bert_data)

endif()

if(LITE_WITH_RKNPU)

- lite_cc_test(test_mobilenetv1_int8_rknpu SRCS test_mobilenetv1_int8_rknpu.cc

- DEPS ${lite_model_test_DEPS} paddle_api_full

- RKNPU_DEPS ${rknpu_kernels} ${rknpu_bridges}

- ARGS --model_dir=${LITE_MODEL_DIR}/MobilenetV1_full_quant SERIAL)

+ lite_cc_test_with_model_and_data(test_mobilenetv1_int8_rockchip_npu MODEL mobilenet_v1_int8_for_rockchip_npu DATA ILSVRC2012_small)

endif()

if(LITE_WITH_APU)

- lite_cc_test(test_mobilenetv1_int8_apu SRCS test_mobilenetv1_int8_apu.cc

- DEPS ${lite_model_test_DEPS} paddle_api_full

- APU_DEPS ${apu_kernels} ${apu_bridges}

- ARGS --model_dir=${LITE_MODEL_DIR}/MobilenetV1_full_quant SERIAL)

+ lite_cc_test_with_model_and_data(test_mobilenetv1_int8_mediatek_apu MODEL mobilenet_v1_int8_for_mediatek_apu DATA ILSVRC2012_small)

endif()

diff --git a/lite/tests/api/test_mobilenetv1_fp32_huawei_kirin_npu.cc b/lite/tests/api/test_mobilenetv1_fp32_huawei_kirin_npu.cc

new file mode 100644

index 0000000000000000000000000000000000000000..0b890cdd0855bb629a1aa9ea1ebf62d15240f7cd

--- /dev/null

+++ b/lite/tests/api/test_mobilenetv1_fp32_huawei_kirin_npu.cc

@@ -0,0 +1,101 @@

+// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#include

+#include

+#include

+#include "lite/api/lite_api_test_helper.h"

+#include "lite/api/paddle_api.h"

+#include "lite/api/paddle_use_kernels.h"

+#include "lite/api/paddle_use_ops.h"

+#include "lite/api/paddle_use_passes.h"

+#include "lite/api/test_helper.h"

+#include "lite/tests/api/ILSVRC2012_utility.h"

+#include "lite/utils/cp_logging.h"

+

+DEFINE_string(data_dir, "", "data dir");

+DEFINE_int32(iteration, 100, "iteration times to run");

+DEFINE_int32(batch, 1, "batch of image");

+DEFINE_int32(channel, 3, "image channel");

+

+namespace paddle {

+namespace lite {

+

+TEST(MobileNetV1, test_mobilenetv1_fp32_huawei_kirin_npu) {

+ lite_api::CxxConfig config;

+ config.set_model_dir(FLAGS_model_dir);

+ config.set_valid_places({lite_api::Place{TARGET(kARM), PRECISION(kFloat)},

+ lite_api::Place{TARGET(kNPU), PRECISION(kFloat)}});

+ auto predictor = lite_api::CreatePaddlePredictor(config);

+

+ std::string raw_data_dir = FLAGS_data_dir + std::string("/raw_data");

+ std::vector input_shape{

+ FLAGS_batch, FLAGS_channel, FLAGS_im_width, FLAGS_im_height};

+ auto raw_data = ReadRawData(raw_data_dir, input_shape, FLAGS_iteration);

+

+ int input_size = 1;

+ for (auto i : input_shape) {

+ input_size *= i;

+ }

+

+ for (int i = 0; i < FLAGS_warmup; ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ for (int j = 0; j < input_size; j++) {

+ data[j] = 0.f;

+ }

+ predictor->Run();

+ }

+

+ std::vector> out_rets;

+ out_rets.resize(FLAGS_iteration);

+ double cost_time = 0;

+ for (size_t i = 0; i < raw_data.size(); ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ memcpy(data, raw_data[i].data(), sizeof(float) * input_size);

+

+ double start = GetCurrentUS();

+ predictor->Run();

+ cost_time += GetCurrentUS() - start;

+

+ auto output_tensor = predictor->GetOutput(0);

+ auto output_shape = output_tensor->shape();

+ auto output_data = output_tensor->data();

+ ASSERT_EQ(output_shape.size(), 2UL);

+ ASSERT_EQ(output_shape[0], 1);

+ ASSERT_EQ(output_shape[1], 1000);

+

+ int output_size = output_shape[0] * output_shape[1];

+ out_rets[i].resize(output_size);

+ memcpy(&(out_rets[i].at(0)), output_data, sizeof(float) * output_size);

+ }

+

+ LOG(INFO) << "================== Speed Report ===================";

+ LOG(INFO) << "Model: " << FLAGS_model_dir << ", threads num " << FLAGS_threads

+ << ", warmup: " << FLAGS_warmup << ", batch: " << FLAGS_batch

+ << ", iteration: " << FLAGS_iteration << ", spend "

+ << cost_time / FLAGS_iteration / 1000.0 << " ms in average.";

+

+ std::string labels_dir = FLAGS_data_dir + std::string("/labels.txt");

+ float out_accuracy = CalOutAccuracy(out_rets, labels_dir);

+ ASSERT_GE(out_accuracy, 0.57f);

+}

+

+} // namespace lite

+} // namespace paddle

diff --git a/lite/tests/api/test_mobilenetv1_int8_apu.cc b/lite/tests/api/test_mobilenetv1_int8_apu.cc

deleted file mode 100644

index 730ed3e82341d04e79c96a5cacefdf4c48715e61..0000000000000000000000000000000000000000

--- a/lite/tests/api/test_mobilenetv1_int8_apu.cc

+++ /dev/null

@@ -1,160 +0,0 @@

-// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#include

-#include

-#include

-#include

-#include

-

-#include "lite/api/paddle_api.h"

-#include "lite/api/paddle_use_kernels.h"

-#include "lite/api/paddle_use_ops.h"

-#include "lite/api/paddle_use_passes.h"

-using namespace paddle::lite_api; // NOLINT

-

-inline double GetCurrentUS() {

- struct timeval time;

- gettimeofday(&time, NULL);

- return 1e+6 * time.tv_sec + time.tv_usec;

-}

-

-inline int64_t ShapeProduction(std::vector shape) {

- int64_t s = 1;

- for (int64_t dim : shape) {

- s *= dim;

- }

- return s;

-}

-

-int main(int argc, char** argv) {

- if (argc < 2) {

- std::cerr << "[ERROR] usage: ./" << argv[0]

- << " model_dir [thread_num] [warmup_times] [repeat_times] "

- "[input_data_path] [output_data_path]"

- << std::endl;

- return -1;

- }

- std::string model_dir = argv[1];

- int thread_num = 1;

- if (argc > 2) {

- thread_num = atoi(argv[2]);

- }

- int warmup_times = 5;

- if (argc > 3) {

- warmup_times = atoi(argv[3]);

- }

- int repeat_times = 10;

- if (argc > 4) {

- repeat_times = atoi(argv[4]);

- }

- std::string input_data_path;

- if (argc > 5) {

- input_data_path = argv[5];

- }

- std::string output_data_path;

- if (argc > 6) {

- output_data_path = argv[6];

- }

- paddle::lite_api::CxxConfig config;

- config.set_model_dir(model_dir);

- config.set_threads(thread_num);

- config.set_power_mode(paddle::lite_api::LITE_POWER_HIGH);

- config.set_valid_places(

- {paddle::lite_api::Place{

- TARGET(kARM), PRECISION(kFloat), DATALAYOUT(kNCHW)},

- paddle::lite_api::Place{

- TARGET(kARM), PRECISION(kInt8), DATALAYOUT(kNCHW)},

- paddle::lite_api::Place{

- TARGET(kAPU), PRECISION(kInt8), DATALAYOUT(kNCHW)}});

- auto predictor = paddle::lite_api::CreatePaddlePredictor(config);

-

- std::unique_ptr input_tensor(

- std::move(predictor->GetInput(0)));

- input_tensor->Resize({1, 3, 224, 224});

- auto input_data = input_tensor->mutable_data();

- auto input_size = ShapeProduction(input_tensor->shape());

-

- // test loop

- int total_imgs = 500;

- float test_num = 0;

- float top1_num = 0;

- float top5_num = 0;

- int output_len = 1000;

- std::vector index(1000);

- bool debug = true; // false;

- int show_step = 500;

- for (int i = 0; i < total_imgs; i++) {

- // set input

- std::string filename = input_data_path + "/" + std::to_string(i);

- std::ifstream fs(filename, std::ifstream::binary);

- if (!fs.is_open()) {

- std::cout << "open input file fail.";

- }

- auto input_data_tmp = input_data;

- for (int i = 0; i < input_size; ++i) {

- fs.read(reinterpret_cast(input_data_tmp), sizeof(*input_data_tmp));

- input_data_tmp++;

- }

- int label = 0;

- fs.read(reinterpret_cast(&label), sizeof(label));

- fs.close();

-

- if (debug && i % show_step == 0) {

- std::cout << "input data:" << std::endl;

- std::cout << input_data[0] << " " << input_data[10] << " "

- << input_data[input_size - 1] << std::endl;

- std::cout << "label:" << label << std::endl;

- }

-

- // run

- predictor->Run();

- auto output0 = predictor->GetOutput(0);

- auto output0_data = output0->data();

-

- // get output

- std::iota(index.begin(), index.end(), 0);

- std::stable_sort(

- index.begin(), index.end(), [output0_data](size_t i1, size_t i2) {

- return output0_data[i1] > output0_data[i2];

- });

- test_num++;

- if (label == index[0]) {

- top1_num++;

- }

- for (int i = 0; i < 5; i++) {

- if (label == index[i]) {

- top5_num++;

- }

- }

-

- if (debug && i % show_step == 0) {

- std::cout << index[0] << " " << index[1] << " " << index[2] << " "

- << index[3] << " " << index[4] << std::endl;

- std::cout << output0_data[index[0]] << " " << output0_data[index[1]]

- << " " << output0_data[index[2]] << " "

- << output0_data[index[3]] << " " << output0_data[index[4]]

- << std::endl;

- std::cout << output0_data[630] << std::endl;

- }

- if (i % show_step == 0) {

- std::cout << "step " << i << "; top1 acc:" << top1_num / test_num

- << "; top5 acc:" << top5_num / test_num << std::endl;

- }

- }

- std::cout << "final result:" << std::endl;

- std::cout << "top1 acc:" << top1_num / test_num << std::endl;

- std::cout << "top5 acc:" << top5_num / test_num << std::endl;

- return 0;

-}

diff --git a/lite/tests/api/test_mobilenetv1_int8_mediatek_apu.cc b/lite/tests/api/test_mobilenetv1_int8_mediatek_apu.cc

new file mode 100644

index 0000000000000000000000000000000000000000..76b3722d2d6d4d15fb57a00b055d714ad8d2e1c5

--- /dev/null

+++ b/lite/tests/api/test_mobilenetv1_int8_mediatek_apu.cc

@@ -0,0 +1,102 @@

+// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#include

+#include

+#include

+#include "lite/api/lite_api_test_helper.h"

+#include "lite/api/paddle_api.h"

+#include "lite/api/paddle_use_kernels.h"

+#include "lite/api/paddle_use_ops.h"

+#include "lite/api/paddle_use_passes.h"

+#include "lite/api/test_helper.h"

+#include "lite/tests/api/ILSVRC2012_utility.h"

+#include "lite/utils/cp_logging.h"

+

+DEFINE_string(data_dir, "", "data dir");

+DEFINE_int32(iteration, 100, "iteration times to run");

+DEFINE_int32(batch, 1, "batch of image");

+DEFINE_int32(channel, 3, "image channel");

+

+namespace paddle {

+namespace lite {

+

+TEST(MobileNetV1, test_mobilenetv1_int8_mediatek_apu) {

+ lite_api::CxxConfig config;

+ config.set_model_dir(FLAGS_model_dir);

+ config.set_valid_places({lite_api::Place{TARGET(kARM), PRECISION(kFloat)},

+ lite_api::Place{TARGET(kARM), PRECISION(kInt8)},

+ lite_api::Place{TARGET(kAPU), PRECISION(kInt8)}});

+ auto predictor = lite_api::CreatePaddlePredictor(config);

+

+ std::string raw_data_dir = FLAGS_data_dir + std::string("/raw_data");

+ std::vector input_shape{

+ FLAGS_batch, FLAGS_channel, FLAGS_im_width, FLAGS_im_height};

+ auto raw_data = ReadRawData(raw_data_dir, input_shape, FLAGS_iteration);

+

+ int input_size = 1;

+ for (auto i : input_shape) {

+ input_size *= i;

+ }

+

+ for (int i = 0; i < FLAGS_warmup; ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ for (int j = 0; j < input_size; j++) {

+ data[j] = 0.f;

+ }

+ predictor->Run();

+ }

+

+ std::vector> out_rets;

+ out_rets.resize(FLAGS_iteration);

+ double cost_time = 0;

+ for (size_t i = 0; i < raw_data.size(); ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ memcpy(data, raw_data[i].data(), sizeof(float) * input_size);

+

+ double start = GetCurrentUS();

+ predictor->Run();

+ cost_time += GetCurrentUS() - start;

+

+ auto output_tensor = predictor->GetOutput(0);

+ auto output_shape = output_tensor->shape();

+ auto output_data = output_tensor->data();

+ ASSERT_EQ(output_shape.size(), 2UL);

+ ASSERT_EQ(output_shape[0], 1);

+ ASSERT_EQ(output_shape[1], 1000);

+

+ int output_size = output_shape[0] * output_shape[1];

+ out_rets[i].resize(output_size);

+ memcpy(&(out_rets[i].at(0)), output_data, sizeof(float) * output_size);

+ }

+

+ LOG(INFO) << "================== Speed Report ===================";

+ LOG(INFO) << "Model: " << FLAGS_model_dir << ", threads num " << FLAGS_threads

+ << ", warmup: " << FLAGS_warmup << ", batch: " << FLAGS_batch

+ << ", iteration: " << FLAGS_iteration << ", spend "

+ << cost_time / FLAGS_iteration / 1000.0 << " ms in average.";

+

+ std::string labels_dir = FLAGS_data_dir + std::string("/labels.txt");

+ float out_accuracy = CalOutAccuracy(out_rets, labels_dir);

+ ASSERT_GE(out_accuracy, 0.55f);

+}

+

+} // namespace lite

+} // namespace paddle

diff --git a/lite/tests/api/test_mobilenetv1_int8_rknpu.cc b/lite/tests/api/test_mobilenetv1_int8_rknpu.cc

deleted file mode 100644

index 8c123088b3f69560abf3555dd2e459af926426ef..0000000000000000000000000000000000000000

--- a/lite/tests/api/test_mobilenetv1_int8_rknpu.cc

+++ /dev/null

@@ -1,127 +0,0 @@

-// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#include

-#include

-#include

-#include

-#include

-#include "lite/api/paddle_api.h"

-#include "lite/api/paddle_use_kernels.h"

-#include "lite/api/paddle_use_ops.h"

-#include "lite/api/paddle_use_passes.h"

-

-inline double GetCurrentUS() {

- struct timeval time;

- gettimeofday(&time, NULL);

- return 1e+6 * time.tv_sec + time.tv_usec;

-}

-

-inline int64_t ShapeProduction(std::vector shape) {

- int64_t s = 1;

- for (int64_t dim : shape) {

- s *= dim;

- }

- return s;

-}

-

-int main(int argc, char** argv) {

- if (argc < 2) {

- std::cerr << "[ERROR] usage: ./" << argv[0]

- << " model_dir [thread_num] [warmup_times] [repeat_times] "

- "[input_data_path] [output_data_path]"

- << std::endl;

- return -1;

- }

- std::string model_dir = argv[1];

- int thread_num = 1;

- if (argc > 2) {

- thread_num = atoi(argv[2]);

- }

- int warmup_times = 5;

- if (argc > 3) {

- warmup_times = atoi(argv[3]);

- }

- int repeat_times = 10;

- if (argc > 4) {

- repeat_times = atoi(argv[4]);

- }

- std::string input_data_path;

- if (argc > 5) {

- input_data_path = argv[5];

- }

- std::string output_data_path;

- if (argc > 6) {

- output_data_path = argv[6];

- }

- paddle::lite_api::CxxConfig config;

- config.set_model_dir(model_dir);

- config.set_threads(thread_num);

- config.set_power_mode(paddle::lite_api::LITE_POWER_HIGH);

- config.set_valid_places(

- {paddle::lite_api::Place{

- TARGET(kARM), PRECISION(kFloat), DATALAYOUT(kNCHW)},

- paddle::lite_api::Place{

- TARGET(kARM), PRECISION(kInt8), DATALAYOUT(kNCHW)},

- paddle::lite_api::Place{

- TARGET(kARM), PRECISION(kInt8), DATALAYOUT(kNCHW)},

- paddle::lite_api::Place{

- TARGET(kRKNPU), PRECISION(kInt8), DATALAYOUT(kNCHW)}});

- auto predictor = paddle::lite_api::CreatePaddlePredictor(config);

-

- std::unique_ptr input_tensor(

- std::move(predictor->GetInput(0)));

- input_tensor->Resize({1, 3, 224, 224});

- auto input_data = input_tensor->mutable_data();

- auto input_size = ShapeProduction(input_tensor->shape());

- if (input_data_path.empty()) {

- for (int i = 0; i < input_size; i++) {

- input_data[i] = 1;

- }

- } else {

- std::fstream fs(input_data_path, std::ios::in);

- if (!fs.is_open()) {

- std::cerr << "open input data file failed." << std::endl;

- return -1;

- }

- for (int i = 0; i < input_size; i++) {

- fs >> input_data[i];

- }

- }

-

- for (int i = 0; i < warmup_times; ++i) {

- predictor->Run();

- }

-

- auto start = GetCurrentUS();

- for (int i = 0; i < repeat_times; ++i) {

- predictor->Run();

- }

-

- std::cout << "Model: " << model_dir << ", threads num " << thread_num

- << ", warmup times: " << warmup_times

- << ", repeat times: " << repeat_times << ", spend "

- << (GetCurrentUS() - start) / repeat_times / 1000.0

- << " ms in average." << std::endl;

-

- std::unique_ptr output_tensor(

- std::move(predictor->GetOutput(0)));

- auto output_data = output_tensor->data();

- auto output_size = ShapeProduction(output_tensor->shape());

- std::cout << "output data:";

- for (int i = 0; i < output_size; i += 100) {

- std::cout << "[" << i << "] " << output_data[i] << std::endl;

- }

- return 0;

-}

diff --git a/lite/tests/api/test_mobilenetv1_int8_rockchip_npu.cc b/lite/tests/api/test_mobilenetv1_int8_rockchip_npu.cc

new file mode 100644

index 0000000000000000000000000000000000000000..7b52e4398a5db2e11499a2a96a07ffe4971f6100

--- /dev/null

+++ b/lite/tests/api/test_mobilenetv1_int8_rockchip_npu.cc

@@ -0,0 +1,102 @@

+// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#include

+#include

+#include

+#include "lite/api/lite_api_test_helper.h"

+#include "lite/api/paddle_api.h"

+#include "lite/api/paddle_use_kernels.h"

+#include "lite/api/paddle_use_ops.h"

+#include "lite/api/paddle_use_passes.h"

+#include "lite/api/test_helper.h"

+#include "lite/tests/api/ILSVRC2012_utility.h"

+#include "lite/utils/cp_logging.h"

+

+DEFINE_string(data_dir, "", "data dir");

+DEFINE_int32(iteration, 100, "iteration times to run");

+DEFINE_int32(batch, 1, "batch of image");

+DEFINE_int32(channel, 3, "image channel");

+

+namespace paddle {

+namespace lite {

+

+TEST(MobileNetV1, test_mobilenetv1_int8_rockchip_apu) {

+ lite_api::CxxConfig config;

+ config.set_model_dir(FLAGS_model_dir);

+ config.set_valid_places({lite_api::Place{TARGET(kARM), PRECISION(kFloat)},

+ lite_api::Place{TARGET(kARM), PRECISION(kInt8)},

+ lite_api::Place{TARGET(kRKNPU), PRECISION(kInt8)}});

+ auto predictor = lite_api::CreatePaddlePredictor(config);

+

+ std::string raw_data_dir = FLAGS_data_dir + std::string("/raw_data");

+ std::vector input_shape{

+ FLAGS_batch, FLAGS_channel, FLAGS_im_width, FLAGS_im_height};

+ auto raw_data = ReadRawData(raw_data_dir, input_shape, FLAGS_iteration);

+

+ int input_size = 1;

+ for (auto i : input_shape) {

+ input_size *= i;

+ }

+

+ for (int i = 0; i < FLAGS_warmup; ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ for (int j = 0; j < input_size; j++) {

+ data[j] = 0.f;

+ }

+ predictor->Run();

+ }

+

+ std::vector> out_rets;

+ out_rets.resize(FLAGS_iteration);

+ double cost_time = 0;

+ for (size_t i = 0; i < raw_data.size(); ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ memcpy(data, raw_data[i].data(), sizeof(float) * input_size);

+

+ double start = GetCurrentUS();

+ predictor->Run();

+ cost_time += GetCurrentUS() - start;

+

+ auto output_tensor = predictor->GetOutput(0);

+ auto output_shape = output_tensor->shape();

+ auto output_data = output_tensor->data();

+ ASSERT_EQ(output_shape.size(), 2UL);

+ ASSERT_EQ(output_shape[0], 1);

+ ASSERT_EQ(output_shape[1], 1000);

+

+ int output_size = output_shape[0] * output_shape[1];

+ out_rets[i].resize(output_size);

+ memcpy(&(out_rets[i].at(0)), output_data, sizeof(float) * output_size);

+ }

+

+ LOG(INFO) << "================== Speed Report ===================";

+ LOG(INFO) << "Model: " << FLAGS_model_dir << ", threads num " << FLAGS_threads

+ << ", warmup: " << FLAGS_warmup << ", batch: " << FLAGS_batch

+ << ", iteration: " << FLAGS_iteration << ", spend "

+ << cost_time / FLAGS_iteration / 1000.0 << " ms in average.";

+

+ std::string labels_dir = FLAGS_data_dir + std::string("/labels.txt");

+ float out_accuracy = CalOutAccuracy(out_rets, labels_dir);

+ ASSERT_GE(out_accuracy, 0.52f);

+}

+

+} // namespace lite

+} // namespace paddle

diff --git a/lite/tests/api/test_mobilenetv2_fp32_huawei_kirin_npu.cc b/lite/tests/api/test_mobilenetv2_fp32_huawei_kirin_npu.cc

new file mode 100644

index 0000000000000000000000000000000000000000..11fa9df4206b65d0c82c4ab09dc35d302c13282b

--- /dev/null

+++ b/lite/tests/api/test_mobilenetv2_fp32_huawei_kirin_npu.cc

@@ -0,0 +1,101 @@

+// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#include

+#include

+#include

+#include "lite/api/lite_api_test_helper.h"

+#include "lite/api/paddle_api.h"

+#include "lite/api/paddle_use_kernels.h"

+#include "lite/api/paddle_use_ops.h"

+#include "lite/api/paddle_use_passes.h"

+#include "lite/api/test_helper.h"

+#include "lite/tests/api/ILSVRC2012_utility.h"

+#include "lite/utils/cp_logging.h"

+

+DEFINE_string(data_dir, "", "data dir");

+DEFINE_int32(iteration, 100, "iteration times to run");

+DEFINE_int32(batch, 1, "batch of image");

+DEFINE_int32(channel, 3, "image channel");

+

+namespace paddle {

+namespace lite {

+

+TEST(MobileNetV2, test_mobilenetv2_fp32_huawei_kirin_npu) {

+ lite_api::CxxConfig config;

+ config.set_model_dir(FLAGS_model_dir);

+ config.set_valid_places({lite_api::Place{TARGET(kARM), PRECISION(kFloat)},

+ lite_api::Place{TARGET(kNPU), PRECISION(kFloat)}});

+ auto predictor = lite_api::CreatePaddlePredictor(config);

+

+ std::string raw_data_dir = FLAGS_data_dir + std::string("/raw_data");

+ std::vector input_shape{

+ FLAGS_batch, FLAGS_channel, FLAGS_im_width, FLAGS_im_height};

+ auto raw_data = ReadRawData(raw_data_dir, input_shape, FLAGS_iteration);

+

+ int input_size = 1;

+ for (auto i : input_shape) {

+ input_size *= i;

+ }

+

+ for (int i = 0; i < FLAGS_warmup; ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ for (int j = 0; j < input_size; j++) {

+ data[j] = 0.f;

+ }

+ predictor->Run();

+ }

+

+ std::vector> out_rets;

+ out_rets.resize(FLAGS_iteration);

+ double cost_time = 0;

+ for (size_t i = 0; i < raw_data.size(); ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ memcpy(data, raw_data[i].data(), sizeof(float) * input_size);

+

+ double start = GetCurrentUS();

+ predictor->Run();

+ cost_time += GetCurrentUS() - start;

+

+ auto output_tensor = predictor->GetOutput(0);

+ auto output_shape = output_tensor->shape();

+ auto output_data = output_tensor->data();

+ ASSERT_EQ(output_shape.size(), 2UL);

+ ASSERT_EQ(output_shape[0], 1);

+ ASSERT_EQ(output_shape[1], 1000);

+

+ int output_size = output_shape[0] * output_shape[1];

+ out_rets[i].resize(output_size);

+ memcpy(&(out_rets[i].at(0)), output_data, sizeof(float) * output_size);

+ }

+

+ LOG(INFO) << "================== Speed Report ===================";

+ LOG(INFO) << "Model: " << FLAGS_model_dir << ", threads num " << FLAGS_threads

+ << ", warmup: " << FLAGS_warmup << ", batch: " << FLAGS_batch

+ << ", iteration: " << FLAGS_iteration << ", spend "

+ << cost_time / FLAGS_iteration / 1000.0 << " ms in average.";

+

+ std::string labels_dir = FLAGS_data_dir + std::string("/labels.txt");

+ float out_accuracy = CalOutAccuracy(out_rets, labels_dir);

+ ASSERT_GE(out_accuracy, 0.57f);

+}

+

+} // namespace lite

+} // namespace paddle

diff --git a/lite/tests/api/test_resnet50_fp32_huawei_kirin_npu.cc b/lite/tests/api/test_resnet50_fp32_huawei_kirin_npu.cc

new file mode 100644

index 0000000000000000000000000000000000000000..af48c5c5dbad3ba3e5958fefddaf2b88c660e301

--- /dev/null

+++ b/lite/tests/api/test_resnet50_fp32_huawei_kirin_npu.cc

@@ -0,0 +1,101 @@

+// Copyright (c) 2019 PaddlePaddle Authors. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+#include

+#include

+#include

+#include "lite/api/lite_api_test_helper.h"

+#include "lite/api/paddle_api.h"

+#include "lite/api/paddle_use_kernels.h"

+#include "lite/api/paddle_use_ops.h"

+#include "lite/api/paddle_use_passes.h"

+#include "lite/api/test_helper.h"

+#include "lite/tests/api/ILSVRC2012_utility.h"

+#include "lite/utils/cp_logging.h"

+

+DEFINE_string(data_dir, "", "data dir");

+DEFINE_int32(iteration, 100, "iteration times to run");

+DEFINE_int32(batch, 1, "batch of image");

+DEFINE_int32(channel, 3, "image channel");

+

+namespace paddle {

+namespace lite {

+

+TEST(ResNet50, test_resnet50_fp32_huawei_kirin_npu) {

+ lite_api::CxxConfig config;

+ config.set_model_dir(FLAGS_model_dir);

+ config.set_valid_places({lite_api::Place{TARGET(kARM), PRECISION(kFloat)},

+ lite_api::Place{TARGET(kNPU), PRECISION(kFloat)}});

+ auto predictor = lite_api::CreatePaddlePredictor(config);

+

+ std::string raw_data_dir = FLAGS_data_dir + std::string("/raw_data");

+ std::vector input_shape{

+ FLAGS_batch, FLAGS_channel, FLAGS_im_width, FLAGS_im_height};

+ auto raw_data = ReadRawData(raw_data_dir, input_shape, FLAGS_iteration);

+

+ int input_size = 1;

+ for (auto i : input_shape) {

+ input_size *= i;

+ }

+

+ for (int i = 0; i < FLAGS_warmup; ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ for (int j = 0; j < input_size; j++) {

+ data[j] = 0.f;

+ }

+ predictor->Run();

+ }

+

+ std::vector> out_rets;

+ out_rets.resize(FLAGS_iteration);

+ double cost_time = 0;

+ for (size_t i = 0; i < raw_data.size(); ++i) {

+ auto input_tensor = predictor->GetInput(0);

+ input_tensor->Resize(

+ std::vector(input_shape.begin(), input_shape.end()));

+ auto* data = input_tensor->mutable_data();

+ memcpy(data, raw_data[i].data(), sizeof(float) * input_size);

+

+ double start = GetCurrentUS();

+ predictor->Run();

+ cost_time += GetCurrentUS() - start;

+

+ auto output_tensor = predictor->GetOutput(0);

+ auto output_shape = output_tensor->shape();

+ auto output_data = output_tensor->data();

+ ASSERT_EQ(output_shape.size(), 2UL);

+ ASSERT_EQ(output_shape[0], 1);

+ ASSERT_EQ(output_shape[1], 1000);

+

+ int output_size = output_shape[0] * output_shape[1];

+ out_rets[i].resize(output_size);

+ memcpy(&(out_rets[i].at(0)), output_data, sizeof(float) * output_size);

+ }

+

+ LOG(INFO) << "================== Speed Report ===================";

+ LOG(INFO) << "Model: " << FLAGS_model_dir << ", threads num " << FLAGS_threads

+ << ", warmup: " << FLAGS_warmup << ", batch: " << FLAGS_batch

+ << ", iteration: " << FLAGS_iteration << ", spend "

+ << cost_time / FLAGS_iteration / 1000.0 << " ms in average.";

+

+ std::string labels_dir = FLAGS_data_dir + std::string("/labels.txt");

+ float out_accuracy = CalOutAccuracy(out_rets, labels_dir);

+ ASSERT_GE(out_accuracy, 0.64f);

+}

+

+} // namespace lite

+} // namespace paddle

diff --git a/lite/tests/kernels/CMakeLists.txt b/lite/tests/kernels/CMakeLists.txt

index ad909bef694bfa5a36370abc6869d6a482e4c52b..bff045522f6a057bcfd0801eb87289ebb4e62b7d 100644

--- a/lite/tests/kernels/CMakeLists.txt

+++ b/lite/tests/kernels/CMakeLists.txt

@@ -1,100 +1,100 @@

-if((NOT LITE_WITH_OPENCL AND NOT LITE_WITH_FPGA AND NOT LITE_WITH_BM AND NOT LITE_WITH_MLU AND NOT LITE_WITH_RKNPU) AND (LITE_WITH_X86 OR LITE_WITH_ARM))

- lite_cc_test(test_kernel_conv_compute SRCS conv_compute_test.cc DEPS arena_framework ${xpu_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${x86_kernels} ${cuda_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

- lite_cc_test(test_kernel_conv_transpose_compute SRCS conv_transpose_compute_test.cc DEPS arena_framework ${xpu_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${x86_kernels} ${cuda_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

- lite_cc_test(test_kernel_scale_compute SRCS scale_compute_test.cc DEPS arena_framework ${xpu_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${x86_kernels} ${cuda_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

- lite_cc_test(test_kernel_power_compute SRCS power_compute_test.cc DEPS arena_framework ${xpu_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${x86_kernels} ${cuda_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

- lite_cc_test(test_kernel_shuffle_channel_compute SRCS shuffle_channel_compute_test.cc DEPS arena_framework ${xpu_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${x86_kernels} ${cuda_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

- lite_cc_test(test_kernel_yolo_box_compute SRCS yolo_box_compute_test.cc DEPS arena_framework ${xpu_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${x86_kernels} ${cuda_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

- lite_cc_test(test_kernel_fc_compute SRCS fc_compute_test.cc DEPS arena_framework ${xpu_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${x86_kernels} ${cuda_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

- lite_cc_test(test_kernel_elementwise_compute SRCS elementwise_compute_test.cc DEPS arena_framework ${xpu_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${x86_kernels} ${cuda_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})

- lite_cc_test(test_kernel_lrn_compute SRCS lrn_compute_test.cc DEPS arena_framework ${xpu_kernels} ${npu_kernels} ${huawei_ascend_npu_kernels} ${x86_kernels} ${cuda_kernels} ${arm_kernels} ${lite_ops} ${host_kernels})