/api' |

+| open_browser | boolean | 是否打开浏览器,设置为True则在启动后自动打开浏览器并访问VisualDL面板,若设置api_only,则忽略此参数 |

针对上一步生成的日志,我们的启动脚本为:

@@ -155,7 +186,7 @@ app.run(logdir="./log")

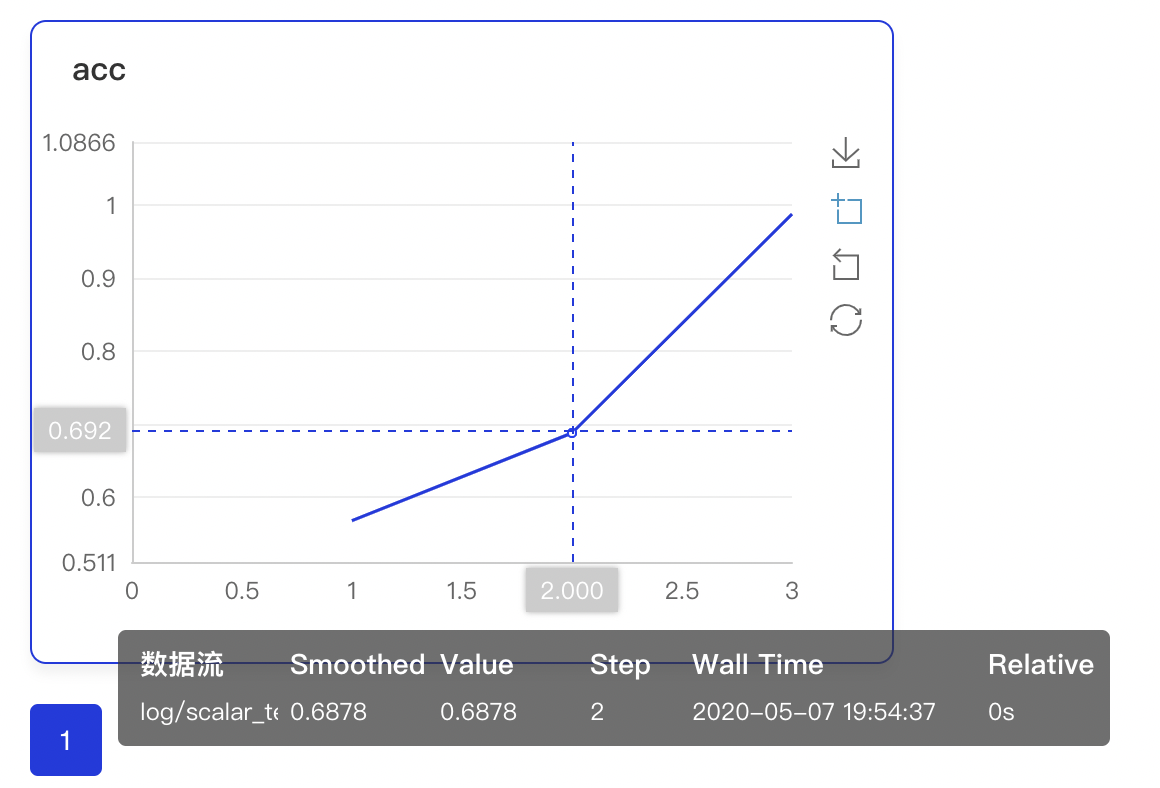

在使用任意一种方式启动VisualDL面板后,打开浏览器访问VisualDL面板,即可查看日志的可视化结果,如图:

-

@@ -163,27 +194,31 @@ app.run(logdir="./log")

## 可视化功能概览

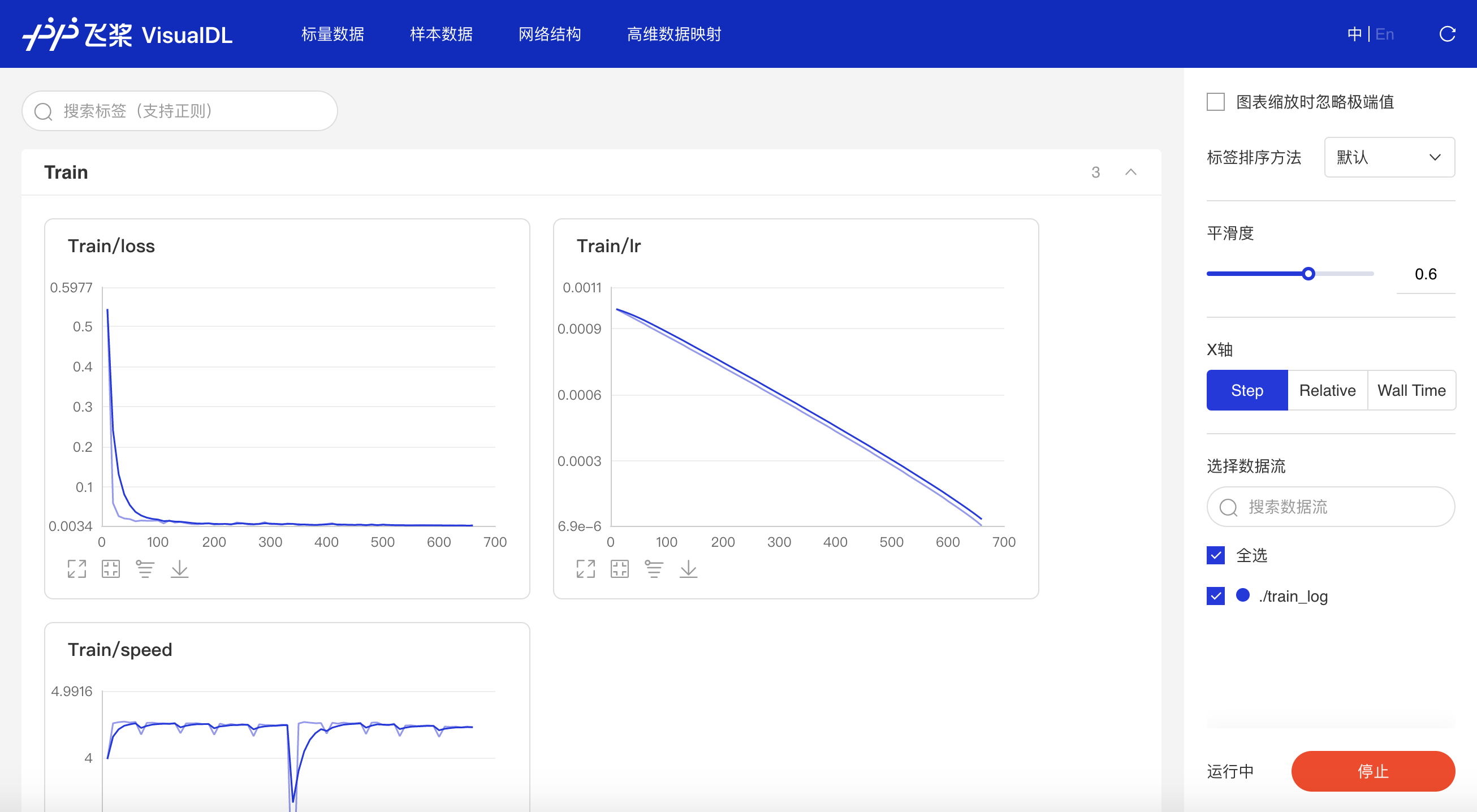

### Scalar

+

以图表形式实时展示训练过程参数,如loss、accuracy。让用户通过观察单组或多组训练参数变化,了解训练过程,加速模型调优。具有两大特点:

#### 动态展示

-在启动VisualDL Board后,LogReader将不断增量的读取日志中数据并供前端调用展示,因此能够在训练中同步观测指标变化,如下图:

+在启动VisualDL后,LogReader将不断增量的读取日志中数据并供前端调用展示,因此能够在训练中同步观测指标变化,如下图:

+

#### 多实验对比

-只需在启动VisualDL Board的时将每个实验日志所在路径同时传入即可,每个实验中相同tag的指标将绘制在一张图中同步呈现,如下图:

+只需在启动VisualDL时将每个实验日志所在路径同时传入即可,每个实验中相同tag的指标将绘制在一张图中同步呈现,如下图:

+

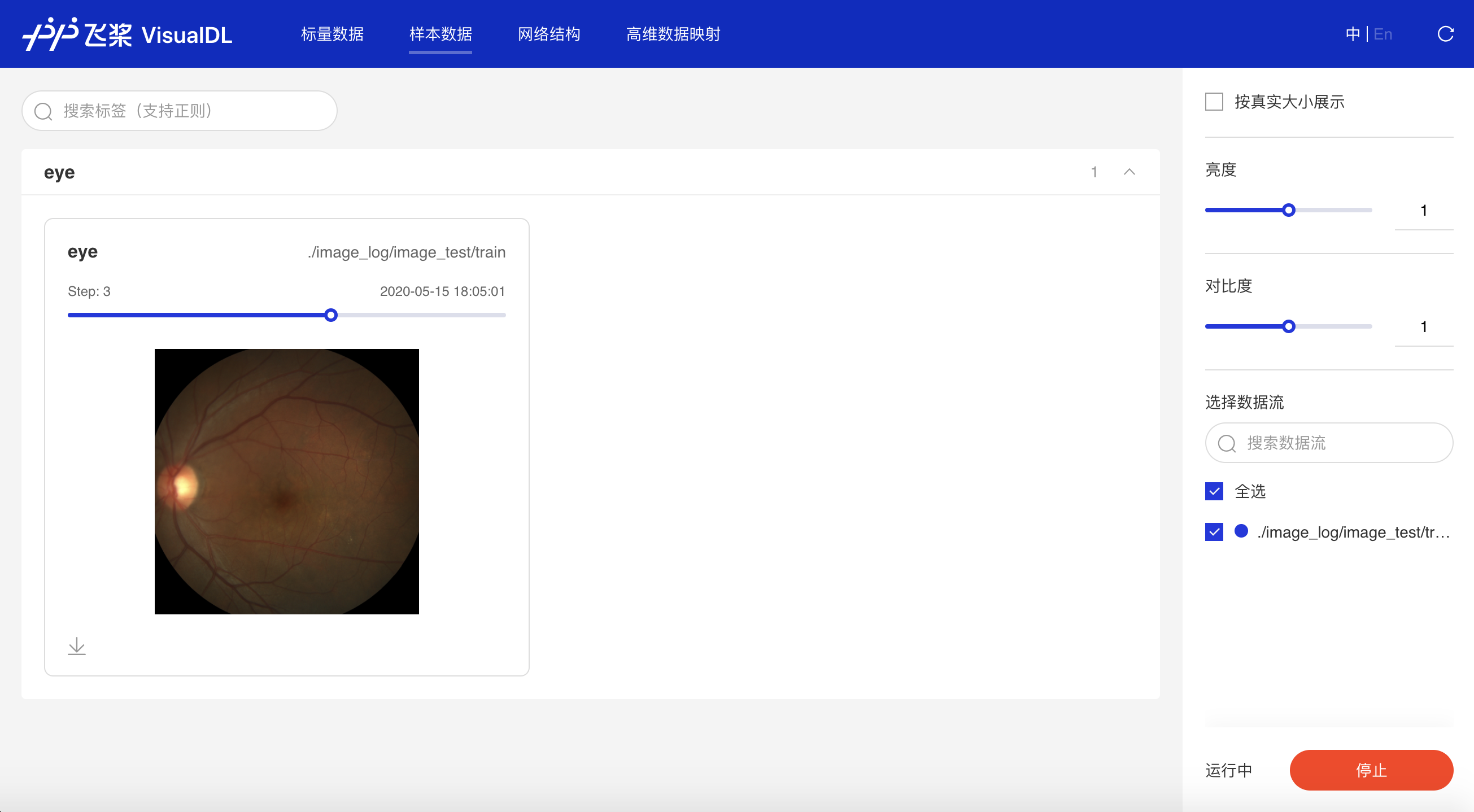

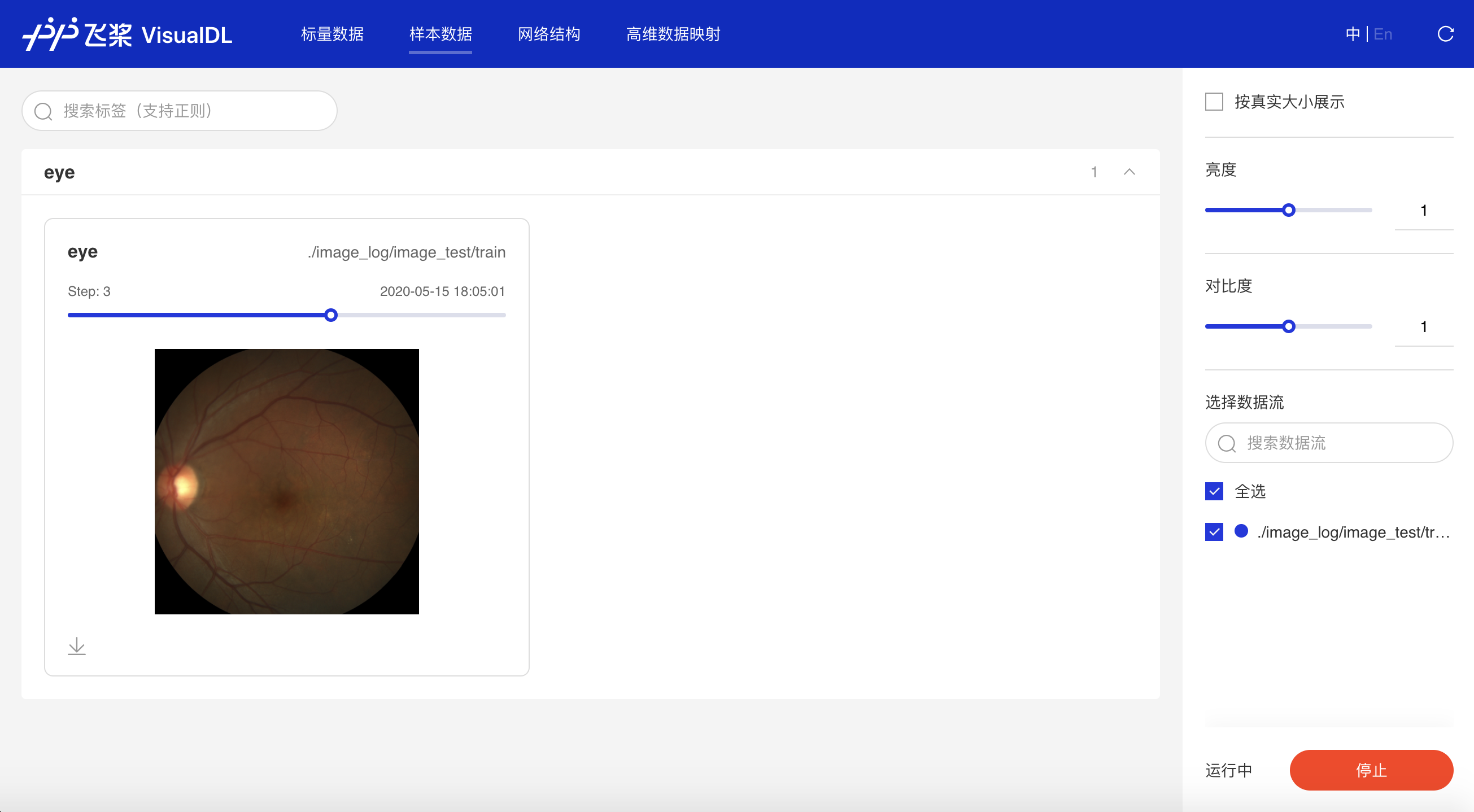

### Image

+

实时展示训练过程中的图像数据,用于观察不同训练阶段的图像变化,进而深入了解训练过程及效果。

@@ -191,6 +226,56 @@ app.run(logdir="./log")

+

+### Audio

+

+实时查看训练过程中的音频数据,监控语音识别与合成等任务的训练过程。

+

+

+

+

+

+

+### Graph

+

+一键可视化模型的网络结构。可查看模型属性、节点信息、节点输入输出等,并支持节点搜索,辅助用户快速分析模型结构与了解数据流向。

+

+

+

+

+

+

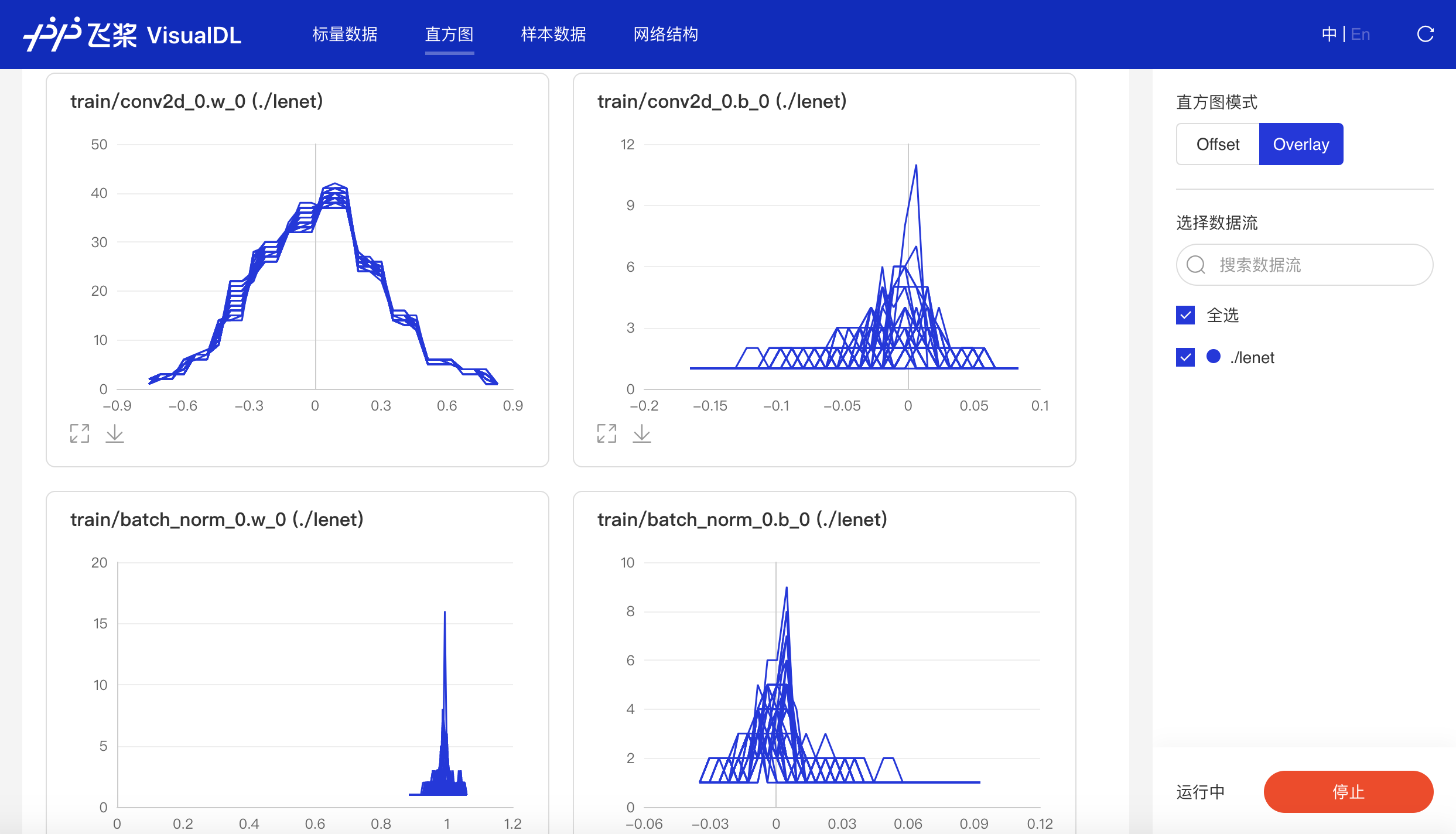

+### Histogram

+

+以直方图形式展示Tensor(weight、bias、gradient等)数据在训练过程中的变化趋势。深入了解模型各层效果,帮助开发者精准调整模型结构。

+

+- Offset模式

+

+

+

+

+

+

+- Overlay模式

+

+

+

+

+

+

+### PR Curve

+

+精度-召回率曲线,帮助开发者权衡模型精度和召回率之间的平衡,设定最佳阈值。

+

+

+

+

+

### High Dimensional

将高维数据进行降维展示,目前支持T-SNE、PCA两种降维方式,用于深入分析高维数据间的关系,方便用户根据数据特征进行算法优化。

@@ -201,9 +286,15 @@ app.run(logdir="./log")

## 开源贡献

-VisualDL 是由 [PaddlePaddle](http://www.paddlepaddle.org/) 和 [ECharts](http://echarts.baidu.com/) 合作推出的开源项目。欢迎所有人使用,提意见以及贡献代码。

+VisualDL 是由 [PaddlePaddle](https://www.paddlepaddle.org/) 和 [ECharts](https://echarts.apache.org/) 合作推出的开源项目。

+Graph 相关功能由 [Netron](https://github.com/lutzroeder/netron) 提供技术支持。

+欢迎所有人使用,提意见以及贡献代码。

## 更多细节

-想了解更多关于VisualDL可视化功能的使用详情介绍,请查看**Visual DL 使用指南**。

+想了解更多关于VisualDL可视化功能的使用详情介绍,请查看**VisualDL使用指南**。

+

+## 技术交流

+

+欢迎您加入VisualDL官方QQ群:1045783368 与飞桨团队以及其他用户共同针对VisualDL进行讨论与交流。

diff --git a/doc/fluid/advanced_guide/evaluation_debugging/debug/visualdl_usage.md b/doc/fluid/advanced_guide/evaluation_debugging/debug/visualdl_usage.md

index f191aa8cef12caf67d2e42666683fb2155aae437..e6a6445e3d4a89501f236bba6cf5623304ab3024 100644

--- a/doc/fluid/advanced_guide/evaluation_debugging/debug/visualdl_usage.md

+++ b/doc/fluid/advanced_guide/evaluation_debugging/debug/visualdl_usage.md

@@ -1,20 +1,20 @@

-

-

# VisualDL 使用指南

### 概述

VisualDL 是一个面向深度学习任务设计的可视化工具。VisualDL 利用了丰富的图表来展示数据,用户可以更直观、清晰地查看数据的特征与变化趋势,有助于分析数据、及时发现错误,进而改进神经网络模型的设计。

-目前,VisualDL 支持 scalar, image, high dimensional 三个组件,项目正处于高速迭代中,敬请期待新组件的加入。

-

-| 组件名称 | 展示图表 | 作用 |

-| :----------------------------------------------------------: | :--------: | :----------------------------------------------------------- |

-| [ Scalar](#Scalar -- 折线图组件) | 折线图 | 动态展示损失函数值、准确率等标量数据 |

-| [Image](#Image -- 图片可视化组件) | 图片可视化 | 显示图片,可显示输入图片和处理后的结果,便于查看中间过程的变化 |

-| [High Dimensional](#High Dimensional -- 数据降维组件) | 数据降维 | 将高维数据映射到 2D/3D 空间来可视化嵌入,便于观察不同数据的相关性 |

-

+目前,VisualDL 支持 scalar, image, audio, graph, histogram, pr curve, high dimensional 七个组件,项目正处于高速迭代中,敬请期待新组件的加入。

+| 组件名称 | 展示图表 | 作用 |

+| :-------------------------------------------------: | :--------: | :----------------------------------------------------------- |

+| [ Scalar](#Scalar--标量组件) | 折线图 | 动态展示损失函数值、准确率等标量数据 |

+| [Image](#Image--图片可视化组件) | 图片可视化 | 显示图片,可显示输入图片和处理后的结果,便于查看中间过程的变化 |

+| [Audio](#Audio--音频播放组件) | 音频播放 | 播放训练过程中的音频数据,监控语音识别与合成等任务的训练过程 |

+| [Graph](#Graph--网络结构组件) | 网络结构 | 展示网络结构、节点属性及数据流向,辅助学习、优化网络结构 |

+| [Histogram](#Histogram--直方图组件) | 直方图 | 展示训练过程中权重、梯度等张量的分布 |

+| [PR Curve](#PR-Curve--PR曲线组件) | 折线图 | 权衡精度与召回率之间的平衡关系,便于选择最佳阈值 |

+| [High Dimensional](#High-Dimensional--数据降维组件) | 数据降维 | 将高维数据映射到 2D/3D 空间来可视化嵌入,便于观察不同数据的相关性 |

## Scalar -- 折线图组件

@@ -29,16 +29,22 @@ Scalar 组件的记录接口如下:

```python

add_scalar(tag, value, step, walltime=None)

```

+

接口参数说明如下:

-|参数|格式|含义|

-|-|-|-|

-|tag|string|记录指标的标志,如`train/loss`,不能含有`%`|

-|value|float|要记录的数据值|

-|step|int|记录的步数|

-|walltime|int|记录数据的时间戳,默认为当前时间戳|

+

+| 参数 | 格式 | 含义 |

+| -------- | ------ | ------------------------------------------- |

+| tag | string | 记录指标的标志,如`train/loss`,不能含有`%` |

+| value | float | 要记录的数据值 |

+| step | int | 记录的步数 |

+| walltime | int | 记录数据的时间戳,默认为当前时间戳 |

### Demo

-下面展示了使用 Scalar 组件记录数据的示例,代码见[Scalar组件](../../demo/components/scalar_test.py)

+

+- 基础使用

+

+下面展示了使用 Scalar 组件记录数据的示例,代码文件请见[Scalar组件](https://github.com/PaddlePaddle/VisualDL/blob/develop/demo/components/scalar_test.py)

+

```python

from visualdl import LogWriter

@@ -52,7 +58,9 @@ if __name__ == '__main__':

# 向记录器添加一个tag为`loss`的数据

writer.add_scalar(tag="loss", step=step, value=1/(value[step] + 1))

```

+

运行上述程序后,在命令行执行

+

```shell

visualdl --logdir ./log --port 8080

```

@@ -60,11 +68,58 @@ visualdl --logdir ./log --port 8080

接着在浏览器打开`http://127.0.0.1:8080`,即可查看以下折线图。

-

+- 多组实验对比

+

+下面展示了使用Scalar组件实现多组实验对比

+

+多组实验对比的实现分为两步:

+

+1. 创建子日志文件储存每组实验的参数数据

+2. 将数据写入scalar组件时,**使用相同的tag**,即可实现对比**不同实验**的**同一类型参数**

+

+```python

+from visualdl import LogWriter

+

+if __name__ == '__main__':

+ value = [i/1000.0 for i in range(1000)]

+ # 步骤一:创建父文件夹:log与子文件夹:scalar_test

+ with LogWriter(logdir="./log/scalar_test") as writer:

+ for step in range(1000):

+ # 步骤二:向记录器添加一个tag为`train/acc`的数据

+ writer.add_scalar(tag="train/acc", step=step, value=value[step])

+ # 步骤二:向记录器添加一个tag为`train/loss`的数据

+ writer.add_scalar(tag="train/loss", step=step, value=1/(value[step] + 1))

+ # 步骤一:创建第二个子文件夹scalar_test2

+ value = [i/500.0 for i in range(1000)]

+ with LogWriter(logdir="./log/scalar_test2") as writer:

+ for step in range(1000):

+ # 步骤二:在同样名为`train/acc`下添加scalar_test2的accuracy的数据

+ writer.add_scalar(tag="train/acc", step=step, value=value[step])

+ # 步骤二:在同样名为`train/loss`下添加scalar_test2的loss的数据

+ writer.add_scalar(tag="train/loss", step=step, value=1/(value[step] + 1))

+```

+

+运行上述程序后,在命令行执行

+

+```shell

+visualdl --logdir ./log --port 8080

+```

+

+接着在浏览器打开`http://127.0.0.1:8080`,即可查看以下折线图,对比「scalar_test」和「scalar_test2」的Accuracy和Loss。

+

+

+

+

+

+*多组实验对比的应用案例可参考AI Studio项目:[VisualDL 2.0--眼疾识别训练可视化](https://aistudio.baidu.com/aistudio/projectdetail/502834)

+

+

### 功能操作说明

* 支持数据卡片「最大化」、「还原」、「坐标系转化」(y轴对数坐标)、「下载」折线图

@@ -75,6 +130,8 @@ visualdl --logdir ./log --port 8080

+

+

* 数据点Hover展示详细信息

@@ -83,6 +140,8 @@ visualdl --logdir ./log --port 8080

+

+

* 可搜索卡片标签,展示目标图像

@@ -91,6 +150,8 @@ visualdl --logdir ./log --port 8080

+

+

* 可搜索打点数据标签,展示特定数据

@@ -98,6 +159,8 @@ visualdl --logdir ./log --port 8080

+

+

* X轴有三种衡量尺度

1. Step:迭代次数

@@ -107,6 +170,8 @@ visualdl --logdir ./log --port 8080

+

+

* 可调整曲线平滑度,以便更好的展现参数整体的变化趋势

@@ -114,6 +179,8 @@ visualdl --logdir ./log --port 8080

+

+

## Image -- 图片可视化组件

### 介绍

@@ -127,16 +194,20 @@ Image 组件的记录接口如下:

```python

add_image(tag, img, step, walltime=None)

```

+

接口参数说明如下:

-|参数|格式|含义|

-|-|-|-|

-|tag|string|记录指标的标志,如`train/loss`,不能含有`%`|

-|img|numpy.ndarray|以ndarray格式表示的图片|

-|step|int|记录的步数|

-|walltime|int|记录数据的时间戳,默认为当前时间戳|

+

+| 参数 | 格式 | 含义 |

+| -------- | ------------- | ------------------------------------------- |

+| tag | string | 记录指标的标志,如`train/loss`,不能含有`%` |

+| img | numpy.ndarray | 以ndarray格式表示的图片 |

+| step | int | 记录的步数 |

+| walltime | int | 记录数据的时间戳,默认为当前时间戳 |

### Demo

-下面展示了使用 Image 组件记录数据的示例,代码文件请见[Image组件](../../demo/components/image_test.py)

+

+下面展示了使用 Image 组件记录数据的示例,代码文件请见[Image组件](https://github.com/PaddlePaddle/VisualDL/blob/develop/demo/components/image_test.py)

+

```python

import numpy as np

from PIL import Image

@@ -159,11 +230,13 @@ if __name__ == '__main__':

with LogWriter(logdir="./log/image_test/train") as writer:

for step in range(6):

# 添加一个图片数据

- writer.add_image(tag="doge",

+ writer.add_image(tag="eye",

img=random_crop("../../docs/images/eye.jpg"),

step=step)

```

+

运行上述程序后,在命令行执行

+

```shell

visualdl --logdir ./log --port 8080

```

@@ -171,10 +244,12 @@ visualdl --logdir ./log --port 8080

在浏览器输入`http://127.0.0.1:8080`,即可查看图片数据。

-

+

+

### 功能操作说明

可搜索图片标签显示对应图片数据

@@ -184,6 +259,8 @@ visualdl --logdir ./log --port 8080

+

+

支持滑动Step/迭代次数查看不同迭代次数下的图片数据

@@ -191,6 +268,442 @@ visualdl --logdir ./log --port 8080

+

+

+## Audio--音频播放组件

+

+### 介绍

+

+Audio组件实时查看训练过程中的音频数据,监控语音识别与合成等任务的训练过程。

+

+### 记录接口

+

+Audio 组件的记录接口如下:

+

+```python

+add_audio(tag, audio_array, step, sample_rate)

+```

+

+接口参数说明如下:

+

+| 参数 | 格式 | 含义 |

+| ----------- | ------------- | ------------------------------------------ |

+| tag | string | 记录指标的标志,如`audio_tag`,不能含有`%` |

+| audio_arry | numpy.ndarray | 以ndarray格式表示的音频 |

+| step | int | 记录的步数 |

+| sample_rate | int | 采样率,**注意正确填写对应音频的原采样率** |

+

+### Demo

+

+下面展示了使用 Audio 组件记录数据的示例,代码文件请见[Audio组件](https://github.com/PaddlePaddle/VisualDL/blob/develop/demo/components/audio_test.py)

+

+```python

+from visualdl import LogWriter

+import numpy as np

+import wave

+

+

+def read_audio_data(audio_path):

+ """

+ Get audio data.

+ """

+ CHUNK = 4096

+ f = wave.open(audio_path, "rb")

+ wavdata = []

+ chunk = f.readframes(CHUNK)

+ while chunk:

+ data = np.frombuffer(chunk, dtype='uint8')

+ wavdata.extend(data)

+ chunk = f.readframes(CHUNK)

+ # 8k sample rate, 16bit frame, 1 channel

+ shape = [8000, 2, 1]

+ return shape, wavdata

+

+

+if __name__ == '__main__':

+ with LogWriter(logdir="./log") as writer:

+ audio_shape, audio_data = read_audio_data("./testing.wav")

+ audio_data = np.array(audio_data)

+ writer.add_audio(tag="audio_tag",

+ audio_array=audio_data,

+ step=0,

+ sample_rate=8000)

+```

+

+运行上述程序后,在命令行执行

+

+```shell

+visualdl --logdir ./log --port 8080

+```

+

+在浏览器输入`http://127.0.0.1:8080`,即可查看音频数据。

+

+

+

+

+

+

+### 功能操作说明

+

+- 可搜索音频标签显示对应音频数据

+

+

+

+

+

+

+- 支持滑动Step/迭代次数试听不同迭代次数下的音频数据

+

+

+

+

+

+

+- 支持播放/暂停音频数据

+

+

+

+

+

+

+- 支持音量调节

+

+

+

+

+

+

+- 支持音频下载

+

+

+

+

+

+

+

+## Graph--网络结构组件

+

+### 介绍

+

+Graph组件一键可视化模型的网络结构。用于查看模型属性、节点信息、节点输入输出等,并进行节点搜索,协助开发者们快速分析模型结构与了解数据流向。

+

+### Demo

+

+共有两种启动方式:

+

+- 前端模型文件拖拽上传:

+

+ - 如只需使用Graph组件,则无需添加任何参数,在命令行执行`visualdl`后即可启动面板进行上传。

+ - 如果同时需使用其他功能,在命令行指定日志文件路径(以`./log`为例)即可启动面板进行上传:

+

+ ```shell

+ visualdl --logdir ./log --port 8080

+ ```

+

+

+

+

+

+

+- 后端启动Graph:

+

+ - 在命令行加入参数`--model`并指定**模型文件**路径(非文件夹路径),即可启动并查看网络结构可视化:

+

+ ```shell

+ visualdl --model ./log/model --port 8080

+ ```

+

+

+

+

+

+

+### 功能操作说明

+

+- 一键上传模型

+ - 支持模型格式:PaddlePaddle、ONNX、Keras、Core ML、Caffe、Caffe2、Darknet、MXNet、ncnn、TensorFlow Lite

+ - 实验性支持模型格式:TorchScript、PyTorch、Torch、 ArmNN、BigDL、Chainer、CNTK、Deeplearning4j、MediaPipe、ML.NET、MNN、OpenVINO、Scikit-learn、Tengine、TensorFlow.js、TensorFlow

+

+

+

+

+

+

+- 支持上下左右任意拖拽模型、放大和缩小模型

+

+

+

+

+

+

+- 搜索定位到对应节点

+

+

+

+

+

+

+- 点击查看模型属性

+

+

+

+

+

+

+

+

+

+

+

+- 支持选择模型展示的信息

+

+

+

+

+

+

+- 支持以PNG、SVG格式导出模型结构图

+

+

+

+

+

+

+- 点击节点即可展示对应属性信息

+

+

+

+

+

+

+- 支持一键更换模型

+

+

+

+

+

+

+## Histogram--直方图组件

+

+### 介绍

+

+Histogram组件以直方图形式展示Tensor(weight、bias、gradient等)数据在训练过程中的变化趋势。深入了解模型各层效果,帮助开发者精准调整模型结构。

+

+### 记录接口

+

+Histogram 组件的记录接口如下:

+

+```python

+add_histogram(tag, values, step, walltime=None, buckets=10)

+```

+

+接口参数说明如下:

+

+| 参数 | 格式 | 含义 |

+| -------- | --------------------- | ------------------------------------------- |

+| tag | string | 记录指标的标志,如`train/loss`,不能含有`%` |

+| values | numpy.ndarray or list | 以ndarray或list格式表示的数据 |

+| step | int | 记录的步数 |

+| walltime | int | 记录数据的时间戳,默认为当前时间戳 |

+| buckets | int | 生成直方图的分段数,默认为10 |

+

+### Demo

+

+下面展示了使用 Histogram组件记录数据的示例,代码文件请见[Histogram组件](https://github.com/PaddlePaddle/VisualDL/blob/develop/demo/components/histogram_test.py)

+

+```python

+from visualdl import LogWriter

+import numpy as np

+

+

+if __name__ == '__main__':

+ values = np.arange(0, 1000)

+ with LogWriter(logdir="./log/histogram_test/train") as writer:

+ for index in range(1, 101):

+ interval_start = 1 + 2 * index / 100.0

+ interval_end = 6 - 2 * index / 100.0

+ data = np.random.uniform(interval_start, interval_end, size=(10000))

+ writer.add_histogram(tag='default tag',

+ values=data,

+ step=index,

+ buckets=10)

+```

+

+运行上述程序后,在命令行执行

+

+```shell

+visualdl --logdir ./log --port 8080

+```

+

+在浏览器输入`http://127.0.0.1:8080`,即可查看训练参数直方图。

+

+### 功能操作说明

+

+- 支持数据卡片「最大化」、直方图「下载」

+

+

+

+

+- 可选择Offset或Overlay模式

+

+

+

+

+

+ - Offset模式

+

+

+

+

+

+

+ - Overlay模式

+

+

+

+

+

+- 数据点Hover展示参数值、训练步数、频次

+

+ - 在第240次训练步数时,权重为-0.0031,且出现的频次是2734次

+

+

+

+

+- 可搜索卡片标签,展示目标直方图

+

+

+

+

+- 可搜索打点数据标签,展示特定数据流

+

+

+

+

+## PR Curve--PR曲线组件

+

+### 介绍

+

+PR Curve以折线图形式呈现精度与召回率的权衡分析,清晰直观了解模型训练效果,便于分析模型是否达到理想标准。

+

+### 记录接口

+

+PR Curve组件的记录接口如下:

+

+```python

+add_pr_curve(tag, labels, predictions, step=None, num_thresholds=10)

+```

+

+接口参数说明如下:

+

+| 参数 | 格式 | 含义 |

+| -------------- | --------------------- | ------------------------------------------- |

+| tag | string | 记录指标的标志,如`train/loss`,不能含有`%` |

+| labels | numpy.ndarray or list | 以ndarray或list格式表示的实际类别 |

+| predictions | numpy.ndarray or list | 以ndarray或list格式表示的预测类别 |

+| step | int | 记录的步数 |

+| num_thresholds | int | 阈值设置的个数,默认为10,最大值为127 |

+

+### Demo

+

+下面展示了使用 PR Curve 组件记录数据的示例,代码文件请见[PR Curve组件](#https://github.com/PaddlePaddle/VisualDL/blob/develop/demo/components/pr_curve_test.py)

+

+```python

+from visualdl import LogWriter

+import numpy as np

+

+with LogWriter("./log/pr_curve_test/train") as writer:

+ for step in range(3):

+ labels = np.random.randint(2, size=100)

+ predictions = np.random.rand(100)

+ writer.add_pr_curve(tag='pr_curve',

+ labels=labels,

+ predictions=predictions,

+ step=step,

+ num_thresholds=5)

+```

+

+运行上述程序后,在命令行执行

+

+```shell

+visualdl --logdir ./log --port 8080

+```

+

+接着在浏览器打开`http://127.0.0.1:8080`,即可查看PR Curve

+

+

+

+

+

+

+### 功能操作说明

+

+- 支持数据卡片「最大化」,「还原」、「下载」PR曲线

+

+

+

+

+- 数据点Hover展示详细信息:阈值对应的TP、TN、FP、FN

+

+

+

+

+- 可搜索卡片标签,展示目标图表

+

+

+

+

+- 可搜索打点数据标签,展示特定数据

+

+

+

+

+

+- 支持查看不同训练步数下的PR曲线

+

+

+

+

+- X轴-时间显示类型有三种衡量尺度

+

+ - Step:迭代次数

+ - Walltime:训练绝对时间

+ - Relative:训练时长

+

+

+

+

## High Dimensional -- 数据降维组件

### 介绍

@@ -207,16 +720,20 @@ High Dimensional 组件的记录接口如下:

```python

add_embeddings(tag, labels, hot_vectors, walltime=None)

```

+

接口参数说明如下:

-|参数|格式|含义|

-|-|-|-|

-|tag|string|记录指标的标志,如`default`,不能含有`%`|

-|labels|numpy.array 或 list|一维数组表示的标签,每个元素是一个string类型的字符串|

-|hot_vectors|numpy.array or list|与labels一一对应,每个元素可以看作是某个标签的特征|

-|walltime|int|记录数据的时间戳,默认为当前时间戳|

+

+| 参数 | 格式 | 含义 |

+| ----------- | ------------------- | ---------------------------------------------------- |

+| tag | string | 记录指标的标志,如`default`,不能含有`%` |

+| labels | numpy.array 或 list | 一维数组表示的标签,每个元素是一个string类型的字符串 |

+| hot_vectors | numpy.array or list | 与labels一一对应,每个元素可以看作是某个标签的特征 |

+| walltime | int | 记录数据的时间戳,默认为当前时间戳 |

### Demo

-下面展示了使用 High Dimensional 组件记录数据的示例,代码见[High Dimensional组件](../../demo/components/high_dimensional_test.py)

+

+下面展示了使用 High Dimensional 组件记录数据的示例,代码文件请见[High Dimensional组件](https://github.com/PaddlePaddle/VisualDL/blob/develop/demo/components/high_dimensional_test.py)

+

```python

from visualdl import LogWriter

@@ -237,7 +754,9 @@ if __name__ == '__main__':

labels=labels,

hot_vectors=hot_vectors)

```

+

运行上述程序后,在命令行执行

+

```shell

visualdl --logdir ./log --port 8080

```

@@ -245,5 +764,11 @@ visualdl --logdir ./log --port 8080

接着在浏览器打开`http://127.0.0.1:8080`,即可查看降维后的可视化数据。

-

+

+

+

+

+

+#

diff --git a/doc/fluid/advanced_guide/flags/flags_cn.rst b/doc/fluid/advanced_guide/flags/flags_cn.rst

index 6968eaddcdd44f689f108deb3f932a90471974bf..5d0d414725666c1d90f5d58c26dc4536f08f439f 100644

--- a/doc/fluid/advanced_guide/flags/flags_cn.rst

+++ b/doc/fluid/advanced_guide/flags/flags_cn.rst

@@ -2,11 +2,22 @@

环境变量FLAGS

==================

+调用说明

+----------

+

+PaddlePaddle中的环境变量FLAGS支持两种设置方式。

+

+- 通过export来设置环境变量,如 :code:`export FLAGS_eager_delete_tensor_gb = 1.0` 。

+

+- 通过API::code:`get_flag` 和 :code:`set_flags` 来打印和设置环境变量FLAGS。API使用详情请参考 :ref:`cn_api_fluid_get_flags` 与 :ref:`cn_api_fluid_set_flags` 。

+

+

+环境变量FLAGS功能分类

+----------------------

.. toctree::

:maxdepth: 1

-

cudnn_cn.rst

data_cn.rst

debug_cn.rst

diff --git a/doc/fluid/advanced_guide/flags/flags_en.rst b/doc/fluid/advanced_guide/flags/flags_en.rst

index 247c38f16d3cd73b498f0cfa32c8cc05767160dd..b24c551c78d7bc74a76901c717b792f78b4237e3 100644

--- a/doc/fluid/advanced_guide/flags/flags_en.rst

+++ b/doc/fluid/advanced_guide/flags/flags_en.rst

@@ -2,6 +2,17 @@

FLAGS

==================

+Usage

+------

+These FLAGS in PaddlePaddle can be set in two ways.

+

+- Set the FLAGS through export. For example: :code:`export FLAGS_eager_delete_tensor_gb = 1.0` .

+

+- Through :code:`get_flags` and :code:`set_flags` to print and set the environment variables. For more information of using these API, please refer to :ref:`api_fluid_get_flags` and :ref:`api_fluid_get_flags` .

+

+

+FLAGS Quick Search

+------------------

.. toctree::

:maxdepth: 1

diff --git a/doc/fluid/advanced_guide/flags/memory_cn.rst b/doc/fluid/advanced_guide/flags/memory_cn.rst

index cbafa94a0e5b28570cbb16a92f17a947bd3458fd..94676721c2d0baca9a2d744e7dbc7064c7eed279 100644

--- a/doc/fluid/advanced_guide/flags/memory_cn.rst

+++ b/doc/fluid/advanced_guide/flags/memory_cn.rst

@@ -11,13 +11,14 @@ FLAGS_allocator_strategy

取值范围

---------------

-String型,['naive_best_fit', 'auto_growth']中的一个。缺省值为'naive_best_fit'。

+String型,['naive_best_fit', 'auto_growth']中的一个。缺省值如果编译Paddle CMake时使用-DON_INFER=ON为'naive_best_fit'。

+其他默认情况为'auto_growth'。PaddlePaddle pip安装包的默认策略也是'auto_growth'

示例

--------

-FLAGS_allocator_strategy=naive_best_fit - 使用预分配best fit分配器。

+FLAGS_allocator_strategy=naive_best_fit - 使用预分配best fit分配器,PaddlePaddle会先占用大多比例的可用内存/显存,在Paddle具体数据使用时分配,这种方式预占空间较大,但内存/显存碎片较少(比如能够支持模型的最大batch size会变大)。

-FLAGS_allocator_strategy=auto_growth - 使用auto growth分配器。

+FLAGS_allocator_strategy=auto_growth - 使用auto growth分配器。PaddlePaddle会随着真实数据需要再占用内存/显存,但内存/显存可能会产生碎片(比如能够支持模型的最大batch size会变小)。

FLAGS_eager_delete_scope

diff --git a/doc/fluid/advanced_guide/flags/memory_en.rst b/doc/fluid/advanced_guide/flags/memory_en.rst

index 8702a4082006ab05b0a983f3b117fba7617b558f..0e630e7d93d51e668397b9c88fbfd75ad45f9395 100644

--- a/doc/fluid/advanced_guide/flags/memory_en.rst

+++ b/doc/fluid/advanced_guide/flags/memory_en.rst

@@ -11,13 +11,13 @@ Use to choose allocator strategy of PaddlePaddle.

Values accepted

---------------

-String, enum in ['naive_best_fit', 'auto_growth']. The default value is 'naive_best_fit'.

+String, enum in ['naive_best_fit', 'auto_growth']. The default value will be 'naive_best_fit' if users compile PaddlePaddle with -DON_INFER=ON CMake flag, otherwise is 'auto_growth'. The default PaddlePaddle pip package uses 'auto_growth'.

Example

--------

-FLAGS_allocator_strategy=naive_best_fit would use the pre-allocated best fit allocator.

+FLAGS_allocator_strategy=naive_best_fit would use the pre-allocated best fit allocator. 'naive_best_fit' strategy would occupy almost all GPU memory by default but leads to less memory fragmentation (i.e., maximum batch size of models may be larger).

-FLAGS_allocator_strategy=auto_growth would use the auto growth allocator.

+FLAGS_allocator_strategy=auto_growth would use the auto growth allocator. 'auto_growth' strategy would allocate GPU memory on demand but may lead to more memory fragmentation (i.e., maximum batch size of models may be smaller).

diff --git a/doc/fluid/advanced_guide/index_cn.rst b/doc/fluid/advanced_guide/index_cn.rst

index 74bdd3d0da0669a9350bf22a24682111f766c559..d866963d281d2c6faba85ee053dce238c9c42355 100644

--- a/doc/fluid/advanced_guide/index_cn.rst

+++ b/doc/fluid/advanced_guide/index_cn.rst

@@ -11,4 +11,4 @@

:hidden:

inference_deployment/index_cn.rst

-

+ flags/flags_cn.rst

diff --git a/doc/fluid/advanced_guide/index_en.rst b/doc/fluid/advanced_guide/index_en.rst

index 0e926da511f6f79ae2eb2348a9a8f211e1136851..d6401bceb56b9c185ee0559aeb9aa3234436d9c8 100644

--- a/doc/fluid/advanced_guide/index_en.rst

+++ b/doc/fluid/advanced_guide/index_en.rst

@@ -16,5 +16,5 @@ So far you have already been familiar with PaddlePaddle. And the next expectatio

:hidden:

inference_deployment/index_en.rst

-

+ flags/flags_en.rst

diff --git a/doc/fluid/advanced_guide/inference_deployment/inference/build_and_install_lib_cn.rst b/doc/fluid/advanced_guide/inference_deployment/inference/build_and_install_lib_cn.rst

index a0fe4b9fa4d9bf571f061c77f39e2aa14447d651..c1bfba460db6c12651ac6a04f823812642490c9f 100644

--- a/doc/fluid/advanced_guide/inference_deployment/inference/build_and_install_lib_cn.rst

+++ b/doc/fluid/advanced_guide/inference_deployment/inference/build_and_install_lib_cn.rst

@@ -7,15 +7,15 @@

-------------

.. csv-table::

- :header: "版本说明", "预测库(1.8.1版本)", "预测库(develop版本)"

+ :header: "版本说明", "预测库(1.8.3版本)", "预测库(develop版本)"

:widths: 3, 2, 2

- "ubuntu14.04_cpu_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cpu_avx_openblas", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cpu_noavx_openblas", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cuda9.0_cudnn7_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cuda10.0_cudnn7_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cuda10.1_cudnn7.6_avx_mkl_trt6", "`fluid_inference.tgz `_",

+ "ubuntu14.04_cpu_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cpu_avx_openblas", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cpu_noavx_openblas", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cuda9.0_cudnn7_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cuda10.0_cudnn7_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cuda10.1_cudnn7.6_avx_mkl_trt6", "`fluid_inference.tgz `_",

"nv-jetson-cuda10-cudnn7.5-trt5", "`fluid_inference.tar.gz `_",

diff --git a/doc/fluid/advanced_guide/inference_deployment/inference/build_and_install_lib_en.rst b/doc/fluid/advanced_guide/inference_deployment/inference/build_and_install_lib_en.rst

index 96205dc72b2657377ae728065e13cdcb15aa262d..545aba61360b0018e3d3a1c28f4e56f4f6005925 100644

--- a/doc/fluid/advanced_guide/inference_deployment/inference/build_and_install_lib_en.rst

+++ b/doc/fluid/advanced_guide/inference_deployment/inference/build_and_install_lib_en.rst

@@ -7,15 +7,15 @@ Direct Download and Installation

---------------------------------

.. csv-table:: c++ inference library list

- :header: "version description", "inference library(1.8.1 version)", "inference library(develop version)"

+ :header: "version description", "inference library(1.8.3 version)", "inference library(develop version)"

:widths: 3, 2, 2

- "ubuntu14.04_cpu_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cpu_avx_openblas", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cpu_noavx_openblas", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cuda9.0_cudnn7_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cuda10.0_cudnn7_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

- "ubuntu14.04_cuda10.1_cudnn7.6_avx_mkl_trt6", "`fluid_inference.tgz `_",

+ "ubuntu14.04_cpu_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cpu_avx_openblas", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cpu_noavx_openblas", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cuda9.0_cudnn7_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cuda10.0_cudnn7_avx_mkl", "`fluid_inference.tgz `_", "`fluid_inference.tgz `_"

+ "ubuntu14.04_cuda10.1_cudnn7.6_avx_mkl_trt6", "`fluid_inference.tgz `_",

"nv-jetson-cuda10-cudnn7.5-trt5", "`fluid_inference.tar.gz `_",

Build from Source Code

diff --git a/doc/fluid/advanced_guide/inference_deployment/inference/windows_cpp_inference.md b/doc/fluid/advanced_guide/inference_deployment/inference/windows_cpp_inference.md

index 71dc617d96e8f1b4076c90d6d570a6a864bab21e..417eaf1e182535b69596876be2ca8cfb3304f6bd 100644

--- a/doc/fluid/advanced_guide/inference_deployment/inference/windows_cpp_inference.md

+++ b/doc/fluid/advanced_guide/inference_deployment/inference/windows_cpp_inference.md

@@ -5,13 +5,13 @@

下载安装包与对应的测试环境

-------------

-| 版本说明 | 预测库(1.8.1版本) | 编译器 | 构建工具 | cuDNN | CUDA |

+| 版本说明 | 预测库(1.8.3版本) | 编译器 | 构建工具 | cuDNN | CUDA |

|:---------|:-------------------|:-------------------|:----------------|:--------|:-------|

-| cpu_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/mkl/cpu/fluid_inference_install_dir.zip) | MSVC 2015 update 3| CMake v3.16.0 |

-| cpu_avx_openblas | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/open/cpu/fluid_inference_install_dir.zip) | MSVC 2015 update 3| CMake v3.16.0 |

-| cuda9.0_cudnn7_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/mkl/post97/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 9.0 |

-| cuda9.0_cudnn7_avx_openblas | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/open/post97/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 9.0 |

-| cuda10.0_cudnn7_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/mkl/post107/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 10.0 |

+| cpu_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/mkl/cpu/fluid_inference_install_dir.zip) | MSVC 2015 update 3| CMake v3.16.0 |

+| cpu_avx_openblas | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/open/cpu/fluid_inference_install_dir.zip) | MSVC 2015 update 3| CMake v3.16.0 |

+| cuda9.0_cudnn7_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/mkl/post97/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 9.0 |

+| cuda9.0_cudnn7_avx_openblas | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/open/post97/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 9.0 |

+| cuda10.0_cudnn7_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/mkl/post107/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.4.1 | 10.0 |

### 硬件环境

diff --git a/doc/fluid/advanced_guide/inference_deployment/inference/windows_cpp_inference_en.md b/doc/fluid/advanced_guide/inference_deployment/inference/windows_cpp_inference_en.md

index 0f0e16fd3dc28e50419c83f276c12f49ee366f51..e25ae184810153421013c60c96c9533b00261ae0 100644

--- a/doc/fluid/advanced_guide/inference_deployment/inference/windows_cpp_inference_en.md

+++ b/doc/fluid/advanced_guide/inference_deployment/inference/windows_cpp_inference_en.md

@@ -5,13 +5,13 @@ Install and Compile C++ Inference Library on Windows

Direct Download and Install

-------------

-| Version | Inference Libraries(v1.8.1) | Compiler | Build tools | cuDNN | CUDA |

+| Version | Inference Libraries(v1.8.3) | Compiler | Build tools | cuDNN | CUDA |

|:---------|:-------------------|:-------------------|:----------------|:--------|:-------|

-| cpu_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/mkl/cpu/fluid_inference_install_dir.zip) | MSVC 2015 update 3| CMake v3.16.0 |

-| cpu_avx_openblas | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/open/cpu/fluid_inference_install_dir.zip) | MSVC 2015 update 3| CMake v3.16.0 |

-| cuda9.0_cudnn7_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/mkl/post97/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 9.0 |

-| cuda9.0_cudnn7_avx_openblas | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/open/post97/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 9.0 |

-| cuda10.0_cudnn7_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.1/win-infer/mkl/post107/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 10.0 |

+| cpu_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/mkl/cpu/fluid_inference_install_dir.zip) | MSVC 2015 update 3| CMake v3.16.0 |

+| cpu_avx_openblas | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/open/cpu/fluid_inference_install_dir.zip) | MSVC 2015 update 3| CMake v3.16.0 |

+| cuda9.0_cudnn7_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/mkl/post97/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 9.0 |

+| cuda9.0_cudnn7_avx_openblas | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/open/post97/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.3.1 | 9.0 |

+| cuda10.0_cudnn7_avx_mkl | [fluid_inference.zip](https://paddle-wheel.bj.bcebos.com/1.8.3/win-infer/mkl/post107/fluid_inference_install_dir.zip) | MSVC 2015 update 3 | CMake v3.16.0 | 7.4.1 | 10.0 |

### Hardware Environment

diff --git a/doc/fluid/advanced_guide/performance_improving/amp/amp.md b/doc/fluid/advanced_guide/performance_improving/amp/amp.md

new file mode 100644

index 0000000000000000000000000000000000000000..3a41a447f78cf3bc119abb7754292edbbc23050a

--- /dev/null

+++ b/doc/fluid/advanced_guide/performance_improving/amp/amp.md

@@ -0,0 +1,171 @@

+# 混合精度训练最佳实践

+

+Automatic Mixed Precision (AMP) 是一种自动混合使用半精度(FP16)和单精度(FP32)来加速模型训练的技术。AMP技术可方便用户快速将使用 FP32 训练的模型修改为使用混合精度训练,并通过黑白名单和动态`loss scaling`来保证训练时的数值稳定性进而避免梯度Infinite或者NaN(Not a Number)。借力于新一代NVIDIA GPU中Tensor Cores的计算性能,PaddlePaddle AMP技术在ResNet50、Transformer等模型上训练速度相对于FP32训练加速比可达1.5~2.9。

+

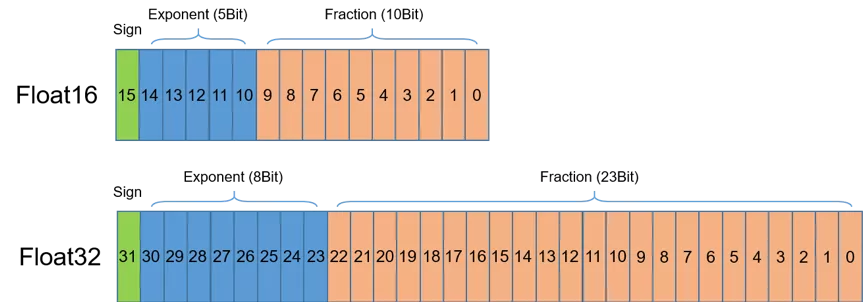

+### 半精度浮点类型FP16

+

+如图 1 所示,半精度(Float Precision16,FP16)是一种相对较新的浮点类型,在计算机中使用2字节(16位)存储。在IEEE 754-2008标准中,它亦被称作binary16。与计算中常用的单精度(FP32)和双精度(FP64)类型相比,FP16更适于在精度要求不高的场景中使用。

+

+

+ 图 1. 半精度和单精度数据示意图

+

+### 英伟达GPU的FP16算力

+

+在使用相同的超参数下,混合精度训练使用半精度浮点(FP16)和单精度(FP32)浮点即可达到与使用纯单精度训练相同的准确率,并可加速模型的训练速度。这主要得益于英伟达推出的Volta及Turing架构GPU在使用FP16计算时具有如下特点:

+

+* FP16可降低一半的内存带宽和存储需求,这使得在相同的硬件条件下研究人员可使用更大更复杂的模型以及更大的batch size大小。

+* FP16可以充分利用英伟达Volta及Turing架构GPU提供的Tensor Cores技术。在相同的GPU硬件上,Tensor Cores的FP16计算吞吐量是FP32的8倍。

+

+### PaddlePaddle AMP功能——牛刀小试

+

+如前文所述,使用FP16数据类型可能会造成计算精度上的损失,但对深度学习领域而言,并不是所有计算都要求很高的精度,一些局部的精度损失对最终训练效果影响很微弱,却能使吞吐和训练速度带来大幅提升。因此,混合精度计算的需求应运而生。具体而言,训练过程中将一些对精度损失不敏感且能利用Tensor Cores进行加速的运算使用半精度处理,而对精度损失敏感部分依然保持FP32计算精度,用以最大限度提升访存和计算效率。

+

+为了避免对每个具体模型人工地去设计和尝试精度混合的方法,PaddlePaadle框架提供自动混合精度训练(AMP)功能,解放"炼丹师"的双手。在PaddlePaddle中使用AMP训练是一件十分容易的事情,用户只需要增加一行代码即可将原有的FP32训练转变为AMP训练。下面以`MNIST`为例介绍PaddlePaddle AMP功能的使用示例。

+

+**MNIST网络定义**

+

+```python

+import paddle.fluid as fluid

+

+def MNIST(data, class_dim):

+ conv1 = fluid.layers.conv2d(data, 16, 5, 1, act=None, data_format='NHWC')

+ bn1 = fluid.layers.batch_norm(conv1, act='relu', data_layout='NHWC')

+ pool1 = fluid.layers.pool2d(bn1, 2, 'max', 2, data_format='NHWC')

+ conv2 = fluid.layers.conv2d(pool1, 64, 5, 1, act=None, data_format='NHWC')

+ bn2 = fluid.layers.batch_norm(conv2, act='relu', data_layout='NHWC')

+ pool2 = fluid.layers.pool2d(bn2, 2, 'max', 2, data_format='NHWC')

+ fc1 = fluid.layers.fc(pool2, size=64, act='relu')

+ fc2 = fluid.layers.fc(fc1, size=class_dim, act='softmax')

+ return fc2

+```

+

+针对CV(Computer Vision)类模型组网,为获得更高的训练性能需要注意如下三点:

+

+* `conv2d`、`batch_norm`以及`pool2d`等需要将数据布局设置为`NHWC`,这样有助于使用TensorCore技术加速计算过程1 2 3

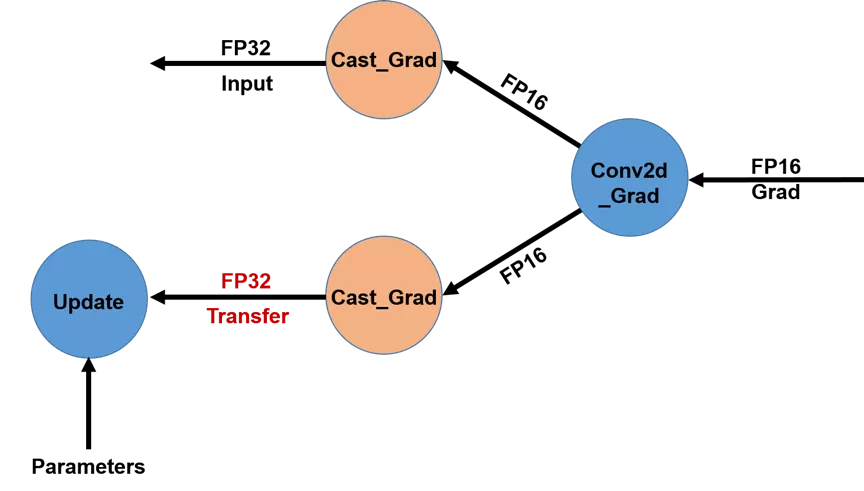

+ 图 2. 不同GPU卡之间传输梯度使用FP32数据类型(优化前)

+

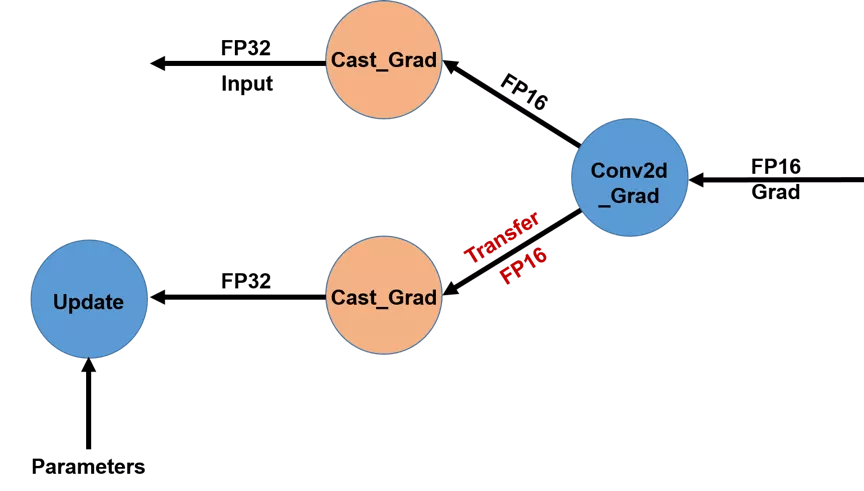

+为了降低GPU多卡之间的梯度传输带宽,我们将梯度传输提前至`Cast`操作之前,而每个GPU卡在得到对应的FP16梯度后再执行`Cast`操作将其转变为FP32类型,具体操作详见图2。这一优化在训练大模型时对减少带宽占用尤其有效,如多卡训练BERT-Large模型。

+

+

+ 图 3. 不同GPU卡之间传输梯度使用FP16数据类型(优化后)

+

+### 训练性能对比(AMP VS FP32)

+

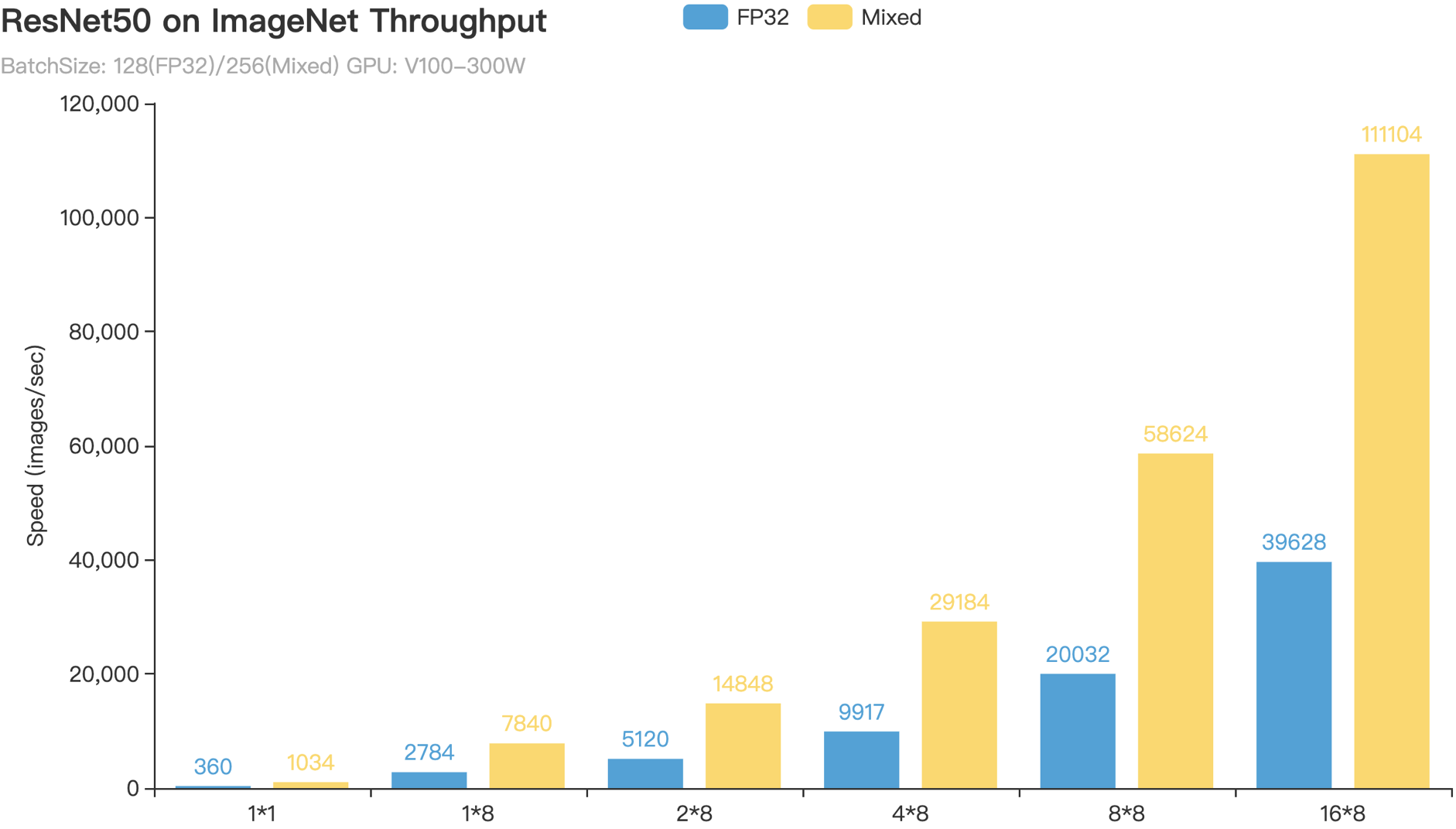

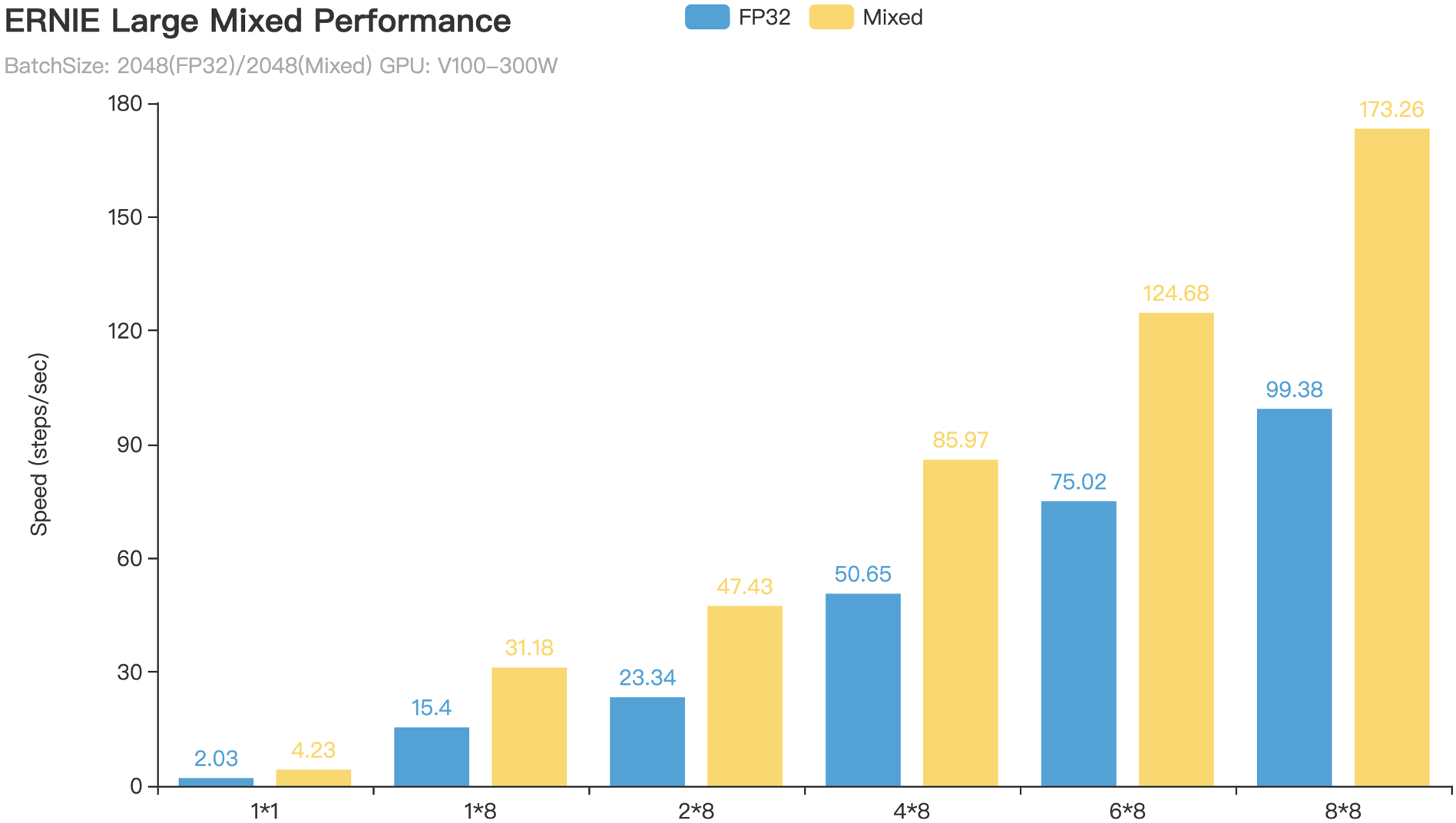

+PaddlePaddle AMP技术在ResNet50、Transformer等模型上训练速度相对于FP32训练上均有可观的加速比,下面是ResNet50和ERNIE Large模型的AMP训练相对于FP32训练的加速效果。

+

+

+图 4. Paddle AMP训练加速效果(横坐标为卡数,如8*8代表8机8卡)

+

+

+

+

+

+从图4所示的图表可以看出,ResNet50的AMP训练相对与FP32训练加速比可达$2.8 \times$以上,而ERNIE Large的AMP训练相对与FP32训练加速比亦可达 $1.7 \times -- 2.1 \times$ 。

+

+### 参考文献

+

+* Mixed Precision Training

+* 使用自动混合精度加速 PaddlePaddle 训练

+* Tensor Layouts In Memory: NCHW vs NHWC ↩

+* Channels In And Out Requirements ↩

+* Matrix-Matrix Multiplication Requirements ↩

diff --git a/doc/fluid/advanced_guide/performance_improving/index_cn.rst b/doc/fluid/advanced_guide/performance_improving/index_cn.rst

index a40594d13bf74398518f23ad923900ad1bed81d8..b50f091f8c70328d37c7cf3dc92a5b0f14a08f33 100644

--- a/doc/fluid/advanced_guide/performance_improving/index_cn.rst

+++ b/doc/fluid/advanced_guide/performance_improving/index_cn.rst

@@ -8,6 +8,7 @@

singlenode_training_improving/training_best_practice.rst

singlenode_training_improving/memory_optimize.rst

device_switching/device_switching.md

+ amp/amp.md

multinode_training_improving/cpu_train_best_practice.rst

multinode_training_improving/dist_training_gpu.rst

multinode_training_improving/gpu_training_with_recompute.rst

diff --git a/doc/fluid/api/dygraph.rst b/doc/fluid/api/dygraph.rst

index ac8ca052197935c9d23f2bab10ed5ceb26fa7f4b..397419a8bee35b419f7d23b7a7ce47eb764c56fd 100644

--- a/doc/fluid/api/dygraph.rst

+++ b/doc/fluid/api/dygraph.rst

@@ -31,6 +31,7 @@ fluid.dygraph

dygraph/guard.rst

dygraph/InstanceNorm.rst

dygraph/InverseTimeDecay.rst

+ dygraph/jit.rst

dygraph/Layer.rst

dygraph/LayerList.rst

dygraph/LayerNorm.rst

@@ -48,10 +49,12 @@ fluid.dygraph

dygraph/PRelu.rst

dygraph/prepare_context.rst

dygraph/ProgramTranslator.rst

+ dygraph/ReduceLROnPlateau.rst

dygraph/save_dygraph.rst

dygraph/Sequential.rst

dygraph/SpectralNorm.rst

dygraph/to_variable.rst

dygraph/TracedLayer.rst

dygraph/Tracer.rst

+ dygraph/TranslatedLayer.rst

dygraph/TreeConv.rst

diff --git a/doc/fluid/api/dygraph/ReduceLROnPlateau.rst b/doc/fluid/api/dygraph/ReduceLROnPlateau.rst

new file mode 100644

index 0000000000000000000000000000000000000000..d03ce41e1d45d51c2fe611c3f5607a399ca6cf3b

--- /dev/null

+++ b/doc/fluid/api/dygraph/ReduceLROnPlateau.rst

@@ -0,0 +1,12 @@

+.. THIS FILE IS GENERATED BY `gen_doc.{py|sh}`

+ !DO NOT EDIT THIS FILE MANUALLY!

+

+.. _api_fluid_dygraph_ReduceLROnPlateau:

+

+ReduceLROnPlateau

+-----------------

+

+.. autoclass:: paddle.fluid.dygraph.ReduceLROnPlateau

+ :members:

+ :noindex:

+

diff --git a/doc/fluid/api/dygraph/TranslatedLayer.rst b/doc/fluid/api/dygraph/TranslatedLayer.rst

new file mode 100644

index 0000000000000000000000000000000000000000..a6f7fd9411e5179999a8bda3f1ae197092343a7a

--- /dev/null

+++ b/doc/fluid/api/dygraph/TranslatedLayer.rst

@@ -0,0 +1,8 @@

+.. _api_fluid_dygraph_TranslatedLayer:

+

+TranslatedLayer

+-----------------------

+

+.. autoclass:: paddle.fluid.dygraph.TranslatedLayer

+ :members:

+ :noindex:

diff --git a/doc/fluid/api/dygraph/jit.rst b/doc/fluid/api/dygraph/jit.rst

new file mode 100644

index 0000000000000000000000000000000000000000..7853a048535c045bae18f71c8b4d7f1e44cc65eb

--- /dev/null

+++ b/doc/fluid/api/dygraph/jit.rst

@@ -0,0 +1,10 @@

+===

+jit

+===

+

+.. toctree::

+ :maxdepth: 1

+

+ jit/save.rst

+ jit/load.rst

+ jit/SaveLoadConfig.rst

diff --git a/doc/fluid/api/dygraph/jit/SaveLoadConfig.rst b/doc/fluid/api/dygraph/jit/SaveLoadConfig.rst

new file mode 100644

index 0000000000000000000000000000000000000000..e8d1d3bfbc35eca0c05594b540a0cd15c19cebe1

--- /dev/null

+++ b/doc/fluid/api/dygraph/jit/SaveLoadConfig.rst

@@ -0,0 +1,8 @@

+.. _api_fluid_dygraph_jit_SaveLoadConfig:

+

+SaveLoadConfig

+-------------------------------

+

+.. autoclass:: paddle.fluid.dygraph.jit.SaveLoadConfig

+ :members:

+ :noindex:

\ No newline at end of file

diff --git a/doc/fluid/api/dygraph/jit/load.rst b/doc/fluid/api/dygraph/jit/load.rst

new file mode 100644

index 0000000000000000000000000000000000000000..51f59909873dd46bb43e42bdc2258a990580c24c

--- /dev/null

+++ b/doc/fluid/api/dygraph/jit/load.rst

@@ -0,0 +1,7 @@

+.. _api_fluid_dygraph_jit_load:

+

+load

+------------

+

+.. autofunction:: paddle.fluid.dygraph.jit.load

+ :noindex:

diff --git a/doc/fluid/api/dygraph/jit/save.rst b/doc/fluid/api/dygraph/jit/save.rst

new file mode 100644

index 0000000000000000000000000000000000000000..fb55029c2870b8c56edd93c4907ae0894036eabe

--- /dev/null

+++ b/doc/fluid/api/dygraph/jit/save.rst

@@ -0,0 +1,7 @@

+.. _api_fluid_dygraph_jit_save:

+

+save

+------------

+

+.. autofunction:: paddle.fluid.dygraph.jit.save

+ :noindex:

diff --git a/doc/fluid/api/gen_doc.sh b/doc/fluid/api/gen_doc.sh

index fe9612775fd31bfda1fe409de47527fe3f7fee57..b2ea86c2a0a99290935d6d9c112edfe3d86da869 100644

--- a/doc/fluid/api/gen_doc.sh

+++ b/doc/fluid/api/gen_doc.sh

@@ -10,7 +10,7 @@ python gen_doc.py --module_name "" --module_prefix "" --output fluid --output_na

python gen_module_index.py fluid fluid

# tensor

-for module in math random stat

+for module in math random stat linalg search

do

python gen_doc.py --module_name ${module} --module_prefix ${module} --output ${module} --output_name tensor --to_multiple_files True --output_dir tensor

python gen_module_index.py tensor.${module} ${module}

diff --git a/doc/fluid/api/gen_index.py b/doc/fluid/api/gen_index.py

index 16bea3fd471e4d08ceb71d8a1150589f041292c9..4cc7272b03aa0fec3eefe543d7ff7ad791d6e1fd 100644

--- a/doc/fluid/api/gen_index.py

+++ b/doc/fluid/api/gen_index.py

@@ -4,7 +4,7 @@ import glob

import os

if __name__ == '__main__':

- with open('index_en.rst', 'w') as file_object:

+ with open('index_en.rst', 'w') as file_object:

file_object = open('index_en.rst', 'w')

file_object.write('''=============

API Reference

@@ -25,16 +25,16 @@ API Reference

else:

pattern = target_dir + '/*.rst'

file_names.extend(glob.glob(pattern))

-

+

for file_name in sorted(file_names):

- with open(file_name, 'r')as f:

+ with open(file_name, 'r') as f:

for i in range(2):

line = f.readline().strip()

if line.find('paddle.') != -1:

- file_object.write(' '+file_name + "\n")

+ file_object.write(' ' + file_name + "\n")

file_names.remove(file_name)

- file_object.write(' '+'fluid.rst' + "\n")

+ file_object.write(' ' + 'fluid.rst' + "\n")

for file_name in sorted(file_names):

- if file_name not in ['index_en.rst', 'fluid.rst']:

- file_object.write(' '+file_name + "\n")

+ if file_name not in ['index_en.rst']:

+ file_object.write(' ' + file_name + "\n")

diff --git a/doc/fluid/api/imperative.rst b/doc/fluid/api/imperative.rst

index 48aebcbfddf188d74dfa182559cffa25997bead4..f138e06701b138dc109dab2e3b1c17832658d390 100644

--- a/doc/fluid/api/imperative.rst

+++ b/doc/fluid/api/imperative.rst

@@ -13,6 +13,7 @@ paddle.imperative

imperative/grad.rst

imperative/guard.rst

imperative/InverseTimeDecay.rst

+ imperative/jit.rst

imperative/load.rst

imperative/NaturalExpDecay.rst

imperative/no_grad.rst

@@ -25,3 +26,4 @@ paddle.imperative

imperative/save.rst

imperative/to_variable.rst

imperative/TracedLayer.rst

+ imperative/TranslatedLayer.rst

diff --git a/doc/fluid/api/imperative/TranslatedLayer.rst b/doc/fluid/api/imperative/TranslatedLayer.rst

new file mode 100644

index 0000000000000000000000000000000000000000..0299a9f57392e267ae015947345249784fd929f5

--- /dev/null

+++ b/doc/fluid/api/imperative/TranslatedLayer.rst

@@ -0,0 +1,5 @@

+.. _api_imperative_TranslatedLayer:

+

+TranslatedLayer

+-------------------------------

+:doc_source: paddle.fluid.dygraph.io.TranslatedLayer

diff --git a/doc/fluid/api/imperative/jit.rst b/doc/fluid/api/imperative/jit.rst

new file mode 100644

index 0000000000000000000000000000000000000000..7853a048535c045bae18f71c8b4d7f1e44cc65eb

--- /dev/null

+++ b/doc/fluid/api/imperative/jit.rst

@@ -0,0 +1,10 @@

+===

+jit

+===

+

+.. toctree::

+ :maxdepth: 1

+

+ jit/save.rst

+ jit/load.rst

+ jit/SaveLoadConfig.rst

diff --git a/doc/fluid/api/imperative/jit/SaveLoadConfig.rst b/doc/fluid/api/imperative/jit/SaveLoadConfig.rst

new file mode 100644

index 0000000000000000000000000000000000000000..cab85776ec33f9cab2dc788ebbb3081fca1d4035

--- /dev/null

+++ b/doc/fluid/api/imperative/jit/SaveLoadConfig.rst

@@ -0,0 +1,5 @@

+.. _api_imperative_jit_SaveLoadConfig:

+

+SaveLoadConfig

+-------------------------------

+:doc_source: paddle.fluid.dygraph.jit.SaveLoadConfig

diff --git a/doc/fluid/api/imperative/jit/load.rst b/doc/fluid/api/imperative/jit/load.rst

new file mode 100644

index 0000000000000000000000000000000000000000..723a87936a8f26653eb2b34f361aa35a4b3fd74f

--- /dev/null

+++ b/doc/fluid/api/imperative/jit/load.rst

@@ -0,0 +1,5 @@

+.. _api_imperative_jit_load:

+

+load

+-------------------------------

+:doc_source: paddle.fluid.dygraph.jit.load

diff --git a/doc/fluid/api/imperative/jit/save.rst b/doc/fluid/api/imperative/jit/save.rst

new file mode 100644

index 0000000000000000000000000000000000000000..b809a99166e35edd65af253dffe40053776a68dc

--- /dev/null

+++ b/doc/fluid/api/imperative/jit/save.rst

@@ -0,0 +1,5 @@

+.. _api_imperative_jit_save:

+

+save

+-------------------------------

+:doc_source: paddle.fluid.dygraph.jit.save

diff --git a/doc/fluid/api/index_en.rst b/doc/fluid/api/index_en.rst

index efbe319fb04e9ad1cce54a760e955b51c311943f..f360715383903c2f6efae7a01e662a710fafc340 100644

--- a/doc/fluid/api/index_en.rst

+++ b/doc/fluid/api/index_en.rst

@@ -6,15 +6,30 @@ API Reference

:maxdepth: 1

../api_guides/index_en.rst

- paddle.rst

dataset.rst

- tensor.rst

- nn.rst

- imperative.rst

declarative.rst

- optimizer.rst

- metric.rst

framework.rst

+ imperative.rst

io.rst

- utils.rst

- incubate.rst

+ metric.rst

+ nn.rst

+ optimizer.rst

+ tensor.rst

+ fluid.rst

+ backward.rst

+ clip.rst

+ data/data_reader.rst

+ data/dataset.rst

+ dygraph.rst

+ executor.rst

+ fluid.rst

+ initializer.rst

+ layers.rst

+ metrics.rst

+ nets.rst

+ paddle.rst

+ profiler.rst

+ regularizer.rst

+ transpiler.rst

+ unique_name.rst

+ review_tmp.rst

diff --git a/doc/fluid/api/layers.rst b/doc/fluid/api/layers.rst

index 3ad2eeaa2941b7a0b6df18eb51aaf7b3691504b5..0f1fe3c222c5266deacca8603ff10ce9fed33429 100644

--- a/doc/fluid/api/layers.rst

+++ b/doc/fluid/api/layers.rst

@@ -182,6 +182,7 @@ fluid.layers

layers/mul.rst

layers/multi_box_head.rst

layers/multiclass_nms.rst

+ layers/matrix_nms.rst

layers/multiplex.rst

layers/MultivariateNormalDiag.rst

layers/natural_exp_decay.rst

diff --git a/doc/fluid/api/layers/matrix_nms.rst b/doc/fluid/api/layers/matrix_nms.rst

new file mode 100644

index 0000000000000000000000000000000000000000..60bbbeb151bdd87861c37b625139988ce7db9467

--- /dev/null

+++ b/doc/fluid/api/layers/matrix_nms.rst

@@ -0,0 +1,11 @@

+.. THIS FILE IS GENERATED BY `gen_doc.{py|sh}`

+ !DO NOT EDIT THIS FILE MANUALLY!

+

+.. _api_fluid_layers_matrix_nms:

+

+matrix_nms

+--------------

+

+.. autofunction:: paddle.fluid.layers.matrix_nms

+ :noindex:

+

diff --git a/doc/fluid/api/nn.rst b/doc/fluid/api/nn.rst

index b64a884221bdf398f2351ea26ae6d46eceb59c51..3d8ad814db7dfe9da66f6324117ad7c6c83c18fb 100644

--- a/doc/fluid/api/nn.rst

+++ b/doc/fluid/api/nn.rst

@@ -49,6 +49,7 @@ paddle.nn

nn/exponential_decay.rst

nn/filter_by_instag.rst

nn/fsp_matrix.rst

+ nn/functional.rst

nn/gather_tree.rst

nn/gelu.rst

nn/generate_mask_labels.rst

@@ -67,6 +68,7 @@ paddle.nn

nn/huber_loss.rst

nn/image_resize.rst

nn/image_resize_short.rst

+ nn/initializer.rst

nn/inverse_time_decay.rst

nn/iou_similarity.rst

nn/kldiv_loss.rst

@@ -82,7 +84,7 @@ paddle.nn

nn/logsigmoid.rst

nn/loss.rst

nn/lrn.rst

- nn/margin_rank_loss.rst

+ nn/matrix_nms.rst

nn/maxout.rst

nn/mse_loss.rst

nn/multiclass_nms.rst

@@ -91,14 +93,13 @@ paddle.nn

nn/npair_loss.rst

nn/one_hot.rst

nn/pad.rst

- nn/pad_constant_like.rst

nn/pad2d.rst

+ nn/pad_constant_like.rst

nn/ParameterList.rst

nn/piecewise_decay.rst

nn/pixel_shuffle.rst

nn/polygon_box_transform.rst

nn/polynomial_decay.rst

- nn/pool2d.rst

nn/Pool2D.rst

nn/pool3d.rst

nn/prior_box.rst

@@ -148,3 +149,5 @@ paddle.nn

nn/while_loop.rst

nn/yolo_box.rst

nn/yolov3_loss.rst

+ nn/functional/loss/margin_ranking_loss.rst

+ nn/layer/loss/MarginRankingLoss.rst

diff --git a/doc/fluid/api/nn/functional.rst b/doc/fluid/api/nn/functional.rst

new file mode 100644

index 0000000000000000000000000000000000000000..551924348e956066edf7affedb78a60e7adf2df4

--- /dev/null

+++ b/doc/fluid/api/nn/functional.rst

@@ -0,0 +1,9 @@

+==========

+functional

+==========

+

+.. toctree::

+ :maxdepth: 1

+

+ functional/l1_loss.rst

+ functional/nll_loss.rst

diff --git a/doc/fluid/api/nn/functional/l1_loss.rst b/doc/fluid/api/nn/functional/l1_loss.rst

new file mode 100644

index 0000000000000000000000000000000000000000..01a3ea06e7d034eb70744146816e6d0a166b749d

--- /dev/null

+++ b/doc/fluid/api/nn/functional/l1_loss.rst

@@ -0,0 +1,10 @@

+.. _api_nn_functional_l1_loss:

+

+l1_loss

+------

+

+.. autoclass:: paddle.nn.functional.l1_loss

+ :members:

+ :inherited-members:

+ :noindex:

+

diff --git a/doc/fluid/api/nn/functional/loss/margin_ranking_loss.rst b/doc/fluid/api/nn/functional/loss/margin_ranking_loss.rst

new file mode 100644

index 0000000000000000000000000000000000000000..e92eadc126a49d8a46bcfc06960eb39dcdc35fec

--- /dev/null

+++ b/doc/fluid/api/nn/functional/loss/margin_ranking_loss.rst

@@ -0,0 +1,11 @@

+.. THIS FILE IS GENERATED BY `gen_doc.{py|sh}`

+ !DO NOT EDIT THIS FILE MANUALLY!

+

+.. _api_nn_functional_loss_margin_ranking_loss:

+

+margin_ranking_loss

+-------------------

+

+.. autofunction:: paddle.nn.functional.loss.margin_ranking_loss

+ :noindex:

+

diff --git a/doc/fluid/api/nn/functional/nll_loss.rst b/doc/fluid/api/nn/functional/nll_loss.rst

new file mode 100644

index 0000000000000000000000000000000000000000..6f0ce4093ac8a9cefc202e4346457edd4b2c6ae1

--- /dev/null

+++ b/doc/fluid/api/nn/functional/nll_loss.rst

@@ -0,0 +1,10 @@

+.. _api_nn_functional_nll_loss:

+

+nll_loss

+-------------------------------

+

+.. autoclass:: paddle.nn.functional.nll_loss

+ :members:

+ :inherited-members:

+ :noindex:

+

diff --git a/doc/fluid/api/nn/layer/loss/MarginRankingLoss.rst b/doc/fluid/api/nn/layer/loss/MarginRankingLoss.rst

new file mode 100644

index 0000000000000000000000000000000000000000..d69d1deff5defab24b2f12ea877c3a208a801478

--- /dev/null

+++ b/doc/fluid/api/nn/layer/loss/MarginRankingLoss.rst

@@ -0,0 +1,13 @@

+.. THIS FILE IS GENERATED BY `gen_doc.{py|sh}`

+ !DO NOT EDIT THIS FILE MANUALLY!

+

+.. _api_nn_layer_loss_MarginRankingLoss:

+

+MarginRankingLoss

+-----------------

+

+.. autoclass:: paddle.nn.layer.loss.MarginRankingLoss

+ :members:

+ :inherited-members:

+ :noindex:

+

diff --git a/doc/fluid/api/transpiler/RoundRobin.rst b/doc/fluid/api/nn/loss/NLLLoss.rst

similarity index 56%

rename from doc/fluid/api/transpiler/RoundRobin.rst

rename to doc/fluid/api/nn/loss/NLLLoss.rst

index 547757d20e8388b3ea51b52a0b4c9e23116f0645..c1a0c26de51b8869a8eccb2150c8e5635159f1de 100644

--- a/doc/fluid/api/transpiler/RoundRobin.rst

+++ b/doc/fluid/api/nn/loss/NLLLoss.rst

@@ -1,12 +1,12 @@

.. THIS FILE IS GENERATED BY `gen_doc.{py|sh}`

!DO NOT EDIT THIS FILE MANUALLY!

-.. _api_fluid_transpiler_RoundRobin:

+.. _api_nn_loss_NLLLoss:

-RoundRobin

-----------

+NLLLoss

+-------------------------------

-.. autoclass:: paddle.fluid.transpiler.RoundRobin

+.. autoclass:: paddle.nn.loss.NLLLoss

:members:

:inherited-members:

:noindex:

diff --git a/doc/fluid/api/nn/margin_rank_loss.rst b/doc/fluid/api/nn/margin_rank_loss.rst

deleted file mode 100644

index 1ef924d8728dce215f20372bd4f6ea4a87a27874..0000000000000000000000000000000000000000

--- a/doc/fluid/api/nn/margin_rank_loss.rst

+++ /dev/null

@@ -1,7 +0,0 @@

-.. _api_nn_margin_rank_loss:

-

-margin_rank_loss

--------------------------------

-:doc_source: paddle.fluid.layers.margin_rank_loss

-

-

diff --git a/doc/fluid/api/nn/matrix_nms.rst b/doc/fluid/api/nn/matrix_nms.rst

new file mode 100644

index 0000000000000000000000000000000000000000..49529d0faf1118dc3c61018d1be232b5d7ff5b63

--- /dev/null

+++ b/doc/fluid/api/nn/matrix_nms.rst

@@ -0,0 +1,5 @@

+.. _api_nn_matrix_nms:

+

+matrix_nms

+-------------------------------

+:doc_source: paddle.fluid.layers.matrix_nms

diff --git a/doc/fluid/api/nn/softmax.rst b/doc/fluid/api/nn/softmax.rst

index 5eba38ad90a2d587f09a01a653dc01c7f3f877bb..bb18407af36005b23ab911390b8be880c9695101 100644

--- a/doc/fluid/api/nn/softmax.rst

+++ b/doc/fluid/api/nn/softmax.rst

@@ -1,7 +1,11 @@

+.. THIS FILE IS GENERATED BY `gen_doc.{py|sh}`

+ !DO NOT EDIT THIS FILE MANUALLY!

+

.. _api_nn_softmax:

softmax

--------------------------------

-:doc_source: paddle.fluid.layers.softmax

+-------

+.. autofunction:: paddle.nn.functional.softmax

+ :noindex:

diff --git a/doc/fluid/api/optimizer.rst b/doc/fluid/api/optimizer.rst

index a3a1736f15d8b0da8823d8b9dad8992c3b8581b6..06ccc695574c9d060dfe4c853c7d6c2c4ed8eb4f 100644

--- a/doc/fluid/api/optimizer.rst

+++ b/doc/fluid/api/optimizer.rst

@@ -28,7 +28,6 @@ paddle.optimizer

optimizer/ModelAverage.rst

optimizer/Momentum.rst

optimizer/MomentumOptimizer.rst

- optimizer/PipelineOptimizer.rst

optimizer/RecomputeOptimizer.rst

optimizer/RMSPropOptimizer.rst

optimizer/SGD.rst

diff --git a/doc/fluid/api/optimizer/PipelineOptimizer.rst b/doc/fluid/api/optimizer/PipelineOptimizer.rst

deleted file mode 100644

index 87e6f4026d49f4db11dec390faf325082bb1fdbe..0000000000000000000000000000000000000000

--- a/doc/fluid/api/optimizer/PipelineOptimizer.rst

+++ /dev/null

@@ -1,14 +0,0 @@

-.. THIS FILE IS GENERATED BY `gen_doc.{py|sh}`

- !DO NOT EDIT THIS FILE MANUALLY!

-

-.. _api_fluid_optimizer_PipelineOptimizer:

-

-PipelineOptimizer

------------------

-

-.. autoclass:: paddle.fluid.optimizer.PipelineOptimizer

- :members:

- :inherited-members:

- :exclude-members: apply_gradients, apply_optimize, backward, load

- :noindex:

-

diff --git a/doc/fluid/api/paddle.rst b/doc/fluid/api/paddle.rst

index c4af7870125e5c794621f8d828bc29db91e29efe..1d69e4df54808d97d7876289469b0b5e6cf7fa91 100644

--- a/doc/fluid/api/paddle.rst

+++ b/doc/fluid/api/paddle.rst

@@ -45,10 +45,7 @@ paddle

paddle/dot.rst

paddle/elementwise_add.rst

paddle/elementwise_div.rst

- paddle/elementwise_equal.rst

paddle/elementwise_floordiv.rst

- paddle/elementwise_max.rst

- paddle/elementwise_min.rst

paddle/elementwise_mod.rst

paddle/elementwise_mul.rst

paddle/elementwise_pow.rst

@@ -56,6 +53,7 @@ paddle

paddle/elementwise_sum.rst

paddle/enable_imperative.rst

paddle/equal.rst

+ paddle/equal_all.rst

paddle/erf.rst

paddle/ExecutionStrategy.rst

paddle/Executor.rst

@@ -99,9 +97,11 @@ paddle

paddle/manual_seed.rst

paddle/matmul.rst

paddle/max.rst

+ paddle/maximum.rst

paddle/mean.rst

paddle/meshgrid.rst

paddle/min.rst

+ paddle/minimum.rst

paddle/mm.rst

paddle/mul.rst

paddle/multiplex.rst

diff --git a/doc/fluid/api/paddle/ExecutionStrategy.rst b/doc/fluid/api/paddle/ExecutionStrategy.rst

index fd36fd620edda583bbd6570b6a1da78951a567be..6df5ca375f2e26b5bd9d4fe999461c41be9ad315 100644

--- a/doc/fluid/api/paddle/ExecutionStrategy.rst

+++ b/doc/fluid/api/paddle/ExecutionStrategy.rst

@@ -2,6 +2,6 @@

ExecutionStrategy

-------------------------------

-:doc_source: paddle.framework.ExecutionStrategy

+:doc_source: paddle.fluid.ExecutionStrategy

diff --git a/doc/fluid/api/paddle/argsort.rst b/doc/fluid/api/paddle/argsort.rst

index 43be9de959815defb112d674db64f06410ffd4b7..716f7e79312bcc0f83abff33bf3684b6a6b68500 100644

--- a/doc/fluid/api/paddle/argsort.rst

+++ b/doc/fluid/api/paddle/argsort.rst

@@ -2,6 +2,6 @@

argsort

-------------------------------

-:doc_source: paddle.fluid.layers.argsort

+:doc_source: paddle.tensor.argsort

diff --git a/doc/fluid/api/paddle/cumsum.rst b/doc/fluid/api/paddle/cumsum.rst

index 26211d9321da87942f4469ef47bfd78fa7173d64..673296e8836d1116f16d65b73a4f781241538dd4 100644

--- a/doc/fluid/api/paddle/cumsum.rst

+++ b/doc/fluid/api/paddle/cumsum.rst

@@ -2,6 +2,6 @@

cumsum

-------------------------------

-:doc_source: paddle.fluid.layers.cumsum

+:doc_source: paddle.tensor.cumsum

diff --git a/doc/fluid/api/paddle/elementwise_equal.rst b/doc/fluid/api/paddle/elementwise_equal.rst

deleted file mode 100644

index 485738ee2b32b6735e3209638b2fa162546a41fc..0000000000000000000000000000000000000000

--- a/doc/fluid/api/paddle/elementwise_equal.rst

+++ /dev/null

@@ -1,7 +0,0 @@

-.. _api_paddle_elementwise_equal:

-

-elementwise_equal

--------------------------------

-:doc_source: paddle.fluid.layers.equal

-

-

diff --git a/doc/fluid/api/paddle/elementwise_max.rst b/doc/fluid/api/paddle/elementwise_max.rst

deleted file mode 100644

index 76f9148ef2f600ada77f099fdd69a781aa72ab40..0000000000000000000000000000000000000000

--- a/doc/fluid/api/paddle/elementwise_max.rst

+++ /dev/null

@@ -1,7 +0,0 @@

-.. _api_paddle_elementwise_max:

-

-elementwise_max

--------------------------------

-:doc_source: paddle.fluid.layers.elementwise_max

-

-

diff --git a/doc/fluid/api/paddle/elementwise_min.rst b/doc/fluid/api/paddle/elementwise_min.rst

deleted file mode 100644

index f2258a309201d5ea23a518a70d79dd8ca9d06929..0000000000000000000000000000000000000000

--- a/doc/fluid/api/paddle/elementwise_min.rst

+++ /dev/null

@@ -1,7 +0,0 @@

-.. _api_paddle_elementwise_min:

-

-elementwise_min

--------------------------------

-:doc_source: paddle.fluid.layers.elementwise_min

-

-

diff --git a/doc/fluid/api/paddle/equal_all.rst b/doc/fluid/api/paddle/equal_all.rst

new file mode 100644

index 0000000000000000000000000000000000000000..58fc331acc2b3f564dc73bb8c039c17b9b4720f2

--- /dev/null

+++ b/doc/fluid/api/paddle/equal_all.rst

@@ -0,0 +1,7 @@

+.. _api_paddle_equal_all

+

+equal_all

+-------------------------------

+:doc_source: paddle.tensor.equal_all

+

+

diff --git a/doc/fluid/api/paddle/greater_equal.rst b/doc/fluid/api/paddle/greater_equal.rst

index 8739113f3208d15efdd9c00a2619ae612a6e1873..54afe57ffab5185fc2c3fb92a671e0b726108ab3 100644

--- a/doc/fluid/api/paddle/greater_equal.rst

+++ b/doc/fluid/api/paddle/greater_equal.rst

@@ -2,6 +2,6 @@

greater_equal

-------------------------------

-:doc_source: paddle.fluid.layers.greater_equal

+:doc_source: paddle.tensor.greater_equal

diff --git a/doc/fluid/api/paddle/greater_than.rst b/doc/fluid/api/paddle/greater_than.rst

index f54f0e026f520176bc60b00a59e28adc69358915..04a874dd929d7dae274898c87029059b1b1d6261 100644

--- a/doc/fluid/api/paddle/greater_than.rst

+++ b/doc/fluid/api/paddle/greater_than.rst

@@ -2,6 +2,6 @@

greater_than

-------------------------------

-:doc_source: paddle.fluid.layers.greater_than

+:doc_source: paddle.tensor.greater_than

diff --git a/doc/fluid/api/paddle/less_equal.rst b/doc/fluid/api/paddle/less_equal.rst

index 16cc1a647457e370ed105172936b61afad04f00c..3fc5e2ce2b819dfed7ca8b64841836229c86d3e4 100644

--- a/doc/fluid/api/paddle/less_equal.rst

+++ b/doc/fluid/api/paddle/less_equal.rst

@@ -2,6 +2,6 @@

less_equal

-------------------------------

-:doc_source: paddle.fluid.layers.less_equal

+:doc_source: paddle.tensor.less_equal

diff --git a/doc/fluid/api/paddle/less_than.rst b/doc/fluid/api/paddle/less_than.rst

index 2c13074ad988e5a5138a76cff50619963964d55d..7df6eb441d37a2fe8bf95e43a48df8471115ad2c 100644

--- a/doc/fluid/api/paddle/less_than.rst

+++ b/doc/fluid/api/paddle/less_than.rst

@@ -2,6 +2,6 @@

less_than

-------------------------------

-:doc_source: paddle.fluid.layers.less_than

+:doc_source: paddle.tensor.less_than

diff --git a/doc/fluid/api/paddle/max.rst b/doc/fluid/api/paddle/max.rst

index 695f4d5b6bd97f460624650a206affe6b2140c41..0d28148a8dcc0ac31744450c954e9a125e475add 100644

--- a/doc/fluid/api/paddle/max.rst

+++ b/doc/fluid/api/paddle/max.rst

@@ -2,6 +2,6 @@

max

-------------------------------

-:doc_source: paddle.fluid.layers.reduce_max

+:doc_source: paddle.tensor.max

diff --git a/doc/fluid/api/paddle/maximum.rst b/doc/fluid/api/paddle/maximum.rst

new file mode 100644

index 0000000000000000000000000000000000000000..c85f8a97710efb559e5f73c586eb45798224e8db

--- /dev/null

+++ b/doc/fluid/api/paddle/maximum.rst

@@ -0,0 +1,7 @@

+.. _api_paddle_maximum:

+

+maximum

+-------------------------------

+:doc_source: paddle.tensor.maximum

+

+

diff --git a/doc/fluid/api/paddle/min.rst b/doc/fluid/api/paddle/min.rst

index a05dd553f4827dd8feeb4ddc56ccfa3ce7d11eb9..bb99109471c0ab684fdd7646fc446abe8aafe6cb 100644

--- a/doc/fluid/api/paddle/min.rst

+++ b/doc/fluid/api/paddle/min.rst

@@ -2,6 +2,6 @@

min

-------------------------------

-:doc_source: paddle.fluid.layers.reduce_min

+:doc_source: paddle.tensor.min

diff --git a/doc/fluid/api/paddle/minimum.rst b/doc/fluid/api/paddle/minimum.rst

new file mode 100644

index 0000000000000000000000000000000000000000..41391741da78620231f5fe1a9c5ee3ea73ce70be

--- /dev/null

+++ b/doc/fluid/api/paddle/minimum.rst

@@ -0,0 +1,7 @@

+.. _api_paddle_minimum:

+

+minimum

+-------------------------------

+:doc_source: paddle.tensor.minimum

+

+

diff --git a/doc/fluid/api/paddle/not_equal.rst b/doc/fluid/api/paddle/not_equal.rst

index fb5de71d0a79ec9be46c43c02414492acd087f89..4fd1cbe809d9dded938f2014124ee9b738b1d9cd 100644

--- a/doc/fluid/api/paddle/not_equal.rst

+++ b/doc/fluid/api/paddle/not_equal.rst

@@ -2,6 +2,6 @@

not_equal

-------------------------------

-:doc_source: paddle.fluid.layers.not_equal

+:doc_source: paddle.tensor.not_equal

diff --git a/doc/fluid/api/paddle/sort.rst b/doc/fluid/api/paddle/sort.rst

index e22a93a5d3e1f657060756e6a49153423a344ef3..5f87357ccb39b52e975ef73c33b557f220c292a2 100644

--- a/doc/fluid/api/paddle/sort.rst

+++ b/doc/fluid/api/paddle/sort.rst

@@ -2,6 +2,6 @@

sort

-------------------------------

-:doc_source: paddle.fluid.layers.argsort

+:doc_source: paddle.tensor.sort

diff --git a/doc/fluid/api/review_tmp.rst b/doc/fluid/api/review_tmp.rst

new file mode 100644

index 0000000000000000000000000000000000000000..e39366bcef08a15baa15c3cfbb318022a2dc47b2

--- /dev/null

+++ b/doc/fluid/api/review_tmp.rst

@@ -0,0 +1,9 @@

+=================

+paddle.review_tmp

+=================

+

+.. toctree::

+ :maxdepth: 1

+

+ review_tmp/MarginRankingLoss.rst

+ review_tmp/margin_ranking_loss.rst

diff --git a/doc/fluid/api/review_tmp/MarginRankingLoss.rst b/doc/fluid/api/review_tmp/MarginRankingLoss.rst

new file mode 100644

index 0000000000000000000000000000000000000000..edc5d1cc57c85be5eb37312c6dc9b8b204b4d9b1

--- /dev/null

+++ b/doc/fluid/api/review_tmp/MarginRankingLoss.rst

@@ -0,0 +1,9 @@

+.. _api_nn_loss_MarginRankingLoss_tmp:

+

+MarginRankingLoss

+-----------------

+

+.. autoclass:: paddle.nn.loss.MarginRankingLoss

+ :members:

+ :inherited-members:

+ :noindex:

diff --git a/doc/fluid/api/review_tmp/margin_ranking_loss.rst b/doc/fluid/api/review_tmp/margin_ranking_loss.rst

new file mode 100644

index 0000000000000000000000000000000000000000..289d1928bf05925dc81238c7ff0dad2623a4d3fc

--- /dev/null

+++ b/doc/fluid/api/review_tmp/margin_ranking_loss.rst

@@ -0,0 +1,7 @@

+.. _api_nn_functional_margin_ranking_loss_tmp:

+

+margin_ranking_loss

+-------------------

+

+.. autofunction:: paddle.nn.functional.margin_ranking_loss

+ :noindex:

diff --git a/doc/fluid/api/tensor.rst b/doc/fluid/api/tensor.rst

index 84b1501a1ed928960447bee4886d4c32be80b45d..a8eb2516782826e475c067311c765b50fddf4aaa 100644

--- a/doc/fluid/api/tensor.rst

+++ b/doc/fluid/api/tensor.rst

@@ -20,19 +20,18 @@ paddle.tensor

tensor/cos.rst

tensor/create_tensor.rst

tensor/crop_tensor.rst

+ tensor/cross.rst

tensor/cumsum.rst

tensor/diag.rst

tensor/div.rst

tensor/elementwise_add.rst

tensor/elementwise_div.rst

- tensor/elementwise_equal.rst

tensor/elementwise_floordiv.rst

- tensor/elementwise_max.rst

- tensor/elementwise_min.rst

tensor/elementwise_mod.rst

tensor/elementwise_mul.rst

tensor/elementwise_pow.rst

tensor/elementwise_sub.rst

+ tensor/equal_all.rst

tensor/erf.rst

tensor/exp.rst

tensor/expand.rst

@@ -63,8 +62,10 @@ paddle.tensor

tensor/logical_xor.rst

tensor/math.rst

tensor/max.rst

+ tensor/maximum.rst

tensor/mean.rst

tensor/min.rst

+ tensor/minimum.rst

tensor/mm.rst

tensor/mul.rst

tensor/multiplex.rst

@@ -92,6 +93,7 @@ paddle.tensor

tensor/scatter.rst

tensor/scatter_nd.rst

tensor/scatter_nd_add.rst

+ tensor/search.rst

tensor/shape.rst

tensor/shard_index.rst

tensor/shuffle.rst

diff --git a/doc/fluid/api/tensor/argsort.rst b/doc/fluid/api/tensor/argsort.rst

index 927d474d151f63a9bb204f3adc54085574b0f1a6..2168777783e8ff4a2ba5e217ce3f9982f4f97d8f 100644

--- a/doc/fluid/api/tensor/argsort.rst

+++ b/doc/fluid/api/tensor/argsort.rst

@@ -2,6 +2,6 @@

argsort

-------------------------------

-:doc_source: paddle.fluid.layers.argsort

+:doc_source: paddle.tensor.argsort

diff --git a/doc/fluid/api/tensor/cross.rst b/doc/fluid/api/tensor/cross.rst

new file mode 100644

index 0000000000000000000000000000000000000000..3bb049f74d7232bd42020fee1b702c313395ba85

--- /dev/null

+++ b/doc/fluid/api/tensor/cross.rst

@@ -0,0 +1,7 @@

+.. _api_tensor_cn_cos:

+

+cross

+-------------------------------

+:doc_source: paddle.tensor.cross

+

+

diff --git a/doc/fluid/api/tensor/cumsum.rst b/doc/fluid/api/tensor/cumsum.rst

index 835c7231150c7cf6d9de61f4907aca2cd558a192..96c1bf0abf8c06621b93624941025e4929652add 100644

--- a/doc/fluid/api/tensor/cumsum.rst

+++ b/doc/fluid/api/tensor/cumsum.rst

@@ -2,6 +2,6 @@

cumsum

-------------------------------

-:doc_source: paddle.fluid.layers.cumsum

+:doc_source: paddle.tensor.cumsum

diff --git a/doc/fluid/api/tensor/elementwise_equal.rst b/doc/fluid/api/tensor/elementwise_equal.rst

deleted file mode 100644

index ae7944446507328d83969df26d22427aabee1777..0000000000000000000000000000000000000000

--- a/doc/fluid/api/tensor/elementwise_equal.rst

+++ /dev/null

@@ -1,7 +0,0 @@

-.. _api_tensor_cn_elementwise_equal:

-

-elementwise_equal

--------------------------------

-:doc_source: paddle.fluid.layers.equal

-

-

diff --git a/doc/fluid/api/tensor/elementwise_max.rst b/doc/fluid/api/tensor/elementwise_max.rst

deleted file mode 100644

index 5f96581bba4dba88df4bfd4676e0e81050004844..0000000000000000000000000000000000000000

--- a/doc/fluid/api/tensor/elementwise_max.rst

+++ /dev/null

@@ -1,7 +0,0 @@

-.. _api_tensor_cn_elementwise_max:

-

-elementwise_max

--------------------------------

-:doc_source: paddle.fluid.layers.elementwise_max

-

-

diff --git a/doc/fluid/api/tensor/elementwise_min.rst b/doc/fluid/api/tensor/elementwise_min.rst

deleted file mode 100644

index 9b5641099c4afc4738bd6b495d61a323387a74d9..0000000000000000000000000000000000000000

--- a/doc/fluid/api/tensor/elementwise_min.rst

+++ /dev/null

@@ -1,7 +0,0 @@

-.. _api_tensor_cn_elementwise_min:

-

-elementwise_min

--------------------------------

-:doc_source: paddle.fluid.layers.elementwise_min

-

-

diff --git a/doc/fluid/api/tensor/equal_all.rst b/doc/fluid/api/tensor/equal_all.rst

new file mode 100644

index 0000000000000000000000000000000000000000..5149e6101d64b1e2c8626a1d35693fd503b2d230

--- /dev/null

+++ b/doc/fluid/api/tensor/equal_all.rst

@@ -0,0 +1,7 @@

+.. _api_tensor_cn_equal_all:

+

+equal_all

+-------------------------------

+:doc_source: paddle.tensor.equal_all

+

+

diff --git a/doc/fluid/api/tensor/greater_equal.rst b/doc/fluid/api/tensor/greater_equal.rst

index ab967838e629e67052ae574e93098ebcae00c0bf..1a1394de05e7b4bf7b4cbfb463e3c9e79206d9cc 100644

--- a/doc/fluid/api/tensor/greater_equal.rst

+++ b/doc/fluid/api/tensor/greater_equal.rst

@@ -2,6 +2,6 @@

greater_equal

-------------------------------

-:doc_source: paddle.fluid.layers.greater_equal

+:doc_source: paddle.tensor.greater_equal

diff --git a/doc/fluid/api/tensor/greater_than.rst b/doc/fluid/api/tensor/greater_than.rst

index 789f212a75130d76546833207afd5761fea499ee..b0ff74910eb094120568dc4f3c7f792e221c91b7 100644

--- a/doc/fluid/api/tensor/greater_than.rst

+++ b/doc/fluid/api/tensor/greater_than.rst

@@ -2,6 +2,6 @@

greater_than

-------------------------------

-:doc_source: paddle.fluid.layers.greater_than

+:doc_source: paddle.tensor.greater_than

diff --git a/doc/fluid/api/tensor/less_equal.rst b/doc/fluid/api/tensor/less_equal.rst

index 5e7c7180a4899d380c6c1f2d49aba9597e8b456b..4adbeb1ccf2972ccb30cb1fb762dbea7a74114a4 100644

--- a/doc/fluid/api/tensor/less_equal.rst

+++ b/doc/fluid/api/tensor/less_equal.rst

@@ -2,6 +2,6 @@

less_equal

-------------------------------

-:doc_source: paddle.fluid.layers.less_equal

+:doc_source: paddle.tensor.less_equal

diff --git a/doc/fluid/api/tensor/less_than.rst b/doc/fluid/api/tensor/less_than.rst

index c4614acf5f666af3242b01027aa379a1b4ad0cfc..592dc48d66bbdd4c6506e118c98b654bd55e93fe 100644

--- a/doc/fluid/api/tensor/less_than.rst

+++ b/doc/fluid/api/tensor/less_than.rst

@@ -2,6 +2,6 @@

less_than

-------------------------------

-:doc_source: paddle.fluid.layers.less_than

+:doc_source: paddle.tensor.less_than

diff --git a/doc/fluid/api/tensor/max.rst b/doc/fluid/api/tensor/max.rst

index cdd8e4239bf6e73b64775a9858ec0b9661e4d73c..61a8667f8cab06a8433d9ab9e143390d3c1ccbc8 100644

--- a/doc/fluid/api/tensor/max.rst

+++ b/doc/fluid/api/tensor/max.rst

@@ -2,6 +2,6 @@

max

-------------------------------

-:doc_source: paddle.fluid.layers.reduce_max

+:doc_source: paddle.tensor.max

diff --git a/doc/fluid/api/tensor/maximum.rst b/doc/fluid/api/tensor/maximum.rst

new file mode 100644

index 0000000000000000000000000000000000000000..7c91c5f2bd465a17ceae3a2f602addbd115ed273

--- /dev/null

+++ b/doc/fluid/api/tensor/maximum.rst

@@ -0,0 +1,7 @@

+.. _api_tensor_cn_maximum:

+

+maximum

+-------------------------------

+:doc_source: paddle.tensor.maximum

+

+

diff --git a/doc/fluid/api/tensor/mean.rst b/doc/fluid/api/tensor/mean.rst

index dce657a2e57aa3b8d78136c9b9c311f8d23c76aa..d226a37107af8e67ef4d8ea0bf9a17e536fede36 100644

--- a/doc/fluid/api/tensor/mean.rst

+++ b/doc/fluid/api/tensor/mean.rst

@@ -1,7 +1,11 @@

-.. _api_tensor_cn_mean:

+.. THIS FILE IS GENERATED BY `gen_doc.{py|sh}`

+ !DO NOT EDIT THIS FILE MANUALLY!

+

+.. _api_tensor_mean:

mean

--------------------------------

-:doc_source: paddle.fluid.layers.mean

+---------

+.. autofunction:: paddle.tensor.mean

+ :noindex:

diff --git a/doc/fluid/api/tensor/min.rst b/doc/fluid/api/tensor/min.rst

index ea16448a6752464a582e3a2ea63960dcdcee6e40..cdb8df5c370ce66a5e8e39555699e09730bdcf23 100644

--- a/doc/fluid/api/tensor/min.rst

+++ b/doc/fluid/api/tensor/min.rst

@@ -2,6 +2,6 @@

min

-------------------------------

-:doc_source: paddle.fluid.layers.reduce_min

+:doc_source: paddle.tensor.min

diff --git a/doc/fluid/api/tensor/minimum.rst b/doc/fluid/api/tensor/minimum.rst

new file mode 100644

index 0000000000000000000000000000000000000000..725aaeb8a7f2fa0cf7b1a7fa1d8611a4c4967ac7

--- /dev/null

+++ b/doc/fluid/api/tensor/minimum.rst

@@ -0,0 +1,7 @@

+.. _api_tensor_cn_minimum:

+

+minimum

+-------------------------------

+:doc_source: paddle.tensor.minimum

+

+

diff --git a/doc/fluid/api/tensor/not_equal.rst b/doc/fluid/api/tensor/not_equal.rst

index d4f506f99e814b0a578d474a2bcb78cc6dd7c582..8aeac42d73c7683ba037bef31a6b68c2acf01064 100644

--- a/doc/fluid/api/tensor/not_equal.rst

+++ b/doc/fluid/api/tensor/not_equal.rst

@@ -2,6 +2,6 @@

not_equal

-------------------------------

-:doc_source: paddle.fluid.layers.not_equal

+:doc_source: paddle.tensor.not_equal

diff --git a/doc/fluid/api/tensor/ones_like.rst b/doc/fluid/api/tensor/ones_like.rst

index 4e1ae1407c2717619bb211a3e642e1d0e197db26..47ecd764f36b11d425863b0a09a111040aed31d2 100644

--- a/doc/fluid/api/tensor/ones_like.rst

+++ b/doc/fluid/api/tensor/ones_like.rst

@@ -1,7 +1,11 @@

-.. _api_tensor_cn_ones_like:

+.. THIS FILE IS GENERATED BY `gen_doc.{py|sh}`

+ !DO NOT EDIT THIS FILE MANUALLY!

+

+.. _api_tensor_ones_like:

ones_like

--------------------------------

-:doc_source: paddle.fluid.layers.ones_like

+---------

+.. autofunction:: paddle.tensor.ones_like

+ :noindex:

diff --git a/doc/fluid/api/tensor/random.rst b/doc/fluid/api/tensor/random.rst

index 687c6d5af6475436efc4c12c5ff93023cb32d526..fdc985d3de06f89ecc75c5f52dfe59d8f3747987 100644

--- a/doc/fluid/api/tensor/random.rst

+++ b/doc/fluid/api/tensor/random.rst

@@ -5,6 +5,7 @@ random

.. toctree::

:maxdepth: 1

+ random/rand.rst